3.1. 1D Model

Although our main interest is in two- and three-dimensional problems, we include the 1D problem to help the reader apply our approach.

Let

with domain

be the test function. We are going to solve

for

with

and

.

The approximate solution will be of the form , where and are constants to be determined. The sample points are randomly generated and scattered in the unit interval, except that two of them are placed at the endpoints 0 and 1. The choice of c will be made according to Case 3 of the MN curves.
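The collocation procedure described above can be illustrated by a minimal sketch. It rests on several assumptions that are not taken from this section: the basis function is the ordinary multiquadric sqrt(c^2 + r^2), the equation is written as u'' = f, the test function u(x) = exp(x) and the value c = 2 are hypothetical stand-ins, and the nodes are evenly spaced rather than random so that the run is reproducible. Python's `decimal` module plays the role of the arbitrary-precision arithmetic.

```python
from decimal import Decimal, getcontext

getcontext().prec = 60          # significant digits carried in every operation
C = Decimal(2)                  # shape parameter c (illustrative value only)


def phi(r):
    """Multiquadric sqrt(c^2 + r^2) (assumed basis function)."""
    return (C * C + r * r).sqrt()


def phi_xx(r):
    """Second derivative of phi: c^2 / (c^2 + r^2)^(3/2)."""
    s = C * C + r * r
    return C * C / (s * s.sqrt())


def solve(a, b):
    """Gaussian elimination with partial pivoting on Decimal entries."""
    n = len(b)
    m = [row[:] + [rhs_i] for row, rhs_i in zip(a, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= f * m[k][j]
    x = [Decimal(0)] * n
    for i in reversed(range(n)):
        s = sum((m[i][j] * x[j] for j in range(i + 1, n)), Decimal(0))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def u_exact(x):
    """Hypothetical test function u(x) = exp(x); note that u'' = exp(x) too."""
    return x.exp()


# Seven evenly spaced nodes on [0, 1]; the first and last are boundary nodes.
nodes = [Decimal(j) / 6 for j in range(7)]
rows, rhs = [], []
for i, xi in enumerate(nodes):
    on_boundary = i in (0, len(nodes) - 1)
    basis = phi if on_boundary else phi_xx   # Dirichlet row or PDE row
    rows.append([basis(xi - xj) for xj in nodes])
    rhs.append(u_exact(xi))                  # equals both u and u'' here
coeffs = solve(rows, rhs)


def u_approx(x):
    """Evaluate the multiquadric expansion at x."""
    return sum((lam * phi(x - xj) for lam, xj in zip(coeffs, nodes)), Decimal(0))


tests = [Decimal(k) / 100 for k in range(101)]
rms = (sum(((u_approx(t) - u_exact(t)) ** 2 for t in tests), Decimal(0))
       / len(tests)).sqrt()
print(f"RMS error: {rms:.3e}")
```

Interior rows enforce the differential equation and the two boundary rows enforce the Dirichlet data, so the unknown coefficients come from a single square linear system.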

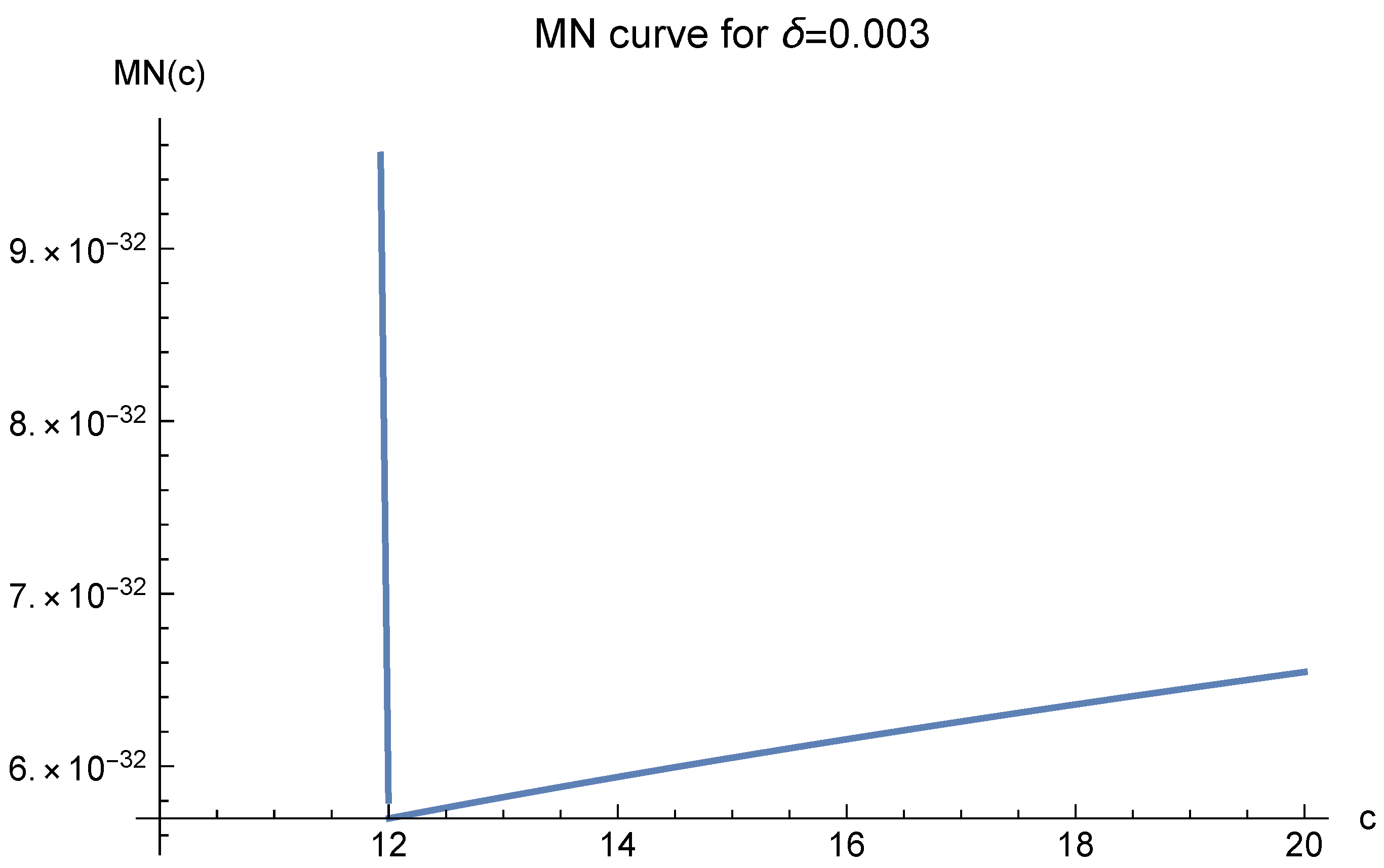

The MN function value greatly depends on the parameter in the definition of . We present three curves for and three for in Figures 1, 2 and 3 and Figures 4, 5 and 6, respectively. In the figures, denotes the fill distance, denotes the domain diameter, d is the dimension, and is the parameter in the definition of the multiquadrics (1).
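Since the fill distance enters the MN curves, a small sketch of how it can be computed for 1D sample points may be helpful. It assumes the usual definition (the largest distance from any location in the domain to its nearest sample point), which is not restated in this section; the function name and the default interval are illustrative.

```python
def fill_distance_1d(points, a=0.0, b=1.0):
    """Fill distance of `points` in [a, b]: the largest distance from any
    location in the interval to its nearest sample point (assumed definition)."""
    xs = sorted(points)
    if not xs:
        raise ValueError("need at least one sample point")
    # Distance from each end of the interval to the nearest sample point.
    edge = max(xs[0] - a, b - xs[-1])
    # Between samples, the worst location is the midpoint of the widest gap.
    gap = max((r - l for l, r in zip(xs, xs[1:])), default=0.0)
    return max(edge, gap / 2.0)


print(fill_distance_1d([0.0, 0.25, 1.0]))  # widest gap is 0.75, so this prints 0.375
```

When 0 and 1 are among the sample points, as in the experiment above, the fill distance is simply half of the widest gap between neighbouring points.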

Note that in these figures, always corresponds to the minimal value of . This suggests that one should choose in our approximate solution to the Poisson equation. We present the experimental results in Table 1. In the table, denotes the number of data points used, and , denote the numbers of interior and boundary data points, respectively. We use test points to measure the quality of the approximation. As in our previous papers, denotes the diameter of the domain, and COND denotes the condition number of the linear system (6).

Table 1. 1D experiment.

| Data points (total) | 7 | 12 | 22 | 42 | 82 |
|---|---|---|---|---|---|
| Interior points | 5 | 10 | 20 | 40 | 80 |
| Boundary points | 2 | 2 | 2 | 2 | 2 |
| RMS | | | | | |
| COND | | | | | |

To cope with ill-conditioning, we carried enough digits through every step of the calculations. For example, for , we kept 300 digits to the right of the decimal point to handle the huge corresponding condition number. Even so, solving the linear system took only one second. All computations were carried out in Mathematica.
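The effect of the working precision on an ill-conditioned system can be reproduced with a small stand-in example. The sketch below is not the authors' Mathematica code: it uses Python's `decimal` module, whose `prec` setting counts significant digits rather than digits to the right of the decimal point, and it uses the Hilbert matrix as a hypothetical substitute for the collocation matrix.

```python
from decimal import Decimal, localcontext


def solve(a, b):
    """Gaussian elimination with partial pivoting on Decimal entries."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(m[i][k]))
        m[k], m[p] = m[p], m[k]
        for i in range(k + 1, n):
            f = m[i][k] / m[k][k]
            for j in range(k, n + 1):
                m[i][j] -= f * m[k][j]
    x = [Decimal(0)] * n
    for i in reversed(range(n)):
        s = sum((m[i][j] * x[j] for j in range(i + 1, n)), Decimal(0))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def hilbert_error(n, digits):
    """Solve H x = H * (1, ..., 1) for the n-by-n Hilbert matrix with `digits`
    significant digits and return the largest deviation of x from 1."""
    with localcontext() as ctx:
        ctx.prec = digits
        h = [[Decimal(1) / Decimal(i + j + 1) for j in range(n)] for i in range(n)]
        b = [sum(row, Decimal(0)) for row in h]   # right-hand side for x = 1
        x = solve(h, b)
        return max(abs(v - 1) for v in x)


err_low = hilbert_error(10, 16)    # roughly double precision
err_high = hilbert_error(10, 60)   # extended precision
print(f"16 digits: {err_low:.2e}   60 digits: {err_high:.2e}")
```

The same elimination routine is used in both runs, so the only difference is the number of digits carried. That is the mechanism the paragraph above relies on.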

If c is chosen arbitrarily, say , then the RMS will be for . We do not present a comparison with other values of c in this subsection, for three reasons. First, the results for are already quite good. Second, in our theory the prediction is reliable only when enough data points are used, and the condition number then becomes extremely large for and . For example, we found experimentally that the optimal value of c is 1600 for , when and . To obtain the predicted value , we would have to increase the number of data points so that , as in the experiments for interpolation. The condition number would then become much larger than , although the RMS would be smaller than . In practice, such accuracy is not needed. Third, such comparisons are handled thoroughly in the 2D and 3D experiments.

3.2. 2D Model

Our test function is now

on

. This function obviously lies in the Sobolev space. The Poisson equation is then

where

for

and

.

We are trying to find an approximate solution , where and , are constants to be determined. The sample points are scattered in the domain . Our focus is the choice of c.

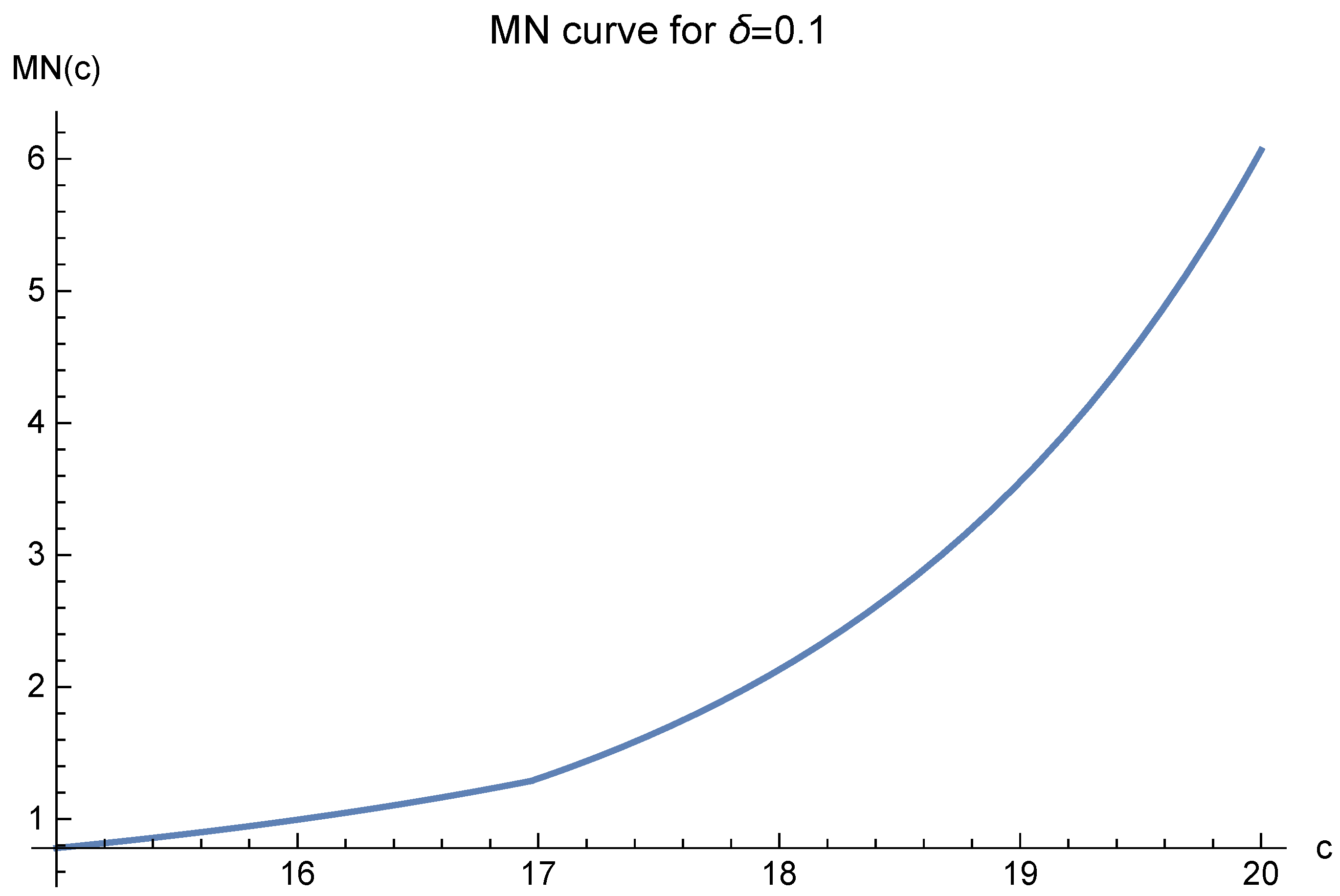

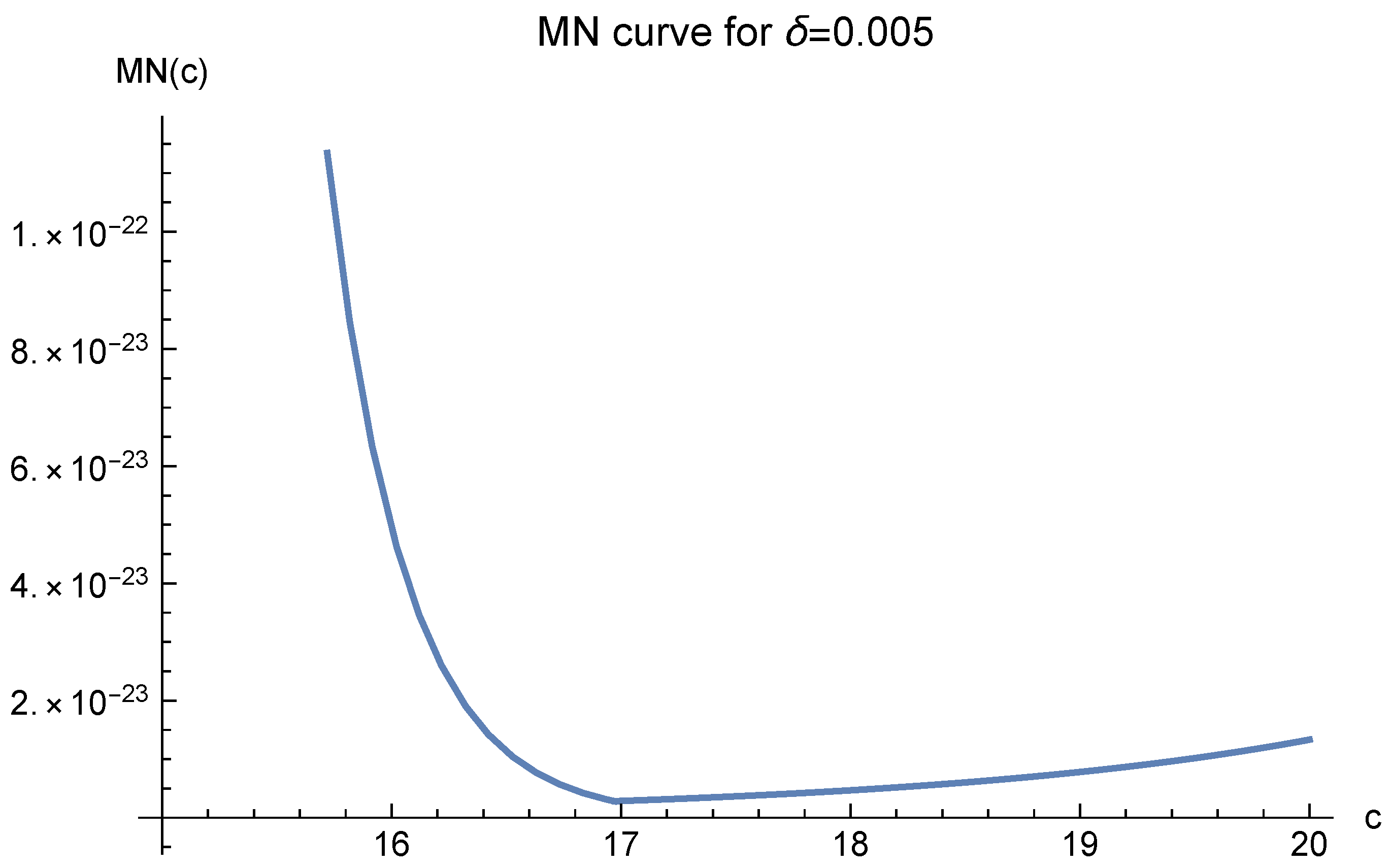

Now, let us analyze the MN curves. We first let and list five MN curves with different fill distances .

In

Figure 7,

Figure 8,

Figure 9,

Figure 10 and

Figure 11, it is clearly observed that as the fill distances decrease, the lowest points of the curves move to a fixed value

which is just

in Case 3 of the definition of

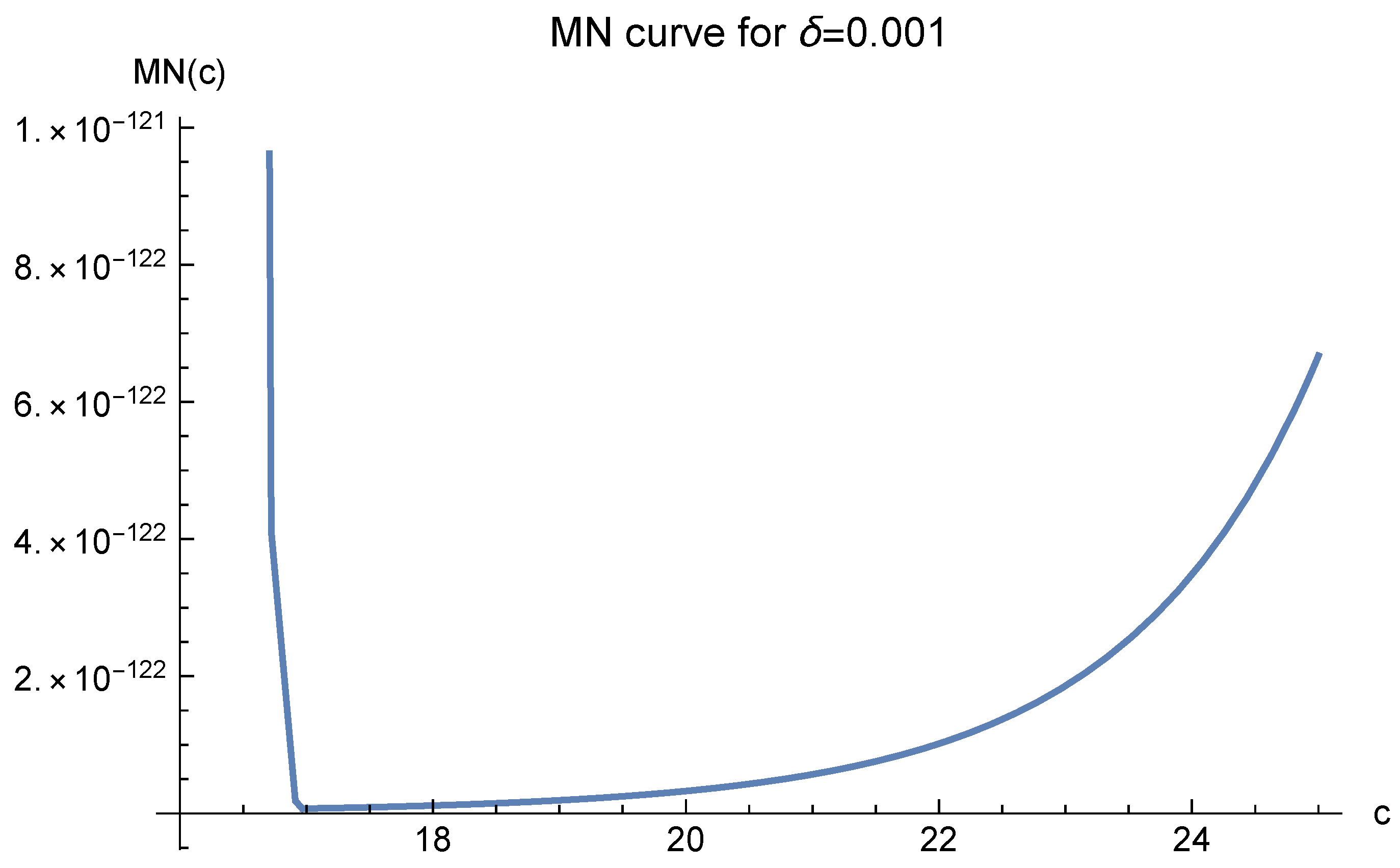

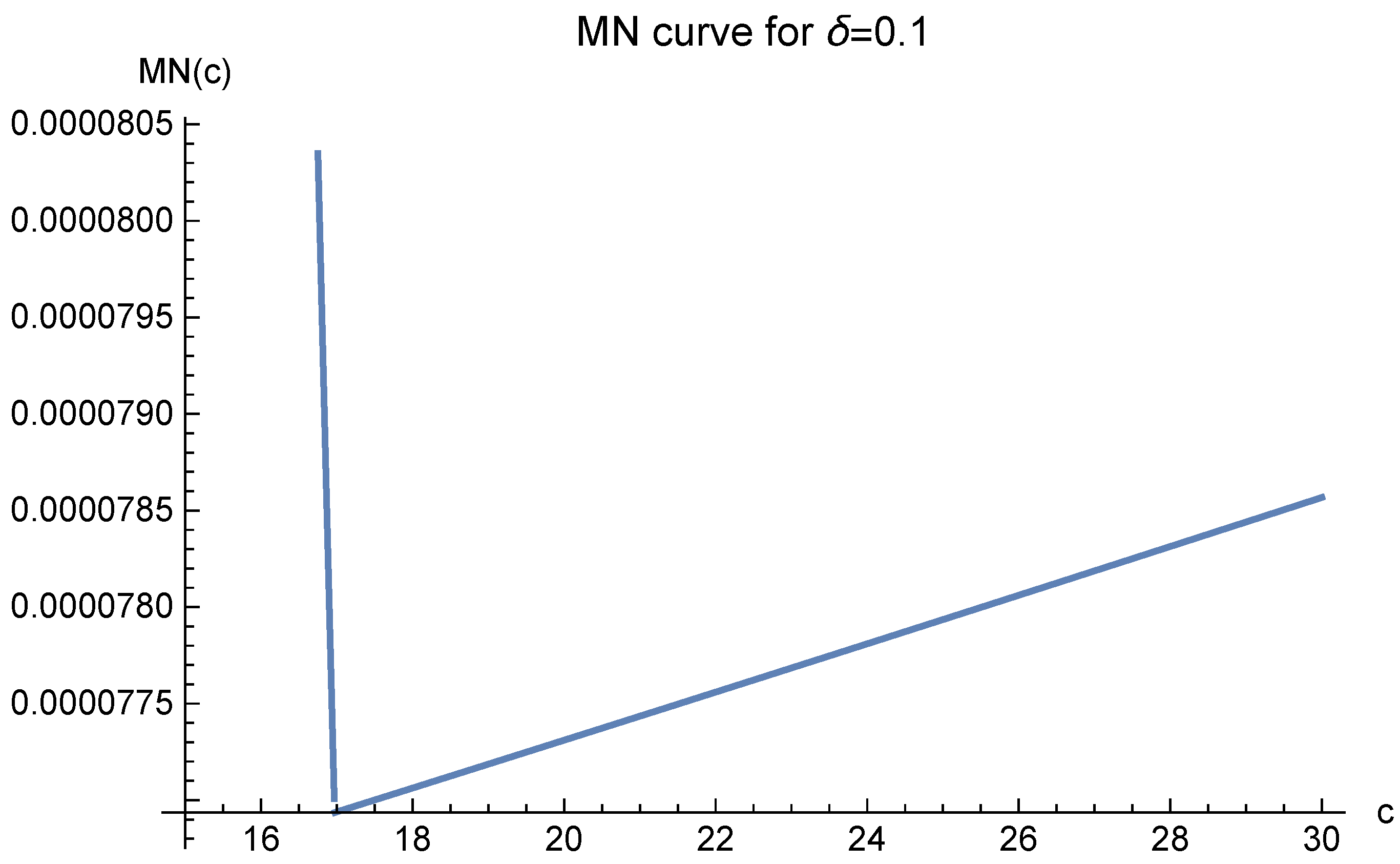

. Now, we investigate

.

Figure 12,

Figure 13 and

Figure 14 also demonstrate that the optimal choice of

c is 17. In fact, if we test other

’s, the same result will appear. In order to save space, we do not list them.

All the MN curves strongly suggest that one should choose whenever enough data points are used. Thus, we investigate its quality and present the results in Table 2. Here, and denote the numbers of data points located on the boundary and in the interior of the domain, respectively. Then, and denote the total numbers of data points and test points, respectively. The root-mean-square error, used to measure the approximation error, is defined by

As before, denotes the diameter of the domain and COND is the condition number of the linear system involved. Solving the linear system, the most time-consuming step, took only two seconds for 341 data points, so we do not report computation times in the table.
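For readers who wish to reproduce the error measure, a minimal sketch of the root-mean-square error is given here. It assumes the standard definition, namely the square root of the mean squared difference between exact and approximate values over the test points; the function and argument names are illustrative.

```python
import math


def rms_error(exact, approx):
    """Root-mean-square difference between two equally long value lists."""
    if len(exact) != len(approx) or not exact:
        raise ValueError("need two non-empty lists of equal length")
    total = sum((e - a) ** 2 for e, a in zip(exact, approx))
    return math.sqrt(total / len(exact))


# One of three test values is off by 2, so the result is sqrt(4 / 3).
print(rms_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```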

Figure 7. MN curve for where and .

Figure 8. MN curve for where and .

Figure 9. MN curve for where and .

Figure 10. MN curve for where and .

Figure 11. MN curve for where and .

Figure 12. MN curve for where and .

Figure 13. MN curve for where and .

Figure 14. MN curve for where and .

Table 2. 2D experiment.

| Data points (total) | 46 | 91 | 141 | 191 | 341 |
|---|---|---|---|---|---|
| Interior points | 5 | 50 | 100 | 150 | 300 |
| Boundary points | 41 | 41 | 41 | 41 | 41 |
| RMS | | | | | |
| COND | | | | | |

For simplicity, the test points were evenly spaced in the domain. The interior data points were generated randomly by Mathematica and scattered in the domain, whereas the boundary data points were evenly spaced for ease of programming. Ill-conditioning was overcome by keeping enough digits to the right of the decimal point at each step of the computation. For example, when , we adopted 200 digits and successfully handled the large condition number . In fact, 110 digits are already enough and lead to the same result. Even with 200 digits, it took only two seconds to solve the linear system. All of this was achieved with the arbitrary-precision arithmetic of Mathematica.

Although leads to satisfactory results, a comparison with other choices of c is also needed. Table 3 offers such a comparison. We fix and for all choices of c.

In Table 3, it is clear that our theoretically predicted optimal value coincides exactly with the experimentally optimal value. Moreover, as depicted by the MN curves in Figures 12, 13 and 14, the approximation errors grow very slowly for . This is fully reflected by our experimental results.

The three-dimensional experiment is more challenging and is expected to be much more time-consuming. Fortunately, it takes only five minutes to solve the linear system, as we shall see in the next subsection.

3.3. 3D Model

The test function is now

on

. It lies in the Sobolev space. The Poisson equation is

where is just the restriction of to the six faces of the domain cube. In other words, the Dirichlet condition is adopted.

The approximate solution will be of the form , where and are constants to be determined. The sample points are still scattered in the interior of the domain cube and evenly spaced on the boundary. We seek the value of c for which the approximation error is as small as possible.

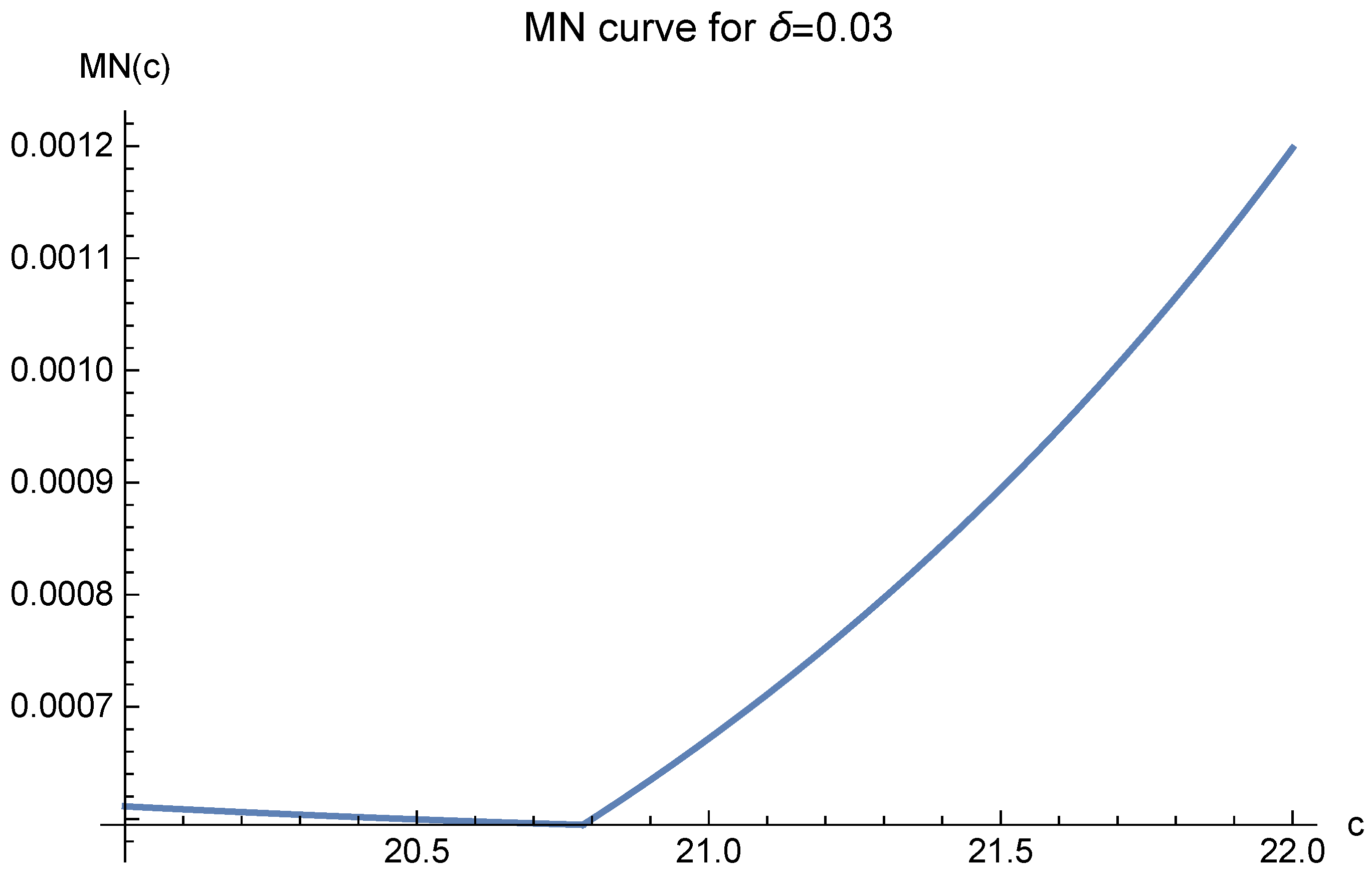

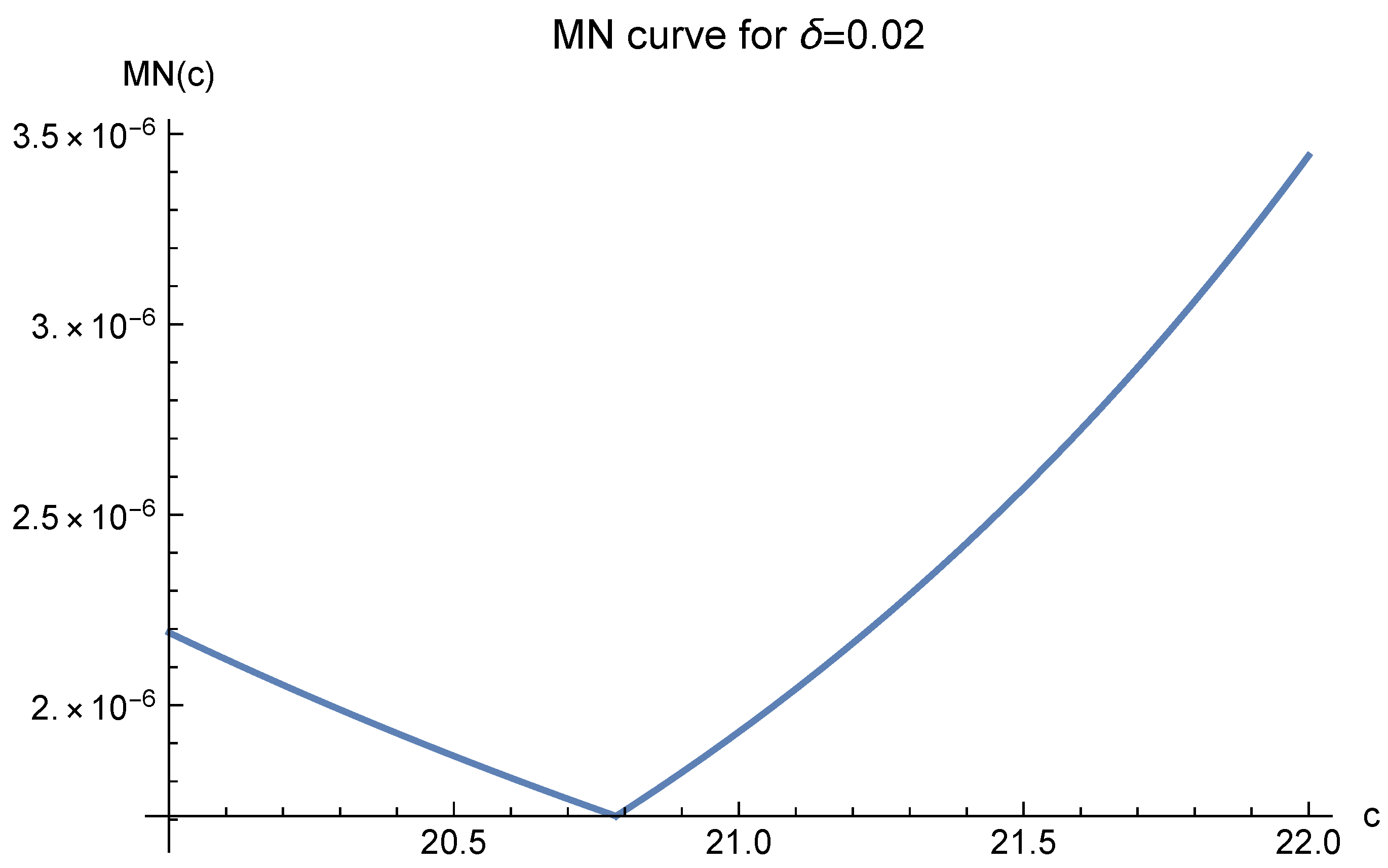

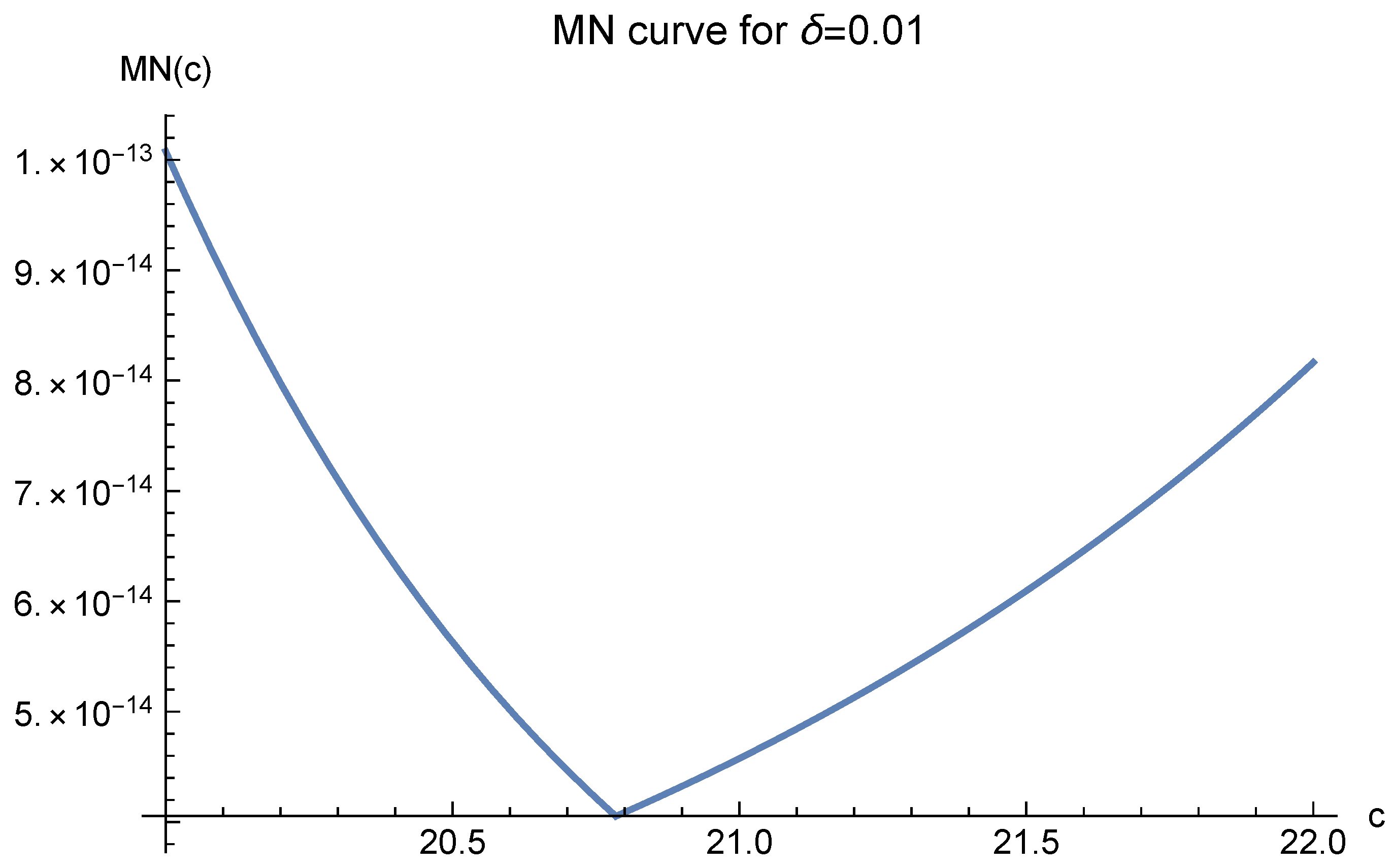

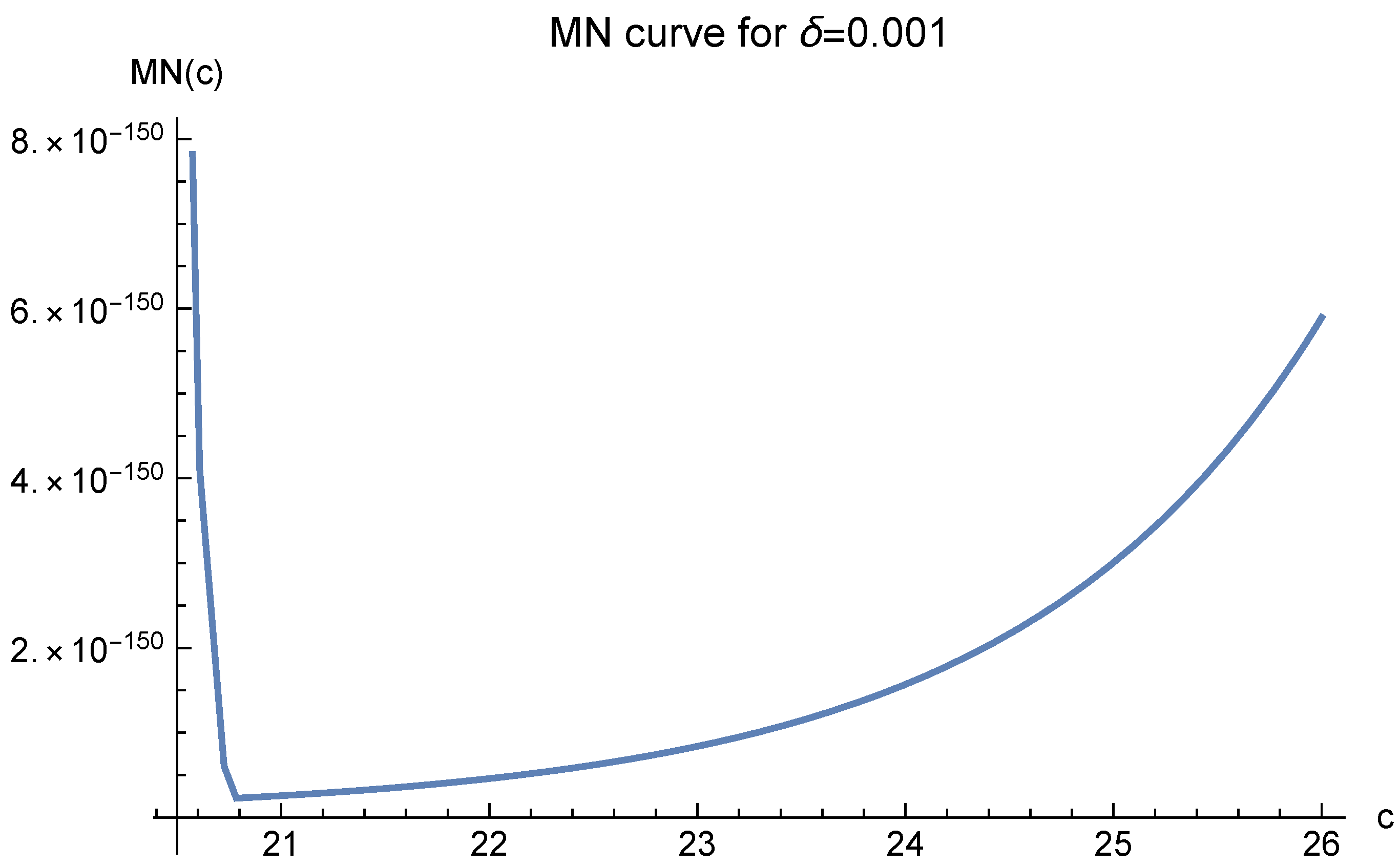

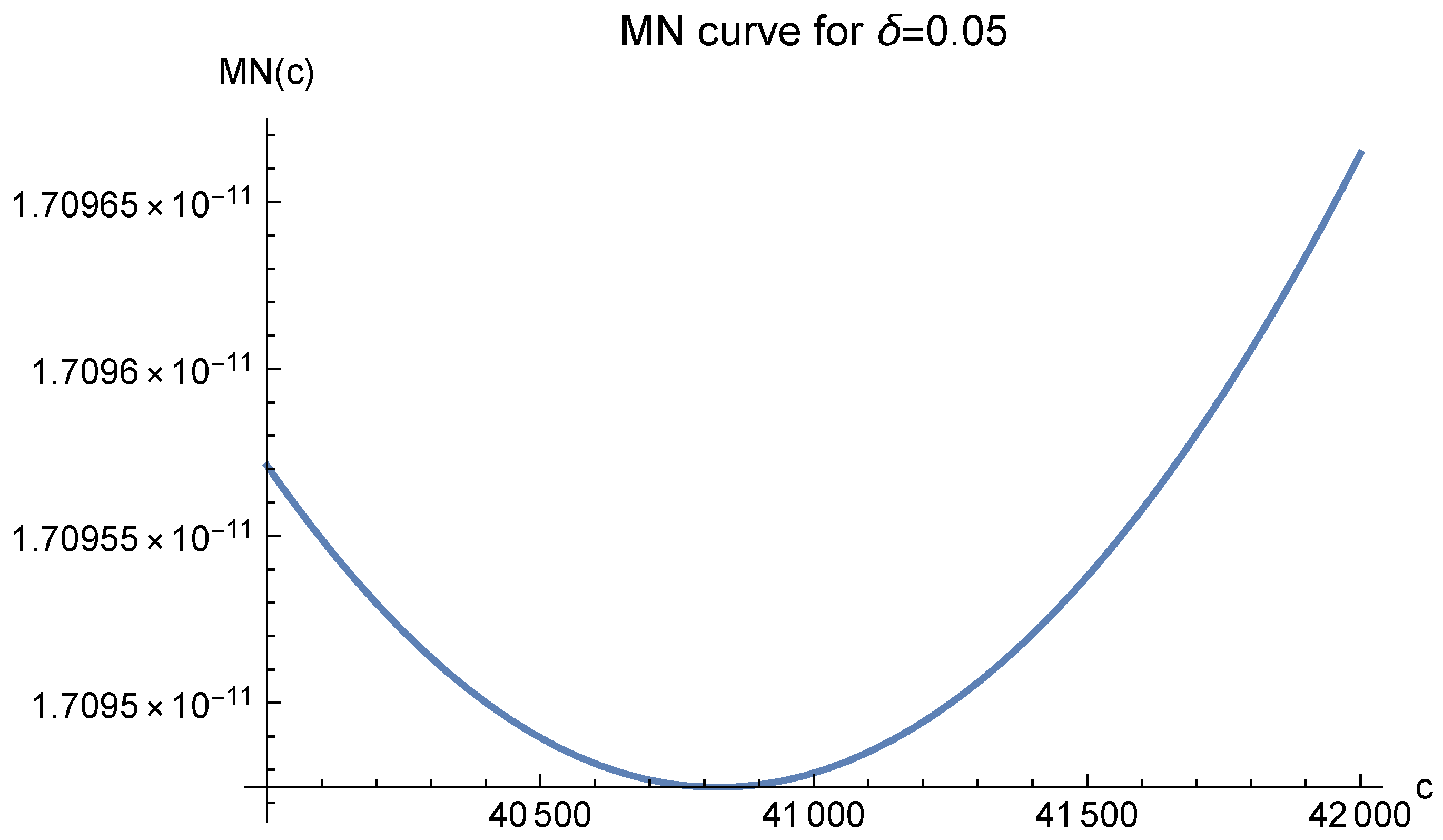

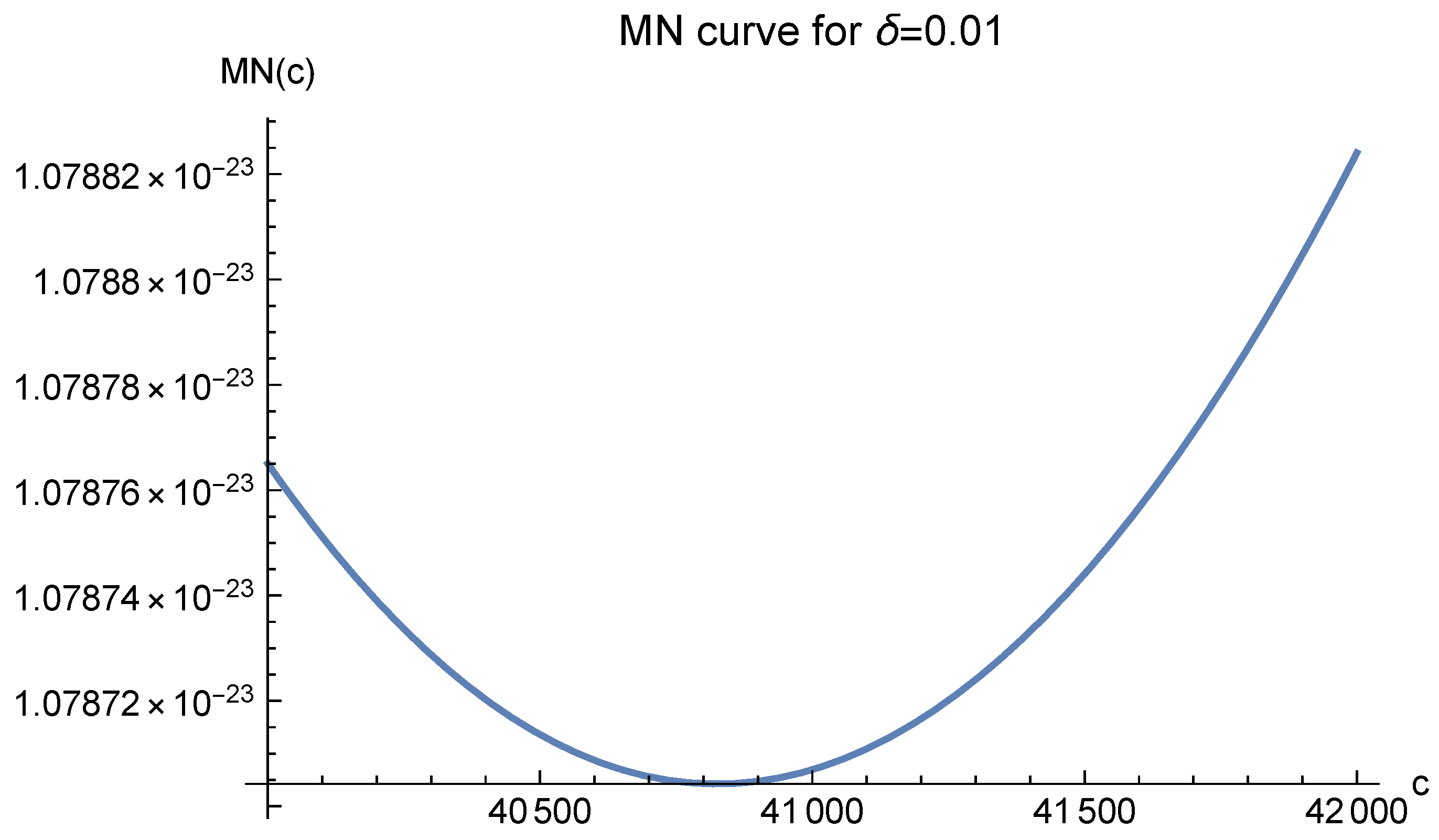

To find a suitable c, one has to analyze the MN curves first. Again, Case 3 of applies. However, for 3D MN functions, different ’s indicate different optimal values of c. For , the optimal values of c are shown in Figures 15, 16, 17, 18 and 19. These figures show that as long as is small enough, the optimal value of c is 20.7846, which is just in the definition of .

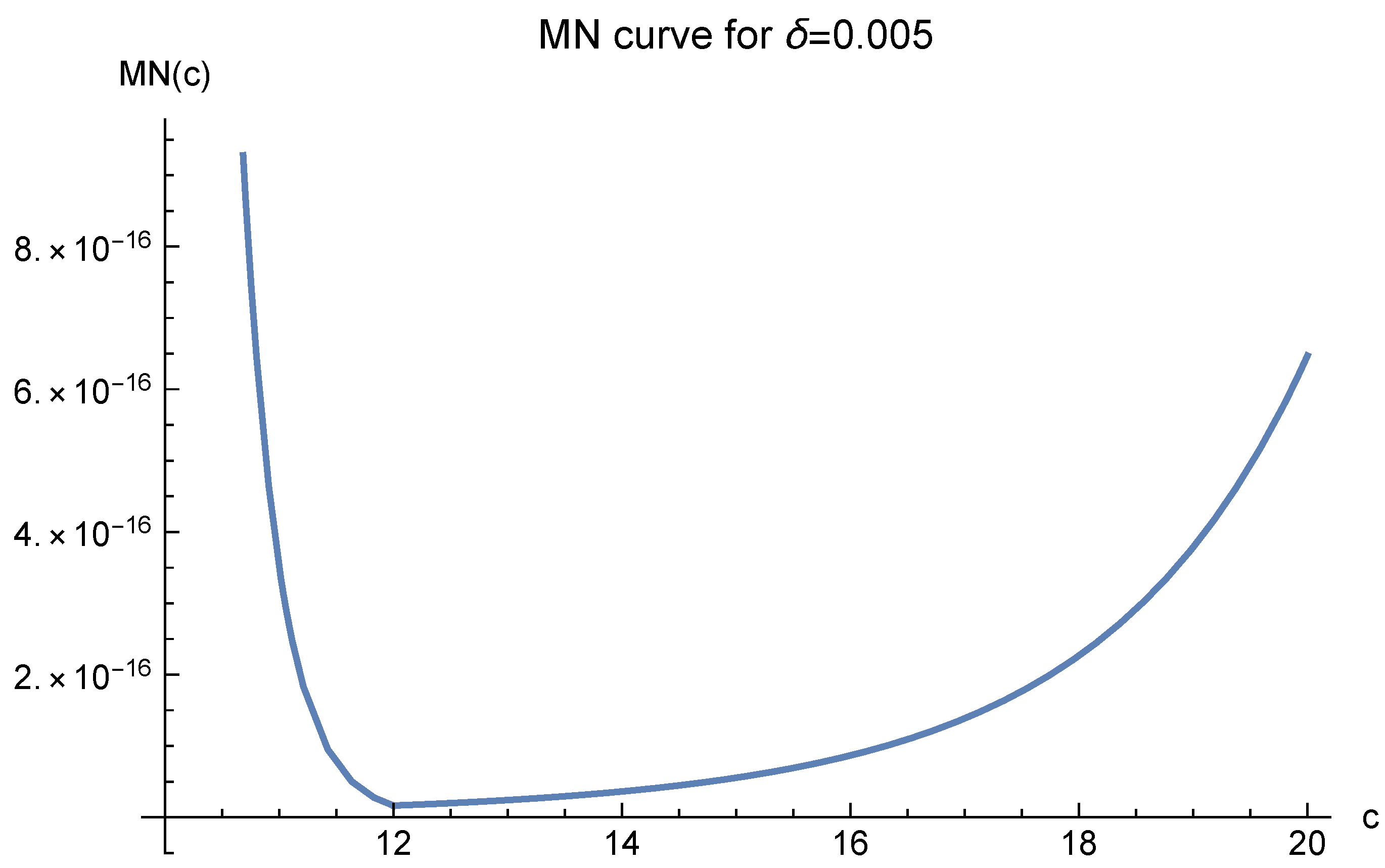

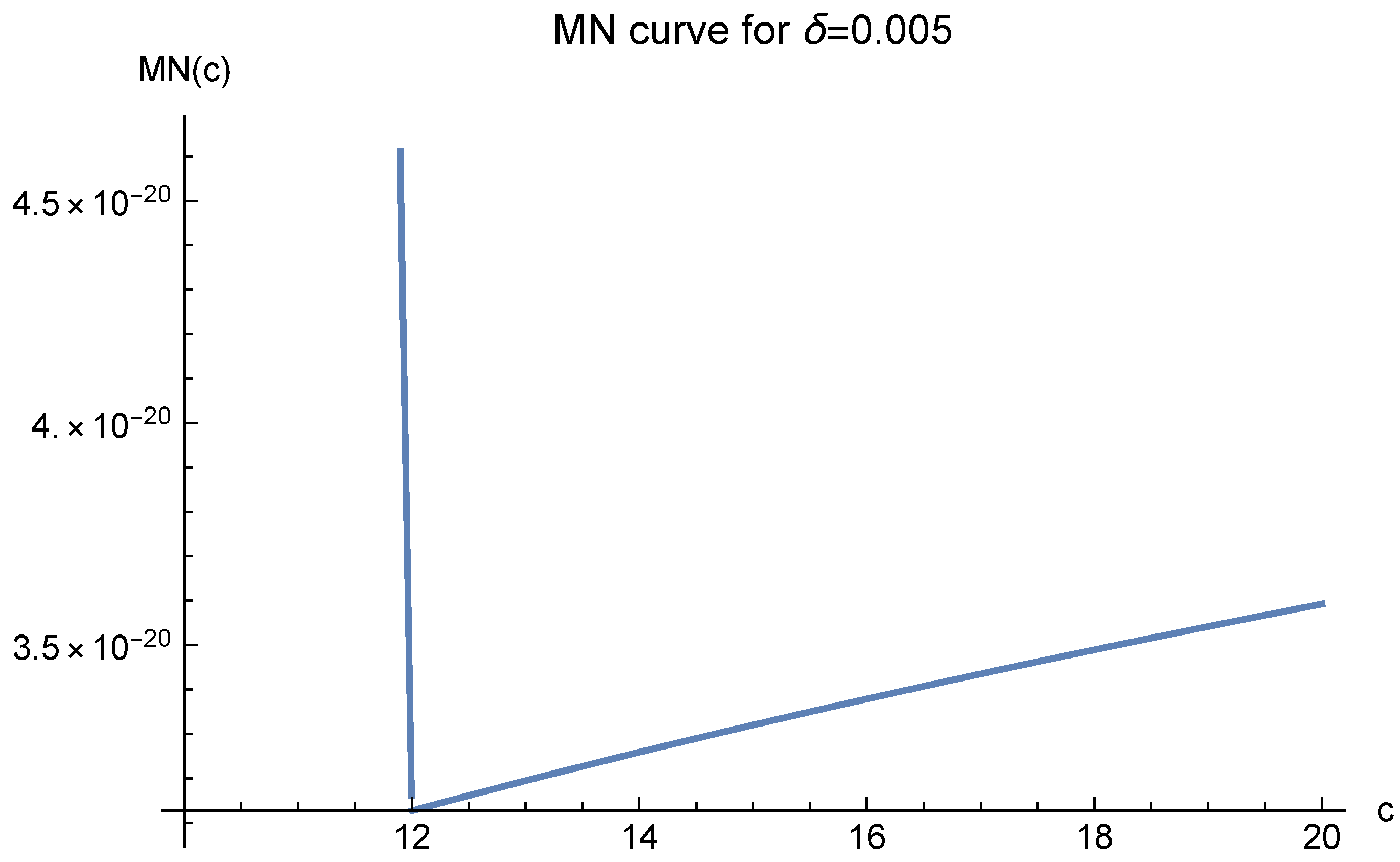

Now, we test . The MN curves are presented in Figures 20, 21 and 22. They demonstrate that one should choose .

The three different optimal values of c are all logically correct. However, when is very small, in (4) will be extremely large, making (4) meaningless. Hence, we should choose according to Figures 15, 16, 17, 18 and 19, where is larger and the values are reasonably small.

For , we compare different numbers of data points. The results are presented in Table 4.

Figure 15. MN curve for where and .

Figure 16. MN curve for where and .

Figure 17. MN curve for where and .

Figure 18. MN curve for where and .

Figure 19. MN curve for where and .

Figure 20. MN curve for where and .

Figure 21. MN curve for where and .

Figure 22. MN curve for where and .

Figure 23. MN curve for where and .

Figure 24. MN curve for where and .

Figure 25. MN curve for where and .

Table 4. 3D experiment.

| Data points (total) | 616 | 666 | 766 | 966 | 1366 |
|---|---|---|---|---|---|
| Interior points | 50 | 100 | 200 | 400 | 800 |
| Boundary points | 566 | 566 | 566 | 566 | 566 |
| Test points | 1200 | 1200 | 1200 | 1200 | 1800 |
| RMS | | | | | |
| COND | | | | | |

The computation is very efficient. Even though we adopted 200 effective digits for each step of the calculations, solving the linear system, the most time-consuming step, took only two seconds for and five minutes for . In fact, keeping only 100 effective digits would have produced the same RMS and COND values. We stopped adding more data points at because the RMS is already good enough.

The comparison among different values of c is presented in Table 5. We fixed , and . To cope with ill-conditioning, enough effective digits were adopted for the calculations. For , we used 50 digits, and for , 140 digits were used. Solving the linear system, the most time-consuming step, always took less than six minutes of computer time.

Note that Figures 15, 16, 17, 18 and 19 show that if is small enough, or, equivalently, if the number of data points is large enough, the values of MN(c) grow very slowly for . Numerical investigations also demonstrate this. Figures 20, 21, 22, 23, 24 and 25 even tend to move the optimal value of c to the right. All these observations are supported by the RMS values in Table 5. Although for , the choice of c does not influence the approximation error much, one still has to choose because its corresponding condition number is smaller. In other words, the theoretically predicted optimal value of c coincides with the experimentally optimal one.