Abstract

This paper studies distributed optimization problems over a class of undirected networks. The objective function consists of a smooth convex function and a non-smooth convex function, and each agent in the network optimizes the sum of these two functions. Based on the operator splitting method, we use the proximal operator to handle the non-smooth term and design a distributed algorithm that allows uncoordinated step-sizes. By introducing a random-block coordinate mechanism, we further develop an asynchronous iterative version of the synchronous algorithm. Finally, the convergence of both algorithms is proven, and their effectiveness is verified through numerical simulations.

Keywords: distributed optimization; non-smooth convex function; proximal operator; random-block coordinate

MSC: 68W15

1. Introduction

In this paper, we study a class of distributed multi-agent problems on networks. Each agent $i$ in the network system has the following private objective function to be minimized:

$$\min_{x} \; f_i(x) + g_i(x), \tag{1}$$

where $x$ is the decision variable, $f_i$ is a convex function with a Lipschitz-continuous gradient, and $g_i$ is a non-smooth convex function. Examples of $f_i$ include quadratic functions and logistic functions [1], and examples of $g_i$ include the elastic-net norm, the L1-norm, and indicator functions [2].
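To make the role of the non-smooth term concrete, the following minimal sketch evaluates the proximal operators of two of the examples named above, the L1-norm and the indicator function of a box. The function names `prox_l1` and `prox_box_indicator` and the test vector are illustrative assumptions, not notation from the paper.

```python
import numpy as np

def prox_l1(v, step):
    """Proximal operator of step * ||x||_1 at v (soft-thresholding)."""
    return np.sign(v) * np.maximum(np.abs(v) - step, 0.0)

def prox_box_indicator(v, lower, upper):
    """Proximal operator of the indicator of the box [lower, upper].

    For an indicator function the prox is the Euclidean projection,
    so it does not depend on the step-size.
    """
    return np.clip(v, lower, upper)

# Hypothetical input vector, chosen only for illustration.
v = np.array([-3.0, -0.2, 0.0, 0.5, 2.0])
shrunk = prox_l1(v, 1.0)                       # entries within [-1, 1] are set to zero
projected = prox_box_indicator(v, -1.0, 1.0)   # entries outside the box are clipped
```

Both operators have closed forms, which is what makes a proximal treatment of such terms cheap at each agent.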

For the network system, each agent is only allowed to interact with its neighbors, and there is no central agent to process data. The problem can then be written as

$$\min_{\{x_i\}} \; \sum_{i \in \mathcal{V}} \big( f_i(x_i) + g_i(x_i) \big) \quad \text{s.t.} \quad x_i = x_j, \; (i,j) \in \mathcal{E}, \tag{2}$$

where $x_i$ is the local estimate of $x$ held by agent $i$, $\mathcal{V}$ is the set of agents, and $\mathcal{E}$ represents the collection of edges in the network. This distributed computing architecture covers a variety of areas, including distributed information processing and decision making, networked multi-vehicle coordination, and distributed estimation. Typical applications include power systems control [3], model predictive control [4], statistical inference and learning [5], and distributed average consensus [6].

In recent years, most of the literature has focused on the case in which the objective function contains only a single smooth convex function. Many centralized algorithms with excellent performance, such as proximal gradient descent, the sub-gradient algorithm, and Newton's method, have been extended to distributed forms to solve these problems. The sub-gradient algorithm is the most commonly used method. In [7], Nedić and Ozdaglar apply this method to the distributed optimization problem on time-varying networks and propose the distributed sub-gradient method (DGD). Shi et al. [8] propose an exact first-order algorithm (EXTRA), which makes use of the error between adjacent iterations of the DGD algorithm, and prove its linear convergence. Then, [9] designs a distributed first-order algorithm by combining DGD with the gradient tracking method. To further accelerate convergence, distributed ADMM algorithms are proposed in [10,11,12,13]. However, these algorithms can only handle a single objective function and do not address the composite case.

For the composite distributed optimization problem (2) with a non-smooth term, many research results have emerged. The authors of [14] design a proximal gradient method that incorporates Nesterov acceleration. However, each iteration consumes more computing resources because additional inner iteration steps are required. For undirected networks, Shi et al. [15] design a proximal gradient exact first-order algorithm (PG-EXTRA) for composite optimization problems based on the classical first-order distributed optimization algorithm EXTRA [8]. The algorithm converges exactly to the optimal solution with a fixed step-size, unlike most algorithms, which must use diminishing step-sizes. The authors of [16] propose a communication-efficient random-walk algorithm named Walkman by using a Markov chain. By analyzing the relationship between optimization accuracy and network connectivity, they obtain an explicit expression for the communication complexity of the method. Further, considering that in real scenarios most agents transmit data in a directed way, ref. [17] uses the push-sum mechanism to eliminate the information imbalance caused by the directed network, proposes the PG-ExtraPush algorithm on the basis of [8], and maintains the same convergence properties.

More recently, operator splitting has become a mainstream technique for this kind of complex optimization problem. Since Combettes and Pesquet designed a fully split algorithm, which applied operator splitting to composite optimization for the first time, refs. [18,19,20,21] and others have successively proposed various algorithms for composite optimization. However, operator splitting is rarely applied to distributed composite optimization. Motivated by this, this paper aims to design a distributed algorithm with excellent performance by using the operator splitting method together with monotone operator theory.

Contributions: Compared with most existing distributed optimization algorithms, the main contributions of this paper are summarized as follows:

1. To solve problem (2), this paper develops a novel, fully distributed algorithm based on the operator splitting method, which is more flexible and efficient than its relatively centralized counterparts [18,19,20,21].

2. Based on a class of randomized block-coordinate methods, an asynchronous iterative version of the proposed algorithm is also derived, wherein only a subset of independently activated agents participates in the updates. Note that such an activation scheme is more flexible than single coordinate activation [22].

3. Both proposed algorithms allow not only local information interaction among neighboring agents but also the use of uncoordinated step-sizes, without requiring the coordinated or dynamic step-sizes considered in [7,8,9,14,23]. Additionally, the convergence of both algorithms is ensured under the same mild assumptions. In particular, the use of local Lipschitz constants avoids conservative step-size selections, unlike the global constant assumed in [8,14,15,17].

Organization: The contents of the remaining sections of the paper are as follows. Section 2 provides the symbols, lemmas, definitions, and assumptions that will be used in the paper. We give the specific process of algorithm derivation in Section 3. In Section 4, we show the convergence analysis of the proposed algorithms. Section 5 presents the simulation experiment to verify the algorithms. Finally, Section 6 gives the conclusion of the paper.

2. Preliminaries

In this section, we give the notation, definitions, and lemmas that will be used in the paper. Then, we give two important assumptions.

We first introduce some basic graph theory. Let $\mathcal{G} = (\mathcal{V}, \mathcal{E})$ represent an undirected network composed of n agents, where $\mathcal{V}$ denotes the set of agents and $\mathcal{E}$ denotes the set of edges. The neighborhood of the i-th agent is denoted by $\mathcal{N}_i$. The network $\mathcal{G}$ is connected when there is at least one path between any two agents.

Let denote the n-dimensional Euclidean space and denote the Euclidean norm of a vector . The notation is the spectral radius of a matrix, and represents the set of positive integers. Then let denote the collection of all proper lower semi-continuous convex functions from to . When denotes a positive definite matrix, using as the diagonal element can form a positive definite diagonal matrix . Let denote the interior of a convex subset and denote the effective domain of f. The subdifferential of function is expressed as The proximity operator of a function related to is defined by The convex conjugate function of f is written as .
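For reference, the three objects named in the preceding paragraph have the following standard textbook forms. This is a sketch under assumed notation: the symbols $f$, $M$, $x$, $y$, $u$ and the convention that the proximity operator is weighted by $M$ itself (rather than by its inverse) are assumptions, and the paper's own definitions may differ in such details.

```latex
\begin{align*}
\partial f(x) &= \bigl\{\, u \;:\; f(y) \ge f(x) + \langle u,\, y - x \rangle \ \text{ for all } y \,\bigr\}, \\
\operatorname{prox}_{f}^{M}(x) &= \arg\min_{y} \Bigl\{ f(y) + \tfrac{1}{2}\, \| y - x \|_{M}^{2} \Bigr\},
  \qquad \| z \|_{M}^{2} = \langle z,\, M z \rangle, \\
f^{*}(u) &= \sup_{x} \bigl\{ \langle u,\, x \rangle - f(x) \bigr\}.
\end{align*}
```

With $M$ equal to the identity, the second line reduces to the usual unweighted proximity operator.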

At the same time, we give the following lemmas and assumptions.

Lemma 1

([24]). Let , then for vectors , the following relation holds:

Lemma 2

([25]). Let , then both and satisfy a firmly nonexpansive relationship.
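As a numerical sanity check of the firm nonexpansiveness stated in Lemma 2, the sketch below tests the inequality $\|Tx - Ty\|^2 \le \langle Tx - Ty, x - y \rangle$ on random pairs of points. It assumes, for illustration only, that the function is the L1-norm, so that the proximal operator is soft-thresholding and the second operator is the residual (identity minus prox); the helper names are hypothetical.

```python
import numpy as np

def prox_l1(v, step=1.0):
    """Soft-thresholding, the proximal operator of step * ||x||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - step, 0.0)

def residual_l1(v, step=1.0):
    """The complementary operator I - prox."""
    return v - prox_l1(v, step)

def firm_gap(T, x, y):
    """<Tx - Ty, x - y> - ||Tx - Ty||^2; non-negative when the firm inequality holds."""
    d = T(x) - T(y)
    return float(d @ (x - y) - d @ d)

rng = np.random.default_rng(0)
pairs = [(rng.normal(scale=3.0, size=5), rng.normal(scale=3.0, size=5))
         for _ in range(1000)]

# Smallest observed gap over all sampled pairs, for each operator.
min_gap_prox = min(firm_gap(prox_l1, x, y) for x, y in pairs)
min_gap_residual = min(firm_gap(residual_l1, x, y) for x, y in pairs)
```

A random test of this kind cannot prove the lemma, but a clearly negative gap would signal an implementation error in a proximal operator.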

Lemma 3

([19]). For a fixed point iteration , will converge to the fixed point of T when it satisfies the following conditions:

- 1.

- T is continuous,

- 2.

- is non-increasing,

- 3.

Definition 1.

For all $x, y$, if an operator T satisfies $\|Tx - Ty\| \le \|x - y\|$, then T is a nonexpansive operator. Further, if T satisfies $\|Tx - Ty\|^2 \le \langle Tx - Ty, x - y \rangle$, then T is a firmly nonexpansive operator.

Definition 2.

If there exists a constant $\mu > 0$ such that $\langle Tx - Ty, x - y \rangle \ge \mu \|x - y\|^2$ for all $x, y$, then the operator T is $\mu$-strongly monotone.

The following assumptions will also be used.

Assumption 1.

The graph $\mathcal{G}$ is undirected and connected.
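The connectivity requirement is what makes edge-wise agreement equivalent to network-wide agreement. The sketch below illustrates this with a signed incidence matrix: the solutions of the edge constraints form a one-dimensional subspace (all agents equal) exactly when the graph is connected. The helper names and the two small graphs are assumptions made for illustration, and scalar agent states are used for simplicity.

```python
import numpy as np

def incidence_matrix(num_agents, edges):
    """One row per edge (i, j), encoding the constraint x_i - x_j = 0."""
    B = np.zeros((len(edges), num_agents))
    for row, (i, j) in enumerate(edges):
        B[row, i] = 1.0
        B[row, j] = -1.0
    return B

def consensus_dimension(num_agents, edges):
    """Dimension of the set {x : x_i = x_j for every edge (i, j)}."""
    B = incidence_matrix(num_agents, edges)
    return num_agents - int(np.linalg.matrix_rank(B))

# Hypothetical 4-agent graphs: a path (connected) and two separate pairs.
connected_edges = [(0, 1), (1, 2), (2, 3)]
disconnected_edges = [(0, 1), (2, 3)]

dim_connected = consensus_dimension(4, connected_edges)        # one free value shared by all agents
dim_disconnected = consensus_dimension(4, disconnected_edges)  # each component agrees separately
```

On the disconnected graph the edge constraints leave two independent values, so the agents would not be forced to a common solution.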

Assumption 2.

The following three points are satisfied:

- 1.

- is a smooth convex function, let be Lipschitz constant, then satisfies

- 2.

- is a convex non-smooth function,

- 3.

3. Algorithm Development

In this section, we design and derive the synchronous and asynchronous algorithms.

We first apply an equivalent transformation to problem (2) to facilitate the subsequent algorithm design. The constraint in (2) can be written in the edge-based form

where for , and otherwise. Then, define the following linear operator:

with the compact variable . We stack all to get the following operator:

with the dimension , where is the number of edges of the network . Considering the set

Then, constraint (4) can be further reformulated in the following form:

Based on the above analysis, problem (2) can be transformed into

where represents the indicator function, i.e.,

Then, let

and ( denotes the Cartesian product). Hence, the compact form of problem (8) can be expressed by

3.1. Synchronous Algorithm 1

According to the fixed point theory, we design the distributed optimization algorithm of problem (2) from (9). We define the step-size matrices , , and , where we let , and then introduce the following operators:

where and with are the fixed points of T. In particular, and are maintained by i and j, respectively. Considering the update variables , , and with the edge-based variable , we give the Picard sequence of T and obtain the following update rules:

Next, we split (15a)–(15c) in a distributed manner. It follows from (5) and (6) that the i-th component of is . Note that (15a) can be decomposed into

For (15b), multiply both sides of the equality by . As done in (16), we also split (15b) and (15c) and use the result to get the semi-distributed form:

Note that (17b) is not fully distributed due to the structure . By using (4) and (5), we can derive that the projection of vectors to is expressed as

which contributes to the local update of (17b), i.e.,

Therefore, according to (17a), (17c), and the update of , we can summarize the synchronous distributed algorithm as follows:

Remark 1.

Notice that Algorithm 1 is completely distributed without involving any global parameters. For example, each agent individually maintains the private primal variable , auxiliary variable , and edge-based variables . For each edge in the network, as an auxiliary profile contains two components, i.e., and , which are respectively kept by i and j. Meanwhile, the information exchange is locally conducted; that is, agent i shares its updated data and with its all neighbors . On the other hand, the proposed algorithm takes uncoordinated constant positive step-sizes, , essentially distinguished from the global and dynamic ones in [7,8,9,14,23]. It is also worth noting that the edge-based step-size , held by agents i and j linked by the edge , can be seen as inherent parameters of the communication network, revealing the quality of the communication.

| Algorithm 1 Distributed algorithm based on proximal operators |

| Input: For all agents , , and , where . And select proper positive step-sizes or parameters, and . For : 1. 2. 3. 4. Send , to j for , 5. Until the approaches zero. End Output: The primal variable as the optimal solution . |

3.2. Asynchronous Algorithm 2

Here, we extend the synchronous Algorithm 1 to the asynchronous iterative version based on the random-block coordinate mechanism in [2]. Combining with the principle of this mechanism, we define the diagonal matrix (where denotes the number of edges of the graph ) diagonal elements of 0 or 1 to represent the coordinate matrix, and then divide the vector into m blocks. At the same time, we define the activation vector of -valued, where is a binary string with length m. When , it means that the agent i is activated at the k-th iteration; otherwise it is not activated.

In order to describe the activation state of different coordinate blocks and ensure random activation, we give the following assumption.

Assumption 3.

The following two points are satisfied:

- 1.

- The sum of satisfies ,

- 2.

- is a ϕ-valued vector that is independent and identically distributed, and its probability is

Then, based on the given assumption, we can develop the asynchronous algorithm as follows:

It can be seen that Algorithm 2 allows each agent to wake up with an independent probability, which means that a subset of randomly activated agents participates in the updates while inactive ones keep their previous states. Such a scheme is more flexible than the single waking-up scheme [22] or schemes in which the activated block coordinates are uniformly selected [26]. In addition, the probability is completely independent of the others and need not satisfy strict conditions, such as .
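The activation mechanism can be sketched generically as follows: at each iteration every block wakes up independently with its own probability, active blocks take the synchronous fixed-point update, and inactive blocks keep their state. This is a minimal illustration and not the paper's Algorithm 2. The operator used here (a gradient step on a small quadratic), the matrix `Q`, the vector `b`, and the probabilities are all assumptions chosen so that the fixed point is known.

```python
import numpy as np

def fixed_point_map(w, Q, b, step):
    """Stand-in synchronous operator T: a gradient step on 0.5*w'Qw - b'w."""
    return w - step * (Q @ w - b)

def random_block_iteration(Q, b, probs, step, num_iters, seed=0):
    """Iterate w <- w + E_k (T(w) - w) with independently activated coordinates."""
    rng = np.random.default_rng(seed)
    w = np.zeros_like(b)
    for _ in range(num_iters):
        # Each block (here one coordinate per agent) wakes up independently.
        active = rng.random(len(probs)) < probs
        # Active blocks take the synchronous update; inactive ones keep their state.
        w = np.where(active, fixed_point_map(w, Q, b, step), w)
    return w

# Hypothetical data; step = 0.25 is below 1 / lambda_max(Q) for this Q.
Q = np.array([[2.0, 1.0, 0.0],
              [1.0, 2.0, 1.0],
              [0.0, 1.0, 2.0]])
b = np.array([1.0, 0.0, -1.0])
probs = np.array([0.3, 0.6, 0.9])   # uncoordinated; they need not sum to one

w_async = random_block_iteration(Q, b, probs, step=0.25, num_iters=2000)
w_exact = np.linalg.solve(Q, b)     # the fixed point of T
error = float(np.linalg.norm(w_async - w_exact))
```

The probabilities above deliberately sum to 1.8, reflecting that each agent's activation probability is chosen on its own.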

| Algorithm 2 Asynchronous distributed version |

| Input: For all agents , , and , where . And select proper positive step-sizes or parameters, and . For

Output: The primal variable as the optimal solution . |

In order to facilitate the subsequent derivation of convergence, we need to give a compact form of Algorithm 2. By making , we get

where and operator T can be seen in Equation (11).

4. Convergence Analysis

The convergence proof of the algorithms is provided in this section. The following assumption is the condition to be met for the convergence of the algorithms.

Assumption 4.

Recall the local Lipschitz constant in Assumption 2. It is assumed that the step-sizes satisfy the following conditions:

Lemma 4.

Proof.

Use the first-order optimal condition of (9) to obtain , where is the optimal solution. According to the definition of matrix step-sizes, we further obtain

Use Lemma 1 and let to get

Lemma 5.

Let Assumptions 1 and 2 hold, then there are

Proof.

It is further concluded that

Here we introduce an equality. For a positive definite matrix and , we have

Combining the above two results, we derive

Using subdifferential properties to obtain

and equivalent

Moreover, there is

Therefore, we deduce

Lemma 6.

Let Assumptions 1 and 2 hold. Set . For matrix and , there is

Proof.

Further, we have

Then we deal with some terms in the above inequality. For (20) combined with Lemma 1 can deduce

Further using subdifferential properties, we have

Meanwhile, because is -strongly monotone, there is

Lemma 7.

Under Assumptions 1–4, is non-increasing and .

Proof.

If Assumption 4 holds, we can deduce that is non-increasing.

When N tends to infinity, we can get

This means

Meanwhile, if Assumption 4 holds, is symmetric positive definite. Therefore, we can get

Next, we give the following theorem to prove the convergence of Algorithm 1.

Theorem 1.

Under Assumptions 1–4, and converge to the optimal solution of (2) and the fixed points of T, respectively.

Proof.

Because and are firmly nonexpansive, T is continuous. Moreover, and the fact that the sequence is non-increasing are obtained from Lemma 7. Based on Lemma 3, the sequence converges to a fixed point of T. According to Lemma 4, it can be concluded that converges to a solution to (2). □

At the same time, we also give the following theorem to prove the convergence of Algorithm 2.

Theorem 2.

Under Assumptions 1–4, relative to the solution set , the sequence satisfies stochastic Fejér monotonicity [27]:

Further, the sequence converges almost surely to some .

Proof.

Before the proof, we give some definitions. Here denotes the probability matrix, and is a conditional expectation, abbreviated as , where represents the filtration generated by . We use to map the components of to .

Based on the definition of , we have

Using the idempotent property of , we have

Then according to Lemma 6 and (23), we get

Therefore, if Assumption 4 holds, we can obtain the convergence of (37) according to [28] Th. 3, [27] Prop. 2.3, and the Robbins–Siegmund lemma in [29]. □

5. Numerical Experiments

5.1. Case Study I: Performance Examination

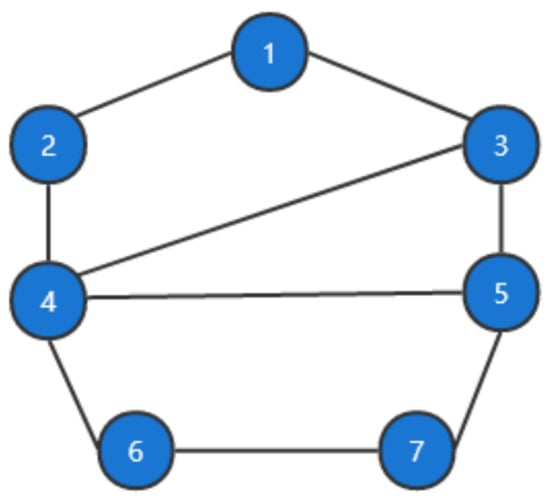

In this section, we demonstrate the effectiveness of the algorithms by solving a class of quadratic programming problems on undirected networks. The network topology is shown in Figure 1.

Figure 1. Graph topology.

The quadratic programming problem model is as follows:

where is the decision variable of each agent. Matrix in the objective function is a diagonal matrix, and its elements are randomly selected in [−8, 8], and the elements of vector are randomly selected in [−10, −5]. For the box constraint of , the range of is [−10, −5], and the range of is [5, 10].
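To show how a box constraint enters through a proximal step, the sketch below solves a small single-agent box-constrained quadratic program by proximal gradient descent, where the prox of the box indicator is a clip. It is a centralized toy example and not the paper's experiment: the objective $\tfrac{1}{2} x^\top \operatorname{diag}(a)\, x + b^\top x$, the positive diagonal `a`, the vector `b`, and the bounds are assumptions chosen so that a closed-form solution is available for comparison.

```python
import numpy as np

def box_qp_proximal_gradient(a, b, lower, upper, num_iters=200):
    """Minimize 0.5 * sum(a * x**2) + b @ x subject to lower <= x <= upper."""
    step = 1.0 / np.max(a)          # 1 / Lipschitz constant of the gradient
    x = np.zeros_like(b)
    for _ in range(num_iters):
        grad = a * x + b            # gradient of the smooth quadratic part
        # Prox of the box indicator is the projection onto the box.
        x = np.clip(x - step * grad, lower, upper)
    return x

# Hypothetical problem data (positive diagonal so the problem is convex).
a = np.array([1.0, 2.0, 4.0])
b = np.array([-6.0, 30.0, 2.0])
lower, upper = -6.0, 7.0

x_pg = box_qp_proximal_gradient(a, b, lower, upper)
# For a diagonal quadratic the problem separates, so clipping the
# unconstrained minimizer -b / a gives the exact solution.
x_closed = np.clip(-b / a, lower, upper)
```

The second coordinate ends at the lower bound, which shows the projection becoming active rather than the gradient vanishing.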

To solve problem (38), we need to convert the problem into the form of problem (8). Defining the set and defining the indicator function , then we can get the following problem:

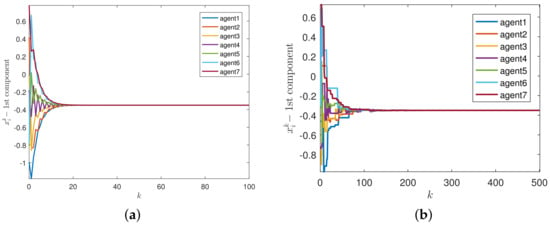

Figure 2a shows that the agents finally converge to a consistent state under synchronous Algorithm 1. In Figure 2b, we use asynchronous Algorithm 2 with activation probability to show the states of the agents under the same parameter conditions.

Figure 2. Convergence results of the two algorithms. (a) Algorithm 1. (b) Algorithm 2.

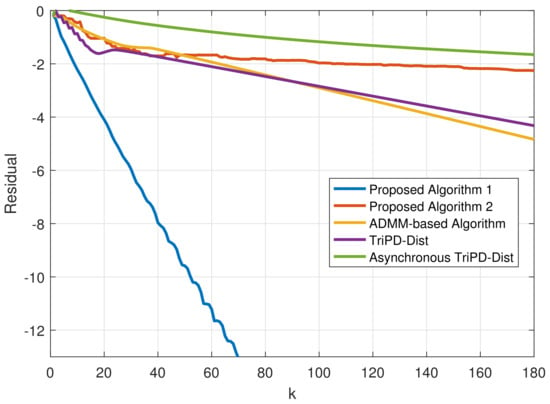

In Figure 3, the performance of both proposed algorithms is compared with that of existing algorithms, i.e., an ADMM-based method [30], TriPD-Dist, and its asynchronous version [2]. It can be seen that Algorithm 1 outperforms the ADMM-based method and TriPD-Dist, and the proposed asynchronous algorithm (Algorithm 2) also converges faster than asynchronous TriPD-Dist. The comparison is mainly made by evaluating the logarithmic values of .

Figure 3. Performance comparison.

5.2. Case Study II: First-Order Dynamics System

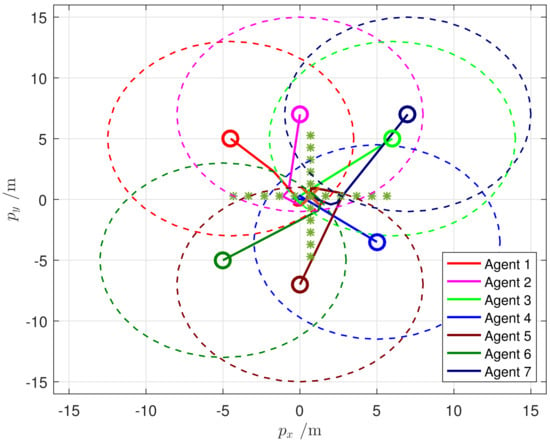

In this subsection, we apply the proposed synchronous algorithm to solve a first-order dynamics system problem in a 2-D space [31], where each agent has its own cost function , with the action response , and the private reference positions and . The goal of the considered problem is that all agents cooperatively find the optimal position under the local constraints , where is the initial position of agent . Let , then the distributed problem can be formulated as

where is the local estimation action for . In light of (1), we can set . The step-sizes are selected as in Case Study I.

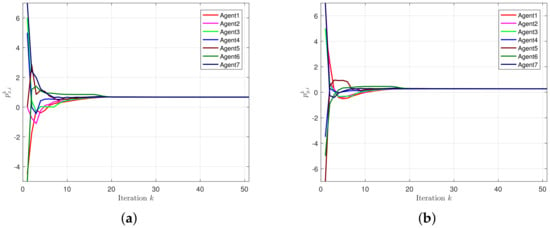

The results are described in Figure 4 and Figure 5. To be specific, Figure 4a,b reflect the trajectories of . Figure 5 depicts the motions of the entire system over iterations, where the optimal position is marked by a cross at the intersection of two star lines, the circles with a dotted line are the corresponding motion areas of agents, and the solid ones are the initial positions.

Figure 4. Evaluations of positions. (a) Evaluations of . (b) Evaluations of .

Figure 5. Motions of all agents in the 2-D space.

6. Conclusions

This paper studies a class of distributed composite optimization problems with non-smooth convex functions. To solve this kind of problem, we propose two completely distributed algorithms, one synchronous and one asynchronous, and verify them both theoretically and through simulation. Some aspects are still worth improving. For example, the network structure could be extended from undirected graphs to directed graphs, and the algorithms could be combined with more practical application scenarios, such as resource allocation.

Author Contributions

Y.S.: Data curation, Formal analysis, Investigation, Methodology, Resources, Software, Supervision, Writing—original draft. L.R.: Data curation, Formal analysis, Software. J.T.: Formal analysis, Software, Writing—original draft. X.W.: Methodology, Software, Formal analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, Z.; Shi, W.; Yan, M. A decentralized proximal-gradient method with network independent step-sizes and separated convergence rates. IEEE Trans. Signal Process. 2019, 67, 4494–4506.

- Latafat, P.; Freris, N.M.; Patrinos, P. A new randomized block-coordinate primal-dual proximal algorithm for distributed optimization. IEEE Trans. Autom. Control 2019, 64, 4050–4065.

- Bai, L.; Ye, M.; Sun, C.; Hu, G. Distributed economic dispatch control via saddle point dynamics and consensus algorithms. IEEE Trans. Control Syst. Technol. 2019, 27, 898–905.

- Jin, B.; Li, H.; Yan, W.; Cao, M. Distributed model predictive control and optimization for linear systems with global constraints and time-varying communication. IEEE Trans. Autom. Control 2020, 66, 3393–3400.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

- Olshevsky, A. Linear time average consensus and distributed optimization on fixed graphs. SIAM J. Control. Optim. 2017, 55, 3990–4014.

- Nedić, A.; Ozdaglar, A. Distributed subgradient methods for multi-agent optimization. IEEE Trans. Autom. Control 2009, 54, 48–61.

- Shi, W.; Ling, Q.; Wu, G.; Yin, W. EXTRA: An exact first-order algorithm for decentralized consensus optimization. SIAM J. Optim. 2015, 25, 944–966.

- Qu, G.; Li, N. Harnessing smoothness to accelerate distributed optimization. IEEE Trans. Control. Netw. Syst. 2018, 5, 1245–1260.

- Chang, T.H.; Hong, M.Y.; Wang, X.F. Multi-agent distributed optimization via inexact consensus ADMM. IEEE Trans. Signal Process. 2015, 63, 482–497.

- Iutzeler, F.; Bianchi, P.; Ciblat, P.; Hachem, W. Explicit convergence rate of a distributed alternating direction method of multipliers. IEEE Trans. Autom. Control 2016, 61, 892–904.

- Shi, W.; Ling, Q.; Yuan, K.; Wu, G.; Yin, W.T. On the linear convergence of the ADMM in decentralized consensus optimization. IEEE Trans. Signal Process. 2014, 62, 1750–1761.

- Wei, E.; Ozdaglar, A. Distributed alternating direction method of multipliers. In The 51st IEEE Conference on Decision and Control; IEEE: Maui, HI, USA, 2012; pp. 5445–5450.

- Chen, A.I.; Ozdaglar, A. A fast distributed proximal-gradient method. In Proceedings of the Annual Allerton Conference on Communication, Control, and Computing, Monticello, IL, USA, 1–5 October 2012; pp. 601–608.

- Shi, W.; Ling, Q.; Wu, G.; Yin, W. A proximal gradient algorithm for decentralized composite optimization. IEEE Trans. Signal Process. 2015, 63, 6013–6023.

- Mao, X.; Yuan, K.; Hu, Y.; Gu, Y.; Sayed, A.; Yin, W. Walkman: A communication-efficient random-walk algorithm for decentralized optimization. IEEE Trans. Signal Process. 2020, 68, 2513–2528.

- Zeng, J.; He, T.; Wang, M. A fast proximal gradient algorithm for decentralized composite optimization over directed networks. Syst. Control. Lett. 2017, 107, 36–43.

- Condat, L. A primal-dual splitting method for convex optimization involving lipschitzian, proximable and linear composite terms. J. Optim. Theory Appl. 2013, 158, 460–479.

- Chen, P.; Huang, J.; Zhang, X. A primal-dual fixed point algorithm for minimization of the sum of three convex separable functions. Fixed Point Theory Appl. 2016.

- Latafat, P.; Patrinos, P. Asymmetric forward-backward-adjoint splitting for solving monotone inclusions involving three operators. Comput. Optim. Appl. 2017, 68, 57–93.

- Yan, M. A new primal-dual algorithm for minimizing the sum of three functions with a linear operator. J. Sci. Comput. 2018, 76, 1698–1717.

- Boyd, S.; Ghosh, A.; Prabhakar, B.; Shah, D. Randomized gossip algorithms. IEEE Trans. Inf. Theory 2006, 52, 2508–2530.

- Ren, X.; Li, D.; Xi, Y.; Shao, H. Distributed subgradient algorithm for multi-agent optimization with dynamic stepsize. IEEE/CAA J. Autom. Sin. 2021, 8, 1451–1464.

- Micchelli, C.A.; Shen, L.; Xu, Y. Proximity algorithms for image models: Denoising. Inverse Probl. 2011, 27, 45009.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: New York, NY, USA, 2011.

- Hong, M.; Chang, T.-H. Stochastic proximal gradient consensus over random networks. IEEE Trans. Signal Process. 2017, 65, 2933–2948.

- Combettes, P.L.; Pesquet, J.C. Stochastic quasi-Fejér block-coordinate fixed point iterations with random sweeping. SIAM J. Optim. 2015, 25, 1221–1248.

- Bianchi, P.; Hachem, W.; Iutzeler, F. A coordinate descent primal-dual algorithm and application to distributed asynchronous optimization. IEEE Trans. Autom. Control 2016, 61, 2947–2957.

- Robbins, H.; Siegmund, D. A convergence theorem for non negative almost supermartingales and some applications. In Herbert Robbins Selected Papers; Lai, T.L., Siegmund, D., Eds.; Springer: New York, NY, USA, 1985; pp. 111–135.

- Aybat, N.S.; Wang, Z.; Lin, T.; Ma, S. Distributed linearized alternating direction method of multipliers for composite convex consensus optimization. IEEE Trans. Autom. Control 2018, 63, 5–20.

- Li, H.; Su, E.; Wang, C.; Liu, J.; Xia, D. A primal-dual forward-backward splitting algorithm for distributed convex optimization. IEEE Trans. Emerg. Top. Comput. Intell. 2021.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).