1. Introduction

Almost all scientific and engineering fields can readily be translated into optimization problems [1,2,3], given the different real-world requirements of various application domains [4]. These optimization problems often involve many requirements, such as uncertainty, multiple objectives, and high dimensionality, and the solution strategy for a given optimization problem is usually problem-specific [5]. Moreover, as research in different domains deepens, increasingly complex problems emerge, so more robust and faster solution methods are needed. This trend suggests that scientists need algorithms that adapt to a variety of applications and can cope with the difficult problems of cutting-edge engineering fields [6,7]. A review of the literature reveals a wide variety of optimization algorithms [8,9]. They have evolved from traditional optimization techniques based on mathematical programming to nature-inspired metaheuristic (MH) algorithms, each with its own strengths [10,11]. As general-purpose optimization methods, traditional algorithms often fail to provide satisfactory solutions to highly complex, high-dimensional problems with highly variable gradients [12,13]. In contrast, MH algorithms benefit from cooperation among multiple search agents that share experience, which helps them locate the optimal solution while bypassing local optima. They are easy to understand, converge quickly, and are less likely to become trapped in local optima [14,15,16].

In the last decade, many MH algorithms have been proposed. There is no standard or unique classification of metaheuristic algorithms; in this research, MH algorithms are classified into four categories according to their source of inspiration: swarm intelligence (SI)-based, human-based, physics/chemistry-based, and evolutionary algorithms.

SI methods are usually inspired by the real-life cooperative behaviors of plants and animals in nature; they search for the optimal solution adaptively by exchanging and sharing information among multiple candidate solutions. This is also the most widely studied category. Methods of this type include, but are not limited to, krill herd (KH) [17], grasshopper optimization algorithm (GOA) [18], moth flame optimization algorithm (MFO) [19], gray wolf optimizer (GWO) [20], red fox optimization algorithm (RFO) [21], slime mould algorithm (SMA) [22], aquila optimizer (AO) [23], Harris hawks optimization (HHO) [8], whale optimization algorithm (WOA) [24], sparrow search algorithm (SSA) [25], and butterfly optimization algorithm (BOA) [26].

Evolutionary algorithms are usually inspired by the laws of genetics and mimic crossover and mutation behavior. Methods of this type include, but are not limited to, evolution strategy (ES) [27] and genetic algorithm (GA) [28].

Physics-based and chemistry-based methods mimic physical laws or chemical phenomena prevalent in the universe and in life, usually relying on universal rules to govern the interactions between candidate solutions. Methods in this class include atomic search optimization (ASO) [29], thermal exchange optimization (TEO) [30], multi-verse optimization algorithm (MVO) [31], chemical reaction optimization (CRO) [32], and gravitational search algorithm (GSA) [33].

The final category in this research is human-based algorithms, which are mainly inspired by human social behavior and autonomous human thinking. Examples include human mental search (HMS) [34], student psychology based optimization algorithm (SPBO) [35], and teaching-learning-based optimization (TLBO) [36].

As a subset of MH algorithms, SI-based methods have several notable advantages: (1) more frequent information exchange; (2) a more straightforward algorithmic structure; and (3) a lower tendency to fall into local optima. Therefore, SI-based methods have been applied to complex optimization problems such as feature selection [37] and surface shape parameter optimization [12,38]. However, many SI methods are not powerful enough and often cannot handle optimization problems with wide search ranges, possibly because of weak exploitation and exploration and the poor population diversity of some methods [39]. Moreover, the balance between exploration and exploitation in an SI method requires careful attention. With the increasing demands of optimization problems, many studies have proposed search strategies to ensure this balance [40,41,42]. Recently, Abualigah et al. proposed the reptile search algorithm (RSA), which can switch between local and global search to solve complex optimization problems [7]. The method mainly simulates the encircling mechanism of crocodiles in the exploration stage and their hunting mechanism in the exploitation stage.

However, some experimental results also show that RSA suffers from low convergence accuracy and slow convergence on high-dimensional, complex, nonlinear optimization problems. Therefore, this paper uses promising modification strategies to enhance RSA. First, Lévy flight has been considered an excellent strategy for improving the performance of optimization algorithms [43]. Zhang et al. applied the Lévy flight strategy to the backtracking search algorithm to estimate the parameters of PV models [44]. OB-LF-ALO, an efficient algorithm enhanced by Lévy flight, has been employed to solve specific mechanical optimization problems [45]. Feng et al. proposed a Lévy flight-based gravitational search algorithm for the ecological scheduling of cascade hydropower plants [46]. Further, Gao et al. suggested an enhanced chicken flock algorithm based on Lévy flight for the multi-objective integrated energy optimization of smart communities, considering the utility of decision-makers [47]. Therefore, this paper introduces the Lévy flight strategy into the reptile search algorithm to improve its global search capability and help the search escape local optima. Moreover, inspired by the crossover operator [48] and the differential operator [49], an interactive crossover strategy is proposed. In this strategy, the candidate solutions are more strongly influenced by the iterative process, which makes the distinction between early exploration and later exploitation more pronounced. Based on these two strategies, this study proposes an improved reptile search algorithm based on Lévy flight and interactive crossover (LICRSA).

The remainder of this paper is structured as follows:

Section 2 presents a comprehensive description of the reptile search algorithm.

Section 3 describes the Lévy flight and crossover strategies.

In Section 4, the algorithm is evaluated on 23 benchmark functions and the CEC2020 test suite and compared with different MH methods.

In Section 5, the proposed algorithm is applied to five commonly used mechanical engineering optimization problems.

Section 6 summarizes the work.

2. Description of the Reptile Search Algorithm (RSA)

The reptile search algorithm mainly imitates the predation strategy and social behavior of crocodiles in nature [7]. It has two mechanisms: the encircling mechanism in the exploration phase and the hunting mechanism in the exploitation phase. Like a standard MH algorithm, RSA starts from a set of randomly generated candidate solutions before the iterations begin, represented by the matrix Z in Equation (1).

where $z_{i,j}$ represents the $j$th dimension of the $i$th crocodile, $N$ is the number of crocodiles, and $d$ denotes the dimension. $Z$ consists of $N$ candidate solutions randomly generated by Equation (2).

where $rand$ is a random number between 0 and 1, and $up$ and $low$ are the upper and lower limits of the optimization problem.
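Since Equations (1) and (2) are not reproduced in the text, the following Python sketch shows one way to implement this initialization, assuming per-dimension bounds and the usual uniform rule z_{i,j} = rand × (up_j − low_j) + low_j. The function name init_population is hypothetical; it is not the authors' code.

```python
import random


def init_population(n, d, low, up, rng=random):
    """Sketch of Eq. (2): z[i][j] = rand * (up[j] - low[j]) + low[j].

    low and up are per-dimension bound lists; the returned list of lists
    plays the role of the N x d matrix Z in Eq. (1).
    """
    return [[rng.random() * (up[j] - low[j]) + low[j] for j in range(d)]
            for _ in range(n)]


# Example: 30 crocodiles in the 5-dimensional box [-100, 100]^5
rng = random.Random(42)
Z = init_population(30, 5, [-100.0] * 5, [100.0] * 5, rng)
```

Passing a seeded random.Random instance makes runs reproducible, which matters when results are averaged over 30 independent runs.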

Encircling behavior is the hallmark of RSA's global search. It consists of two behaviors: high walking and belly walking. These movements usually do not bring the crocodiles close to the prey. However, because they constitute a global search over the whole solution space, the crocodiles find the general area of the prey after several search attempts. This stage also ensures a smooth transition to the subsequent exploitation stage. It is limited to the first half of the total iterations. Equation (3) models the encircling behavior of the crocodiles, as shown below.

where $best_j(t)$ is the $j$th dimension of the best-positioned crocodile at iteration $t$, $r$ is a random number between 0 and 1, and $T_{Max}$ represents the maximum number of iterations. $\eta_{i,j}$ is the hunting operator of the $i$th crocodile in the $j$th dimension and is given by Equation (4). $\beta$ is a sensitive parameter controlling the search accuracy and is set to 0.1 in the original paper [7]. $R_{i,j}$ is the reduce function, which is used to shrink the explored area and is computed by Equation (5). $r_1$ is a random integer between 1 and $N$, and $z_{r_1,j}$ is the $j$th dimension of crocodile $r_1$.

$ES(t)$ is the evolutionary sense, a probability ratio that takes randomly decreasing values between 2 and −2, and is given in Equation (6).

where $r_2$ is a random integer between 1 and $N$, $r_3$ is a random integer between −1 and 1, and $\varepsilon$ is a small positive constant.

$P_{i,j}$ represents the percentage difference between the best-positioned crocodile and the current crocodile in the $j$th dimension, updated as in Equation (7).

where $M(z_i)$ is the average position of crocodile $z_i$ and is given in Equation (8), and $up_j$ and $low_j$ are the upper and lower limits of the $j$th dimension. Like $\beta$, $\alpha$ is a sensitive parameter that controls the search accuracy; it was set to 0.1 in the original paper [7].
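Because Equations (4) to (8) are not reproduced above, the sketch below uses the forms given in the original RSA paper [7] as we understand them: η_{i,j} = best_j × P_{i,j}, R_{i,j} = (best_j − z_{r2,j})/(best_j + ε), P_{i,j} = α + (z_{i,j} − M(z_i))/(best_j × (up_j − low_j) + ε), and M(z_i) as the mean over dimensions. Writing ES(t) = 2 × r3 × (1 − t/TMax), so that its magnitude shrinks from 2 toward 0, and taking ε = 1e-10 are assumptions. All function names are hypothetical.

```python
import random

EPS = 1e-10  # the "small value" epsilon; its magnitude here is an assumption


def evolutionary_sense(t, t_max, rng=random):
    """ES(t) = 2 * r3 * (1 - t / t_max), r3 a random integer in {-1, 0, 1} (assumed form)."""
    r3 = rng.randint(-1, 1)
    return 2 * r3 * (1 - t / t_max)


def mean_position(z_i):
    """M(z_i): average of crocodile i's coordinates over all dimensions."""
    return sum(z_i) / len(z_i)


def percentage_difference(z_i, j, best, low, up, alpha=0.1):
    """P_{i,j} = alpha + (z_{i,j} - M(z_i)) / (best_j * (up_j - low_j) + eps)."""
    return alpha + (z_i[j] - mean_position(z_i)) / (best[j] * (up[j] - low[j]) + EPS)


def hunting_operator(z_i, j, best, low, up, alpha=0.1):
    """eta_{i,j} = best_j * P_{i,j}."""
    return best[j] * percentage_difference(z_i, j, best, low, up, alpha)


def reduce_function(Z, j, best, rng=random):
    """R_{i,j} = (best_j - z_{r2,j}) / (best_j + eps); r2 is a 0-based random index here."""
    r2 = rng.randrange(len(Z))
    return (best[j] - Z[r2][j]) / (best[j] + EPS)


rng = random.Random(0)
Z = [[1.0, 2.0], [3.0, -1.0]]
best = [1.0, 2.0]
low, up = [-5.0, -5.0], [5.0, 5.0]
eta = hunting_operator(Z[1], 0, best, low, up)  # 1.0 * (0.1 + 2 / 10), about 0.3
R = reduce_function(Z, 0, best, rng)
```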

Following the encircling (exploration) phase, RSA performs the hunting process for local exploitation, which consists of two strategies: hunting coordination and hunting cooperation. After the encircling mechanism, the crocodiles have almost located the target prey, and their hunting strategies make it easier for them to approach it. The exploitation phase usually finds a near-optimal candidate solution after several iterations. The mathematical model of the simulated hunting behavior is presented in Equation (9). This hunting strategy is applied in the second half of the iterations.

where $best_j(t)$ is the $j$th dimension of the best crocodile at iteration $t$, $\eta_{i,j}$ is the hunting operator of the $i$th crocodile in the $j$th dimension given by Equation (4), and $R_{i,j}$ is the reduce function, computed by Equation (5), which is used to shrink the explored area. Algorithm 1 gives the pseudo-code of RSA.

| Algorithm 1: The framework of the RSA |
| --- |
| 1: Input: The parameters of RSA, including the sensitive parameters α and β, the crocodile population size N, and the maximum number of iterations TMax. |
| 2: Initialize N crocodiles zi and calculate their fitness fi. |
| 3: Determine the best crocodile bestj. |
| 4: while (t ≤ TMax) do |
| 5: Update the ES by Equation (6). |
| 6: for i = 1 to N do |
| 7: for j = 1 to d do |
| 8: Calculate the η, R, P by Equations (4), (5) and (7). |
| 9: if t ≤ TMax/4 then |
| 10: zi,j (t + 1) = bestj (t) × −ηi,j × β − Ri,j (t) × r. |
| 11: else if TMax/4 ≤ t < 2 * TMax/4 |
| 12: zi,j (t + 1) = bestj (t) × zr1,j × ES (t) × r. |
| 13: else if 2 * TMax/4 ≤ t < 3 * TMax/4 |
| 14: zi,j (t + 1) = bestj (t) × Pi,j (t) × r. |
| 15: else |
| 16: zi,j (t + 1) = bestj (t) − ηi,j × ε − Ri,j (t) × r. |
| 17: end if |
| 18: end for |
| 19: end for |
| 20: Find the best crocodile. |
| 21: t = t + 1. |
| 22: end while |
| 23: Output: The best crocodile. |
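The four branches of Algorithm 1 can be sketched for a single coordinate as follows. The sketch assumes that η, R, P, and ES(t) have already been computed (Algorithm 1, line 8), takes z_{r1,j} as the coordinate of a randomly chosen crocodile r1 (as defined for Equation (3)), and omits boundary handling. The function name rsa_update is hypothetical.

```python
def rsa_update(t, t_max, best_j, eta, R, P, z_r1j, es, r, beta=0.1, eps=1e-10):
    """New value of z_{i,j} following the four phases of Algorithm 1.

    eta, R, P, es: precomputed eta_{i,j}, R_{i,j}, P_{i,j} and ES(t)
    z_r1j:         j-th coordinate of a randomly selected crocodile r1
    r:             uniform random number in [0, 1]
    """
    if t <= t_max / 4:                # high walking (exploration)
        return best_j * -eta * beta - R * r
    elif t < 2 * t_max / 4:           # belly walking (exploration)
        return best_j * z_r1j * es * r
    elif t < 3 * t_max / 4:           # hunting coordination (exploitation)
        return best_j * P * r
    else:                             # hunting cooperation (exploitation)
        return best_j - eta * eps - R * r


args = dict(best_j=2.0, eta=0.5, R=1.0, P=0.4, z_r1j=3.0, es=1.0, r=0.5)
values = [rsa_update(t, 100, **args) for t in (10, 30, 60, 90)]
```

With TMax = 100, the four sample iterations fall into the four phases in turn, so the example exercises every branch once.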

3. The Proposed Hybrid RSA (LICRSA)

This paper adopts two strategies: Lévy flight and interaction crossover. The Lévy flight strategy helps candidate solutions escape local optima and improves the algorithm's precision, while the interaction crossover strategy mainly enhances the algorithm's exploitation ability. Both strategies are introduced below.

3.1. Lévy Flight Strategy

In this paper, the Lévy flight strategy is used to generate random numbers that replace the uniform random number in the RSA update equations. These numbers have the following characteristics: (1) most of them are small, interspersed with occasional large values; and (2) the probability density function of the step lengths is heavy-tailed. Such random numbers make the updated positions oscillate: the small fluctuations within an iteration perform a local search in the neighborhood, while the occasional large steps help candidate solutions escape local optima.

The definition of the Lévy distribution is given by Equation (10) below.

The step length of the Lévy flight can be calculated as in Equation (11).

where $U$ and $V$ both follow Gaussian distributions, as shown in Equation (12).

where $\sigma_U$ and $\sigma_V$ satisfy Equations (13) and (14).

where $\Gamma$ is the standard Gamma function and $\beta$ is a parameter fixed at 1.5.
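Equations (11) to (14) describe Mantegna's algorithm for generating Lévy steps. A minimal Python sketch follows, assuming σ_V = 1 and σ_U given by the usual Mantegna expression; here β is the Lévy index (1.5), not RSA's sensitive parameter. The names mantegna_sigma and levy_step are hypothetical, and this is not the authors' implementation.

```python
import math
import random


def mantegna_sigma(beta=1.5):
    """sigma_U of Mantegna's algorithm; beta is the Lévy index."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """Step length U / |V|^(1/beta) with U ~ N(0, sigma_U^2) and V ~ N(0, 1)."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = 0.0
    while v == 0.0:  # guard against the (practically impossible) case V = 0
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


rng = random.Random(1)
steps = [levy_step(1.5, rng) for _ in range(10000)]
```

For β = 1.5, σ_U is about 0.697. Sorting the magnitudes in steps shows the heavy tail described above: most values are small, but the largest ones are orders of magnitude larger than the median.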

The Lévy flight strategy is applied to the crocodiles' high walking and belly walking in the encircling mechanism to expand the surrounding search area effectively. The Lévy flight strategy is also applied to hunting coordination and cooperation in the hunting phase to increase the flexibility of the exploitation phase. The encircling mechanism based on Lévy flight is given in Equation (15), where λ is a parameter fixed at 0.1.

The hunting behavior (hunting coordination and cooperation) based on Lévy flight is displayed in Equation (16).
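Equations (15) and (16) are not reproduced here. Following lines 10 to 16 of Algorithm 2, the Lévy-based update can be sketched as the RSA update with the uniform random number r replaced by λ × levy(γ). The sketch takes a pre-drawn Lévy step as input (for example, one generated with Mantegna's algorithm) and uses λ = 0.1. The function name licrsa_update is hypothetical.

```python
def licrsa_update(t, t_max, best_j, eta, R, P, z_r1j, es, levy,
                  beta=0.1, lam=0.1, eps=1e-10):
    """Lévy-based position update for one coordinate (sketch of Eqs. (15)-(16)).

    levy: a pre-drawn Lévy-distributed step; lam * levy replaces RSA's uniform r.
    """
    step = lam * levy
    if t <= t_max / 4:                # Eq. (15), high walking
        return best_j * -eta * beta - R * step
    elif t < 2 * t_max / 4:           # Eq. (15), belly walking
        return best_j * z_r1j * es * step
    elif t < 3 * t_max / 4:           # Eq. (16), hunting coordination
        return best_j * P * step
    else:                             # Eq. (16), hunting cooperation
        return best_j - eta * eps - R * step


args = dict(best_j=2.0, eta=0.5, R=1.0, P=0.4, z_r1j=3.0, es=1.0, levy=5.0)
values = [licrsa_update(t, 100, **args) for t in (10, 30, 60, 90)]
```

Unlike r, which lies in [0, 1], the Lévy step can be negative or occasionally very large, so the updated coordinate may leave the search range; clipping to the bounds is a common remedy, but the text does not state which rule is used.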

3.2. Interaction Crossover Strategy

The interaction crossover strategy readjusts the candidate at the current position by exchanging information between two candidate solutions and the candidate at the optimal position. The new position draws on the information of the optimal solution and of other candidate solutions, improving the search ability of the candidates. First, a parameter CF that controls the activity of the crocodile population is defined; it decreases linearly with the number of iterations.

CF is defined as follows:

where $t$ represents the current iteration and $T_{Max}$ is the maximum number of iterations. The crocodile population is randomly divided into two parts with the same number of crocodiles. Two crocodiles, $z_{k1}$ and $z_{k2}$, are selected from these two parts, and their positions interact to update both crocodiles, as given by Equations (18) and (19).

where $best_j$ is the $j$th dimension of the best-positioned crocodile, $c_1$ and $c_2$ are random numbers in the interval [0, 1], and $z_{k1}$ and $z_{k2}$ are the crocodiles with indices $k1$ and $k2$.

Figure 1 illustrates the interaction crossover strategy.
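A minimal sketch of the interaction crossover follows, under explicit assumptions. CF = 1 − t/TMax stands in for Equation (17), since the text only states that CF decreases linearly. Equation (18) follows line 26 of Algorithm 2, and Equation (19) is taken to mirror it with c2 and the roles of k1 and k2 swapped. c1 and c2 are drawn once per pair. The function names are hypothetical.

```python
import random


def crossover_factor(t, t_max):
    """CF: assumed linear decrease from 1 to 0 (stand-in for Eq. (17))."""
    return 1 - t / t_max


def interaction_crossover(Z, best, t, t_max, rng=random):
    """Return new positions after pairing crocodiles at random (sketch of Eqs. (18)-(19)).

    The population is shuffled and split into pairs (k1, k2). Each member moves
    toward the best crocodile, scaled by CF, and along the pair's difference vector.
    """
    n = len(Z)
    order = list(range(n))
    rng.shuffle(order)                    # Group = permutate(N)
    cf = crossover_factor(t, t_max)
    new_Z = [row[:] for row in Z]         # if N is odd, the last crocodile is unchanged
    for p in range(n // 2):
        k1, k2 = order[2 * p], order[2 * p + 1]
        c1, c2 = rng.random(), rng.random()
        for j in range(len(best)):
            a, b = Z[k1][j], Z[k2][j]
            new_Z[k1][j] = a + cf * (best[j] - a) + c1 * (a - b)
            new_Z[k2][j] = b + cf * (best[j] - b) + c2 * (b - a)
    return new_Z
```

Early in the run (CF close to 1), a crocodile is pulled almost onto the best position and then perturbed by the pair difference. Late in the run (CF close to 0), only the difference term remains, so pairs mainly explore around their own positions.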

For the updated crocodiles, this paper sets up an elimination mechanism: the crocodiles with better positions after the interactive crossover are retained, and those with poorer fitness are eliminated. The update is as follows:
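Equation (20) is not reproduced. A common way to implement such an elimination rule, assumed here, is greedy one-to-one selection for a minimization problem: each crocodile keeps its new position only if the new position has a lower objective value. The names greedy_select and sphere are hypothetical.

```python
def greedy_select(old_Z, old_fit, new_Z, objective):
    """Elimination step (assumed greedy form) for minimization.

    For each crocodile, keep the better of its old and new positions;
    returns (positions, fitness values).
    """
    Z, fit = [], []
    for z_old, f_old, z_new in zip(old_Z, old_fit, new_Z):
        f_new = objective(z_new)
        if f_new < f_old:            # new position is better: replace
            Z.append(z_new)
            fit.append(f_new)
        else:                        # otherwise the new position is eliminated
            Z.append(z_old)
            fit.append(f_old)
    return Z, fit


def sphere(z):
    """Hypothetical objective used only for this example."""
    return sum(v * v for v in z)


Z, fit = greedy_select([[1.0, 1.0], [0.0, 0.0]], [2.0, 0.0],
                       [[0.5, 0.5], [1.0, 1.0]], sphere)
```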

3.3. Specific Implementation Steps of LICRSA

The Lévy flight strategy and the interactive crossover strategy are incorporated into RSA. These strategies improve the accuracy and exploitation of RSA while allowing the population to traverse the search space more thoroughly. The specific steps of LICRSA are as follows.

Step 1: Set all relevant parameters of LICRSA, such as the number of crocodiles N, the dimension of the variables Dim, the upper limit up and the lower limit low of all variables, the maximum number of iterations TMax, and the sensitive parameter β.

Step 2: Initialize N random candidate solutions, calculate their fitness values, and select the best crocodile.

Step 3: Update ES by Equation (6) and calculate η, R, and P.

Step 4: Exploration phase of RSA (while t < TMax): if t < TMax/4, update the crocodile's position by the first part of Equation (15); if t is between TMax/4 and 2TMax/4, update it by the second part of Equation (15).

Step 5: Exploitation phase of RSA: if t is between 2TMax/4 and 3TMax/4, update the crocodile's position by the first part of Equation (16); in the final stage of the iterations, when t > 3TMax/4, update it by the second part of Equation (16).

Step 6: Re-evaluate the fitness of the crocodile positions, eliminate the crocodiles whose function values are worse than in the previous iteration, and update the position of the optimal crocodile.

Step 7: Interactive crossover strategy: divide the crocodile population into two parts, randomly select crocodiles from the two groups in turn for crossover, and update the positions of each pair by Equations (18) and (19), respectively.

Step 8: According to Equation (20), eliminate the crocodile individuals with poor predation ability and update the optimal ones.

Step 9: Check whether the iteration limit has been reached; if so, output the optimal value; otherwise, return to Step 3.

To present the hybrid RSA more clearly, the pseudo-code of LICRSA is given in Algorithm 2. Lines 10, 12, 14, and 16 are the encircling and hunting phases improved by the Lévy flight strategy, and lines 22–28 implement the interaction crossover.

Figure 2 shows the flow chart of the LICRSA algorithm.

| Algorithm 2: The framework of the LICRSA |
| --- |
| 1: Input: The parameters of LICRSA, including the sensitive parameters α and β, the crocodile population size N, the maximum number of iterations TMax, and the variable range up, low. |
| 2: Initialize N crocodiles zi (i = 1, 2, …, N) and calculate their fitness fi. |
| 3: Determine the best crocodile bestj. |
| 4: while (t ≤ TMax) do |
| 5: Update the ES by Equation (6). |
| 6: for i = 1 to N do |
| 7: for j = 1 to d do |
| 8: Calculate the η, R, P by Equations (4), (5) and (7). |
| 9: if t ≤ TMax/4 then |
| 10: zi,j (t + 1) = bestj (t) × −ηi,j × β − Ri,j (t) × λ × levy(γ). |
| 11: else if TMax/4 ≤ t < 2 * TMax/4 |
| 12: zi,j (t + 1) = bestj (t) × zr1,j × ES (t) × λ × levy(γ). |
| 13: else if 2 * TMax/4 ≤ t < 3 * TMax/4 |
| 14: zi,j (t + 1) = bestj (t) × Pi,j (t) × λ × levy(γ). |
| 15: else |
| 16: zi,j (t + 1) = bestj (t) − ηi,j × ε − Ri,j (t) × λ × levy(γ). |
| 17: end if |
| 18: end for |
| 19: end for |
| 20: Update the fitness and location of the crocodiles. |
| 21: Replace the optimal location and optimal fitness. |
| 22: Group = permutate(N). |
| 23: for i = 1 to N/2 do |
| 24: let k1 = Group(2 × i − 1) and k2 = Group(2 × i). |
| 25: Update the CF by Equation (17). |
| 26: zk1,j (t + 1) = zk1,j (t) + CF × (bestj(t) − zk1,j (t)) + c1 × (zk1,j (t) − zk2,j (t)) |
| 27: zk2,j (t + 1) = zk2,j (t) + CF × (bestj(t) − zk2,j (t)) + c2 × (zk2,j (t) − zk1,j (t)) |
| 28: end for |
| 29: Find the best crocodile. |
| 30: t = t + 1. |
| 31: end while |
| 32: Output: The best crocodile. |
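For readers who want to experiment, the loop of Algorithm 2 can be condensed into the self-contained Python sketch below. It is not the authors' implementation. The forms of ES(t), P, η, R, and CF, the mirrored form of Equation (19), and the greedy reading of Equation (20) are assumptions. The Lévy step uses Mantegna's algorithm with index 1.5, bounds are enforced by clipping, and sphere is a hypothetical test objective.

```python
import math
import random


def sphere(z):
    """Hypothetical test objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in z)


def levy(index, rng):
    """One Lévy step via Mantegna's algorithm (index = 1.5 in the text)."""
    sigma = (math.gamma(1 + index) * math.sin(math.pi * index / 2)
             / (math.gamma((1 + index) / 2) * index * 2 ** ((index - 1) / 2))) ** (1 / index)
    v = 0.0
    while v == 0.0:  # avoid dividing by zero
        v = rng.gauss(0.0, 1.0)
    return rng.gauss(0.0, sigma) / abs(v) ** (1 / index)


def licrsa(objective, d, low, up, n=30, t_max=1000,
           alpha=0.1, beta=0.1, lam=0.1, eps=1e-10, seed=None):
    """Minimize objective over the box [low, up]; returns (best position, best fitness)."""
    rng = random.Random(seed)

    def clip(v, j):  # boundary handling (assumed: simple clipping)
        return min(max(v, low[j]), up[j])

    Z = [[low[j] + rng.random() * (up[j] - low[j]) for j in range(d)] for _ in range(n)]
    fit = [objective(z) for z in Z]
    best_fit = min(fit)
    best = Z[fit.index(best_fit)][:]

    def try_replace(k, cand):  # elimination: keep the new position only if it is better
        f = objective(cand)
        if f < fit[k]:
            Z[k], fit[k] = cand, f

    def refresh_best():
        nonlocal best, best_fit
        k = min(range(n), key=fit.__getitem__)
        if fit[k] < best_fit:
            best, best_fit = Z[k][:], fit[k]

    for t in range(1, t_max + 1):
        es = 2 * rng.randint(-1, 1) * (1 - t / t_max)  # ES(t), assumed form
        for i in range(n):
            mean_i = sum(Z[i]) / d  # M(z_i)
            cand = []
            for j in range(d):
                P = alpha + (Z[i][j] - mean_i) / (best[j] * (up[j] - low[j]) + eps)
                eta = best[j] * P
                R = (best[j] - Z[rng.randrange(n)][j]) / (best[j] + eps)
                step = lam * levy(1.5, rng)  # lambda * levy(.) replaces RSA's r
                if t <= t_max / 4:  # high walking
                    v = best[j] * -eta * beta - R * step
                elif t < t_max / 2:  # belly walking
                    v = best[j] * Z[rng.randrange(n)][j] * es * step
                elif t < 3 * t_max / 4:  # hunting coordination
                    v = best[j] * P * step
                else:  # hunting cooperation
                    v = best[j] - eta * eps - R * step
                cand.append(clip(v, j))
            try_replace(i, cand)
        refresh_best()

        cf = 1 - t / t_max  # CF, assumed linear decrease
        order = list(range(n))
        rng.shuffle(order)
        for p in range(n // 2):
            k1, k2 = order[2 * p], order[2 * p + 1]
            a, b = Z[k1], Z[k2]
            c1, c2 = rng.random(), rng.random()
            new1 = [clip(a[j] + cf * (best[j] - a[j]) + c1 * (a[j] - b[j]), j) for j in range(d)]
            new2 = [clip(b[j] + cf * (best[j] - b[j]) + c2 * (b[j] - a[j]), j) for j in range(d)]
            try_replace(k1, new1)
            try_replace(k2, new2)
        refresh_best()
    return best, best_fit


best, best_fit = licrsa(sphere, d=5, low=[-10.0] * 5, up=[10.0] * 5, n=20, t_max=100, seed=1)
```

When ES(t) happens to be 0 (r3 = 0), the belly-walking branch in this sketch maps every coordinate to 0. An objective whose optimum lies at the origin, such as sphere, is therefore an easy case, and a shifted test function gives a more informative check.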

3.4. Considering the Time Complexity of LICRSA

The time complexity of LICRSA is that of RSA plus the cost of the interaction crossover; the Lévy flight strategy only modifies the update equations of RSA. The time complexity of RSA is:

The cost of fitness evaluation depends mainly on the complexity of the problem, so it is not considered in this paper. The crossover phase operates on the population divided into two parts, so its time complexity is:

where $d$ is the number of variables, $N$ is the number of crocodiles, and $T_{Max}$ is the maximum number of iterations.
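Equations (21) and (22) are not reproduced here. Assuming, as stated above, that fitness evaluations are excluded, and counting one unit of work per coordinate update, the loop structure of Algorithms 1 and 2 suggests the following bookkeeping:

```latex
\begin{aligned}
O(\mathrm{RSA}) &= O(N \times d) + O(T_{Max} \times N \times d) = O(T_{Max} \times N \times d),\\
O(\mathrm{crossover}) &= O\!\left(T_{Max} \times \tfrac{N}{2} \times 2d\right) = O(T_{Max} \times N \times d),\\
O(\mathrm{LICRSA}) &= O(\mathrm{RSA}) + O(\mathrm{crossover}) = O(T_{Max} \times N \times d).
\end{aligned}
```

Under these assumptions, the crossover changes only the constant factor and not the order of growth. This is consistent with the modest increase in running time over RSA reported in Section 4.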

4. Simulation Experiments

To demonstrate the effectiveness of the proposed improvements, 23 standard benchmark functions and the CEC2020 test suite are used to test the local exploitation capability, global search capability, and local-optimum avoidance of LICRSA. The experimental parameters in this section are as follows: the crocodile population size is N = 30 and the maximum number of iterations is TMax = 1000. Each method is run 30 times independently on each test function with the same dimension, and the numerical results are recorded. On the 23 test functions, the compared MH algorithms are the reptile search algorithm (RSA) [7], aquila optimizer (AO) [23], arithmetic optimization algorithm (AOA) [4], differential evolution (DE) [49], crisscross optimization algorithm (CSO) [48], dwarf mongoose optimization (DMO) [6], white shark optimizer (WSO) [50], whale optimization algorithm (WOA) [24], wild horse optimizer (WHOA) [15], seagull optimization algorithm (SOA) [51], and gravitational search algorithm (GSA) [33]. On CEC2020, the compared algorithms are the reptile search algorithm (RSA) [7], aquila optimizer (AO) [23], arithmetic optimization algorithm (AOA) [4], differential evolution (DE) [49], dwarf mongoose optimization (DMO) [6], grasshopper optimization algorithm (GOA) [18], gravitational search algorithm (GSA) [33], honey badger algorithm (HBA) [52], HHO [8], SSA [11], and WOA [24]. To ensure a fair comparison, the parameter settings of all MH methods are listed in Table 1 and are consistent with those in the original papers.

4.1. Exploration–Exploitation Analysis

The two main phases of MH algorithms are exploration and exploitation. Exploration allows distant regions of the search domain to be visited, ensuring that better candidate solutions can be found. Exploitation, in contrast, uses a local search strategy to converge on promising regions where the optimal solution is expected to lie. Maintaining a balance between these two conflicting phases is critical for an optimization algorithm to locate the optimal solution of a problem [53]. The dimension-wise diversity during the search can be expressed by Equations (23) and (24).

where $z_{i,j}$ is the position of the $i$th crocodile in the $j$th dimension, $Div_j$ is the average diversity of dimension $j$, $median(z_j)$ is the median of the $j$th dimension over all candidate solutions, $N$ is the number of crocodiles, $d$ is the dimension, and $T_{Max}$ is the maximum number of iterations. The percentages of exploration and exploitation are calculated as follows.

where $\max(Div)$ is the maximum diversity over the iterative process.
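Equations (23) to (25) are not reproduced. The sketch below follows a widely used dimension-wise diversity measure of this kind: a median-based Div_j, averaged over dimensions, with XPL% = Div/max(Div) × 100 and XPT% = |Div − max(Div)|/max(Div) × 100. Treating these as the exact equations of this paper is an assumption, and the function names are hypothetical.

```python
from statistics import median


def population_diversity(Z):
    """Div for one iteration: the mean over dimensions of Div_j,
    where Div_j = (1/N) * sum_i |median(z_j) - z_{i,j}|."""
    n, d = len(Z), len(Z[0])
    total = 0.0
    for j in range(d):
        column = [z[j] for z in Z]
        m = median(column)
        total += sum(abs(m - v) for v in column) / n
    return total / d


def exploration_exploitation(div_history):
    """Per-iteration exploration (XPL%) and exploitation (XPT%) percentages.

    Assumes max(div_history) > 0.
    """
    div_max = max(div_history)
    xpl = [100.0 * div / div_max for div in div_history]
    xpt = [100.0 * abs(div - div_max) / div_max for div in div_history]
    return xpl, xpt


div = population_diversity([[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]])
xpl, xpt = exploration_exploitation([2.0, 1.0, 0.5])
```

In practice, population_diversity would be called once per iteration and the resulting history passed to exploration_exploitation. By construction, XPL% and XPT% sum to 100 at every iteration.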

This section uses the CEC2020 test suite, and Figure 3 shows the exploration and exploitation behavior of LICRSA on the CEC2020 test suite [5] during the search. As Figure 3 shows, LICRSA starts with high exploration and low exploitation. As the search proceeds, however, it quickly switches to high exploitation and converges to the region where the optimal solution is expected. Exploration episodes also recur during the iterations, so LICRSA remains active in both exploration and exploitation, which helps it find better solutions than the other MH algorithms. In addition, Figure 4 shows the exploration and exploitation percentages for the different functions. The exploration percentage generally lies between 10% and 30%, indicating that LICRSA devotes sufficient time to exploration, while the remaining effort is spent on exploitation. This result means that LICRSA maintains a sufficiently high exploration percentage while keeping its exploitation high.

4.2. Performance Evaluation for the 23 Classical Functions

The 23 benchmark functions include 7 unimodal, 6 multimodal, and 10 fixed-dimension functions [5]. Unimodal functions are used to evaluate the exploitation capability of MH algorithms, and multimodal functions are used to evaluate their exploration capability. The fixed-dimension functions show how well the algorithms escape local optima and how accurately they converge. All 23 functions are minimization problems. Four criteria are reported in this experiment: the maximum, minimum, mean, and standard deviation (Std). In addition, the Wilcoxon signed-rank test (significance level = 0.05) is used to assess the statistical significance of the results, where "+" means that an algorithm is more accurate than LICRSA, "=" indicates that there is no significant difference between the two, and "−" indicates that an algorithm is less accurate than LICRSA. We also provide the p-values as reference data.
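The +/=/− labels can be produced mechanically once a p-value is available for each function. The sketch below assumes the p-value comes from a Wilcoxon signed-rank test computed elsewhere (for example, with a statistics package). It uses the mean objective value to decide the direction of a significant difference in the minimization setting. Both choices are assumptions, and the function names are hypothetical.

```python
def wilcoxon_label(p_value, mean_other, mean_licrsa, alpha=0.05):
    """Return '+', '=' or '-' for one function (minimization).

    '+': significant difference and the competitor's mean is lower (better)
    '=': no significant difference (p >= alpha) or identical means
    '-': significant difference and the competitor's mean is higher (worse)
    """
    if p_value >= alpha or mean_other == mean_licrsa:
        return "="
    return "+" if mean_other < mean_licrsa else "-"


def tally(labels):
    """Summarize labels as the '+/=/-' string used in the text, e.g. '1/11/11'."""
    return f"{labels.count('+')}/{labels.count('=')}/{labels.count('-')}"


labels = [wilcoxon_label(0.30, 1.0, 2.0),   # not significant
          wilcoxon_label(0.01, 1.0, 2.0),   # competitor significantly better
          wilcoxon_label(0.01, 3.0, 2.0)]   # competitor significantly worse
summary = tally(labels)
```

Each tally sums to the number of functions compared, which provides a quick consistency check on reported counts (23 for the classical suite, 10 for CEC2020).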

Table 2 reports the numerical results of the 12 MH algorithms on the 23 benchmark problems, where bold indicates the best mean and standard deviation among all algorithms. LICRSA ranks first overall with an average rank of 3.8696; it ranks first on 10 test problems and first or second on 13. LICRSA performs best on the unimodal functions, ranking first or second on 6 of the 7. It also performs well on the multimodal functions, obtaining the optimal solutions on 4 of the 6 (F9, F10, F11, and F13). AO ranks first on 5 functions, outperforming the other MH algorithms on F5, F11, and F21, and is second only to LICRSA. GSA dominates on the fixed-dimension functions, achieving the best results on F16, F17, F19, F20, F22, and F23. Overall, when solving the 23 benchmark problems, LICRSA achieves higher solution accuracy than the other algorithms on the unimodal and multimodal functions, indicating a greater chance of converging to the optimal value and good exploitation performance. It also performs better than most of the compared algorithms on the fixed-dimension functions.

Figure 5 provides a bar plot of the mean rank of different algorithms.
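The mean ranks in Table 2 and Figure 5 are averages of per-function ranks. A minimal sketch of that computation follows. It assumes that algorithms are ranked by mean objective value on each function (1 = best, since all problems are minimized) and that ties receive the average of the tied positions. The paper does not state its tie-breaking rule, and the function names are hypothetical.

```python
def rank_row(values):
    """Rank one function's results (lower is better); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1  # average of the 1-based positions pos..end
        for k in order[pos:end + 1]:
            ranks[k] = avg
        pos = end + 1
    return ranks


def mean_ranks(results):
    """results[f][a]: mean objective of algorithm a on function f; returns mean rank per algorithm."""
    rows = [rank_row(r) for r in results]
    n_alg = len(results[0])
    return [sum(row[a] for row in rows) / len(rows) for a in range(n_alg)]


# Two functions, three algorithms; the first two algorithms tie on the second function
ranks = mean_ranks([[1.0, 2.0, 3.0], [0.0, 0.0, 5.0]])
```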

In this section, the convergence of the search algorithms is analyzed on the 23 benchmark functions.

Figure 6 shows, for each algorithm, the number of functions on which it performs better than, statistically the same as, or worse than LICRSA.

Figure 7 shows the convergence curves for the 23 test problems; logarithmic scaling is applied to some of the plots. The figure shows that, on most problems, LICRSA converges quickly at the start and exhibits abrupt jumps that are more pronounced than those of the other tested algorithms. We attribute this to the exploration capability added by the interaction crossover strategy. Moreover, LICRSA converges faster than AO, AOA, RSA, CSO, DE, GSA, WOA, WHOA, DMO, SOA, and WSO on most functions, which we attribute to the reduced search space and the enhanced exploitation provided by the Lévy flight and crossover strategies during the iterations. The convergence curves also show that LICRSA quickly converges to the global optimum on most unimodal and multimodal functions (F1, F3, F5, F9, F10, and F11), indicating better convergence capability. These results further verify the effectiveness of the Lévy flight and interaction crossover strategies in enhancing the local search capability. In addition,

Figure 8 shows the box plots for the 23 test functions. The boxes of LICRSA are narrower, indicating that LICRSA is stable over multiple runs, although it shows some instability on certain fixed-dimension test functions.

Table 3 reports the p-values and the +/=/− statistics of each algorithm against LICRSA. The +/=/− counts are 1/11/11, 6/6/11, 0/6/17, 5/1/17, 1/6/16, and 3/6/14 for RSA, AO, AOA, CSO, DE, and DMO, respectively, and 7/4/12, 7/4/12, 7/7/13, 7/7/13, and 7/7/13 for WSO, WOA, WHOA, SOA, and GSA. 3/7/13, 7/8/8, 1/6/16, 5/6/12.

4.3. Performance Evaluation for the CEC-2020 Test Functions

The 10 CEC2020 functions include 1 unimodal function, 3 basic functions, 3 hybrid functions, and 3 composition functions [5]. The basic (multimodal) functions are usually employed to evaluate the exploration capability of a search algorithm, and the hybrid functions combine the properties of several sub-functions while maintaining the continuity of global and local optima. The composition functions combine various hybrid functions and can be used to evaluate the convergence accuracy of an algorithm. All 10 CEC2020 functions are also minimization problems, and this section again reports the maximum, minimum, average, and standard deviation (Std) of the numerical results. Running time and p-values are also important parts of the evaluation. The Wilcoxon signed-rank test (significance level = 0.05) is used to assess significance, where "+" indicates that an algorithm is more accurate than LICRSA, "=" means that there is no significant difference between the algorithm and LICRSA, and "−" shows that the algorithm is less accurate than LICRSA.

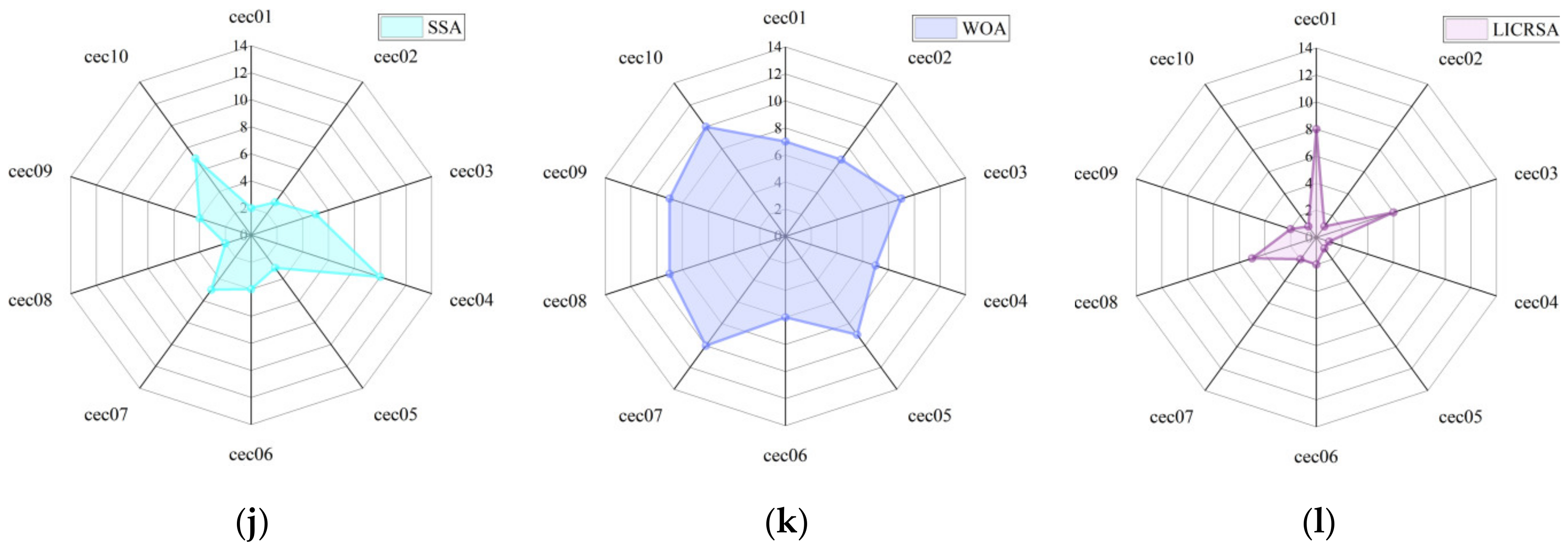

Table 4 compares LICRSA with the other MH algorithms. LICRSA achieves the best results on four test functions (cec02, cec04, cec05, and cec10) and ranks second on cec06, cec07, and cec09. Its overall average rank of 2.9 is better than those of the other MH algorithms, indicating that LICRSA handles this test suite well.

Figure 9 and Figure 10 show the convergence curves and box plots, respectively, for the 10 test functions of this suite. On most test functions, LICRSA finds the neighborhood of the optimum early in the iterations and converges faster than the other compared algorithms. In addition, Figure 10 shows that LICRSA tends to have narrower boxes, indicating very stable results over repeated runs.

Figure 11 shows radar plots of the 12 algorithms; a smaller, more concentrated shaded area represents lower ranks and thus better performance on CEC2020. As the figure shows, LICRSA has a smaller shaded area.

Table 5 and Table 6 report the p-values of all search algorithms on the CEC2020 test suite and their running times, respectively. The +/=/− counts are 0/1/9, 1/3/6, 0/2/8, 3/2/5, 0/3/7, and 1/1/8 for RSA, AO, AOA, DMO, DE, and GOA, respectively, and 3/1/6, 3/4/3, 1/1/8, 3/1/6, and 1/0/9 for GSA, HBA, HHO, SSA, and WOA. Table 6 shows that LICRSA takes longer to run, which is expected given the added strategies. Since RSA itself already has a relatively long running time, the additional time LICRSA requires compared with RSA is modest.

7. Conclusions

This paper proposes an improved reptile search algorithm based on Lévy flight and interactive crossover strategies. Two main approaches are employed to address the shortcomings of the original RSA and improve its performance. As a general modification technique, the Lévy flight strategy enhances the global exploration of the algorithm and helps it escape local optima. Meanwhile, the interactive crossover strategy, a variant of the crossover operator, inherits the local exploitation ability and strengthens the adaptation of the search to the iteration process. Twenty-three benchmark test functions, the IEEE Congress on Evolutionary Computation (CEC2020) benchmark, and five engineering design problems are used to test the proposed LICRSA algorithm and verify its effectiveness. The experimental results show that LICRSA obtains better optimization results and significantly outperforms competing algorithms, including DMO, WOA, HHO, DE, AOA, and WSO. LICRSA shows stronger global search capability and higher solution accuracy. In addition, the results on the tested mechanical engineering problems show that LICRSA outperforms the other optimization methods in terms of total cost. LICRSA performs well and can be used by other researchers to tackle complex optimization problems.

In future work, we will further investigate the applicability of RSA. For example, to improve its spatial search capability, we plan to integrate RSA with other swarm intelligence algorithms to enhance its global performance. In practical applications, we plan to use RSA to provide optimal parameters for machine learning models in privacy-preserving and social network settings. Furthermore, the proposed LICRSA algorithm may also have strong potential for solving other complex optimization problems, such as shop scheduling problems [70], optimal degree reduction [71], image segmentation [72], shape optimization [73], and feature selection [74].