Application of ANN in Induction-Motor Fault-Detection System Established with MRA and CFFS

Abstract

1. Introduction

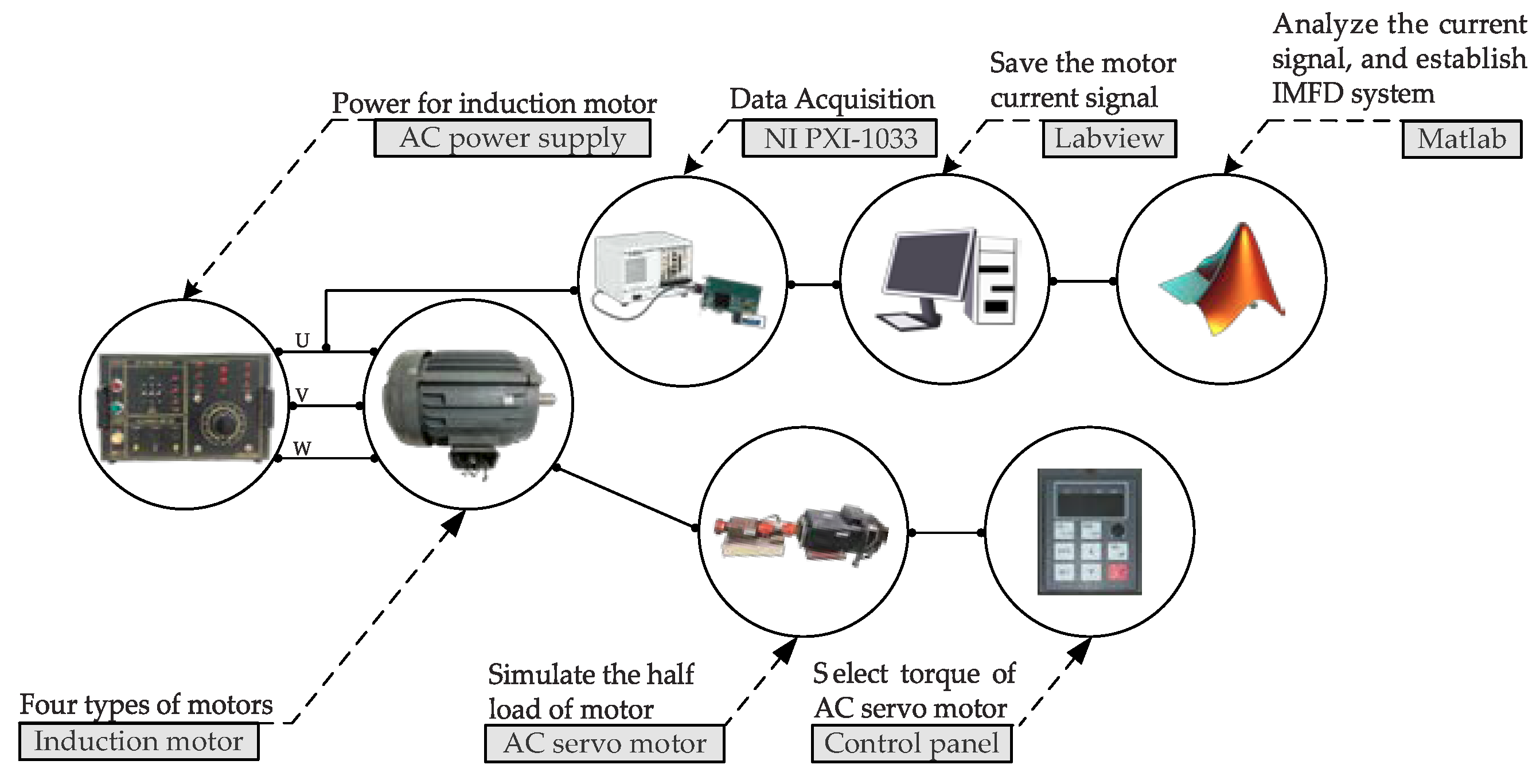

2. Measure and Analyze the Current Signals

2.1. MRA and Feature Distribution of Current Signals

- (1) Tmax: maximum of each coefficient in the time domain;

- (2) Tmin: minimum of each coefficient in the time domain;

- (3) Tmean: average of each coefficient in the time domain;

- (4) Tmse: root mean square of each coefficient in the time domain;

- (5) Tstd: standard deviation of each coefficient in the time domain;

- (6) Fmax: maximum of each coefficient in the frequency domain;

- (7) Fmin: minimum of each coefficient in the frequency domain;

- (8) Fmean: average of each coefficient in the frequency domain;

- (9) Fmse: root mean square of each coefficient in the frequency domain;

- (10) Fstd: standard deviation of each coefficient in the frequency domain.
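The ten statistics listed above can be sketched for a single coefficient sequence as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the frequency-domain view is assumed to be the one-sided FFT magnitude, and the standard deviation is assumed to be the population form.

```python
import numpy as np


def five_stats(x):
    """Return max, min, mean, root mean square, and standard deviation of x."""
    x = np.asarray(x, dtype=float)
    return {
        "max": float(x.max()),
        "min": float(x.min()),
        "mean": float(x.mean()),
        "mse": float(np.sqrt(np.mean(x ** 2))),  # labelled "mse" in the list, defined there as RMS
        "std": float(x.std()),                   # assumption: population standard deviation
    }


def coefficient_features(coeff):
    """Ten features (Tmax..Tstd, Fmax..Fstd) for one coefficient sequence such as a5 or d3."""
    coeff = np.asarray(coeff, dtype=float)
    # Assumption: "frequency domain" means the scaled one-sided FFT magnitude.
    spectrum = np.abs(np.fft.rfft(coeff)) / len(coeff)
    time_feats = {"T" + name: value for name, value in five_stats(coeff).items()}
    freq_feats = {"F" + name: value for name, value in five_stats(spectrum).items()}
    return {**time_feats, **freq_feats}


# Synthetic stand-in for a coefficient sequence: five full sine periods in 64 samples.
demo = np.sin(2 * np.pi * 5 * np.arange(64) / 64)
features = coefficient_features(demo)
```

Applying `coefficient_features` to each of the six coefficient sequences (a5 and d5 to d1) would give 60 values, which matches the F1 to F60 numbering used for the MRA features.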

2.2. Hilbert–Huang Transform and Feature Distribution of Current Signals

- (1) max: maximum of w1 to w7 and c1 to c7;

- (2) min: minimum of w1 to w7 and c1 to c7;

- (3) mean: average of w1 to w7 and c1 to c7;

- (4) mse: root mean square of w1 to w7 and c1 to c7;

- (5) std: standard deviation of w1 to w7 and c1 to c7.
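As a rough sketch of how the five statistics above could be obtained for one intrinsic mode function, the code below assumes that c_i is an IMF already produced by empirical mode decomposition (not shown) and that w_i is the instantaneous frequency from the Hilbert transform of c_i. The FFT-based analytic signal and all function names are illustrative choices, not taken from the paper.

```python
import numpy as np


def analytic_signal(x):
    """Analytic signal built with the FFT (standard discrete Hilbert-transform construction)."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    spectrum = np.fft.fft(x)
    gain = np.zeros(n)
    gain[0] = 1.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
        gain[1:n // 2] = 2.0
    else:
        gain[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * gain)


def instantaneous_frequency(imf, fs):
    """Instantaneous frequency in Hz from the unwrapped phase of the analytic signal."""
    phase = np.unwrap(np.angle(analytic_signal(imf)))
    return np.diff(phase) * fs / (2.0 * np.pi)


def five_stats(x):
    """max, min, mean, root mean square, and (population) standard deviation."""
    x = np.asarray(x, dtype=float)
    return [float(x.max()), float(x.min()), float(x.mean()),
            float(np.sqrt(np.mean(x ** 2))), float(x.std())]


# Synthetic stand-in for one IMF: a 50 Hz cosine sampled at 1 kHz for one second.
fs = 1000.0
t = np.arange(1000) / fs
c1 = np.cos(2 * np.pi * 50.0 * t)
w1 = instantaneous_frequency(c1, fs)
c1_features = five_stats(c1)   # five features for c1
w1_features = five_stats(w1)   # five features for w1
```

Repeating this for seven IMFs gives 35 values from c1 to c7 and 35 from w1 to w7, consistent with the F1 to F70 numbering of the HHT features.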

3. Feature-Selection Approaches for Features of the MRA and HHT

3.1. ReliefF

| Algorithm 1: ReliefF |

| 1: repeat |

| 2: Choose one of the features ; |

| 3: Choose one value randomly from ; |

| 4: Choose the nearest values and with ; |

| 5: Calculate the correlation in (8); |

| 6: until all correlations have been obtained with ReliefF for feature selection |

| 7: Choose the best-performing feature set to establish the ANN |
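The loop in Algorithm 1 can be illustrated with a generic ReliefF scoring routine. The sketch below follows the commonly used formulation of ReliefF (random sample, k nearest hits, k nearest misses per other class weighted by class prior); it is an assumption that this corresponds to the correlation in (8), which is not reproduced here, and the function name and parameters are hypothetical.

```python
import numpy as np


def relieff_scores(X, y, n_samples=50, k=3, seed=0):
    """Generic ReliefF feature scores; larger means more relevant to the class label."""
    X = np.asarray(X, dtype=float)
    classes, y_idx, counts = np.unique(y, return_inverse=True, return_counts=True)
    n, n_features = X.shape
    prior = counts / n
    # Scale every feature to [0, 1] so per-feature differences are comparable.
    span = X.max(axis=0) - X.min(axis=0)
    span[span == 0] = 1.0
    Xn = (X - X.min(axis=0)) / span
    rng = np.random.default_rng(seed)
    scores = np.zeros(n_features)
    for _ in range(n_samples):
        i = rng.integers(n)                  # pick one sample at random
        diff = np.abs(Xn - Xn[i])            # per-feature difference to every sample
        dist = diff.sum(axis=1)
        dist[i] = np.inf                     # never select the sample itself
        for c in range(len(classes)):
            members = np.flatnonzero(y_idx == c)
            nearest = members[np.argsort(dist[members])[:k]]
            nearest = nearest[np.isfinite(dist[nearest])]
            if len(nearest) == 0:
                continue
            contrib = diff[nearest].mean(axis=0)
            if c == y_idx[i]:
                scores -= contrib / n_samples            # near hits lower the score
            else:
                weight = prior[c] / (1.0 - prior[y_idx[i]])
                scores += weight * contrib / n_samples   # near misses raise it
    return scores


# Toy data (made up): feature 0 separates the two classes, feature 1 is noise.
rng = np.random.default_rng(1)
y = np.repeat([0, 1], 40)
X = np.column_stack([y + 0.05 * rng.standard_normal(80), rng.random(80)])
scores = relieff_scores(X, y)
ranking = np.argsort(scores)[::-1]   # feature indices, most relevant first
```

Sorting the scores in descending order gives a feature ranking of the kind from which the final feature set is then chosen.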

3.2. CFS

| Algorithm 2: CFS |

| 1: (I) The feature correlation: |

| 2: repeat |

| 3: Choose two of the features and ; |

| 4: Choose one value randomly from ; |

| 5: Choose the nearest values and with ; |

| 6: Calculate the correlation between and with (9); |

| 7: until all correlations have been obtained with Relief. |

| 8: (II) The correlation between feature and classification: |

| 9: Use ReliefF to calculate RfF in (8); |

| 10: (III) Calculate the Merit value: |

| 11: repeat |

| 14: Calculate the Merit value in (10); |

| 15: until all Merit values have been obtained. |

| 16: Choose the best-performing feature set to establish the ANN. |
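Step (III) of Algorithm 2 combines the two kinds of correlation into one Merit value. Equation (10) is not reproduced here, so the sketch below uses the standard CFS merit, Merit = k·r̄_cf / sqrt(k + k(k−1)·r̄_ff), on the assumption that (10) has this form; the function name and the example correlation values are hypothetical.

```python
import math


def cfs_merit(feature_class_corr, feature_feature_corr):
    """Standard CFS merit of a feature subset.

    feature_class_corr: correlation of each selected feature with the class.
    feature_feature_corr: square matrix of pairwise feature correlations.
    """
    k = len(feature_class_corr)
    mean_fc = sum(feature_class_corr) / k
    pairs = [feature_feature_corr[i][j] for i in range(k) for j in range(i + 1, k)]
    mean_ff = sum(pairs) / len(pairs) if pairs else 0.0
    return k * mean_fc / math.sqrt(k + k * (k - 1) * mean_ff)


# Two equally relevant features (values are arbitrary, for illustration only).
independent = cfs_merit([0.8, 0.8], [[1.0, 0.0], [0.0, 1.0]])   # uncorrelated pair
redundant = cfs_merit([0.8, 0.8], [[1.0, 1.0], [1.0, 1.0]])     # fully correlated pair
single = cfs_merit([0.8], [[1.0]])
```

In this formulation a redundant second feature adds nothing (`redundant` equals `single`), while an independent one raises the merit, which is why CFS tends to select fewer, less correlated features than a pure relevance ranking.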

3.3. CFFS

| Algorithm 3: CFFS |

| 1: (I) The correlation between features: |

| 2: Use Relief to calculate the correlation; |

| 3: (II) The correlation between feature and classification: |

| 4: Use ReliefF to calculate the correlation; |

| 5: (III) Calculate the Merit value: |

| 6: Use CFS to calculate the Merit value; |

| 7: (IV) Calculate the Merit_new value: |

| 8: repeat |

| 9: Select the feature set and train the ANN with PSO; |

| 10: Calculate the fitness value Wfi from PSO; |

| 11: Calculate the Merit_new value in (11); |

| 12: until all Merit_new values have been obtained. |

| 13: Choose the best-performing feature set to establish the ANN. |
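All three algorithms end by choosing the best-performing feature set. One plausible reading, consistent with the reported feature vectors growing as prefixes of a ranking, is to evaluate the top-k features for increasing k and keep the best. The sketch below assumes that reading; `evaluate` is a hypothetical stand-in for training and scoring the ANN, and the toy scorer is arbitrary.

```python
def best_prefix(ranking, evaluate, max_features=None):
    """Evaluate the top-k features for k = 1, 2, ... and return the best subset and its score."""
    limit = len(ranking) if max_features is None else min(max_features, len(ranking))
    best_subset, best_score = [], float("-inf")
    for k in range(1, limit + 1):
        subset = list(ranking[:k])
        score = evaluate(subset)
        if score > best_score:   # strict comparison: ties keep the smaller subset
            best_subset, best_score = subset, score
    return best_subset, best_score


# Toy scorer (arbitrary): three features are useful, every feature costs a little.
useful = {"a", "b", "c"}


def toy_evaluate(subset):
    return len(useful.intersection(subset)) - 0.01 * len(subset)


chosen, score = best_prefix(["a", "b", "c", "d", "e"], toy_evaluate)
# chosen == ["a", "b", "c"]
```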

4. Results of Induction-Motor Fault Detection

4.1. Parameter Setting of ANN

4.2. Compare the Signal-Processing Approaches: MRA and HHT

- (1) In ∞ dB, MRA: 94.8%, HHT: 85.8%;

- (2) In 40 dB, MRA: 92.2%, HHT: 84.4%;

- (3) In 30 dB, MRA: 92%, HHT: 81.9%;

- (4) In 20 dB, MRA: 88.2%, HHT: 68.4%;

- (5) In 10 dB, MRA: 69.2%, HHT: 43.9%.

- (1) In ∞ dB, MRA: 94.8%, HHT: 85.9%;

- (2) In 40 dB, MRA: 94.5%, HHT: 83.4%;

- (3) In 30 dB, MRA: 93.7%, HHT: 81.9%;

- (4) In 20 dB, MRA: 87.7%, HHT: 68%;

- (5) In 10 dB, MRA: 70.3%, HHT: 44.1%.

- (1) In ∞ dB, MRA: 92%, HHT: 83.5%;

- (2) In 40 dB, MRA: 91.8%, HHT: 82.7%;

- (3) In 30 dB, MRA: 91.3%, HHT: 81.5%;

- (4) In 20 dB, MRA: 91%, HHT: 73.3%;

- (5) In 10 dB, MRA: 89.8%, HHT: 66%.
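The accuracies above are reported at several signal-to-noise ratios, with ∞ dB denoting the noise-free signal. As a minimal sketch of how a current signal can be corrupted at a target SNR, the code below assumes additive white Gaussian noise; the noise model is not stated in this section, and the function name and test signal are hypothetical.

```python
import numpy as np


def add_white_noise(signal, snr_db, seed=None):
    """Return signal plus white Gaussian noise scaled to the requested SNR in dB."""
    signal = np.asarray(signal, dtype=float)
    if np.isinf(snr_db):
        return signal.copy()                     # infinite SNR: no noise added
    rng = np.random.default_rng(seed)
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise


# Synthetic 60 Hz test signal, 10 s at 10 kHz (arbitrary values for illustration).
t = np.arange(100_000) / 10_000.0
clean = np.sin(2 * np.pi * 60.0 * t)
noisy = add_white_noise(clean, 20, seed=0)
measured_snr_db = 10.0 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
```

Because the noise is random, the measured SNR of a finite record only approximates the target; for a record of this length the two agree closely.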

4.3. Compare the Feature-Selection Approaches: ReliefF, CFS, and CFFS

- (1) In ∞ dB, ReliefF: 10 features and 92.8%, CFS: 7 features and 92.02%, CFFS: 3 features and 93%;

- (2) In 40 dB, ReliefF: 10 features and 92.7%, CFS: 7 features and 91.9%, CFFS: 3 features and 93%;

- (3) In 30 dB, ReliefF: 10 features and 90.4%, CFS: 7 features and 90.7%, CFFS: 3 features and 93%;

- (4) In 20 dB, ReliefF: 14 features and 87.6%, CFS: 11 features and 88.3%, CFFS: 4 features and 92.8%;

- (5) In 10 dB, ReliefF: 22 features and 70.3%, CFS: 20 features and 70.3%, CFFS: 6 features and 92%.

- (1) In ∞ dB, ReliefF: 9 features and 78.2%, CFS: 13 features and 81.3%, CFFS: 7 features and 74.8%;

- (2) In 40 dB, ReliefF: 9 features and 77.6%, CFS: 13 features and 79.6%, CFFS: 6 features and 73.5%;

- (3) In 30 dB, ReliefF: 9 features and 72.9%, CFS: 13 features and 75.2%, CFFS: 6 features and 73%;

- (4) In 20 dB, ReliefF: 9 features and 60.4%, CFS: 13 features and 62.9%, CFFS: 6 features and 72.3%;

- (5) In 10 dB, ReliefF: 9 features and 43.8%, CFS: 13 features and 44.6%, CFFS: 6 features and 71.5%.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Symbol | Description |
|---|---|
| aj | approximation coefficient |
| | instantaneous amplitude |
| ci | intrinsic mode function |
| dj | detail coefficient |
| | distance between fh and fnh |
| | distance between fh and fnm |
| | sum of the distance between fh and fnmb |
| | one value of |
| | nearest values of with |
| | nearest values of with |
| | nearest values of other classification different with |
| g0 | filter coefficients 1 |
| h0 | filter coefficients 2 |
| | maximum times of sampling |
| | the class belong |
| nf | number of features |
| | the all classification |
| | correlation between feature and classification |
| | the average of RfFi |
| | the average of Rfij |
| | initial value of correlation |
| ψ(t) | wavelet function |
| φ(t) | scaling function |
| | instantaneous phase angle |
| | instantaneous frequency |


| | a5 | d5 | d4 | d3 | d2 | d1 |
|---|---|---|---|---|---|---|
| Tmax | F1 | F2 | F3 | F4 | F5 | F6 |
| Tmin | F7 | F8 | F9 | F10 | F11 | F12 |
| Tmean | F13 | F14 | F15 | F16 | F17 | F18 |
| Tmse | F19 | F20 | F21 | F22 | F23 | F24 |
| Tstd | F25 | F26 | F27 | F28 | F29 | F30 |
| Fmax | F31 | F32 | F33 | F34 | F35 | F36 |
| Fmin | F37 | F38 | F39 | F40 | F41 | F42 |
| Fmean | F43 | F44 | F45 | F46 | F47 | F48 |
| Fmse | F49 | F50 | F51 | F52 | F53 | F54 |
| Fstd | F55 | F56 | F57 | F58 | F59 | F60 |

| | | max | min | mean | mse | std |
|---|---|---|---|---|---|---|
| EMD | c1 | F1 | F2 | F3 | F4 | F5 |
| | c2 | F6 | F7 | F8 | F9 | F10 |
| | c3 | F11 | F12 | F13 | F14 | F15 |
| | c4 | F16 | F17 | F18 | F19 | F20 |
| | c5 | F21 | F22 | F23 | F24 | F25 |
| | c6 | F26 | F27 | F28 | F29 | F30 |
| | c7 | F31 | F32 | F33 | F34 | F35 |
| HT | w1 | F36 | F37 | F38 | F39 | F40 |
| | w2 | F41 | F42 | F43 | F44 | F45 |
| | w3 | F46 | F47 | F48 | F49 | F50 |
| | w4 | F51 | F52 | F53 | F54 | F55 |
| | w5 | F56 | F57 | F58 | F59 | F60 |
| | w6 | F61 | F62 | F63 | F64 | F65 |
| | w7 | F66 | F67 | F68 | F69 | F70 |
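The two feature-index tables above follow a regular row-major layout, so a feature number can be decoded arithmetically. The helper below is only a convenience sketch; the function and constant names are hypothetical and not part of the paper.

```python
MRA_STATS = ["Tmax", "Tmin", "Tmean", "Tmse", "Tstd",
             "Fmax", "Fmin", "Fmean", "Fmse", "Fstd"]
MRA_BANDS = ["a5", "d5", "d4", "d3", "d2", "d1"]
HHT_COMPONENTS = [f"c{i}" for i in range(1, 8)] + [f"w{i}" for i in range(1, 8)]
HHT_STATS = ["max", "min", "mean", "mse", "std"]


def mra_feature(number):
    """Map an MRA feature number (1..60) to (statistic, coefficient)."""
    if not 1 <= number <= 60:
        raise ValueError("MRA feature numbers run from 1 to 60")
    row, col = divmod(number - 1, len(MRA_BANDS))
    return MRA_STATS[row], MRA_BANDS[col]


def hht_feature(number):
    """Map an HHT feature number (1..70) to (component, statistic)."""
    if not 1 <= number <= 70:
        raise ValueError("HHT feature numbers run from 1 to 70")
    row, col = divmod(number - 1, len(HHT_STATS))
    return HHT_COMPONENTS[row], HHT_STATS[col]


print(mra_feature(35))  # ('Fmax', 'd2')
print(hht_feature(39))  # ('w1', 'mse')
```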

Feature order, 1st to 10th, for each signal-processing and feature-selection approach:

| Signal Processing | Feature-Selection Approach | 1st | 2nd | 3rd | 4th | 5th | 6th | 7th | 8th | 9th | 10th |
|---|---|---|---|---|---|---|---|---|---|---|---|
| MRA | ReliefF | F35 | F24 | F54 | F60 | F27 | F57 | F30 | F21 | F51 | F58 |
| MRA | CFS | F35 | F54 | F60 | F24 | F57 | F51 | F58 | F30 | F27 | F21 |
| MRA | CFFS | F35 | F57 | F58 | F27 | F24 | F51 | F60 | F30 | F22 | F54 |
| HHT | ReliefF | F5 | F4 | F61 | F32 | F56 | F12 | F40 | F58 | F44 | F10 |
| HHT | CFS | F39 | F40 | F38 | F5 | F4 | F13 | F64 | F65 | F14 | F45 |
| HHT | CFFS | F39 | F5 | F4 | F13 | F64 | F43 | F25 | F46 | F24 | F45 |

| Parameter | Value |
|---|---|
| Hidden layer size | 10 |
| Output layer size | 4 |
| Training ratio | 75/100 |
| Testing ratio | 25/100 |
| Training function | Levenberg-Marquardt |
| Learning rate | 0.007 |
| Iterations | 50 |
| Activation function | Softmax |
| Performance function | Cross-Entropy |
| Transfer function | Hyperbolic tangent sigmoid |
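To make the layer sizes and functions in the table above concrete, the following sketch runs a forward pass through a network with 10 hidden units and 4 outputs. It assumes the hyperbolic tangent is used in the hidden layer and softmax at the output, uses random untrained weights, and omits Levenberg-Marquardt training entirely; the input size of 3 and all names are illustrative.

```python
import numpy as np


def init_network(n_inputs, n_hidden=10, n_outputs=4, seed=0):
    """Random (untrained) weights for a one-hidden-layer classifier."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.5, (n_inputs, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.5, (n_hidden, n_outputs)),
        "b2": np.zeros(n_outputs),
    }


def forward(params, X):
    """tanh hidden layer followed by a softmax output layer; returns class probabilities."""
    hidden = np.tanh(X @ params["W1"] + params["b1"])
    logits = hidden @ params["W2"] + params["b2"]
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(probs, labels):
    """Mean cross-entropy for integer class labels."""
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(picked + 1e-12)))


# Five made-up samples with three selected features each, and made-up class labels.
rng = np.random.default_rng(42)
X = rng.normal(size=(5, 3))
labels = np.array([0, 1, 2, 3, 0])
params = init_network(n_inputs=3)
probs = forward(params, X)
loss = cross_entropy(probs, labels)
```

The number of inputs would follow the size of the selected feature set, so a smaller feature set gives a smaller first weight matrix.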

MRA features selected by ReliefF:

| SNR (dB) | Number of Features | Accuracy (%) | Elements of the Feature Vector |
|---|---|---|---|
| ∞ | 10 | 92.8 | F35, F24, F54, F60, F27, F57, F30, F21, F51, F58 |
| 40 | 10 | 92.7 | F35, F24, F54, F60, F27, F57, F30, F21, F51, F58 |
| 30 | 10 | 90.4 | F35, F24, F54, F60, F27, F57, F30, F21, F51, F58 |
| 20 | 14 | 87.6 | F35, F24, F54, F60, F27, F57, F30, F21, F51, F58, F34, F36, F28, F22 |
| 10 | 22 | 70.3 | F35, F24, F54, F60, F27, F57, F30, F21, F51, F58, F34, F36, F28, F22, F52, F33, F9, F3, F49, F19, F31, F13 |

MRA features selected by CFS:

| SNR (dB) | Number of Features | Accuracy (%) | Elements of the Feature Vector |
|---|---|---|---|
| ∞ | 7 | 92.02 | F35, F54, F60, F24, F57, F51, F58 |
| 40 | 7 | 91.9 | F35, F54, F60, F24, F57, F51, F58 |
| 30 | 7 | 90.7 | F35, F54, F60, F24, F57, F51, F58 |
| 20 | 11 | 88.3 | F35, F54, F60, F24, F57, F51, F58, F30, F27, F21, F52 |
| 10 | 20 | 70.3 | F35, F54, F60, F24, F57, F51, F58, F30, F27, F21, F52, F34, F36, F28, F22, F33, F49, F59, F55, F31 |

MRA features selected by CFFS:

| SNR (dB) | Number of Features | Accuracy (%) | Elements of the Feature Vector |
|---|---|---|---|
| ∞ | 3 | 93 | F35, F57, F58 |
| 40 | 3 | 93 | F35, F57, F58 |
| 30 | 3 | 93 | F35, F57, F58 |
| 20 | 4 | 92.8 | F35, F57, F58, F27 |
| 10 | 6 | 92 | F35, F57, F58, F27, F24, F51 |

HHT features selected by ReliefF:

| SNR (dB) | Number of Features | Accuracy (%) | Elements of the Feature Vector |
|---|---|---|---|
| ∞ | 9 | 78.2 | F5, F4, F61, F32, F56, F12, F40, F58, F44 |
| 40 | 9 | 77.6 | F5, F4, F61, F32, F56, F12, F40, F58, F44 |
| 30 | 9 | 72.9 | F5, F4, F61, F32, F56, F12, F40, F58, F44 |
| 20 | 9 | 60.4 | F5, F4, F61, F32, F56, F12, F40, F58, F44 |
| 10 | 9 | 43.8 | F5, F4, F61, F32, F56, F12, F40, F58, F44 |

HHT features selected by CFS:

| SNR (dB) | Number of Features | Accuracy (%) | Elements of the Feature Vector |
|---|---|---|---|
| ∞ | 13 | 81.3 | F39, F40, F38, F5, F4, F13, F64, F65, F14, F45, F63, F15, F46 |
| 40 | 13 | 79.6 | F39, F40, F38, F5, F4, F13, F64, F65, F14, F45, F63, F15, F46 |
| 30 | 13 | 75.2 | F39, F40, F38, F5, F4, F13, F64, F65, F14, F45, F63, F15, F46 |
| 20 | 13 | 62.9 | F39, F40, F38, F5, F4, F13, F64, F65, F14, F45, F63, F15, F46 |
| 10 | 13 | 44.6 | F39, F40, F38, F5, F4, F13, F64, F65, F14, F45, F63, F15, F46 |

HHT features selected by CFFS:

| SNR (dB) | Number of Features | Accuracy (%) | Elements of the Feature Vector |
|---|---|---|---|
| ∞ | 7 | 74.8 | F39, F5, F4, F13, F64, F43, F25 |
| 40 | 6 | 73.5 | F39, F5, F4, F13, F64, F43 |
| 30 | 6 | 73 | F39, F5, F4, F13, F64, F43 |
| 20 | 6 | 72.3 | F39, F5, F4, F13, F64, F43 |
| 10 | 6 | 71.5 | F39, F5, F4, F13, F64, F43 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, C.-Y.; Wen, M.-S.; Zhuo, G.-L.; Le, T.-A. Application of ANN in Induction-Motor Fault-Detection System Established with MRA and CFFS. Mathematics 2022, 10, 2250. https://doi.org/10.3390/math10132250
