Abstract

A Hopfield neural network is described by a system of nonlinear ordinary differential equations. We develop numerical schemes that are applicable to a wide range of computational problems. We review here our study on the approximate solution of Fredholm integral equations, and of linear and nonlinear singular and hypersingular integral equations, using a continuous method for solving operator equations. This method associates the original system with a Cauchy problem for systems of ordinary differential equations on Hopfield neural networks. We present sufficient conditions for the stability of Hopfield networks, expressed in terms of the coefficients of the systems of differential equations.

Keywords:

Hopfield neural network; singular; hypersingular integral equations; nonlinear differential equations; stability; Cauchy problem; continuous method

MSC:

37C99; 45G05; 65J20; 68V99

1. Introduction

Modeling problems of mathematical physics on Hopfield neural networks (HNN) is based on modeling an artificial neuron, implemented as an electronic circuit, by a nonlinear ordinary differential equation. Within this approach, the i-th neuron connected to the N neurons of the network (including itself) is modeled by the system of differential equations

$$C_i \frac{dv_i(t)}{dt} = -\frac{v_i(t)}{R_i} + \sum_{j=1}^{N} w_{ij}\,\varphi_j(v_j(t)) + I_i, \quad i = 1, \dots, N, \qquad (1)$$

where $w_{ij}$ are the synaptic weights of the neurons, $I_i$ are the currents representing an external bias, $v_i$ are the induced local fields at the input of the nonlinear activation functions $\varphi_i(\cdot)$, and $R_i$ and $C_i$ are the leakage resistances and the leakage capacitances, respectively.
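As a minimal sketch of how such a network settles to an equilibrium, the following Python fragment integrates the neuron equations with the explicit Euler method. It assumes the standard additive form C_i dv_i/dt = -v_i/R_i + sum_j w_ij tanh(v_j) + I_i; the choice of tanh as activation function, the weight matrix `W`, the bias `BIAS`, the step `dt` and the helper names are all illustrative assumptions, not values taken from the paper. The weight matrix is deliberately non-symmetric.

```python
import math


def hopfield_rhs(v, w, bias, r, c):
    """Right-hand side dv/dt of the additive Hopfield model (assumed form)."""
    n = len(v)
    return [
        (-v[i] / r[i] + sum(w[i][j] * math.tanh(v[j]) for j in range(n)) + bias[i]) / c[i]
        for i in range(n)
    ]


def simulate(v0, w, bias, r, c, dt=0.05, steps=2000):
    """Explicit Euler integration of the network starting from the state v0."""
    v = list(v0)
    for _ in range(steps):
        dv = hopfield_rhs(v, w, bias, r, c)
        v = [vi + dt * di for vi, di in zip(v, dv)]
    return v


# Hypothetical two-neuron network with a non-symmetric synaptic matrix.
W = [[0.0, 0.5], [-0.3, 0.0]]
BIAS = [0.2, -0.1]
R = [1.0, 1.0]
C = [1.0, 1.0]

# Two different initial states are driven to the same equilibrium.
v_a = simulate([2.0, -2.0], W, BIAS, R, C)
v_b = simulate([-1.0, 3.0], W, BIAS, R, C)
print(v_a, v_b)
```

With these parameters the leakage term dominates the coupling, so the trajectory is contracted at every step and both runs end at the same state.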

Since the 1980s, methods of modeling numerical solutions on artificial neural networks have attracted serious attention [1,2,3,4,5,6,7,8,9,10,11,12]. A detailed bibliography of the works carried out in this direction can be found in [1,2,3,4,5,12]. The books [1,4,5] are of encyclopedic character. They describe, with different degrees of detail, the principal results concerning the architecture, training and practical applications of artificial neural networks (ANN). The article [13] is a pioneering one; it demonstrated the possibility of solving computational problems with devices assembled from a large number of quite simple standardized elements. The paradigm of Hopfield neural networks was also introduced in this work.

Some of the listed works are devoted to solving particular problems on the basis of artificial neural networks. For instance, the works [14,15] concern the optimization problem, the works [16,17] describe solving linear and nonlinear algebraic equations with neural networks, and the works [3,7,9,10,11] exploit the application of ANN to ordinary and partial differential equations. The work [6] is devoted to a more difficult and special problem of solving Diophantine equations. General problems of information science are elucidated in the context of ANN in the book [2]. The problem of parametric identification of dynamical systems with HNNs is described in [18].

Along with modeling known numerical methods on artificial neural networks, it is of interest to develop special modeling methods designed to solve problems of mathematical physics with the ANNs and, first of all, with the HNNs. One of these methods is the continuous operator method [19]. Here is a brief overview of the works carried out in this direction.

The predecessors of the HNN are, to some extent, analog computers.

In the second half of the 20th century, a separate branch of computer technology associated with analog computers was actively developing. Analog computers offered high performance (operations were performed at the speed of light) but low accuracy due to the imperfection of their component base, and by the end of the century they had been displaced from many fields of application, in particular from computational mathematics, by digital computers. Currently, analog computers are used as part of specialized control systems. However, we note that analog computers have recently experienced a renaissance, albeit on a different component base [20].

Note that analog computers are used to solve the following systems of nonlinear differential equations

Here, are real numbers, are continuous functions, and are continuous functions. The parameter T is determined by the problem to be solved and the design of the machine, and H is determined by the design of the machine.

From the comparison of Equations (1) and (2), it follows that the fields of applications of Hopfield’s neural networks and analog computers coincide. Therefore, the results presented in this article in terms of Hopfield neural networks are applicable to analog computers. A detailed presentation of the methods for solving systems of algebraic and ordinary differential equations on analog computers can be found in [21].

A review of works devoted to approximate methods for solving systems of algebraic equations and systems of ordinary differential equations on analog computers and HNN shows that mostly regular (non-singular) equations have been solved.

Meanwhile, a large class of problems from various branches of mathematics, physics and engineering has remained outside the scope of HNN applications. These are problems that are ill-posed in the sense of Hadamard, spectral problems, inverse problems and many others. Applying the continuous operator method [19] to various ill-posed problems (coefficient problems for parabolic and hyperbolic equations, solving systems of linear algebraic equations with non-invertible matrices) has demonstrated the capabilities of the method.

Obviously, the continuous operator method can be implemented directly on HNN of any technological origin. This extends the range of problems that can be solved on HNN.

The main goal of this work is to demonstrate the relation between the continuous method for solving nonlinear operator equations and HNN and, thereby, to extend the range of problems solved on HNN.

In [13], J.J. Hopfield researched the application of “biological computers” to computer design. The architecture of machines built by analogy with biological objects is based on a very large number of interconnected and very simple computing nodes of the same type, called neurons.

In the article [14], J.J. Hopfield and D.W. Tank demonstrated the possibility of implementing such computers, called Hopfield neural networks, using simple circuits made up of resistors, capacitors and inductors. In [14], the energy function was introduced and the stability of neural networks was studied based on the second Lyapunov method. Note that in [22,23] the stability of Hopfield neural networks has been studied based on the first Lyapunov method.

Starting from [13,14], Hopfield neural networks began to be widely used to solve optimization problems [15], matrix inversion [16], and parametric identification of dynamic systems [18]. In [17], Hopfield neural networks are applied to solve systems of nonlinear algebraic equations.

In works known to the authors, numerical methods for solving problems of mathematical physics on artificial neural networks (ANN) are based on methods for minimizing the corresponding functionals.

In this paper, we review the works of the authors devoted to methods for solving the equations of mathematical physics on the HNN. These methods are based on conditions for stability of the solutions to systems of ordinary differential equations.

Stability of the neural network is crucial for modeling numerical methods with the network. Usually, stability of the network is substantiated on the grounds of symmetry of the synaptic matrix, and the HNN energy is used as the Lyapunov function. Here, we obtain more general stability conditions that are applicable even for non-symmetric synaptic matrices.

2. Notation and Basic Definitions

Let us first introduce the notation used in the work.

Let B be a Banach space, and , K is a linear operator on B, is the logarithmic norm [24] of the operator K, is the conjugate operator to K, and I is the identity operator.

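The analytical expressions for logarithmic norms are known for operators in many spaces. We restrict ourselves to a description of the following three norms. Let $A = \{a_{ij}\}$, $i, j = 1, 2, \dots, n$, be a matrix. In the n-dimensional space $R^n$ of vectors $x = (x_1, \dots, x_n)$ the following norms are often used:

- octahedral: $\|x\|_1 = \sum_{i=1}^{n} |x_i|$;

- cubic: $\|x\|_2 = \max_{1 \le i \le n} |x_i|$;

- spherical (Euclidean): $\|x\|_3 = \left( \sum_{i=1}^{n} |x_i|^2 \right)^{1/2}$.

Here are the analytical expressions for the logarithmic norm of a matrix consistent with the vector space norms given above:

octahedral logarithmic norm

$$\Lambda_1(A) = \max_{1 \le j \le n} \Big( \operatorname{Re} a_{jj} + \sum_{i=1,\, i \ne j}^{n} |a_{ij}| \Big);$$

cubic logarithmic norm

$$\Lambda_2(A) = \max_{1 \le i \le n} \Big( \operatorname{Re} a_{ii} + \sum_{j=1,\, j \ne i}^{n} |a_{ij}| \Big);$$

spherical (Euclidean) logarithmic norm

$$\Lambda_3(A) = \lambda_{\max}\Big( \frac{A + A^*}{2} \Big),$$

where $A^*$ is the conjugate matrix for $A$.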

Note that the logarithmic norm of the same matrix can be positive in one space and negative in another. Moreover, it is known that a linear combination with positive coefficients of any finite number of norms is also a norm.
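The remark that the sign of the logarithmic norm depends on the chosen space can be checked numerically. The sketch below assumes the standard formulas for the three logarithmic norms of a real matrix: the maximum over columns (octahedral) or rows (cubic) of the diagonal entry plus the absolute off-diagonal sum, and the largest eigenvalue of the symmetric part (spherical). The function names, the test matrix `A` and the use of a shifted power iteration for the largest eigenvalue are illustrative choices, not part of the original text.

```python
import math


def log_norm_octahedral(a):
    """Max over columns j of a_jj + sum_{i != j} |a_ij| (real matrix)."""
    n = len(a)
    return max(a[j][j] + sum(abs(a[i][j]) for i in range(n) if i != j) for j in range(n))


def log_norm_cubic(a):
    """Max over rows i of a_ii + sum_{j != i} |a_ij| (real matrix)."""
    n = len(a)
    return max(a[i][i] + sum(abs(a[i][j]) for j in range(n) if j != i) for i in range(n))


def log_norm_spherical(a, iterations=500):
    """Largest eigenvalue of (A + A^T)/2, found by a shifted power iteration."""
    n = len(a)
    s = [[0.5 * (a[i][j] + a[j][i]) for j in range(n)] for i in range(n)]
    # Shifting by a bound on the spectral radius makes S + shift*I positive semidefinite.
    shift = max(sum(abs(v) for v in row) for row in s)
    x = [1.0] * n
    for _ in range(iterations):
        y = [sum(s[i][j] * x[j] for j in range(n)) + shift * x[i] for i in range(n)]
        norm = math.sqrt(sum(v * v for v in y))
        if norm == 0.0:
            return -shift
        x = [v / norm for v in y]
    y = [sum(s[i][j] * x[j] for j in range(n)) + shift * x[i] for i in range(n)]
    return sum(xi * yi for xi, yi in zip(x, y)) - shift


# Hypothetical example: positive octahedral norm, negative cubic and spherical norms.
A = [[-3.0, 2.0], [0.5, -1.0]]
print(log_norm_octahedral(A), log_norm_cubic(A), log_norm_spherical(A))
```

For this matrix the octahedral logarithmic norm equals 1, while the cubic one equals -0.5 and the spherical one is approximately -0.399, so a stability test based on the logarithmic norm succeeds in two of the three spaces.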

The logarithmic norm has some properties very useful for numerical mathematics.

Let be matrices with complex elements and , , , are n-dimensional vectors with complex components. Let the following systems of algebraic equations: and be given. The vector norm and the subordinate operator norm of a matrix are assumed to be consistent; the logarithmic norm corresponds to this operator norm.

Theorem 1

([25]). If $\Lambda(A) < 0$, the matrix A is non-singular and $\|A^{-1}\| \le 1/|\Lambda(A)|$.

Theorem 2

([25]). Let , and , . Then

Some properties of the logarithmic norm in a Banach space which are useful in numerical mathematics are given in [24].

3. Continuous Methods for Solving Operator Equations

Extensive literature is devoted to approximate methods for solving nonlinear operator equations, and a detailed bibliography on this subject can be found in the books [26,27]. At the same time, discrete methods have mainly been considered, among which, first of all, we should note the methods of simple iteration and Newton-Kantorovich. The study of continuous analogues of the Newton-Kantorovich method began, apparently, with the article [28]. Later, continuous analogs of the Newton-Kantorovich method were widely used for solving numerous problems in physics [29,30].

Let us present several statements about continuous methods for solving operator equations, which will be used below when substantiating computational methods.

Let the nonlinear operator equation

map from the Banach space B to B. Here, is a non-linear operator.

Consider the Cauchy problem in a Banach space B

We assume that the operator A has a continuous Gateaux (Frechet) derivative.
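The idea of replacing an operator equation by a Cauchy problem can be sketched in a few lines of Python. The fragment assumes that the equation has the form A(x) = f and that the associated Cauchy problem is dx/dt = A(x(t)) - f, x(0) = x_0, integrated here by the explicit Euler method. The operator `operator_a`, the solution `X_STAR`, the step `dt` and the initial values are hypothetical; the operator is chosen so that the cubic logarithmic norm of its Jacobian does not exceed -1.5 at every point.

```python
import math


def operator_a(x):
    """Hypothetical nonlinear operator on R^2 whose Jacobian has a negative logarithmic norm."""
    return [
        -2.0 * x[0] + 0.5 * math.sin(x[1]),
        -3.0 * x[1] + 0.5 * math.cos(x[0]),
    ]


def continuous_method(f, x0, dt=0.1, steps=400):
    """Explicit Euler integration of dx/dt = A(x) - f starting from x0."""
    x = list(x0)
    for _ in range(steps):
        ax = operator_a(x)
        x = [xi + dt * (ai - fi) for xi, ai, fi in zip(x, ax, f)]
    return x


# Manufactured right-hand side: f = A(x*) for a chosen solution x*.
X_STAR = [0.5, -1.0]
F = operator_a(X_STAR)

# Two distant initial values lead to the same stationary point x*.
sol_1 = continuous_method(F, [10.0, 10.0])
sol_2 = continuous_method(F, [-7.0, 4.0])
print(sol_1, sol_2)
```

No derivative of the operator is evaluated and no linear system is solved; only the residual A(x) - f is needed at each step.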

Theorem 3

Theorem 4

([19]). Let Equation (3) have a solution Let the following conditions be satisfied on any differentiable curve in a ball :

(1) for any , the inequality

holds;

(2) the following equality is true:

If the conditions (6) and (7) are not satisfied, it is necessary to transform Equation (3) and the Cauchy problem (4). For this purpose, we employ a symmetrizing version of the operator. The symmetrization is performed by an adjoint derivative operator acting on Equation (3) from the left. As a result, the derivative of the operator becomes symmetric and non-negative. Let be the Gateaux (Frechet) derivative of operator We will transform Equation (3) to the form

where is the operator conjugated to .

The following statement is valid.

Theorem 5.

Let Equation (8) have a unique solution in the ball Let the following conditions be satisfied for any differentiable curve lying in the ball :

(1) for any , the inequality

holds;

(2) the equality

is valid.

The proof of the theorem is based on the sufficient condition for stability of solutions of differential equations in Banach spaces.

Let the differential equation be in a Banach space B

where the nonlinear operator has a Gateaux derivative, , and the spectrum of the operator is in the left half-plane of the complex plane and on the imaginary axis.

Theorem 6

([31]). Let the following conditions be satisfied on any differentiable curve φ that lies in the ball : (1) (2)

Then, a trivial solution to Equation (13) is asymptotically stable.

The validity of Theorem 5 follows from this statement. Indeed, the spectrum of the operator is in the left half-plane of the complex plane and on the imaginary axis. The asymptotic stability for the solution of Equation (9) for any initial value in the ball follows from Theorem 6. Thus, and Therefore, the solution of Equation (9) converges to the solution
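The effect of the symmetrization can be illustrated on a linear example. The sketch below assumes that, for a linear operator A(x) = Ax, the transformed Cauchy problem takes the form dx/dt = -A^T (Ax - b), which is the gradient flow of the squared residual; the matrix `A`, the solution `X_STAR`, the Euler step and all function names are hypothetical. The matrix is chosen with a positive logarithmic norm, so the untransformed flow dx/dt = Ax - b moves away from the solution.

```python
def mat_vec(a, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def transpose(a):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]


def euler_flow(rhs, x0, dt, steps):
    """Explicit Euler integration of dx/dt = rhs(x)."""
    x = list(x0)
    for _ in range(steps):
        x = [xi + dt * di for xi, di in zip(x, rhs(x))]
    return x


A = [[2.0, 1.0], [-1.0, 3.0]]   # symmetric part is diag(2, 3): positive logarithmic norm
X_STAR = [1.0, -2.0]
B = mat_vec(A, X_STAR)
A_T = transpose(A)


def residual(x):
    return [ri - bi for ri, bi in zip(mat_vec(A, x), B)]


def plain_rhs(x):
    """Untransformed flow dx/dt = Ax - b (unstable for this matrix)."""
    return residual(x)


def symmetrized_rhs(x):
    """Symmetrized flow dx/dt = -A^T (Ax - b)."""
    return [-g for g in mat_vec(A_T, residual(x))]


def error(x):
    return max(abs(xi - si) for xi, si in zip(x, X_STAR))


x_plain = euler_flow(plain_rhs, [0.0, 0.0], 0.1, 200)
x_sym = euler_flow(symmetrized_rhs, [0.0, 0.0], 0.1, 200)
print(error(x_plain), error(x_sym))
```

The symmetrized flow has the Jacobian -A^T A, whose spectrum lies in the left half-plane whenever A is non-singular, which is why it converges where the plain flow does not.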

If conditions (11) and (12) are not met, it is necessary to use regularization. Consider the Cauchy problem

Here, is a regularization parameter.

The Cauchy problem (14) has a solution for any initial value. Moreover, this solution satisfies the equation

and does not satisfy Equation (8).

The continuous method for solving nonlinear equations has the following advantages over the standard Newton-Kantorovich method:

(1) The existence of an inverse operator is not required for the Gateaux (Frechet) derivative of the nonlinear operator;

(2) If the inequality holds on any differentiable function then the convergence of the method does not depend on initial conditions.

4. Representation of Functions of Multiple Variables on Hopfield Neural Networks

In this section we study the methods of representation for functions of multiple variables and for localization of the minimum (maximum) of such functions using an artificial neural network.

When constructing neural networks for solving numerous problems in physics and engineering, the problem of representation for the functions of multiple variables on neural networks arises. The issue is due to the fact that the Hopfield networks compute linear and nonlinear functions of one dynamic variable. They also perform superposition and addition operations when networks [3,5] are cascaded. Therefore, algorithms for representing multiple variables’ functions by superpositions of one variable’s functions and the addition operation are of great interest. Representations of multiple variables’ functions by superpositions of one variable’s functions and the addition operation were obtained by V.I. Arnold and A.N. Kolmogorov [32,33]. The representations have a rather complicated form. Their application to designing neural networks is difficult. Thus, the exact representation of multiple variables’ functions on neural networks is hardly possible, and approximate methods are of considerable interest.

An approximate method for function representation on neural networks is based on Stone’s theorem [2]. The possibility of approximating functions of multiple variables with any degree of accuracy by superpositions and linear combinations of functions of one variable was studied in [2,4]. Important questions of approximation accuracy, and especially of the choice of the best basis, remain open. A number of research results and an extensive literature on the representation of continuous functions with neural networks are given in [34].

The second approach to an approximate representation of functions of multiple variables by functions of one variable uses various sweep methods [35]. However, the application of these methods in neural networks seems to be problematic. Therefore, it is of interest to develop an easy-to-implement and sufficiently accurate approximate method for representing functions of multiple variables in neural networks. One such method is presented below. Note that this method also allows us to find extreme values of functions of multiple variables.

Today, the problem of finding the extreme values of functions of multiple variables is widely studied. A detailed literature review on the subject can be found in [35].

Let . Let be prime numbers, .

We show that the extreme values of the function are approximated with high accuracy by the extreme values of the function . It is sufficient to consider the minimum of the function. We assume that the function satisfies the Lipschitz condition

Without loss of generality, we assume that the function in D has a unique maximum at . Let yield its maximum at .

Let be a residue class t modulo The set , for form a set of parallel lines, which for intersect at . Obviously, .

Let . We want to prove .

Let be the value of t such that , and yields its minimum . However, from (16), it follows that .

Thus, it was shown that the extreme value of is located at near .

Take several sets of natural numbers . Find the minima of the functions . Construct near , , of points , , .

The extreme point is at the intersection of the neighborhoods.

This way the problem of the extreme values of multiple variables’ functions is reduced to the problem of finding the extreme values of functions of one variable. Numerous works are devoted to the solution of the latter problem on artificial neural networks (see [3,5]).

5. Multiple Integrals’ Evaluations on Hopfield Neural Networks

This section is devoted to the evaluation of multiple integrals of periodic functions on Hopfield networks. The suggested method applies to multiple integrals of any finite dimension. Without loss of generality, we consider two-dimensional integrals. The method for evaluating integrals of functions of multiple variables was presented in [36], where the functions were expanded in Fourier series. An accuracy estimate of the method on certain classes of functions was also given.

The method is based on the following statement ([36,37]).

Lemma 1.

Let be a continuous periodic function with respect to each variable, let N be a natural number, let be prime and Then,

where

where is the best approximation of by a trigonometric polynomial of N degree with respect to each variable.

The convergence rate is defined in terms of constants C that here do not depend on N, and the functional classes are defined as follows.

Evaluating the integral in the right-hand side of (17) with the rectangle quadrature formula, we have

where are prime numbers,

The estimate holds

Recall the definition of the class of functions Let the function be continuous in an l-dimensional cube defined by the inequalities and be periodic with a period in each variable . stands for the Fourier coefficients of the function. We also introduce defined as for , and for . We say that belongs to the class , if , where , and the constant A does not depend on .

One can evaluate multiple periodic function integrals on Hopfield networks by formula (17). To do so, it is enough to solve the Cauchy problem

Then,

This method for integral evaluation can be used to calculate the Fourier coefficients of multiple variable functions on the basis of trigonometric functions.
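A minimal numerical sketch of this reduction is given below. It assumes that the double integral of a 2π-periodic function over the square [0, 2π]² is replaced by 2π times the single integral of f(a1 t, a2 t) over [0, 2π], and that the single integral is obtained by solving the Cauchy problem dy/dt = f(a1 t, a2 t), y(0) = 0 up to t = 2π with the explicit Euler method. The integrand, the integers a1 = 2, a2 = 3 and the function names are hypothetical; the replacement is exact here because no harmonic (k1, k2) of the integrand satisfies k1 a1 + k2 a2 = 0.

```python
import math


def integrand(x, y):
    """Hypothetical trigonometric polynomial, 2*pi-periodic in each variable."""
    return 1.0 + math.cos(x) * math.sin(2.0 * y) + 0.5 * math.cos(3.0 * x)


def integrate_along_line(f, a1, a2, steps=2000):
    """Approximate the double integral of f over [0, 2*pi]^2.

    Solves dy/dt = f(a1*t, a2*t), y(0) = 0 on [0, 2*pi] by explicit Euler steps
    and scales the result by 2*pi (assumed form of the reduction formula).
    """
    dt = 2.0 * math.pi / steps
    y = 0.0
    for k in range(steps):
        t = k * dt
        y += dt * f(a1 * t, a2 * t)
    return 2.0 * math.pi * y


approx = integrate_along_line(integrand, 2, 3)
exact = (2.0 * math.pi) ** 2   # only the constant term contributes to the double integral
print(approx, exact)
```

For a periodic integrand the Euler sum coincides with the rectangle quadrature rule, which is exact for trigonometric polynomials of degree below the number of steps.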

6. Approximate Solution of Systems of Algebraic Equations on Hopfield Neural Networks

Consider the system of linear algebraic equations

where

Let the logarithmic norm of matrix A be negative.

We associate the system of algebraic Equations (20) with the Cauchy problem

From the results of Section 2, it follows that, as $t \to \infty$, the solution of the Cauchy problem (21) converges to the solution of the system of algebraic Equations (20) for any initial value .

Thus, by modeling the Cauchy problem (21) on Hopfield neural networks, one obtains a good approximation to the solution of the system (20) for sufficiently large values of t. It is easy to see that the solution is stable with respect to perturbations of the initial values of the Cauchy problem (21). It is also stable with respect to perturbations of the coefficients and right-hand sides of Equation (20). The proof of this statement and the corresponding theorems can be found in [38].
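The following sketch illustrates the convergence and the independence of the initial value on a small example. It assumes that the system has the form Ax = b and that the associated Cauchy problem is dx/dt = Ax(t) - b, x(0) = x_0; the matrix `A`, the right-hand side `B`, the Euler step and the function names are hypothetical. Each row of A satisfies a_ii + sum_{j != i} |a_ij| = -2, so the cubic logarithmic norm of A is negative.

```python
def mat_vec(a, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def solve_by_flow(a, b, x0, dt=0.1, steps=300):
    """Explicit Euler integration of dx/dt = A x - b starting from x0."""
    x = list(x0)
    for _ in range(steps):
        ax = mat_vec(a, x)
        x = [xi + dt * (ai - bi) for xi, ai, bi in zip(x, ax, b)]
    return x


A = [[-4.0, 1.0, 1.0],
     [2.0, -5.0, 1.0],
     [0.0, 1.0, -3.0]]
B = [1.0, -5.0, -7.0]           # equals A applied to the solution (1, 2, 3)

x_a = solve_by_flow(A, B, [0.0, 0.0, 0.0])
x_b = solve_by_flow(A, B, [50.0, -20.0, 7.0])
print(x_a, x_b)
```

Both runs approach the vector (1, 2, 3); with the step 0.1 the error is reduced by a factor of at least 0.8 per iteration in the maximum norm.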

Consider the application of the continuous operator method for solving systems of nonlinear algebraic equations on Hopfield neural networks. For generality, we assume operator equations in Banach spaces.

Consider the nonlinear operator equation

acting from the Banach space X to Here, is a non-linear operator.

Let the Equation (22) have an isolated solution Let us consider the Cauchy problem

By Theorem 4, if for any smooth curve defined in the ball of a Banach space B, the inequalities

hold, then

Remark 1.

Examples of implementations can be found in [38].

7. Approximate Solutions for Fredholm Integral Equations on Hopfield Neural Networks

In this section we solve Fredholm integral equations on Hopfield neural networks. For demonstration, we will use a one-dimensional integral equation of the second kind

with continuous kernels in the right-hand side.

Weakly singular integral equations and multidimensional integral equations are treated similarly.

Let Equation (25) have an isolated solution in

The approximate solution (25) is obtained from the system of equations

where and are coefficients and nodes of the quadrature formula

It is essential to take

In [27] (Theorem 19.5, Chapter 4), solvability conditions of system (26) were presented. The convergence of approximate solutions of (26) to exact solutions of (25) using nodes , was also demonstrated.

We set the following condition to the function : at any interior point of in space there exists the derivative , . Here, stands for the partial derivative with respect to the third variable.

Consider the matrix , where , ; , .

It follows from Theorem 4 that if for then the solution of the system of differential equations

converges to the solution of the system of Equation (26) at the nodes , .
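The scheme can be sketched on a simple test problem. The example below is not Equation (28) of the paper but a hypothetical linear equation x(t) - ∫_0^1 t s x(s) ds = 2t/3 on [0, 1] with the exact solution x(t) = t. It assumes that the discretized system has the form x_i - sum_k α_k K(t_i, t_k) x_k = f(t_i) and that the associated system of differential equations is dx_i/dt = f(t_i) + sum_k α_k K(t_i, t_k) x_k - x_i. The quadrature (a two-point Gauss rule on five panels, giving ten nodes), the Euler step and the function names are illustrative choices.

```python
import math


def gauss2_nodes_weights(a, b, panels):
    """Composite two-point Gauss rule on [a, b]; exact for cubic polynomials."""
    h = (b - a) / panels
    offset = 0.5 * h / math.sqrt(3.0)
    nodes, weights = [], []
    for p in range(panels):
        mid = a + (p + 0.5) * h
        nodes += [mid - offset, mid + offset]
        weights += [0.5 * h, 0.5 * h]
    return nodes, weights


def kernel(t, s):
    """Hypothetical continuous kernel K(t, s) = t * s."""
    return t * s


def rhs_f(t):
    """Right-hand side chosen so that x(t) = t solves the equation."""
    return 2.0 * t / 3.0


def solve_fredholm(panels=5, dt=0.5, steps=200):
    """Euler integration of dx_i/dt = f_i + sum_k w_k K(t_i, t_k) x_k - x_i."""
    nodes, weights = gauss2_nodes_weights(0.0, 1.0, panels)
    x = [0.0] * len(nodes)
    for _ in range(steps):
        new_x = []
        for i, ti in enumerate(nodes):
            integral = sum(w * kernel(ti, s) * xk for w, s, xk in zip(weights, nodes, x))
            new_x.append(x[i] + dt * (rhs_f(ti) + integral - x[i]))
        x = new_x
    return nodes, x


nodes, x = solve_fredholm()
max_error = max(abs(xi - ti) for xi, ti in zip(x, nodes))
print(len(nodes), max_error)
```

The Jacobian of the right-hand side is the quadrature-weighted kernel matrix minus the identity; for this kernel its eigenvalues are -1 and -2/3, so the iterations converge for any initial state.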

We illustrate the method described above with solving the following equation:

The exact solution of the equation reads . Equation (28) has been approximated with a system of 10 ordinary differential equations, solved using Euler’s method with a time step In Table 1 we show the numerical error as a function of the number of iterations N.

Table 1.

Convergence of the method to the solution of the linear integral Equation (28) with respect to the number of iterations. .

8. Approximate Solution of Linear Hypersingular Integral Equations on Hopfield Neural Networks

Let us recall the Hadamard definition of hypersingular integrals [39]. The integral of the type

for an integer , is defined as the limit of the sum

at if one assumes that has p derivatives in the neighborhood of the point b. Here, is any function that satisfies the following two conditions:

(i) The above limit exists;

(ii) has at least p derivatives in the neighborhood of the point b.

The value of the limit in condition (i) does not depend on the arbitrary choice of . Condition (ii) defines the values of the first () derivatives of at the point b, so that an arbitrary additional term in the numerator is an infinitely small quantity, at least of order .

Chikin in [40] introduced the definition of the Cauchy–Hadamard-type integral that generalizes the notion of the singular integral in the Cauchy principal sense and in the Hadamard sense.

The Cauchy–Hadamard principal value of the integral

is defined as the limit of the following expression

where is a function constructed so that the limit exists.

Consider the one-dimensional linear hypersingular integral equation

We impose the following conditions on the coefficients and the right-hand side of Equation (31):

- (1)

- (2)

- (3)

- Equation (31) is uniquely solvable and its solution

Introduce nodes and Let be intervals

An approximate solution (31) is sought in the form of piecewise constant functions

The values are determined from the system of linear algebraic equations

The following statement is true.

Theorem 7

([38,41]). Let the following conditions be satisfied: (1) Equation (31) has the unique solution ; (2) the inequality holds for ; (3) the function satisfies the Lipschitz condition with respect to the second variable. Then, for sufficiently large N, the system of Equations (33) has the unique solution .

The conditions of Theorem 7 are sufficient for the system of Equations (33) to be solved on Hopfield neural networks.

System (33) should be represented in the form

.

When the conditions given in Section 2 are met, the solution of the system

for converges to solution of the system (34) for any initial value.
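A minimal sketch of this procedure for a model problem is given below. It does not reproduce Equation (31); instead it assumes the hypothetical equation with p = 2 in which the Hadamard finite-part integral of x(τ)/(τ - t)² over [-1, 1] equals f(t) = -2/(1 - t²), whose solution is x(t) ≡ 1. The unknown is approximated by a piecewise constant function on N equal intervals with collocation at the midpoints, and the finite-part integral over an interval [a, b] is evaluated in closed form as 1/(a - t) - 1/(b - t). The resulting matrix D has a negative cubic logarithmic norm, so the flow dx/dt = Dx - f is integrated by the Euler method. All names and parameter values are illustrative.

```python
def build_system(n):
    """Collocation matrix and right-hand side for the model hypersingular equation."""
    h = 2.0 / n
    edges = [-1.0 + k * h for k in range(n + 1)]
    mids = [0.5 * (edges[k] + edges[k + 1]) for k in range(n)]
    # Finite-part integral of 1/(tau - t)^2 over [edges[k], edges[k+1]].
    d = [[1.0 / (edges[k] - t) - 1.0 / (edges[k + 1] - t) for k in range(n)] for t in mids]
    f = [-2.0 / (1.0 - t * t) for t in mids]
    return h, d, f


def cubic_log_norm(d):
    """Max over rows of d_ii + sum_{k != i} |d_ik| (real matrix)."""
    n = len(d)
    return max(d[i][i] + sum(abs(d[i][k]) for k in range(n) if k != i) for i in range(n))


def solve_by_flow(d, f, dt, steps):
    """Explicit Euler integration of dx/dt = D x - f from the zero state."""
    n = len(f)
    x = [0.0] * n
    for _ in range(steps):
        dx = [sum(d[i][k] * x[k] for k in range(n)) - f[i] for i in range(n)]
        x = [xi + dt * di for xi, di in zip(x, dx)]
    return x


h, D, F = build_system(20)
log_norm = cubic_log_norm(D)
# The diagonal entries equal -4/h, so the Euler step is tied to the mesh size.
x = solve_by_flow(D, F, dt=0.2 * h, steps=2000)
max_error = max(abs(xi - 1.0) for xi in x)
print(log_norm, max_error)
```

The diagonal entries of D are negative and dominate the positive off-diagonal entries in each row, which is what makes the cubic logarithmic norm negative in this model case.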

Note that the conditions of Theorem 7 guarantee just a unique solvability for the system (33). To prove the convergence of the approximate solution of Equation (31) to its exact solution, one has to construct a more complicated algorithm.

In doing so, let . Divide the interval into subintervals at the points . We seek an approximate solution of (31) in the form of a piecewise continuous function

where , is a family of basis functions.

For nodes the corresponding basis elements are determined by

For boundary nodes and , the corresponding basis elements are defined as

and

The coefficients in (36) are determined from the following system of linear algebraic equations and obtained by approximating the kernel with a polygon and applying a collocation procedure

The system (40) can be rewritten as

Here, indicates a summation over . The system (41) is equivalent to the system

The system (42) can be written in the matrix form

where , , , . The values of , and are determined by matching to the corresponding terms in (42).

The cubic logarithmic norm of the matrix D is estimated as

If by Theorem 2 we can see that the system (31) has a unique solution and It is obvious that is a solution of the system of Equation (41).

Theorem 8.

Let the following conditions be satisfied:

- Equation (31) has the unique solution

- For all , it holds that

- where indicates a summation over

Then, the system of Equation (40) has a unique solution and the following estimate holds .

The system of Equation (35) can be solved by any numerical method. Examples of solving linear hypersingular integral equations by the continuous operator method are given in [38].

Let us now study approximate methods for solving nonlinear hypersingular integral equations

Consider Equation (43) with An approximate solution of (43) is sought in the form of a piecewise constant function (32), in which coefficients are determined from the system of nonlinear algebraic equations

where

Write Equation (44) in the operator form where is a matrix.

We assume that Equation (44) has a solution in the ball in the space and for any differentiable curve , the inequality holds.

Then, the solution of the system of differential equations

converges to the solution of the systems of Equations (44).

Remark 2.

If p is odd, the algorithms proposed and justified in [42] should be used as a basis for constructing the computational process.

Remark 3.

The study in Section 6 is based on the numerical results provided in [38].

9. Continuous Method for Solving Gravity Exploration Problems

Introduce a Cartesian rectangular coordinate system with the axis pointing down.

If an ore body is located at the depth of its lower surface coincides with the plane and the upper surface is described by function with a non-negative function and then the gravitational field on Earth’s surface is described by the equation

where G is the gravitational constant; is the density of the body.

It is assumed that the density outside the body and that the density is differentiable with respect to

To simplify further calculations, we assume that the density does not depend on Then, we obtain the equation

Linearization of Equation (46) leads to

Below, we assume that the density is constant and, for convenience, let

Represent Equation (47) in the form

The problem of logarithmic potential leads to nonlinear integral equations

where is a function describing the surface of a body; is the depth at which the body is located.

A detailed review of the literature of approximate methods for solving inverse problems of gravity exploration is given in [44,45,46].

Here, H is the depth of the body location, is its density, and stands for the contact surface shape.

Similarly, Equation (49) is approximated by

There are three unknowns in (51): the depth of the gravitating body H, the density of the body and the shape of the contact surface . To find them, it is necessary to have three independent sources of information.

Assume that gravity field values are known on surfaces , , Denote , , by , , , respectively. Suppose , , in (51), and we have

Introduce functions , . This way, the system (53) is turned into a linear system of new variables

Applying the Fourier transformation to (54), we have

To solve the systems (54) and (55) we used a continuous method for solving nonlinear operator equations similar to the methods for Fredholm integral equations and for systems of algebraic linear equations described above. The detailed description of computations and their justification is given in [47]. More details on the logarithmic potential case can be found in [48].

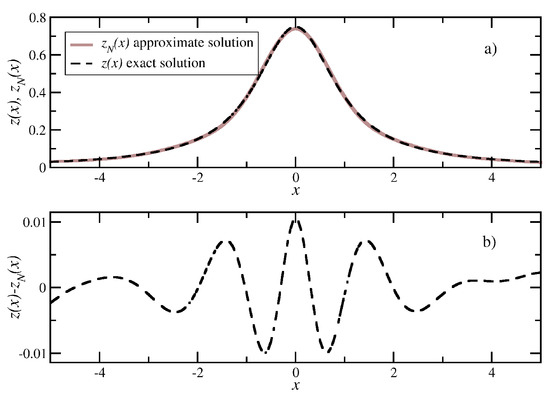

In Figure 1, we show a numerical example of solving Equation (49) using the HNN. In the works cited above, the HNN has been applied to nonlinear approximations of the original equation. Here, we demonstrate an application of the HNN to the original nonlinear equation with the logarithmic potential. Consider Equation (49) with the following parameters: . The exact solution reads . Equation (49) has been solved numerically by solving the Cauchy problem for the following system of nonlinear differential equations

where and the initial state . The system has been solved by Euler’s method with time step . In Figure 1a, we show the final state for N = 180 after 100 iterations together with the exact solution. The numerical and the exact solutions are indistinguishable in the scale of the figure. The corresponding numerical error is shown in Figure 1b.

Figure 1.

Exact and numerical solutions of Equation (49): (a) the solutions, (b) the numerical error for 180 spatial grid points, time step for 100 iterations.

10. Implementation and Numerical Experiments

We noted above that the continuous operator method has been applied for solving inverse coefficients problems [49], hypersingular integral equations [38], and inverse problems in astrophysics [50]. In each of these works, the obtained results were compared with the known ones.

The most significant advantages of the continuous method for solving nonlinear operator equations compared with iterative methods are as follows:

(1) Implementation of the basic method does not require Gateaux or Frechet derivatives of the operator to solve the equation;

(2) Implementation of the modified method does not require invertibility of the Gateaux or Frechet derivatives of the operator to solve the equation;

(3) The continuous operator method is based on Lyapunov’s stability theory for solutions of differential equations. The method is stable with respect to perturbations of the coefficients and right-hand sides of the equations.

In this section, the method’s efficiency is illustrated on the example of solving inverse problems for logarithmic potential. Hereby, we solve the exact equation without any simplifications. As far as the authors know, the inverse problem for logarithmic potential has not been previously solved in such a formulation.

Let us return to Equation (49) and write it in a more convenient form:

where is the function describing the surface of the body; is the density of the body, and H is the occurrence depth of the body.

Recall the issue. There is a gravitating body infinitely extended along the Y-axis and homogeneous along y. In this case, it can be treated as two-dimensional and we restrict ourselves to considering Thus, we consider a body lying in the region Let stand for perturbation of the Earth’s external field. It is required to restore the function given data about

Similar problems are of great practical importance.

It was mentioned above that extensive studies have been devoted to equations of the form (56). They considered either linearized equations or nonlinear approximations of Equation (56).

Below we demonstrate Hopfield neural networks for solving the original Equation (56).

Let , and Here, Introduce the nodes

We will seek an approximate solution in the form of a piecewise constant function

where

The coefficients are determined from the system of nonlinear algebraic equations

The system of Equation (57) is associated with the Cauchy problem

The coefficients are selected so that the logarithmic norm of the Jacobian of the right side of the system (58) is negative.
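The negativity condition on the logarithmic norm can be checked numerically. The following is a minimal sketch, not the authors' code: it evaluates the logarithmic norms induced by the maximum (row) and sum (column) vector norms for a real matrix. The matrix `J` is a hypothetical Jacobian chosen only for illustration and is not taken from system (58); the function names are likewise assumptions.

```python
def log_norm_inf(a):
    """Logarithmic norm induced by the max vector norm:
    max over rows i of a[i][i] + sum_{j != i} |a[i][j]|."""
    n = len(a)
    return max(a[i][i] + sum(abs(a[i][j]) for j in range(n) if j != i)
               for i in range(n))


def log_norm_1(a):
    """Logarithmic norm induced by the sum vector norm:
    max over columns j of a[j][j] + sum_{i != j} |a[i][j]|."""
    n = len(a)
    return max(a[j][j] + sum(abs(a[i][j]) for i in range(n) if i != j)
               for j in range(n))


# Hypothetical Jacobian (illustrative values, not from the paper).
J = [[-3.0, 1.0, 0.5],
     [0.5, -2.0, 0.25],
     [1.0, 0.5, -4.0]]

print("mu_inf(J) =", log_norm_inf(J))
print("mu_1(J)   =", log_norm_1(J))
```

Both values are negative for this matrix, so a linear system with this Jacobian would satisfy the stability condition in either norm. In practice the condition has to hold along the trajectory, so the check would be repeated at the points visited by the solver.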

The system (58) can be solved by any numerical method.

Application of the Euler method leads to the iterative scheme

. Here, h is the step of the Euler method.
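The Euler scheme can be sketched on a small model problem. The example below is an illustration under stated assumptions, not the system (57): the residual function `residual` is a hypothetical two-equation nonlinear system with the known root (1, 0), and the Cauchy problem is taken in the form dx/dt = -F(x). The sign is chosen so that the Jacobian of the right-hand side has a negative logarithmic norm (in the max norm) for |x1| < 2. The step `h` and the number of steps are also illustrative choices.

```python
import math


def residual(x):
    """Hypothetical nonlinear system F(x) = 0 with root (1, 0)."""
    x1, x2 = x
    return [2.0 * x1 + 0.5 * math.sin(x2) - 2.0,
            2.0 * x2 + 0.5 * x1 * x1 - 0.5]


def euler_continuous_method(f, x0, h=0.1, steps=300):
    """Explicit Euler for dx/dt = -F(x): x_{n+1} = x_n - h * F(x_n)."""
    x = list(x0)
    for _ in range(steps):
        fx = f(x)
        x = [xi - h * fi for xi, fi in zip(x, fx)]
    return x


solution = euler_continuous_method(residual, [0.0, 0.0])
print("approximate root:", solution)
print("residual:", residual(solution))
```

No derivative of the operator is evaluated or inverted in the loop, which is the property emphasized for the basic method. The price is that the step `h` must be small enough for the explicit Euler iteration to remain stable.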

The algorithm described above was applied to solve the problem of restoring a gravitating body with the data:

The exact solution of the problem is The function has been computed by the quadrature rule

Detailed computational results are shown in Table 2.

Table 2.

Convergence of the continuous method to solution of nonlinear integral Equation (56) by a number of iterations, .

11. Conclusions

This paper is devoted to approximate methods for solving linear and nonlinear equations of mathematical physics. We used a spline-collocation method based on the continuous method for solving nonlinear operator equations [19]. It has been shown that the computational scheme of the continuous method can be implemented on Hopfield neural networks. The efficiency and flexibility of the approach have been demonstrated by evaluating multiple integrals and by solving Fredholm integral equations and hypersingular integral equations. In addition to the listed examples, the method has been applied efficiently to direct and inverse electromagnetic wave scattering problems [51], the amplitude-phase problem [52,53], Ambartsumian’s systems of equations in astrophysics [50], inverse problems of gravity and magnetic prospecting [47], and direct and inverse problems for parabolic and hyperbolic equations [54].

The authors intend to continue studying the applicability of the continuous method for solving nonlinear operator equations, implemented on Hopfield neural networks, to new classes of equations. The first target is inverse problems in optics, for two reasons. First, the authors have already begun studying the amplitude-phase problem [52,53]. Second, a couple of works devoted to applications of neural networks to inverse problems of restoration have been published recently [55,56]. It is of interest to investigate whether the continuous operator method can be applied to these problems.

As noted above, the continuous method for solving nonlinear operator equations is based on Lyapunov’s stability theory. The authors have studied the stability of Hopfield neural networks [22,57] and considered some issues of stabilization for dynamical systems [58,59]. A new statement of the stabilization problem for dynamical systems was given in [60,61]. The authors intend to use this formulation in forthcoming work.

Author Contributions

Conceptualization, I.B.; Data curation, V.R. and A.B.; Formal analysis, I.B. and V.R.; Investigation, I.B. and V.R.; Methodology, I.B. and V.R.; Software, V.R. and A.B.; Writing – original draft, I.B.; Writing – review & editing, V.R. and A.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Galushkin, A.I. Theory of Neural Networks; IPRZHR: Moscow, Russia, 2000; 416p.

2. Gorban, A.N.; Dunin-Barkovsky, V.L.; Kirdin, A.N.; Mirkes, E.M.; Novokhod’ko, A.Y.; Rossiev, D.A.; Terekhov, S.A.; Senashova, M.Y.; Tzargorodtzev, V.G. Neuroinformatics; Siberian Enterprise “Science”: Novosibirsk, Russia, 1998; 296p.

3. Gorbachenko, V.I. Neurocomputers in Solving Boundary Value Problems of Field Theory; Radio Engineering: Moscow, Russia, 2003; 336p.

4. Gupta, M.M.; Jin, L.; Homma, N. Static and Dynamic Neural Networks from Fundamentals to Advanced Theory; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2005; 722p.

5. Haykin, S. Neural Networks: A Comprehensive Foundation; Prentice Hall: Hoboken, NJ, USA, 1999; 842p.

6. Joya, G.; Atencia, M.A.; Sandoval, F. Application of high-order Hopfield neural networks to the solution of Diophantine equations. Lect. Notes Comput. Sci. 1991, 540, 395–400.

7. Lagaris, I.E.; Likas, A.C.; Fotiadis, D.I. Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans. Neural Netw. 1998, 9, 987–1000.

8. Lagaris, I.E.; Likas, A.C.; Papageorgiou, D.G. Neural-network methods for boundary value problems with irregular boundaries. IEEE Trans. Neural Netw. 2000, 11, 1041–1049.

9. Lee, H.; Kang, I.S. Neural algorithm for solving differential equations. J. Comput. Phys. 1990, 91, 110–131.

10. Mehdi, D.; Mojtaba, N.; Menhaj, M.B. Numerical solution of Helmholtz equation by the modified Hopfield finite difference technique. Numer. Partial Differ. Equ. 2009, 25, 637–656.

11. Nesterenko, B.B.; Novotarsky, M.A. Solution of boundary value problems on discrete cellular neural networks. Artif. Intell. 2008, 3, 568–578.

12. Tarkhov, D.A. Neural Networks as a Means of Mathematical Modeling; Radio Engineering: Moscow, Russia, 2006; 48p.

13. Hopfield, J.J. Neural Networks and Physical Systems with Emergent Collective Computational Abilities. Proc. Natl. Acad. Sci. USA 1982, 79, 2554–2558.

14. Hopfield, J.J.; Tank, D.W. Neural Computation of decision in Optimization problems. Biol. Cybern. 1985, 52, 141–152.

15. Tank, D.W.; Hopfield, J. Simple Neural Optimization: An A/D Converter, a Single Decision Circuit and Linear Programming Circuit. IEEE Trans. Circuit Syst. 1991, 33, 137–142.

16. Jang, J.S.; Lee, S.Y.; Shin, S.Y. An Optimization Network for Solving a Set of Simultaneous Linear Equations. In Proceedings of the IJCNN International Joint Conference on Neural Networks, Baltimore, MD, USA, 7–11 June 1992; pp. 516–521.

17. Mishra, D.; Kalra, P.K. Modified Hopfield Neural Network Approach for Solving Nonlinear Algebraic Equations. Eng. Lett. 2007, 14, 135–142.

18. Atencia, M.; Joya, G.; Sandoval, F. Hopfield Neural Networks for Parametric Identification of Dynamical Systems. Neural Process. Lett. 2005, 21, 143–152.

19. Boikov, I.V. On a continuous method for solving nonlinear operator equations. Differ. Equ. 2012, 48, 1308–1314.

20. Potapov, A.A.; Gilmutdinov, A.K.; Ushakov, P.A. Fractal Elements and Radio Systems: Physical Aspects; Radio Engineering: Moscow, Russia, 2009; 200p.

21. Eterman, I.I. Analogue Computers; Pergamon Press: New York, NY, USA, 1960; 264p.

22. Boikov, I.V. Stability of Hopfield neural networks. Autom. Remote Control 2003, 64, 1474–1487.

23. Boikov, I.V. Stability of Solutions of Differential Equations; Publishing House of Penza State University: Penza, Russia, 2008; 244p.

24. Daletskii, Y.L.; Krein, M.G. Stability of Solutions of Differential Equations in Banach Space; Nauka: Moscow, Russia, 1970; 536p.

25. Lozinskii, S.M. Note on a paper by V.S. Godlevskii. USSR Comput. Math. Math. Phys. 1973, 13, 232–234.

26. Kantorovich, L.V.; Akilov, G.P. Functional Analysis in Normed Spaces; Pergamon Press: Oxford, UK, 1982; 604p.

27. Krasnoselskii, M.A.; Vainikko, G.M.; Zabreiko, P.P.; Rutitcki, J.B.; Stecenko, V.J. Approximated Solutions of Operator Equations; Walters and Noordhoff: Groningen, The Netherlands, 1972; 484p.

28. Gavurin, M.K. Nonlinear functional equations and continuous analogues of iterative methods. Izv. Univ. Math. 1958, 5, 18–31.

29. Puzynina, T.P. Modified Newtonian Schemes for the Numerical Study of Quantum Field Models. Abstract of Doctoral Dissertation, Tver State University, Tver, Russia, 2003.

30. Puzynin, I.V.; Boyadzhiev, T.L.; Vinitsky, S.I.; Zemlyanaya, E.V.; Puzynina, T.P.; Chuluunbaatar, O. On the methods of computational physics for the study of models of complex physical processes. Phys. Elem. Part. At. Nucl. 2007, 38, 144–232.

31. Boikov, I.V. On the stability of solutions of differential and difference equations in critical cases. Sov. Math. Dokl. 1990, 42, 630–632.

32. Arnold, V.I. On functions of three variables. Dokl. AN SSSR 1957, 144, 679–681.

33. Kolmogorov, A.N. On the representation of continuous functions of several variables as superpositions of continuous functions of one variable and addition. Dokl. AN SSSR 1957, 114, 953–956.

34. Kurkova, V.; Sanguineti, M. Bounds on rates of variable-basis and neural-network approximation. IEEE Trans. Inf. Theory 2001, 47, 2659–2665.

35. Strongin, R.G.; Sergeev, Y.D. Global Optimization with Non-Convex Constraints: Sequential and Parallel Algorithms; Kluwer Academic Publishers: Dordrecht, The Netherlands, 2000; 728p.

36. Boykov, I.V. Optimal Function Approximation Methods and Calculation of Integrals; Publishing House of Penza State University: Penza, Russia, 2007; 236p.

37. Boikov, I.V.; Roudnev, V.A.; Boikova, A.I. Approximate solution of problems of mathematical physics on Hopfield neural networks. Neurocomput. Dev. Appl. 2013, 10, 13–22.

38. Boykov, I.V.; Roudnev, V.A.; Boykova, A.I.; Baulina, O.A. New iterative method for solving linear and nonlinear hypersingular integral. Appl. Numer. Math. 2018, 127, 280–305.

39. Hadamard, J. Lectures on Cauchy’s Problem in Linear Partial Differential Equations; Dover Publication Inc.: New York, NY, USA, 1952; 334p.

40. Chikin, L.A. Special cases of the Riemann boundary value problems and singular integral equations. Sci. Notes Kazan State Univ. 1953, 113, 53–105.

41. Boykov, I.V.; Ventsel, E.S.; Roudnev, V.A.; Boykova, A.I. An approximate solution of nonlinear hypersingular integral equations. Appl. Numer. Math. 2014, 86, 1–21.

42. Boykov, I.V.; Boykova, A.I. Approximate solution of hypersingular integral equations with odd singularities of integer order. Univ. Proc. Volga Reg. Phys. Math. Sci. Math. 2010, 3, 15–27.

43. Strakhov, V.N. Some questions of the plane problem of gravimetry. Proc. Acad. Sci. USSR Phys. Earth 1970, 12, 32–44.

44. Boikov, I.V.; Boikova, A.I. Approximate Methods for Solving Direct and Inverse Problems of Gravity Exploration; Publishing House of the Penza State University: Penza, Russia, 2013; 510p.

45. Mudretsova, E.A.; Veselov, K.E. (Eds.) Gravity Exploration; Nedra: Moscow, Russia, 1990; 607p.

46. Zhdanov, M.S. Integral Transforms in Geophysics; Springer: Berlin/Heidelberg, Germany, 1988; 350p.

47. Boikov, I.V.; Ryazantsev, V.A. On Simultaneous Restoration of Density and Surface Equation in an Inverse Gravimetry Problem for a Contact Surface. Numer. Anal. Appl. 2020, 13, 241–257.

48. Boikov, I.V.; Boikova, A.I.; Baulina, O.A. Continuous Method for Solution of Gravity Prospecting Problems. In Practical and Theoretical Aspects of Geological Interpretation of Gravitational, Magnetic and Electric Fields; Nurgaliev, D., Khairullina, N., Eds.; Springer: Cham, Switzerland, 2019; pp. 55–68.

49. Boikov, I.V.; Ryazantsev, V.A. An Approximate Method for Solving Inverse Coefficient Problem for the Heat Equation. J. Appl. Ind. Math. 2021, 15, 175–189.

50. Boykov, I.V.; Pivkina, A.A. Iterative methods of solution Ambartsumyan’s equations. Part 2. Univ. Proc. Volga Reg. Phys. Math. Sci. Math. 2021, 4, 71–87.

51. Boykov, I.V.; Roudnev, V.A.; Boykova, A.I.; Stepanov, N.S. Continuous operator method application for direct and inverse scattering. Zhurnal SVMO 2021, 23, 247–272.

52. Boikov, I.V.; Zelina, Y.V. Approximate Methods of Solving Amplitude-Phase Problems for Continuous Signals. Meas. Tech. 2021, 64, 386–397.

53. Boikov, I.V.; Zelina, Y.V.; Vasyunin, D.I. Approximate methods for solving amplitude-phase problem for discrete signals. J. Phys. Conf. Ser. 2021, 2099, 012002.

54. Boikov, I.V.; Ryazantsev, V.A. On an iterative method for solution of direct problem for nonlinear hyperbolic differential equations. Zhurnal SVMO 2020, 22, 155–163.

55. Yin, W.; Yang, W.; Liu, H. A neural network scheme for recovering scattering obstacles with limited phaseless far-field. J. Comput. Phys. 2020, 417, 109594.

56. Gao, Y.; Hongyu, L.; Wang, X.; Zhang, K. On an artificial neural network for inverse scattering problems. J. Comput. Phys. 2022, 448, 110771.

57. Boykov, I.; Roudnev, V.; Boykova, A. Stability of Solutions to Systems of Nonlinear Differential Equations with Discontinuous Right-Hand Sides: Applications to Hopfield Artificial Neural Networks. Mathematics 2022, 10, 1524.

58. Boikov, I.V. The Brockett stabilization problem. Autom. Remote Control 2005, 66, 745–751.

59. Boykov, I.V.; Krivulin, N.P. Methods for Control of Dynamical Systems with Delayed Feedback. J. Math. Sci. 2021, 255, 561–573.

60. Halik, A.; Wumaier, A. Synchronization on the non-autonomous cellular neural networks with time delays. J. Nonlinear Funct. Anal. 2020, 2020, 51.

61. Hao, J.; Zhu, W. Architecture self-attention mechanism: Nonlinear optimization for neural architecture search. J. Nonlinear Var. Anal. 2021, 5, 119–140.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).