1. Introduction

Multi-objective optimization has long attracted considerable research attention. Multi-objective optimization problems (MOPs) [1,2] require optimizing multiple objectives simultaneously to obtain an approximate optimal solution. They have a wide range of applications, including environmental applications [3], biology [4], space robots [5], and energy allocation [6,7]. Multi-objective evolutionary algorithms (MOEAs) [8,9,10,11,12] are considered an efficient choice for MOPs: they search for the most plausible individuals to compose the Pareto-optimal set (POS). The image of the POS in the objective space is called the Pareto-optimal front (POF) [13,14].

MOPs fall into two classes: static multi-objective optimization problems (SMOPs) and dynamic multi-objective optimization problems (DMOPs). DMOPs are more challenging than SMOPs because their POS or POF changes with time and is irregular in many cases. Dynamic multi-objective optimization algorithms (DMOAs) are the main tools for solving DMOPs and are widely employed in real-life problems. Common application areas include scheduling [15,16,17], control [18], chemistry [19], industry [20], and energy design [21]. For example, when solving vehicle routing problems for package delivery, static objectives such as the number of vehicles and path lengths must be optimized, and the customers' time windows and dynamically changing topology must also be considered to satisfy the needs of practical scenarios [22]. Solving DMOPs has two basic requirements. The first is to accurately identify changes and then react to them; many efficient identification mechanisms [23,24] have been proposed. The second is a dynamic response mechanism [25,26]: when the environment changes, the algorithm must trace the POF quickly and precisely according to the type of change. In this paper, we consider the environment to be constantly changing and therefore concentrate on dynamic response mechanisms.

According to [27], DMOPs can be classified into four types based on whether the POF or POS varies. In Type I, the POS changes but the POF stays the same. In Type II, both the POF and the POS change. In Type III, the POF changes and the POS remains unchanged. In Type IV, both the POS and the POF remain unchanged; this type is of little research interest. DMOPs can further be divided into two categories: predictable-change and unpredictable-change problems [28]. In predictable-change problems, the POF or POS shows a certain similarity and regularity across successive environmental changes, so the response can be adjusted or predicted from the previous POS and POF. In unpredictable-change problems, however, the POF changes irregularly and possibly very drastically, so strategies such as simple prediction yield very poor results.
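The four-type classification can be written as a small lookup. The following is a minimal sketch; the function name `dmop_type` and its boolean arguments are hypothetical and only illustrate the classification described above.

```python
def dmop_type(pos_changes: bool, pof_changes: bool) -> str:
    """Return the DMOP type label for a given pair of change flags."""
    if pos_changes and not pof_changes:
        return "Type I"    # POS changes, POF fixed
    if pos_changes and pof_changes:
        return "Type II"   # both change
    if pof_changes:
        return "Type III"  # POF changes, POS fixed
    return "Type IV"       # neither changes
```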

There are numerous algorithms and mechanisms for dealing with DMOPs, and the most common strategies are prediction-based methods and diversity-maintenance-based methods. It is well recognized that prediction-based methods can accelerate convergence [29,30,31], but for unpredictable changes the accuracy and validity of the prediction are dramatically reduced, resulting in a loss of diversity. Diversity-maintenance-based methods are therefore essential [32]. Most current algorithms are limited to either improving convergence or maintaining diversity. Based on the above analysis, a prediction-based approach and a diversity-maintenance-based approach can be integrated so that rapid convergence and good diversity maintenance are achieved concurrently. With this in mind, we propose a new response mechanism that combines key-points-based transfer learning and hybrid prediction strategies (KPTHP).

In this paper, key-points-based transfer learning is designed to cope with unpredictable changes, and it transfers on the basis of three types of key points: the center point, the polar point, and the boundary point. The transfer process responds quickly to nonlinear changes, whereas the center-point-based prediction is better suited to linear changes. Consequently, integrating key-points-based transfer learning with hybrid prediction exploits the strengths of both to make more accurate transfers and predictions and to respond faster to different types of predictable changes. Experimental results on various test functions demonstrate that KPTHP is very competitive in handling problems with different dynamic characteristics, obtaining better convergence and diversity.

The contributions of this paper are summarized as follows:

- (1) Key-points-based transfer learning exploits the predicted key points to guide the future search process and the evolution of the population, filtering out a subset of high-quality individuals with better diversity. As a result, it can cope with nonlinear environmental changes efficiently, exploring the most promising regions of the decision space and decreasing the probability of negative transfer caused by drastic environmental changes.

- (2) The center-point-based prediction strategy not only responds quickly to successive environmental changes of the same kind but also has the flexibility to tackle two environmental changes that are similarly distributed or partially different. It adopts a first-order feed-forward difference linear model to anticipate the positions of the individuals at the next moment, which in turn complements key-points-based transfer learning to attain excellent convergence.

The remainder of the paper is structured as follows. Section 2 presents the background, including some basic concepts and related work. The proposed KPTHP is described in detail in Section 3. Section 4 introduces the configurations, results, and analysis of the experiments on different test functions. The algorithms are further discussed in Section 5. Finally, conclusions are drawn in Section 6.

3. Proposed KPTHP

In this section, we introduce the details of the proposed KPTHP.

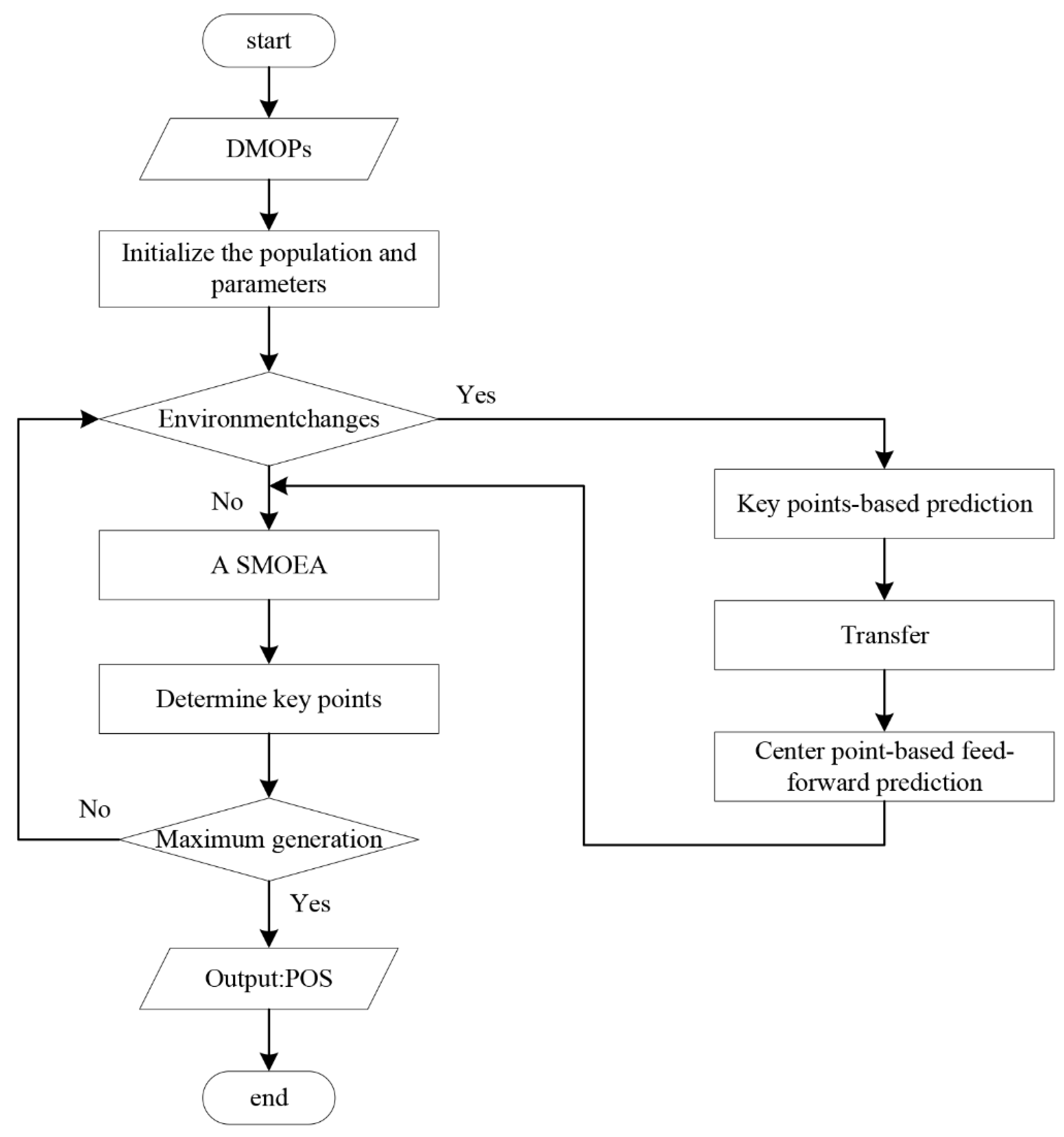

Figure 1 depicts the KPTHP procedure. Specifically, the framework of the proposed algorithm is outlined first. Afterwards, the details of determining key points, key-points-based prediction, transfer, and center-point-based feed-forward prediction in the framework are described. Finally, we analyze the computational complexity of the proposed KPTHP.

3.1. Overall Framework

The main framework of KPTHP is presented in Algorithm 1. For the first two detected environmental changes, we use a static multi-objective optimization evolutionary algorithm (SMOEA) [59,60,61] to evolve the population. For each subsequent change, we first determine the predicted key points (Algorithms 2 and 3) and use them for transfer (Algorithm 4) at moment $t$ to obtain a portion of high-quality individuals, and then derive center-point-based predicted individuals using the feed-forward prediction model (Algorithm 5). The transferred individuals and the predicted individuals are merged to form the initial population. After every evolution, the key points of the POS are identified (Algorithm 2).

| Algorithm 1: The overall framework of KPTHP |
| --- |
| Input: The dynamic problem $F_t(x)$; the size of the population $N$; a SMOEA. |
| Output: The POS of $F_t(x)$ at the different moments. |
| Initialize population and related parameters; |
| while the environment has changed do |
| if t == 1 or t == 2 then |
| Randomly initialize the population initPop; |
| $POS_t$ = SMOEA(initPop, $F_t(x)$, $N$); |
| $KPoints_t$ = DKP($POS_t$, $F_t(x)$, $N$); |
| Randomly generate dominated solutions $P_t$; |
| else |
| $PreKPoints_t$ = KPP($KPoints_{t-1}$, $KPoints_{t-2}$); |
| TransSol = TF($POS_{t-1}$, $PreKPoints_t$); |
| FeeforSol = CPFF($POS_{t-1}$, $KPoints_{t-1}$, $KPoints_{t-2}$); |
| $POS_t$ = SMOEA(TransSol, FeeforSol, $F_t(x)$, $N$); |
| $KPoints_t$ = DKP($POS_t$, $F_t(x)$, $N$); |
| Randomly generate dominated solutions $P_t$; |
| end if |
| t = t + 1; |
| return $POS_t$; |
| end while |
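The control flow of Algorithm 1 can be sketched as a short driver loop. This is an illustrative skeleton only: every callable passed in (`random_population`, `smoea`, `dkp`, `kpp`, `tf`, `cpff`) is a hypothetical stand-in for the corresponding component, their signatures are simplified, and the random dominated solutions $P_t$ are omitted for brevity.

```python
from typing import Callable, List

Vector = List[float]
Population = List[Vector]


def kpthp_loop(
    num_changes: int,
    random_population: Callable[[], Population],
    smoea: Callable[[Population, int], Population],
    dkp: Callable[[Population], Population],
    kpp: Callable[[Population, Population], Population],
    tf: Callable[[Population, Population], Population],
    cpff: Callable[[Population, Population, Population], Population],
) -> List[Population]:
    """Run the change-response loop and return the POS found after each change."""
    pos_history: List[Population] = []
    kp_history: List[Population] = []
    for t in range(1, num_changes + 1):
        if t <= 2:
            # No usable history yet: start the static optimizer from scratch.
            init_pop = random_population()
        else:
            pre_kpoints = kpp(kp_history[-1], kp_history[-2])
            trans_sol = tf(pos_history[-1], pre_kpoints)
            feefor_sol = cpff(pos_history[-1], kp_history[-1], kp_history[-2])
            # Transferred and predicted individuals form the initial population.
            init_pop = trans_sol + feefor_sol
        pos_t = smoea(init_pop, t)
        pos_history.append(pos_t)
        kp_history.append(dkp(pos_t))
    return pos_history
```

Keeping the components as injected callables makes it possible to swap the static optimizer or any prediction step without touching the loop.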

3.2. Determine Key Points

Many researchers have tried to identify special points on the POF that have notable properties or represent local features of the POF. Several types of points have received particular attention, including the center point, the boundary point, the ideal point, and the knee point. In this section, we select three types of key points for the subsequent transfer and feed-forward prediction processes: the center point, the boundary point, and the ideal point. A schematic diagram of the key points is shown in Figure 2. The center point is located at the center of the population, while the ideal point and boundary points capture the perimeter and boundary of the population; together they reflect the overall state of the population. The concepts and derivation methods of the three types of key points are as follows.

Let $POS_t$ [39] be the Pareto-optimal set at moment $t$. The center point [62] of $POS_t$ is estimated by Formula (5), where $p$ is the number of Pareto-optimal solutions at the current moment $t$.

For a minimization problem, the boundary point is the individual with the smallest objective value in one dimension of the objective space. The number of boundary points is determined by the dimension of the objective space: if the objective space has two dimensions, there are two boundary points, and the same holds for higher dimensions. Boundary points are determined by Formula (7) from the Pareto front values in each dimension $d$ of the objective space at moment $t$, where $m$ indicates the dimension of the objective space. The set of boundary points, Boundarys, consists of the boundary points of each dimension.

Ideal points are classified as positive and negative ideal points. The positive ideal point is the vector whose $i$-th component is the maximum of $f_i(x)$, for every objective $i$. The negative ideal point is the vector whose $i$-th component is the minimum of $f_i(x)$, for every objective $i$. Note that the ideal point used in this paper is a near-ideal point called the polar point. The procedure for obtaining the polar point is described below.

| Algorithm 2: Determine key points (DKP) |
| --- |
| Input: The dynamic problem $F_t(x)$; Pareto-optimal set $POS_t$ at moment $t$; the size of the population $N$. |
| Output: Obtained key points $KPoints_t$ in $POS_t$. |
| $KPoints_t = \emptyset$; |
| Calculate the center points Centers in each dimension according to Formula (5); |
| Obtain $POF_t$ of each non-dominated solution in $POS_t$; |
| Compute the minimum value of each dimension in $POF_t$; |
| Determine the boundary points Boundarys according to Formula (7); |
| Determine the polar point Ideals by using the TOPSIS method; |
| $KPoints_t$ = Centers $\cup$ Boundarys $\cup$ Ideals; |
| return $KPoints_t$; |

In this section, we choose the TOPSIS method [63,64] to obtain the polar point. First, TOPSIS calculates the weighted decision matrix and determines the positive and negative ideal solutions. Afterwards, the grey correlations $d^{+}$ and $d^{-}$ between each individual and the positive and negative ideal solutions, respectively, are calculated. Finally, the grey correlation occupancy $DS$ of each individual is calculated according to Formula (8), and the individual with the largest $DS$ value is selected. Algorithm 2 describes the strategy for determining key points.
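The three kinds of key points can be illustrated with a few lines of code. This is a rough sketch under stated assumptions, not the paper's Formulas (5), (7), and (8): the center is taken as the per-dimension mean of the decision vectors, boundary points as the per-objective minimizers, and the polar point is chosen with a plain Euclidean TOPSIS-style closeness score (distance to the worst reference divided by the sum of distances to the best and worst references) instead of grey correlations. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def center_point(pos: Sequence[Sequence[float]]) -> List[float]:
    """Per-dimension mean of the decision vectors in the Pareto-optimal set."""
    p = len(pos)
    return [sum(x[d] for x in pos) / p for d in range(len(pos[0]))]


def boundary_indices(pof: Sequence[Sequence[float]]) -> List[int]:
    """Index of the solution with the smallest value in each objective."""
    m = len(pof[0])
    return [min(range(len(pof)), key=lambda i: pof[i][d]) for d in range(m)]


def polar_index(pof: Sequence[Sequence[float]]) -> int:
    """Index of the solution with the largest closeness score (assumed form)."""
    m = len(pof[0])
    # For minimization, the best reference takes the per-objective minimum.
    best = [min(f[d] for f in pof) for d in range(m)]
    worst = [max(f[d] for f in pof) for d in range(m)]

    def score(f: Sequence[float]) -> float:
        d_best = math.dist(f, best)
        d_worst = math.dist(f, worst)
        total = d_best + d_worst
        return d_worst / total if total > 0 else 0.0

    return max(range(len(pof)), key=lambda i: score(pof[i]))
```

On a convex two-objective front, the closeness score tends to pick a solution near the middle of the front, while the boundary indices pick its two extremes.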

| Algorithm 3: Key-points-based prediction (KPP) |
| --- |
| Input: Key points $KPoints_{t-1}$ and $KPoints_{t-2}$ at moments $t-1$ and $t-2$. |
| Output: Predicted key points $PreKPoints_t$ at moment $t$. |
| Determine the evolutionary direction between the key points by (9); |
| Obtain the predicted key points $PreKPoints_t$ at moment $t$ by (10); |
| Add Gaussian noise to the individuals in $PreKPoints_t$; |
| return $PreKPoints_t$; |

3.3. Key-Points-Based Prediction

For solving DMOPs, many methods based on some special regions or points on the POF have been designed [

29,

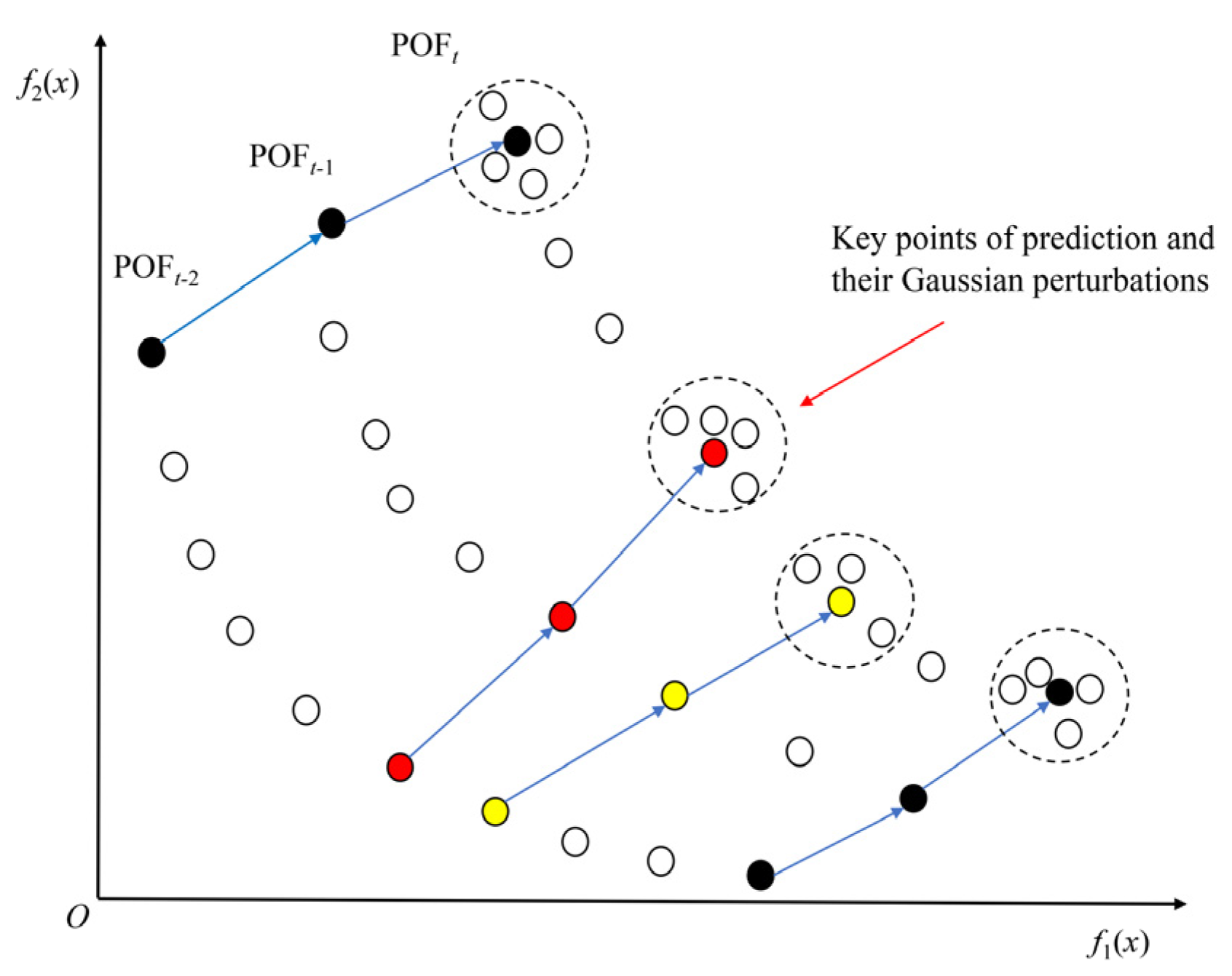

65]. In this section, the key points we consider are the center point, the boundary point, and the polar point. The center point shows the average characteristics of the whole population, and the boundary point and the polar point maintain the diversity of population, which can make the predicted population track POF more precisely and improve the convergence speed toward the POF.

Figure 3 shows the process of prediction based on key points.

According to Algorithm 3, once the key points at times $t-1$ and $t-2$ are determined, they are used to obtain the key points at time $t$. The process is as follows. First, we identify the key points $KPoints_{t-1}$ and $KPoints_{t-2}$ in $POS_{t-1}$ and $POS_{t-2}$. The distance between corresponding key points is then calculated by Formula (9), which gives each key point its own evolutionary direction. Next, the key points at time $t$ are predicted by Formula (10). Finally, based on Formula (11), Gaussian noise is added to the predicted key points, where $Gauss(0, \delta)$ indicates a Gaussian perturbation with mean 0 and standard deviation $\delta$. Here, $\delta$ is defined by Formula (12) in terms of the Euclidean distance between $KPoints_{t-1}$ and $KPoints_{t-2}$ and of $n$, the size of the search space.
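The prediction step can be sketched as a first-order extrapolation with noise. The exact forms of Formulas (9) to (12) are not reproduced here; the sketch assumes that the direction is the difference between matching key points, that the prediction adds this direction to the latest key point, and that $\delta$ is the Euclidean length of the direction divided by $n$. The function name and the optional `rng` argument are hypothetical.

```python
import math
import random
from typing import List, Optional, Sequence


def predict_key_points(
    kp_prev: Sequence[Sequence[float]],
    kp_prev2: Sequence[Sequence[float]],
    rng: Optional[random.Random] = None,
) -> List[List[float]]:
    """Extrapolate each key point one step ahead and perturb it with noise."""
    if rng is None:
        rng = random.Random()
    predicted = []
    for a, b in zip(kp_prev, kp_prev2):
        # Assumed direction: movement of the key point over the last change.
        direction = [ai - bi for ai, bi in zip(a, b)]
        n = len(a)
        # Assumed noise scale: step length normalized by the dimension n.
        delta = math.sqrt(sum(d * d for d in direction)) / n
        predicted.append(
            [ai + di + rng.gauss(0.0, delta) for ai, di in zip(a, direction)]
        )
    return predicted
```

When a key point has not moved between the two previous environments, the assumed $\delta$ is zero and the prediction reduces to the previous key point.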

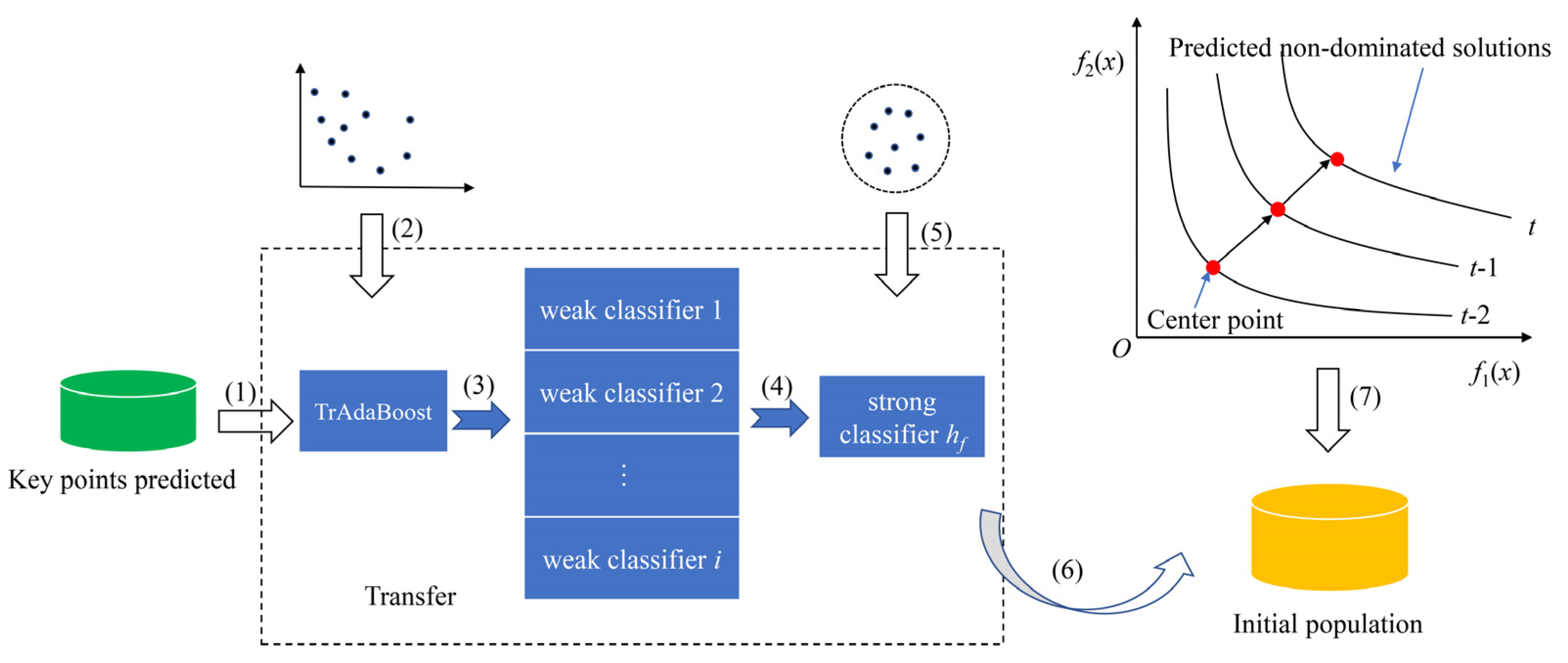

3.4. Transfer

Once the predicted key points are generated, the transfer procedure begins. The main idea of TrAdaBoost is to train a strong classifier $h_f$ by using the obtained key points and the optimal solutions of the previous generation. Once the training process is completed, the classifier $h_f$ can identify the good individuals among randomly generated individuals and use them as the predicted individuals. During the transfer procedure, the key points obtained above are regarded as the target domain $X_{ta}$, and the Pareto-optimal solutions of the previous generation are considered the source domain $X_{so}$.

The basic learning algorithm TrAdaBoost continuously adjusts the weights and parameters of the weak classifiers $h_q$ and then merges the weak classifiers with different weights into a strong classifier. During this period, if an individual in the target domain is misclassified by $h_q$, its weight is increased, indicating that it is critical in the subsequent training. On the other hand, if an individual in the source domain is misclassified by $h_q$, its weight is decreased, suggesting that it differs more from the individuals in the target domain.

| Algorithm 4: Transfer (TF) |
| --- |
| Input: Pareto-optimal set $POS_{t-1}$ at moment $t-1$; predicted key points $PreKPoints_t$ at moment $t$. |
| Output: Transferred individuals TransSol. |
| $X_{ta} = PreKPoints_t$; |
| $X_{so} = POS_{t-1} \cup P_{t-1}$; |
| Initialize the weight vector $w_1(x)$ for $x \in X_{so}$ and for $x \in X_{ta}$; |
| Set the base classifier for TrAdaBoost and the number of iterations $Q_{max}$; |
| for i = 1 to $Q_{max}$ do |
| Use TrAdaBoost to train a weak classifier with data $X_{so} \cup X_{ta}$; |
| Calculate $\beta$ according to Formula (14); |
| Calculate $\beta_i$ according to Formula (15); |
| Update the weights according to Formula (16); |
| end for |
| Get the strong classifier $h_f$ by synthesizing the $Q_{max}$ weak classifiers according to Formula (17); |
| Randomly generate a large number of solutions $x_{test}$; |
| return TransSol = $\{x \mid h_f(x) = +1,\ x \in x_{test}\}$; |

The weight update process is as follows. To begin with, we calculate the error rate $\varepsilon_i$ of $h_q$ on the target domain $X_{ta}$ at the $i$th iteration based on Formula (13). Then, the coefficients $\beta$ and $\beta_i$ of $h_q$ are calculated by Formulas (14) and (15). Lastly, the weight vectors are updated by Formula (16).

After several iterations, the weights of individuals that are similar to those in the target domain are increased, and the classification ability of the weak classifier is gradually enhanced. When the iterations are completed, we construct the strong classifier $h_f$ by Formula (17).

Having obtained $h_f$, we randomly generate many solutions as the test set $x_{test}$ and classify them with $h_f$. The individuals identified as "good" by $h_f$ form the transferred individuals TransSol. The detailed transfer procedure is given in Algorithm 4.
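The direction of the weight changes can be made concrete with one update step. The sketch below follows the commonly cited TrAdaBoost update rule and is an assumption about the form of Formulas (13) to (16), which may differ in detail; the function name, the 0/1 misclassification flags, and the clamping of the error rate are illustrative choices.

```python
import math
from typing import List, Sequence, Tuple


def tradaboost_update(
    w_src: Sequence[float],
    w_tgt: Sequence[float],
    miss_src: Sequence[int],
    miss_tgt: Sequence[int],
    n_iters: int,
) -> Tuple[List[float], List[float], float]:
    """One weight update; miss_* holds 1 where the weak classifier erred."""
    # Weighted error rate of the weak classifier on the target domain.
    eps = sum(w * m for w, m in zip(w_tgt, miss_tgt)) / sum(w_tgt)
    eps = min(max(eps, 1e-10), 0.499)  # keep beta_i positive and below 1
    # Fixed source-domain coefficient, depending on |X_so| and Q_max.
    beta = 1.0 / (1.0 + math.sqrt(2.0 * math.log(len(w_src)) / n_iters))
    # Per-iteration target-domain coefficient.
    beta_i = eps / (1.0 - eps)
    # Misclassified source samples lose weight (beta <= 1).
    new_src = [w * beta ** m for w, m in zip(w_src, miss_src)]
    # Misclassified target samples gain weight (beta_i < 1, negative exponent).
    new_tgt = [w * beta_i ** (-m) for w, m in zip(w_tgt, miss_tgt)]
    return new_src, new_tgt, eps
```

Correctly classified samples keep their weights in this step, so repeated updates gradually shift emphasis toward source individuals that behave like the target domain.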

3.5. Center-Point-Based Feed-Forward Prediction

Diversity maintenance strategies are critical in dynamic evolution. By transferring the excellent individuals of the previous generation, we acquire part of the key points that can be used for evolution while reflecting the overall state of the population. However, to account for the diversity of the whole population, we adopt a feed-forward prediction of the center point to complement the transfer process. Consequently, the initial population is composed of individuals predicted by transfer and individuals generated with the aid of the feed-forward prediction model. The specific procedure is shown in Figure 4.

Most existing approaches apply feed-forward prediction to the entire population, which yields many low-quality individuals. Therefore, we apply feed-forward prediction only to non-dominated individuals. We first extract the center points $Centers_{t-1}$ and $Centers_{t-2}$ from the key points $KPoints_{t-1}$ and $KPoints_{t-2}$ at moments $t-1$ and $t-2$, respectively, and then calculate the set of non-dominated solutions $APOS_t$ at moment $t$ using Formula (18), where $Gauss(0, d)$ refers to Gaussian noise with mean 0 and standard deviation $d$.

| Algorithm 5: Center-point-based feed-forward prediction (CPFF) |
| --- |
| Input: Pareto-optimal set $POS_{t-1}$ at moment $t-1$; key points $KPoints_{t-1}$ and $KPoints_{t-2}$. |
| Output: Predicted individuals FeeforSol. |
| Obtain the center points $Centers_{t-1}$ and $Centers_{t-2}$ from $KPoints_{t-1}$ and $KPoints_{t-2}$, respectively; |
| Calculate the predicted individuals $APOS_t$ according to Formula (18); |
| Adjust the values of $APOS_t$ to the predefined range; |
| return FeeforSol = $APOS_t$; |
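A minimal sketch of Algorithm 5 follows. It assumes that Formula (18) shifts each non-dominated solution of the previous environment by the movement of the center point and adds Gaussian noise, which is the usual first-order feed-forward form; the function name, the bound arguments `lower` and `upper`, and the noise scale `sigma` (standing in for $d$) are hypothetical.

```python
import random
from typing import List, Optional, Sequence


def feed_forward_predict(
    pos_prev: Sequence[Sequence[float]],
    center_prev: Sequence[float],
    center_prev2: Sequence[float],
    lower: Sequence[float],
    upper: Sequence[float],
    sigma: float,
    rng: Optional[random.Random] = None,
) -> List[List[float]]:
    """Shift each previous solution by the center movement, add noise, clip."""
    if rng is None:
        rng = random.Random()
    # Assumed step: how far the center moved between the last two environments.
    step = [c1 - c2 for c1, c2 in zip(center_prev, center_prev2)]
    predicted = []
    for x in pos_prev:
        moved = [xi + si + rng.gauss(0.0, sigma) for xi, si in zip(x, step)]
        # Repair values that leave the predefined variable range.
        predicted.append(
            [min(max(v, lo), hi) for v, lo, hi in zip(moved, lower, upper)]
        )
    return predicted
```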

3.6. Computational Complexity Analysis

This section analyzes the computational complexity of KPTHP for one iteration. According to Algorithm 1, the major computation of KPTHP comes from the following parts. (1) The complexity of the DKP process mainly lies in finding the polar point using the TOPSIS method. Calculating the TOPSIS score requires $O(N)$ computation, and determining the positive and negative ideal points requires $O(MN)$ computation, where $N$ is the population size and $M$ is the number of objectives. (2) The complexity of KPP comes from calculating the predicted key points and is $O(k^2)$, where $k$ is the number of key points and is much smaller than $N$. (3) The complexity of TF follows that of the weak classifiers. In this paper, we use TrAdaBoost as the weak classifier, which requires $O(N^2 n)$, where $n$ is the dimension of the decision variables. (4) The computational complexity of the CPFF process is $O(d^2)$, where $d$ is the total number of non-dominated individuals. In summary, the computational complexity of KPTHP in this work is $O(N^2 n)$.

The computational complexities of the compared algorithms IT-DMOEA, MMTL-DMOEA, KDMOP, and KT-DMOEA are $O(N^2 n I)$, $O(M^3 n)$, $O(N^2 M)$, and $O(N^2 n)$, respectively, according to the settings of the original papers, where $I$ is the number of iterations for individual transfer. The computational complexity of KPTHP is thus similar to that of KDMOP and KT-DMOEA but lower than that of IT-DMOEA and MMTL-DMOEA. The compared algorithms are presented in detail in Section 4.3.

4. Experimental Configuration and Results Analysis

In this section, we first describe the experimental configuration used to evaluate KPTHP, including the benchmark problems in Table 1, performance metrics, compared algorithms, and parameter configurations. Then, the experimental results of KPTHP and six other state-of-the-art algorithms on the benchmark problems are presented. The statistical results of the MIGD, HVD, MMS, and DMIGD values for all test instances are summarized in Table 2, Table 3, Table 4, and Table 5, respectively. The best results are shown in a darker color. For the sake of fairness, the change frequency $\tau_t$ and change severity $n_t$ are fixed to 5 and 20 for all algorithms in this part of the experiment. All experiments are performed under the same hardware configuration in MATLAB R2020b.

4.1. Benchmark Problems

The proposed KPTHP is tested on sixteen test problems, including five DF problems [66], six F problems [34], two dMOP problems [27,67], and three FDA problems [27,67,68]. The attributes and characteristics of the benchmark problems are described in Table 1. The POFs of these test functions have different characteristics, including linear and nonlinear, continuous and discontinuous, and convex and concave.

In the test suite DF, the POF and POS are dynamic, and DF2 has severe diversity loss. The problems F5–F8 have nonlinear correlation between decision variables. In F9, the POF occasionally jumps from one region to another, and in F10, the geometric shapes of two consecutive POFs are totally different from each other. In the test suite FDA, the POF and/or POS vary over time, while the number of decision variables, the number of objectives, and the boundaries of the search space remain fixed. The dMOP problems are extensions of the FDA problems. Among all the test functions, F8 and FDA4 are three-objective functions, while the remaining functions are two-objective ones. The time instance $t$ is defined as $t = \frac{1}{n_t}\left\lfloor \frac{\tau}{\tau_t} \right\rfloor$, where $n_t$, $\tau$, and $\tau_t$ represent the severity of change, the maximum number of iterations, and the frequency of change, respectively.
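As a small worked example, the time instance can be computed with integer division. The sketch assumes the staircase form $t = \frac{1}{n_t}\lfloor \tau / \tau_t \rfloor$ that is standard for these benchmark suites; the function and argument names are illustrative.

```python
def time_instance(tau: int, n_t: int, tau_t: int) -> float:
    """Time instance t for iteration count tau, severity n_t, frequency tau_t."""
    # t stays constant for tau_t iterations, then increases by 1 / n_t.
    return (tau // tau_t) / n_t
```

With $n_t = 5$ and $\tau_t = 20$, the time instance stays at 0 for the first 20 iterations and then increases by 0.2 at each change, so a larger $n_t$ produces smaller steps in $t$.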

4.2. Performance Metrics

Performance metrics play an important role in assessing the performance of algorithms in different aspects. In our experimental study, four metrics were adopted, including modified inverted generational distance (MIGD), DMIGD, modified maximum spread (MMS), and hypervolume difference (HVD).

4.2.1. MIGD

IGD [32,34,69] is a widely adopted metric for evaluating the convergence and diversity of multi-objective evolutionary algorithms. It measures the average shortest distance from the points of the true POF to the POF obtained by the evolutionary algorithm:

$$IGD(PF_t^{*}, PF_t) = \frac{\sum_{v \in PF_t^{*}} \min_{u \in PF_t} \lVert v - u \rVert}{|PF_t^{*}|}$$

where $PF_t^{*}$ and $PF_t$ denote the true Pareto front and the approximate Pareto front obtained by the algorithm at moment $t$, respectively, and $|PF_t^{*}|$ indicates the number of individuals in the true POF. The smaller the IGD value, the better the convergence and diversity of the obtained solutions.

On the basis of IGD, we calculate the average IGD value over all environment changes as MIGD:

$$MIGD = \frac{1}{|T|} \sum_{t \in T} IGD(PF_t^{*}, PF_t)$$

where $T$ is the set of discrete time steps (environment changes) in one run and $|T|$ is the cardinality of $T$.
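Both metrics are short to compute. The following sketch assumes the standard IGD definition (mean nearest-neighbor distance from each true-front point to the obtained front) and takes MIGD as the plain average over time steps; the function names are illustrative.

```python
import math
from typing import Sequence

Front = Sequence[Sequence[float]]


def igd(true_pof: Front, approx_pof: Front) -> float:
    """Mean distance from each true-front point to its nearest obtained point."""
    total = sum(min(math.dist(v, u) for u in approx_pof) for v in true_pof)
    return total / len(true_pof)


def migd(true_pofs: Sequence[Front], approx_pofs: Sequence[Front]) -> float:
    """Average IGD over the time steps of one run."""
    values = [igd(t, a) for t, a in zip(true_pofs, approx_pofs)]
    return sum(values) / len(values)
```

Because the distance is measured from the true front, an obtained front that covers only part of the true front is penalized even if every obtained point lies exactly on it.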

4.2.2. DMIGD

DMIGD [56] is used to evaluate the overall performance on each benchmark function across different change frequencies and severities; smaller values are better:

$$DMIGD = \frac{1}{|E|} \sum_{C \in E} MIGD(C)$$

where $C$ represents an environment configuration and $E$ denotes the set of all environmental conditions.

4.2.3. MMS

MMS [70,71] measures the mean ability of the obtained solutions to cover the true POF. A larger MMS indicates wider coverage by the obtained solutions. MMS is defined in terms of the maximum and minimum of the $k$th objective in the true POF and the maximum and minimum of the $k$th objective in the obtained POF.
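For illustration, the coverage at a single time step can be computed as below. This sketch assumes the commonly used maximum spread (MS) form, in which the overlap between the obtained and true ranges of each objective is divided by the true range, squared, averaged over objectives, and square-rooted; MMS would then be the mean of this value over time steps. Clamping a negative overlap to zero is an added assumption, and the function name is illustrative.

```python
import math
from typing import Sequence

Front = Sequence[Sequence[float]]


def maximum_spread(true_pof: Front, approx_pof: Front) -> float:
    """Fraction of the true front's extent covered by the obtained front."""
    m = len(true_pof[0])
    total = 0.0
    for k in range(m):
        t_max = max(f[k] for f in true_pof)
        t_min = min(f[k] for f in true_pof)
        a_max = max(f[k] for f in approx_pof)
        a_min = min(f[k] for f in approx_pof)
        # Overlap of the two ranges in objective k; zero if they are disjoint.
        overlap = max(0.0, min(a_max, t_max) - max(a_min, t_min))
        total += (overlap / (t_max - t_min)) ** 2
    return math.sqrt(total / m)
```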

4.2.4. HVD

HVD [72,73,74] is the difference between the hypervolume of the true POF and that of the obtained POF at moment $t$, where $HV(PF)$ represents the hypervolume of a POF. A smaller HVD indicates better convergence and diversity performance of the algorithm.
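For two objectives, the hypervolume can be computed with a simple sweep. The sketch below is restricted to two-objective minimization and assumes a user-supplied reference point `ref` and the sign convention HV(true) minus HV(obtained); neither the reference point nor the sign convention is specified in the text above, and the function names are illustrative.

```python
from typing import Sequence

Front = Sequence[Sequence[float]]


def hypervolume_2d(front: Front, ref: Sequence[float]) -> float:
    """Area dominated by a two-objective minimization front, bounded by ref."""
    # Keep only points that dominate the reference point, sorted by f1.
    pts = sorted(p for p in front if p[0] <= ref[0] and p[1] <= ref[1])
    area, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:
        if f2 < best_f2:
            # Add the horizontal strip newly dominated by this point.
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area


def hvd_2d(true_pof: Front, approx_pof: Front, ref: Sequence[float]) -> float:
    """Hypervolume of the true front minus that of the obtained front."""
    return hypervolume_2d(true_pof, ref) - hypervolume_2d(approx_pof, ref)
```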

4.3. Comparison Algorithms and Parameter Settings

Six state-of-the-art dynamic multi-objective evolutionary algorithms are identified for the purpose of comparison with KPTHP, including PPS, GM-DMOP, MMTL-DMOEA, IT-DMOEA, KT-DMOEA, and KDMOP. Meanwhile, an introduction of each algorithm and the related parameter configuration will be presented as follows.

- (1) PPS [34]: Zhou et al. proposed a dynamic multi-objective evolutionary algorithm based on a population prediction strategy (PPS), where a Pareto set is divided into two parts: a center point and a manifold. The population at the next moment consists of the predicted center point and the estimated manifold together. This method performs well when dealing with linear or nonlinear correlation between design variables.

- (2) GM-DMOP [37]: Wang et al. introduced a grey prediction model into dynamic multi-objective optimization for the first time. The basic idea of GM-DMOP is that, when an environmental change is detected, the centroid point at the next moment is predicted by a grey prediction model. One highlight of the algorithm is that it divides the population into clusters and predicts the centroid point of each cluster to increase prediction accuracy. The grey prediction method has been shown to bring excellent population convergence and diversity.

- (3) MMTL-DMOEA [71]: MMTL-DMOEA is a memory-driven manifold transfer-based evolutionary algorithm for dynamic multi-objective optimization. The initial population is composed of elite individuals obtained from both experience and future prediction. The approach improves computational speed while acquiring better-quality solutions.

- (4) IT-DMOEA [70]: Jiang et al. designed an individual transfer-based algorithm, where a presearch strategy is used for filtering out some high-quality individuals with better diversity. The merit of IT-DMOEA is that it maintains the advantages of transfer learning methods and reduces the occurrence of negative transfer.

- (5) KT-DMOEA [75]: KT-DMOEA adopts a method based on a trend prediction model and imbalance transfer learning to effectively track the moving POF or POS. It integrates a small number of high-quality individuals with the imbalance transfer learning technique, greatly improving the performance of dynamic optimization.

- (6) KDMOP [64]: KDMOP was proposed recently by Yen et al. and introduces a well-regarded multi-attribute decision-making strategy called TOPSIS. TOPSIS is used to obtain the initial population in a new environment and to select good individuals in mating selection and environmental selection.

The relevant experimental parameters are set as follows. For all algorithms, the population size is set to 100 for bi-objective problems and 200 for tri-objective problems, and the dimension of the decision variables is set according to [66,67,68]. Each algorithm is run 30 times independently on each benchmark problem, with 20 environmental changes in each run. For a fair comparison, the specific parameters of PPS, GM-DMOP, MMTL-DMOEA, IT-DMOEA, KT-DMOEA, and KDMOP are set according to their original publications [34,37,64,70,71,75]. For KPTHP, in the transfer stage, most of the TrAdaBoost parameters are left at their defaults [76]. Typical static multi-objective optimizers include RM-MEDA [77], NSGA-II [78], and MOEA/D [79]. In this paper, we choose RM-MEDA as the SMOEA optimizer.

4.4. Results on DF and F Problems

Table 2 shows the MIGD values obtained by the seven algorithms. KPTHP has the smallest MIGD values on nine of the eleven DF and F test functions, while KDMOP and KT-DMOEA have the smallest MIGD values only on F8 and DF4, respectively. The second-best values are spread more widely across the algorithms. KPTHP may be more advantageous when dealing with problems that have a nonlinear correlation between decision variables. For F9 and F10, where the Pareto sets occasionally jump from one area to another or the geometry of consecutive POFs is completely different, KPTHP still performs better than the other algorithms.

The statistical results of the HVD metric for the seven algorithms are presented in Table 3. The distribution of the best HVD values across the test functions is almost the same as that of the best MIGD values. The only difference is that KPTHP, rather than KT-DMOEA, obtains the best HVD value on DF4. There are two main explanations. First, DF4 is characterized by dynamically changing boundary values. Second, KPTHP pays more attention to boundary points, while KT-DMOEA mainly focuses on the effect of knee points and pays little attention to boundary points.

Table 4 shows the MMS values and standard deviations of the seven algorithms. KPTHP performs better than the other six algorithms on many of the test functions. MMTL-DMOEA performs best on two test functions, followed by GM-DMOP and IT-DMOEA with one best result each. These results demonstrate that KPTHP can obtain many individuals with excellent convergence and diversity. On DF5 and F8, where its results are not the best, KPTHP performs very similarly to MMTL-DMOEA, which obtains the best results.

4.5. Results on FDA and dMOP Problems

The FDA and dMOP test functions have linear correlation between the decision variables. From the MIGD, HVD, and MMS values in Table 2, Table 3, and Table 4, it can be observed that KPTHP performs better than the other six algorithms on many of the test functions and is superior to all of them on the MMS indicator. Among the remaining six algorithms, PPS uses a substantial number of prime points to train the AR model, but at this early stage of evolution there are not enough high-quality prime points to support accurate predictions, which degrades its performance. IT-DMOEA, MMTL-DMOEA, and KT-DMOEA are based on transfer learning methods. Although they reduce the occurrence of negative transfer to some extent, the quality of their transferred individuals is inferior to that of KPTHP. KDMOP mainly predicts individuals from the direction of point evolution and consequently has little advantage on test functions where the POF changes frequently. The performance of the algorithms is further analyzed below according to the characteristics of the test functions.

The POFs of dMOP2, FDA1, and FDA4 all vary in a sinusoidal pattern with periodic and symmetric variation, so the three test functions have similar variations. The MIGD, HVD, and MMS values of KPTHP are markedly better than those of the other algorithms. KPTHP, which combines key-points-based transfer learning and hybrid prediction strategies, can therefore predict the individuals at the next moment more accurately and performs significantly better on these three test functions. The POFs of both dMOP3 and FDA4 are constant. According to the three indicators, it can be inferred that KPTHP may also be very effective on test functions whose POF remains static during the change. It is worth mentioning that FDA4 is a three-objective test function. Based on the MMS values, KPTHP achieves better diversity on this three-objective function.

4.6. Results on DMIGD Metric

In total, we performed experimental comparisons on five pairs of the parameters $n_t$ and $\tau_t$: (5, 5), (5, 10), (5, 15), (5, 20), and (10, 5). Table 5 shows the DMIGD values for each algorithm on all test functions. KPTHP obtained the best values on fourteen test functions, followed by MMTL-DMOEA and KDMOP with one test function each, DF4 and F8, respectively. This indicates that KPTHP obtains good convergence performance and maintains good diversity. The distribution of the best DMIGD values across the test functions is very similar to that of the MIGD values, which indicates that KPTHP performs well not only under a single parameter configuration but also in terms of overall performance.

4.7. Analyzing the Evolutionary Processes of the Compared Algorithms

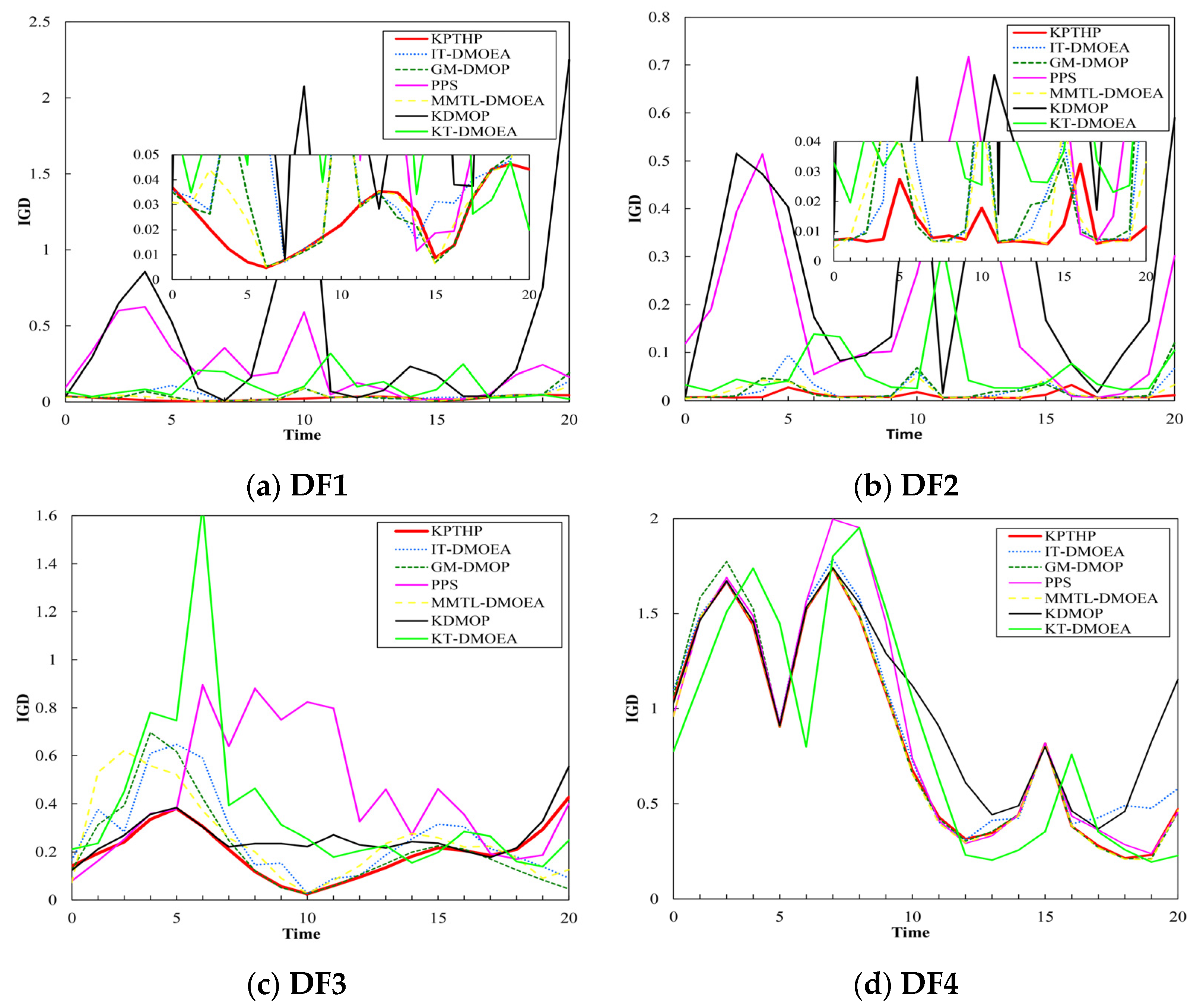

Above, we analyzed the performance of the algorithms on the test functions under the four metrics. In the following, we visualize this performance through the IGD evolution curves of the sixteen functions at different moments and the POFs of FDA1, dMOP2, and DF2 obtained by the seven algorithms. For all the algorithms, the change severity nt and the change frequency τt are fixed to (5, 20).

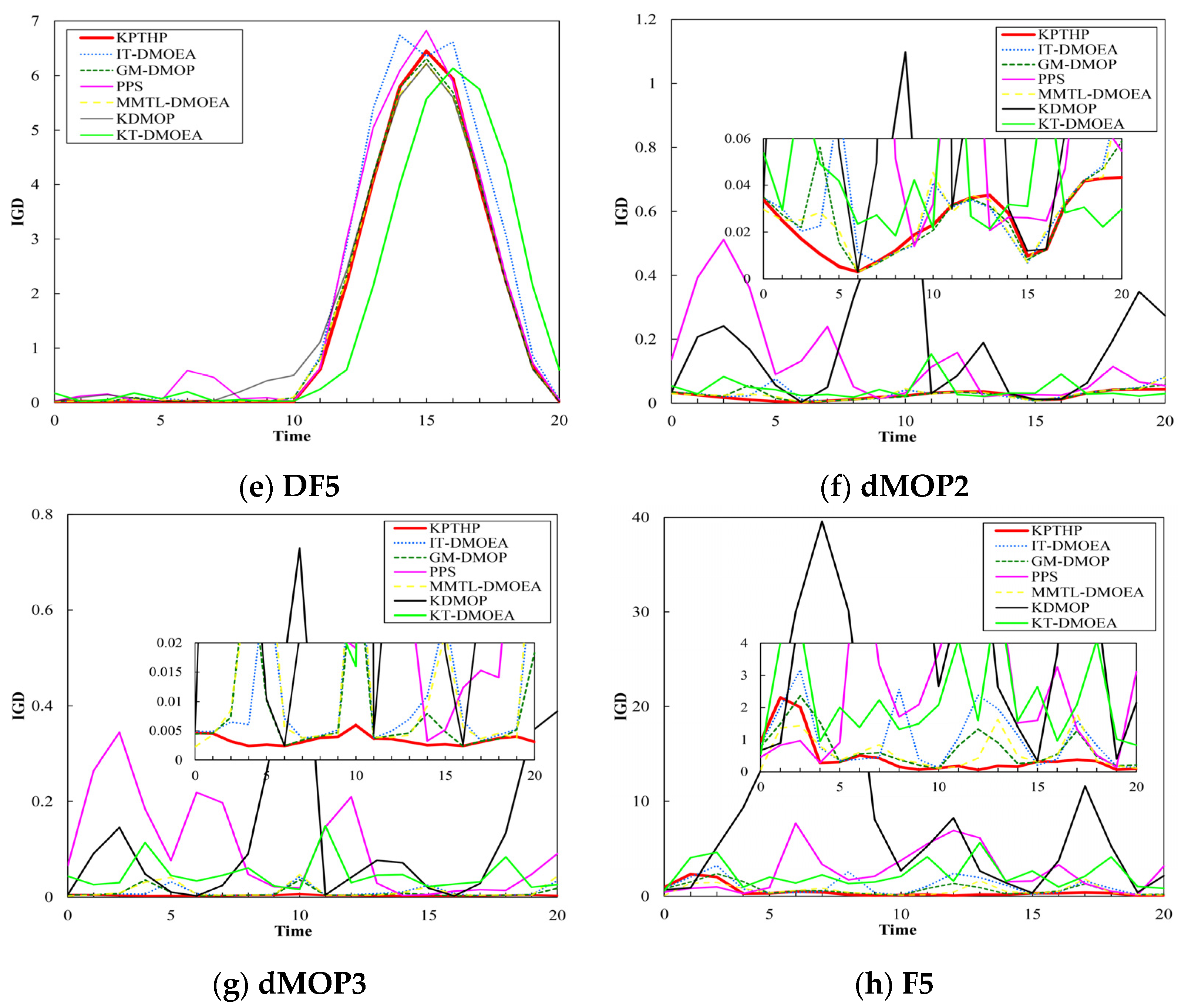

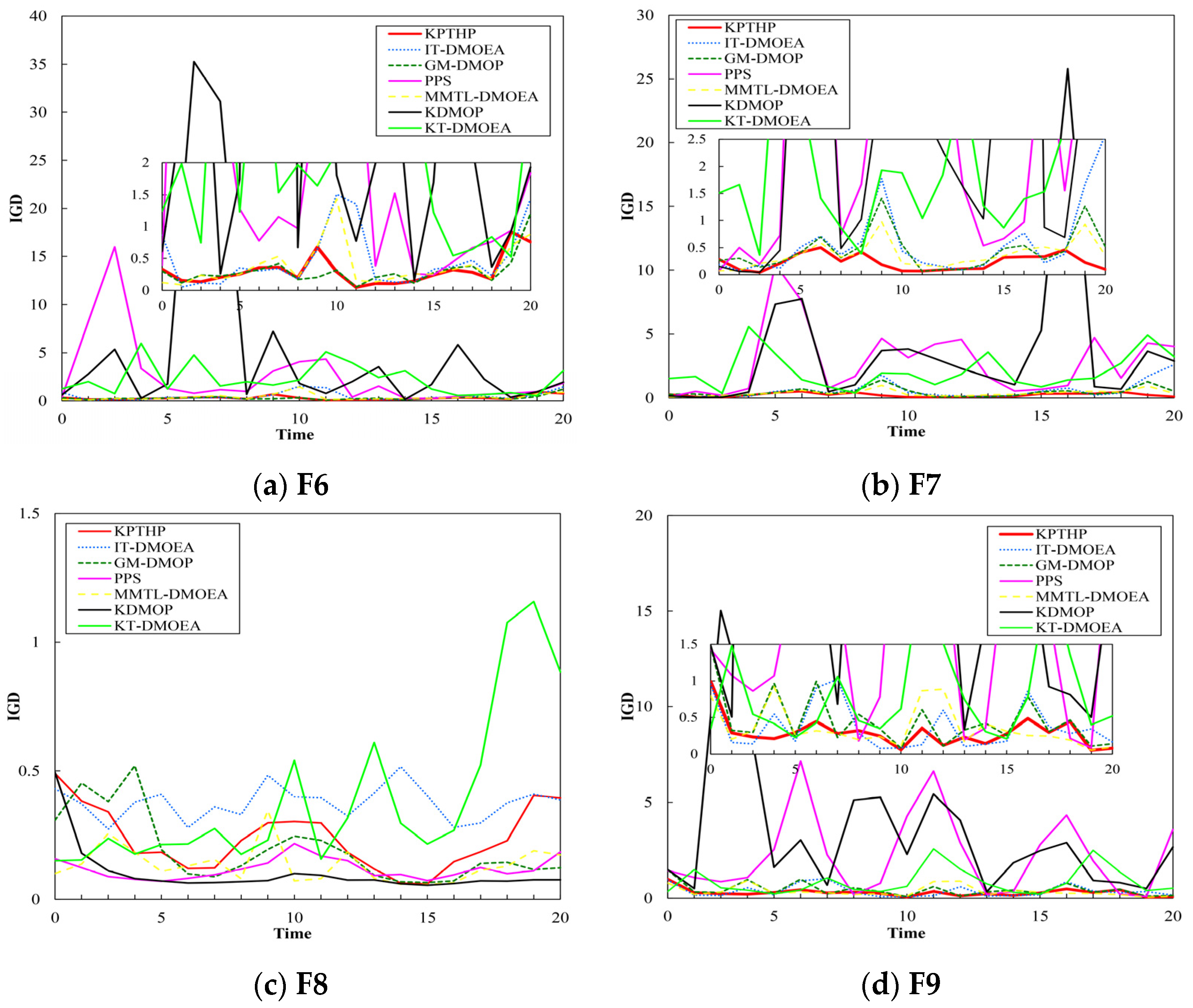

Figure 5 and Figure 6 show the IGD variation curves for all the test functions. KPTHP visibly performs best on most of the test functions, which indicates that it copes better with the effects of environmental changes. The figures also show that KDMOP often exhibits IGD peaks on some test problems, such as dMOP2, dMOP3, F6, and F10, which indicates that this algorithm is unstable during the evolutionary process. Possible reasons are the drastic changes of the POF and the nonlinear correlation, both of which can cause errors in the predicted direction. On FDA4 and F8, IT-DMOEA does not perform satisfactorily, probably because the guided population based on the reference vector does not behave well on three-objective problems.

KT-DMOEA clearly fluctuates more strongly on most of the test functions, especially DF3 and F8, which may indicate that its predictions lose accuracy when the knee points are irregular. By contrast, KPTHP remains stable in the vast majority of cases, the exceptions being DF4 and DF5. One important reason is that the key-points-based transfer predicts adequate key points at the next moment with a high degree of accuracy, avoiding large deviations from the true POF. Another is that the center-point-based feed-forward prediction directly predicts non-dominated solutions at the next moment, which complements the transfer procedure. Although this method alone is inefficient and inaccurate on POFs with mixed convexity–concavity or strong variations, it still provides some high-quality predicted individuals regardless of whether the POF varies linearly or nonlinearly.
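
The center-point-based feed-forward idea can be illustrated with a short sketch. The exact update used by KPTHP is not restated in this section, so the code assumes the common first-order form in which every current non-dominated solution is shifted by the displacement of the population center between the two most recent environments. The function name, the optional Gaussian perturbation, and its `noise_std` parameter are illustrative assumptions.

```python
import numpy as np

def center_point_prediction(pos_prev, pos_curr, noise_std=0.0, rng=None):
    """Shift the current non-dominated set by the last observed center displacement.

    pos_prev, pos_curr: arrays of shape (n_solutions, n_variables) holding the
    non-dominated solutions found in environments t-1 and t (assumed inputs).
    """
    center_prev = pos_prev.mean(axis=0)
    center_curr = pos_curr.mean(axis=0)
    step = center_curr - center_prev          # estimated movement of the POS center
    predicted = pos_curr + step               # linear extrapolation to environment t+1
    if noise_std > 0:
        rng = np.random.default_rng() if rng is None else rng
        # Hypothetical perturbation to keep some diversity around the prediction.
        predicted = predicted + rng.normal(0.0, noise_std, size=predicted.shape)
    return predicted

# Toy usage: the center moves by (1, 1), so every solution is shifted by (1, 1).
prev = np.array([[0.0, 0.0], [2.0, 0.0]])
curr = np.array([[1.0, 1.0], [3.0, 1.0]])
pred = center_point_prediction(prev, curr)
```

A purely linear extrapolation of this kind is cheap and works when the change is regular, which is consistent with the text's observation that it complements, rather than replaces, the transfer step.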

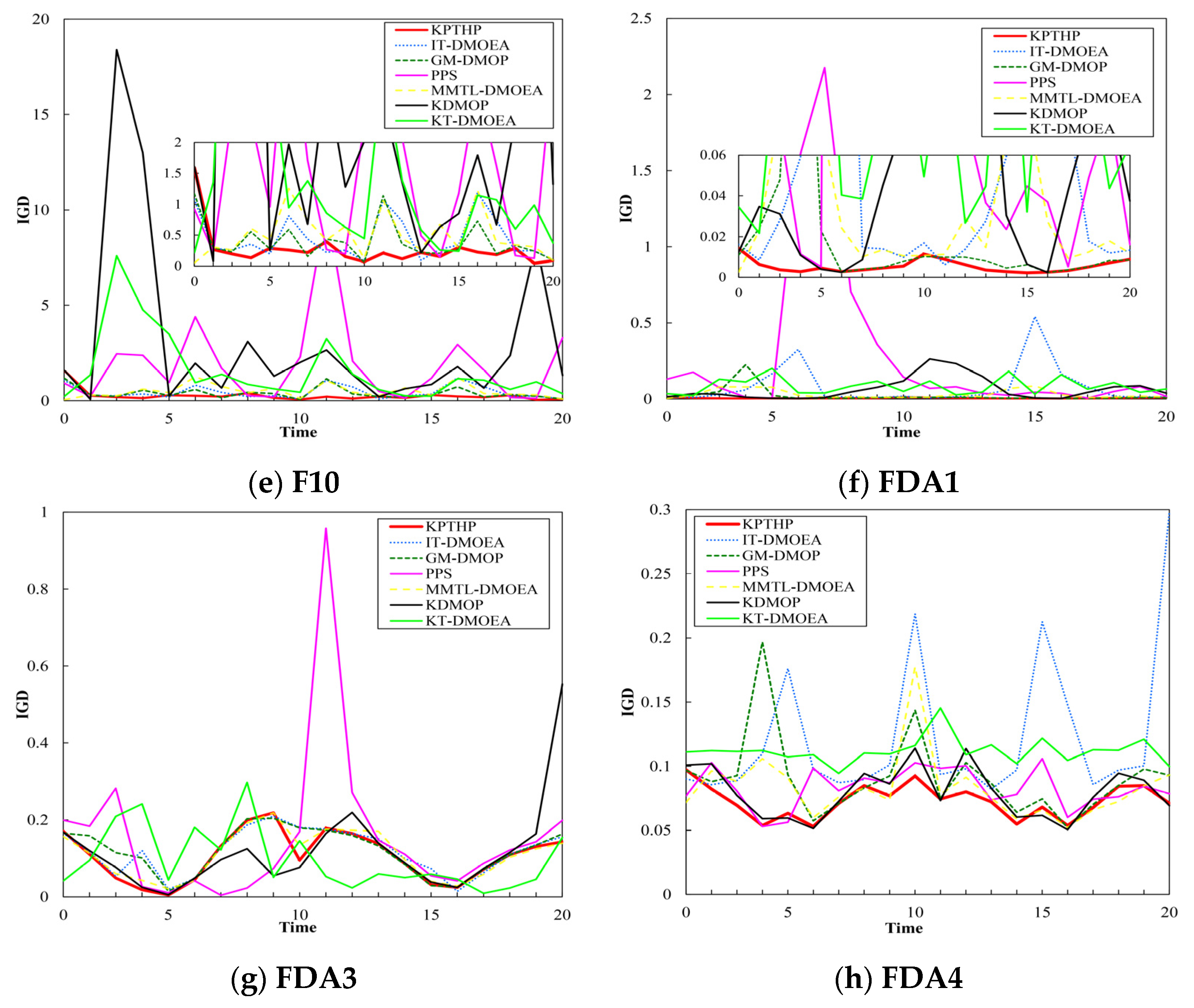

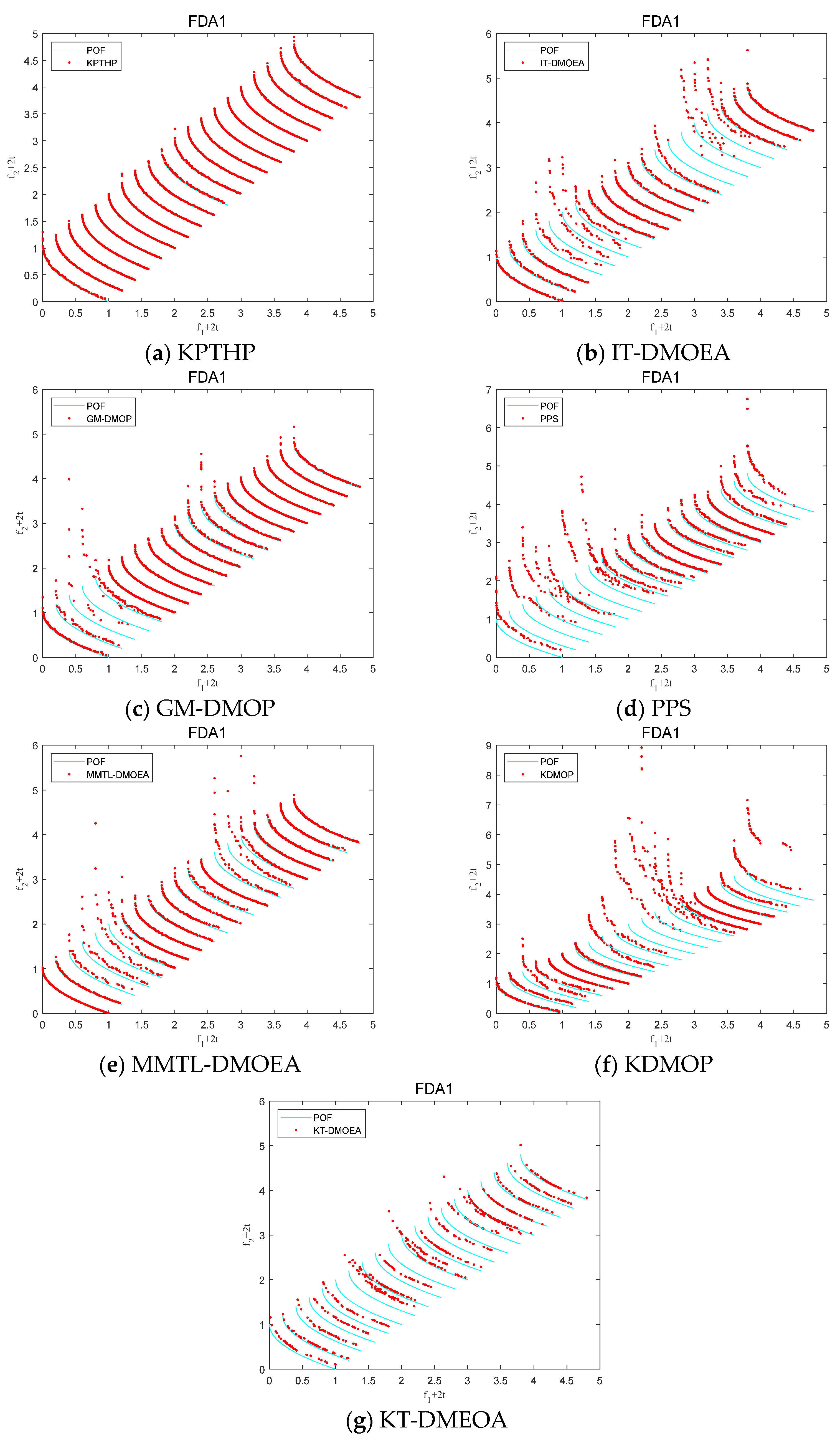

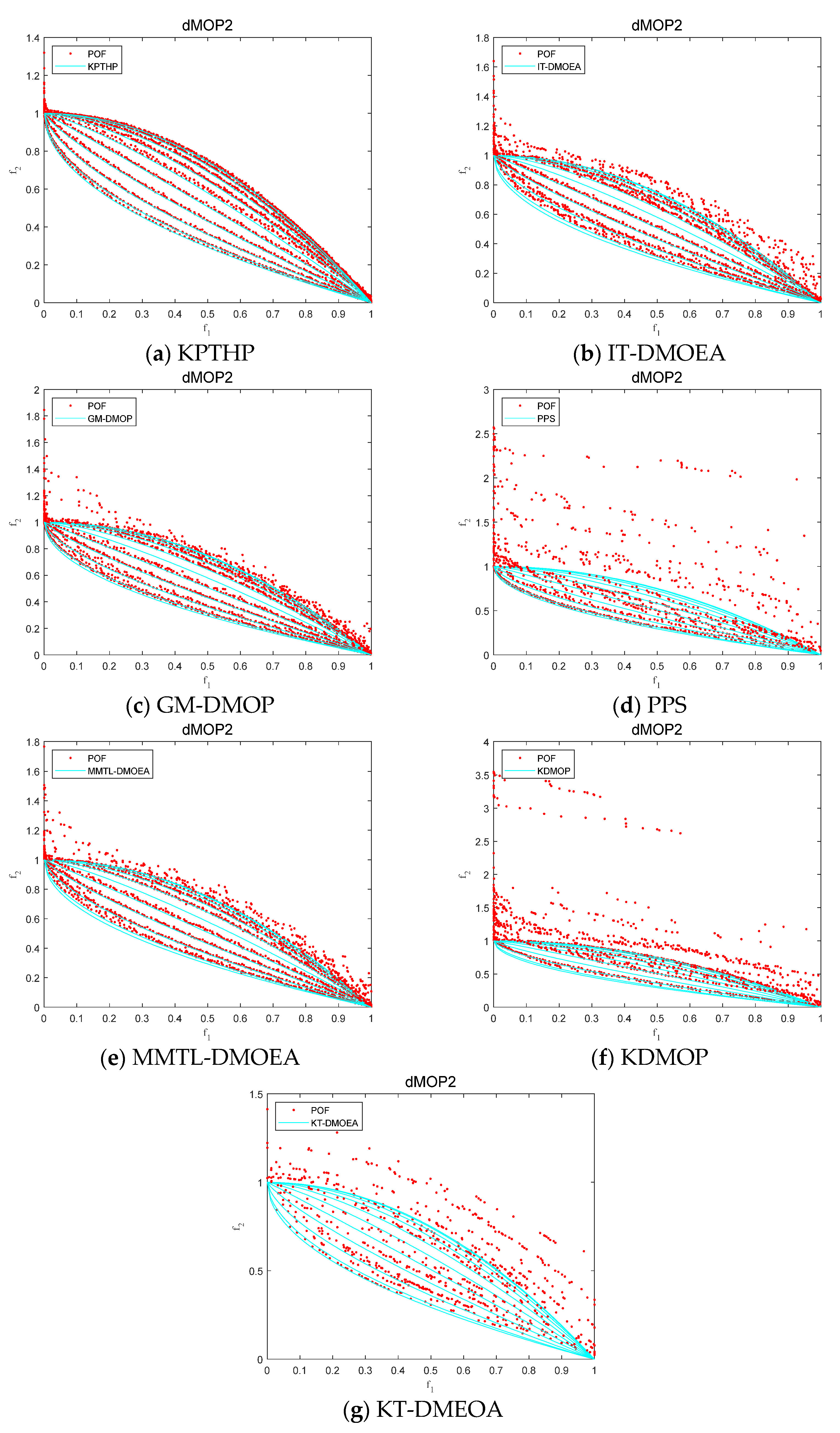

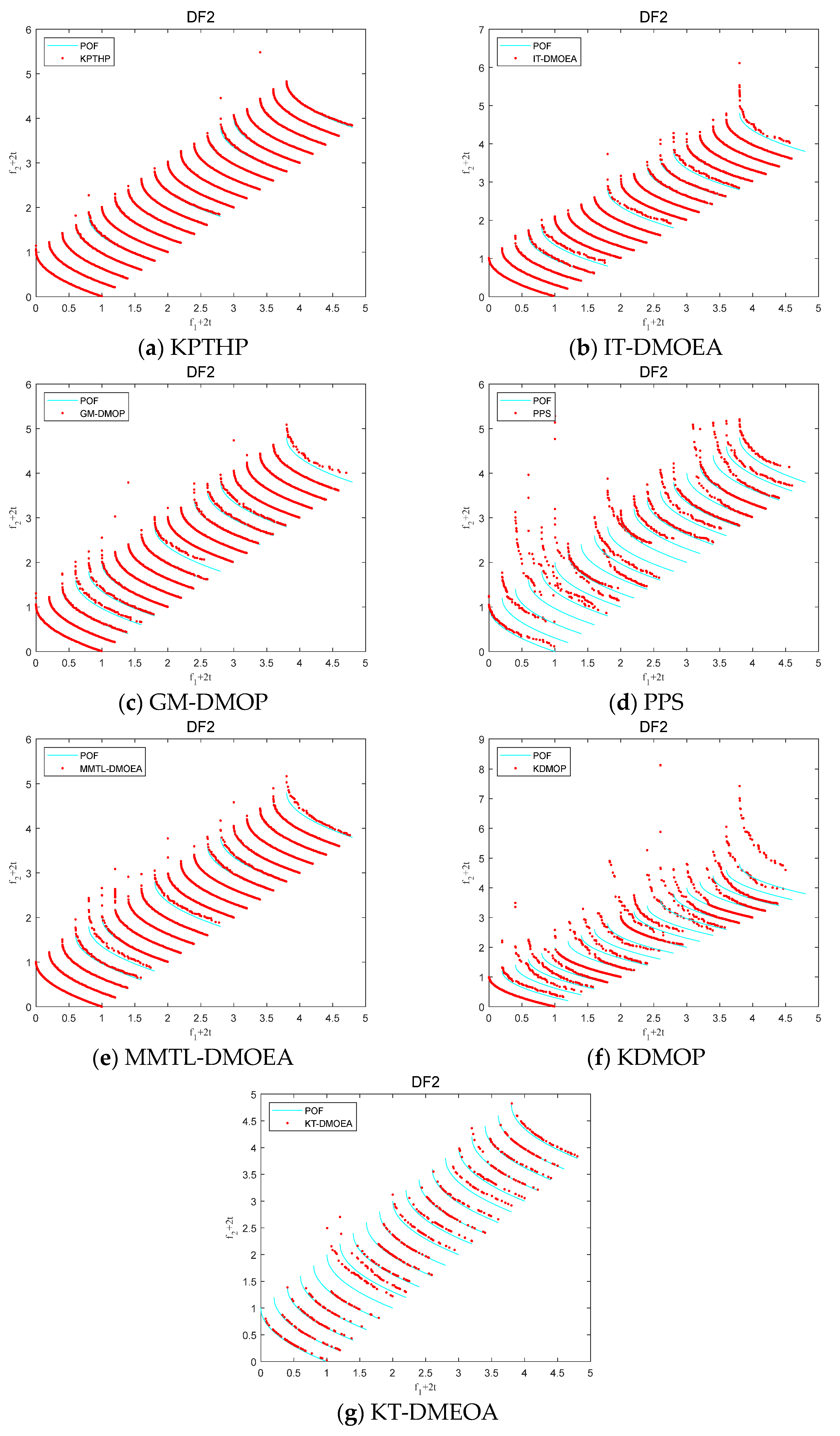

Figure 7, Figure 8 and Figure 9 show the true POF and the obtained solution sets for FDA1, dMOP2, and DF2 at thirty different moments. The obtained solution set is marked with red dots, and the true POF is marked with blue curves. It can be clearly seen that KPTHP maintains good convergence and diversity at different moments. For FDA1 and DF2, PPS, KDMOP, and KT-DMOEA track the true POF poorly most of the time, and the approximate Pareto fronts they obtain deviate severely from the true POF. Because the core idea of both PPS and KDMOP is prediction based solely on the center point, the evolutionary direction determined by the center point is not effective when the true POF is unchanged. KT-DMOEA is a knee-points-based prediction method that focuses mainly on the movement of knee points, which makes it limited and unstable. For dMOP2, the population individuals of the six compared algorithms are clearly distributed very unevenly.

For IT-DMOEA, the evolution of the population relies on the reference vector, so its capability to generate the guided population decreases when the POF changes between concave and convex shapes. KPTHP, in contrast, maintains a very good distribution, probably owing to two factors. First, the key points are a microcosm of the true POF. Although they are few in number, transfer learning can generate many reliable and similarly distributed individuals from them. Second, when the true POF changes relatively regularly, the center-point-based prediction complements the key-points-based transfer process, so that the strengths of the two are combined.

5. Further Discussion

5.1. Influence of Change Frequency

This section explores the effect of change frequency on the seven algorithms. The experiments are again performed on the DF, F, dMOP, and FDA test functions, with nt fixed to 5 and τt set to 10, 15, and 20 in turn. The MIGD and HVD results of the algorithms are shown in Table 6 and Table 7.

In terms of MIGD, KPTHP obtains the best or second-best results on the majority of the test functions. KT-DMOEA obtains the remaining best results, and GM-DMOP obtains most of the remaining second-best results. MMTL-DMOEA, IT-DMOEA, KDMOP, and PPS perform poorly, in that order. With respect to the HVD metric, KPTHP again performs strongly, obtaining the best or second-best results on the test functions. In addition, the performance of KPTHP improves significantly as the change frequency τt increases.

The results above show that KPTHP achieves strong convergence and diversity. Several reasons may explain this advantage. On the one hand, the transfer in KPTHP is a nonlinear model, while the key-points-based prediction is a linear model. By combining the strengths of both, a large number of reliable individuals that guide the search can be generated through transfer from a small number of predicted key points in response to the time-varying Pareto fronts. On the other hand, the transfer process is well complemented by the individuals produced by center-point-based feed-forward prediction. This may explain why the pure transfer learning methods MMTL-DMOEA, IT-DMOEA, and KT-DMOEA and the pure prediction methods GM-DMOP, PPS, and KDMOP do not perform as well as KPTHP.

5.2. Influence of Change Severity

In addition to τt, nt is also a very important parameter in DMOPs that can affect the performance of the algorithms. In this section, we fix τt to 5 and set nt to 5 and 10. A smaller nt indicates a more drastic change in the environment and therefore makes the problem more challenging for the algorithm. The results of the seven algorithms for the MIGD indicator are displayed in Table 8.
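
The effect of nt on severity can be seen from the time variable used by these benchmark families. The sketch below assumes the usual benchmark definition t = (1/nt)·⌊τ/τt⌋, where τ is the generation counter, and uses the sinusoidal shift G(t) = sin(0.5πt) of FDA1 as an example; the function names are illustrative.

```python
import math

def time_variable(generation: int, n_t: int, tau_t: int) -> float:
    """Benchmark time t = (1 / n_t) * floor(generation / tau_t) (assumed definition)."""
    return (1.0 / n_t) * math.floor(generation / tau_t)

def fda1_shift(t: float) -> float:
    """Sinusoidal term G(t) = sin(0.5 * pi * t) that moves the optimum in FDA1."""
    return math.sin(0.5 * math.pi * t)

# Size of the first environmental change for the two severities studied here,
# with tau_t = 5 so that the first change occurs at generation 5.
first_jump_nt5 = fda1_shift(time_variable(5, n_t=5, tau_t=5))
first_jump_nt10 = fda1_shift(time_variable(5, n_t=10, tau_t=5))
```

Each change advances t by 1/nt, so halving nt doubles the step in t and produces a larger jump of the optimum per change, which is why the nt = 5 setting is the harder one.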

It can be observed from Table 8 that the algorithms perform well at both levels of change severity. As nt increases, all the algorithms improve on many of the test functions. For F10, KPTHP obtains the best performance when the environment changes more intensively; nonetheless, its performance is slightly worse than that of IT-DMOEA and KT-DMOEA when the environment changes mildly. For some simpler problems, such as DF1, the performance of KPTHP is barely affected by the change severity, which reflects its good diversity maintenance and predictive capability. However, KPTHP is inferior to its counterparts on the F8 problem. One possible reason is that, under more drastic changes, the POF of this three-objective function is not easily handled and the search is prone to becoming trapped in a local optimum.

5.3. Analysis of the Different Components of KPTHP

In this section, we explore the impact of the different components of KPTHP by introducing two variants of the algorithm. KPTHP-v1 adopts only key-points-based transfer to obtain individuals at the next moment, while KPTHP-v2 uses only center-point-based feed-forward prediction to forecast individuals. The statistical results of the three versions on the three metrics are presented in Table 9. As before, nt and τt are fixed to 5 and 20.

From Table 9, we can see that KPTHP performs significantly better than the other two versions, which suggests that each component of KPTHP makes an indispensable contribution. Comparing KPTHP with KPTHP-v1, the MIGD value of the former is significantly smaller than that of the latter, which indicates that feed-forward prediction improves the convergence of the algorithm to a certain extent. Comparing KPTHP with KPTHP-v2, KPTHP obtains better HVD and MMS values on the majority of test functions, which implies that the key-points-based transfer improves the distribution and diversity of the algorithm. In summary, each component is significant and essential when dealing with dynamic environments.

6. Conclusions

The critical factor in evaluating the performance of a dynamic multi-objective evolutionary algorithm is its ability to respond quickly to new environments and converge efficiently to the new POF. This paper proposed a new response mechanism called KPTHP, which consists of a key-points-based transfer learning strategy and hybrid prediction strategies. The key-points-based transfer learning strategy concentrates on convergence and diversity and addresses nonlinear and unpredictable variations, generating a portion of high-performing individuals. The center-point-based prediction strategy complements the transfer process by yielding linearly predicted solutions with a good distribution, and the two sets of individuals together constitute the initial population.

We selected the DF, F, dMOP, and FDA test suites, a total of sixteen test problems whose decision variables are linearly or nonlinearly related. Experimental results on various performance indicators show that KPTHP integrated with RM-MEDA obtains excellent diversity and converges efficiently to the POF of the new environment on most problems. In addition, in light of existing research results, the proposed algorithm could be applied to practical problems such as dynamic scheduling.

Although the proposed KPTHP can generate high-quality initial populations, the reliability of the acquired individuals becomes poor and the accuracy of the center-point-based feed-forward prediction degrades when the environmental changes are more complex. Therefore, in future work, we will explore several promising directions. First, it is worth investigating how to obtain a large number of reliable individuals from a small amount of data, as this would benefit the static evolutionary process. Second, we can combine other prediction methods and investigate their influence. Third, it is worth testing KPTHP on a wider range of problems that have different types of variations.