Abstract

In this paper, we establish two local convergence theorems that provide initial conditions and error estimates guaranteeing the Q-convergence of an extended version of the Chebyshev–Halley family of iterative methods for multiple polynomial zeros due to Osada (J. Comput. Appl. Math. 2008, 216, 585–599). Our results unify and complement earlier local convergence results about the Halley, Chebyshev and Super-Halley methods for multiple polynomial zeros. To the best of our knowledge, the results about Osada’s method for multiple polynomial zeros are the first of their kind in the literature. Moreover, our unified approach allows us to compare the convergence domains and error estimates of these well-known methods and of several new randomly generated ones.

1. Introduction

Undoubtedly, the most popular iterative methods in the literature are Newton’s method, Halley’s method [1] and Chebyshev’s method [2]. Extensive historical surveys of these illustrious methods can be found in the papers of Ypma [3], Scavo and Thoo [4] and Ezquerro et al. [5]. It is well known that Newton’s method converges quadratically, while Halley’s and Chebyshev’s methods converge cubically, to simple zeros. However, all these methods converge only linearly to multiple zeros.

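In 1870, Schröder [6] presented the following modification of Newton’s method:

$$x_{k+1} = x_k - m\,\frac{f(x_k)}{f'(x_k)}, \qquad k = 0, 1, 2, \ldots, \tag{1}$$

which restores quadratic convergence when the multiplicity m of the zero is known. With the same motivation, in 1963 Obreshkov [7] developed the following modifications of Halley’s and Chebyshev’s methods:

$$x_{k+1} = x_k - \frac{f(x_k)}{\dfrac{m+1}{2m}\,f'(x_k) - \dfrac{f(x_k)\,f''(x_k)}{2\,f'(x_k)}} \tag{2}$$

and

$$x_{k+1} = x_k - \frac{m(3-m)}{2}\,\frac{f(x_k)}{f'(x_k)} - \frac{m^2}{2}\,\frac{f(x_k)^2\,f''(x_k)}{f'(x_k)^3}. \tag{3}$$

Methods (2) and (3) are known as Halley’s method for multiple zeros and Chebyshev’s method for multiple zeros, respectively; both converge cubically when m is known.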
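To make the contrast between linear and restored convergence concrete, the following Python sketch applies Newton’s method and methods (1)–(3) to the illustrative polynomial f(x) = (x − 1)^3 (x + 2), which has the triple zero ξ = 1. It is a minimal sketch under our own assumptions, not code from the cited works: the polynomial is represented by its distinct zeros and multiplicities (a hypothetical `Roots` type), and the quantities f/f′ and f f″/f′^2 are obtained from the logarithmic derivative f′/f = Σ m_j/(x − z_j), which avoids the cancellation that affects an expanded polynomial near a multiple zero. The helper names `ratios` and `iterate` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: f(x) = prod_j (x - z_j)**m_j, given as (z_j, m_j) pairs.
Roots = Sequence[Tuple[complex, int]]


def ratios(x: complex, roots: Roots) -> Tuple[complex, complex]:
    """Return u = f/f' and L = f*f''/f'**2 at x (x must not be a zero of f).

    With S1 = f'/f = sum m_j/(x - z_j) and S2 = sum m_j/(x - z_j)**2,
    we have f''/f = S1**2 - S2, hence u = 1/S1 and L = 1 - S2/S1**2.
    """
    s1 = sum(mj / (x - zj) for zj, mj in roots)
    s2 = sum(mj / (x - zj) ** 2 for zj, mj in roots)
    return 1 / s1, 1 - s2 / s1 ** 2


def newton(x: complex, m: int, u: complex, L: complex) -> complex:
    return x - u  # classical Newton step; m is ignored


def schroder(x: complex, m: int, u: complex, L: complex) -> complex:
    return x - m * u  # method (1)


def halley_multiple(x: complex, m: int, u: complex, L: complex) -> complex:
    # method (2): f / ((m+1)/(2m) f' - f f''/(2 f')) = 2m u / (m + 1 - m L)
    return x - 2 * m * u / (m + 1 - m * L)


def chebyshev_multiple(x: complex, m: int, u: complex, L: complex) -> complex:
    # method (3): (m(3-m)/2) f/f' + (m^2/2) f^2 f''/f'^3 = u (m(3-m) + m^2 L) / 2
    return x - u * (m * (3 - m) + m * m * L) / 2


Step = Callable[[complex, int, complex, complex], complex]


def iterate(step: Step, x0: complex, m: int, roots: Roots, n_steps: int) -> List[complex]:
    """Return [x_0, x_1, ...]; stops early if an iterate hits a zero exactly."""
    xs = [x0]
    for _ in range(n_steps):
        if any(xs[-1] == zj for zj, _ in roots):
            break  # f/f' is undefined at an exact zero
        u, L = ratios(xs[-1], roots)
        xs.append(step(xs[-1], m, u, L))
    return xs


if __name__ == "__main__":
    roots = [(1.0, 3), (-2.0, 1)]  # f(x) = (x - 1)^3 (x + 2), triple zero at 1
    for step in (newton, schroder, halley_multiple, chebyshev_multiple):
        xs = iterate(step, 1.5, 3, roots, 6)
        print(step.__name__, [abs(x - 1.0) for x in xs])
```

Starting from x0 = 1.5, six Newton steps still leave an error above 10^-2, whereas each of methods (1)–(3) reaches the triple zero to within 10^-12.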

In 1994, Osada [8] defined the following third-order modification of Newton’s method:

$$x_{k+1} = x_k - \frac{m(m+1)}{2}\,\frac{f(x_k)}{f'(x_k)} + \frac{(m-1)^2}{2}\,\frac{f'(x_k)}{f''(x_k)}, \tag{4}$$

known as Osada’s method for multiple zeros. In 2008, Osada [9] used an arbitrary real parameter to construct an iteration family for multiple zeros that includes methods (1)–(4) as special cases. Another member of Osada’s family is the Super-Halley method for multiple zeros, which can be defined as follows (see [10] and the references therein):
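Osada’s method (4) can be sketched in the same spirit. The snippet below is again an illustration under our own assumptions, with hypothetical helper names: it uses f′/f″ = (f/f′)/L with L = f f″/f′^2 and checks two properties, namely that one step lands on ξ for a pure power f(x) = (x − ξ)^m, and that the iteration converges rapidly for the illustrative polynomial (x − 1)^3 (x + 2).

```python
from typing import Sequence, Tuple

# Hypothetical representation: f(x) = prod_j (x - z_j)**m_j, as (z_j, m_j) pairs.
Roots = Sequence[Tuple[complex, int]]


def ratios(x: complex, roots: Roots) -> Tuple[complex, complex]:
    """u = f/f' and L = f*f''/f'**2, via the logarithmic derivative of f."""
    s1 = sum(mj / (x - zj) for zj, mj in roots)
    s2 = sum(mj / (x - zj) ** 2 for zj, mj in roots)
    return 1 / s1, 1 - s2 / s1 ** 2


def osada_step(x: complex, m: int, roots: Roots) -> complex:
    """One step of Osada's method (4)."""
    u, L = ratios(x, roots)
    x_new = x - m * (m + 1) / 2 * u
    if m > 1:
        # f'/f'' = (f/f') / L; the term is multiplied by (m-1)^2 = 0 when m = 1,
        # so it is skipped there to avoid dividing by an L that may be near zero.
        x_new += (m - 1) ** 2 / 2 * u / L
    return x_new


def osada(x0: complex, m: int, roots: Roots, n_steps: int = 6) -> complex:
    x = x0
    for _ in range(n_steps):
        if any(x == zj for zj, _ in roots):
            break  # exact zero reached; the ratios are undefined here
        x = osada_step(x, m, roots)
    return x


if __name__ == "__main__":
    print(osada_step(2.7, 4, [(2.0, 4)]))        # pure power: lands on 2 up to rounding
    print(osada(1.5, 3, [(1.0, 3), (-2.0, 1)]))  # converges to the triple zero 1
```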

Let and . We define the following extension of Osada’s iteration family:

where the iteration function is defined by:

with and defined as follows:

Clearly, the domain of the iteration function (7) is the set:

It is easy to see that iteration (6) includes Halley’s method (2) for , Chebyshev’s method (3) for , the Super-Halley method (5) for and Osada’s method (4) for and . Hereafter, iteration (6) will be called the Chebyshev–Halley family for multiple zeros. Note that the Chebyshev–Halley family for simple zeros (m = 1) was first introduced and studied in explicit form by Hernández and Salanova [11].
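For orientation, the simple-zero case (m = 1) can be illustrated with the classical explicit form of the Chebyshev–Halley family, commonly written as x_{k+1} = x_k − (1 + L/(2(1 − αL))) f(x_k)/f′(x_k) with L = f f″/f′^2, where α = 0, 1/2 and 1 give Chebyshev’s, Halley’s and the Super-Halley method, respectively. This is the well-known simple-zero family, not a transcription of (7), whose parametrization for m > 1 is not reproduced here; the helper names below are hypothetical.

```python
from typing import Sequence, Tuple

Roots = Sequence[Tuple[complex, int]]  # hypothetical: (zero, multiplicity) pairs


def ratios(x: complex, roots: Roots) -> Tuple[complex, complex]:
    """u = f/f' and L = f*f''/f'**2 via the logarithmic derivative of f."""
    s1 = sum(mj / (x - zj) for zj, mj in roots)
    s2 = sum(mj / (x - zj) ** 2 for zj, mj in roots)
    return 1 / s1, 1 - s2 / s1 ** 2


def ch_simple_step(x: complex, alpha: float, roots: Roots) -> complex:
    """One step of the simple-zero Chebyshev-Halley family with parameter alpha."""
    u, L = ratios(x, roots)
    return x - (1 + L / (2 * (1 - alpha * L))) * u


def ch_simple(x0: complex, alpha: float, roots: Roots, n_steps: int = 6) -> complex:
    x = x0
    for _ in range(n_steps):
        if any(x == zj for zj, _ in roots):
            break  # exact zero reached
        x = ch_simple_step(x, alpha, roots)
    return x


if __name__ == "__main__":
    roots = [(1.0, 1), (-2.0, 1), (3.0, 1)]  # three simple zeros
    for alpha, name in ((0.0, "Chebyshev"), (0.5, "Halley"), (1.0, "Super-Halley")):
        print(name, ch_simple(1.3, alpha, roots))
```

For α = 1/2 the step simplifies to x − 2u/(2 − L), which is exactly method (2) with m = 1.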

In 2009, Proinov [12] established two types of local convergence theorems for Newton’s method (1) (applied to polynomials) under two different types of initial conditions. In 2015 and 2016, Proinov and Ivanov [13] and Ivanov [14] used the same two types of initial conditions to establish local convergence theorems for Halley’s method (2) and Chebyshev’s method (3) for multiple polynomial zeros. Very recently, Ivanov [10] proved two general theorems (Theorems 3 and 4) that provide sets of initial approximations ensuring the Q-convergence ([15] (Definition 2.1)) of Picard iteration, and applied them to investigate the local convergence of the Super-Halley method (5) for multiple polynomial zeros.

In this paper, we use the approach of [10] to investigate the Q-convergence of the Chebyshev–Halley family (6). As a result, we obtain two kinds of local convergence theorems (Theorems 1 and 2) that give exact sets of initial approximations, together with a priori and a posteriori error estimates, guaranteeing the Q-convergence of iteration (6). An estimate of the asymptotic error constant of family (6) is also established. Our results unify and complement the results of Proinov and Ivanov [13] and Ivanov [10,14] about methods (2), (3) and (5). Moreover, the results about Osada’s method (4) (Corollaries 4 and 5) are the first of their kind in the literature. Finally, we use Theorem 1 to compare the convergence domains and error estimates of methods (2)–(5) and of several new randomly generated members of family (6).

2. Main Results

From now on, shall denote the ring of univariate polynomials over . Let be a polynomial of degree and be a zero of f. We define the functions and by

where d denotes the distance from ξ to the nearest of the other zeros of f, and denotes the distance from x to the nearest zero of f not equal to ξ. Note that if ξ is the unique zero of f, then we set . We also note that the domain of is the set:
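The two distances entering (10) are straightforward to compute once the zeros of f are known. The Python sketch below is illustrative only: it represents f by its distinct zeros and multiplicities (a hypothetical choice), and it returns infinity when ξ is the only zero, which is our assumption about the convention elided in the text above.

```python
import math
from typing import Sequence, Tuple

Roots = Sequence[Tuple[complex, int]]  # hypothetical: (zero, multiplicity) pairs


def dist_to_other_zeros(xi: complex, roots: Roots) -> float:
    """d: distance from xi to the nearest of the other zeros of f.

    Returns math.inf if xi is the only zero (assumed convention)."""
    return min((abs(xi - z) for z, _ in roots if z != xi), default=math.inf)


def dist_x_to_other_zeros(x: complex, xi: complex, roots: Roots) -> float:
    """Distance from x to the nearest zero of f not equal to xi."""
    return min((abs(x - z) for z, _ in roots if z != xi), default=math.inf)


if __name__ == "__main__":
    roots = [(1.0, 3), (-2.0, 1), (4.0, 2)]
    print(dist_to_other_zeros(1.0, roots))         # 3.0
    print(dist_x_to_other_zeros(0.0, 1.0, roots))  # 2.0
```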

In this section, we present two local convergence theorems about the Chebyshev–Halley family (6) under two different kinds of initial conditions, expressed in terms of the functions of initial conditions defined by (10).

2.1. Local Convergence Theorem of the First Kind

For integers n and m () and a number , we define the real function by:

where the function is defined by:

and the function is defined by:

with . It is worth mentioning that is positive and increasing while is decreasing on the interval .

The following is our first main theorem of this paper:

Theorem 1.

Let be a polynomial of degree and be a zero of f with known multiplicity . Suppose is an initial guess satisfying the conditions:

where E is defined by (10) and the function is defined by:

with and defined by (13) and (12). Then iteration (6) is well defined and converges Q-cubically to ξ with the following error estimates for all :

where and the function is defined by (11). Moreover, the following estimate of the asymptotic error constant holds:
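Q-cubic convergence and the asymptotic error constant can also be observed numerically. The sketch below is our own illustration rather than part of the proof: it runs Halley’s method for multiple zeros (2) on the illustrative polynomial (x − 1)^3 (x + 2) and computes the empirical orders q_k = ln(e_{k+1}/e_k)/ln(e_k/e_{k−1}) and the ratios e_{k+1}/e_k^3, where e_k = |x_k − ξ|; the latter approximate the asymptotic error constant. Errors below 10^-14 are discarded because they are dominated by rounding; this threshold and all helper names are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Roots = Sequence[Tuple[complex, int]]  # hypothetical: (zero, multiplicity) pairs


def ratios(x: complex, roots: Roots) -> Tuple[complex, complex]:
    """u = f/f' and L = f*f''/f'**2 via the logarithmic derivative of f."""
    s1 = sum(mj / (x - zj) for zj, mj in roots)
    s2 = sum(mj / (x - zj) ** 2 for zj, mj in roots)
    return 1 / s1, 1 - s2 / s1 ** 2


def halley_multiple_step(x: complex, m: int, roots: Roots) -> complex:
    u, L = ratios(x, roots)
    return x - 2 * m * u / (m + 1 - m * L)  # method (2)


def halley_errors(x0: complex, xi: complex, m: int, roots: Roots,
                  n_steps: int = 6) -> List[float]:
    """Errors e_k = |x_k - xi| of the Halley iterates."""
    x, errs = x0, [abs(x0 - xi)]
    for _ in range(n_steps):
        if any(x == zj for zj, _ in roots):
            break  # exact zero reached
        x = halley_multiple_step(x, m, roots)
        errs.append(abs(x - xi))
    return errs


def significant(errs: List[float], floor: float = 1e-14) -> List[float]:
    return [e for e in errs if e > floor]  # drop rounding-dominated errors


def empirical_orders(errs: List[float]) -> List[float]:
    e = significant(errs)
    return [math.log(e[k + 1] / e[k]) / math.log(e[k] / e[k - 1])
            for k in range(1, len(e) - 1)]


def cubic_ratios(errs: List[float]) -> List[float]:
    e = significant(errs)
    return [e[k + 1] / e[k] ** 3 for k in range(len(e) - 1)]


if __name__ == "__main__":
    errs = halley_errors(1.5, 1.0, 3, [(1.0, 3), (-2.0, 1)])
    print(empirical_orders(errs), cubic_ratios(errs))
```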

In the cases , and , we get the following consequences of Theorem 1 about the Chebyshev, Halley and Super-Halley methods, which were proven in [14], [13] and [10], respectively, but without the estimates of the asymptotic error constants:

Corollary 1

([14] (Theorem 2)). Let be a polynomial of degree and be a zero of f with multiplicity . Suppose satisfies the following initial conditions:

where the function is defined by (10) and the function is defined by:

Then Chebyshev’s iteration (3) is well defined and converges Q-cubically to ξ with error estimates (15), where , and the following estimate of the asymptotic error constant holds:

Corollary 2

([13] (Theorem 4.5)). Let be a polynomial of degree and be a zero of f with multiplicity . Suppose satisfies the following initial condition

where is defined by (10). Then Halley’s iteration (2) is well defined and converges Q-cubically to ξ with error estimates (15), where and the function is defined by

Moreover, the following estimate of the asymptotic error constant holds:

Corollary 3

([10] (Theorem 3)). Let be a polynomial of degree and be a zero of f with multiplicity . Suppose satisfies the following initial condition

where is defined by (10). Then the Super-Halley iteration (5) is well defined and converges Q-cubically to ξ with error estimates (15), where and the function is defined by:

Moreover, estimate (18) of the asymptotic error constant holds.

In the case and , we get the following consequence of Theorem 1 about Osada’s method (4):

Corollary 4.

Let be a polynomial of degree and be a zero of f with multiplicity . Suppose satisfies the following initial conditions:

where is defined by (10) and the function is defined by:

Then Osada’s iteration (4) is well defined and converges Q-cubically to ξ with error estimates (15), where . Moreover, the following estimate of the asymptotic error constant holds:

2.2. Local Convergence Theorem of the Second Kind

Before stating our second main theorem, for integers n and m () and a number , we define the real function by:

where the function is defined by:

and the function is defined by:

with . Obviously, the function is positive and increasing while the function is decreasing on the interval .

The next theorem is the second main result of this paper.

Theorem 2.

Let be a polynomial of degree and be a zero of f with known multiplicity . Suppose is an initial guess satisfying the conditions:

where the function is defined by (10) and the function is defined by

with defined by (21). Then the iteration sequence (6) is well defined and converges Q-cubically to ξ with the following error estimates for all :

where and with .

In the cases , and , Theorem 2 immediately yields [14] (Theorem 3), [13] (Theorem 5.7) and [10] (Theorem 4) about the Chebyshev, Halley and Super-Halley methods, respectively. In the case and , we get the following consequence of Theorem 2 about Osada’s method.

Corollary 5.

3. Proof of the Main Results

As mentioned, in 2020 Ivanov [10] proved two general convergence theorems that give exact sets of initial approximations to guarantee the Q-convergence of Picard iteration

In this section, we use this general approach to prove our main results stated in the previous section.
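In computational terms, Picard iteration applies a fixed iteration function T repeatedly. A minimal, generic Python sketch follows; the name T, the stopping rule |x_{k+1} − x_k| ≤ tol and the example function are our assumptions. The theorems recalled below are precisely what guarantees that, for suitable initial approximations, the iterates stay in the domain of T and converge.

```python
import math
from typing import Callable, List


def picard(T: Callable[[float], float], x0: float,
           tol: float = 1e-14, max_iter: int = 50) -> List[float]:
    """Return the iterates x_0, x_1, ... of x_{k+1} = T(x_k).

    Stops once |x_{k+1} - x_k| <= tol or after max_iter applications of T.
    """
    xs = [x0]
    for _ in range(max_iter):
        xs.append(T(xs[-1]))
        if abs(xs[-1] - xs[-2]) <= tol:
            break
    return xs


def newton_sqrt2(x: float) -> float:
    """Example iteration function: Newton's method for f(x) = x**2 - 2."""
    return 0.5 * (x + 2.0 / x)


if __name__ == "__main__":
    xs = picard(newton_sqrt2, 1.0)
    print(len(xs), xs[-1], abs(xs[-1] - math.sqrt(2)))
```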

3.1. Preliminaries

To make the paper self-contained, we recall some important results that will be applied in the proofs of the main theorems.

Lemma 1

([10] (Lemma 2)). Let and be all zeros of f different from ξ. Then for any the following inequality holds:

where is defined by (10).

Lemma 2

([10] (Lemma 1)). Let K be an arbitrary valued field and be a polynomial of degree which splits over K. Also, let be all distinct zeros of f with multiplicities .

- (i) If is such that , then for all we have

- (ii) If is such that and , then for all we have .

To prove our first main result, we apply the following theorem, which was proved in [10] without the estimate of the asymptotic error constant. That estimate, however, can easily be obtained using the inequality in (30) and the quasi-homogeneity of exact degree of (see [16] (Definition 8)); such a proof is given in [16] (Proposition 3).

Theorem 3

([10] (Theorem 1)). Let be an iterative function, and be defined by (10). Let be a quasi-homogeneous function of exact degree such that for each with , we have

If is an initial approximation such that

then Picard iteration (28) is well defined and converges to ξ with Q-order and with the following error estimates for all :

In addition, for all the following error estimate holds:

where R is the minimal solution of in the interval . Moreover, we have the following estimate of the asymptotic error constant:

The following theorem shall be applied for the proof of our second main result:

Theorem 4

([10] (Theorem 2)). Let be an iterative function, and be defined by (10). Let be a nonzero quasi-homogeneous function of exact degree such that for each with , we have and . If is an initial guess satisfying

where the function ψ is defined by

then Picard iteration (28) is well defined and converges to ξ with Q-order and with the following error estimates:

where with and . Moreover, the following estimate of the asymptotic error constant holds:

3.2. Proof of Theorem 1

In the next lemma, we prove two inequalities that play a crucial role in the subsequent proofs.

Lemma 3.

Let be a polynomial of degree , be a parameter and be a zero of f with known multiplicity . Suppose is such that:

where is defined by (10). Then there exists a complex number such that:

or

where .

Proof.

Let satisfy (35) and let be all distinct zeros of f with respective multiplicities . Then by and Lemma 1, we infer that x is not a zero of f and so we can define the quantities and as in Lemma 2. Without loss of generality, for some we put and .

Now, we shall prove that the number defined by

satisfies the claims of the lemma.

Using known techniques (see, e.g., [14] (Lemma 1) and [10] (Lemma 3)), we obtain the following estimates:

If , then using the reverse triangle inequality and (37) we obtain

which proves the first claim. Otherwise, we get

which proves the second claim and completes the proof of the lemma. □

The following is the main lemma of this section. In its proof, we exclude the case , since in that case the lemma coincides with Lemma 4.4 of [13] concerning Halley’s method.

Lemma 4.

Proof.

Let satisfy (38). If either or , then and so the assertions of the lemma hold. Suppose that and . Let be all distinct zeros of f with respective multiplicities , and let the quantities and be defined as in Lemma 2. Without loss of generality, for some we put and .

First, we prove that . According to (9), we need to show that implies . To this end, we prove the inequality which is, in fact, equivalent to

Indeed, from Lemma 2 (i), (37) and the first condition of (38) we get which means that and so by Lemma 2 we have

which in turn leads to

Now, let us define the number by (36). If , then from the second condition of (38) and the estimates (37) we obtain . Therefore, by Lemma 3 and the second condition of (38) we get

In the case , we get the same conclusion but regarding . Consequently, (40) holds and therefore . Further, from (7) and (41) we obtain

where

From this, the triangle inequality, the estimates (37) and the claims of Lemma 3, we obtain

which completes the proof of the lemma. □

Proof

(Proof of Theorem 1). Let be the Chebyshev–Halley iteration function defined by (7). If , then for all and the conclusions of the theorem follow. Suppose . From Lemma 4 and Theorem 3, it follows that the conclusions of Theorem 1 hold under the conditions

This completes the proof since with is equivalent to . □

3.3. Proof of Theorem 2

4. Comparative Analysis

In this section, we use Theorem 1 to compare the convergence domains and error estimates of several particular members of the Chebyshev–Halley iteration family (6). Define the function by (11). It is easy to see that the initial condition (14) can be written in the form where is the unique solution of the equation in the interval (see, e.g., Corollaries 2 and 3). Observe that the bigger is, the larger the convergence domain of the respective method and the better its error estimates. To compare the convergence domains of Halley’s method (2), Chebyshev’s method (3), the Super-Halley method (5) and Osada’s method (4) with each other and with some other members of family (6), in the following figures we depict the functions obtained for the pairs and with , , , and with four complex numbers randomly chosen from the rectangle

Note that these two pairs were chosen to highlight the cases and , since it is known (see [10] (Remark 1)) that in the second case Corollary 3 provides a larger convergence domain and better error estimates for the Super-Halley method than Corollary 2 does for Halley’s method, whereas the opposite holds in the first case.
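Since the comparison reduces to computing, for each method, the unique solution of a scalar equation on an interval, a bisection sketch may be useful. It assumes that the function is continuous and strictly increasing on the interval (an assumption of this sketch, not a claim about (11)). The function `phi_example` is a hypothetical placeholder chosen so that the answer is known in closed form: t/(1 − t)^2 = 1 is equivalent to t^2 − 3t + 1 = 0, whose root in (0, 1) is (3 − √5)/2.

```python
import math
from typing import Callable


def solve_increasing(phi: Callable[[float], float], target: float,
                     lo: float, hi: float, iters: int = 200) -> float:
    """Bisection for the unique t in (lo, hi) with phi(t) = target.

    Assumes phi is continuous and strictly increasing on (lo, hi), with
    phi(t) < target near lo and phi(t) > target near hi. Only midpoints are
    evaluated, so phi may be undefined at the endpoints themselves.
    """
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if phi(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def phi_example(t: float) -> float:
    """Hypothetical placeholder, NOT the function (11) of the paper."""
    return t / (1.0 - t) ** 2


if __name__ == "__main__":
    R = solve_increasing(phi_example, 1.0, 0.0, 1.0)
    print(R, (3 - math.sqrt(5)) / 2)  # both approximately 0.381966
```

Applied to the actual functions of each method, a larger root would correspond, by the observation above, to a larger convergence domain.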

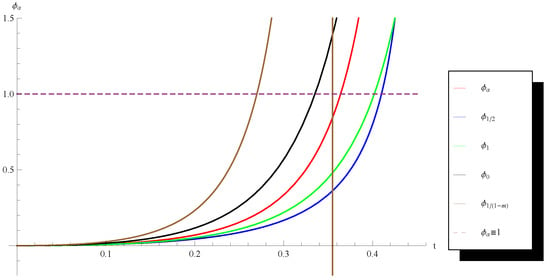

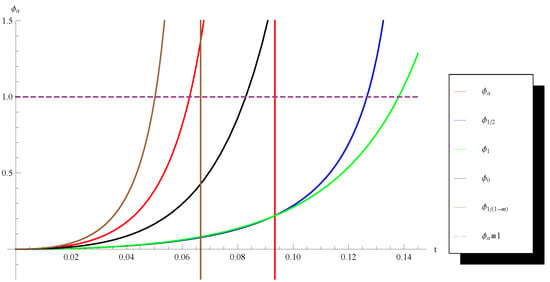

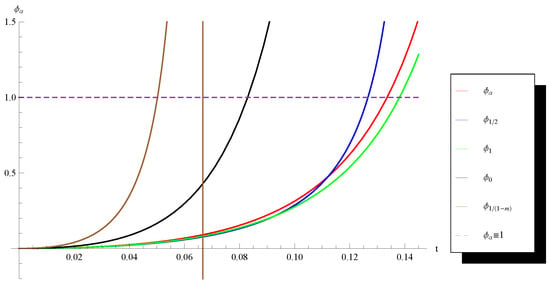

One can see from the graphs (Figure 1, Figure 2, Figure 3 and Figure 4) that in all considered cases except the first one (Figure 1), the randomly chosen method has a larger convergence domain and better error estimates than the Osada’s method. In the second case (Figure 2), the random method is better than the Chebyshev’s method while in the last case (Figure 4) the random method is better even than the Halley’s method.

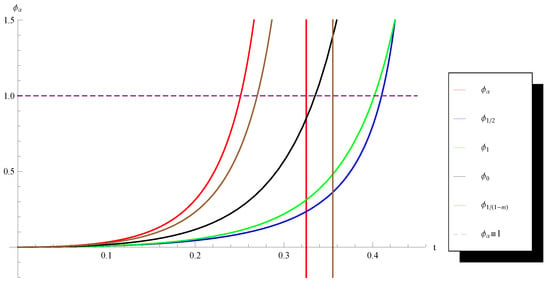

Figure 1.

Graph of the functions , , , and for , and .

Figure 2.

Graph of the functions , , , and for , and .

Figure 3.

Graph of the functions , , , and for , and .

Figure 4.

Graph of the functions , , , and for , and .

5. Conclusions

In this paper, we have proved two kinds of local convergence theorems (Theorems 1 and 2) for the Chebyshev–Halley iteration family (6) for multiple polynomial zeros. They provide exact sets of initial conditions, a priori and a posteriori error estimates valid from the first step, and estimates of the asymptotic error constant. These results unify and complement the existing results about the well-known Halley, Chebyshev and Super-Halley methods for multiple polynomial zeros. The theorems obtained for Osada’s method (4) are new even in the case of simple zeros. Finally, this unified study allowed us to compare these famous iterative methods with some new randomly generated ones. The comparison showed that our results guarantee larger convergence domains and better error estimates for Halley’s method (2) when and for the Super-Halley method (5) when . However, in the second case, there exist many methods that are better than Halley’s method.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Halley, E. A new, exact, and easy method of finding the roots of any equations generally, and that without any previous reduction. Philos. Trans. R. Soc. 1694, 18, 136–148. (In Latin)

2. Chebyshev, P. Complete Works of P.L. Chebishev; USSR Academy of Sciences: Moscow, Russia, 1973; pp. 7–25. (In Russian)

3. Ypma, T. Historical development of the Newton-Raphson method. SIAM Rev. 1995, 37, 531–551.

4. Scavo, T.; Thoo, J.B. On the geometry of Halley’s method. Am. Math. Mon. 1995, 102, 417–433.

5. Ezquerro, J.; Gutiérrez, J.M.; Hernández, M.; Salanova, M. Halley’s method: Perhaps the most rediscovered method in the world. In Margarita Mathematica; University La Rioja: Logroño, Spain, 2001; pp. 205–220. (In Spanish)

6. Schröder, E. Über unendlich viele Algorithmen zur Auflösung der Gleichungen. Math. Ann. 1870, 2, 317–365.

7. Obreshkov, N. On the numerical solution of equations. Annu. Univ. Sofia Fac. Sci. Phys. Math. 1963, 56, 73–83. (In Bulgarian)

8. Osada, N. An optimal multiple root-finding method of order three. J. Comput. Appl. Math. 1994, 51, 131–133.

9. Osada, N. Chebyshev–Halley methods for analytic functions. J. Comput. Appl. Math. 2008, 216, 585–599.

10. Ivanov, S.I. General Local Convergence Theorems about the Picard Iteration in Arbitrary Normed Fields with Applications to Super-Halley Method for Multiple Polynomial Zeros. Mathematics 2020, 8, 1599.

11. Hernández, M.; Salanova, M. A family of Chebyshev-Halley type methods. Int. J. Comput. Math. 1993, 47, 59–63.

12. Proinov, P.D. General local convergence theory for a class of iterative processes and its applications to Newton’s method. J. Complex. 2009, 25, 38–62.

13. Proinov, P.D.; Ivanov, S.I. On the convergence of Halley’s method for multiple polynomial zeros. Mediterr. J. Math. 2015, 12, 555–572.

14. Ivanov, S.I. On the convergence of Chebyshev’s method for multiple polynomial zeros. Results Math. 2016, 69, 93–103.

15. Jay, L.O. A note on Q-order of convergence. BIT 2001, 41, 422–429.

16. Proinov, P.D. Two Classes of Iteration Functions and Q-Convergence of Two Iterative Methods for Polynomial Zeros. Symmetry 2021, 13, 371.


© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).