Abstract

As effective methods to foster students’ understanding of scientific models in science education are needed, increased reflection on thinking about models is regarded as a relevant competence associated with scientific literacy. Our study focuses on the influence of model-based approaches (modeling vs. model viewing) in an out-of-school laboratory module on the students’ understanding of scientific models. A mixed-methods design examines three subsections of the construct: (1) students’ reasoning about multiple models in science, (2) students’ understanding of models as exact replicas, and (3) students’ understanding of the changing nature of models. A total of 293 ninth graders from Bavarian grammar schools participated in our hands-on module using creative model-based tasks. An open-ended test item evaluated the students’ understanding of “multiple models” (MM). We defined five categories, with the largest share of students arguing that the individuality of DNA structure leads to various DNA models (modelers = 36.3%, model viewers = 41.1%). Additionally, when applying two subscales of the quantitative instrument Students’ Understanding of Models in Science (SUMS) at three testing points (before, after, and delayed-after participation), a short- and mid-term decrease for the subscale “models as exact replicas” (ER) appeared, while mean scores increased short- and mid-term for the subscale “the changing nature of models” (CNM). Despite the lack of differences between the two approaches, a positive impact of model-based learning on students’ understanding of scientific models was observed.

1. Introduction

Genetics, as a key aspect in modern biology, provides access to many fields such as the decryption of specific disease patterns in medicine, the development of customized and effective medication, and increased understanding of genetic conditions on our behaviors [1]. Models and modeling play a significant role in the research process as they may help to explain experimental observations by revealing essential relationships that bridge theoretical knowledge to build a basis for further scientific predictions [2]. It is important for researchers to develop complex genetic models to describe and understand the molecular basis of observed phenomena, for example, for gene cascades that can explain the basics of learning and memory [3,4].

Years ago, one of the most revolutionary events for modern genetics was inspired by the creative process of modeling, which finally helped in the interpretation of experimental data [5]. In 1953, Francis Crick wrote to his then 12-year-old son about “a most important discovery” and described the “beautiful” structure of DNA [6], a molecule that carries the genetic information in all organisms from bacteria to humans. In 1962, he and his colleagues, James Watson and Maurice Wilkins, were awarded the Nobel Prize. Nevertheless, it is often forgotten that the decoding of the DNA structure by modeling was also largely facilitated by the innovative crystallographic studies of Rosalind Franklin and Raymond Gosling. Their research was essential for determining the structure of DNA. Franklin recognized that an adequate model of DNA structure must have phosphate groups on the outside of the molecule [7]. She also identified two distinct configurations of DNA (A and B form) and was able to show that a double helix was consistent with the X-ray patterns of both forms [8].

1.1. Teaching Genetics: The Role of Outreach Laboratories and Model-Support

Modeling and experimentation go hand in hand in the description and explanation of genetic phenomena. However, as the understanding of invisible molecular processes and abstract concepts still poses numerous questions, transposing this knowledge into classrooms is a challenge for learning genetics at school [9,10]. To counteract learning difficulties caused by the inaccessible working spaces of real scientists and by incomprehensible research contents, outreach laboratories at universities may help students to get in touch with realistic learning scenarios by offering special material resources that regular biology lessons cannot provide [11,12]. Many studies have investigated the effects of student-centered learning in outreach laboratories on students’ cognitive achievement and have demonstrated further benefits as compared with conventional teacher-centered science classes due to the combination of newly acquired knowledge with autonomous hands-on learning [13,14,15]. Another advantage of out-of-school labs is that participants are actively involved in the learning content as they slip into the role of scientists when working cooperatively on student-centered hands-on tasks [16,17]. Discussing socio-scientific issues in an outreach laboratory, the authors [18] showed that student-centered approaches provide an appropriate means to establish students’ own opinions, even though they have been shown to be associated with a higher cognitive load than that of teacher-guided approaches. An important strategy for a classroom discussion seems to be the promotion of students’ ability to ask their own research questions while performing inquiry-based tasks [19]. From another perspective, the participation in a teacher-led lab activity that focuses on DNA manipulations to reveal the connection between gene and phenotype significantly improved students’ mental models of DNA as well as their procedural understanding of DNA manipulations [20]. Nevertheless, authentic first-hand experiences may help to increase scores on wellbeing when students have the opportunity to work like real scientists [21].

One of our aims was to innovate traditional outreach programs in learning genetics by arousing students’ creativity and transferring enthusiasm from arts to science classes. We developed a STEAM teaching approach (STEAM = science, technology, engineering, arts, and mathematics) for an out-of-school lab setting, combining creative model-based learning with classical hands-on experimentation [22]. Visual representations are regarded as essential to understand complex (molecular) contents, and models in particular are highly relevant for exemplification in teaching genetics [23]. For chemistry education, it is known that working with hand-held molecular models can reduce the demand of imagining concepts and processes in the mind by lowering the cognitive load [24]. Models and modeling may help students to learn, structure, and integrate newly acquired information with their previous knowledge, since the mere mental transformation of novel representations is very memory intensive [25]. The students’ understanding of three-dimensional molecular structures also seems to be dependent on the type of representation used. The application of concrete three-dimensional models or pseudo-concrete computer-generated models leads to better results than more abstract kinds of representations (e.g., schematic representations and stereochemical formulas) [26].

Models and modeling occupy various roles in scientific practice and there are also many different ways to use models and modeling in science classrooms [27,28]. A general distinction can be drawn between “model-based teaching” (the use of existing models by students) and “modeling-based teaching” (the creation and use of models by students) [29]. The work of Odenbaugh defines five major applications of models in biology: (1) to explore unknown possibilities, (2) to explore complex systems by using simplified models, (3) to develop conceptual frameworks, (4) to make accurate predictions, and (5) to generate causal explanations [30]. In consequence, modeling or model-based inquiry can also help students to explore their own ideas and to refine their conceptual understanding [28]. However, common model-based approaches include models primarily as teaching tools, for example, as illustrative objects to explain specific processes and structures. In contrast, student-centered modeling activities have the potential to engage students in developing, evaluating, and improving their own models, which finally helps them to reflect on how scientists use models to study natural phenomena [31,32]. In a comparison of influences on cognitive achievement, cognitive load, and instructional efficiency, model viewers achieved significantly higher mid-term knowledge increases than modelers, while individual cognitive load scores remained similar. Accordingly, model viewing produced significantly higher scores for instructional efficiency, pointing to enhanced cognitive achievement [33]. The correct understanding of the three genetics concepts (DNA, gene, and chromosome) may have hindered the development of correct and complete DNA models from the modelers [17,33]. We also evaluated students’ model quality and monitored potential influences on individual creativity and knowledge levels [34]. Girls created significantly better structured models than boys, and girls’ model quality also significantly correlated with short- and mid-term knowledge levels and with the creativity subscale “flow”. Modeling seems to provide stronger support for female students and is a suitable approach for emphasizing creativity in science education to overcome the negative perceptions of traditional science [35].

1.2. Empirical Findings on Students’ Understanding of Scientific Models

As models and modeling play a key role in scientific inquiry and communication, the Next Generation Science Standards (NGSS, [36]) emphasize the meta-level of thinking about models as an essential learning goal in science curricula with the aim of promoting understanding of the nature of science and developing scientific literacy [37]. However, in classrooms, the use of models as learning tools to gain conceptual and theoretical knowledge often predominates over the role of models as part of the nature of science [32,38]. It is not surprising that empirical studies have indicated that both teachers and learners mainly associate models with descriptive characteristics and with their role as teaching equipment to visualize abstract concepts [39,40]. Biology teachers, in particular, mentioned primarily descriptive entities of models as compared with other science teachers who were able to give more accurate definitions of models consistent with scientific explanations [40]. In consequence, students’ appreciation of models is often limited and naïve, when they describe models as physical copies and do not understand their role as mediators between theory and observation [39,41]. A recent study that investigated students’ understanding of the nature and purpose of biological models confirmed these earlier findings [42] and reported that across grades the majority of students still considered models as idealized representations of an original with the purpose to illustrate or to explain this original. One reason could be the frequency of introducing passive models in classrooms, although the active involvement and handling of models may better support a perception of models as interpretive and predictive tools [24,43]. This is in line with current research on the uses of three-dimensional physical models in biology classroom instruction [27]. Werner and colleagues found that several categories of scientific reasoning were rarely applied during an extensive use of models in biology lessons. Furthermore, they revealed a lack of critical reflection on the applied models unless they were regarded as essential for developing a general understanding of science and scientific reasoning skills.

Additionally, the demand for defined descriptions of students’ understanding of models with regard to either grade- or context-specific aspects became greater. In order to promote students’ meta-knowledge of models and modeling, more investigations on context-specific teaching approaches are in the interest of research, as well as an accompanying evaluation of students’ understanding of models [44]. In addition, the activity of argumentation is considered important in modeling of a phenomenon, since scientific modeling is inherently an argumentative act. Furthermore, students can remain focused on the role of the model while arguing with their classmates about it. Herein, arguments can be mental, written or verbal with the intention of judging and understanding ideas, communicating them to others, and convincing oneself or others that the ideas and views to explain a phenomenon are useful [45]. However, science teachers themselves need modeling skills as well as an elaborated understanding of models and modeling to apply modeling practices appropriately in the classroom (e.g., [46]). According to Justi and Gilbert’s model of modeling [47], four main stages for successful modeling in science classrooms should be taken into account: (1) collecting information about the entity that is being modeled, (2) producing a mental model, (3) expressing that model in an adequate representation form, (4) testing and evaluating its scope and limitations. Furthermore, Krell and colleagues recently saw the need to develop an instrument to analyze and describe modeling activities of (preservice) science teachers and to derive modeling strategies [48].

Empirical research on students’ understanding of models is widespread, and the number of potential assessment instruments is high [38,49,50,51]. On the basis of their individual life experiences, students build up personal and alternative concepts of the role of scientific models, which, in addition, do not have to match the teacher’s assumptions about the students’ perceptions. Treagust and colleagues [38] designed the quantitative instrument Students’ Understanding of Models in Science (SUMS) that measures the following five aspects: (1) scientific models as multiple representations, (2) models as exact replicas, (3) models as explanatory tools, (4) the uses of scientific models, and (5) the changing nature of scientific models. On the one hand, their results for secondary science students revealed that the majority of students think that new ideas and research findings can lead to changes of existing scientific models (factor 5). On the other hand, answers were different for models as exact replicas (factor 2) emphasizing, in particular, that descriptive entities of scientific models depend on the level of abstraction [38].

A second approach applied open-ended test items to evaluate a theoretical framework that concentrated on five partly similar aspects of students’ understanding. These aspects covered biological models (nature of models and multiple models) and their use in science (purpose of models, testing models, and changing models) [41,51]. Empirical data supported a subdivision of each scale into three levels (I–III) and confirmed that these levels reflect an increasing degree of difficulty [44]. As expected, students’ answers were classified more frequently as level I and II than as the highest level III. Taking into account that Grünkorn and colleagues defined learners’ understanding as competencies, these results are assigned to the specific domain of biology [41]. However, the underlying framework is applicable to evaluate students’ understanding of scientific models in general [51]. This offers the advantage of assessing multidisciplinary topics as well, for example in the context of molecular instruction. Especially for molecular and biochemical content, the disciplines often overlap, and the use of adequate models is sensible [23].

A third study used the instrument Students’ Views of Scientific Models and Modeling (VSMM) and focused on three main aspects of representational characteristics of models and students’ educational levels [52]. The applied subscales were: (1) nature of models, (2) nature of modeling, and (3) purpose of models, and each included modality, dimensionality, and dynamics. Their major findings were that high school students more frequently understood textual representations and pictorial representations as models (model identification), while they also more often perceived differences between two-dimensional and three-dimensional models (utility of multiple models) as compared with middle school students [52]. These findings support the assumption that the students’ age and educational level are additional relevant factors which explain their interactions with different representative forms [53].

1.3. Objectives of the Study

The present research compares the influence of a model-based and a modeling-based approach in an out-of-school laboratory module on students’ understanding of the role of scientific models. To meet the demand for more context-specific evaluations, both approaches relate to the topic of DNA structure and investigate three sub-aspects: (RQ1) Are there differences between the two approaches in students’ reasoning about multiple models in science? (RQ2) To what extent do the two approaches affect students’ understanding of scientific models as exact replicas? (RQ3) How, if at all, do model-based activities influence students’ understanding of the changing nature of scientific models?

2. Materials and Methods

2.1. Educational Intervention

A one-day module (270 min) on DNA structure for ninth graders at a university out-of-school lab was implemented by the same teacher. The hands-on learning activity was embedded in an inquiry-based setting where students worked in pairs, and used a workbook as a guide to encourage problem-solving as well as collaborative skills (for a detailed description of the module’s phases see [22,34]). The contents of the lab day were adapted to follow the guidelines set by the Bavarian grammar school syllabus [54]. With regard to abstraction capability and the promotion of logical thinking, students dealt with challenging, application-oriented questions that required interdisciplinary networked thinking based on fundamental biological knowledge. Working with model concepts as well as frequent changes between different organizational levels (e.g., cells, organs, organisms, ecosystems) promoted the ability to abstract and train multi-perspective and logical thinking. On the basis of the traits observed, ninth graders gained an overview of the path from genetic information to traits. They got to know DNA as an information carrier and could describe a simplified DNA model.

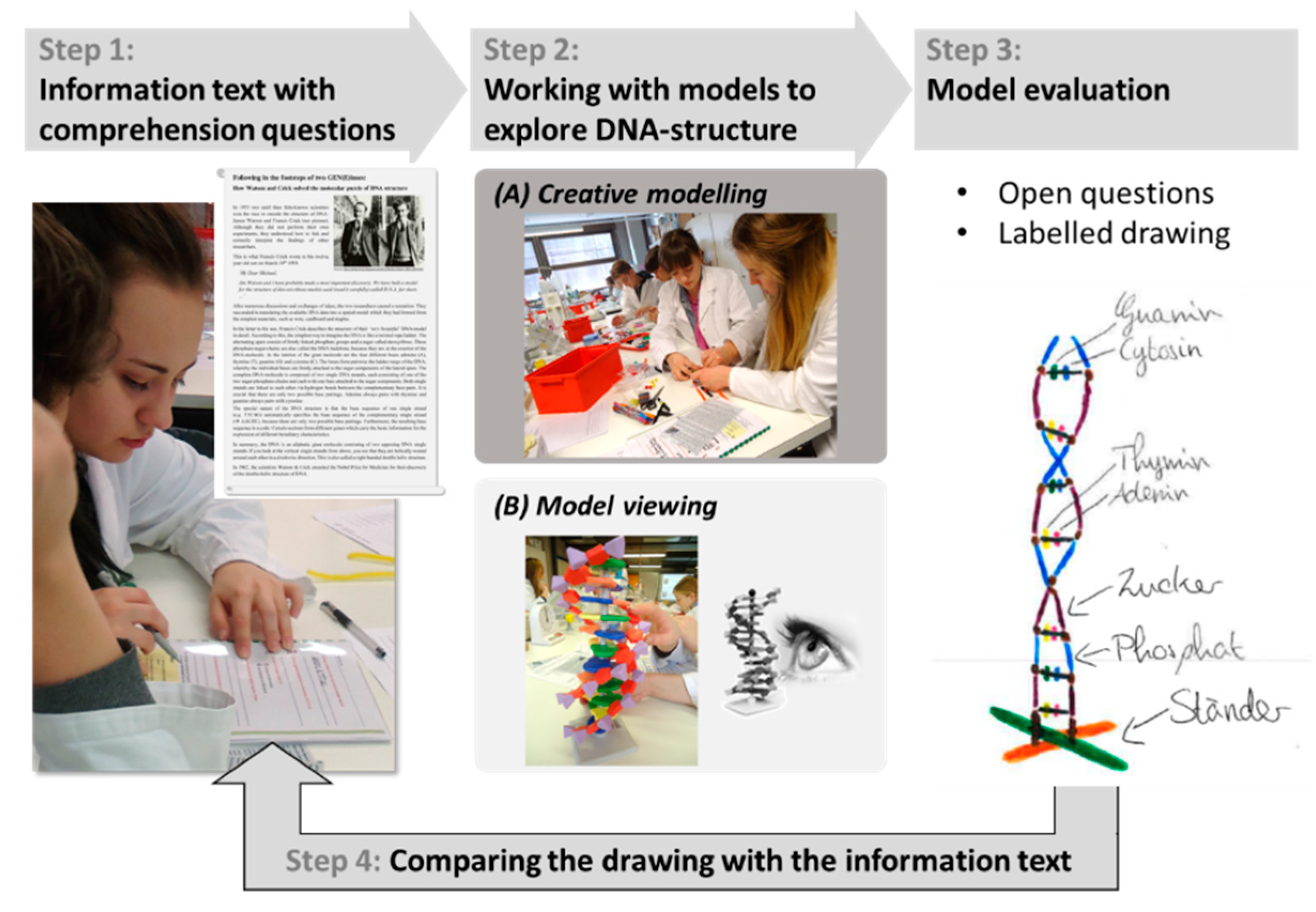

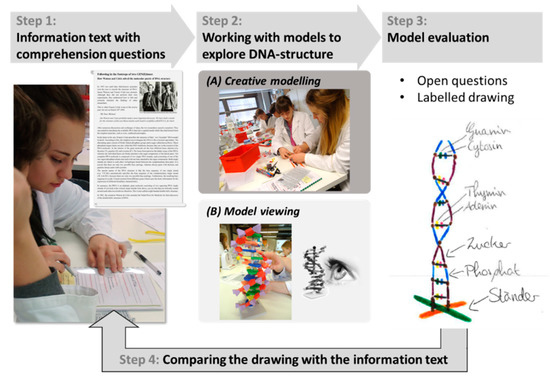

The intervention started with a pre-lab phase (50 min) to introduce the laboratory bench and practice essential working techniques (e.g., micropipetting, decantation, and centrifugation). The two experimental phases, DNA isolation from oral mucosal cells for 60 min and agarose gel electrophoresis for 85 min, were connected by a model phase (60 min), where students followed the footsteps of Watson and Crick to solve the molecular puzzle of DNA structure. The model phase was the key activity as it provided the theoretical basis for the experimental findings (Figure 1). After reading about the discovery of the DNA structure, students answered comprehension questions. They internalized essential background information as they mentally began to develop a model of DNA structure. On the basis of the text, students had to consider important components such as the phosphate-sugar chains as DNA backbone, names and arrangement of the bases, possible base pairings, hydrogen bonds between base pairings, and the right-handed double helix structure. For the subsequent model-based activities, participants were randomly assigned to two subsamples: (A) the modelers (md), who creatively generated a DNA model with no instructions provided except DNA-modeling kits containing various handcrafting materials (e.g., colored beads, pipe cleaners, scissors, scotch tape, plasticine, and paper cards), and (B) the model viewers (mv), who worked instead with a completed but unlabeled commercially available school model and compared the substructures of this model with their mental models. In order to consider the scope and limitations of the models, students in both treatments had to make a labeled drawing to explain the elements of their models. During this model evaluation, students could explicitly reflect on their modeling process and the nature of models while they were arguing with their partners about their ideas and whether the representation of DNA might be appropriate, as recommended by Passmore and Svoboda [45]. In the final interpretation phase, both groups discussed and compared the findings of the model phase with previously formulated hypotheses and with the experimental results. Additionally, students had to consider the scope and limitations of the models.

Figure 1.

Overview of student activities in the model phase.

2.2. Participants

In 2017, twelve classes from eight different Bavarian grammar schools (‘Gymnasium’) participated in our laboratory module. Class sizes ranged from 20 to 34 students. Data were collected from 293 ninth graders (59.04% female, age M ± SD = 14.51 ± 0.69, novices). The classes were randomly assigned to two treatments: 120 modelers (md) creatively elaborated a DNA model and 134 model viewers (mv) identified DNA substructures on a commercially available school model. To control for the effect of repeated measurement, a test-retest sample was also taken from students in grammar schools (n = 39), who completed the SUMS questionnaire (Students’ Understanding of Models, [38]) without having participated in the module or receiving any instruction on the topic during data collection.

Participation was voluntary. The parents of all students gave their written consent for students’ participation. The study was conducted in accordance with the Declaration of Helsinki [55]. The Bavarian State Ministry for Education and Cultural Affairs approved the questionnaire. Data collection was pseudo-anonymous. Students could not be identified from the data used.

2.3. Test Design and Instruments

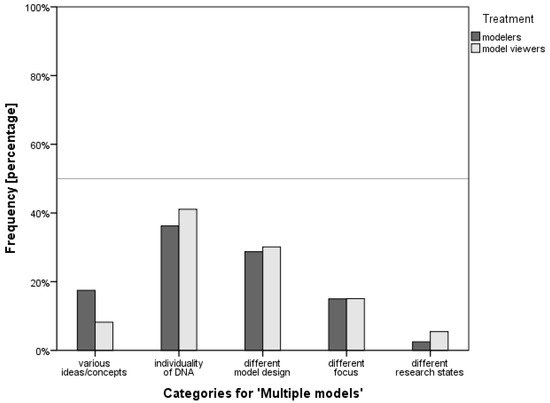

Our study followed a quasi-experimental mixed-method design with pre-test (T0), post-test (T1), and retention-test (T2). The data were gathered using paper-and-pencil questionnaires. Students were never aware of any testing schedules. For qualitative assessment of students’ reasoning about “multiple models” (MM) in science, we used an open-ended test item directly after participation in the lab module (T1, “Explain why there can be different models of one biological original (like the DNA structure)!” adapted from [41]). A single rater categorized students’ answers by using qualitative content analysis [56]. Although we considered the framework of Grünkorn et al. [41] to be appropriate, it was only partially transferable to our study. Firstly, the measurement design differed between the studies, and the proposed category system was too extensive and complex to classify our student responses. In addition, our participants often argued contextually, which would have required an extension of the existing framework. Consequently, we developed an alternative system by inspecting the variety of explanations with regard to students’ understanding of multiple models as compared with one biological original, and we identified five different categories: MM1, various ideas/concepts; MM2, individuality of DNA; MM3, different model design; MM4, different focus; and MM5, different research states. Detailed descriptions of the categories as well as examples from students’ answers are shown in Table 1. In order to examine reliability, we randomly selected 15% of the students’ answers for intra- and inter-rater categorization. The dataset was reanalyzed by the first author after six months to estimate intra-rater statistics and by a nonpartisan third person to obtain independent inter-rater reliability. Cohen’s Kappa coefficient [57] yielded reliability scores for intra-rater reliability of kappa = 0.826 and for inter-rater reliability of kappa = 0.651. These scores were rated as almost perfect and substantial, respectively, indicating that the assessment was independent of the raters [58]. Consequently, a high observer agreement was interpreted as an indication that the category system used was easily applicable and led to accurate measurement data [59].
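As a minimal sketch of how such agreement coefficients are obtained, the following Python snippet computes Cohen's kappa for two raters and maps a value onto the widely used Landis and Koch benchmark labels. The ratings are hypothetical and are not data from this study, the function names are illustrative, and treating the labels in the text as Landis and Koch benchmarks is an assumption of this sketch.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equally long lists of category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of answers given the same category by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement derived from each rater's marginal category frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_expected == 1:
        return 1.0  # both raters used one identical category throughout
    return (p_observed - p_expected) / (1 - p_expected)


def landis_koch_label(kappa):
    """Benchmark label after Landis and Koch (assumed scheme, see lead-in)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial"), (1.00, "almost perfect")]:
        if kappa <= upper:
            return label
    raise ValueError("kappa cannot exceed 1")


# Hypothetical categorizations of ten answers (NOT data from this study).
first_rating = ["MM1", "MM2", "MM2", "MM3", "MM3", "MM4", "MM2", "MM5", "MM1", "MM3"]
second_rating = ["MM1", "MM2", "MM3", "MM3", "MM3", "MM4", "MM2", "MM5", "MM2", "MM3"]
example_kappa = cohens_kappa(first_rating, second_rating)
print(round(example_kappa, 3), landis_koch_label(example_kappa))

# Labels for the two coefficients reported in the text.
print(landis_koch_label(0.826), "/", landis_koch_label(0.651))
```

Kappa corrects the raw percentage agreement for the agreement expected by chance, which is why it is preferred over simple agreement rates when some categories are used much more often than others.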

Table 1.

Category system to evaluate students’ understanding of models with regard to the aspect of “multiple models” with an open-ended test item (Q: Explain why there can be different models of one biological original (like the DNA structure)!).

Students completed the SUMS questionnaire (Students’ Understanding of Models, [38]) three times: two weeks before participation (T0), immediately after the module (T1), and six weeks after participation (T2). We applied a shortened version of the SUMS questionnaire using the subscales ER (models as exact replicas) and CNM (the changing nature of models), as these subscales adequately fit the intent of the model-based learning sequences. For the subscale ER, we concentrated on items with high factor loadings (≥0.64) and dropped those with cross loadings from the original questionnaire (see Section 3.2.1 below). The Cronbach’s alpha values of the internal consistency of each scale are presented in Table 2. Although acceptable values are normally above 0.70 [60], values between 0.60 and 0.70 can be rated as still reasonable if the factors have only a few items [61]. Reliabilities increased to more acceptable levels over the three test times. This can be explained by the fact that the response patterns of the individual students became more homogeneous over the test period and that more strongly divided opinion patterns existed on the construct examined. The SUMS instrument used a 5-point Likert-type scale with the following answer options: strongly disagree (1), disagree (2), not sure (3), agree (4), and strongly agree (5). Item order was changed randomly for each test schedule.
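For readers unfamiliar with the coefficient, Cronbach's alpha can be sketched in a few lines of Python using only the standard library. The response matrix below is hypothetical (five students, three Likert items) and is not data from this study; the function name is illustrative.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-student score lists (one score per item)."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each single item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(n_items)]
    # Sample variance of the summed scale score across respondents.
    total_variance = variance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers on a 5-point scale (NOT data from this study).
hypothetical_responses = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
alpha = cronbach_alpha(hypothetical_responses)
print(round(alpha, 3))
```

Because the factor k/(k - 1) and the ratio of item variances to total variance both depend on the number of items, short subscales such as the three- and four-item scales used here tend to yield lower alpha values, which is the reason for the more lenient threshold mentioned above.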

Table 2.

Number of items and Cronbach’s alpha scores of the SUMS questionnaire (Students’ Understanding of Models, [38]) for the subscales models as exact replicas (ER) and the changing nature of models (CNM). Cronbach’s alpha scores are calculated for pre- (T0), post- (T1) and retention-test (T2).

2.4. Statistical Analysis

Statistical tests were conducted using SPSS Statistics 24. The mean scores of the SUMS scale were normally distributed as assessed by the Shapiro–Wilk test (p > 0.05) and according to the Q–Q plots [62]. Consequently, we used parametric testing methods. Pearson’s chi-square test was applied for comparing observed frequencies of the categorical variables with the treatment groups [63,64]. An exploratory factor analysis with subsequent orthogonal rotation (varimax) was conducted on the SUMS item set to inspect the similarity to the original scale. To assess the suitability of the sample, the Kaiser–Meyer–Olkin test (KMO) [65] and Bartlett’s test of sphericity were applied. The Kaiser–Guttman criterion was used to determine the number of factors to be extracted [66]. Between-group differences were analyzed using a one-way ANOVA at each testing point, and within-group comparisons were analyzed using a repeated-measures ANOVA based on mean scores for each subscale. Pairwise comparisons at the different testing points used the Bonferroni correction. We reported the effect size using partial eta squared, considering values of 0.01 as a small effect, 0.06 as a medium effect, and 0.14 as a large effect [67].

3. Results

3.1. Qualitative Assessment

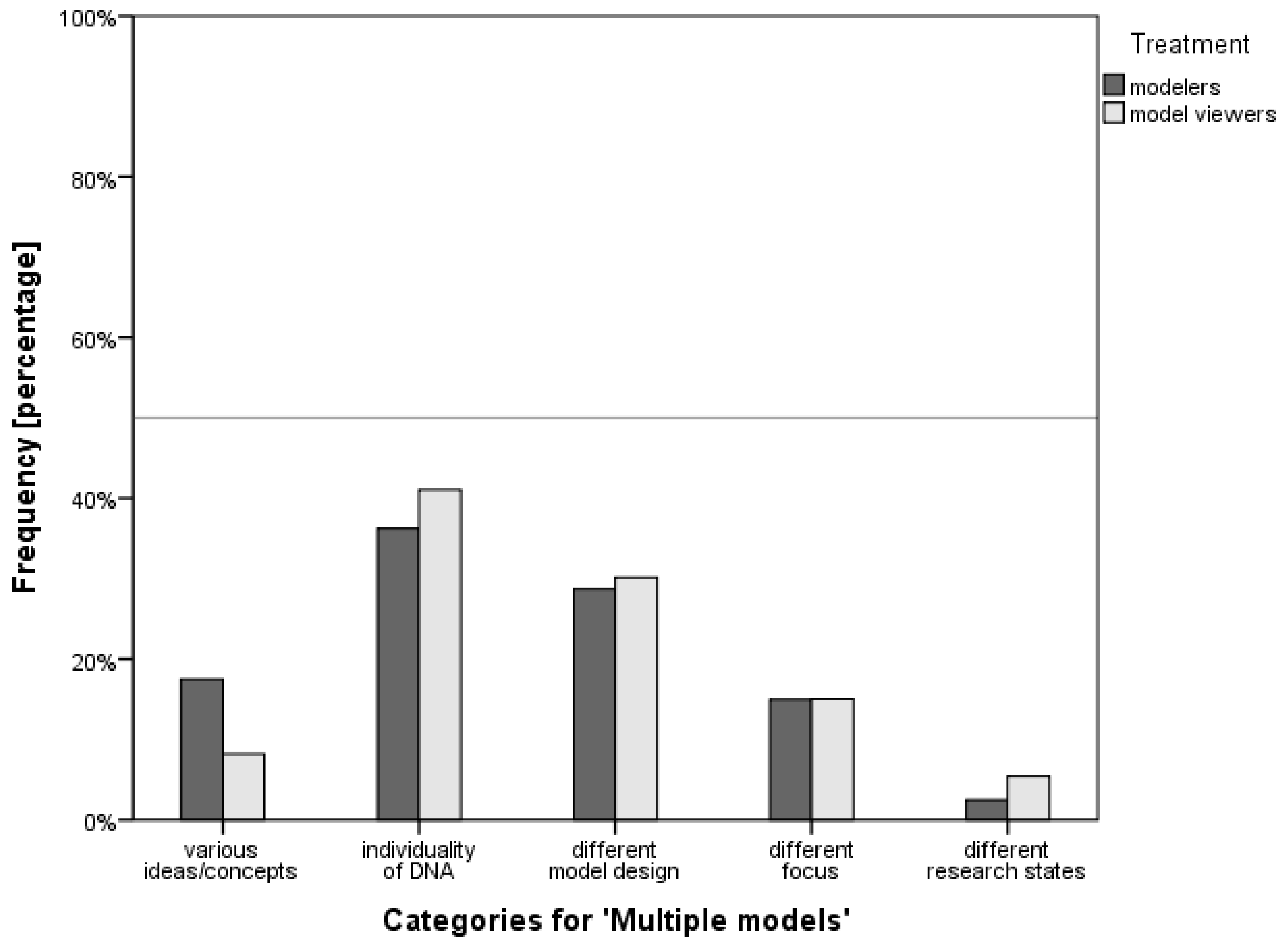

To evaluate students’ understanding of multiple models (MM) of one biological original with an open-ended test item, several categories were extracted (student pairs: N = 189; statements: N = 153; modelers: n = 80; model viewers: n = 73). The frequency is based on all students’ answers and all statements add up to 100% (Figure 2). For the category MM1, more modelers (md = 17.5%) than model viewers (mv = 8.2%) justified the existence of multiple models with various ideas about the original that lead to different representations of a phenomenon; some also maintained that those different models are valid at the same time. The largest share of students in both treatments argued that the individuality of DNA structure (category MM2) explains the variety of DNA models (md = 36.3%, mv = 41.1%). A different model design (category MM3), for example, the choice of the material used to build the model or the decision whether to present the DNA in 2D or 3D, was also given by the students as justification, regardless of the treatment (md = 28.6%, mv = 30.1%). Less frequently, students in both treatments named the focus of the model (category MM4) as a reason for different forms of representation, for example, to illustrate certain relationships in detail like the different base pairings or the double-helical structure of the DNA (md = 15.0%, mv = 15.1%). Only very few students related the existence of different models of the original to new research leading to a change in the model (category MM5; md = 2.6%, mv = 5.5%). Overall, there was no statistically significant association between the type of treatment and students’ argumentation about elaborating multiple models for DNA structure (chi-square (4) = 3.77, n.s.).
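The following Python sketch illustrates the test logic behind this result. It computes Pearson's chi-square statistic for a contingency table and converts a statistic into an upper-tail p-value using the closed-form expression that holds for even degrees of freedom. The contingency table in the example is a toy table and not data from this study; only the reported statistic (3.77 with 4 degrees of freedom) is taken from the text. The function names are illustrative, and the study itself used SPSS.

```python
import math


def chi_square_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table.

    Assumes every row and column total is positive (no empty category).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


def chi_square_p_value_even_dof(statistic, dof):
    """Upper-tail probability of the chi-square distribution (even dof only)."""
    if dof <= 0 or dof % 2 != 0:
        raise ValueError("closed form used here requires a positive even dof")
    half = statistic / 2
    return math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(dof // 2))


# p-value for the statistic reported in the text: chi-square(4) = 3.77.
p_reported = chi_square_p_value_even_dof(3.77, 4)
print(round(p_reported, 3))

# Toy 2 x 3 table (NOT data from this study) to show the full computation.
toy_statistic, toy_dof = chi_square_statistic([[10, 20, 30], [20, 20, 20]])
print(round(toy_statistic, 3), toy_dof, round(chi_square_p_value_even_dof(toy_statistic, toy_dof), 3))
```

With five categories and two treatments, the test has (5 - 1) x (2 - 1) = 4 degrees of freedom, and the reported statistic lies well below the 5% critical value of about 9.49, consistent with the non-significant result.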

Figure 2.

Frequency and distribution of students’ answers split by treatments on the open-ended item “Explain why there can be different models of one biological original (like the DNA structure)!” to evaluate students’ understanding with regard to the aspect of multiple models. Note: Open question with categories formed from answers given.

3.2. Quantitative Assessment

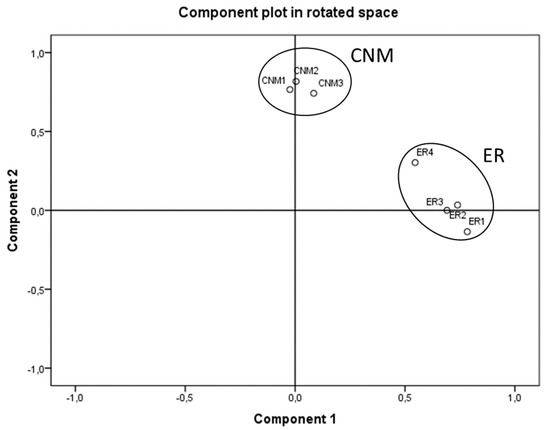

3.2.1. Factor Analysis

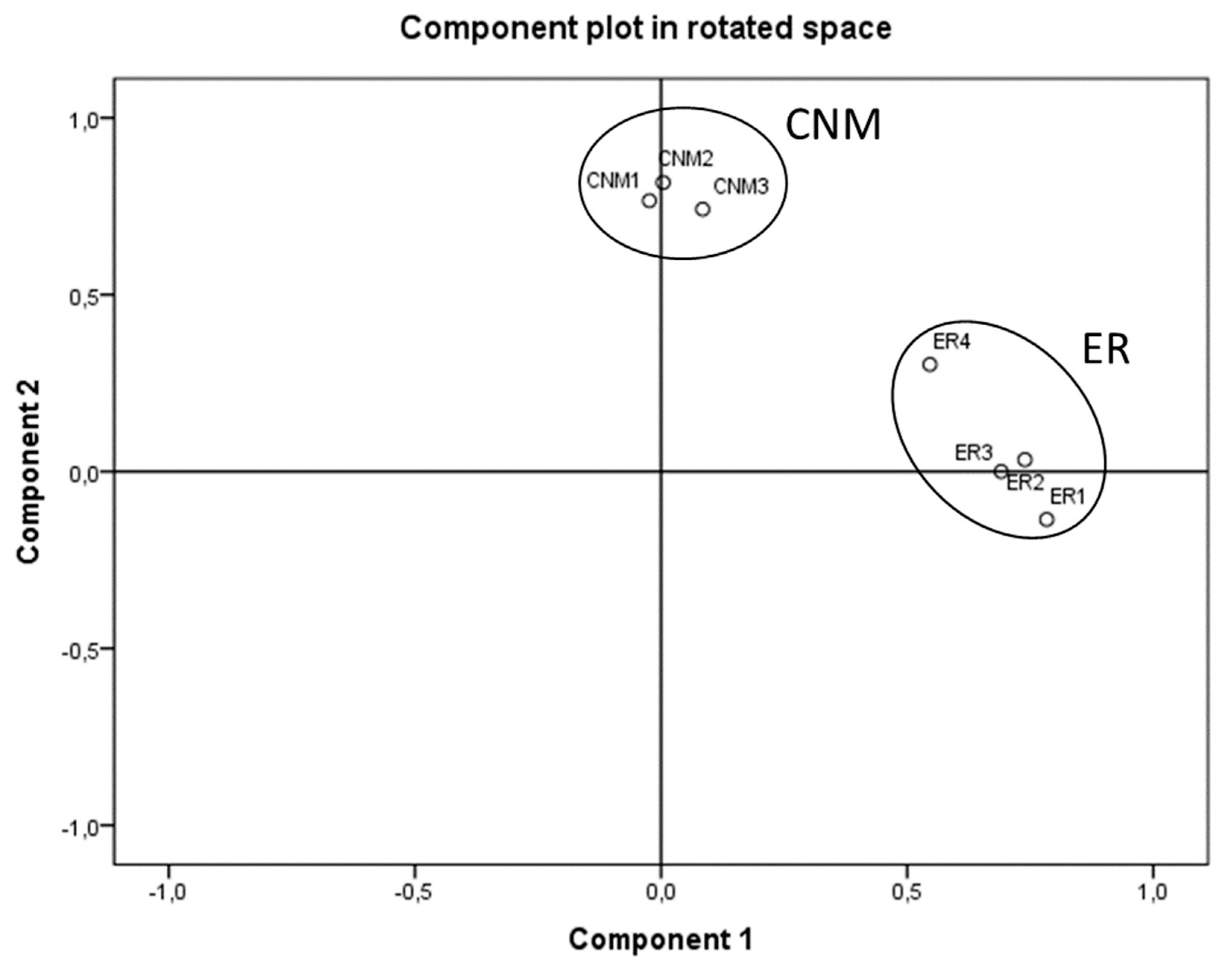

Principal component analysis (PCA) on 7 items of the SUMS (T1) with orthogonal rotation (varimax) yielded two factors on the basis of eigenvalues >1.0. The Kaiser–Meyer–Olkin measure verified the sampling adequacy (KMO = 0.667), which is well above the acceptable limit of 0.5 [68]. Bartlett’s test of sphericity (chi-square = 241.578, p < 0.001) indicated that correlations between items were sufficiently large for performing a PCA [59]. Examination of the Kaiser–Guttman criterion yielded empirical justification for retaining two factors, which explained 55.14% of the total variance. The scree plot and the component plot in rotated space (Figure 3) supported our two-factor solution and confirmed the original subscales. Among other factor solutions, the varimax-rotated two-factor solution yielded the most interpretable result, with items loading highly on only one of the two factors (Table 3, scores under 0.35 are suppressed). The percent of variance explained by models as exact replicas (ER) was 29.46%, and 25.67% for the changing nature of models (CNM).
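The reported variance shares can be translated back into sums of squared loadings with simple arithmetic, because a PCA on the correlation matrix of 7 standardized items has a total variance of 7. The short Python sketch below does this for the two rotated factors. The function name is illustrative, and the assumption that the analysis was based on the correlation matrix is not stated in the text.

```python
def variance_share_to_loading_sum(percent_explained, n_items):
    """Sum of squared loadings implied by a variance share (correlation-matrix PCA)."""
    return percent_explained / 100 * n_items


N_ITEMS = 7  # number of SUMS items entered into the PCA

er_loading_sum = variance_share_to_loading_sum(29.46, N_ITEMS)
cnm_loading_sum = variance_share_to_loading_sum(25.67, N_ITEMS)
total_share = 29.46 + 25.67  # differs from the reported 55.14% only by rounding

print(round(er_loading_sum, 2), round(cnm_loading_sum, 2), round(total_share, 2))
```

Both values exceed 1.0. Strictly speaking, the Kaiser–Guttman criterion refers to the unrotated eigenvalues, whereas the shares reported per subscale are rotated values; varimax rotation redistributes variance between the retained factors but leaves their total unchanged.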

Figure 3.

Component plot in rotated space with applied 7 items of the SUMS (Students’ Understanding of Models [38]) indicating two factors (items ER1-4, models as exact replicas and items CNM1-3, the changing nature of models).

Table 3.

Factor loadings from the principal component analysis of the post-test (T1) values of two subscales of the SUMS (Students’ Understanding of Models [38]).

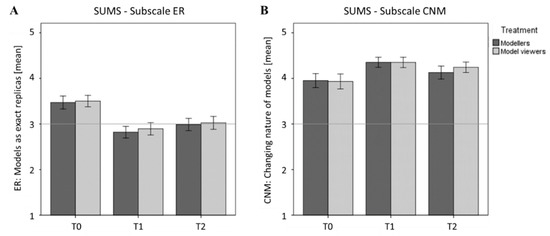

3.2.2. Influences of the Model-Based Approaches on Two Subscales of the SUMS

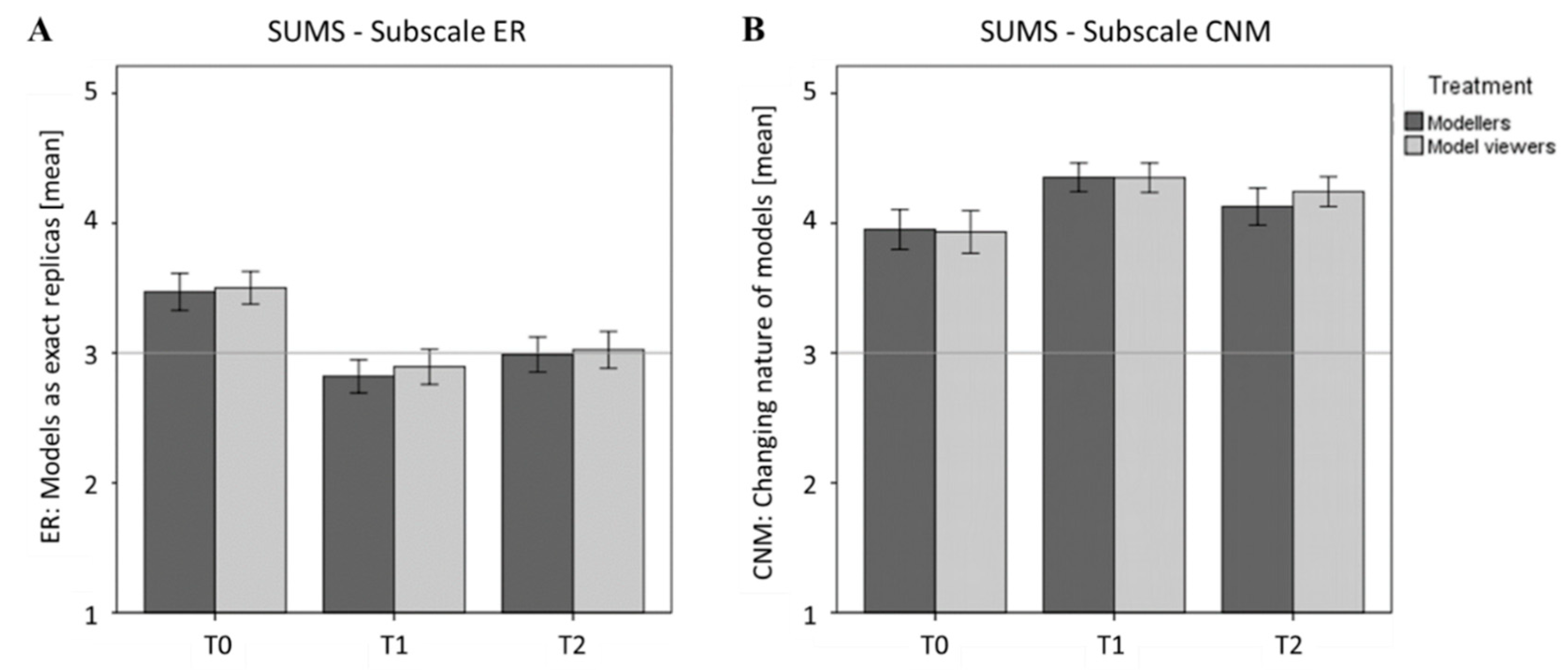

The subscale ER (models as exact replicas, Figure 4A) revealed significant differences between testing points in the repeated measurement ANOVA (F(1.89, 424.30) = 103.80, p < 0.001, partial eta squared = 0.32). Mauchly’s test indicated that the assumption of sphericity had been violated (chi-square (2) = 14.87, p < 0.001). Therefore, degrees of freedom were corrected by using Huynh–Feldt estimates of sphericity (epsilon = 0.947). The ER mean scores dropped from T0 (M ± SD = 3.49 ± 0.71) to T1 (2.86 ± 0.71) and increased again at testing point T2 (3.01 ± 0.75). Post hoc pairwise comparison with Bonferroni correction showed similar results. The ER mean scores dropped short-term (T0 to T1, p < 0.001) and increased again at testing point T2 (T1 to T2, p = 0.001). The comparison of testing points T0 and T2 also revealed a significant decrease of ER mean scores (T0 to T2, p < 0.001).

Figure 4.

Mean scores for the SUMS subscales (A) ER (models as exact replicas) and (B) CNM (the changing nature of models) to testing points T0, T1, and T2 split by treatment. Bars are 95% confidence intervals.

The subscale ER was also analyzed for differences between the treatments (Figure 4A). There was no significant treatment effect (F(1.90, 424.28) = 0.123, p = 0.875, partial eta squared = 0.001) which indicated that the mean scores from modelers and model viewers were similar (M ± SD: md = T0 (3.47 ± 0.72), T1 (2.82 ± 0.65), T2 (2.99 ± 0.69); mv = T0 (3.50 ± 0.70), T1 (2.89 ± 0.76), T2 (3.00 ± 0.75)).

The repeated measurement ANOVA for the subscale CNM (the changing nature of models, Figure 4B) also showed a statistically significant difference between testing points (F(1.72, 379.97) = 33.72, p < 0.001, partial eta squared = 0.13). Mauchly’s test revealed a violation of the assumption of sphericity for the subscale CNM (chi-square (2) = 41.36), and therefore degrees of freedom were corrected by using Huynh–Feldt estimates of sphericity (epsilon = 0.860). In contrast to the subscale ER, the CNM mean scores increased from T0 (M ± SD = 3.94 ± 0.79) to T1 (4.35 ± 0.59) and dropped again at testing point T2 (4.18 ± 0.69). Post hoc pairwise comparison with Bonferroni correction confirmed this pattern. CNM mean scores increased short-term (T0 to T1, p < 0.001) and decreased again at testing point T2 (T1 to T2, p < 0.001). Nevertheless, the CNM mean scores at T2 remained significantly higher than at T0 (T0 to T2, p < 0.001).

The subscale CNM also showed no statistically significant differences between modelers and model viewers (Figure 4B; F(1.73, 379.69) = 1.137, p = 0.316, partial eta squared = 0.01), indicating similar mean scores for both treatments (M ± SD: md = T0 (3.97 ± 0.69), T1 (4.36 ± 0.55), T2 (4.12 ± 0.72); mv = T0 (3.93 ± 0.87), T1 (4.35 ± 0.62), T2 (4.22 ± 0.67)).

To check for effects of repeated measurement on all applied SUMS items, a non-participant test-retest group was analyzed. It yielded no statistically significant differences in a repeated measurement ANOVA (M ± SD: T0 (3.54 ± 0.41), T1 (3.62 ± 0.43), T2 (3.75 ± 0.36); F(2, 48) = 2.889, p = 0.065, partial eta squared = 0.11).
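The reported effect sizes can be recovered from the F statistics and their degrees of freedom. The Python sketch below uses the standard identity partial eta squared = (F × df_effect) / (F × df_effect + df_error). The helper name and the dictionary layout are illustrative, and the degrees of freedom of the test-retest group are read as 2 and 48.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error, reported partial eta squared), taken from the text.
REPORTED_TESTS = {
    "ER, testing points": (103.80, 1.89, 424.30, 0.32),
    "ER, treatment": (0.123, 1.90, 424.28, 0.001),
    "CNM, testing points": (33.72, 1.72, 379.97, 0.13),
    "CNM, treatment": (1.137, 1.73, 379.69, 0.01),
    "test-retest group": (2.889, 2, 48, 0.11),  # df read as (2, 48)
}

computed = {
    label: partial_eta_squared(f, df1, df2)
    for label, (f, df1, df2, _) in REPORTED_TESTS.items()
}

for label, (_, _, _, reported) in REPORTED_TESTS.items():
    print(f"{label}: computed = {computed[label]:.3f}, reported = {reported}")
```

In this sketch, all five recomputed values agree with the reported effect sizes after rounding.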

4. Discussion

Over the years, increasing efforts have been undertaken to establish models and modeling as integral parts of science curricula, and several national education standards highlight their importance for scientific literacy [36,69]. The application of models and the implementation of modeling in science classrooms have been well described in a long series of studies, as both are supposed to introduce and engage students in authentic scientific inquiry [2,39,43]. We have shown that a hands-on module in an outreach laboratory is a successful approach for bridging abstract scientific theory with experimental observations through two kinds of model support (modeling vs. model viewing). A review of modeling-based learning (MbL) approaches specifies five areas of contribution that are closely linked to students’ learning outcomes: cognitive, metacognitive, social, material, and epistemological [70]. We previously investigated students’ cognitive achievement and reported short- and mid-term knowledge increases after participation in a hands-on laboratory module with modeling tasks [34].

However, as earlier studies have already investigated the effects of inquiry-based modeling on students’ understanding of scientific models, especially over multiple testing points [71,72], we follow the demand for further effective implementations of MbL and give an example in the context of molecular DNA models [70]. Our findings are in line with previous research and demonstrate a significant improvement of students’ understanding of scientific models. Gobert and Pallant used a pre- and post-test design and scaffolded modeling tasks in which students developed their own models, then critiqued peers’ models, and finally reflected upon revised models in order to identify improved characteristics. Gobert and Pallant’s approach to authentic model-based learning also indicates a deeper understanding of content knowledge as well as an improved understanding of models and their use in science [71]. Another study from organic chemistry education demonstrated that combining two types of three-dimensional molecular models (physical vs. virtual) may foster students’ understanding of the model concept as well as the spatial understanding of molecular structures [72]. Therefore, even short-term interventions with adequate inquiry-based tasks seem to have the potential to foster students’ understanding of scientific models.

4.1. Influences of the Model-Based Approaches on Students’ Understanding of Multiple Models

The aspect of students’ understanding of multiple models (MM) refers to one original that is represented by different model objects [39,40]. The framework developed by Grünkorn et al. is complex and, for reasons already mentioned (see Section 2.3), could not be adequately transferred to our students’ answers. Nevertheless, some of our categories are comparable to those of Grünkorn et al., and their detailed category system is helpful in assessing students’ understanding of multiple models [41].

Quantitative comparison revealed no significant differences between treatments. This result was initially surprising because the two approaches offer different ways of perceiving how the theoretical background of DNA structure can be described and simplified. The model viewers explore the DNA structure more passively through a didactically prepared representation (a commercially available school model), whereas the modelers strengthen important modeling abilities by actively designing individual models of DNA after mental modeling and by modifying their models [28,32]. This implies that all model viewers have acquired the scientific background on identical models, while modelers constructed multiple models of differing model quality [34]. Therefore, we had expected differences between the treatments in at least two categories. As modelers might have experienced inspiring thoughts during creative modeling, more justifications about the existence of multiple models with various ideas about the original (MM1) would have been plausible. Additionally, we hypothesized that the category MM3 (different model design) could be assigned more often to the modelers’ answers because they were free to choose the material and design of their models from a modeling kit. However, neither assumption could be statistically confirmed, which suggests that the different treatments do not affect students’ reasoning about multiple models.

Focusing on the comparison between our results and the framework of Grünkorn et al., our findings are partially in line with their categories in terms of multiple models [41]. First, category MM3 (different model design) is comparable to Level 1 [41], pointing to a low level of understanding, as many students only relate to material and design properties of model objects and consider models as teaching tools. Second, student answers in category MM4 (different focus) could be assigned to Level 2 [41], which corresponds to a medium level of understanding. According to Grosslight et al. [39], students with a medium level of understanding realize that the construction of a model is connected to a specific purpose. Consequently, models are not seen as exact duplicates of an original but rather as a medium of something [73]. Third, remarkably fewer students argued about the existence of multiple models with various ideas and concepts (MM1), which can be classified as responses at the highest Level 3 [41]. Herein, students mentioned, for example, different assumptions, differing interpretations, and their recognition that different models of an original can be valid at the same time [41,52]. The different frequency distribution between the levels as compared with our study could be explained by varying grades (seventh to eleventh grade), different contexts (biomembrane structures, human gullet structures, taste maps of the human tongue), and treatments [41]. These findings are in line with earlier research showing that students’ age, educational level, as well as the biological context are relevant factors in students’ understanding of models [44,51]. However, it is noticeable that most participants state the individuality of DNA (category MM2) as a reason for the existence of multiple models. Furthermore, some others argue that different research states could lead to different representation forms (category MM5).
These two alternative categories are much more content-oriented than the others, as students use newly acquired knowledge from the module for their explanations (e.g., the arrangement of DNA bases causes different models, a DNA model could be changed in the future due to new findings). Category MM2 highlights typical biological properties of the original, and category MM5 highlights multiple models caused by the process of scientific discovery. Both categories are apparent to students when they follow the historical discovery route of DNA structure during the module. In summary, arguments in the category MM2 point to a lower understanding of models from a medial perspective, as an illustration of something [31,32]. This is also true for students’ answers in category MM5, since their reasoning indicates an initial understanding. According to Grünkorn et al., such students understand only one model as the final model and are unaware that multiple models can be valid contemporaneously [41]. Finally, future research that investigates students’ reasoning about multiple models over several testing points would be of interest.

4.2. Influences of the Model-Based Approaches on Two Subscales of the SUMS

We clearly replicated the original factor structure of the quantitative instrument Students’ Understanding of Models in Science (SUMS) with the sub-aspects models as exact replicas (ER) and the changing nature of models (CNM), indicating a good fit of the instrument [38]. Additionally, our findings show significant positive influences of both approaches on both subscales, demonstrating that even short-term interventions could contribute to a deeper understanding of the role of models and modeling in science. This is also supported by the measured effect sizes (partial eta squared): for the differences in understanding between the three testing points, a large effect can be reported for the subscale ER (0.32) and a medium effect for the subscale CNM (0.13). As there are multiple frameworks and assessment instruments, the relationship between our results and existing literature is presented for each sub-aspect [39,40,52].
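The labels "large" and "medium" are consistent with the rule-of-thumb benchmarks commonly attributed to Cohen for (partial) eta squared (about 0.01 small, 0.06 medium, 0.14 large). These thresholds are an assumption of the following Python sketch, since the text does not state which benchmarks were used. The function name is illustrative.

```python
# ASSUMPTION: conventional rule-of-thumb benchmarks, not stated in the text.
SMALL, MEDIUM, LARGE = 0.01, 0.06, 0.14


def label_effect(partial_eta_sq):
    """Map a partial eta squared value onto a conventional verbal label."""
    if partial_eta_sq >= LARGE:
        return "large"
    if partial_eta_sq >= MEDIUM:
        return "medium"
    if partial_eta_sq >= SMALL:
        return "small"
    return "negligible"


# Effect sizes for the differences between testing points, as reported in the text.
labels = {name: label_effect(value) for name, value in {"ER": 0.32, "CNM": 0.13}.items()}
print(labels)
```

Under these benchmarks, the CNM value of 0.13 lies just below the threshold for a large effect, so small changes in the estimate could shift its label.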

The ER subscale investigates the students’ understanding of how close a model needs to be to the real thing. The observed mean scores (T0) are slightly lower than but comparable to the original values from Treagust et al. for secondary school students (eighth to tenth grade) [38]. The empirical data confirm the common perception of models as simple copies in the pre-test. This understanding is considered naïve because it describes models primarily through accuracy and matching details that make a model very similar to the original [39,41]. Many students are unaware that models can be defined as constructed representations with different theoretical perspectives, focusing on different aspects of an original to explain complex or unknown entities [39]. This is in line with other frameworks that also assign a majority of students to an understanding at the lowest Level 1 under sub-aspects such as kinds of models [39] or nature of models [41]. Reasons for this might be that students appreciate models primarily as visual objects in the classroom (medial perception, e.g., a heart model with detailed anatomical structures) and that teachers often seem to neglect modeling as a typical method of science in the classroom [32,41]. The teachers’ views on models and modeling in learning science could explain these results. According to Justi and Gilbert, teachers know the value of models in the learning of science but often do not realize their value in learning about science [31]. Furthermore, many biology teachers seem to define models as reproductions and to ignore the idea of a model being a subjective mental image of something [40].

It is therefore encouraging that both methods lead to a short- and mid-term decrease of students’ understanding of models as exact replicas (ER). Consequently, our participants perceive models less as simple copies because they might have learned through the module that scientists use models and modeling when the actual appearance is not known yet. Both approaches also make students aware that an abstract model of DNA structure can still provide accurate insights even if some details are missing because they are irrelevant for the selected representation form.

The CNM subscale examines how strongly the students perceive models as always valid or appreciate that changes in scientific thinking can lead to adjusted models. Measured mean scores (T0) are slightly higher than those from the Treagust et al. study [38], pointing to an agreement with the changing nature of models due to new findings or advanced technologies. Although mean scores were already relatively high in the pre-test, the observed short- and mid-term increases indicate further positive influences on students’ understanding of the changing nature of models (CNM) regardless of the treatment. This result makes sense, as our module concentrates on the discovery path of DNA structure when combining hands-on experiments with model-based tasks, and therefore our participants themselves follow the typical route of scientific inquiry. However, an evolved understanding of CNM might be further encouraged by biology teachers if they stimulate critical thinking about the effectiveness of models and modeling for scientific reasoning [27].

4.3. Limitations of the Study

Nevertheless, our study has the following limitations. First, due to the design of the study, the modelers constructed their own models, whereas the model viewers investigated a regular school model. In consequence, DNA models and model quality presumably varied more among the modelers, which is why the potential complexity of the models differed in part between the two treatments. Second, an additional pre-modeling phase that provides meta-modeling knowledge to all participants could be helpful for a further development of students’ modeling skills and, in consequence, for students’ understanding of models. Third, our participants were from the highest stratification secondary school level (‘Gymnasium’). Therefore, the results cannot be generalized to other school types or other grades. As genetics is a complex and specific field, it receives less attention in other school types or is taught in higher grades, so that comparability would be difficult.

5. Conclusions

Following the demand for effective model-based strategies toward a more authentic science education [74], we have shown that combining hands-on experimentation with model-based tasks in an outreach laboratory (modeling vs. model viewing) successfully promotes students’ understanding of scientific models. Initially, the investigation of students’ reasoning about multiple models provided a typical cross-section for the age group surveyed and showed that a majority justified model differences with varying properties of the original (DNA) or with regard to the model design. According to the literature, this corresponds to a lower understanding of multiple models and emphasizes the medial perspective in which models are mainly regarded as teaching tools [32,39,41]. Furthermore, most student responses to this aspect were related to the inquiry-based setting about DNA structure (individuality of DNA and different research states). In consequence, transferability of established frameworks was difficult and alternative categories were formed. This demonstrates that a specific biological context might play a decisive role in the students’ argumentation and could make it difficult to evaluate students’ understanding of models within a standardized framework. Earlier studies have also reported that students’ understanding of models might be influenced by educational levels and biological contexts [44,51].

We could clearly reproduce the original factor structure of two subscales of the SUMS (Students’ Understanding of Models in Science [38]). Furthermore, our pre-, post-, and retention-test design provided interesting insights into the influences of model-based strategies under the sub-aspects models as exact replicas (ER) and the changing nature of models (CNM). We observed a short- and mid-term decrease for the subscale ER, which indicates that many participants diverged from a naïve perception of models as simple copies. In contrast, a short- and mid-term increase for the subscale CNM points to a heightened awareness that new research findings could lead to changes and adaptations of existing scientific models. It is encouraging that even a one-day module has the power to improve students’ understanding towards a more scientific point of view. Therefore, we conclude that the context of DNA structure provides a fruitful example for combining hands-on experimentation with model-based learning in an out-of-school laboratory by offering access to an exciting path of discovery of molecular phenomena using student-centered hands-on tasks [22].

In summary, both student-centered approaches positively affect students’ understanding of models. However, creative modeling can be time-intensive both in preparation and in classroom implementation, and it might sometimes even result in students’ misconceptions [34], whereas learning through model viewing offers an alternative that can be realized much more easily in biology classrooms.

Nevertheless, there is still a dearth of investigations of innovative and working model applications in science classes. Moreover, future research needs to focus on the role of teachers, and to examine further facets of students’ understanding of models such as testing models and purpose of models. Even though our knowledge has come a long way towards successfully integrating models and modeling into science curricula, there are still some milestones to achieve until they are established educational practice.

Author Contributions

Conceptualization, J.M.; Formal analysis, J.M.; Funding acquisition, F.X.B.; Investigation, J.M.; Methodology, J.M.; Supervision, F.X.B.; Visualization, J.M.; Writing—original draft, J.M.; Writing—review & editing, F.X.B.

Funding

This study was supported by the ‘CREATIONS’ project funded by the European Union’s HORIZON 2020-SEAC-2014-1 Program (Grant: 665917) and by the ‘Qualitätsoffensive Lehrerbildung’ Program funded by the German Federal Ministry of Education and Research (BMBF; Grant: 01JA160). This publication was also funded by the German Research Foundation (DFG) and the University of Bayreuth in the APC funding program ‘Open Access Publishing’.

Acknowledgments

Special thanks to the teachers and students involved in this study for their cooperation.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results. This article reflects only the authors’ views. The European Union as well as the BMBF and the DFG are not liable for any use that might be made of information contained herein.

References

1. Graw, J. Genetik [Genetics], 6th ed.; Springer: Berlin/Heidelberg, Germany, 2015; ISBN 9783662448168.

2. Gilbert, J.K.; Boulter, C.; Rutherford, M. Models in explanations, Part 1: Horses for courses? Int. J. Sci. Educ. 1998, 20, 83–97.

3. Matynia, A.; Kushner, S.A.; Silva, A.J. Genetic approaches to molecular and cellular cognition: A focus on LTP and learning and memory. Ann. Rev. Genet. 2002, 36, 687–720.

4. Plomin, R.; Walker, S.O. Genetics and educational psychology. Br. J. Educ. Psychol. 2003, 73, 3–14.

5. Watson, J.D.; Crick, F.H.C. Molecular structure of nucleic acids. A structure for deoxyribose nucleic acid. Nature 1953, 171, 737–738.

6. Usher, S. Letters of Note. Correspondence Deserving of a Wider Audience; Canongate: Edinburgh, Scotland, 2013; ISBN 9781782112235.

7. Klug, A. Rosalind Franklin and the discovery of the structure of DNA. Nature 1968, 219, 808–810.

8. Elkin, L.O. Rosalind Franklin and the double helix. Phys. Today 2003, 56, 42–48.

9. Longden, B. Genetics—are there inherent learning difficulties? J. Biol. Educ. 1982, 16, 135–140.

10. Kindfield, A.C.H. Confusing chromosome number and structure: A common student error. J. Biol. Educ. 1991, 25, 193–200.

11. Euler, M. The Role of Experiments in the Teaching and Learning of Physics; IOS Press: Amsterdam, The Netherlands, 2015; pp. 175–221.

12. Scharfenberg, F.-J.; Bogner, F.X.; Klautke, S. Learning in a gene technology laboratory with educational focus: Results of a teaching unit with authentic experiments. Biochem. Mol. Biol. Edu. 2007, 35, 28–39.

13. Franke, G.; Bogner, F.X. Cognitive influences of students’ alternative conceptions within a hands-on gene technology module. J. Educ. Res. 2011, 104, 158–170.

14. Scharfenberg, F.-J.; Bogner, F.X. Teaching Gene Technology in an Outreach Lab: Students’ Assigned Cognitive load clusters and the clusters’ relationships to learner characteristics, laboratory variables, and cognitive achievement. Res. Sci. Educ. 2013, 43, 141–161.

15. Langheinrich, J.; Bogner, F.X. Computer-related self-concept: The impact on cognitive achievement. Stud. Educ. Eval. 2016, 50, 46–52.

16. Scharfenberg, F.-J.; Bogner, F.X. A new two-step approach for hands-on teaching of gene technology: Effects on students’ activities during experimentation in an outreach gene technology lab. Res. Sci. Educ. 2011, 41, 505–523.

17. Langheinrich, J.; Bogner, F.X. Student conceptions about the DNA structure within a hierarchical organizational level: Improvement by experiment- and computer-based outreach learning. Biochem. Mol. Biol. Edu. 2015, 43, 393–402.

18. Goldschmidt, M.; Scharfenberg, F.-J.; Bogner, F.X. Instructional efficiency of different discussion approaches in an outreach laboratory: Teacher-guided versus student-centered. J. Educ. Res. 2016, 109, 27–36.

19. Bielik, T.; Yarden, A. Promoting the asking of research questions in a high-school biotechnology inquiry-oriented program. Int. J. STEM Educ. 2016, 3, 397.

20. Ben-Nun, M.S.; Yarden, A. Learning molecular genetics in teacher-led outreach laboratories. J. Biol. Educ. 2009, 44, 19–25.

21. Meissner, B.; Bogner, F.X. Enriching students’ education using interactive workstations at a salt mine turned science center. J. Chem. Educ. 2011, 88, 510–515.

22. Mierdel, J.; Bogner, F.X. Simply inGEN(E)ious! How creative DNA-modeling can enrich classic hands-on experimentation. 2019; submitted.

23. Rotbain, Y.; Marbach-Ad, G.; Stavy, R. Effect of bead and illustrations models on high school students’ achievement in molecular genetics. J. Res. Sci. Teach. 2006, 43, 500–529.

24. Stull, A.T.; Gainer, M.J.; Hegarty, M. Learning by enacting: The role of embodiment in chemistry education. Learn. Instr. 2018, 55, 80–92.

25. Stull, A.T.; Hegarty, M. Model manipulation and learning: Fostering representational competence with virtual and concrete models. J. Educ. Psychol. 2016, 108, 509–527.

26. Ferk, V.; Vrtacnik, M.; Blejec, A.; Gril, A. Students’ understanding of molecular structure representations. Int. J. Sci. Educ. 2003, 25, 1227–1245.

27. Werner, S.; Förtsch, C.; Boone, W.; von Kotzebue, L.; Neuhaus, B.J. Investigating how German biology teachers use three-dimensional physical models in classroom instruction: A video study. Res. Sci. Educ. 2017, 1, 195.

28. Svoboda, J.; Passmore, C. The strategies of modeling in biology education. Sci. Educ. 2013, 22, 119–142.

29. Gilbert, J.K.; Justi, R. Learning Scientific Concepts from Modelling-Based Teaching. In Modelling-Based Teaching in Science Education. Models and Modeling in Science Education; Gilbert, J.K., Justi, R., Eds.; Springer: Cham, Switzerland, 2016; Volume 9.

30. Odenbaugh, J. Idealized, inaccurate but successful: A pragmatic approach to evaluating models in theoretical ecology. Biol. Philos. 2005, 20, 231–255.

31. Justi, R.; Gilbert, J.K. Science teachers’ knowledge about and attitudes towards the use of models and modelling in learning science. Int. J. Sci. Educ. 2002, 24, 1273–1292.

32. Oh, P.S.; Oh, S.J. What teachers of science need to know about models: An overview. Int. J. Sci. Educ. 2011, 33, 1109–1130.

33. Mierdel, J.; Bogner, F.X. Investigations of modellers and model viewers in an out-of-school gene-technology laboratory. 2019; submitted.

34. Mierdel, J.; Bogner, F.X. Is creativity, hands-on modeling and cognitive learning gender-dependent? Think. Skills Creat. 2019, 31, 91–102.

35. Runco, M.A.; Acar, S.; Cayirdag, N. A closer look at the creativity gap and why students are less creative at school than outside of school. Think. Skills Creat. 2017, 24, 242–249.

36. NGSS Lead States. Next Generation Science Standards: For States, by States; The National Academies Press: Washington, DC, USA, 2013.

37. Halloun, I.A. Mediated modeling in science education. Sci. Educ. 2007, 16, 653–697.

38. Treagust, D.F.; Chittleborough, G.D.; Mamiala, T.L. Students’ understanding of the role of scientific models in learning science. Int. J. Sci. Educ. 2002, 24, 357–368.

39. Grosslight, L.; Unger, C.; Jay, E.; Smith, C.L. Understanding models and their use in science: Conceptions of middle and high school students and experts. J. Res. Sci. Teach. 1991, 28, 799–822.

40. Justi, R.; Gilbert, J.K. Teachers’ views on the nature of models. Int. J. Sci. Educ. 2003, 25, 1369–1386.

41. Grünkorn, J.; Upmeier zu Belzen, A.; Krüger, D. Assessing students’ understandings of biological models and their use in science to evaluate a theoretical framework. Int. J. Sci. Educ. 2014, 36, 1651–1684.

42. Gogolin, S.; Krüger, D. Students’ understanding of the nature and purpose of models. J. Res. Sci. Teach. 2018, 55, 1313–1338.

43. Chittleborough, G.D.; Treagust, D.F. Why models are advantageous to learning science. Educ. Quím. 2009, 20, 12–17.

44. Krell, M.; Upmeier zu Belzen, A.; Krüger, D. Students’ understanding of the purpose of models in different biological contexts. Int. J. Biol. Educ. 2012, 2, 1–34.

45. Passmore, C.M.; Svoboda, J. Exploring opportunities for argumentation in modelling classrooms. Int. J. Sci. Educ. 2012, 34, 1535–1554.

46. Günther, S.; Fleige, J.; Upmeier zu Belzen, A.; Krüger, D. Interventionsstudie Mit Angehenden Lehrkräften zur Förderung von Modellkompetenz im Unterrichtsfach Biologie [Intervention Study with Pre-Service Teachers for Fostering Model Competence in Biology Education]. In Entwicklung Von Professionalität Pädagogischen Personals; Gräsel, C., Trempler, K., Eds.; Springer: Wiesbaden, Germany, 2017; pp. 215–236.

47. Justi, R.S.; Gilbert, J.K. Modelling, teachers’ views on the nature of modelling, and implications for the education of modellers. Int. J. Sci. Educ. 2002, 24, 369–387.

48. Krell, M.; Walzer, C.; Hergert, S.; Krüger, D. Development and application of a category system to describe pre-service science teachers’ activities in the process of scientific modelling. Res. Sci. Educ. 2017, 1–27.

49. Treagust, D.F.; Chittleborough, G.D.; Mamiala, T.L. Students’ understanding of the descriptive and predictive nature of teaching models in organic chemistry. Res. Sci. Educ. 2004, 34, 1–20.

50. Krell, M.; Upmeier zu Belzen, A.; Krüger, D. Students’ levels of understanding models and modelling in biology: Global or aspect-dependent? Res. Sci. Educ. 2014, 44, 109–132.

51. Upmeier zu Belzen, A.; Krüger, D. Modellkompetenz im Biologieunterricht [Model competence in biology education]. Die Zeitschrift für Didaktik der Naturwissenschaften 2010, 16, 41–57.

52. Wen-Yu Lee, S.; Chang, H.-Y.; Wu, H.-K. Students’ views of scientific models and modeling: Do representational characteristics of models and students’ educational levels matter? Res. Sci. Educ. 2017, 47, 305–328.

53. Ainsworth, S. DeFT: A conceptual framework for considering learning with multiple representations. Learn. Instr. 2006, 16, 183–198.

54. ISB. Staatsinstitut für Schulqualität und Bildungsforschung—Bavarian Syllabus Gymnasium G8; Kastner: Wolnzach, Germany, 2007.

55. World Health Organization. Declaration of Helsinki. Bull. World Health Organ. 2001, 79, 373–374.

56. Mayring, P. Qualitative Inhaltsanalyse: Grundlagen und Techniken (Beltz Pädagogik) Taschenbuch 2; Beltz: Weinheim, Germany, 2015.

57. Cohen, J. Weighted kappa: Nominal scale agreement provision for scaled disagreement or partial credit. Psychol. Bull. 1968, 70, 213–220.

58. Landis, R.J.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.

59. Döring, N.; Bortz, J. Forschungsmethoden und Evaluation in den Sozial-und Humanwissenschaften [Research Methods and Evaluation in Social and Human Sciences], 5th ed.; Springer: Berlin/Heidelberg, Germany, 2016.

60. Kline, P. The Handbook of Psychological Testing, 2nd ed.; Routledge: London, UK, 2000.

61. Hair, J.; Black, W.; Babin, B.; Anderson, R.; Tatham, R. Multivariate Data Analysis, 6th ed.; Pearson Educational: Cranbury, NJ, USA, 2006.

62. Field, A.P. Discovering Statistics Using IBM SPSS Statistics (and Sex and Drugs and Rock’ n’ Roll), 4th ed.; SAGE: London, UK, 2013.

63. Fisher, R.A. On the interpretation of chi square from contingency tables, and the calculation of P. J. Royal Stat. Soc. 1922, 85, 87–94.

64. Pearson, K. On the criterion that a given system of deviations from the probable in the case of a correlated system of variables is such that it can be reasonably supposed to have arisen from random sampling. Philos. Mag. 1900, 50, 157–175.

65. Kaiser, H.F. A second generation little jiffy. Psychometrika 1970, 35, 401–415.

66. Kaiser, H.F. Consequently perhaps. Measurement 1960, XX, 141–151.

67. Bühner, M.; Ziegler, M. Statistik für Psychologen und Sozialwissenschaftler [Statistics for Psychologists and Social Scientists], 3rd ed.; Pearson Studium: München, Germany, 2012; ISBN 9783827372741.

68. Field, A.P. Discovering Statistics Using SPSS (and Sex and Drugs and Rock’ n’ Roll), 3rd ed.; SAGE: London, UK, 2009.

69. KMK. Beschlüsse der Kultusministerkonferenz—Bildungsstandards im Fach Biologie für den Mittleren Bildungsabschluss [Resolution of the Standing Conference of the Ministers of Education and Cultural Affairs of the Länder in the Federal Republic of Germany—Standards of Biology Education for Secondary School]; Luchterhand: Munich, Germany, 2005.

70. Louca, L.T.; Zacharia, Z.C. Modeling-based learning in science education: Cognitive, metacognitive, social, material and epistemological contributions. Educ. Rev. 2012, 64, 471–492.

71. Gobert, J.D.; Pallant, A. Fostering students’ epistemologies of models via authentic model-based tasks. J. Sci. Educ. Technol. 2004, 13, 7–22.

72. Dori, Y.J.; Barak, M. Virtual and physical molecular modeling: Fostering model perception and spatial understanding. Educ. Technol. Soc. 2001, 4, 61–74.

73. Mahr, B. Information science and the logic of models. Softw. Syst. Model. 2009, 8, 365–383.

74. Gilbert, J.K. Models and Modelling: Routes to More Authentic Science Education. Int. J. Sci. Math. Educ. 2004, 2, 115–130.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).