An Analysis of the Features of Words That Influence Vocabulary Difficulty

Abstract

1. An Analysis of the Features of Words That Influence Vocabulary Difficulty

2. Review of Research

2.1. Role in Language

2.2. Role in the Lexicon

2.3. Role in Students’ Existing Knowledge

3. Study One: Words on an Assessment of Core Vocabulary

3.1. Method

3.1.1. The Assessment

3.1.2. Variables for Analysis

3.2. Sample of Students

3.3. Data Analysis

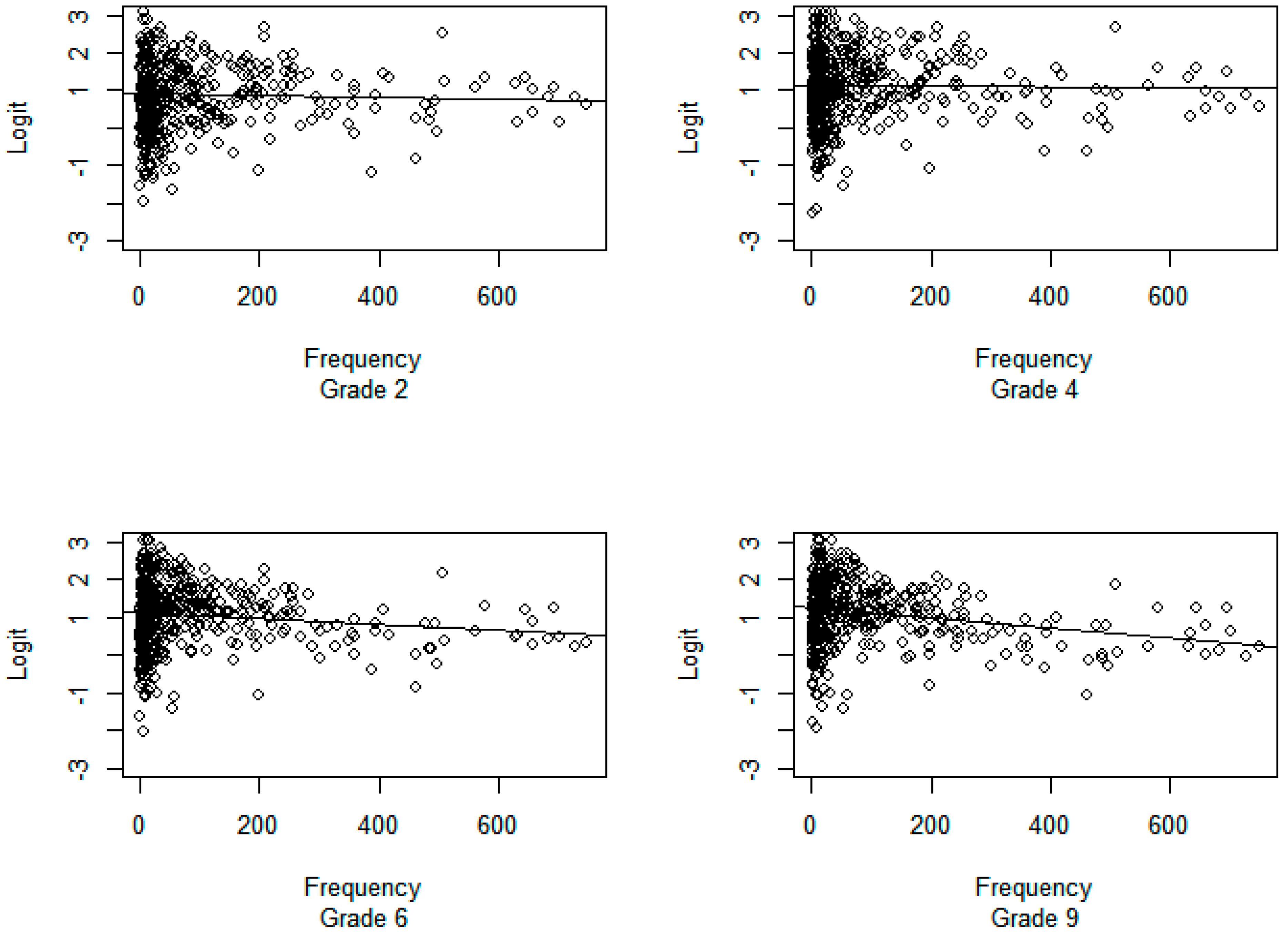

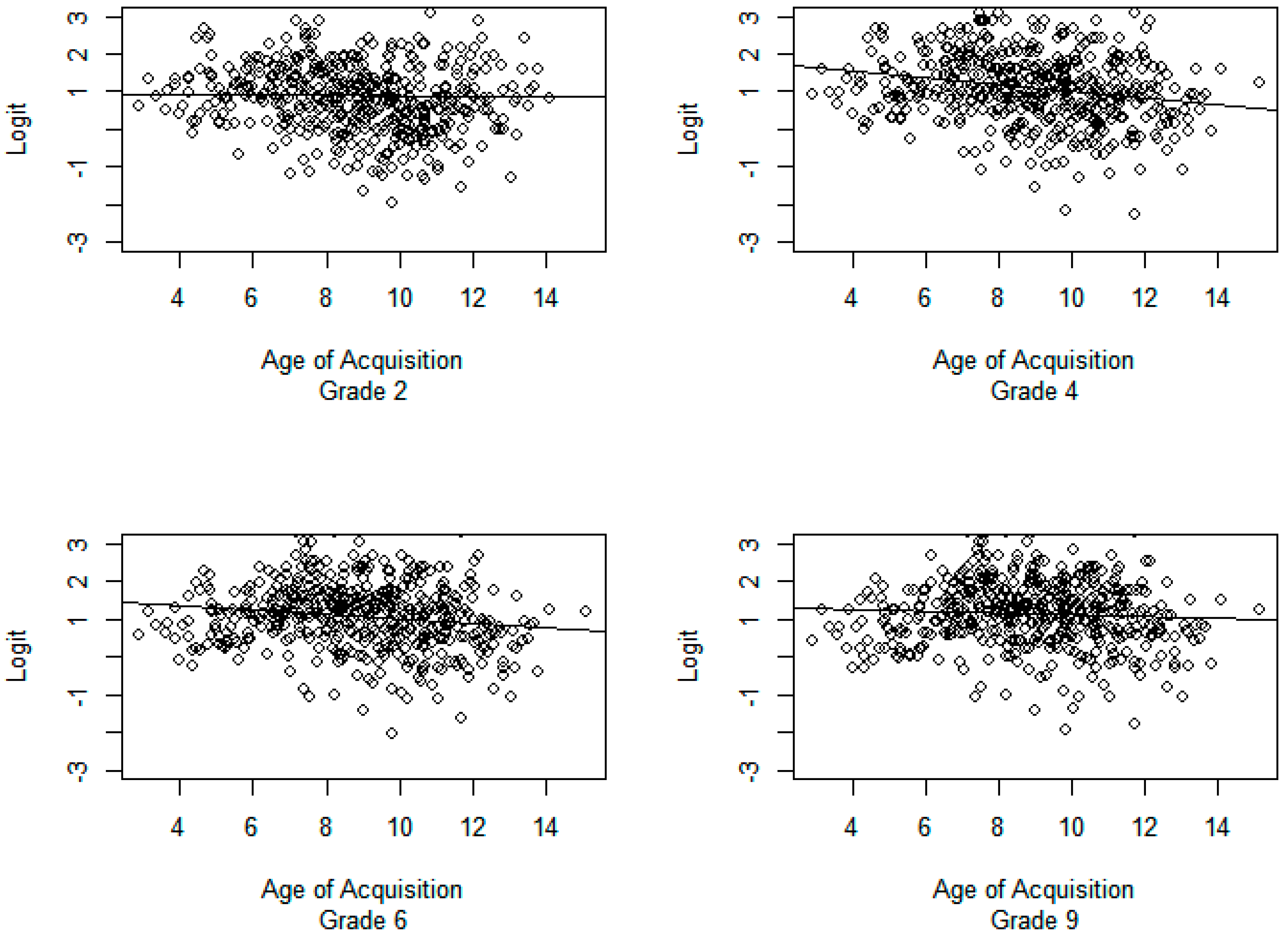

3.4. Results

3.4.1. Word Characteristics: Overall

3.4.2. Word Characteristics: Grade Bands

3.5. Summary

4. Study Two

4.1. Method

VASE Vocabulary Assessment

4.2. Analysis and Results

4.3. Summary

5. Overall Discussion

6. Implications for Curriculum Design and Instruction

7. Limitations and Future Research

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Davis, F.B. Two new measures of reading ability. J. Educ. Psychol. 1942, 33, 365–372.

- Hoff, E.; Tian, C. Socioeconomic status and cultural influences on language. J. Commun. Disord. 2005, 38, 271–278.

- Gates, A.I. The word recognition ability and the reading vocabulary of second- and third-grade children. Read. Teach. 1962, 15, 443–448.

- Stallman, A.; Commeyras, M.; Kerr, B.; Reimer, K.; Jimenez, R.; Hartman, D.; Pearson, P.D. Are “new” words really new? Read. Res. Instr. 1989, 29, 12–29.

- Hiebert, E.H. The words we teach, the words we don’t: An examination of the taught and rare vocabularies of core reading programs. Paper Presented at the Annual Meeting of the American Educational Research Association, Philadelphia, PA, USA, 7 April 2014. Available online: https://www.academia.edu/7858492/The_Words_We_Teach_The_Words_We_Dont_An_examination_of_the_taught_and_rare_vocabularies_of_core_reading_programs (accessed on 27 November 2018).

- Frankenberg-Garcia, A.; Flowerdew, L.; Aston, G. (Eds.) New Trends in Corpora and Language Learning; Bloomsbury Publishing: New York, NY, USA, 2013.

- Mugglestone, L. (Ed.) The Oxford History of English; Oxford University Press: Oxford, UK, 2012.

- Kuperman, V.; Stadthagen-Gonzalez, H.; Brysbaert, M. Age-of-acquisition ratings for 30,000 English words. Behav. Res. Methods 2012, 44, 978–990.

- U.S. Department of Education. Academic Performance and Outcomes for English Learners: Performance on National Assessments and On-Time Graduation Rates; U.S. Department of Education: Washington, DC, USA, 2017. Available online: https://www2.ed.gov/datastory/el-outcomes/index.html (accessed on 5 December 2018).

- Nagy, W.E.; Scott, J.A. Vocabulary processes. In Handbook of Reading Research; Kamil, M., Mosenthal, P., Pearson, P.D., Barr, R., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2000; Volume 3, pp. 269–284.

- McKeown, M.; Deane, P.; Scott, J.; Krovetz, R.; Lawless, R. Vocabulary Assessment to Support Instruction: Building Rich Word-Learning Experiences; Guilford Press: New York, NY, USA, 2017.

- Pearson, P.D.; Hiebert, E.H.; Kamil, M.L. Vocabulary assessment: What we know and what we need to learn. Read. Res. Q. 2007, 42, 282–296.

- Schmitt, N. Size and depth of vocabulary knowledge: What the research shows. Lang. Learn. 2014, 64, 913–951.

- Nagy, W.E.; Hiebert, E.H. Toward a theory of word selection. In Handbook of Reading Research; Kamil, M.L., Pearson, P.D., Moje, E.B., Afflerbach, P.P., Eds.; Longman: New York, NY, USA, 2011; Volume 4, pp. 388–404.

- Balota, D.A.; Yap, M.J.; Cortese, M.J. Visual word recognition: The journey from features to meaning (a travel update). Handb. Psycholinguist. 2006, 2, 285–375.

- Nagy, W.; Anderson, R.C.; Schommer, M.; Scott, J.A.; Stallman, A.C. Morphological families in the internal lexicon. Read. Res. Q. 1989, 24, 262–282.

- Nerlich, B.; Clarke, D.D. Ambiguities we live by: Towards a pragmatics of polysemy. J. Pragmat. 2001, 33, 1–20.

- Cervetti, G.N.; Hiebert, E.H.; Pearson, P.D.; McClung, N.A. Factors that influence the difficulty of science words. J. Lit. Res. 2015, 47, 153–185.

- Sullivan, J. Developing Knowledge of Polysemous Vocabulary. Ph.D. Thesis, University of Waterloo, Waterloo, ON, Canada, 2006.

- Millis, M.L.; Button, S.B. The effect of polysemy on lexical decision time: Now you see it, now you don’t. Mem. Cognit. 1989, 17, 141–147.

- Carroll, J.B.; Davies, P.; Richman, B. The American Heritage Word Frequency Book; Houghton Mifflin: Boston, MA, USA, 1971.

- Dockrell, J.E.; Braisby, N.; Best, R.M. Children’s acquisition of science terms: Simple exposure is insufficient. Learn. Instr. 2007, 17, 577–594.

- Marzano, R.J.; Marzano, J.S. A Cluster Approach to Elementary Vocabulary Instruction; International Reading Association: Newark, DE, USA, 1988.

- Jenkins, J.R.; Dixon, R. Vocabulary learning. Contemp. Educ. Psychol. 1983, 8, 237–260.

- Nagy, W.E.; Scott, J.A. Word schemas: Expectations about the form and meaning of new words. Cognit. Instr. 1990, 7, 105–127.

- Buchanan, T.W.; Etzel, J.A.; Adolphs, R.; Tranel, D. The influence of autonomic arousal and semantic relatedness on memory for emotional words. Int. J. Psychophysiol. 2006, 61, 26–33.

- Erten, İ.H.; Tekin, M. Effects on vocabulary acquisition of presenting new words in semantic sets versus semantically unrelated sets. System 2008, 36, 407–422.

- Venezky, R.L. The American Way of Spelling: The Structure and Origins of American English Orthography; Guilford Press: New York, NY, USA, 1999.

- Nagy, W.E.; Anderson, R.C. How many words are there in printed school English? Read. Res. Q. 1984, 19, 304–330.

- Carlisle, J.F.; Stone, C. Exploring the role of morphemes in word reading. Read. Res. Q. 2005, 40, 428–449.

- Zeno, S.M.; Ivens, S.H.; Millard, R.T.; Duvvuri, R. The Educator’s Word Frequency Guide; Touchstone Applied Science Associates Inc.: Brewster, MA, USA, 1995.

- Toglia, M.P.; Battig, W.F. Handbook of Semantic Word Norms; Lawrence Erlbaum: Mahwah, NJ, USA, 1978.

- Freebody, P.; Anderson, R.C. Effects of vocabulary difficulty, text cohesion, and schema availability on reading comprehension. Read. Res. Q. 1983, 18, 277–294.

- Morrison, C.M.; Ellis, A.W. Roles of word frequency and age of acquisition in word naming and lexical decision. J. Exp. Psychol. Learn. Mem. Cognit. 1995, 21, 116.

- Bergman, C.B.; Martelli, M.; Burani, C.; Pelli, D.; Zoccolotti, P. How the word length effect develops with age. J. Vis. 2006, 6, 999.

- Miller, L.T.; Lee, C.J. Construct validation of the Peabody Picture Vocabulary Test—Revised: A structural equation model of the acquisition order of words. Psychol. Assess. 1993, 5, 438.

- Biemiller, A. Words Worth Teaching: Closing the Vocabulary Gap; McGraw-Hill SRA: Columbus, OH, USA, 2010.

- Dale, E.; O’Rourke, J. The Living Word Vocabulary; World Book-Childcraft International: Chicago, IL, USA, 1981.

- Landauer, T.K.; Dumais, S.T. A solution to Plato’s problem: The latent semantic analysis theory of acquisition, induction, and representation of knowledge. Psychol. Rev. 1997, 104, 211.

- Lund, K.; Burgess, C. Producing high-dimensional semantic spaces from lexical co-occurrence. Behav. Res. Methods Instrum. Comput. 1996, 28, 203–208.

- Miller, G. WordNet: An Electronic Lexical Database; MIT Press: Cambridge, MA, USA, 1998.

- Paivio, A.; Yuille, J.; Madigan, S.A. Concreteness, imagery and meaningfulness values for 925 nouns. J. Exp. Psychol. 1968, 76, 1–25.

- Altarriba, J.; Bauer, L.M.; Benvenuto, C. Concreteness, context-availability, and imageability ratings and word associations for abstract, concrete, and emotion words. Behav. Res. Methods Instrum. Comput. 1999, 31, 578–602.

- De Groot, A.; Keijzer, R. What is hard to learn is easy to forget: The roles of word concreteness, cognate status, and word frequency in foreign language vocabulary learning and forgetting. Lang. Learn. 2000, 50, 1–56.

- Varela, F.J.; Thompson, E.T.; Rosch, E. The Embodied Mind: Cognitive Science and Human Experience; The MIT Press: Cambridge, MA, USA, 1991.

- Thorndike, E.L. The Teacher’s Word Book; Bureau of Publications, Teachers College, Columbia University: New York, NY, USA, 1921.

- Thorndike, E.L. A Teacher’s Word Book of the 20,000 Words; Bureau of Publications, Teachers College, Columbia University: New York, NY, USA, 1932.

- Thorndike, E.L.; Lorge, I. The Teacher’s Word Book of 30,000 Words; Bureau of Publications, Teachers College, Columbia University: New York, NY, USA, 1944.

- Elson, W.H.; Gray, W.S. The Elson Basic Readers; Scott Foresman: Chicago, IL, USA, 1931.

- Gates, A.I. The Work-Play Books; Macmillan: New York, NY, USA, 1930.

- Hiebert, E.H.; Raphael, T.E. Psychological perspectives on literacy and extensions to educational practice. In Handbook of Educational Psychology; Berliner, D.C., Calfee, R.C., Eds.; Macmillan: New York, NY, USA, 1996; pp. 550–602.

- Zipf, G.K. The Psychology of Language; Houghton-Mifflin: Boston, MA, USA, 1935.

- Kučera, H.; Francis, W. Computational Analysis of Present Day American English; Brown University Press: Providence, RI, USA, 1967.

- Davies, M. The 385+ million word Corpus of Contemporary American English (1990–2008+): Design, architecture, and linguistic insights. Int. J. Corpus Linguist. 2009, 14, 159–190.

- Leech, G.; Rayson, P. Word Frequencies in Written and Spoken English: Based on the British National Corpus; Routledge: New York, NY, USA, 2014.

- Hiebert, E.H. Standards, assessment, and text difficulty. In What Research Has to Say about Reading Instruction, 3rd ed.; Farstrup, A.E., Samuels, S.J., Eds.; International Reading Association: Newark, DE, USA, 2002; pp. 337–369.

- Dolch, E.W. A basic sight vocabulary. Elem. Sch. J. 1936, 36, 456–460.

- Fry, E.B. The Vocabulary Teacher’s Book of Lists; Jossey-Bass: Hoboken, NJ, USA, 2004.

- Clay, M. Becoming Literate: The Construction of Inner Control; Heinemann: Portsmouth, NH, USA, 1991.

- Stahl, S.; Heubach, K. Fluency-oriented reading instruction. J. Lit. Res. 2005, 37, 25–60.

- Reading Excellence Act. 1998. Available online: www.gpo.gov/fdsys/pkg/BILLS-105hr2614eas/pdf/BILLS-105hr2614eas.pdf (accessed on 1 January 2019).

- Hiebert, E.H. In pursuit of an effective, efficient vocabulary curriculum for the elementary grades. In The Teaching and Learning of Vocabulary: Bringing Scientific Research to Practice; Hiebert, E.H., Kamil, M., Eds.; LEA: Mahwah, NJ, USA, 2005; pp. 243–263.

- Hiebert, E.H. Core vocabulary and the challenge of complex text. In Quality Reading Instruction in the Age of Common Core Standards; Neuman, S.B., Gambrell, L.B., Eds.; International Reading Association: Newark, DE, USA, 2013; pp. 149–161.

- National Governors Association Center for Best Practices & Council of Chief State School Officers. Common Core State Standards for English Language Arts & Literacy in History/Social Studies, Science, and Technical Subjects; Appendix A; National Governors Association Center for Best Practices & Council of Chief State School Officers: Washington, DC, USA, 2010; Available online: www.corestandards.org/assets/Appendix_A.pdf (accessed on 1 January 2019).

- Reading Plus. InSight Assessment; Reading Plus: Winooski, VT, USA, 2016.

- Vocabulary Innovations in Education Consortium. Vocabulary Assessment Study in Education; August 2014. Available online: http://vineconsortium.org/vase/ (accessed on 12 August 2018).

- Hiebert, E.H. Word Zone Profiler; TextProject: Santa Cruz, CA, USA, 2012.

- Becker, W.C.; Dixon, R.; Anderson-Inman, L. Morphographic and Root Word Analysis of 26,000 High Frequency Words; University of Oregon Follow Through Project, College of Education: Eugene, OR, USA, 1980.

- Brysbaert, M.; Warriner, A.B.; Kuperman, V. Concreteness ratings for 40 thousand generally known English word lemmas. Behav. Res. Methods 2014, 46, 904–911.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2016; Available online: https://www.R-project.org/ (accessed on 1 January 2019).

- Bates, D.; Maechler, M.; Bolker, B.; Walker, S. Fitting linear mixed-effects models using lme4. J. Stat. Softw. 2015, 67, 1–48.

- Cortese, M.J.; Schock, J. Imageability and age of acquisition effects in disyllabic word recognition. Q. J. Exp. Psychol. 2013, 66, 946–972.

- Monaghan, P. Age of acquisition predicts rate of lexical evolution. Cognition 2014, 133, 530–534.

- Carlisle, J.F.; Katz, L.A. Effects of word and morpheme familiarity on reading of derived words. Read. Writ. Interdiscip. J. 2006, 19, 669–693.

- Dijkstra, T.; Martín, F.M.; Schulpen, B.; Schreuder, R.; Baayen, R.H. A roommate in cream: Morphological family size effects on interlingual homograph recognition. Lang. Cognit. Process. 2007, 20, 7–41.

- California Department of Education. 29 June 2018. Facts about English Learners in California. Available online: https://www.cde.ca.gov/ds/sd/cb/cefelfacts.asp (accessed on 12 August 2018).

- Laufer, B. Why are some words more difficult than others? Some intralexical factors that affect the learning of words. Int. Rev. Appl. Linguist. 1990, 28, 293–307.

- Crossley, S.; Salsbury, T.; McNamara, D. Measuring L2 lexical growth using hypernymic relationships. Lang. Learn. 2009, 59, 307–334.

- Salsbury, T.; Crossley, S.A.; McNamara, D.S. Psycholinguistic word information in second language oral discourse. Second Lang. Res. 2011, 27, 343–360.

- Graves, M.F.; Ringstaff, C.; Li, L.; Flynn, K. Effects of teaching upper elementary grade students to use Word Learning Strategies. Read. Psychol. 2018, 1–20.

- Scott, J.A.; Flinspach, S.L.; Vevea, J.L.; Castaneda, R. Vocabulary Knowledge as a Multidimensional Concept: A Six Factor Model; Poster at the Annual Meeting of the Society for the Scientific Study of Reading; Hapuna Beach: Waimea, HI, USA, 2015.

- Thissen, D.; Steinberg, L.; Mooney, J. Trace lines for testlets: A use of multiple-categorical-response models. J. Educ. Meas. 1989, 26, 247–260.

- Samejima, F. The graded response model. In Handbook of Modern Item Response Theory; van der Linden, W.J., Hambleton, R.K., Eds.; Springer: New York, NY, USA, 1996; pp. 85–100.

- Graves, M.F.; August, D.; Mancilla-Martinez, J. Teaching Vocabulary to English Language Learners; Teachers College Press: New York, NY, USA, 2012.

- Gardner, D.; Davies, M. A new academic vocabulary list. Appl. Linguist. 2013, 35, 305–327.

- Nagy, W.; Townsend, D. Words as tools: Learning academic vocabulary as language acquisition. Read. Res. Q. 2012, 47, 91–108.

- Landauer, T.K.; Kireyev, K.; Panaccione, C. Word maturity: A new metric for word knowledge. Sci. Stud. Read. 2011, 15, 92–108.

- Gehsmann, K.M.; Spichtig, A.N.; Pascoe, J.P.; Ferrara, J.D. Comparing the construct of reading proficiency across five commonly used reading assessments: Implications for policy and practice. Paper Presented at the 67th Annual Conference of the Literacy Research Association, Tampa, FL, USA, 29 November 2017. Available online: https://www.researchgate.net/publication/321731743_Comparing_the_Construct_of_Reading_Proficiency_Across_Five_Commonly_Used_Reading_Assessments_Implications_for_Policy_and_Practice_Literacy_Research_Association_December_2017 (accessed on 1 January 2019).

- Wright, T.S.; Cervetti, G.N. A systematic review of the research on vocabulary instruction that impacts text comprehension. Read. Res. Q. 2017, 52, 203–226.

- Ceci, S.J.; Papierno, P.B. The rhetoric and reality of gap closing: When the “have-nots” gain but the “haves” gain even more. Am. Psychol. 2005, 60, 149–160.

- Scott, J.A.; Lubliner, S.; Hiebert, E.H. Constructs underlying word selection and assessment tasks in the archival research on vocabulary instruction. In 55th Yearbook of the National Reading Conference; Hoffman, J.V., Schallert, D.L., Fairbanks, C.M., Worthy, J., Maloch, B., Eds.; NRC: Oak Creek, WI, USA, 2006; pp. 264–275.

- Brenner, D.; Hiebert, E.H.; Tompkins, R. How much and what are third graders reading? In Reading More, Reading Better: Solving Problems in the Teaching of Literacy; Hiebert, E.H., Ed.; Guilford Press: New York, NY, USA, 2009; pp. 118–140.

- Swanson, E.; Wanzek, J.; McCulley, L.; Stillman-Spisak, S.; Vaughn, S.; Simmons, D.; Fogarty, M.; Hairrell, A. Literacy and text reading in middle and high school social studies and English language arts classrooms. Read. Writ. Q. 2016, 32, 199–222.

| Nagy/Hiebert (2011) Criteria | Criterion | Study Measure |
|---|---|---|
| Role in Language | Frequency: How often does this word occur in text? | Frequency, polysemy, part of speech |
| | Dispersion: How does the frequency of a word differ by genre, topic, or subject area? | Dispersion (domain specificity) |
| Role in the Lexicon | Morphological relatedness: What is the size of the morphological family? | Size of morphological family |
| | Semantic relatedness: How is this word related to other words that students know or need to know? | |
| Role in Students’ Existing Knowledge | Familiarity: Is this word already known to students and, if so, to what degree? | Age of acquisition, length in letters and syllables |
| | Conceptual difficulty: To what extent can the meaning of this word be explained to students in terms of words, concepts, and experiences with which they are already familiar? | Concreteness–abstractness |

| Assessment Level | Frequency (U) | Morphological Family Size | Dispersion | Word Length (Letters) | Word Length (Syllables) | Polysemy | Parts of Speech | Age of Acquisition | Concreteness | Example |
|---|---|---|---|---|---|---|---|---|---|---|
| InSight 1 | 449.10 (468.80) | 742.7 (339.3) | 0.90 (0.01) | 5.48 (0.71) | 1.68 (0.71) | 8.8 (8.5) | 1.88 (0) | 5.21 (1.2) | 3.15 (0.19) | words |
| InSight 2 | 222.40 (6.36) | 428.58 (360.91) | 0.90 (0.10) | 5.90 (0.71) | 1.85 (0) | 12.13 (0.75) | 1.80 (0) | 6.40 (0.52) | 3.06 (0.01) | strong |
| InSight 3 | 134.68 (36.06) | 229.74 (254.79) | 0.89 (0.04) | 7.28 (0) | 2.60 (0.71) | 6.90 (12.0) | 1.40 (0.71) | 7.56 (0.69) | 2.65 (0.12) | minutes |
| InSight 4 | 80.83 (22.63) | 189.05 (139.90) | 0.86 (0.16) | 6.53 (2.12) | 2.28 (0) | 6.53 (0.71) | 1.45 (0) | 7.90 (1.53) | 2.72 (0.03) | private |
| InSight 5 | 52.43 (25.46) | 205.64 (42.87) | 0.86 (0.01) | 7.28 (2.12) | 2.50 (0.71) | 6.93 (6.36) | 1.60 (0.71) | 8.56 (2.91) | 2.49 (1.43) | opinion |
| InSight 6 | 35.10 (9.19) | 141.83 (1.43) | 0.87 (0.06) | 8.33 (1.41) | 2.8 (0) | 5.98 (2.83) | 1.35 (0.71) | 8.60 (2.40) | 2.46 (0.57) | selection |
| InSight 7 | 24.30 (9.89) | 90.37 (80.20) | 0.83 (0.17) | 8.73 (1.41) | 3.1 (0.71) | 5.43 (0.71) | 1.25 (0.71) | 9.63 (0.84) | 2.46 (0.94) | challenge |
| InSight 8 | 16.23 (3.54) | 60.13 (0.06) | 0.82 (0.06) | 8.30 (1.41) | 2.93 (0.71) | 4.1 (3.92) | 1.23 (0.71) | 9.49 (0.27) | 2.51 (0.28) | isolated |
| InSight 9 | 10.30 (8.49) | 54.23 (148.58) | 0.80 (0.14) | 8.33 (0) | 2.78 (1.41) | 4.50 (4.24) | 1.38 (0.71) | 9.98 (2.84) | 2.28 (0.04) | literally |
| InSight 10 | 7.83 (8.49) | 34.39 (10.98) | 0.74 (0.08) | 8.65 (2.12) | 3.00 (1.41) | 3.80 (1.41) | 1.28 (0.71) | 10.41 (3.01) | 2.43 (0.02) | corporate |
| InSight 11 | 4.95 (6.36) | 37.84 (3.97) | 0.67 (0.18) | 8.80 (0.71) | 3.18 (0.71) | 3.80 (1.41) | 1.3 (0) | 11.11 (0.47) | 2.15 (0.41) | magnitude |
| InSight 12 | 2.14 (1.76) | 9.30 (15.73) | 0.63 (0.25) | 9.05 (0.71) | 3.23 (0.71) | 2.93 (0) | 1.13 (0) | 11.96 (0.44) | 2.12 (0.66) | negotiate |
| VASE | 20.64 (40.62) | 79.21 (126.27) | 0.65 (0.20) | 7.79 (2.14) | 2.60 (0.97) | 3.75 (3.42) | 1.35 (0.54) | 9.4 (1.82) | 3.12 (0.92) | sequence |
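The InSight rows above show frequency (U) falling and age of acquisition rising as the assessment level increases. As a quick, hedged check on how consistent that ordering is (this is an illustration, not an analysis reported for the study), Spearman's rank correlation between assessment level and each column of means can be computed directly from the table. The sketch uses the no-ties formula, which applies here because neither column contains tied values; `ranks` and `spearman_rho` are hypothetical helper names.

```python
# Mean values for InSight levels 1-12, copied from the table above
levels = list(range(1, 13))
mean_u = [449.10, 222.40, 134.68, 80.83, 52.43, 35.10,
          24.30, 16.23, 10.30, 7.83, 4.95, 2.14]
mean_aoa = [5.21, 6.40, 7.56, 7.90, 8.56, 8.60,
            9.63, 9.49, 9.98, 10.41, 11.11, 11.96]


def ranks(values):
    """Assign ranks 1..n in ascending order (assumes no tied values)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman_rho(x, y):
    """Spearman's rho via the no-ties formula 1 - 6*sum(d^2) / (n(n^2 - 1))."""
    n = len(x)
    rx, ry = ranks(x), ranks(y)
    d_squared = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


print(round(spearman_rho(levels, mean_u), 3))    # -1.0
print(round(spearman_rho(levels, mean_aoa), 3))  # 0.993
```

Frequency decreases strictly across all twelve levels (ρ = −1), while the age-of-acquisition means are monotone except for a single swap between levels 7 and 8, which is why ρ falls just short of 1.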

| Model Comparison | AIC | BIC | Log-Likelihood | Deviance | χ² | df | p-Value |
|---|---|---|---|---|---|---|---|
| M0: Null | 4047.30 | 4064.00 | –2020.70 | 4041.30 | - | - | - |
| M1: +Characteristics | 3476.00 | 3542.70 | –1726.00 | 3452.00 | 589.32 | 9 | p < 0.001 |
| M2: +Grade Level | 3354.30 | 3437.70 | –1662.20 | 3324.30 | 127.66 | 3 | p < 0.001 |
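The χ² column in the model-comparison table is a likelihood-ratio test: the drop in deviance (−2 log-likelihood) between nested models, referred to a χ² distribution whose df equals the number of added parameters. The sketch below recomputes those statistics and the AIC column from the reported deviances. It is written in Python for illustration only; the reference list cites R and lme4, and this is not the authors' code. The parameter counts (3 for the null model: intercept, residual variance, and random-intercept variance; then +9 word characteristics; then +3 grade indicators) are an assumption, chosen because they reproduce the reported AIC values. `chi2_sf` is a hypothetical helper that uses the closed-form series for integer df rather than a library call.

```python
import math

# Deviances copied from the model-comparison table above
DEVIANCE = {"Null": 4041.30, "+Characteristics": 3452.00, "+Grade Level": 3324.30}
# Assumed number of estimated parameters in each nested model
N_PARAMS = {"Null": 3, "+Characteristics": 12, "+Grade Level": 15}


def normal_sf(z):
    """Upper-tail probability of the standard normal distribution."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def chi2_sf(x, df):
    """P(X > x) for a chi-square variable with a positive integer df."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{r=0}^{df/2 - 1} (x/2)^r / r!
        term = math.exp(-x / 2)
        total = term
        for r in range(1, df // 2):
            term *= (x / 2) / r
            total += term
        return total
    # Odd df: 2*Q(chi) + 2*phi(chi) * sum_{r=1}^{(df-1)/2} chi^(2r-1) / (1*3*...*(2r-1))
    chi = math.sqrt(x)
    pdf = math.exp(-x / 2) / math.sqrt(2 * math.pi)
    term = chi  # the r = 1 term
    total = 0.0
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return 2 * normal_sf(chi) + 2 * pdf * total


def lrt(dev_reduced, dev_full, df):
    """Likelihood-ratio statistic and p-value for two nested models."""
    stat = dev_reduced - dev_full
    return stat, chi2_sf(stat, df)


def aic(deviance, n_params):
    """Akaike information criterion: deviance + 2k."""
    return deviance + 2 * n_params


for reduced, full in [("Null", "+Characteristics"), ("+Characteristics", "+Grade Level")]:
    df = N_PARAMS[full] - N_PARAMS[reduced]
    stat, p = lrt(DEVIANCE[reduced], DEVIANCE[full], df)
    print(f"{reduced} vs {full}: chi2 = {stat:.2f}, df = {df}, p = {p:.3g}")
for name, dev in DEVIANCE.items():
    print(f"AIC({name}) = {aic(dev, N_PARAMS[name]):.2f}")
```

The recomputed χ² values (589.30 and 127.70) differ from the reported ones only in the second decimal place, which is consistent with the deviances having been rounded before they were tabulated. BIC is not recomputed because it also requires the number of observations.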

| Effect | M0: Null Model | SE | p-Value | M1: +Characteristics | SE | p-Value | M2: +Grades | SE | p-Value |
|---|---|---|---|---|---|---|---|---|---|
| Fixed Effects: | | | | | | | | | |
| Intercept | 1.0490 | 0.0392 | <0.001 | 2.51 | 0.53 | <0.001 | 2.3410 | 0.5273 | <0.001 |
| Frequency | - | - | - | –0.28 | 0.06 | <0.001 | –0.2757 | 0.0594 | <0.001 |
| Morphology | - | - | - | –0.08 | 0.05 | 0.140 | –0.0740 | 0.0508 | 0.236 |
| Dispersion | - | - | - | 0.15 | 0.32 | 0.644 | 0.1352 | 0.3233 | 0.946 |
| Length | - | - | - | 0.03 | 0.03 | 0.388 | 0.0277 | 0.0316 | 0.495 |
| Syllables | - | - | - | –0.09 | 0.07 | 0.218 | –0.0854 | 0.0690 | 0.191 |
| Polysemy | - | - | - | –0.01 | 0.01 | 0.740 | –0.0029 | 0.0091 | 0.939 |
| Parts of Speech | - | - | - | –0.04 | 0.07 | 0.582 | –0.0404 | 0.0712 | 0.341 |
| Age of Acquisition | - | - | - | –0.15 | 0.03 | <0.001 | –0.1458 | 0.0264 | <0.001 |
| Concreteness | - | - | - | 0.03 | 0.06 | 0.623 | 0.0279 | 0.0576 | 0.767 |
| is 4th | - | - | - | - | - | - | 0.2753 | 0.0251 | <0.001 |
| is 6th | - | - | - | - | - | - | 0.1656 | 0.0239 | <0.001 |
| is 9th | - | - | - | - | - | - | 0.1757 | 0.0256 | <0.001 |
| Random Effects: | | | | | | | | | |
| σ²e | 0.6725 | | | 0.6502 | | | 0.6505 | | |
| σ²u0 | 0.2625 | | | 134.6224 | | | 123.5799 | | |

| Word Characteristics | Grades 2–3 Coef (SE) | p-Val | Grades 4–5 Coef (SE) | p-Val | Grades 6–8 Coef (SE) | p-Val | Grades 9–12 Coef (SE) | p-Val |
|---|---|---|---|---|---|---|---|---|
| Intercept | 2.455 (0.5544) | 0.000 | 3.0359 (0.6307) | 0.000 | 2.5839 (0.5887) | 0.000 | 2.4958 (0.5749) | 0.000 |
| Frequency | –0.0013 (0.0003) | 0.0000 | –0.0016 (0.0004) | 0.0002 | –0.0025 (0.0005) | 0.0000 | –0.0031 (0.0006) | 0.0000 |
| Morphology | –0.0002 (0.0001) | 0.1100 | –0.0003 (0.0002) | 0.1067 | –0.0002 (0.0002) | 0.2120 | –0.0002 (0.0002) | 0.2740 |
| Dispersion | 0.1487 (0.4313) | 0.7300 | –0.075 (0.4576) | 0.8699 | 0.1336 (0.3795) | 0.7250 | 0.2209 (0.3476) | 0.5250 |
| Length | –0.0014 (0.0312) | 0.9630 | 0.0207 (0.0353) | 0.5576 | 0.0314 (0.0338) | 0.3540 | 0.0222 (0.0339) | 0.5130 |
| Syllables | –0.0783 (0.0642) | 0.2230 | –0.0543 (0.0736) | 0.4611 | –0.0597 (0.0734) | 0.4160 | –0.0167 (0.0744) | 0.8230 |
| Polysemy | 0.006 (0.0062) | 0.3320 | 0.0122 (0.0084) | 0.1480 | –0.0003 (0.0103) | 0.9730 | –0.0048 (0.0118) | 0.6820 |
| Parts of Speech | –0.0387 (0.0558) | 0.4890 | –0.1512 (0.0702) | 0.0318 | –0.0853 (0.0765) | 0.2650 | –0.0343 (0.0806) | 0.6710 |
| Age of Acquisition | –0.158 (0.0261) | 0.0000 | –0.1767 (0.0295) | 0.0000 | –0.1516 (0.0284) | 0.0000 | –0.1459 (0.0283) | 0.0000 |
| Concreteness | –0.0003 (0.0472) | 0.9950 | 0.0495 (0.059) | 0.4012 | 0.0409 (0.062) | 0.5100 | 0.0113 (0.0654) | 0.8630 |
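The grade-band table reports separate coefficients for each band, so a natural follow-up question is whether a coefficient differs between two bands. One common approach, sketched here under stated assumptions, is a z test on the difference between two coefficients, z = (b₁ − b₂) / √(SE₁² + SE₂²). This is not an analysis reported for the study. It assumes the grade-band models were fitted on non-overlapping groups of students, so that the two estimates are independent. The function names are hypothetical, and the frequency standard errors are printed to only four decimals, so results from them are approximate.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def coefficient_difference(b1, se1, b2, se2):
    """z test for equality of two coefficients estimated on independent samples."""
    z = (b1 - b2) / math.sqrt(se1 ** 2 + se2 ** 2)
    return z, two_sided_p(z)


# Frequency: Grades 2-3 vs. Grades 9-12, values copied from the table above
z_freq, p_freq = coefficient_difference(-0.0013, 0.0003, -0.0031, 0.0006)
# Age of acquisition: the same two grade bands
z_aoa, p_aoa = coefficient_difference(-0.158, 0.0261, -0.1459, 0.0283)

print(f"Frequency: z = {z_freq:.2f}, p = {p_freq:.4f}")
print(f"Age of acquisition: z = {z_aoa:.2f}, p = {p_aoa:.4f}")
```

Under these assumptions, the frequency coefficient for Grades 9–12 is distinguishable from the Grades 2–3 coefficient (z ≈ 2.68), whereas the age-of-acquisition coefficients of the two bands are close (z ≈ −0.31).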

| Predictor | Coefficient | SE | z Value | p-Value |
|---|---|---|---|---|
| (Intercept) | 2.0988 | 0.6221 | 3.3740 | 0.0000 |
| Word Location | –0.1926 | 0.0116 | –16.5970 | 0.0000 |
| Word Frequency | 0.0008 | 0.0018 | 0.4200 | 0.6748 |
| Morphology | 0.0002 | 0.0005 | 0.3460 | 0.7293 |
| Dispersion | 0.1609 | 0.3910 | 0.4110 | 0.6808 |
| Length | –0.1253 | 0.0561 | –2.2350 | 0.0254 |
| Syllables | 0.3239 | 0.1236 | 2.6200 | 0.0000 |
| Parts of Speech | 0.1390 | 0.1383 | 1.0050 | 0.3150 |
| Polysemy | –0.0352 | 0.0251 | –1.4020 | 0.1608 |
| Age of Acquisition | –0.1019 | 0.0360 | –2.8320 | 0.0046 |
| Concreteness | 0.1544 | 0.0734 | 2.1040 | 0.0354 |

| Predictor | Coefficient | SE | z Value | p-Value |
|---|---|---|---|---|
| (Intercept) | 2.5000 | 0.6095 | 4.1010 | 0.0000 |
| Word Location | –0.2046 | 0.0119 | –17.2310 | 0.0000 |
| Word Frequency | 0.0011 | 0.0019 | 0.5990 | 0.5491 |
| Morphology | 0.0000 | 0.0004 | –0.0090 | 0.9931 |
| Dispersion | 0.0748 | 0.3927 | 0.1900 | 0.8490 |
| Length | –0.1221 | 0.0566 | –2.1570 | 0.0310 |
| Syllables | 0.2832 | 0.1263 | 2.2420 | 0.0250 |
| Parts of Speech | 0.1568 | 0.1400 | 1.1200 | 0.2626 |
| Polysemy | –0.0269 | 0.0253 | –1.0620 | 0.2884 |
| Age of Acquisition | –0.0836 | 0.0354 | –2.3610 | 0.0182 |
| Concreteness | 0.1228 | 0.0752 | 1.6330 | 0.1025 |

| Predictor | Coefficient | SE | z Value | p-Value |
|---|---|---|---|---|
| (Intercept) | 0.7095 | 0.6035 | 1.1760 | 0.2398 |
| Word Location | –0.1718 | 0.0114 | –15.1230 | 0.0000 |
| Word Frequency | 0.0015 | 0.0017 | 0.9310 | 0.3518 |
| Morphology | 0.0001 | 0.0005 | 0.1740 | 0.8617 |
| Dispersion | 0.4053 | 0.4049 | 1.0010 | 0.3168 |
| Length | –0.1516 | 0.0566 | –2.6800 | 0.0074 |
| Syllables | 0.4401 | 0.1262 | 3.4880 | 0.0005 |
| Parts of Speech | 0.1239 | 0.1343 | 0.9230 | 0.3560 |
| Polysemy | –0.0437 | 0.0242 | –1.8060 | 0.0710 |
| Age of Acquisition | –0.1219 | 0.0332 | –3.6700 | 0.0002 |
| Concreteness | 0.2748 | 0.0723 | 3.8000 | 0.0001 |
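Each row of the three VASE tables is a Wald test: the z value is the coefficient divided by its standard error, and the p-value is the two-sided normal tail probability of that z. The minimal sketch below, in which `wald_test` is a hypothetical helper, lets a reader check any row from the printed coefficient and SE. Because coefficients and SEs are rounded to four decimals, the recomputed z can differ slightly in the later digits.

```python
import math


def wald_test(coef, se):
    """Wald z statistic and two-sided p-value for a single coefficient."""
    z = coef / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Rows copied from the first VASE table above
for name, coef, se in [("Age of Acquisition", -0.1019, 0.0360),
                       ("Syllables", 0.3239, 0.1236)]:
    z, p = wald_test(coef, se)
    print(f"{name}: z = {z:.3f}, p = {p:.4f}")
```

For the Age of Acquisition row, this reproduces the reported z ≈ −2.83 and p ≈ 0.0046. For the Syllables row (coefficient 0.3239, SE 0.1236), it gives z ≈ 2.62 and p ≈ 0.0088, so the 0.0000 printed in that row does not follow from the coefficient and SE. The same check on the intercept row of that table (z = 3.374) gives p ≈ 0.0007.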

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hiebert, E.H.; Scott, J.A.; Castaneda, R.; Spichtig, A. An Analysis of the Features of Words That Influence Vocabulary Difficulty. Educ. Sci. 2019, 9, 8. https://doi.org/10.3390/educsci9010008

Hiebert EH, Scott JA, Castaneda R, Spichtig A. An Analysis of the Features of Words That Influence Vocabulary Difficulty. Education Sciences. 2019; 9(1):8. https://doi.org/10.3390/educsci9010008

Chicago/Turabian Style: Hiebert, Elfrieda H., Judith A. Scott, Ruben Castaneda, and Alexandra Spichtig. 2019. "An Analysis of the Features of Words That Influence Vocabulary Difficulty" Education Sciences 9, no. 1: 8. https://doi.org/10.3390/educsci9010008

APA Style: Hiebert, E. H., Scott, J. A., Castaneda, R., & Spichtig, A. (2019). An Analysis of the Features of Words That Influence Vocabulary Difficulty. Education Sciences, 9(1), 8. https://doi.org/10.3390/educsci9010008