A Psychometric Re-Examination of the Science Teaching Efficacy and Beliefs Instrument (STEBI) in a Canadian Context

Abstract

1. Introduction

2. Materials and Methods

2.1. Data

- Personal information (e.g., gender, education and experience);

- Professional development (PD) (e.g., the number of PD days);

- Time management in the classroom;

- Assessment practices including types of assessment and marking;

- Teaching strategy;

- Science-teaching efficacy and beliefs (as measured by the Science Teaching Efficacy and Beliefs Instrument).

2.2. Participants

2.3. Instrument

2.4. Analysis

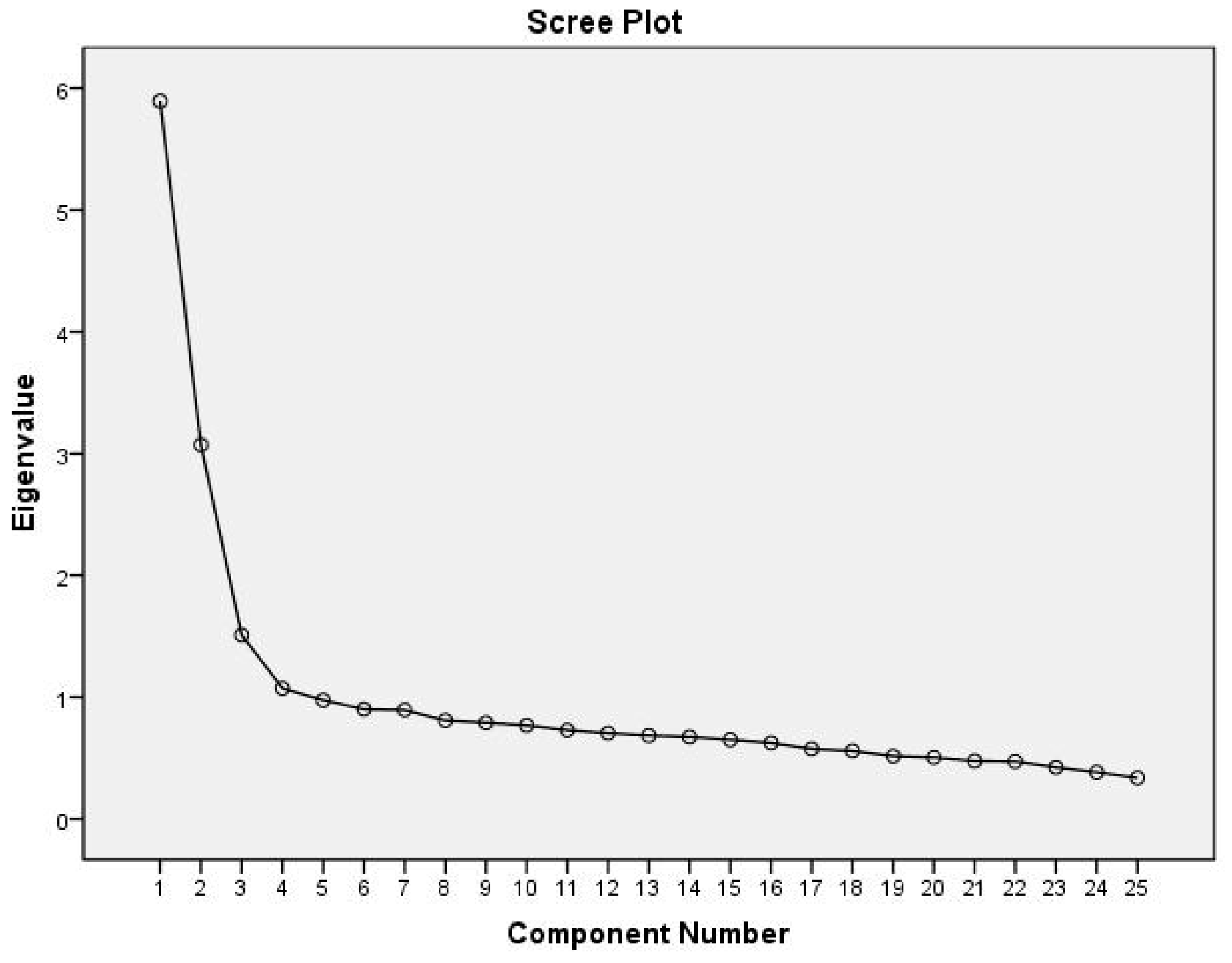

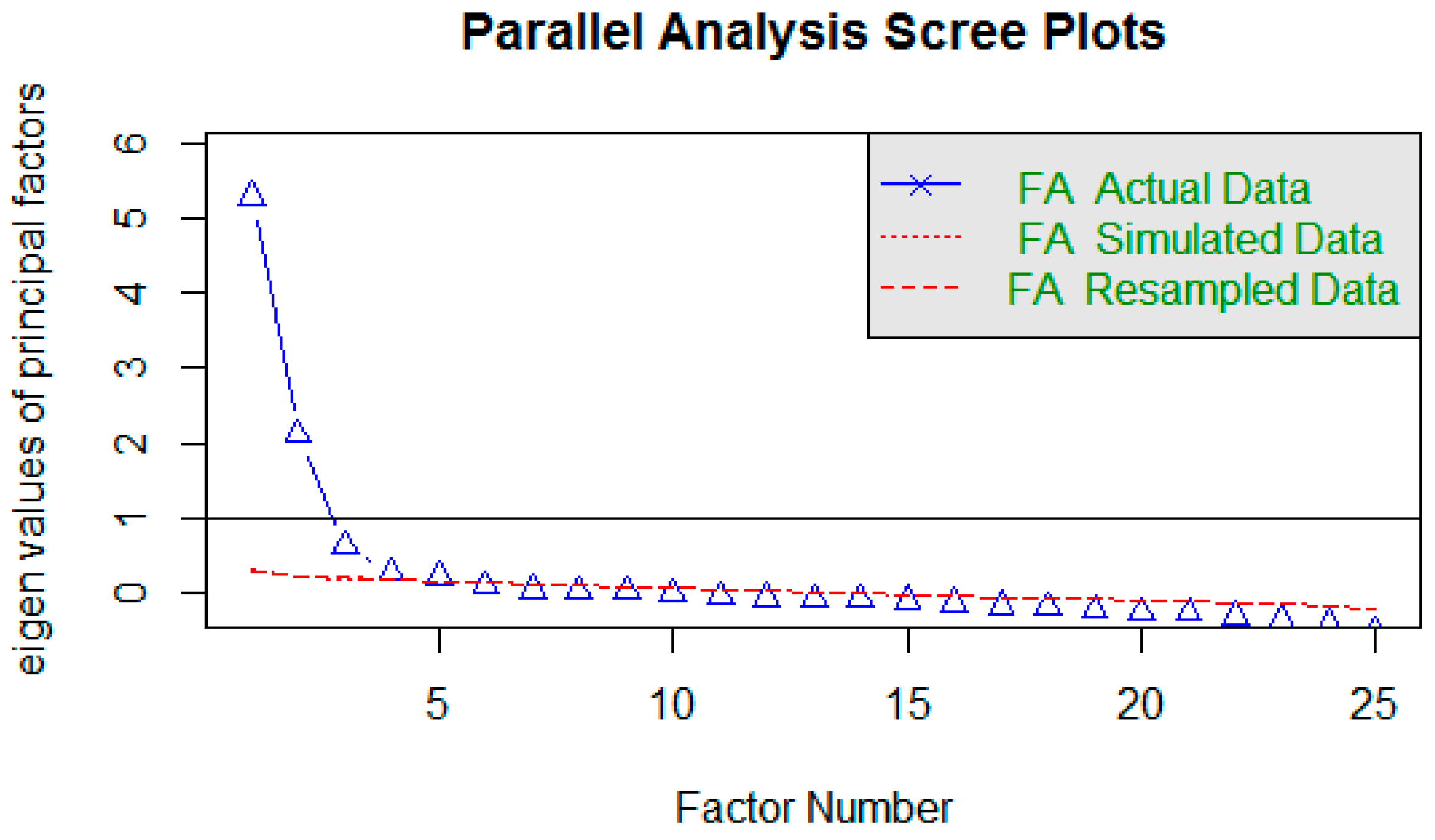

3. Results

4. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Bandura, A. Social Foundations of Thought and Action; Pearson Publications: Englewood Cliffs, NJ, USA, 1986.

- Bandura, A. (Ed.) Self-Efficacy in Changing Societies; Cambridge University Press: Cambridge, UK, 1995.

- Tschannen-Moran, M.; Hoy, A.W.; Hoy, W.K. Teacher efficacy: Its meaning and measure. Rev. Educ. Res. 1998, 68, 202–248.

- Bandura, A. Self-efficacy: Toward a unifying theory of behavioral change. Psychol. Rev. 1977, 84, 191.

- Gibson, S.; Dembo, M.H. Teacher efficacy: A construct validation. J. Educ. Psychol. 1984, 76, 569.

- Schunk, D.H. Social cognitive theory and self-regulated learning. In Self-Regulated Learning and Academic Achievement; Springer: New York, NY, USA, 1989; pp. 83–110.

- Tschannen-Moran, M.; Hoy, A.W. Teacher efficacy: Capturing an elusive construct. Teach. Teach. Educ. 2001, 17, 783–805.

- Tschannen-Moran, M.; Hoy, A.W. The differential antecedents of self-efficacy beliefs of novice and experienced teachers. Teach. Teach. Educ. 2007, 23, 944–956.

- Wang, H.; Hall, N.C.; Rahimi, S. Self-efficacy and causal attributions in teachers: Effects on burnout, job satisfaction, illness, and quitting intentions. Teach. Teach. Educ. 2015, 47, 120–130.

- Allinder, R.M. The relationship between efficacy and the instructional practices of special education teachers and consultants. Teach. Educ. Spec. Educ. 1994, 17, 86–95.

- Fackler, S.; Malmberg, L.E. Teachers’ self-efficacy in 14 OECD countries: Teacher, student group, school and leadership effects. Teach. Teach. Educ. 2016, 56, 185–195.

- Gabriele, A.J.; Joram, E. Teachers’ reflections on their reform-based teaching in mathematics: Implications for the development of teacher self-efficacy. Act. Teach. Educ. 2007, 29, 60–74.

- Cho, Y.; Shim, S.S. Predicting teachers’ achievement goals for teaching: The role of perceived school goal structure and teachers’ sense of efficacy. Teach. Teach. Educ. 2013, 32, 12–21.

- Wolters, C.A.; Daugherty, S.G. Goal structures and teachers’ sense of efficacy: Their relation and association to teaching experience and academic level. J. Educ. Psychol. 2007, 99, 181.

- Guo, Y.; Connor, C.M.; Yang, Y.; Roehrig, A.D.; Morrison, F.J. The effects of teacher qualification, teacher self-efficacy, and classroom practices on fifth graders’ literacy outcomes. Elem. Sch. J. 2012, 113, 3–24.

- Leroy, N.; Bressoux, P.; Sarrazin, P.; Trouilloud, D. Impact of teachers’ implicit theories and perceived pressures on the establishment of an autonomy supportive climate. Eur. J. Psychol. Educ. 2007, 22, 529.

- Mashburn, A.J.; Hamre, B.K.; Downer, J.T.; Pianta, R.C. Teacher and classroom characteristics associated with teachers’ ratings of prekindergartners’ relationships and behaviors. J. Psychoeduc. Assess. 2006, 24, 367–380.

- Caprara, G.V.; Barbaranelli, C.; Steca, P.; Malone, P.S. Teachers’ self-efficacy beliefs as determinants of job satisfaction and students’ academic achievement: A study at the school level. J. Sch. Psychol. 2006, 44, 473–490.

- Mojavezi, A.; Tamiz, M.P. The Impact of Teacher Self-efficacy on the Students’ Motivation and Achievement. Theory Pract. Lang. Stud. 2012, 2.

- Muijs, D.; Reynolds, D. Teachers’ beliefs and behaviors: What really matters? J. Classr. Interact. 2002, 37, 3–15.

- Ross, J.A. Teacher efficacy and the effects of coaching on student achievement. Can. J. Educ. 1992, 17, 51–65.

- Taştan, S.B.; Davoudi, S.M.M.; Masalimova, A.R.; Bersanov, A.S.; Kurbanov, R.A.; Boiarchuk, A.V.; Pavlushin, A.A. The Impacts of Teacher’s Efficacy and Motivation on Student’s Academic Achievement in Science Education among Secondary and High School Students. Eurasia J. Math. Sci. Technol. Educ. 2018, 14, 2353–2366.

- Mahler, D.; Großschedl, J.; Harms, U. Does motivation matter?—The relationship between teachers’ self-efficacy and enthusiasm and students’ performance. PLoS ONE 2018, 13, e0207252.

- Miller, A.D.; Ramirez, E.M.; Murdock, T.B. The influence of teachers’ self-efficacy on perceptions: Perceived teacher competence and respect and student effort and achievement. Teach. Teach. Educ. 2017, 64, 260–269.

- Linnenbrink, E.A.; Pintrich, P.R. The role of self-efficacy beliefs in student engagement and learning in the classroom. Read. Writ. Q. 2003, 19, 119–137.

- Pajares, F. Self-efficacy beliefs, motivation, and achievement in writing: A review of the literature. Read. Writ. Q. 2003, 19, 139–158.

- Zimmerman, B.J.; Bandura, A.; Martinez-Pons, M. Self-motivation for academic attainment: The role of self-efficacy beliefs and personal goal setting. Am. Educ. Res. J. 1992, 29, 663–676.

- Charalambous, C.Y.; Philippou, G.N.; Kyriakides, L. Tracing the development of preservice teachers’ efficacy beliefs in teaching mathematics during fieldwork. Educ. Stud. Math. 2008, 67, 125–142.

- Çakiroglu, J.; Çakiroglu, E.; Boone, W.J. Pre-Service Teacher Self-Efficacy Beliefs Regarding Science Teaching: A Comparison of Pre-Service Teachers in Turkey and the USA. Sci. Educ. 2005, 14, 31–40.

- Bleicher, R.E. Revisiting the STEBI-B: Measuring self-efficacy in preservice elementary teachers. Sch. Sci. Math. 2004, 104, 383–391.

- Riggs, I.M.; Enochs, L.G. Toward the development of an elementary teacher’s science teaching efficacy belief instrument. Sci. Educ. 1990, 74, 625–637.

- Rohaan, E.J.; Taconis, R.; Jochems, W.M. Exploring the underlying components of primary school teachers’ pedagogical content knowledge for technology education. Eurasia J. Math. Sci. Technol. Educ. 2011, 7, 293–304.

- Mavrikaki, E.; Athanasiou, K. Development and Application of an Instrument to Measure Greek Primary Education Teachers’ Biology Teaching Self-efficacy Beliefs. Eurasia J. Math. Sci. Technol. Educ. 2011, 7, 203–213.

- Enochs, L.G.; Riggs, I.M. Further development of an elementary science teaching efficacy belief instrument: A preservice elementary scale. Sch. Sci. Math. 1990, 90, 694–706.

- Shroyer, G.; Riggs, I.; Enochs, L. Measurement of Science Teachers’ Efficacy beliefs. In The Role of Science Teachers’ Beliefs in International Classrooms; Sense Publishers: Dordrecht, The Netherlands, 2014; pp. 103–118.

- Klinger, D. The evolving culture of large-scale assessments in Canadian education. Can. J. Educ. Adm. Policy 2008, 76, 1–34.

- Volante, L. An alternative vision for large-scale assessment in Canada. J. Teach. Learn. 2006, 4.

- DeVellis, R.F. Scale Development: Theory and Applications; Sage Publications: Thousand Oaks, CA, USA, 2016; Volume 26.

- O’Grady, K.; Houme, K.P. PCAP 2013: Report on the Pan-Canadian Assessment of Science, Reading, and Mathematics; Council of Ministers of Education: Toronto, ON, Canada, 2015.

- Wang, M.C.; Haertel, G.D.; Walberg, H.J. Synthesis of research: What Helps Students Learn? Educ. Leadersh. 1986, 43, 60–69.

- Morris, J.E.; Lummis, G.W.; McKinnon, D.H.; Heyworth, J. Measuring preservice teacher self-efficacy in music and visual arts: Validation of an amended science teacher efficacy belief instrument. Teach. Teach. Educ. 2017, 64, 1–11.

- Henson, R.K.; Kogan, L.R.; Vacha-Haase, T. A reliability generalization study of the teacher efficacy scale and related instruments. Educ. Psychol. Meas. 2001, 61, 404–420.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2018.

- O’Connor, B.P. SPSS and SAS programs for determining the number of components using parallel analysis and Velicer’s MAP test. Behav. Res. Methods 2000, 32, 396–402.

- Tucker, L.R. A Method for Synthesis of Factor Analysis Studies; Personnel Research Section Report No. 984; Department of the Army: Washington, DC, USA, 1951.

- Costello, A.B.; Osborne, J.W. Best practices in exploratory factor analysis: Four recommendations for getting the most from your analysis. Pract. Assess. Res. Eval. 2005, 10, 1–9.

- Wilkinson, L.; APA Task Force on Statistical Inference. Statistical methods in psychology journals: Guidelines and explanations. Am. Psychol. 1999, 54, 594–604.

| Item | PCAP 2013, Oblique Solution: PSTE | PCAP 2013, Oblique Solution: STOE | PCAP 2013, Orthogonal Solution: PSTE | PCAP 2013, Orthogonal Solution: STOE | STEBI (1990), Original Solution: PSTE | STEBI (1990), Original Solution: STOE |
|---|---|---|---|---|---|---|
| Item 1* | −0.10 | 0.48 | −0.07 | 0.48 | 0.06 | 0.44 |
| Item 2* | 0.35 | 0.15 | 0.36 | 0.18 | 0.54 | 0.07 |
| Item 3 | 0.74 | −0.04 | 0.74 | 0.02 | 0.67 | −0.02 |
| Item 4* | −0.02 | 0.53 | 0.02 | 0.53 | −0.05 | 0.53 |
| Item 5* | 0.60 | 0.05 | 0.60 | 0.10 | 0.69 | 0.04 |
| Item 6 | 0.59 | −0.06 | 0.58 | −0.01 | 0.64 | 0.04 |
| Item 7* | −0.29 | 0.48 | −0.26 | 0.45 | −0.14 | 0.57 |
| Item 8 | 0.69 | −0.07 | 0.69 | −0.02 | 0.68 | 0.04 |
| Item 9* | 0.10 | 0.40 | 0.12 | 0.40 | 0.07 | 0.35 |
| Item 10 | −0.09 | 0.27 | −0.07 | 0.27 | −0.07 | 0.39 |
| Item 11* | 0.02 | 0.45 | 0.05 | 0.45 | −0.03 | 0.43 |
| Item 12* | 0.72 | −0.05 | 0.71 | 0.00 | 0.75 | 0.00 |
| Item 13 | 0.11 | 0.27 | 0.13 | 0.28 | 0.08 | 0.41 |
| Item 14* | −0.01 | 0.60 | 0.03 | 0.60 | −0.01 | 0.61 |
| Item 15* | 0.01 | 0.63 | 0.06 | 0.63 | −0.05 | 0.70 |
| Item 16* | 0.20 | 0.34 | 0.23 | 0.36 | −0.01 | 0.52 |
| Item 17 | 0.70 | −0.04 | 0.69 | 0.01 | 0.75 | 0.05 |
| Item 18* | 0.67 | −0.05 | 0.66 | 0.00 | 0.67 | −0.01 |
| Item 19 | 0.80 | −0.10 | 0.79 | −0.04 | 0.76 | −0.09 |
| Item 20 | 0.10 | 0.31 | 0.12 | 0.32 | 0.16 | 0.35 |
| Item 21 | 0.53 | 0.08 | 0.54 | 0.12 | 0.69 | −0.07 |
| Item 22 | 0.60 | 0.03 | 0.60 | 0.07 | 0.72 | −0.07 |
| Item 23* | 0.47 | 0.06 | 0.48 | 0.10 | 0.60 | 0.08 |
| Item 24 | 0.55 | 0.08 | 0.55 | 0.12 | 0.69 | 0.02 |
| Item 25 | 0.03 | 0.34 | 0.06 | 0.34 | 0.04 | 0.37 |
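One common way to summarize how closely the PCAP 2013 loadings track the original STEBI (1990) loadings in the table is Tucker's congruence coefficient, which for two loading vectors $x$ and $y$ is $\phi = \sum_i x_i y_i / \sqrt{\sum_i x_i^2 \sum_i y_i^2}$. The sketch below applies it to the oblique PCAP columns and the original-solution columns. The loadings are copied from the table, but the variable and function names are illustrative, and the choice to compare the oblique solution in particular is an assumption rather than a description of the authors' own procedure.

```python
import math

# Loadings copied from the table above, items 1-25 in order.
# Variable names are illustrative and do not come from the article.
PCAP_OBLIQUE_PSTE = [
    -0.10, 0.35, 0.74, -0.02, 0.60, 0.59, -0.29, 0.69, 0.10, -0.09,
    0.02, 0.72, 0.11, -0.01, 0.01, 0.20, 0.70, 0.67, 0.80, 0.10,
    0.53, 0.60, 0.47, 0.55, 0.03,
]
ORIGINAL_PSTE = [
    0.06, 0.54, 0.67, -0.05, 0.69, 0.64, -0.14, 0.68, 0.07, -0.07,
    -0.03, 0.75, 0.08, -0.01, -0.05, -0.01, 0.75, 0.67, 0.76, 0.16,
    0.69, 0.72, 0.60, 0.69, 0.04,
]
PCAP_OBLIQUE_STOE = [
    0.48, 0.15, -0.04, 0.53, 0.05, -0.06, 0.48, -0.07, 0.40, 0.27,
    0.45, -0.05, 0.27, 0.60, 0.63, 0.34, -0.04, -0.05, -0.10, 0.31,
    0.08, 0.03, 0.06, 0.08, 0.34,
]
ORIGINAL_STOE = [
    0.44, 0.07, -0.02, 0.53, 0.04, 0.04, 0.57, 0.04, 0.35, 0.39,
    0.43, 0.00, 0.41, 0.61, 0.70, 0.52, 0.05, -0.01, -0.09, 0.35,
    -0.07, -0.07, 0.08, 0.02, 0.37,
]


def tucker_congruence(x, y):
    """Return Tucker's congruence coefficient between two loading vectors."""
    if len(x) != len(y):
        raise ValueError("loading vectors must have the same length")
    numerator = sum(a * b for a, b in zip(x, y))
    denominator = math.sqrt(sum(a * a for a in x) * sum(b * b for b in y))
    if denominator == 0:
        raise ValueError("congruence is undefined for an all-zero vector")
    return numerator / denominator


phi_pste = tucker_congruence(PCAP_OBLIQUE_PSTE, ORIGINAL_PSTE)
phi_stoe = tucker_congruence(PCAP_OBLIQUE_STOE, ORIGINAL_STOE)

print(f"PSTE congruence (PCAP oblique vs. original): {phi_pste:.3f}")
print(f"STOE congruence (PCAP oblique vs. original): {phi_stoe:.3f}")
```

Values near 1 indicate that a factor has essentially the same loading pattern in both samples. The same function can be applied to the orthogonal columns by substituting those loadings.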

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Moslemi, N.; Mousavi, A. A Psychometric Re-Examination of the Science Teaching Efficacy and Beliefs Instrument (STEBI) in a Canadian Context. Educ. Sci. 2019, 9, 17. https://doi.org/10.3390/educsci9010017
