Abstract

Intuitive knowledge seems to influence human decision-making outside of consciousness and differs from deliberate cognitive and metacognitive processes. Intuitive knowledge can play an essential role in problem solving and may offer the initiation of subsequent learning processes. Scientific discovery learning with computer simulations leads to the acquisition of intuitive knowledge. To improve knowledge acquisition, particular instructional support is needed as pure discovery learning often does not lead to successful learning outcomes. Hence, the goal of this study was to determine whether two different instructional interventions for scientific discovery effectively produced intuitive knowledge acquisition when learning with computer simulations. Instructional interventions for learning with computer simulations on the topic ‘ecosystem water’ were developed and tested in the two well-known categories of data interpretation and self-regulation using a sample of 117 eighth graders during science class. The results demonstrated the efficacy of these instructional interventions on learners’ intuitive knowledge acquisition. A predetermined combination of instructional support for data interpretation and for self-regulation proved to be successful for learners’ intuitive knowledge acquisition after a learning session involving the computer simulation. Furthermore, the instructional intervention of describing and interpreting one’s own simulation outcomes for data interpretation seems to be an effective method for acquiring intuitive knowledge.

1. Introduction

This study examines the effects of a particular type of instructional support for intuitive knowledge acquisition through scientific discovery learning with an ecological computer simulation.

Discovery learning [1] refers to an approach where learners discover knowledge or concepts of a domain with the available material in a mainly self-regulated way. Extending this approach, scientific discovery learning refers specifically to learning science, e.g., references [2,3,4,5]. In contrast to learning opportunities in which learning occurs through knowledge transfer from the teacher to the students, knowledge acquisition through scientific discovery learning using computer simulations is dependent on the active participation of learners in the learning process [6,7,8]. Learners are asked to manipulate given parameters and monitor the results of their manipulations by means of an output. Thus knowledge can be developed in an interactive and independent approach.

Knowledge acquisition through scientific discovery learning with computer simulations seems to be different from knowledge acquisition with a more or less expository approach [7]. It is supposed that scientific discovery learning with computer simulations results in intuitive knowledge gain that is different from learning outcomes acquired in a more traditional manner where mere transfer of declarative knowledge to the learner is prevalent [7]. Intuitive knowledge is considered to be unreflected knowledge without conscious awareness [9] and can be regarded as crucial for scaffolding judgment and decision-making in further learning processes [10]. It seems to influence human decision-making outside of consciousness [10,11] and differs from deliberate cognitive and metacognitive processes. Hence, intuitive knowledge can play an essential role in problem solving and may offer the initiation of subsequent learning processes [12]. Therefore, this phenomenon can contribute to teaching and learning biological principles, concepts, rules, and terms.

Numerous studies show that scientific discovery learning often poses an obstacle to students’ learning, e.g., references [5,13,14,15,16,17]. However, providing students with methods of learning with computer simulations supplemented with specific instructional support can enhance knowledge gain [2]. In the present study, particular instructional support was given for two categories of scientific discovery, ‘data interpretation’ and ‘self-regulation’, and it was then examined whether this led to students’ intuitive knowledge acquisition when learning with a computer simulation in a biological context.

2. Theoretical Background

2.1. Intuitive Knowledge

Intuitive knowledge, which is considered unreflected knowledge (cf. [18,19]), enables the anticipation of a situation’s possible outcomes in comparatively less time than reflecting about the situation [20]. Concerning this matter, Swaak and de Jong [8] speak of intuitive knowledge as being quickly available, and they defined this knowledge type as “the quick perception of anticipated situations” (p. 288). Fensham and Marton [21], as referred to in Lindström, Marton and Ottoson [22], defined intuition as “formulating or solving a problem through a sudden illumination based on global perception of a phenomenon” and stated that it “originates from widely varied experience of that phenomenon over a long time” (p. 265). It is emphasized that necessary experiences attained over time are stored in the long-term memory as prior knowledge (cf. [10]) to allow the acquisition of intuitive knowledge.

Research literature shows a wide range of terms associated with intuitive knowledge, such as intuition [10,23,24,25,26,27,28], tacit knowledge [29,30], implicit knowledge [31], and intuitive understanding [22]. Among the various definitions of intuition, e.g., references [20,32], we refer to the definition by Swaak and de Jong [8] and implement the concept of intuitive knowledge synonymously with the term intuition.

It is supposed that intuitive knowledge (or intuition) can be seen as a kind of ‘hunch’ [28] and may influence an individual’s judgment, decisions, and behavior. In this respect, Betsch [10] defined intuition as “a process of thinking” where the output “can serve as a basis for judgement and decisions” (p. 4). Here, it is assumed that intuitive knowledge offers the initiation of ensuing learning processes [11,29,33]. It is argued that intuition seems to influence human decision-making [34]. Fischbein [26] states that intuitions are implicit and operate automatically on a subconscious level (cf. [10,11]). Citing Westcott [35], Fischbein [26] noted that intuition “occurs when an individual reaches a conclusion on the basis of less explicit information than is ordinarily required to reach that conclusion” (p. 97). Hence, intuitive knowledge is considered to be unreflected knowledge that allows conclusions to be reached on the basis of less information than would otherwise be required [35]. In this regard, intuition differs from deliberate cognitive and metacognitive processes, which require attention and working memory capacity [36]. Therefore, intuitive knowledge originates beyond conscious thought [10,20,23] and may rather be regarded as naive lay knowledge [37]. In most cases, learners cannot pay attention to all given information simultaneously, and thus they need to focus on relevant information. This deliberate process requires sequential processing of particular given information. In contrast, intuition is capable of handling an enormous amount of information concurrently by using already existing experiences stored in the long-term memory, and it can play an essential role in problem solving.

The five characteristic criteria for intuitive knowledge according to Swaak and de Jong [7,8], are as follows:

- Intuitive knowledge can only be acquired by using already existing previous knowledge in perceptually rich dynamic situations. It is assumed that when applying previous knowledge in situations containing a huge amount of information, implicitly induced learning processes lead to intuitive knowledge acquisition.

- Intuitive knowledge is hard to verbalize. This means that intuitive knowledge differs from conceptual knowledge, which is regarded as a network of concepts and their relationships to a functional structure generated through reflective learning that can be articulated (cf. [38]). However, according to Lindström, Marton and Ottoson [22], intuitive and conceptual understanding should not be considered as separate knowledge types. They believe intuitive and conceptual understanding to be intertwined aspects of a learner’s awareness. Hence, intuitive knowledge can be seen as a quality of conceptual knowledge [39].

- Perception is crucial when referring to intuitive knowledge. The illustration of situations plays an essential role in the acquisition of intuitive knowledge. In this regard, Fischbein [26] emphasized the importance of visualization through external representation.

- Another characteristic of intuitive knowledge is the importance of anticipation. Anticipation refers to the presumption of occurrences, developments, or actions. Intuitions anticipate what will or will not happen, and intuitive evaluation anticipates the possible outcomes of a situation without the ability to explicitly explain them [7,29,40,41]. Here, intuitive knowledge can be described as ‘know without knowing’ [10] (p. 4), so that ‘the input to this process is mostly provided by knowledge stored in the long-term memory that has been primarily acquired via associative learning. The input is processed automatically and without conscious awareness. The output is a feeling that can serve as a basis for judgments and decisions’ [10] (p. 4).

- It is assumed that access to intuitive knowledge in memory differs from access to declarative knowledge, such as factual and conceptual knowledge. The difficulty of verbalizing intuitive knowledge might be one reason for this differential access. Swaak and de Jong [8] mention that ‘the action-driven and perception-driven elements in learning ‘tune’ the knowledge and give it an intuitive quality’ (p. 287).

It is argued that learners acquire intuitive knowledge while learning with computer simulations [7,8,42]. Thomas and Hooper [43] mentioned that computer simulations could be considered to be ‘experiencing programs’ with the opportunity for learners to acquire “an intuitive understanding of the learning goal” (p. 499). Swaak and de Jong [7] proposed that intuitive knowledge can only be acquired after applying already existing previous knowledge in situations perceived as rich and dynamic. From a rich learning environment, such as a computer simulation, learners are capable of extracting a great amount of domain-specific information that is usually displayed as a dynamic, graphic representation of the output. In this regard, intuitive knowledge cannot be generated through a more traditional approach, for example, by only reading textbooks. Here, Fischbein [26] states that intuitions “can never be produced by mere verbal learning”. Intuitions “can be attained only as an effect of the direct, experiential involvement of the subject in a practical or mental activity” (p. 95). Learners have to participate in the learning process actively [44].

The next section describes the special features related to learning with computer simulations.

2.2. Learning with Computer Simulations

Computer simulations are considered a technically highly sophisticated option that offers numerous benefits for the learning and teaching of science [45,46,47]. For example, they can potentially improve learners’ understanding of abstract biological phenomena, such as predator–prey relationships, and give learners opportunities to harmlessly and interactively conduct experiments [48,49,50]. Learning from computer simulations in discovery environments sees learners as active participants in their learning processes constructing their individual knowledge bases [6,42,44]. Learners actively participate in their learning processes by conducting computer-simulated experiments. They are required to independently find relationships between given variables in a domain and do not merely passively absorb information. Hence, it is supposed that the learning outcomes using computer simulations are different from those acquired by learning with an approach emphasizing pure knowledge transfer [7]. Consequently, knowledge acquisition when learning with computer simulations seems different from learning with a more or less explanatory approach, such as learning from texts [7,8,51].

Swaak, de Jong, and van Joolingen [8] described three characteristics related to the use of computer simulations as discovery environments. Firstly, a computer simulation can be regarded as a rich environment that offers learners a great amount of information they can extract by themselves. Secondly, computer simulations offer opportunities for active experiences. Learners actively engage in the learning process and should not merely absorb information via the computer screen. Learners are asked to conduct experiments using a computer simulation in order to become familiar with a domain. Thirdly, in contrast to textbooks, computer simulations are learning environments with low transparency. The information and the relationships between given variables that learners extract from the computer simulation are not explicitly presented. Learners are asked to deduce the characteristics underlying the simulation when they explore rules, principles, terms or concepts while conducting experiments [3,52]. Learners are to determine the hidden model behind the simulation by conducting experiments [6].

However, empirical findings report several cognitive and metacognitive difficulties regarding scientific discovery learning with computer simulations [2,14,16,53,54]. De Jong and van Joolingen [2] differentiate four types of difficulties learners may experience during discovery learning. The current study focuses on two of these: problems learners often encounter when interpreting data and difficulties pertaining to self-regulation when learning with a scientific computer simulation [13,15].

Specific instructional support for learning using computer simulations can foster successful knowledge acquisition [2]. In their review article, de Jong and van Joolingen [2] pointed out that specific instructional interventions to support data interpretation and self-regulation are effective for computer simulation-based learning. In a literature review, Urhahne and Harms [55] inferred that specific supporting measures for data interpretation and self-regulation strongly affected students’ knowledge gain. Moreover, no negative effects on achievement were found when learning with computer simulations was accompanied by instructional support for data interpretation and self-regulation [55].

The following text presents approaches to overcoming difficulties that learners encounter with scientific discovery learning using computer simulations, and improving knowledge acquisition with particular instructional interventions.

2.3. Supporting Learning from Computer Simulations

Instructional support is necessary to cope with difficulties when learning with computer simulations, to foster learning outcomes and to enhance more successful and goal-oriented knowledge acquisition [2,55,56]. The latter should be supported in several ways. In their triple scheme for discovery learning with computer simulations, Zhang et al. [57] differentiate three spheres of instructional support to be given in three different phases of the learning process:

- (1) Interpretative support enables learners to access and use prior knowledge and develop appropriate hypotheses;

- (2) Experimental support enhances learners’ ability to design verifiable experiments, to predict and to observe simulation results, and to adequately draw conclusions;

- (3) Reflective support increases the learners’ ability to raise self-awareness of the learning processes and helps support the combining of abstract and reflective integration of their discoveries.

The four learning difficulty categories associated with computer simulations (hypotheses generation, design of experiments, interpretation of data, and regulative learning processes) suggested by de Jong and van Joolingen [2] can be integrated (cf. [57]) into the above mentioned spheres. The scheme proposed by Zhang and colleagues [57] provided the theoretical framework for the presented study and is used to explain the various options of instructional support.

Interpretative support enhances awareness of the importance of the discovery process. Learners need to activate their prior knowledge to generate appropriate hypotheses and to obtain an appropriate understanding. Interpretative support is provided to enhance problem representation, ease access to prior experiences, facilitate the handling of the computer simulations and is generally given before learners begin conducting experiments with the computer simulations. Here, domain-specific background information available to the learner is an effective and supportive intervention tool [53,58]. Providing learners permanent access to specific information, such as principles, concepts, terms or facts of a domain, during their interaction with the computer program seems beneficial for knowledge acquisition [53], whereas offering this information earlier on seems less effective [53,59]. Another kind of interpretative support is concrete assignments that learners have to work on using computer simulations [13,60].

A basic framework for an assignment can be based on a POE (Predict-Observe-Explain) strategy [61]. Using this strategy, learners are first required to predict the outcome of a given task. Learners are then asked to conduct a related experiment and describe its outcome. Finally, learners have to compare the outcome with their prediction. In this way, learners can be directed to explore important relationships between variables. Worked-out examples can also be a type of interpretative support. In numerous studies, worked-out examples show positive effects on knowledge acquisition (e.g., [62,63,64,65,66,67]), especially for novice learners. Worked-out examples introduce a specific problem, suggest problem solving steps, and give a detailed description of the appropriate solution [68]. Applying worked-out examples may be considered an effective method to increase learners’ problem solving skills [16,69,70].

Experimental support is used to improve scientific inquiry while learners use the computer simulation. This kind of support helps learners adequately design scientific experiments, appropriately predict outcomes, observe outcomes, and draw conclusions (cf. [71]). Effective experimental support interventions include the progressive and cumulative introduction to handling the computer simulations, explanation of important simulation parameters within the computer program, requests for learners to predict possible simulation outcomes, and requests for learners to describe and interpret the simulation outcome. A progressive introduction to a computer simulation gives learners the relevant information to work with the simulation and to work on assignments. In particular, highly informative computer simulations possess a structure of increasing complexity [72] to help avoid possible cognitive overload due to their informational richness. In a study by Lewis, Stern, and Linn [73], the prediction of possible simulation outcomes by the learners tended to lead to a higher knowledge gain than in a control group. The ability to describe and justify one’s own simulation outcome as an experimental support indicates successful knowledge acquisition [74].

Reflective support scaffolds learners’ metacognitive knowledge after conducting computer simulated experiments. This kind of instructional support fosters the integration of the newly discovered information. Reflective support enables learners to increase their self-awareness of the learning processes and supports abstract and reflective implementation of their discoveries. For example, a reflective assessment on one’s own inquiry can lead to an improved comprehension regarding the meaningful knowledge acquisition. This can be realized, for example, when learners are encouraged to assess and reflect on their own inquiry using a reflective assessment tool within the computer simulation [75]. In the ThinkerTools-Curriculum [75,76], learners are initially required to assess their own inquiry. Subsequently, learners are requested to justify their assessment in written form. This reflective support has been shown to lead to positive effects in knowledge acquisition.

The following describes two categories of difficulty that learners often encounter with processes of scientific discovery learning: data interpretation and self-regulation. Subsequently, for these two categories specific instructional interventions are presented to support scientific discovery learning with computer simulations.

To enhance the effectiveness of data interpretation, empirical findings have shown that explanatory and justified feedback about a simulation outcome supports effective knowledge acquisition, particularly for novices [77]. In a study by Moreno [77], learners received explanatory or justified feedback about their approach to the computer program after they had worked on computer-based tasks. A study by Lin and Lehmann [78] confirmed the assumption that the description and interpretation of simulation outcomes generated by a learner leads to effective knowledge acquisition. After learners completed their computer simulated experiments, they were requested to justify the simulation outcomes.

It has been proposed that self-reflective tasks can effectively support self-regulated learning. A concrete method of improving awareness, such as reflective self-assessment, shows positive effects on knowledge acquisition [75]. Learners were asked to reflect on their own and their classmates’ inquiries based on provided criteria after having completed computer-simulated experiments [75]. The method of reflective self-assessment supported learners in the self-reflection phase [79,80] and shows positive effects with regard to learners’ knowledge gain.

Less systematically examined has been the impact on intuitive knowledge acquisition of specific instructional interventions that call upon learners’ reflective activities, such as the justification of simulation outcomes, and of tasks or hints that encourage reflection on inquiries during the learning process. However, these instructional interventions have the potential to improve simulation-based learning and intuitive knowledge acquisition. Hence, the current study developed and tested specific instructional interventions for data interpretation and self-regulation. Intuitive knowledge acquisition was measured depending on these instructional interventions. One assumption is that, when learning with a scientific computer simulation, learners’ intuitive knowledge acquisition could be effectively enhanced by the instructional interventions for data interpretation and self-regulation.

2.4. Assessing Outcomes from Learning with Computer Simulations

Due to the lower transparency of computer simulations [44], learners have no direct view of the variables and their relationships. Therefore, learning with computer simulations has several effects which cannot be revealed by knowledge tests designed in a more conventional way, e.g., traditional multiple-choice tests. Through a meta-analysis, Thomas and Hooper [43] found that ‘the effects of simulations are not revealed by tests of knowledge (…)’ (p. 479). Numerous empirical studies that have focused on knowledge acquisition when learning with computer simulations have used tests emphasizing knowledge gain in an explicit manner. These tests have mostly involved requests for domain-specific facts (e.g., terms and definitions) or concepts to discover interrelations between the given variables. In contrast to assessing declarative knowledge with more or less traditional paper-and-pencil methods, intuitive knowledge can be assessed methodologically by asking for predictions of situations [7,81]. In this regard, referring to Swaak and de Jong [8], and based on their definition of intuitive knowledge as ‘the quick perception of anticipated situations’ (p. 288), the particular components of their definition can be described with respect to the assessment of intuitive knowledge, as follows.

Quick: Time taken to answer items is considered to be an indicator as to what degree knowledge can still be regarded as intuitive. Learners are requested to answer an item as quickly as possible. In this regard, the test format to assess intuitive knowledge described in this study differs from more traditional approaches, for example, multiple-choice tests. It is assumed that knowledge gained by quickly answering items has an intuitive quality.

Perception: With respect to the item format, perception seems to be crucial. For this reason, and compared to many other tests assessing declarative knowledge, short texts and pictures with minimal textual information can be used.

Anticipated: An important aspect of intuitive knowledge is anticipation. An item consists of a given initial situation with predetermined variables. The change in a particular variable leads to a possible outcome that can be anticipated.

Situation: Each item contains a question part, consisting of a question or a commencement of a given statement with a change in that situation, and a response part, consisting of possible answers. Consequently, each item comprises an initial situation influenced by a given action or a changed value of a variable and possible (post)situations to be anticipated.

These components, suggested by Swaak and de Jong [7], were considered and provided a basis for developing the test instrument to assess intuitive knowledge used in the study presented here.
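The four components above can be read as a small data structure. The following is a minimal sketch only, not the instrument used in the study: the names `SpeedItem`, `Response`, and `record_response` are hypothetical, the example item is invented for illustration, and no scoring rule is implied beyond storing correctness together with answering time.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SpeedItem:
    """One anticipation item: an initial situation, a change, candidate outcomes."""
    initial_situation: str   # short text or picture caption (Perception)
    change: str              # action or changed variable value (Situation)
    options: List[str]       # possible post-situations (Anticipated)
    correct_index: int       # position of the expected outcome in `options`


@dataclass(frozen=True)
class Response:
    correct: bool
    seconds: float           # answering time, kept because of the Quick component


def record_response(item: SpeedItem, chosen_index: int, seconds: float) -> Response:
    """Store correctness together with the time taken to answer."""
    if not 0 <= chosen_index < len(item.options):
        raise ValueError("chosen_index is outside the item's options")
    if seconds < 0:
        raise ValueError("seconds must be non-negative")
    return Response(correct=(chosen_index == item.correct_index), seconds=seconds)


# Hypothetical item, invented for illustration only.
example_item = SpeedItem(
    initial_situation="A lake holds stable numbers of pike and rudd.",
    change="The number of pike is strongly reduced.",
    options=[
        "Rudd numbers rise at first",
        "Rudd numbers fall at first",
        "Rudd numbers stay the same",
    ],
    correct_index=0,
)
example_response = record_response(example_item, chosen_index=0, seconds=4.2)
```

Keeping the answering time next to the correctness flag reflects the idea that speed indicates how intuitive the knowledge still is; how the two would be combined into a score is left open here.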

2.5. Research Aims and Hypotheses

Intuitive knowledge can be regarded as crucial for subsequent learning processes and conceptual knowledge construction in biology education. The research aim of this study was to find out whether, and to what extent, specific instructional support for data interpretation and for self-regulation when learning with a computer simulation may lead to learners’ intuitive knowledge acquisition in a biological context. The ensuing hypotheses on instructional support for data interpretation and self-regulated learning were formed in this study as follows:

The first hypothesis was that interacting with the computer simulation would lead to intuitive knowledge acquisition.

The second hypothesis was that supporting scientific discovery learning with particular instructional interventions for data interpretation or self-regulation would lead to a greater intuitive knowledge gain than without this kind of instructional support.

The third hypothesis stated that cumulative effects on intuitive knowledge gain could be shown using a combination of instructional interventions for data interpretation and self-regulation.

3. Materials and Methods

3.1. Participants

A sample of 117 eighth grade students (61 girls, 56 boys) from six secondary school classes in Northern Germany (Schleswig-Holstein) participated in the study. The age ranged from 13 to 16 years (M = 14.09, SD = 0.54). Class size ranged from 15 to 23 students. The results of a Welch test revealed no significant differences in participants’ prior intuitive knowledge between the groups (p = 0.094, ns). Students received no formal prior instruction on water-ecological content relevant to the study. The participants’ skills in working with the computer program were assessed by questionnaire in advance of the study. The data indicated that the participants had advanced computer skills. Furthermore, the teachers responsible for the participants were asked and confirmed that their students had been taught the mathematical content needed to understand the graphical relationships implied in the simulation. Student participation in the study was voluntary, and the authors strictly protected student anonymity and observed ethical requirements.

3.2. Materials

3.2.1. Content and the Computer Program ‘SimBioSee’

The instructional interventions were tested using the content “relationships between organisms”. Interdependent relationships between living organisms in ecosystems are relevant in science classrooms at all school levels because, for example, predator–prey relationships and the carrying capacities of ecosystems are essential to grasping more sophisticated concepts in advanced biology classes (cf. [82]). The specific subject of the computer simulation, ‘population dynamics and predator–prey relationships’, is regarded as a relevant part of science education [83] and is a crucial component of the current biology curriculum for eighth graders in German secondary schools.

A desktop- or laptop-based computer program, including a computer simulation operated by keyboard and computer mouse, was developed and tested for the study. Prior to developing the computer program, twenty-eight biology teachers were interviewed with regard to the biological content. Based on their statements, the water-ecological computer program ‘SimBioSee’ was developed, comprising domain-specific fundamentals as well as a computer simulation on the predator–prey relationship between the two endemic fish species ‘pike’ and ‘rudd’ [84]. The computer program comprises an introduction with a manual, particular information pages, and a worked-out example. The information pages are used as a kind of interpretative support [57], giving learners permanent access to (water) ecological fundamentals during the interaction with the computer program by means of a navigation bar and appropriately linked headlines.

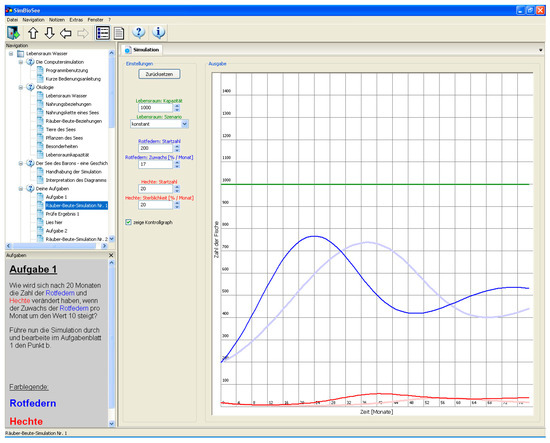

The computer simulation embedded in the computer program is shown as a diagram and contains the numbers of predators (pikes) and prey (rudds) as dependent variables and time as the independent variable (see Figure 1). For each of the two selected species, the numbers of fish are displayed in color-coded graphs. Parameters to modify the graphs can be found on the left side of the diagram. Transparent graphs in the diagram show the start conditions and allow for easy comparison with the simulation outcomes.

Figure 1.

Screenshot of the computer simulation in the computer program ‘SimBioSee’. The navigation bar to the left contains linked headlines to further information pages presented on the right side. A field on the left, below the navigation bar, contains an assignment. The simulation outcome on the right side shows the number of the fish species at the indicated time. The red graph represents the number of predators (pikes) and the blue graph the number of prey (rudds). Graph modifications can be executed by changing the given parameters presented on the left side of the diagram. One can directly compare the simulation outcome to the lighter-colored initial conditions of the graphs.
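To illustrate the kind of output described above (numbers of predators and prey plotted over time, with parameters that change the curves), the following is a minimal sketch that assumes a classic Lotka-Volterra predator–prey model integrated with explicit Euler steps. The model actually implemented in ‘SimBioSee’ is not specified in this section, so the function name, the parameter names, and all numeric values are hypothetical.

```python
from typing import List, Tuple


def simulate_predator_prey(
    prey0: float,
    predators0: float,
    prey_growth: float,
    predation_rate: float,
    conversion_rate: float,
    predator_death: float,
    dt: float = 0.01,
    steps: int = 1000,
) -> List[Tuple[float, float, float]]:
    """Return (time, prey, predators) rows from explicit Euler steps.

    Assumed model (Lotka-Volterra), not taken from the study:
        d(prey)/dt      = prey_growth * prey - predation_rate * prey * predators
        d(predators)/dt = conversion_rate * prey * predators - predator_death * predators
    """
    rows = [(0.0, prey0, predators0)]
    prey, predators = prey0, predators0
    for step in range(1, steps + 1):
        d_prey = prey_growth * prey - predation_rate * prey * predators
        d_pred = conversion_rate * prey * predators - predator_death * predators
        # Population sizes cannot become negative.
        prey = max(prey + dt * d_prey, 0.0)
        predators = max(predators + dt * d_pred, 0.0)
        rows.append((step * dt, prey, predators))
    return rows


# Hypothetical start conditions and one modified run, analogous to comparing
# a simulation outcome with the lighter-colored initial graphs.
baseline = simulate_predator_prey(40.0, 9.0, 1.0, 0.1, 0.02, 0.5)
fewer_pike = simulate_predator_prey(40.0, 4.0, 1.0, 0.1, 0.02, 0.5)
```

Running the function twice with one changed parameter mirrors the learner's task of manipulating a parameter and comparing the new curves with the start conditions.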

3.2.2. Instructional Support

The independent variables of the study are the instructional support for data interpretation and for self-regulation.

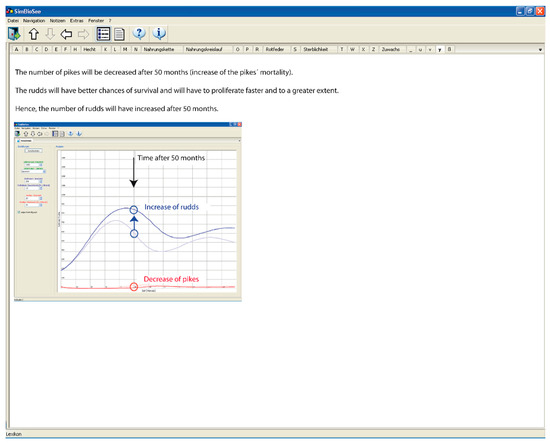

Support for data interpretation: Learners were either (a) asked to describe and scientifically interpret the simulation outcome [78] or (b) received a description and biological interpretation of the computer program generated simulation outcome [77]. In the condition in which participants were requested to generate a solution (GeS), learners were asked to write a description and interpretation of their own simulation outcome on their assignment (see Appendix A). In the given solution condition (GiS), a description and a biological interpretation of the simulation outcome appeared on the computer screen after learners conducted their computer-simulated experiment (see Figure 2). Students received no instructional support for data interpretation in the no solution condition (NS).

Figure 2.

Screenshot of a description and biological interpretation of a simulation outcome as an instructional intervention for data interpretation given by the computer program ‘SimBioSee’ (GiS).

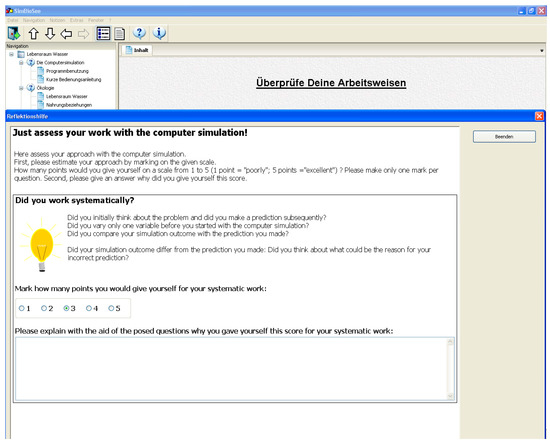

Support for self-regulation: Learners were requested to assess and to reflect on their own inquiry. They did so with the help of a reflective assessment tool integrated in a separate section of the computer program ‘SimBioSee’. The assessment tool was presented after learners had completed their assignments. Learners were first asked to assess their own inquiry on a five-point scale. Questions referring to the different phases of the inquiry cycle were used to facilitate learners’ self-assessment. Afterwards, learners were requested to explain their assessment in a text box in the reflective assessment tool (see Figure 3). Half of the students received this kind of instructional support for self-regulation (R); the others received no instructional support for self-regulation (NR).

Figure 3.

Screenshot of the reflective assessment tool as instructional support for self-regulation (R). After learners had conducted all computer-simulated experiments, they were asked to assess their own inquiry on a five-point scale. Subsequently, learners were requested to explain their score based on their inquiry and why this score was appropriate.

The students’ intuitive knowledge acquisition was the dependent variable in the study and was operationalized by means of the computer-based intuitive knowledge test, the ‘SPEED-test’.

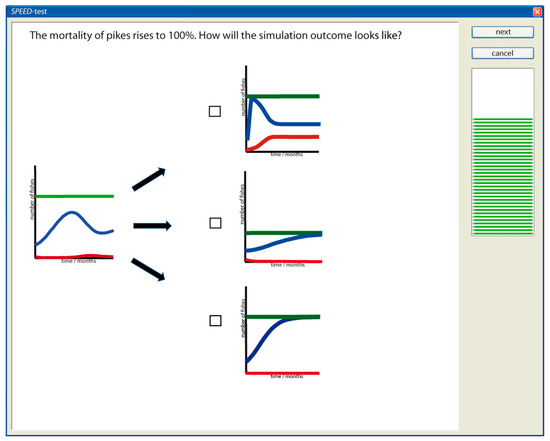

3.2.3. Intuitive Knowledge Test: ‘SPEED-test’

Based on the definition of intuitive knowledge as ‘the quick perception of anticipated situations,’ the specific components were taken into account to develop the test instrument, ‘SPEED-test’ (cf. [8]). In total, the computer-based test instrument comprised eleven multiple-choice items. Each item could be answered after a learning session with the computer program. Figure 4 shows a sample item from the ‘SPEED-test’.

Figure 4.

Screenshot of the ‘SPEED-test’ sample item used in the study.

Each item of the ‘SPEED-test’ consisted of a question about a change of a value from an initial situation. The initial situation given in each item was displayed on the left side of the computer screen as an image containing a diagram. This diagram stayed the same for each item. Next to the initial situation, in the middle of the screen, the response part presented three possible (post)situations, from which the learner selected the anticipated one via mouse click. Each item contained one correct answer. The initial situation and the three possible outcomes were displayed as simplified simulation graphs of the computer simulation ‘SimBioSee’ with its two relevant curves showing the number of fish as well as the line illustrating the biotope-carrying capacity. In addition, a running time scale displayed on the right side of the computer screen was used to create time pressure and to encourage learners to answer as quickly as possible. Learners had a maximum of 20 s to answer an item. If a learner did not answer an item within this time span, the next item was displayed on the computer screen automatically. If a learner could not answer an item, a ‘next’ button located on the upper right side of the computer screen allowed the learner to continue to the next item. Before learners started the ‘SPEED-test’, they were introduced to the program and had the chance to practice the interaction with the test instrument and the item format with practice items. These practice items were similar to the items for assessing intuitive knowledge, but their results were not taken into account.

For the data analysis, the ‘SPEED-test’ recorded log files storing the learners’ answers to each item. After the learner had completed the ‘SPEED-test’, the computer program automatically reported the number of correct answers via a pop-up window without indicating which items had been answered correctly. The ‘SPEED-test’ pre-test and post-test comprised the same items.
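The scoring of such log files can be sketched as follows. The log format is not described in the text, so the `Response` record, the `score` function, and the answer key are hypothetical. The sketch also assumes that items left unanswered (skipped or timed out) count as incorrect, which the text does not state explicitly.

```python
from dataclasses import dataclass
from typing import Optional

TIME_LIMIT_S = 20.0  # maximum answer time per item, as stated in the text


@dataclass
class Response:
    item_id: int
    chosen: Optional[int]  # index of the clicked option (0-2); None if skipped or timed out
    seconds: float         # elapsed time when the item was left


def score(responses, answer_key):
    """Count correct answers; unanswered or late items score zero (assumption)."""
    correct = 0
    for r in responses:
        answered_in_time = r.chosen is not None and r.seconds <= TIME_LIMIT_S
        if answered_in_time and r.chosen == answer_key[r.item_id]:
            correct += 1
    return correct


# Hypothetical three-item example; the actual test comprised eleven items.
answer_key = {1: 0, 2: 2, 3: 1}
pre = [Response(1, 0, 5.2), Response(2, 1, 8.0), Response(3, None, 20.0)]
post = [Response(1, 0, 3.1), Response(2, 2, 6.4), Response(3, 1, 12.9)]

# Because pre-test and post-test use the same items, the gain is a simple difference.
gain = score(post, answer_key) - score(pre, answer_key)
```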

3.3. Procedure

The study was implemented during regular science lessons in six different school classes. Before learning with the computer program ‘SimBioSee’ during the session, every participant had to complete the ‘SPEED-(pre)test’, which comprised items to assess the learners’ prior intuitive knowledge. After answering an item, learners obtained the next item automatically and could not go back to a previously answered item. During the learning phase, each student used one laptop to work on four specific assignments for approximately seventy minutes. Participants had to follow a POE strategy to solve the assignments using the computer simulation. At the beginning of each assignment, learners were required to predict possible consequences of particular external influences on a water ecosystem. Subsequently, the students were required to compare the simulation outcomes with their predictions after completing the computer-simulated experiment. Depending on the treatment condition, descriptions and a biological interpretation of the simulation outcomes were used to extend each assignment at the end of the experiment.

After that, every student completed the ‘SPEED-(post)test’. All items of the ‘SPEED-test’ could be answered using the computer simulations. Students required approximately five minutes to complete the ‘SPEED-test’. The class sessions lasted no more than ninety minutes.

3.3.1. Assignments

Four concrete assignments were completed with the aid of the computer simulation in ‘SimBioSee’. The basic frame of each paper assignment was aligned with a POE strategy [61]. The content of an assignment was additionally shown in the bottom left section of the computer screen (see Figure 1). Appendix A displays an assignment example.

3.3.2. Design

The study was based on a 3 × 2 factorial design. Groups were formed at the class level, as students required short tutorials to work correctly with the instructional interventions. Namely, students asked to describe, interpret, and reflect on their own simulation outcomes received a more detailed orientation than, for example, students who obtained the description and interpretation from ‘SimBioSee’. Various instructional interventions for data interpretation and self-regulation were combined to create six experimental conditions, including one control group in which learners received neither instructional support for data interpretation nor instructional support for self-regulation (see Table 1). The combination of instructional interventions for data interpretation and self-regulation differed for each of the six groups. The biology teacher and the examiner were present while participants worked on the assignments during science class in their own classrooms. The identical laptops were provided by the Leibniz Institute for Science and Mathematics Education. The computer program ‘SimBioSee’ was the same in each condition except for the examined instructional interventions. Learners did not communicate with each other during the learning session. Participants were equally distributed among the six research conditions for both testing time points; a chi-square test produced no significant differences between the number of participants in the different groups, χ²(2) = 1.32, ns.
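The reported two degrees of freedom are consistent with a Pearson chi-square test of independence on the 3 × 2 table of group sizes, since (3 − 1)(2 − 1) = 2; whether the test was computed in exactly this way is an assumption. The sketch below shows that computation. The counts are hypothetical placeholders chosen only to sum to N = 117 and are not the study’s data. For df = 2 the upper-tail probability has the closed form exp(−χ²/2), which gives p ≈ 0.52 for the reported χ² = 1.32.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def p_value_df2(stat):
    """Upper-tail p-value of the chi-square distribution; valid for df = 2 only."""
    return math.exp(-stat / 2)


# HYPOTHETICAL group sizes (rows: NS, GiS, GeS; columns: NR, R), not the study's data.
counts = [[18, 19], [17, 22], [21, 20]]
stat, df = chi_square_independence(counts)

# p-value implied by the statistic reported in the text.
p_reported = p_value_df2(1.32)
```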

Table 1.

Participant distribution in control (NR/NS) and experimental conditions.

4. Results

Intuitive knowledge gain was analyzed focusing on the instructional interventions of data interpretation and self-regulation. A multivariate repeated measures analysis of variance (MANOVA) for the dependent variable, intuitive knowledge, with the two instructional interventions as between-subjects factors and the testing time points as a within-subjects factor revealed a significant main effect of testing time points (F(1, 111) = 15.95, p < 0.001, part. η² = 0.13). This means that the learners showed significant knowledge gain from the pre-test to the post-test across the six conditions.

Furthermore, a significant interaction between instructional interventions was detected (F(2, 111) = 9.17, p < 0.001, part. η² = 0.14). Therefore, the conditions profited differently from the provided instructional interventions. Specifically, the participants in the GiS/R and GeS/NR conditions showed the highest knowledge gain. By comparison, learners in the GeS/R condition, who were requested to describe and interpret their own simulation outcome and were also required to reflect on their scientific discovery learning, showed the lowest knowledge gain. The participants in the GiS/R and GeS/NR conditions showed a significant knowledge gain from the pre-test to the post-test (see Table 2).

Table 2.

Intuitive knowledge gain from pre-test to post-test in the control and experimental conditions.

Analyses were subsequently carried out to examine whether the knowledge gain under the instructional support conditions was greater than that of the control group (NS/NR). Each of the five experimental conditions was compared with the control group in a separate analysis of variance (ANOVA). The condition with the given solution and reflective support (GiS/R; F(1, 39) = 4.99, p < 0.05, part. η² = 0.11) and the condition with the generated solution and no reflective support (GeS/NR; F(1, 37) = 5.58, p < 0.05, part. η² = 0.13) resulted in significantly better performance than the control group. No significant differences were found between the control group and the condition with no solution and reflective support (NS/R; F(1, 34) = 0.00, ns, part. η² = 0.00), the condition with the given solution and no reflective support (GiS/NR; F(1, 32) = 0.55, ns, part. η² = 0.02), or the condition with the generated solution and reflective support (GeS/R; F(1, 40) = 0.03, ns, part. η² = 0.00).

Furthermore, we analyzed whether the instructional interventions had any effect on knowledge acquisition when pre-test scores were taken into account as a covariate. Pre-test results were included because there were significant differences between the conditions (F(5, 111) = 2.36, p < 0.05, part. η² = 0.10). Hence, an analysis of covariance (ANCOVA) was performed with the post-test results as the dependent variable, the instructional support for data interpretation and self-regulation as the independent variables, and the pre-test results as a covariate. The ANCOVA revealed no significant main effects of instructional support for data interpretation (F(2, 110) = 0.73, ns, part. η² = 0.01) or instructional support for self-regulation (F(1, 110) = 0.01, ns, part. η² = 0.00). However, the interaction effect of instructional support for data interpretation and instructional support for self-regulation was significant (F(2, 110) = 7.52, p < 0.001, part. η² = 0.12).
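The effect sizes reported in this section can be cross-checked against the F statistics, because partial η² follows from F and its degrees of freedom: partial η² = (F · df_effect) / (F · df_effect + df_error). The short sketch below applies this standard identity to the values reported above; the function name and the labels are illustrative only.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (label, F, df_effect, df_error, reported partial eta squared), taken from the text.
reported = [
    ("MANOVA: testing time points", 15.95, 1, 111, 0.13),
    ("MANOVA: interaction", 9.17, 2, 111, 0.14),
    ("ANOVA: GiS/R vs. control", 4.99, 1, 39, 0.11),
    ("ANOVA: GeS/NR vs. control", 5.58, 1, 37, 0.13),
    ("ANOVA: NS/R vs. control", 0.00, 1, 34, 0.00),
    ("ANOVA: GiS/NR vs. control", 0.55, 1, 32, 0.02),
    ("ANOVA: GeS/R vs. control", 0.03, 1, 40, 0.00),
    ("Pre-test differences", 2.36, 5, 111, 0.10),
    ("ANCOVA: data interpretation", 0.73, 2, 110, 0.01),
    ("ANCOVA: self-regulation", 0.01, 1, 110, 0.00),
    ("ANCOVA: interaction", 7.52, 2, 110, 0.12),
]

for label, f_value, df_effect, df_error, eta_reported in reported:
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: computed {eta:.2f}, reported {eta_reported:.2f}")
```

Rounded to two decimals, each computed value agrees with the reported one, for example 15.95 / (15.95 + 111) ≈ 0.13 for the main effect of testing time points.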

5. Discussion

The study examined the effects of specific instructional support for data interpretation and self-regulation on intuitive knowledge acquisition when learning with computer simulations in a biological context. Based on the presumption that learning with computer simulations requires instructional support, it was assumed that computer-simulation-based learning leads to intuitive knowledge acquisition [2,7,42].

In general, and irrespective of instructional support for data interpretation and self-regulation, significant intuitive knowledge gain was observed after the learning session with ‘SimBioSee’, as predicted by Hypothesis 1 (interacting with the computer simulation would lead to intuitive knowledge acquisition). Intuitive knowledge gain may be ascribed to the well-structured design of the computer program with the computer simulations, which can be characterized as a rich environment [44], enabling learners to extract relevant ecological fundamentals. These fundamentals may provide a basis for acquiring intuitive knowledge [81]. Furthermore, learners in all experimental conditions and the control group were given the task of working on specific assignments during the inquiry cycle. By working on these assignments, learners focused on acquiring important fundamentals [60] on the topic of water ecology. The ‘SPEED-test’ was used to check the fundamentals that participants had discovered through the computer simulation and assignments. Consequently, one can speculate that working on the assignments with the computer simulations had a positive impact on intuitive knowledge acquisition.

The study’s outcomes revealed differential effects of the instructional interventions for data interpretation and self-regulation on intuitive knowledge acquisition. Hypothesis 2 (supporting scientific discovery learning with particular instructional interventions for data interpretation or self-regulation would lead to a greater intuitive knowledge gain than without this kind of instructional support) was partially supported: a positive impact of instructional support on intuitive knowledge acquisition was verified for at least one experimental condition. Describing and scientifically interpreting simulation outcomes as an instructional intervention for data interpretation turned out to be effective for intuitive knowledge acquisition. Concerning knowledge acquisition, Moreno and Mayer [74] reported similar findings, showing the effectiveness of justifying one’s own simulation outcomes. Furthermore, requesting learners to explain the simulation outcomes after they had conducted a computer-simulated experiment was an instructional support method leading to significantly improved learning outcomes in the transfer task. Reviewing 19 studies on learning mathematics and computer science, Webb [85] showed that students’ giving explanations has positive effects on achievement, whereas merely receiving explanations has few positive effects on learning outcomes. Self-explanations can be evoked through describing and scientifically interpreting one’s own simulation outcomes. Chi and Bassok [86] and Chi et al. [87] named this phenomenon ‘the self-explanation effect’, which has been shown to lead to successful learning outcomes. Wong, Lawson and Keeves [88] found positive effects of eliciting self-explanations on conceptual knowledge acquisition about a geometric theorem. The self-explanation effect is regarded as an active knowledge-constructing process [87,88,89].
Generating explanations through describing and interpreting one’s own simulation outcomes creates a deeper understanding and results in higher learning outcomes, as new information is suitably embedded into the structures of prior knowledge. Hence, it is supposed that describing and interpreting one’s own simulation outcomes elicits self-explanations. These self-explanations especially stimulate learners’ cognitive processes during learning with computer simulations, and this may support intuitive knowledge acquisition. The self-explanation effect could explain why describing and interpreting one’s own simulation outcomes leads to higher intuitive knowledge gain than merely receiving the simulation outcomes from the computer program, only being requested to reflect, or receiving no support.

Concerning Hypothesis 3 (cumulative effects on intuitive knowledge gain could be shown using a combination of instructional interventions for data interpretation and self-regulation), a cumulative effect of the tested instructional interventions for data interpretation and for self-regulation was found in one experimental condition. Combining instructional support for data interpretation and self-regulation in a specific manner led to higher intuitive knowledge gain than supporting the learners with merely one of these interventions during the learning session. Therefore, Hypothesis 3 was partially supported. Learners who received the simulation outcomes as instructional support for data interpretation and were also requested to reflect on their own simulation outcomes gained significant intuitive knowledge when learning with the computer simulations. In this regard, it would seem that this particular combination of instructional support fosters intuitive knowledge acquisition in the discovery environment more effectively than receiving only one or none of the tested instructional interventions.

Supporting learners only with the correct simulation outcomes given by the computer program as instructional support for data interpretation appeared not to be beneficial for intuitive knowledge acquisition. However, the additional request to reflect on the learner’s own simulation outcomes and on their own inquiries during the learning processes turned out to be effective for intuitive knowledge acquisition. By reflecting on their own inquiries, learners were prompted to become aware of their own learning processes [90]. In addition to receiving the correct simulation outcomes, this might have a positive impact on intuitive knowledge acquisition. Hence, receiving the correct simulation outcome after conducting the related experiment and additionally reflecting on the simulation outcomes proved to be an effective combination of instructional interventions. Therefore, a causal interrelationship can be assumed between intuitive knowledge gain and the repeated requests to engage with the given simulation outcome (displayed as simplified formats of given situations) combined with reflection on one’s own inquiry. Learners who received the correct solution from the computer program and who were additionally required to reflect were requested to engage with the related simulation outcome at least three times. Besides studying their own simulation results, learners also obtained the opportunity to receive the correct simulation outcomes and were requested to reflect on them. In this regard, the displayed format of a given situation plays a crucial role in intuitive knowledge acquisition [8,26,51]. Thus, reduced graphical representations with possible simulation outcomes were used in the ‘SPEED-test’. Learners are required to select and to organize information into coherent representations and to integrate this information into already existing knowledge [91].
By receiving the correct simulation outcomes, learners’ cognitive processes for selecting relevant information during the learning processes can be relieved, as the learners are not required to find a solution on their own. Instead of searching for a correct solution, learners can use their cognitive resources to understand the solution’s steps [92]. Presumably, the learners’ cognitive processes can be focused on relevant information [74] and their selection is supported by receiving the correct simulation outcome. The comparatively high intuitive knowledge gain of learners who received the correct simulation outcomes and who were also asked to reflect may be grounded in the learners’ cognitive processes being focused on relevant information throughout these particular instructional interventions. Consequently, a causal relationship can be assumed between a given simulation outcome, the support for learners’ cognitive processes with relevant information about the simulation outcome, the additional request to reflect, and a resulting intuitive knowledge gain.

In this study, it was shown that specific instructional interventions for data interpretation and self-regulation can effectively support intuitive knowledge acquisition when learning with a scientific computer simulation for only one learning session. Furthermore, intuitive knowledge acquisition can easily be tested by the ‘SPEED-test’. This point may be important for science teachers when implementing computer-based science learning during their science lessons in school.

However, the study provides no evidence about the exact time it takes to acquire intuitive knowledge from widely varied experiences of a phenomenon [21,22]. According to our study results, only one learning session about a new biological concept supported with particular instructional interventions seems to be sufficient for acquiring intuitive knowledge. The long-term effects of these instructional interventions should be explored in further research.

In general, our study outcomes could be important for the field of artificial intelligence, and for machine learning specifically (cf. [93,94,95]), as intuitive knowledge can initiate subsequent learning processes [11,33]. Here, the expertise from science education could be useful because the computer sciences currently seem to lack adequate expertise [93].

6. Conclusions

As mentioned in previous literature, intuitive knowledge can be considered to be crucial for further learning processes and conceptual knowledge construction. To this effect, intuitive knowledge and conceptual understanding can be regarded as two intertwined aspects [22], as prior knowledge could be the basis for intuitive knowledge acquisition [81]. The tested instructional interventions for data interpretation and self-regulation showed different effects on learners’ intuitive knowledge acquisition. Therefore, it cannot be stated clearly and in a generalized way whether one specific instructional intervention involving the interpretation of data, an instructional intervention for self-regulation, or a combination of both fosters adequate intuitive knowledge acquisition during one learning session of computer-simulated experiments, as predicted by Hypotheses 2 and 3. It seems that a rather specific instructional intervention or a certain combination of two interventions is effective as instructional support for intuitive knowledge acquisition. In this regard, giving learners the correct simulation outcomes and letting them describe and interpret their own simulation outcomes in a biological sense seems very useful for intuitive knowledge acquisition. However, the results indicate that another combination of instructional interventions consisting of generating a solution and additionally reflecting on one’s own simulation outcomes does not lead to effective intuitive knowledge acquisition. This specific combination even seems to constrain intuitive knowledge acquisition. Further research should investigate why a specific combination of instructional interventions fosters intuitive knowledge acquisition, whereas another combination seems to constrain it. Hence, one should choose an appropriate instructional design to support effective intuitive knowledge acquisition.

Author Contributions

The project’s basic idea and objectives were developed by U.H. and D.U. All three authors conceived and designed the experiments; M.E. performed the experiments, analyzed the data and wrote the first version of the manuscript that was further developed and edited equally by all three authors.

Funding

This research was part of a project funded by the German Research Foundation (DFG), Germany (grant No. HA 2705/3-1).

Acknowledgments

Our thanks go to Olaf Conrad from the Department of Earth Sciences, University of Hamburg. His support and his technical contribution to programming the framework of the computer program are greatly appreciated. Without his work, it would have been impossible to conduct this study.

Conflicts of Interest

The authors declare no conflict of interest. The funding sponsor did not contribute to the design of the study; to the collection, analysis, or interpretation of data; to the writing of the manuscript; or to the decision to publish the results.

Appendix A

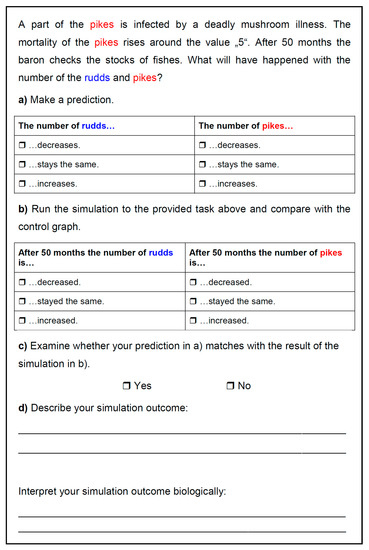

Figure A1.

Example of a Concrete Assignment to Stimulate the Generation of a Solution (GeS).

References

- Bruner, J.S. The act of discovery. Harv. Educ. Rev. 1961, 31, 21–32.

- De Jong, T.; van Joolingen, W.R. Scientific discovery learning with computer simulations of conceptual domains. Rev. Educ. Res. 1998, 68, 179–202.

- Kistner, S.; Vollmeyer, R.; Burns, B.D.; Kortenkamp, U. Model development in scientific discovery learning with a computer-based physics task. Comput. Hum. Behav. 2016, 59, 446–455.

- Klahr, D.; Dunbar, K. Dual space search during scientific reasoning. Cogn. Sci. 1988, 12, 1–48.

- Künsting, J.; Kempf, J.; Wirth, J. Enhancing scientific discovery learning through metacognitive support. Contemp. Educ. Psychol. 2013, 38, 349–360.

- De Jong, T.; van Joolingen, W.; Scott, D.; de Hoog, R.; Lapied, L.; Valent, R. SMISLE: System for multimedia integrated simulation learning environments. In Design and Production of Multimedia and Simulation-Based Learning Material; de Jong, T., Sarti, L., Eds.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1994; pp. 133–165.

- Swaak, J.; de Jong, T. Measuring intuitive knowledge in science: The development of the what-if test. Stud. Educ. Eval. 1996, 22, 341–362.

- Swaak, J.; de Jong, T. Discovery simulations and the assessment of intuitive knowledge. J. Comput. Assist. Learn. 2001, 17, 284–294.

- Rosenblatt, A.D.; Thickstun, J.T. Intuition and Consciousness. Psychoanal. Q. 1994, 63, 696–714.

- Betsch, T. The nature of intuition and its neglect in research on judgment and decision making. In Intuition in Judgment and Decision Making; Plessner, H., Betsch, C., Betsch, T., Eds.; Lawrence Erlbaum Associates: New York, NY, USA, 2008; pp. 3–22. ISBN 978-0805857412.

- Sadler-Smith, E.; Shefy, E. The intuitive executive: Understanding and applying ‘gut feel’ in decision-making. Acad. Manag. Rev. 2004, 18, 76–91.

- Sherin, B. Common sense clarified: The role of intuitive knowledge in physics problem solving. J. Res. Sci. Teach. 2006, 43, 535–555.

- De Jong, T.; van Joolingen, W.R.; Swaak, J.; Veermans, K.; Limbach, R.; King, S.; Gureghian, D. Self-directed learning in simulation-based discovery environments. J. Comput. Assist. Learn. 1998, 14, 235–246.

- Kirschner, P.A.; Sweller, J.; Clark, R.E. Why minimal guidance during instruction does not work: An analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educ. Psychol. 2006, 41, 75–86.

- Manlove, S.; Lazonder, A.W.; de Jong, T. Regulative support for collaborative scientific inquiry learning. J. Comput. Assist. Learn. 2006, 22, 87–98.

- Rey, G.D. Instructional advice, time advice and learning questions in computer simulations. Australas. J. Educ. Technol. 2010, 26, 675–689.

- Yaman, M.; Nerdel, C.; Bayrhuber, H. The effects of instructional support and learner interests when learning using computer simulations. Comput. Educ. 2008, 51, 1784–1794.

- Strack, F.; Deutsch, R. Reflective and impulsive determinants of social behavior. Personal. Soc. Psychol. Rev. 2004, 8, 220–247.

- Kahneman, D. Maps of Bounded Rationality: Psychology for Behavioral Economics. Am. Econ. Rev. 2003, 93, 1449–1475.

- Dane, E.; Pratt, M.G. Exploring intuition and its role in managerial decision making. Acad. Manag. Rev. 2007, 32, 33–54.

- Fensham, P.J.; Marton, F. What has happened to intuition in science education? Res. Sci. Educ. 1991, 22, 114–122.

- Lindström, B.; Marton, F.; Ottoson, T. Computer simulation as a tool for developing intuitive and conceptual understanding in mechanics. Comput. Hum. Behav. 1993, 9, 263–281.

- Blume, B.D.; Covin, J.G. Attributions to intuitions in the venture founding process: Do entrepreneurs actually use intuition or just say that they do? J. Bus. Ventur. 2011, 26, 137–151.

- Cheng, M.-F.; Brown, D.E. Conceptual resources in self-developed explanatory models: The importance of integrating conscious and intuitive knowledge. Int. J. Sci. Educ. 2010, 32, 2367–2392.

- Chudnoff, E. Intuitive knowledge. Philos. Stud. 2013, 162, 359–378.

- Fischbein, E. Intuition in Science and Mathematics: An Educational Approach; Reidel: Dordrecht, The Netherlands, 1987.

- Holzinger, A.; Kickmeier-Rust, M.D.; Wassertheurer, S.; Hessinger, M. Learning performance with interactive simulations in medical education: Lessons learned from results of learning complex physiological models with the haemodynamics simulator. Comput. Educ. 2009, 52, 292–301.

- Reber, R.; Ruch-Monachon, M.A.; Perrig, W.A. Decomposing intuitive components in a conceptual problem solving task. Conscious. Cognit. 2007, 16, 294–309.

- Brock, R. Intuition and insight: Two concepts that illuminate the tacit in science education. Stud. Sci. Educ. 2015, 51, 127–167.

- Policastro, E. Intuition. In Encyclopedia of Creativity; Runco, M.A., Pritzker, S.R., Eds.; Academic Press: San Diego, CA, USA, 1999; pp. 89–93. ISBN 9780080548500.

- Broadbent, D.E.; Fitzgerald, P.; Broadbent, M.H.P. Implicit and explicit knowledge in the control of complex systems. Br. J. Psychol. 1986, 77, 33–50.

- Sinclair, M.; Ashkanasy, N.M. Intuition: Myth of a decision-making tool? Manag. Learn. 2005, 36, 353–370.

- Holzinger, A.; Softic, S.; Stickel, C.; Ebner, M.; Debevc, M. Intuitive e-teaching by using combined hci devices: Experiences with wiimote applications. In Universal Access in Human-Computer Interaction. Applications and Services, Lecture Notes in Computer Science; Stephanidis, C., Ed.; Springer: Berlin, Germany, 2009; pp. 44–52. ISBN 3-642-02712-1.

- Hodgkinson, G.P.; Langan-Fox, J.; Sadler-Smith, E. Intuition: A fundamental bridging construct in the behavioural sciences. Br. J. Psychol. 2008, 99, 1–27.

- Westcott, M.R. Toward a Contemporary Psychology of Intuition; Holt, Rinehart & Winston: New York, NY, USA, 1968; ISBN 978-0030677304.

- Sweller, J.; van Merriënboer, J.J.G.; Paas, F.G.W.C. Cognitive Architecture and Instructional Design. Educ. Psychol. Rev. 1998, 10, 251–296.

- Rümmele, A. Intuitives Wissen [Intuitive knowledge]. In Kognitive Entwicklungspsychologie: Aktuelle Forschungsergebnisse [Cognitive Developmental Psychology: Current Research Results]; Rümmele, A., Pauen, S., Schwarzer, G., Eds.; Pabst Science Publishers: Lengerich, Germany, 1997; pp. 89–92.

- Krathwohl, D.R. A Revision of Bloom’s Taxonomy: An Overview. Theory Pract. 2002, 41, 212–218.

- Kolloffel, B.; Eysink, T.H.S.; de Jong, T. Comparing the effects of representational tools in collaborative and individual inquiry learning. Comput.-Support. Collab. Learn. 2011, 6, 223–251.

- De Jong, T.; Wilhelm, P.; Anjewierden, A. Inquiry and assessment: Future developments from a technological perspective. In Technology-Based Assessments for 21st Century Skills: Theoretical and Practical Implications from Modern Research; Mayrath, M.C., Clarke-Midura, J., Robinson, D.H., Schraw, G., Eds.; Information Age Publishing Inc.: Charlotte, NC, USA, 2012; pp. 249–266. ISBN 978-1-61735-632-2.

- Duma, G.M.; Mento, G.; Manari, T.; Martinelli, M.; Tressoldi, P. Driving with intuition: A preregistered study about the EEG anticipation of simulated random car accidents. PLoS ONE 2017, 12, e0170370.

- Swaak, J.; van Joolingen, W.R.; de Jong, T. Supporting simulation-based learning: The effects of model progression and assignments on definitional and intuitive knowledge. Learn. Instr. 1998, 8, 235–253.

- Thomas, R.; Hooper, E. Simulations: An opportunity we are missing. J. Res. Comput. Educ. 1991, 23, 497–513.

- Swaak, J.; de Jong, T.; van Joolingen, W.R. The effects of discovery learning and expository instruction on the acquisition of definitional and intuitive knowledge. J. Comput. Assist. Learn. 2004, 20, 225–234.

- Blake, C.; Scanlon, E. Reconsidering simulations in science education at a distance: Features of effective use. J. Comput. Assist. Learn. 2007, 23, 491–502.

- D’Angelo, C.; Rutstein, D.; Harris, C.; Bernard, R.; Borokhovski, E.; Haertel, G. Simulations for STEM Learning: Systematic Review and Meta-Analysis; SRI International: Menlo Park, CA, USA, 2014.

- Lindgren, R.; Tscholl, M.; Wang, S.; Johnson, E. Enhancing learning and engagement through embodied interaction within a mixed reality simulation. Comput. Educ. 2016, 95, 174–187.

- De Jong, T. Computer simulations: Technological advances in inquiry learning. Science 2006, 312, 532–533.

- Ryoo, K.L.; Linn, M.C. Can dynamic visualizations improve middle school students’ understanding of energy in photosynthesis? J. Res. Sci. Teach. 2012, 49, 218–243.

- Urhahne, D.; Prenzel, M.; von Davier, M.; Senkbeil, M.; Bleschke, M. Computereinsatz im naturwissenschaftlichen Unterricht – Ein Überblick über die pädagogisch-psychologischen Grundlagen und ihre Anwendung [Computer use in science education – An overview of the psychological and educational foundations and its applications]. Zeitschrift für Didaktik der Naturwissenschaften 2000, 6, 157–186.

- Swaak, J.; de Jong, T. Learner vs. system control in using online support for simulation-based discovery learning. Learn. Environ. Res. 2001, 4, 217–241.

- Van Joolingen, W.R.; de Jong, T.; Lazonder, A.W.; Savelsbergh, E.R.; Manlove, S. Co-Lab: Research and development of an online learning environment for collaborative scientific discovery learning. Comput. Hum. Behav. 2005, 21, 671–688.

- Leutner, D. Guided discovery learning with computer-based simulation games: Effects of adaptive and non-adaptive instructional support. Learn. Instr. 1993, 3, 113–132.

- Mayer, R.E. Should there be a three-strikes rule against pure discovery learning? The case for guided methods of instruction. Am. Psychol. 2004, 59, 14–19.

- Urhahne, D.; Harms, U. Instruktionale Unterstützung beim Lernen mit Computersimulationen [Instructional support for learning with computer simulations]. Unterrichtswissenschaft 2006, 34, 358–377.

- Van Joolingen, W.R.; de Jong, T.; Dimitrakopoulou, A. Issues in computer supported inquiry learning in science. J. Comput. Assist. Learn. 2007, 23, 111–119.

- Zhang, J.; Chen, Q.; Sun, Y.; Reid, D.J. Triple scheme of learning support design for scientific discovery learning based on computer simulation: Experimental research. J. Comput. Assist. Learn. 2004, 20, 269–282.

- Reid, D.J.; Zhang, J.; Chen, Q. Supporting scientific discovery learning in a simulation environment. J. Comput. Assist. Learn. 2003, 19, 9–20.

- Elshout, J.J.; Veenman, M.V.J. Relation between intellectual ability and working method as predictors of learning. J. Educ. Res. 1992, 85, 134–143.

- Vreman-de Olde, C.; de Jong, T. Scaffolding learners in designing investigation assignments for a computer simulation. J. Comput. Assist. Learn. 2006, 22, 63–73.

- White, R.T.; Gunstone, R.F. Probing Understanding; Falmer Press: London, UK, 1992; ISBN 978-0750700481.

- Atkinson, R.K.; Derry, S.J.; Renkl, A.; Wortham, D. Learning from examples: Instructional principles from the worked examples research. Rev. Educ. Res. 2000, 70, 181–214.

- Nerdel, C.; Prechtl, H. Learning complex systems with simulations in science education. In Instructional Design for Effective and Enjoyable Computer-Supported Learning: Proceedings of the First Joint Meeting of the EARLI SIGs Instructional Design and Learning and Instruction with Computers; Gerjets, P., Kirschner, P.A., Elen, J., Joiner, R., Eds.; Knowledge Media Research Center: Tuebingen, Germany, 2004; pp. 160–171. Available online: http://www.iwm-kmrc.de/workshops/SIM2004/pdf_files/Nerdel_et_al.pdf (accessed on 15 February 2018).

- Neubrand, C.; Borzikowsky, C.; Harms, U. Adaptive prompts for learning Evolution with worked examples – Highlighting the students between the “novices” and the “experts” in a classroom. Int. J. Environ. Sci. Educ. 2016, 11, 6774–6795.

- Neubrand, C.; Harms, U. Tackling the difficulties in learning evolution: Effects of adaptive self-explanation prompts. J. Biol. Educ. 2017, 51, 336–348.

- Renkl, A. The worked-out examples principle in multimedia learning. In The Cambridge Handbook of Multimedia Learning; Mayer, R., Ed.; Cambridge University Press: Cambridge, UK, 2005; pp. 229–246. ISBN 978-1107610316.

- Renkl, A.; Atkinson, R.K. Learning from examples: Fostering self-explanations in computer-based learning environments. Interact. Learn. Environ. 2002, 10, 105–119.

- Mulder, Y.; Lazonder, A.W.; de Jong, T. Simulation-based inquiry learning and computer modelling: Pitfalls and potentials. Simul. Gaming 2015, 46, 322–347.

- Biesinger, K.; Crippen, K. The effects of feedback protocol on self-regulated learning in a web-based worked example learning environment. Comput. Educ. 2010, 55, 1470–1482.

- Kalyuga, S.; Chandler, P.; Tuovinen, J.; Sweller, J. When problem solving is superior to studying worked examples. J. Educ. Psychol. 2001, 93, 579–588.

- Pedaste, M.; Mäeots, M.; Siiman, L.A.; de Jong, T.; van Riesen, S.A.N.; Kamp, E.T.; Manoli, C.C.; Zacharia, Z.C.; Tsourlidaki, E. Phases of inquiry-based learning: Definitions and the inquiry cycle. Educ. Res. Rev. 2015, 14, 47–61.

- White, B. Computer microworlds and scientific inquiry: An alternative approach to science education. In International Handbook of Science Education; Fraser, B., Tobin, K., Eds.; Kluwer: Dordrecht, The Netherlands, 1998; pp. 295–314. ISBN 978-0-7923-3531-3.

- Lewis, E.L.; Stern, J.L.; Linn, M.C. The effect of computer simulations on introductory thermodynamics understanding. Educ. Technol. 1993, 33, 45–58.

- Moreno, R.; Mayer, R.E. Role of guidance, reflection and interactivity in an agent-based multimedia game. J. Educ. Psychol. 2005, 97, 117–128.

- White, B.; Frederiksen, J.R. Inquiry, modeling, and metacognition: Making science accessible to all students. Cognit. Instr. 1998, 16, 3–118.

- White, B.; Shimoda, T.A.; Frederiksen, J.R. Enabling students to construct theories of collaborative inquiry and reflective learning: Computer support for metacognitive development. Int. J. Artif. Intell. Educ. 1999, 10, 151–182.

- Moreno, R. Decreasing cognitive load for novice students: Effects of explanatory versus corrective feedback in discovery-based multimedia. Instr. Sci. 2004, 32, 99–113.

- Lin, X.; Lehman, J.D. Supporting learning of variable control in a computer-based biology environment: Effects of prompting college students to reflect on their thinking. J. Res. Sci. Teach. 1999, 36, 837–858.

- Zimmerman, B.J.; Bonner, S.; Kovach, R. Developing Self-Regulated Learners: Beyond Achievement to Self-Efficacy; American Psychological Association: Washington, DC, USA, 1996; ISBN 978-1-55798-392-3.

- Zimmerman, B.J.; Tsikalas, K.E. Can computer-based learning environments (CBLEs) be used as self-regulatory tools to enhance learning? Educ. Psychol. 2005, 40, 267–271.

- Wichmann, A.; Timpe, S. Can dynamic visualizations with variable control enhance the acquisition of intuitive knowledge? J. Sci. Educ. Technol. 2015, 24, 709–720.

- NGSS Lead States. Next Generation Science Standards: For States, By States; The National Academies Press: Washington, DC, USA, 2013; ISBN 978-0-309-27227-8.

- Finley, F.N.; Stewart, J.; Yarroch, W.L. Teachers’ perceptions of important and difficult science content. Sci. Educ. 1982, 66, 531–538.

- Eckhardt, M.; Urhahne, D.; Conrad, O.; Harms, U. How effective is instructional support for learning with computer simulations? Instr. Sci. 2013, 41, 105–124.

- Webb, N.M. Peer interaction and learning in small groups. Int. J. Educ. Res. 1989, 13, 21–39.

- Chi, M.T.H.; Bassok, M. Learning from examples via self-explanations. In Knowing, Learning and Instruction: Essays in Honor of Robert Glaser; Resnick, L.B., Ed.; Lawrence Erlbaum Associates Inc.: Hillsdale, NJ, USA, 1989; pp. 251–282.

- Chi, M.T.H.; de Leeuw, N.; Chiu, M.H.; LaVancher, C. Eliciting self-explanations improves understanding. Cognit. Sci. 1994, 18, 439–477.

- Wong, R.M.F.; Lawson, M.J.; Keeves, J. The effects of self-explanation training on students’ problem solving in high-school mathematics. Learn. Instr. 2002, 12, 233–262.

- Tajika, H.; Nakatsu, N.; Nozaki, H.; Neumann, E.; Maruno, S. Effects of self-explanation as a metacognitive strategy for solving mathematical word problems. Jpn. Psychol. Res. 2007, 49, 222–233.

- Van den Boom, G.; Paas, F.; van Merriënboer, J.; van Gog, T. Reflection prompts and tutor feedback in a web-based learning environment: Effects on students’ self-regulated learning competence. Comput. Hum. Behav. 2004, 20, 551–567.

- Mayer, R.E. Multimedia Learning; Cambridge University Press: Cambridge, UK, 2001; ISBN 978-0521787499.

- Eysink, T.H.S.; de Jong, T. Does instructional approach matter? How elaboration plays a crucial role in multimedia learning. J. Learn. Sci. 2012, 21, 583–625.

- Holzinger, A.; Dehmer, M.; Jurisica, I. Knowledge discovery and interactive data mining in bioinformatics – state-of-the-art, future challenges and research directions. BMC Bioinform. 2014, 15 (Suppl. 6), I1.

- Holzinger, A.; Biemann, C.; Pattichis, C.S.; Kell, D.B. What do we need to build explainable AI systems for the medical domain? arXiv, 2017; arXiv:1712.09923.

- Holzinger, A.; Plass, M.; Holzinger, K.; Crisan, G.C.; Pintea, C.-M.; Palade, V. A glass-box interactive machine learning approach for solving NP-hard problems with the human-in-the-loop. arXiv, 2017; arXiv:1708.01104.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).