1. Introduction

The 2001 reauthorization of the Elementary and Secondary Education Act (No Child Left Behind, NCLB) formalized a movement to link leader, teacher, and school success to measures of student achievement. The catalyst of this movement can be attributed to the Cold War with the Soviet Union in the 1970s and 1980s and the economic race to keep pace with Asia (specifically Japan) in the 1990s. Today, global competitors (e.g., China and India) have prompted even more efforts to keep American students prepared for a flattened world [1]. As a result, today’s metric for assessing school effectiveness is student achievement, which merges assessments, data, and accountability. However, data have the potential to be valuable in other ways. To begin, data can provide feedback loops that help teachers refine their pedagogical practices and thus improve student learning. Additionally, data can be used to uncover inequities. The NCLB legislation has done both. In particular, NCLB has proved valuable in uncovering (through disaggregated achievement data) inequalities in learning among sub-groups. How NCLB has affected school-level educators’ leadership and pedagogy is a matter of debate and a focus of this study.

Increased accountability is one way the government is coping with increased global competition and other pressures. Accountability is manifested in the form of high-stakes testing, common core standards, and the surge in the number of charter schools and other educational alternatives. Federal and state governing agencies, along with the private corporate sector, joined to voice calls for a standard core curriculum and assessments that measure progress in such a curriculum. The curricular work was realized only in part. That is, as a result of the 1983 report A Nation at Risk, commissioned by the Secretary of Education, the demands placed on students’ curricular requirements rose dramatically in short order (e.g., high school students around the country were required to take math, science, and foreign language classes). It would take another three decades before the common curricula would be realized: in 2010 the common core standards for mathematics and English were approved by 45 states (Haager and Vaughn, 2012), and common standards for social studies are currently underway. Prior to the common core there was no set measure of student achievement on a national comparative basis. Common core standards provide consistent assessment measures with which to compare student achievement nationally. Scores from common core assessments will then be used as data toward assessing the quality of teaching and learning occurring in schools.

This educational reform effort manifested in the acceleration of assessment systems in the 1990s. In that decade, state governors and national business leaders convened for separate initiatives to press for the improvement of education in the name of economic competition. Both initiatives called for more data to be used to monitor school effectiveness in general and, more specifically, individual student achievement. In 2001, No Child Left Behind (NCLB) was a historic school reform measure enacted at the federal level to create a new normative assessment practice in K-12 schools [2]. NCLB created specific achievement targets and mandated specific measures to monitor growth toward the targets. Moreover, sanctions were developed as enforcement for educators failing to meet the student achievement targets. As a result, schools have been introduced to the world of assessments: formative and summative assessments, diagnostic assessments, data warehouses, and data-driven decision-making, to name a few.

In spite of these new accountability measures, there is a widespread belief that the educational profession has underutilized the valuable commodity of student achievement data to inform how and how well students are learning. The mandates to use data left out the declarative (why), practical (how), and procedural (why) knowledge needed by district and school level educators. In 2002, the U.S. Department of Education unabashedly expressed its goal to “change education to make it an evidence-based field” building upon the belief that education has been operating “largely on the basis of ideology and professional consensus” and, “as such . . . is subject to fads and is incapable of cumulative progress that follows from the application of the scientific method” [3]. Popham stated that “given the significance of students’ test scores these days, teachers and administrators who choose to remain unaware of assessment’s key concepts are being dangerously naïve” [4]. Unfortunately, educators find purposefully using school data a difficult skill to master, despite increases in high stakes and ever increasing rates and measures of accountability [5,6,7,8,9,10,11,12,13].

Nevertheless, there continues to be a steady stream of data delivered to the schoolhouse door and a constant pressure to “use data.” As a result, schools have been characterized as placed in “the paradoxical situation of being both data rich and information poor” [14]. What do practices look like for current school educators? How do they perceive their uses of school data as mandated by federal law (NCLB) and district and school policy? How do school level leaders perceive the use of data? The aim of this study is to gain an understanding of how teachers and principals are using data. We chose teachers and principals as the units of analysis because they are in the crosshairs of both the mandates to use data and the associated sanctions. The use of data has only escalated since NCLB. For instance, there is a clear and present movement to use student achievement data to evaluate, reward, and punish teachers. Understanding how these school level educators have come to understand and use data is both timely and important.

Although the terms data and assessment have taken on a negative connotation due to the increased pressure created by high stakes testing, assessments can also be used to directly, immediately, and positively impact student learning. Yeh defines rapid formative assessment as “systems that provide formative feedback to students and teachers regarding student performance. Assessments are dubbed ‘rapid’ because they are provided two to five times weekly. When conducted to promote student growth and learning, assessment is aligned with strategically selected key intended outcomes, and integrated into daily instructional activities” [15].

2. What Are Data?

The challenge for building data use capacity and clarifying the ambiguity during associated activities stems from a lack of literacy with respect to the use of data in practice. There is confusion when referring to data, as different definitions, forms, and contexts for its use exist. Two broad categories of data that educators access daily are summative and formative. Summative assessment data are typically derived from end of the year assessments that monitor and record student achievement [16,17], and they provide a macro-level vantage point most useful for educators and policy makers at a distance from the classroom [18]. These data come from either criterion-referenced assessments (e.g., an NCLB approved state assessment) or norm-referenced assessments (e.g., TIMSS, Trends in International Mathematics and Science Study; PISA, Program for International Student Assessment; or NAEP, National Assessment of Educational Progress). Formative assessment data, by contrast, are used for “shaping student’s learning” [19]. These activities describe “a planned process in which assessment-elicited evidence of students' status is used by teachers to adjust their ongoing instructional procedures or by students to adjust their current learning tactics” [20]. In other words, summative data are autopsy data that are collected after the fact, while formative data are biopsy data that are still actionable. However, no two formative assessment systems are exactly alike. For example, Confrey and Kazak [21] contend that, while it was once thought that a singular learning trajectory existed for all students, variants of student trajectories exist that must be reconciled as teachers teach and students learn. Certain math assessments provide teachers vital insight into these individual learning trajectories. In the end, mathematical learning trajectories may “increase the likelihood of successful learning by defining a corridor of possible opportunities and constraints and recognizable typical patterns for use by teachers in conducting practice” [21]. As a result, logical progressions can be mapped to model student cognition. This gets at what students know (the content) and how they know it (the route they took to achieve competence).

Young and Kim stated that the “purposes that assessment data are supposed to serve and the occasions for using the data are not necessarily defined a priori; teachers choose how and when to use the information gathered through assessments” [22]. The knowledge management field has investigated the transformation of raw data into meaningful information in order to make knowledgeable decisions about one’s practice [7].

Militello and colleagues have conducted a series of in-depth case studies in order to analyze what data are used in schools, how they are used, and whether they are valid measures for each associated use. Results indicate that (1) many assessment systems broadly define the validity of their products, promising more than their psychometric properties can deliver, and (2) the use of many assessment systems is misguided, as the end-users are uninformed of the scientific characteristics of each assessment. To help school educators, Militello and colleagues created an Assessment Fit Principle [23]. The principle states that there must be a match between the purpose of the assessments (e.g., properties of assessment including validity) and the intended uses by school educators (e.g., lesson planning) [13,23,24]. Effective use of assessments for educational decision-making is predicated upon this fit.

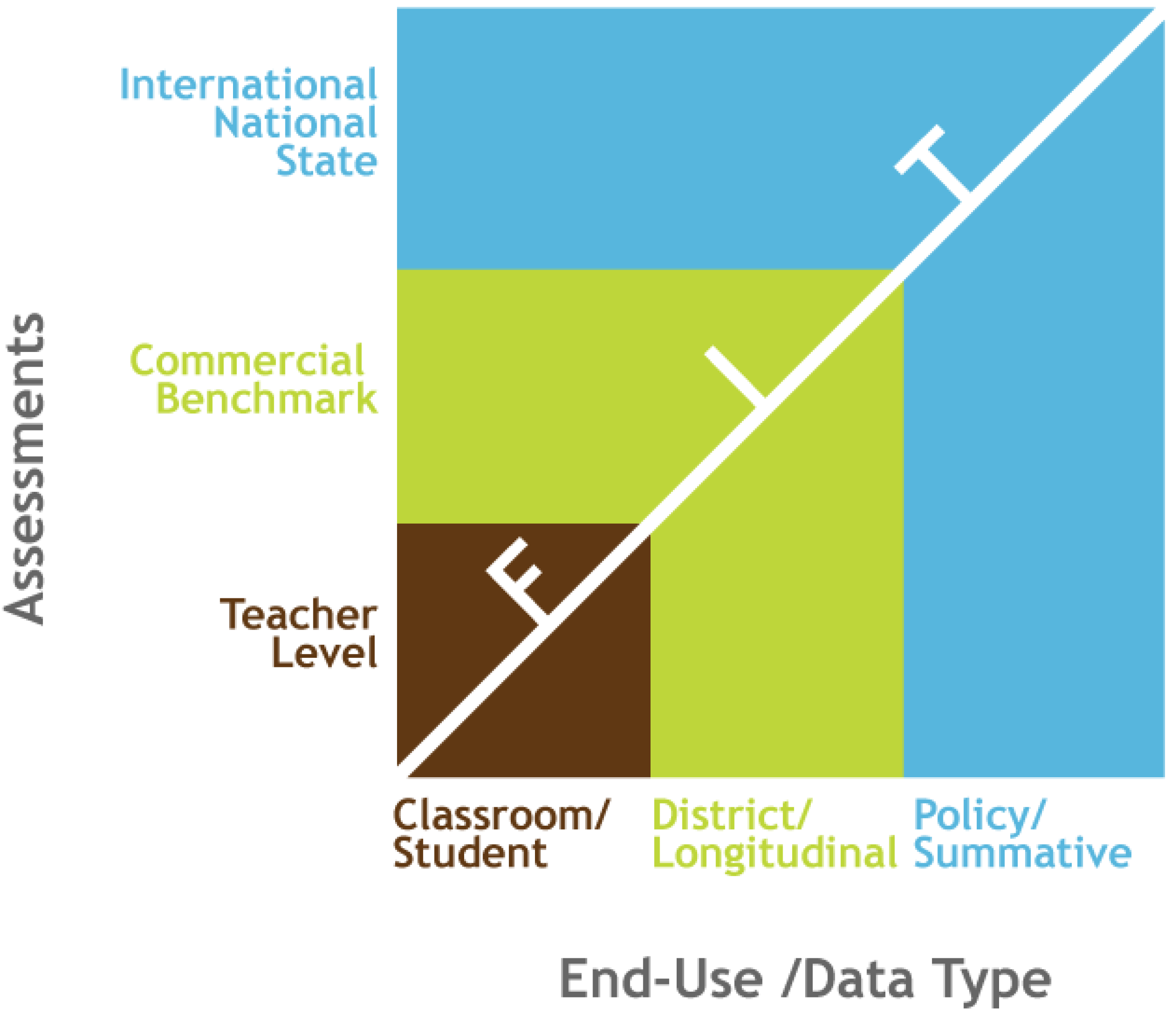

Figure 1 provides a visual representation of the concept of fit. The line of fit in the figure links specific types of data with particular end-users. This figure was developed from a series of empirical studies [13,23]. These studies documented the use of various assessment systems (e.g., national NAEP; state test; third party formative; district benchmark) and analyzed this use within the framework of the product’s validity. As a result, a qualitative “line of best fit” was hypothesized for these districts.

Figure 1. Assessment Fit Principle [23].


What emerged from this work were cognitive diagnostic assessments that provide teachers detailed information regarding how, and whether, students are learning. Leighton and Gierl define cognitive diagnostic assessment (CDA) as a means to “measure specific knowledge structures and processing skills in students so as to provide information about their cognitive strengths and weaknesses” [25]. “Fit for the classroom-level” resides at the diagnostic level in the assessment system axis. Diagnostic characteristics track student learning and knowledge at a fine-grained level, and at a faster rate than before [18]. When teachers receive cognitively diagnostic data in a timely fashion, they can change their teaching in response to the data. This allows data to be transformed from assessments of learning to assessments for learning [26,27,28]. That is, assessing for learning stipulates that assessment data fit classroom educators’ need for diagnostic-level and timely information about their students. Armed with relevant and timely data, educators who were formerly unable to efficiently operationalize the results of student data are now able to use data in their practice. Studies have supported the use of assessment for learning to impact student learning [29,30,31].

3. Promising Use of Data

There is a growing body of literature on the promise of data use. For example, the U.S. Department of Education stated, “Research shows that teachers who use student test performance to guide and improve teaching are more effective than teachers who do not use such information” [32]. Black and Wiliam extensively reviewed 250 studies to ascertain the influence of formative assessment on learning [33]. They describe impressive learning gains within the classroom, especially when formative feedback is at the teacher-student interaction level. Students taught by teachers who use assessment for learning achieved in six to seven months what otherwise took one year [28,33]. Shute’s meta-analysis of formative feedback revealed .40 to .80 SD gains in student learning. “Effective and useful feedback,” she argued, “depends on three things: (a) motive (the student needs it); (b) opportunity (the student receives it in time to use it); and (c) means (the student is able and willing to use it)” [34]. Finally, cost-effectiveness studies have always been the gold standard of research, second only to student outcomes. Yeh found that rapid formative assessments are 124 times more cost-effective than class size reduction [15].

Studies found that effective use of data in schools included multiple sets of data as well as collaboration, especially between teachers and principals [35]. Leadership has been a key ingredient to innovation and reform in general, but there is evidence that leadership has a pronounced effect on teachers’ use of data [14,36,37,38,39,40,41]. Massell also found that increased communication and knowledge provided by data appeared to be positively altering educator attitudes toward the school’s capabilities of educating some underperforming groups [42]. This evidence demonstrates some of the positive effects of data use on teachers’ instructional practice and school improvement. The extant educational literature has generated normative features of the meaningful, effective use of school data. Specific outcomes of using data have included:

Increases reliability and predictive validity of school improvement efforts [5,13,14];

Helps improve instructional practice [5,14,43,44,45];

Monitors teacher effectiveness [24,46];

Targets student needs for short-term intervention [15,24,37,43,47].

School leaders drive the success of their schools as they integrate appropriate data usage into shaping their vision. When schools have a clear vision, this encourages a data culture where students themselves can be involved in taking ownership of their own data [48]. Simultaneously, there are renewed warnings that data have limitations for teacher evaluations [49], and that careful consideration must be given to the fit between the intended uses and psychometric purpose of data [23].

4. Interrogating Assessment Knowledge: Implications for Pedagogy

In the end, the relevance of data for educators is rooted in their integration into instructional practice, the accessibility of informative data, the ability to make sense of data as meaningful information, and, especially, educators’ own general and pedagogical content knowledge. Additionally, organizational conditions and settings or contexts matter [22]. In much the same way as technology pedagogical content knowledge (TPCK) was established [50], an assessment pedagogical content knowledge (APCK) is needed.

Coburn and Talbert (2006) note that the psychometric properties of the assessment, alignment with desired outcomes, the ability of assessment data to give insights into student thinking and reasoning, alignment of results with their own knowledge, and the use of multiple sets of data are what make evidence valid for individuals. This summary aligns with the ‘Fit Principle’. When grading student work, teachers use more than just the formal measures [51]. Most teachers also utilize internally created assessments to supplement the results received from more formal, mandated measures [13,52].

Teachers test frequently, using tests they created for their assessment purposes, and feel put off by additional tests. They are also particularly unsatisfied when forced to administer published tests that they did not help create. Teachers oppose such assessments because they most often are not aligned with teachers’ content and style, and are thus incapable of accurately measuring student growth and learning. Their frustration is justified, as assessments are a waste of valuable time when they do not produce valid and reliable data [22,53].

Challenges to changing school cultures must be addressed if student assessments are to have a vital role in classroom teaching. While existing studies on teacher and school level data use focus on the indications of successful implementation, an understanding of school or classroom level data use remains more opaque. This investigation sheds light on teachers’ and principals’ perceptions of their use of data. Q-methodology was used as a tool to help us understand (1) why teachers and principals are using the data in the ways they are and (2) how the use of data is or is not supported. The development of the Q-sample as well as the collection and analysis of Q-sort data will be discussed in the next sections.

5. Research Design

Q-methodology is a research methodology that allows the researcher to study the perceptions of individuals [54]. Specifically, the methodology allows for a process of analyzing multiple perspectives, where each perspective is highly idiosyncratic and valued [56]. Q methodology invites participants to make decisions as to “what is meaningful” and hence, what does (and what does not) have value and significance from their perspectives around a given subject. When using Q methodology, the researcher asks participants to sort a collection of statements about a topic from the most important or characteristic statement to the least important or characteristic statement for the topic that is being investigated (e.g., whether teachers are using data to modify their lesson plans). The statements that are sorted are referred to as a Q-sample and are often generated through interviews with people who are knowledgeable about the topic at hand [57]. Q methodology was created as an attempt to understand subjective thoughts in an objective manner. The purpose of Q methodology is to better understand the relationships among subjective statements made by a group of participants. While data obtained from the use of Q-methodology are not generalizable, they are both transferable and informative to that particular group of participants.

5.1. Q Sample Statement Development

The Q sample statements are a central component of Q methodology, and represent “a collection of stimulus items . . . that is presented to respondents for rank ordering in a Q-sort” [57]. For this study, a concourse of statements was generated from the extant literature on the use of school data and interviews with principals and teachers. A careful review of related literature and interviews led to the development of a set of statements that best reflected the promising use of school data by principals and teachers. Initially, more than 50 statements were developed for both teachers and principals. A small set of principals (5) and teachers (6) were then interviewed about their uses of school data. This helped us add to the body of statements derived from the literature. Finally, the statements were analyzed for redundancy and uniqueness, and ultimately edited for clarity. In the end, this concourse resulted in 23 card statements that represented the Q-sample for both the principal and the teacher study (see Table 1 and Table 2).

Table 1. Principal Q-sample.


| Card | Statement |
|---|---|
| 1 | I have easy access to school data. |
| 2 | I have developed a common language and culture about school data with my staff. |
| 3 | I ensure that teachers receive or can generate useful school data reports. |
| 4 | I use school data to allocate resources. |
| 5 | I ensure that teachers are provided ongoing support and professional development for the use of school data. |
| 6 | I model how teachers can use school data to inform their practice. |
| 7 | I use school data to evaluate teachers. |
| 8 | I collect, analyze, and use school data in our school improvement plans. |
| 9 | I collect, analyze and use parent, teacher, and student survey data. |
| 10 | I analyze school data using student demographic variables. |
| 11 | I recognize and celebrate accomplishments based on school data. |
| 12 | I use school data to create new programs for students. |
| 13 | I designate a data specialist who assists teachers in the use of data to inform instruction. |
| 14 | I collect, analyze and use school data to evaluate school programs. |
| 15 | I ensure that assessments (other than the state assessment) have been aligned with state standards. |
| 16 | I hold teachers accountable for using school data to inform their teaching practice. |
| 17 | I ensure that teachers receive assessment data results within one week of administration. (not including MCAS, SAT) |
| 18 | I ensure that we use a centralized technological system to store, access and analyze data. |
| 19 | I structure regular, designated time for teachers to collaborate with colleagues about school data. |
| 20 | I am evaluated based on school data. |
| 21 | I ensure that teachers have easy access to school data. |
| 22 | I ensure that parents have access to data. |
| 23 | I support teachers in their use of data. |

Table 2. Teacher Q-sample.


| Card | Statement |
|---|---|
| 1 | I regularly use school data to develop and modify lesson plans. |
| 2 | I regularly use school data to recommend students in remedial programs. |
| 3 | I regularly use school data to set performance goals for students. |
| 4 | I regularly use school data to communicate with colleagues about student learning. |
| 5 | I regularly use school data to form flexible student groups in the classroom. |
| 6 | I regularly use school data as evidence at meetings (e.g. pre-referral, 504, IEP). |
| 7 | I regularly use school data to modify my instructional strategies (e.g. differentiate my instruction). |
| 8 | I regularly use school data to recommend students’ placement in advanced or gifted and talented programs. |
| 9 | I regularly use school data to identify content to re-teach. |
| 10 | I regularly use school data to communicate with the student’s home. |
| 11 | I regularly use school data to communicate with my grade-level/content area colleagues about teaching practices. |
| 12 | I regularly use school data to form flexible student groups with other teachers during the school day. |
| 13 | I regularly use school data to communicate with colleagues outside my grade-level/content area. |
| 14 | I regularly use school data to focus on students that are not yet, but close to, passing the state assessment. |
| 15 | I regularly use school data to communicate with school and/or district administrators about teaching practices. |
| 16 | I regularly use school data to communicate with students about their progress towards learning standards. |
| 17 | I regularly use school data to identify my own professional development needs. |
| 18 | I regularly use school data to monitor student progress toward curricular standards. |
| 19 | I regularly use school data to monitor the progress of students by sub-group in my classroom (e.g. student demographics). |
| 20 | I regularly use school data to compare my students with my colleagues’ students. |
| 21 | I regularly use school data to assign student grades. |
| 22 | I regularly use school data to advocate for resources for my students. |
| 23 | I regularly use school data to communicate with school and/or district administrators about student learning. |

6. Data Collection

6.1. P Sample and Data Collection

Participants were asked to rank order the 23 card statements as well as to complete a questionnaire after they performed the sort. Thirty-four teachers completed the teacher q-sort and the subsequent open response questions, while twenty-eight principals completed the principal q-sort and subsequent open response questions (see Appendix for the protocol).

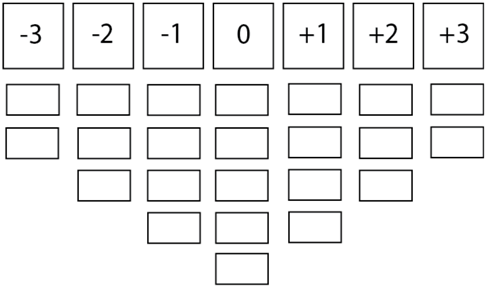

The conditions of instruction for sorting the Q-sample asked the participants to rank order the 23 statements (printed on 3 × 1 cards) on a continuum from “most characteristic of how I use school data” to “least characteristic of how I use school data.” Responses were recorded on a grid containing seven columns with headings ranging from +3 (most characteristic statements) on the right side to –3 (least characteristic statements) on the left side. Each grid had two spaces available under each end point, three under +/–2, four under +/–1, and five under 0, for a total of 23 spaces. In keeping with Q methodology, the distribution was created to resemble a normal curve (see the protocol in the Appendix for the sorting grid). In addition to performing the Q sort, participants were asked a series of questions about why they sorted the Q-sample as they did (e.g., “If there were other specific statements that you had difficulty placing, please list the number of the statement and describe your dilemma”).
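The forced distribution described above can be written down and checked mechanically. The following is a minimal sketch, assuming a completed sort is stored as a mapping from card number to column score; the names `GRID`, `is_valid_sort`, and `example_sort` are hypothetical illustrations and are not part of the study's protocol.

```python
# Slots available under each column score, as described in the text:
# two under the end points, three under +/-2, four under +/-1, five under 0.
GRID = {-3: 2, -2: 3, -1: 4, 0: 5, 1: 4, 2: 3, 3: 2}
N_CARDS = 23

def is_valid_sort(sort):
    """Return True if `sort` (card number -> column score) fills the grid exactly."""
    # Every card from 1 to 23 must be placed exactly once.
    if sorted(sort) != list(range(1, N_CARDS + 1)):
        return False
    counts = {score: 0 for score in GRID}
    for score in sort.values():
        if score not in counts:
            return False
        counts[score] += 1
    # Each column must hold exactly as many cards as it has slots.
    return counts == GRID

# The grid has exactly as many slots as there are cards.
total_slots = sum(GRID.values())

# Hypothetical completed sort: fill the columns from left to right with cards 1..23.
example_sort = {}
card = 1
for score, slots in GRID.items():
    for _ in range(slots):
        example_sort[card] = score
        card += 1

print(total_slots, is_valid_sort(example_sort))
```

Because every participant must use the same quasi-normal grid, a check of this kind is a simple way to catch recording errors before factor analysis.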

7. Data Analysis

7.1. Principal Sort Data Analysis

Initially, the principal study produced three emergent factors. The eigenvalues and corresponding explained variance demonstrated no clear factors. However, analysis of the scree plot displayed a strong initial factor. For this study, two factors were rotated using the varimax rotation technique. Factor A accounted for 16% of the explained variance. Seven of the 28 principals sampled (25%) expressed a significant degree of similarity within the statements provided in the sort. In Q-methodology, significance is identified using the formula $2.58 \times (1/\sqrt{N})$, where $1/\sqrt{N}$ is the standard error of a factor loading, 2.58 is the critical value at p < .01, and N is the number of cards. With N = 23 cards, the significance value for the principal study was 0.54 (see Table 3).
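As a worked check of the significance value, substituting the 23 cards of the Q-sample into the formula gives the reported threshold. The label "threshold" is used here only for illustration.

```latex
\[
  \text{threshold}
  = 2.58 \times \frac{1}{\sqrt{N}}
  = \frac{2.58}{\sqrt{23}}
  \approx \frac{2.58}{4.796}
  \approx 0.54
\]
```

Because both Q-samples contain 23 cards, the same value applies to the principal and the teacher sorts.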

Table 3. Principal factor loadings matrix.


| Principal | Factor A | Principal | Factor A | Principal | Factor A |
|---|---|---|---|---|---|
| O1 | -.20 | O11 | -.03 | M7 | .09 |
| O2 | .41 | O12 | .69* | M8 | .67* |
| O3 | .54* | O13 | .37 | M9 | .31 |
| O4 | .16 | O14 | .38 | K1 | .38 |
| O5 | -.02 | O15 | .11 | K2 | .30 |
| O6 | .49 | M2 | .26 | K3 | .32 |
| O7 | .55* | M3 | .45 | K4 | -.30 |
| O8 | .36 | M4 | .63* | K5 | .07 |
| O9 | .65* | M5 | .26 | | |
| O10 | .00 | M6 | .54* | | |

7.2. Teacher Sort Data Analysis

The teacher study produced eight emergent factors. As with the principal sort analysis, the eigenvalues and corresponding explained variance demonstrated no clear factors. Analysis of the scree plot, again, displayed a strong initial factor. For this study, two factors were rotated using the varimax rotation technique. Factor A accounted for 41% of the explained variance. Thirteen of the 34 teachers sampled (38%) expressed a significant degree of similarity within the statements provided in the sort. The significance value for the teacher study was 0.54 (see Table 4).

Table 4. Teacher factor loadings matrix with an * indicating a defining sort (p < .01).


| Teacher | Factor A | Teacher | Factor A | Teacher | Factor A |
|---|---|---|---|---|---|
| S1 | .55* | M1 | .02 | AA2 | .22 |
| S2 | .51 | M2 | .19 | C1 | .40 |
| E1 | .32 | M3 | .25 | C2 | .42 |
| E2 | .62* | J1 | .17 | P1 | .47 |
| N1 | .51 | AB1 | .57* | P3 | .68* |
| A1 | .67* | AB2 | .38 | D1 | .09 |
| A2 | -.04 | AM1 | .64* | D2 | .01 |
| A3 | .59* | AM2 | .24 | J2 | .17 |
| A4 | .59* | R1 | .51 | AB3 | .08 |
| K1 | .73* | R2 | .15 | MG1 | .69* |
| K2 | .07 | AA1 | .57* | MG2 | .75* |
| | | | | P4 | .61* |
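The flagging of defining sorts in Tables 3 and 4 can be reproduced from the published loadings. The sketch below is illustrative only: the loadings are transcribed from the two tables as rounded two-decimal values, the names `defining_sorts`, `principal_loadings`, and `teacher_loadings` are hypothetical, and treating only positive loadings at or above the threshold as defining is an assumption based on the asterisks shown in the tables.

```python
import math

N_CARDS = 23
# Significance value at p < .01: 2.58 standard errors, with standard error 1/sqrt(N).
THRESHOLD = 2.58 / math.sqrt(N_CARDS)

# Factor A loadings transcribed from Table 3 (principals).
principal_loadings = {
    "O1": -0.20, "O2": 0.41, "O3": 0.54, "O4": 0.16, "O5": -0.02,
    "O6": 0.49, "O7": 0.55, "O8": 0.36, "O9": 0.65, "O10": 0.00,
    "O11": -0.03, "O12": 0.69, "O13": 0.37, "O14": 0.38, "O15": 0.11,
    "M2": 0.26, "M3": 0.45, "M4": 0.63, "M5": 0.26, "M6": 0.54,
    "M7": 0.09, "M8": 0.67, "M9": 0.31, "K1": 0.38, "K2": 0.30,
    "K3": 0.32, "K4": -0.30, "K5": 0.07,
}

# Factor A loadings transcribed from Table 4 (teachers).
teacher_loadings = {
    "S1": 0.55, "S2": 0.51, "E1": 0.32, "E2": 0.62, "N1": 0.51,
    "A1": 0.67, "A2": -0.04, "A3": 0.59, "A4": 0.59, "K1": 0.73,
    "K2": 0.07, "M1": 0.02, "M2": 0.19, "M3": 0.25, "J1": 0.17,
    "AB1": 0.57, "AB2": 0.38, "AM1": 0.64, "AM2": 0.24, "R1": 0.51,
    "R2": 0.15, "AA1": 0.57, "AA2": 0.22, "C1": 0.40, "C2": 0.42,
    "P1": 0.47, "P3": 0.68, "D1": 0.09, "D2": 0.01, "J2": 0.17,
    "AB3": 0.08, "MG1": 0.69, "MG2": 0.75, "P4": 0.61,
}

def defining_sorts(loadings, threshold=THRESHOLD):
    """Return the participants whose loading meets or exceeds the threshold."""
    return [pid for pid, value in loadings.items() if value >= threshold]

principal_defining = defining_sorts(principal_loadings)
teacher_defining = defining_sorts(teacher_loadings)

for label, flagged, loadings in [
    ("principals", principal_defining, principal_loadings),
    ("teachers", teacher_defining, teacher_loadings),
]:
    share = 100 * len(flagged) / len(loadings)
    print(f"{label}: {len(flagged)} of {len(loadings)} defining sorts ({share:.0f}%)")
```

Note that loadings printed as .54 sit right at the threshold, so whether such a sort is flagged depends on the unrounded value; the counts here simply follow the asterisks in the tables.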

For purposes of this research, we decided to report on the main or most significant factor for principals and teachers in this study. As a result, we describe and compare the model factor A for both principals and teachers.

8. Findings

The exploratory analysis of the principal data produced a main factor. Seven study participants returned statistically significant strong positive loadings on one factor. This indicates that these principals were in agreement on several characteristics of how they use data. The statements listed in the table below with a q-sort value of +3 are those that these principals found to be most characteristic of their practice. The statements with a q-sort value of –3 are the ones principals thought were least characteristic of their behavior with respect to data use.

Table 5. Most and least characteristic statements for principal factor.


| Q-Sort Value | Statement |
|---|---|
| +3 | As a principal, I have easy access to school data. (card #1) |
| +3 | As a principal, I collect, analyze, and use school data in our school improvement plans. (card #8) |
| +2 | As a principal, I collect, analyze and use school data to evaluate school programs. (card #14) |
| +2 | As a principal, I model how teachers can use school data to inform their practice. (card #6) |
| –3 | As a principal, I ensure that assessments (other than the state assessment) have been aligned with state standards. (card #15) |
| –3 | As a principal, I designate a data specialist who assists teachers in the use of data to inform instruction. (card #13) |

The statements placed by the principals at the positive end of the spectrum show that they use data to analyze and evaluate their schools and make improvements as well as for modeling best practices of data use.

The protocols of the principals who loaded significantly on this factor illustrate the statements they agreed upon. One principal said, “This semester’s plans have centered around the student survey results from two different surveys” (MM8). Another principal said, “I use the data I have to develop class reports for my teachers to show them how their students have performed in the past and what their chance is of doing well in their class. I also use it to ID (identify) students that are at-risk and show teachers how to do the same” (MM6). In reference to the importance of data in school, one principal stated, “My years of experiences as an administrator, along with my school’s involvement in multi grants, gives me the perspective that data are very important in schools. Although, initially teachers were overwhelmed and somewhat disinterested in the data, they are now quite adept at reviewing/discussing and utilizing the data effectively” (OD7). These statements support the conclusion drawn from the main factor, which indicates that principals use data to make school improvements and to model best practices of data use for their teachers.

In contrast, principals placed card 13, which reads “As a principal, I designate a data specialist who assists teachers in the use of data to inform instruction,” at the negative end of the continuum. One principal said he placed it at the end because, “This is not easily accomplished because there is just one data specialist in our district whose availability seems limited to helping our school and district administrators interpret reports. Then it becomes my responsibility, as a principal, to consult with elementary curriculum specialists who can help me further interpret the data and provide support to staff. This is most definitely a budget issue, and since one person can’t do it all, I think it is important that principals become more knowledgeable and skillful with regard to how they can provide support to teachers in the use of data to inform instruction.” Another principal said he placed card 15, which reads “As a principal, I ensure that assessments (other than the state assessment) have been aligned with state standards,” at the negative end of the continuum because “I needed to separate my actual practice from what I know and believe to be best practice.” This statement suggests that the principal struggles with aligning assessments with state standards, in other words, with teaching to the test. From the cards at the negative end of the continuum and the statements above, we conclude that principals do not have the support of a data specialist to assist them and their teachers in meeting the demands to use data that are placed upon them by policy created above the school level.

The exploratory analysis of teacher data produced a main factor. Thirteen participants of the teacher study returned statistically significant strong positive loadings on one factor. This indicates that these teachers were in agreement on several characteristics of how they use data. The statements with a q-sort value of +3 are those that these teachers found to be most characteristic of their practice. The statements with a q-sort value of –3 are the ones teachers thought were least characteristic of their behavior with respect to data use.
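Q-methodology studies commonly judge a loading to be significant by comparing it with a multiple of the standard error 1/sqrt(N), where N is the number of statements sorted. This section does not report which criterion or how many cards were used for the teacher study, so the following Python sketch is only an illustration under that assumption. The card count of 25, the participant labels, and the loading values are all hypothetical and are not data from this study.

```python
import math


def significance_threshold(n_statements: int, z: float = 2.58) -> float:
    """Return the loading magnitude treated as significant.

    Assumes the conventional Q-methodology standard error of 1/sqrt(N);
    z = 2.58 corresponds to p < .01 (two-tailed).
    """
    return z / math.sqrt(n_statements)


def significant_loaders(loadings: dict[str, float], n_statements: int,
                        z: float = 2.58) -> list[str]:
    """List participants whose positive loading exceeds the threshold."""
    threshold = significance_threshold(n_statements, z)
    return [pid for pid, loading in loadings.items() if loading > threshold]


if __name__ == "__main__":
    # Hypothetical loadings on a single factor (not the study's data).
    example_loadings = {"T1": 0.72, "T2": 0.31, "T3": -0.60}
    n_cards = 25  # hypothetical number of statements in the Q-set
    print(f"threshold: {significance_threshold(n_cards):.3f}")
    print("significant positive loaders:",
          significant_loaders(example_loadings, n_cards))
```

With 25 statements the threshold is 2.58/5 = 0.516, so only the hypothetical participant T1 would count as a significant positive loader. T3 loads strongly but negatively and is excluded because the sketch keeps positive loadings only.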

Table 6.

Most and least characteristic statements for teacher factor.

| Q-Sort Value | Statement |
|---|---|
| +3 | I regularly use school data to develop and modify lesson plans. (card #1) |
| +3 | I regularly use school data to modify my instructional strategies (e.g. differentiate my instruction). (card #7) |
| +2 | I regularly use school data as evidence at meetings (e.g. pre-referral, 504, IEP.) (card #6) |
| –3 | I regularly use school data to compare my students with my colleagues’ students. (card #20) |
| –3 | I regularly use school data to form flexible student groups with other teachers during the school day. (card #12) |

The statements placed by the teachers at the positive end of the spectrum show that teachers use data mainly to improve their individual instructional practice by modifying lesson plans, adjusting instructional strategies, and re-teaching content. Teachers also use school data formatively to improve student outcomes, for example by giving feedback to students and by presenting evidence to support decisions at meetings.

Taken together, these statements clearly center upon data’s utility to inform classroom practice. A number of the responses to the corresponding open-ended questions illustrate this tendency as well. One teacher commented: “This is the main purpose of my assessments. I try to use them to focus my instruction on weak/difficult areas or topics that need to be re-taught” (SM1). Another teacher who loaded significantly commented that, “Sometimes data helps us drive instruction and to teach and get better results” (RC1). In addition, one respondent said, “The more formal assessments we have to support the need for assistance the stronger a case we have. We need to be able to prove that a child is placed where they are or are receiving services for a reason. This testing also helps teachers determine when services are not appropriate or needed” (NF2). These statements, in conjunction with the cards placed at the positive end of the continuum, support our conclusion that teachers are using data to improve instructional practice.

In contrast, teachers indicated that they did not regularly use data in making decisions on how to interact with other teachers. For example, one teacher reported placing card 12, which states, “I regularly use school data to form flexible student groups with other teachers during the school day,” at the least characteristic end of the continuum because, “I don’t have any time to see other teachers in the school day” (CO2). Another teacher said she placed card 12 at the negative end because, “We never do this. It sounds really cool and I’d like to do it. I think the pain of not doing it is what landed it in the –3 column” (P3).

One teacher said she placed card 20, which reads, “I regularly use school data to compare my students with my colleagues’ students,” at the negative end of the continuum because, “I rarely, if ever, compare my students to other colleagues / students. Personally, I don’t like to compare students but to look at them as individuals, and their personal success/challenges” (P4). These statements are indicative of the ambiguous environment within which teachers are making decisions about how to use data. Though the literature illustrates that effective use of data includes using it to initiate conversations and to support collaboration among teachers, these teachers do not feel they have the time, and some do not see the need, to use data in this way.

The emerging factors describe, to some extent, why and how teachers and principals are using data. The question of how data use is or is not supported is addressed only indirectly, in the open-ended statements made by principals and teachers. As seen above, these statements tended to cite a lack of the time and technical support needed to use data in some of the ways the literature identifies as effective.

9. Discussion and Conclusion

Data are becoming increasingly important in the data-driven climate in which educators are currently required to work. Educational leaders are grappling with national and state summative assessments, district benchmark assessments, and the emergence of a multitude of formative assessment products. Making these data an integral, systemic part of their professional practice will hinge on acquiring the knowledge, skills, and dispositions to understand assessments as the means, not the ends, of the instructional process. That is, school leadership, assessments, and instruction must be integrally linked, not diametrically opposed [58,59,60]. Studies have demonstrated that teachers can find data meaningful when they are able to use the data to inform what they know about students, and consequently what they do [61]. Additionally, while educational reform and change take an emotional toll on educators, when they are able to learn, see, and experience success with a reform, they will form emotional and intellectual bonds with the new approach.

The knowledge management field has investigated the transformation of raw data into meaningful information in order to make knowledgeable decisions about one’s practice [7]. The resources needed for such a transformation include “people, processes and technologies” [8]. Bringing these elements together means the creation and support of an assessment-savvy learning organization. Educators in such an environment can transform accountability systems into real changes in professional practice “to the extent that they generate and focus attention on information relevant to teaching and learning, motivate [people] to use that information and expend effort to improve practice, build the knowledge base necessary for interpreting and applying the new information to improve practice, and allocate resources for all of the above” [62].

Federal and state level policy demands for data use have implications for principals and teachers. The commonalities discovered through this study indicate that both principals and teachers agreed that they used data to improve and inform their individual practice. However, the differences exhibited by the card statements placed at the negative end of the continuum, coupled with the open-ended statements, point to a lack of support and time to meet the demands of policies that are left up to the interpretation of the practitioner.

Results demonstrate that the most important aspect of data use for teachers is making classroom decisions. However, given policies such as NCLB and the implications of AYP, data use would be more powerful if teachers expanded their focus to its implications for the entire school. An integrated approach to developing strategies for implementing data use in schools could enable teachers and principals to collaborate, to understand the best use of data for their particular school setting, and to replace disconnectedness with coherence. While the results of the principal study demonstrate that principals focus on using data to evaluate programs and improve schools, principals could strengthen their relationships with teachers by also providing opportunities for teachers to learn more about principals’ expectations for using data, through professional development that involves both parties in setting goals for data use.

Inside the paradox created by the ambiguity of teacher and principal data use and the unrealized connection are embedded problems such as defining data and data use, implementation of effective data use, building teacher and principal data use capacity, and overall institutional accountability. That is to say, merely adding assessment data to schools will not necessarily lead to student achievement gains and overall school improvement.

The findings of this study indicate that we cannot assume we are talking about the same data unless we have purposely implemented a systemic approach to data use that proves to be relevant to both teachers and principals. Additionally, motivation; knowledge, resources, and support (capacity); and valid and meaningful institutional measures and standards (coherence) are necessary to transform practice [58,62].

While the results of this study did answer the question of how teachers and principals are using data, questions remain. For example, in this study the definition of data given to respondents was broad and left to their interpretation. In future studies, asking teachers and principals to define the types of data they are using to make decisions for their practice might produce more specific results and be more helpful to practitioners. In addition, further studies should be conducted in which the review of literature and the interview questions used to develop the cards are designed for the purpose of comparing and contrasting how teachers and principals use data. Lastly, an analysis of the emergent factors with respect to individual and school demographics might also provide an interesting perspective on teacher and principal data use.

Generally, when introducing a new curriculum or policy change, we can rarely expect stakeholders to follow our lead without a foundational plan. Knowledge of types of data and of how to use the data effectively to achieve stated outcomes should be part of the process for educating principals and teachers. In addition, teachers and principals can work together to eliminate the confusing view of data by purposefully collaborating on the development of goals and objectives for using data in their individual schools. Since the goal of q-methodology is to objectively analyze the subjective thoughts of individuals, the results of this study are both transferable and informative to the group of study participants. Militello and Benham found that q-methodology is a useful tool for mobilizing discussions, engaging in reflection, and planning new activities [63]. Therefore, the application of q-methodology to the topic of data in a particular school setting could be the key to helping principals and teachers better understand and navigate the data environment in their school.

The levels of accountability and responsibility of teachers and building-level principals differ; consequently, teachers and principals use school data in conceptually different ways. Principals have the greatest impact on teacher performance, while teachers have the greatest impact on student learning and achievement. In order to be effective, principals need to understand and use data in ways that help them see a snapshot of both student learning and teacher effectiveness, and to use this image to inform their leadership. Teachers need to understand how to use school data in order to tailor their instruction toward increased student learning. In short, both teachers and principals can benefit from a more in-depth knowledge and understanding of data usage so that they can select the right data and learn from it. We conclude that in order to improve the quality of data use among and between teachers and principals, school leaders must focus on creating a comprehensive plan for data use in their schools, which includes creating a data literate environment. This data literate environment starts with investing in the development of the school’s human capital by providing time for teachers and principals to collaborate, as well as technical support that gives practitioners knowledge and in turn brings clarity to the how and why of data use.

This study demonstrates the importance of investing in training principals and teachers to understand and properly use school data. Without such data literacy, the desired amplified and cohesive use of data cannot occur. That is, the use of data to make school operations more efficient and effective, and ultimately to increase student achievement, will not be possible. It is clearer than ever before that only those literate and proficient in data usage will be able to survive in the era of accountability in which we currently operate. If we are to expect advances in pedagogical practices toward equitable student achievement ends, then how school leaders and teachers understand and use school data is of paramount importance. The road to improved teacher pedagogy and equitable student learning may indeed be paved with data.