1. Introduction

The technological and educational transformations we are currently experiencing, such as those driven by artificial intelligence, prompt us to reflect on how educational systems, and the individuals within them, respond to change. In many cases, it is not merely the lack of access to technology that deepens inequalities, but rather inaction, insufficient preparation, or delays in activating processes of adaptation and meaningful engagement with available resources (Mejías-Acosta et al., 2024). The COVID-19 pandemic exposed both the material divide and the training gap. The material divide refers to unequal access to technological infrastructure, such as devices and internet connectivity, which disproportionately affected students from disadvantaged backgrounds (Salem et al., 2022). The training gap, by contrast, concerns the lack of digital competence, that is, the ability to engage critically and effectively with digital environments even when technology is available (Zhang & Tian, 2025). These two dimensions are interrelated but conceptually distinct, and both contributed to the educational challenges encountered during the crisis. More recently, the emergence of generative artificial intelligence (AI), capable of automating complex cognitive tasks, generating personalized content, and transforming teaching–learning dynamics, has revealed that universities, despite the efforts made during the COVID-19 pandemic, are not prepared to confront this technological change. Critical digital competency gaps persist, limiting the meaningful, ethical, and effective integration of AI into higher education (Zhang & Tian, 2025). These shortcomings range from a lack of understanding of algorithmic bias to gaps in practical skills such as prompt formulation (Krause et al., 2025).

This situation demands urgent structural action from institutions, which must promote deep and critical digital literacy. The COVID-19 pandemic serves as a paradigm of this reality: it triggered an unprecedented disruption that led a large portion of the global population into lockdown. Latin America and the Caribbean accounted for 80% of confirmed cases and 30% of deaths, despite representing only 8.4% of the world's population (Pan American Health Organization, 2022), exposing deep structural inequalities.

In the educational domain, this crisis prompted a sudden shift to online learning, with significant territorial and political implications for managing its effects (Allain-Dupré et al., 2020). The impact of the pandemic on online education has been substantial, especially in higher education, where universities faced unexpected costs and significant challenges in adapting to online learning (Burki, 2020; Saha et al., 2023). During the first year of the pandemic, restrictions and social distancing measures forced universities to move teaching into students' homes.

Prior to 2019, research had already indicated the need to train teachers in the effective use of digital technologies for teaching and learning and to improve students' digital competencies (DCs) (Reisoğlu & Çebi, 2020; Instefjord & Munthe, 2015). Studies also noted that DCs had not yet been adequately integrated into teacher education programs (Amhag et al., 2019). Against this background, the pandemic accelerated the adoption of information and communication technologies (ICTs) in education, prompting a re-evaluation of pedagogical practices and highlighting the need to develop new skills and strategies for online teaching (Noguera-Fructuoso & Valdivia-Vizarreta, 2023). The pandemic also exacerbated hardships for students from disadvantaged socioeconomic backgrounds, where technological resources are scarce. This scenario reflects the need for more technologically aligned education, but it also highlights socioeconomic and technological disparities between communities (Anderton et al., 2020).

While developed countries can more readily plan for the shift to virtual learning and mitigate the adverse effects of the pandemic, the situation is less straightforward for developing countries. Poor and emerging nations face shortages of technological infrastructure, and their access to low-cost network options remains inadequate (Treve, 2021).

Aristovnik et al. conducted one of the largest comparative studies of the impact of the first six months of the pandemic on academic, social, emotional, and financial aspects of the lives of higher education students from 62 countries, and found that satisfaction with academic and work life was higher in developed countries than in developing ones (Aristovnik et al., 2020). This aligns with single-country studies: in Norway, lockdown caused stress among students but did not affect their academic performance (Raaen et al., 2020), with similar findings in Spain (González et al., 2020), whereas performance was affected in Indonesia (Bestiantono et al., 2020) and Jordan (Haider & Al-Salman, 2020).

Digital competence, as a multidimensional construct, is described in reference frameworks such as DigComp (Redecker & Punie, 2017), developed by the European Commission, which sets out five key areas: information and data literacy, communication and collaboration, digital content creation, safety, and problem solving. In this study, however, we do not assess objective performance in these areas but rather students' self-perceived digital competence, categorized into four levels: basic, intermediate, advanced, and specialist. These categories, inspired by the progressive logic of DigComp, make it possible to approximate how capable students feel of mobilizing their skills. Beyond conceptual frameworks (Salem et al., 2022), measuring this self-perception is crucial because it reflects dimensions of confidence and agency that influence the effective use of technologies in situations of change, and because it is sensitive both to rapid transformations in the educational context and to inequalities that are not always evident in objective tests. Moreover, understanding students' self-perception allows institutions to better tailor educational strategies to their actual needs (Sarva et al., 2023).

This study aims to analyze the change in self-perceived digital competence among university students in Spain, Mexico, and Peru. We describe the pre-existing conditions that may have shaped differences in their starting points, as well as the institutional and individual responses adopted, and we seek to identify variables that help explain how these students navigated the shift and what lessons can be drawn to address future challenges with greater preparedness, awareness, and proactivity.

Contextual Aspects

Before the pandemic, the university systems of Spain, Mexico, and Peru displayed uneven levels of digital readiness, resulting from differing institutional policies, regulatory frameworks, and structural capacities. As Lloyd and Ordorika (2021) note, universities have tended to prioritize international visibility and academic performance over functions such as social equity, local impact, or the democratization of knowledge. This orientation may have sidelined investment in digital competencies and an inclusive technological culture, constraining institutions' real capacity to adapt to emergency remote teaching.

In Spain, the university system had undergone significant changes since the implementation of the European Higher Education Area, which promoted a competency-centered approach and autonomous learning (Núñez-Canal et al., 2022). For faculty, there was a structured framework for professional development in digital competence, with actions such as competency certification, MOOCs, and the use of open educational resources (CRUE Universidades Españolas, 2020). However, prior to the pandemic, students were not required to possess these competencies, nor was there a mandatory institutional policy promoting them (CRUE Universidades Españolas, 2020). Technological infrastructure and experience in online teaching were more consolidated than in the other two countries, although not uniformly across all universities.

In Mexico, despite a tradition of distance education through various technological media, most universities maintained an in-person model (Aguilera-Hermida et al., 2021). Before the pandemic, there was no national policy guiding the systematic incorporation of digital technologies or the development of teaching competencies in this area. Digital initiatives depended on each institution, resulting in a heterogeneous landscape in terms of infrastructure, teacher training, and access to technologies (Zapata-Garibay et al., 2021). Thus, although some sectors were prepared, higher education as a whole lacked a solid foundation of prior digital policy (Aguirre Vázquez, 2023).

In Peru, the situation was even more limited: nearly 70% of universities had no experience with virtual courses before 2020 (Figallo et al., 2020). Although connectivity was relatively better than in other Latin American countries, students' level of digital competence was low (Crawford-Visbal et al., 2020), and many institutions lacked digital platforms or learning management systems (Martín-Cuadrado et al., 2021). There was no specific national digital strategy for the university sector, although the Basic Conditions for Quality (CBC) framework, established in the 2014 university reform, included indirect guidelines related to infrastructure and institutional capacities (Ministry of Education of Peru, 2021).

In sum, the three countries exhibit divergent trajectories in terms of digital preparedness in higher education. Spain started from a more structured context, with consolidated training and technological frameworks, at least in the teaching domain. Mexico, with isolated institutional initiatives, lacked a cohesive national policy, while Peru faced deeper structural gaps, both in infrastructure and in digital competence. These differences may partly explain the variations in responsiveness to the health crisis.

2. Materials and Methods

2.1. Participants

The target population was students enrolled in faculties of education in countries with diverse education systems. Six universities, selected through the authors' network of professional contacts, agreed to collaborate in data collection: two in Spain, one in Mexico, and three in Peru.

Convenience (non-probabilistic) sampling was used because of the uncertainty of the health crisis and the speed with which the authorities made decisions about the course of teaching activities, and because of the ease of access to students during confinement, their proximity and time availability, and their willingness to respond.

A total of 881 students were included in the study. Students with physical disabilities (n = 7) who completed the survey were excluded because they formed a very small subgroup that would not allow any reliable inference about them. Nor was it possible to merge them with other categories of the Disability status variable, because such a combination would lack conceptual meaning. In addition, their small cell size caused convergence problems when estimating the statistical models, which further warranted their exclusion.

2.2. The Instrument

This study presents a secondary analysis of data collected as part of a broader investigation into educational responses during the COVID-19 pandemic. For the present analysis, we focused on two parallel questions that assessed students' self-perceived digital competence: “indicate your level of digital skills before the state of emergency (basic, intermediate, advanced, specialist)” and “indicate your level of digital skills during the state of emergency (basic, intermediate, advanced, specialist)”. These categories are inspired by generic progression descriptors present in frameworks such as DigComp (A–C bands) and institutional adaptations (e.g., university frameworks such as CEAU), but they do not constitute a validated scale from these frameworks nor a literal transcription of their official levels. We therefore interpret each item as a global self-perception measure. We also acknowledge that these single-item measures have limited precision and that respondents may find it ambiguous what constitutes each competency level. However, ordinal scales are appropriate for capturing relative changes in paired samples, because our analysis focuses on the direction of change within individuals rather than on absolute competency levels. This approach is consistent with established practices in educational research examining self-perceived changes over time.

From these two questions, a binary variable was constructed to capture the direction of change in self-perceived digital competence between before and after the onset of the pandemic. For example, if a student rated their digital competence as intermediate before the pandemic and as advanced after its onset, the variable “improvement in DCs” was coded as “yes”; if they rated it as intermediate or basic after the onset, it was coded as “no”.
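The coding rule above can be sketched in Python as follows. This is a minimal illustration, not the authors' procedure (the text states the analysis was carried out in Stata); the function name `improvement_in_dcs` and the lowercase level labels are assumptions. Any upward move of at least one category counts as improvement, so a jump from basic to advanced is also coded “yes”.

```python
# Ordered response options of the two self-rating items (lowest to highest).
LEVELS = ["basic", "intermediate", "advanced", "specialist"]
RANK = {level: rank for rank, level in enumerate(LEVELS)}


def improvement_in_dcs(before: str, during: str) -> str:
    """Code the binary outcome: "yes" if the self-rated level during the
    state of emergency is higher than before it, otherwise "no"
    (same level or lower)."""
    before_rank = RANK[before.strip().lower()]
    during_rank = RANK[during.strip().lower()]
    return "yes" if during_rank > before_rank else "no"


if __name__ == "__main__":
    print(improvement_in_dcs("intermediate", "advanced"))      # yes
    print(improvement_in_dcs("intermediate", "intermediate"))  # no
    print(improvement_in_dcs("intermediate", "basic"))         # no
```

Declines and unchanged ratings are deliberately pooled into “no”, mirroring the binary outcome described in the text.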

A directed acyclic graph (DAG) was constructed using DAGitty, web version 3.1 (Textor et al., 2016), to identify which control variables should be included in the regression model to adjust the cross-country comparison. Based on this analysis, the following variables were included: age; gender (male, female, non-binary/prefer not to respond); previous study modality (face-to-face, face-to-face with virtual campus, 25–50% online, +51% online, virtual); and the response to the question “Do you require any specific adaptation for your learning due to a disability?” (no adaptation required; yes, sensory; yes, intellectual; I prefer not to say).

The questionnaire was administered through Microsoft Forms. The data were exported directly to MS Excel format and then imported into Stata version 17 (StataCorp, 2021).

2.3. Statistical Methods

A Poisson multiple regression model with robust standard errors was fitted, with “improvement in DCs” as the dependent variable and the indicator variable “country” as the independent variable representing the students' country. This modified Poisson regression, which uses a sandwich variance estimator, provides reliable estimates of proportion (prevalence) ratios and their standard errors in cross-sectional or prospective studies. It is a strong alternative to logistic regression and log-binomial models because its exponentiated coefficients are directly interpretable as prevalence ratios, it handles common outcomes (>10%) better, and it is robust to model misspecification (Chen et al., 2018; Talbot et al., 2023).
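When country is the only predictor, the model is saturated in the country dummies: the Poisson estimates reproduce each country's observed proportion p, and the sandwich variance of log p reduces to (1 − p)/(n p). The sketch below uses that closed form to compute an unadjusted prevalence ratio with a Wald-type 95% confidence interval. It assumes one binary outcome, one dummy-coded group variable, and a normal approximation on the log scale; the function name and the counts in the usage lines are hypothetical, and models with covariates need a full regression fit instead.

```python
import math


def robust_poisson_pr(events_ref, n_ref, events_cmp, n_cmp, z=1.959964):
    """Prevalence ratio (comparison vs. reference group) and 95% CI from a
    Poisson model whose only predictor is a dummy-coded group indicator,
    using the robust (sandwich) variance.

    With group dummies only, the fitted mean in each group equals its
    observed proportion p, and the sandwich variance of log(p) is
    (1 - p) / (n * p). Groups are independent, so the variances add.
    """
    p_ref = events_ref / n_ref
    p_cmp = events_cmp / n_cmp
    log_pr = math.log(p_cmp / p_ref)
    var_log_pr = (1 - p_ref) / (n_ref * p_ref) + (1 - p_cmp) / (n_cmp * p_cmp)
    half_width = z * math.sqrt(var_log_pr)
    return (math.exp(log_pr),
            math.exp(log_pr - half_width),
            math.exp(log_pr + half_width))


# Hypothetical counts: 20 of 100 improved in the reference group and
# 40 of 100 in the comparison group.
pr, lower, upper = robust_poisson_pr(20, 100, 40, 100)
print(f"PR = {pr:.2f} (95% CI {lower:.2f}-{upper:.2f})")
```

Because the interval is symmetric on the log scale, the product of its limits equals the squared PR, which is a quick check on any hand calculation.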

First, a model containing only the study's independent variable was fitted to estimate “unadjusted” proportion ratios as an initial exploration of differences between countries. Then, in a second model, the four control variables (predictors of changes in perceived DCs) were added. Finally, to adjust the comparison for variability in the month of survey administration across countries, the variable “month”, coded as a numeric linear trend from 5 (May) to 9 (September), was included in the final Poisson regression model. All variables were entered as fixed effects because the purpose of including them was not to estimate their effects on the dependent variable but to adjust the cross-country comparison for differences in their distribution across countries.

The significance of the Poisson regression coefficients was evaluated with Wald tests adjusted for multiple comparisons using the Sidak method (Abdi, 2007), which keeps the probability of at least one Type I error at α when the tests are independent. Full model estimates and diagnostics are provided in Supplementary Table S1. The proportions of students who improved their DCs, with their 95% confidence intervals, were obtained by calculating the corresponding marginal effects.
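The Sidak correction can be written as p_adj = 1 − (1 − p)^m for a family of m tests; with three pairwise country comparisons, m = 3. The minimal sketch below applies this formula to a list of raw p-values and also gives the equivalent per-test threshold. The function names and the raw p-values in the usage lines are hypothetical; the text indicates that the actual adjustment was performed in Stata.

```python
def sidak_adjust(p_values):
    """Sidak-adjusted p-values for a family of m tests: 1 - (1 - p)^m.

    This keeps the probability of at least one Type I error at alpha
    when the tests are independent. Adjusted values are capped at 1.
    """
    m = len(p_values)
    return [min(1.0, 1.0 - (1.0 - p) ** m) for p in p_values]


def sidak_alpha(alpha, m):
    """Per-test significance threshold equivalent to the adjustment above."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


# Three pairwise comparisons with hypothetical raw p-values.
print(sidak_adjust([0.0001, 0.0002, 0.0153]))
print(round(sidak_alpha(0.05, 3), 4))  # 0.017
```

Comparing adjusted p-values with 0.05 and comparing raw p-values with the per-test threshold (about 0.017 for three tests) lead to the same decisions.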

P values below 0.05 were considered statistically significant.

2.4. Ethical Aspects

The students who participated in this study did so freely and voluntarily. Before completing the questionnaire, they had to accept an informed consent form placed at the beginning of the instrument, which informed them of the confidentiality and anonymity of their responses and of how the data would be used. They were also informed of their rights to access, rectify, and delete their personal data and to object to its processing. The results were communicated through the same channels used for the initial contact with each university. The procedure complied with Organic Law 3/2018, of 5 December, on the protection of personal data and guarantees of digital rights, and complementary regulations, as well as with the General Data Protection Regulation (European Union) 2016/679 of the European Parliament and of the Council, of 27 April 2016.

3. Results

Table 1 shows the distribution of participants by country and selected characteristics. Students from Mexico were concentrated in the younger age groups, followed by those from Spain, while students from Peru were more represented in the older age groups. There were more female students in the Peruvian and Mexican groups than in the Spanish group, and a substantial proportion of students in Spain and Mexico chose not to disclose their gender, which was uncommon in the Peruvian group.

As for study modality before the start of the pandemic, the Spanish group reported mostly face-to-face study with a small virtual component, while the Mexican group reported the opposite, that is, mostly virtual study with a smaller face-to-face component. In the Peruvian group, half studied face-to-face with a virtual campus and 30% studied face-to-face only.

The declaration of a disability that required an adaptation to facilitate access to the educational process was more frequent in the Mexican group, followed by Peru and finally by Spain.

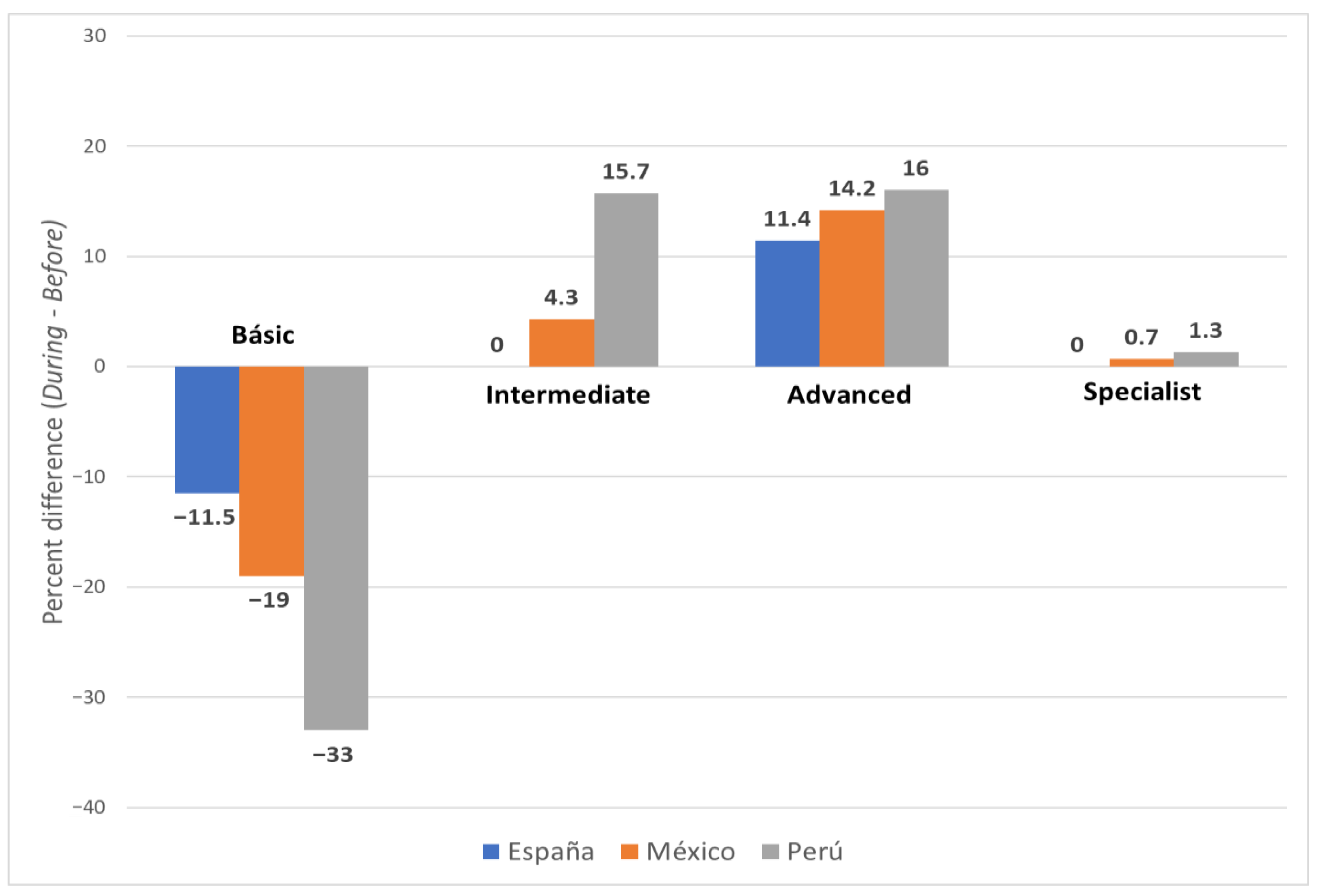

Table 2 shows the levels of self-perceived DCs stated by students in the three countries before and during the pandemic, as well as the difference in percentages (during minus before), which helps to assess the changes between these two periods. At the start of the pandemic, students in Spain mostly reported intermediate (48.3%) and advanced (31.8%) digital skills, whereas those in Mexico mostly reported basic (38.0%) and intermediate (49.1%) levels; a similar pattern was observed in Peru (basic: 47.1%; intermediate: 41.1%). During the first six months of the pandemic, self-perceived DC levels among students in Spain improved (basic: 48.3%; intermediate: 43.2%), whereas those of students in Mexico improved markedly (intermediate: 53.4%; advanced: 25.2%), and those of students in Peru even more so (intermediate: 56.8%; advanced: 27.4%).

Students in all three countries perceived that their DCs improved, but to different extents. Figure 1 shows that, in Peru, the percentage of students at the basic level decreased by 33 percentage points, while the percentages at the intermediate, advanced, and specialist levels increased by 15.7, 16.0, and 1.3 percentage points, respectively. This self-perceived improvement was similar but smaller among students in Mexico, and smaller still among students in Spain.
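The percentage-point differences plotted in Figure 1 (share at each level during the emergency minus share before it) can be computed from the paired self-ratings as sketched below. The function names and the four hypothetical students are illustrative assumptions, and the input is assumed to contain only the four response categories. Because every student appears in both distributions, the differences across levels always sum to zero, which is why the 33-point drop at the basic level in Peru is matched by gains of 15.7, 16.0, and 1.3 points at the other levels.

```python
from collections import Counter

LEVELS = ["basic", "intermediate", "advanced", "specialist"]


def level_shares(ratings):
    """Percentage of respondents at each competency level."""
    counts = Counter(ratings)
    n = len(ratings)
    return {level: 100.0 * counts[level] / n for level in LEVELS}


def pp_change(pairs):
    """Percentage-point change per level (during minus before) from paired
    (before, during) self-ratings of the same students."""
    before = level_shares([b for b, _ in pairs])
    during = level_shares([d for _, d in pairs])
    return {level: during[level] - before[level] for level in LEVELS}


# Four hypothetical students, each rated before and during the emergency.
pairs = [("basic", "intermediate"), ("basic", "basic"),
         ("intermediate", "advanced"), ("intermediate", "intermediate")]
print(pp_change(pairs))
# {'basic': -25.0, 'intermediate': 0.0, 'advanced': 25.0, 'specialist': 0.0}
```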

The flow of self-perceived DCs between competency levels can be seen in greater detail in Figure 2 for students in all three countries. Almost half of the students in Peru perceived a basic proficiency level (blue) before the start of the pandemic; a large proportion of them perceived an improvement to the intermediate level (orange), although another substantial proportion perceived no improvement and remained at the basic level (blue). Similarly, a substantial proportion of students perceived an improvement from the intermediate (orange) to the advanced (green) level, and some even perceived an improvement directly from basic (blue) to advanced (green).
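The flows displayed in Figure 2 correspond to a before × during cross-tabulation of the paired ratings. The sketch below builds row percentages: for students who started at each level, the share who reported each level during the emergency. Cells above the diagonal are upward moves, and cells below it (such as the intermediate-to-basic moves described for Mexico) are downward moves. The function name and the data are hypothetical and purely illustrative.

```python
from collections import Counter

LEVELS = ["basic", "intermediate", "advanced", "specialist"]


def transition_percentages(pairs):
    """Row-normalized transition table from (before, during) pairs.

    table[b][d] is the percentage of students who rated themselves at
    level b before the emergency and at level d during it. Rows for
    levels nobody reported before the emergency are all zeros.
    """
    counts = Counter(pairs)
    table = {}
    for b in LEVELS:
        row_total = sum(counts[(b, d)] for d in LEVELS)
        table[b] = {
            d: (100.0 * counts[(b, d)] / row_total if row_total else 0.0)
            for d in LEVELS
        }
    return table


# Four hypothetical students, each rated before and during the emergency.
pairs = [("basic", "intermediate"), ("basic", "basic"),
         ("intermediate", "advanced"), ("intermediate", "intermediate")]
for level, row in transition_percentages(pairs).items():
    print(level, row)
```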

The pattern among students in Mexico was similar: most perceived advancement from the basic to the intermediate level, and a substantial proportion from the intermediate to the advanced level. However, an appreciable proportion stated that their DCs declined from intermediate to basic, which was rare in Spain and Peru.

Among students in Spain, self-perceived DCs evolved more stably: a higher proportion rated their DC level as intermediate or advanced at the start of the pandemic, and the fewer students who started at the basic level mostly perceived improvement to the intermediate or advanced level.

Overall, students from Peru perceived the most substantial improvement in their DCs, followed by those from Mexico; both groups moved closer to the students from Spain, although without reaching the initial levels reported by the Spanish students.

To compare the percentage of students who perceived improvement in their DCs in Spain, Mexico, and Peru, a binary dependent variable was coded as “yes” when the student stated that their self-perceived DCs increased and as “no” when they remained the same or decreased. The left-hand column (“Unadjusted” percentage) of Table 3 shows the results of the Poisson regression model in which the independent variable is the students' country (dummy-coded). More than half (56%) of students in Peru perceived improvement in their DCs, followed by 41% of students in Mexico and 22% of students in Spain. The overall Wald test for country was significant (Wald χ²(2) = 39.77, p < 0.001). Relative to Spain, the prevalence ratio (PR) of improvement was 1.80 (95% CI: 1.33–2.42; p < 0.001) in Mexico and 2.21 (95% CI: 1.72–2.84; p < 0.001) in Peru. Model fit statistics were: log pseudolikelihood = −670.32, pseudo-R² = 0.024. Goodness-of-fit tests indicated no lack of fit (deviance χ²(878) = 610.64, p = 1.000; Pearson χ²(878) = 516.00, p = 1.000). According to the Wald tests comparing the model coefficients, adjusted for multiple comparisons using Sidak's method, the percentages of students in Spain, Mexico, and Peru who stated that their digital skills improved differed significantly from one another (Spain vs. Mexico: p < 0.001; Spain vs. Peru: p < 0.001; Mexico vs. Peru: p = 0.045).
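The deviance and Pearson statistics above are standard Poisson goodness-of-fit measures, computed from the observed outcomes y and the fitted means μ as in the generic sketch below (the function name and toy data are assumptions). With a 0/1 outcome, the Poisson variance μ exceeds the binary variance μ(1 − μ), so these statistics tend to fall well below their degrees of freedom; for instance, when the fitted means equal the group proportions, the Pearson statistic equals the number of zero outcomes. P values close to 1 are therefore expected in this setting, which is worth keeping in mind when reading p = 1.000.

```python
import math


def poisson_gof(y, mu):
    """Pearson and deviance goodness-of-fit statistics for a Poisson fit.

    y  -- observed outcomes (here 0/1 "improvement in DCs")
    mu -- fitted means from the model (all > 0)
    """
    pearson = sum((yi - mi) ** 2 / mi for yi, mi in zip(y, mu))
    # Poisson deviance: 2 * sum[y * log(y / mu) - (y - mu)], with 0 * log(0) = 0.
    deviance = 2.0 * sum(
        (yi * math.log(yi / mi) if yi > 0 else 0.0) - (yi - mi)
        for yi, mi in zip(y, mu)
    )
    return pearson, deviance


# Toy group of four students, one of whom improved; the fitted mean is 1/4.
y = [1, 0, 0, 0]
mu = [0.25] * 4
pearson, deviance = poisson_gof(y, mu)
print(pearson)  # 3.0, i.e. the number of zeros
print(round(deviance, 4))
```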

The right-hand column shows the percentage of students who perceived improvement in their DCs by country, “adjusted” for differences in the distribution of the variables age, gender, mode of study prior to the pandemic, disability-related adaptation needs stated by the students, and the month of the survey. To do this, these variables were added to the Poisson regression model that already had country as the independent variable.

As shown in the “adjusted” column, the difference between Peru and Mexico in the percentage of students who reported improvement narrowed after adjustment, but both percentages remained higher than that in Spain. In the robust Poisson regression, the joint test of covariates was significant (Wald χ²(15) = 63.38, p < 0.001). Relative to Spain, the adjusted prevalence ratio of improvement was 1.57 in Mexico (95% CI: 1.09–2.27; p = 0.015) and 1.77 in Peru (95% CI: 1.32–2.37; p < 0.001). Month, entered as a linear trend, was not significant (PR = 1.04 per month; 95% CI: 0.97–1.12; p = 0.278). Model fit indices indicated adequate fit: log pseudolikelihood = −661.22, pseudo-R² = 0.037; deviance χ²(865) = 592.44 (p = 1.000), Pearson χ²(865) = 518.02 (p = 1.000), AIC = 1354.44, BIC = 1430.93. According to the Wald tests comparing the model coefficients, adjusted for multiple comparisons using Sidak's method, the percentages of students in Peru and Mexico who stated that their digital skills improved were significantly higher than the percentage in Spain (p < 0.001 and p = 0.015, respectively), but did not differ significantly from each other (p = 0.328). Compared with the unadjusted model, the adjusted PRs were attenuated but remained significant for Mexico and Peru.
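The adjusted percentages are average predictions from the fitted model. A minimal sketch of that computation for a log-link Poisson model follows: for each country, every student's linear predictor is recomputed with the country indicator set to that country while the student's own covariate values are kept, exponentiated, and averaged. Stata's `margins` command performs this kind of averaging, but the sketch does not reproduce the authors' code; the coefficient names and values are hypothetical, and only the month trend (coded 5 to 9, as in the Methods) is used as a covariate. Without interaction terms, the ratio of two such margins equals the exponentiated country coefficient, that is, the adjusted PR.

```python
import math


def predictive_margins(coefs, rows, countries=("Spain", "Mexico", "Peru")):
    """Average predicted proportion per country from a log-link Poisson model.

    coefs -- dict with the intercept "_cons", one dummy coefficient per
             non-reference country (Spain is the reference and has none),
             and one coefficient per covariate name used in `rows`.
    rows  -- one dict of covariate values per student.
    Each student's prediction is recomputed with country set to each level
    in turn, keeping that student's covariates, and then averaged.
    """
    margins = {}
    for country in countries:
        predictions = []
        for row in rows:
            eta = coefs["_cons"] + coefs.get(country, 0.0)
            eta += sum(coefs[name] * value for name, value in row.items())
            predictions.append(math.exp(eta))  # log link: mean = exp(eta)
        margins[country] = sum(predictions) / len(predictions)
    return margins


# Hypothetical coefficients (log scale) and three students surveyed in
# May (5), July (7) and September (9).
coefs = {"_cons": math.log(0.15), "Mexico": math.log(1.5),
         "Peru": math.log(1.8), "month": 0.04}
rows = [{"month": 5}, {"month": 7}, {"month": 9}]
m = predictive_margins(coefs, rows)
print({country: round(value, 3) for country, value in m.items()})
print(round(m["Peru"] / m["Spain"], 3))  # 1.8, the PR implied by the coefficient
```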

To evaluate how age, gender, study modality before the pandemic, and disability-related adaptation needs contributed to the evolution of self-perceived DC levels, Table 4 shows the percentage of students from the three countries who perceived improvement in their DCs, broken down by these variables. Students in Peru reported considerable improvement across all age groups, whereas those in Mexico improved mainly in the under-21 age group, with improvement becoming less frequent as age increased. Students in Spain reported improvement less often, mainly at the youngest and oldest ages.

Regarding gender, the largest proportions of students reporting improvement in their DCs were observed among male and female students in Peru, with smaller proportions among those who reported a different gender. Students from Mexico showed a similar but weaker pattern, while in Spain an even lower proportion of students reported improvement in their DC level, with improvement more common among male students.

In Peru, the percentage of students who reported improvement in their DCs was close to 50% in almost all pre-pandemic study modalities. The pattern was similar among students in Mexico, except for those who were already studying in the fully virtual modality or who had a virtual component of less than 50%. In Spain, the proportion of students who reported improvement was low in almost all previous modalities, except among those who were already studying fully or partially online.

In Peru and Mexico, the percentage of students who reported improvement in their self-perceived DCs was higher among those who did not report a disability and decreased as disability complexity increased. In Spain, by contrast, the students who reported improvement in their self-perceived DCs were mainly those with sensory disabilities.

Table 4 shows important differences between Spain, Mexico, and Peru in the percentage of students who reported that their self-perceived DC level improved, and these differences depended partly on the distribution of age, gender, study modality, and disability. When the cross-country comparison was adjusted for these variables using Poisson regression models, the conclusions of the unadjusted analysis were maintained. Incidentally, the effect of the Disability variable was heterogeneous in Spain: 50% of students who reported sensory disabilities stated that their DCs improved, but none of those who preferred not to declare whether they had a disability did so. In contrast, the effect of the Disability variable was homogeneous in Mexico and Peru.

4. Discussion

With the increasing adoption of online and hybrid learning by educational institutions after COVID-19, students' need to understand and use digital tools to support their learning has become evident (Eurostat, 2023).

Competencies are defined as the ability to successfully respond to demands or perform specific tasks. Digital competence is fundamental for continuous learning, as detailed by Calderón et al. (2022) and Núñez-Canal et al. (2022). It goes beyond knowledge and skills: it involves the ability to face complex demands and apply skills in specific situations, along with psychological resources and attitudes.

Our objective was to determine whether changes in the self-perceived level of DCs during the first semester of the pandemic differed between Spain, Mexico, and Peru, countries that represent different levels of socioeconomic and educational development. Preliminary findings suggest that, before the lockdown, university students in Spain perceived higher levels of DCs than students in Mexico and Peru, especially those in Peru. However, during the first six months of the pandemic, the self-perception of students from Mexico and Peru improved markedly. To the best of our knowledge, this is the first report comparing the evolution of self-perceived DC levels between countries during the first months of the COVID-19 pandemic. Given the prolonged impact of the pandemic, online learning is likely to continue for the foreseeable future (Esteve-Mon et al., 2020).

There were no statistically significant differences in the percentages of improvement in DCs between students from Peru and Mexico, who were younger than students from Spain. The literature documents that DC levels tend to be higher in younger cohorts (Draganac et al., 2022). Likewise, a trend towards higher DC levels has been observed among males (Bonacini & Murat, 2023; Wijana, 2022). In our study, the share of female participants was highest in Peru, followed by Mexico, and lower in Spain. In addition, nearly 40% of Spanish students declined to disclose their gender on the survey, a minority phenomenon in Mexico and almost non-existent in Peru. Differences were also identified in previous study modality, which serves as an indicator of prior access to information technologies (Bonacini & Murat, 2023; Pangrazio et al., 2020). However, the Poisson regression models could not explain the marked cross-country differences in the evolution of self-perceived DCs by differences in the distribution of age, gender, previous study modality, and month of the survey. In the case of Spain, this finding may also reflect that students recognized the acquisition of new technical skills under the pressure of the circumstances but may not have regarded these as a profound or qualitative improvement in their digital competence as future teachers. Similar conclusions have been reported by Aguilar Cuesta et al. (2021), who found that preservice teachers acknowledged progress during the pandemic yet did not equate it with substantial advances in their overall digital competence. This pattern may also be consistent with a ceiling effect: because Spanish students started from higher initial levels of digital competence, they had less scope for perceived improvement. In the case of Peru, students perceived an improvement in their digital competences linked to their forced exposure to virtual environments, which may help to reduce pre-existing gaps in technology use. This perception was further reinforced by public policies aimed at expanding access and by greater awareness of the professional applicability of these skills, contributing to a more positive valuation of digital learning during the crisis (García Zare et al., 2023).

Some studies have addressed the differential effect of the pandemic on aspects related to DCs. For example, Draganac et al. (2022) explored differences in DCs between undergraduate and graduate students in multiple countries, while Tejedor et al. (2021) analyzed undergraduate students' perceptions of their digital competences in Spain, Italy, and Ecuador and found no significant differences; however, they did not ask students to assess their level of competence directly, nor did they examine its change during the pandemic. Other authors, such as Zhao et al. (2025), have reported that higher education students and instructors show a basic level of digital competence, highlighting the need to strengthen institutional strategies.

In Indonesia, Wijana (2022) found that degree completion depended on family support and academic self-efficacy rather than on perceived DCs. Relatively few studies have directly examined self-perceived digital competencies across multiple countries during the pandemic. There are, however, relevant contributions addressing this dimension within national contexts, such as the longitudinal study by Salem et al. (2022) in Saudi Arabia. There are also broader comparative studies, such as Aristovnik et al. (2020), which analyzed academic, social, and digital aspects of the student experience in 62 countries, although it did not focus specifically on the self-perception of digital competencies. Our study therefore seeks to contribute a perspective centered on the evolution of self-perceived digital competence among university students in developing countries.

Comparisons between France, Germany, Italy, Spain, and the United Kingdom have shown that educational outcomes are related to having the resources needed for distance learning (Azubuike et al., 2021). Aristovnik et al. (2020) noted that the effects of the pandemic were disproportionately more severe and negative for the education systems of less developed countries, widening the gaps with developed countries. One might therefore have expected this negative trend to extend to digital competence. In contrast, our results suggest that, under certain conditions, students in less developed countries may perceive greater improvements in their DCs than students in countries with more robust education systems.

These findings may be partly explained by the institutional differences outlined in the Contextual Aspects section. The lower initial levels of digital preparedness in Mexico and Peru likely created greater scope for perceived improvement when students faced emergency remote teaching. Institutions implemented their responses rapidly, deploying digital platforms, offering ad hoc training, and adopting flexible pedagogical strategies, and these responses may have influenced students' perceived competence. The contrast with Spain, where more consolidated systems were already in place before the pandemic, suggests that abrupt systemic transformation may foster perceived gains in digital self-efficacy under crisis conditions.

Our study has several limitations. First, we assessed students' self-perceived change in their DCs but did not measure these competencies directly. This single-item self-rating lacks formal psychometric validation. Construct validity is therefore limited, so we restrict inference to the perceived direction of change, and future work should use validated multi-item instruments. Nevertheless, self-perception is a valid and relevant construct because it influences behavior and predicts students' technological adoption (Bandura, 1997; Compeau & Higgins, 1995; Venkatesh & Davis, 2000; Venkatesh et al., 2003). Although we believe that our assessments reflect an underlying change, direct measurements would be needed to confirm our findings. Second, we did not use random samples from an a priori defined population, which may affect external validity. However, the selected universities admit highly diverse students in each country. To strengthen external validity, we accounted for this diversity by adjusting for objective variables such as those described in Table 4. Third, the study period was limited to the first six months of the pandemic. Longer-term assessments are therefore needed to determine whether disparities between countries widen, hold, or decrease over time. Fourth, the term used (i.e., digital competence) is conceptualized differently across languages, which could raise doubts about its equivalence in the three countries studied (Pangrazio et al., 2020). Minor differences in its conceptualization have been reported across three languages (Scandinavian, English, and Spanish). Our study, however, was carried out in three Spanish-speaking countries, which should limit the impact of differing interpretations of the term on our results. Finally, the study cannot disentangle the influence of education policies from that of institutional support or from potential self-selection of respondents.

Our results are consistent with differences in education policies, access to digital resources, and readiness for online education at the onset of the COVID-19 pandemic in the three countries. However, given the cross-sectional study design and the measures used, we cannot attribute these patterns to specific policy effects. This interpretation aligns with analyses of the territorial and political impact of pandemic management (Allain-Dupré et al., 2020). The use of digital tools has been shown to enrich learning processes and enhance efficiency (Mangas et al., 2023). Hybrid models have opened new learning formats that require fundamental digital competencies (Calderón et al., 2022). As Lloyd and Ordorika (2021) emphasize, universities should promote essential principles such as equity, local engagement, and digital democratization.

Faced with this reality, it is incumbent upon us to adapt teaching methods to meet the needs of students in a volatile digital landscape, both during and after the COVID-19 pandemic. Opportunities for innovation and resilience in education have opened up (Azubuike et al., 2021). It may therefore be advisable to (1) reevaluate pedagogical practices and develop new skills and strategies for online teaching (Noguera-Fructuoso & Valdivia-Vizarreta, 2023; Allain-Dupré et al., 2020); (2) establish educational policy guidelines covering all aspects of online learning (Esteve-Mon et al., 2020; Burki, 2020); (3) motivate students to acquire these competencies and keep them relevant in the modern era (Eurostat, 2023; Saha et al., 2023); and (4) invest in a strategic and flexible curricular approach to counter the rapid obsolescence of knowledge (Esteve-Mon et al., 2020).

5. Conclusions

In conclusion, our results suggest that, despite the manifest limitations of their education systems, students in developing countries such as Peru and Mexico reported significant progress in their self-perceived digital competence during the early months of the pandemic. Describing this moment as an "opportunity" requires caution. Even so, it is plausible to hypothesize that several factors may have helped create a favorable environment for such progress, including the rapid response of educational authorities, targeted investments in structural solutions, and the openness of faculty to innovate and adapt. This improvement does not appear to be explained by students' sociodemographic characteristics, and educational policies are a plausible contributor. Further research is needed to test this explanation and to confirm whether these gains are sustainable over time.

The associations found in this study suggest that, under conditions of disruption, students in developing countries such as Mexico and Peru may significantly improve their self-perceived digital competencies, narrowing the gap with peers in countries with more robust educational systems such as Spain. Sociodemographic variables alone do not account for the observed patterns. Institutional strategies and education policies remain a plausible explanation that warrants targeted testing in future studies.

However, this should not be seen merely as a reactive success. Rather, the results point to the need for a model of digital implementation built on concrete actions. These include teacher training in digital literacy, subsidies for connectivity, assessment of competencies using validated frameworks such as DigComp, and policies that promote the use of open resources, hybrid teaching, and flexible learning (Valdivia-Vizarreta & Noguera, 2022). The COVID-19 pandemic exposed long-standing inequalities and prompted necessary adaptations. It may also have revealed the untapped potential of students when given the opportunity to respond to new demands.

In today’s rapidly evolving digital landscape, where emerging tools such as artificial intelligence are reshaping knowledge production and access, universities must move beyond emergency responses. Cultivating a culture of continuous digital development is essential, one that prepares students not only to adapt to change, but to lead it. Promoting meaningful, context-sensitive digital competence should thus be a core mission of higher education institutions committed to inclusion, innovation, and future resilience.

This manuscript was prepared without the use of large language models (LLMs). The authors are solely responsible for its content.