1. Introduction

Digital fatigue refers to a multidimensional state of tiredness and depleted energy attributable to prolonged digital device use, with visual, emotional, motivational, and social manifestations (Romero-Rodríguez et al., 2023; Yang et al., 2025). Technostress is a broader strain process arising from ICT demands such as hyperconnectivity and overload (Marsh et al., 2024) that impair well-being and performance (Yang et al., 2025), within which digital fatigue can appear as an outcome (Fauville et al., 2021). Videoconference (“Zoom”) fatigue narrows the focus to synchronous video calls (Bailenson, 2021; Li & Yee, 2023) and has validated dimensions (Fauville et al., 2021; Li et al., 2024) overlapping with digital fatigue. Finally, digital eye strain primarily involves ocular/visual symptoms due to near work and sustained screen exposure (American Academy of Ophthalmology, 2025; Kaur et al., 2022; Pucker et al., 2024; Sheppard & Wolffsohn, 2018).

In the United States, almost half of the teachers in one study reported burnout, and four out of five felt they were “always on call” because of technology (WGU Labs, 2024). In Europe, a Hungarian survey (n = 596) found that 54% of respondents reported “very severe technological stress” associated with long working hours and work–life imbalance (Buda & Kovács, 2024). In Oceania, an Australian panel with ~6200 university employees classified 73% as being at high psychosocial risk due to the always-on culture (UniSA, 2024). The same pattern emerges in other regions. In the Philippines, 322 teachers scored M = 3.35/5 on the Zoom Exhaustion & Fatigue Scale, representing a moderate level (Oducado et al., 2022); in South Africa, technostress increased work-family conflict (β = 0.56) among 302 university lecturers (Ward & Harunavamwe, 2025); and in Peru, technostress showed a strong negative correlation with teacher performance (ρ = −0.93) in a sample of 234 teachers (Rafael Pantoja, 2025). Using methods ranging from validated scales to structural models, these studies indicate that digital fatigue is a global problem that is measurable and detrimental to well-being and academic productivity.

AI tools such as ChatGPT have begun to show potential in higher education: a study at the University of Illinois College of Medicine reported a fivefold reduction (≈150 h) in the time teachers spent providing feedback on assignments (Sreedhar et al., 2025), and a quantitative analysis at Indian universities estimated weekly savings of 22.2 h after adopting automated assessment and planning systems (Gupta et al., 2024). However, none of these studies measured whether time savings translate into less digital fatigue or less screen exposure, and the most recent systematic review on teacher well-being did not identify controlled trials with generative AI (Lillelien & Jensen, 2025). This leaves an empirical gap that this pilot addresses.

Following Job Demands–Resources (JD-R) theory, which classifies screen overload as a harmful demand (Molino et al., 2020) and digital autonomy/optimization as a protective resource (Baumeister et al., 2021), this study examines whether a two-week content-curation sprint with ChatGPT (i) reduces recorded screen time and (ii) reduces self-reported digital fatigue in university teachers. Within JD-R, digital fatigue arises when ICT demands (after-hours connectivity, multitasking, and prolonged screen exposure) outweigh job resources (autonomy and time/attention) (Bakker et al., 2023; Marsh et al., 2024). Generative AI can operate as a job resource through three pathways: (i) reallocation of time and attention via content curation and summarization, (ii) reduced cognitive load by structuring materials into concise, actionable artefacts, and (iii) increased decision latitude during lesson planning (Lee et al., 2024; OECD, 2024; UNESCO, 2023). Accordingly, we hypothesized that a brief, instructor-facing curation sprint would shorten screen exposure and lower perceived digital fatigue.

To provide preliminary causal evidence, we used an AB–AB reversal design with eight university instructors, and we discuss implications for teacher workload policies.

Despite converging reports of elevated digital fatigue, the underlying evidence base remains methodologically heterogeneous. First, most cross-national studies are cross-sectional and rely on single-occasion self-report, which prevents establishing temporal precedence and is vulnerable to common-method bias. Second, operationalizations of “digital fatigue” vary widely—from ad hoc items or techno-stress composites to unvalidated proxies such as perceived productivity—limiting comparability and the interpretability of pooled estimates. Third, samples are typically small, convenience-based, and student-focused; instructor-specific mechanisms (e.g., grading, content curation, and communication load) are rarely analyzed, and effect sizes are inconsistently reported. Fourth, exposure to AI is often underspecified (which tools, for which tasks, and with what training), making it difficult to identify the active ingredient and to rule out novelty or expectancy effects. Consequently, the causal question—whether generative AI can reduce instructors’ digital fatigue—remains unanswered.

In this study, we address these limitations in four ways: we specify a single, theory-driven mechanism (AI-assisted short-cycle content curation) as a resource within JD-R; we implement a within-instructor AB–AB reversal design; we employ a validated fatigue instrument and report standardized effect sizes; and we focus on university instructors in Latin America, a population underrepresented in prior work. Making the intervention, measures, and analytic plan explicit positions the study to inform cumulative evidence. Theoretically, we extend JD-R by framing generative-AI–assisted short-cycle content curation as a time-and-attention resource that buffers digital strain among instructors. Practically, we contribute a low-cost, chat-based micro-sprint protocol for faculty development that is immediately scalable and directly targets digital fatigue, a largely overlooked outcome in AI-in-education research.

Accordingly, our primary research question was whether, in an AB–AB single-case reversal design with university lecturers, a brief, instructor-facing ChatGPT micro-sprint (≈15 min/day plus a weekly 15-min check-in) reduces daily screen-time (minutes/day) and perceived digital fatigue (FDU-24) during B phases compared with adjacent A phases.

2. Materials and Methods

2.1. Design

We used a multiple-baseline method across lecturers with an embedded AB–AB single-case reversal within each participant (Kratochwill et al., 2015). Baseline lengths were randomized to 5, 6, or 7 consecutive workdays to stagger intervention onset and mitigate history effects. Each participant completed four phases in fixed order: A1 (baseline), B1 (intervention), A2 (withdrawal), and B2 (re-introduction). Daily measurements occurred on each workday throughout all phases.

A phase advanced only after ≥5 consecutive daily datapoints were visually stable (±15% around the phase mean) and without evident monotonic drift; otherwise, the phase continued until stability criteria were met.
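The stability rule above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the function name `is_stable`, the use of the mean of all datapoints collected so far in the phase as the reference value, and the reading of "monotonic drift" as a strictly increasing or strictly decreasing run over the last five points are all assumptions, since the study describes the judgement as visual.

```python
from statistics import mean


def is_stable(values, window=5, tolerance=0.15):
    """Check a phase-change rule on a list of positive daily measurements.

    Assumed operationalisation (hypothetical, for illustration only):
    - the last `window` datapoints must lie within +/- `tolerance`
      of the mean of all datapoints in the phase so far;
    - those datapoints must not be strictly increasing or decreasing.
    """
    if len(values) < window:
        return False  # not enough consecutive datapoints yet

    phase_mean = mean(values)
    lower = phase_mean * (1 - tolerance)
    upper = phase_mean * (1 + tolerance)

    recent = values[-window:]
    within_band = all(lower <= v <= upper for v in recent)

    # Day-to-day differences across the window; a run with one sign
    # throughout is treated as monotonic drift.
    diffs = [later - earlier for earlier, later in zip(recent, recent[1:])]
    drifting = all(d > 0 for d in diffs) or all(d < 0 for d in diffs)

    return within_band and not drifting


# Example with made-up screen-time values (minutes/day).
baseline = [420, 410, 430, 415, 425]
print(is_stable(baseline))
```

Under these assumptions, a phase that is still trending steadily upward or downward keeps running even when every point falls inside the ±15% band.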

During B phases, lecturers performed a brief, instructor-facing ChatGPT micro-sprint (≈15 min/day) to prepare teaching materials, plus a weekly 15-min check-in with the study team to verify adherence and address prompt-related issues. During A phases, lecturers followed usual preparation without the micro-sprint. We kept day-level logs and saved a one-sentence daily summary of the micro-sprint to a shared drive as an auditable trace.

Adherence was the proportion of planned micro-sprints completed during B phases. Fidelity was pre-specified as ≥90% of workdays with a saved daily summary during B1/B2. Weekly check-ins verified adherence/fidelity.
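As a small worked illustration of these two definitions, the sketch below computes adherence as a proportion and checks the ≥90% fidelity criterion. The function names and the example counts are hypothetical and are not taken from the study logs.

```python
def adherence(completed_sprints, planned_sprints):
    """Proportion of planned micro-sprints that were completed."""
    if planned_sprints <= 0:
        raise ValueError("planned_sprints must be positive")
    return completed_sprints / planned_sprints


def meets_fidelity(days_with_summary, b_phase_workdays, threshold=0.90):
    """True if the share of B-phase workdays with a saved daily
    summary reaches the pre-specified threshold (0.90 in the text)."""
    if b_phase_workdays <= 0:
        raise ValueError("b_phase_workdays must be positive")
    return days_with_summary / b_phase_workdays >= threshold


# Hypothetical lecturer: 25 planned sprints, 24 completed,
# 24 of 25 B-phase workdays with a saved summary.
print(adherence(24, 25))
print(meets_fidelity(24, 25))
```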

Primary outcomes were (i) device-tracked daily screen-time (minutes/day), every logged workday, and (ii) perceived digital fatigue (FDU-24 total score), administered once per week within each phase. Secondary/process outcomes included micro-sprint adherence and technology acceptance (Perceived Usefulness, Perceived Ease of Use) collected after B2.

Inference relied on replicated on–off control within participants (A → B → A → B), immediacy of level change at phase boundaries, and phase-wise visual analysis of level, trend, and variability. Quantification used non-overlap indices (Tau-U with baseline-trend correction) and within-participant standardized mean differences, each with 95% confidence intervals. Given the pilot’s nature (n = 8), p-values were interpreted as exploratory.

We selected this staggered single-case AB–AB design rather than a parallel-group randomized controlled trial because the unit of change was within-instructor behaviour (time on screen and routine planning practices), the available sample was small and heterogeneous across courses, and running parallel sections posed a high contamination risk (spillovers in workflows and prompts). The AB–AB structure provided strong internal validity via replication within participants (withdrawal/reintroduction) and replication across participants (staggered baselines) while ensuring equitable access to the active condition. We chose this approach because single-case time-series paired with Tau-U effect sizes are robust to non-normality and allow trend-controlled estimates appropriate for short phases, making them well-suited for early-phase efficacy/feasibility evaluations in which rapid, reversible changes are expected and strict class-level randomization is impractical.

External validity and precision were limited by the small, single-site sample (n = 8); accordingly, we treated generalizability as a major limitation and framed the study as hypothesis-generating.

2.2. Setting and Participants

The study was carried out at a mid-sized private university in northern Peru. Eight full-time lecturers (four women; M age = 38.7 years, SD = 5.2) met all inclusion criteria: (i) a teaching load of ≥20 h per week; (ii) a baseline score of ≥48 on the 24-item Peruvian Digital Fatigue Questionnaire (FDU-24; wording minimally adapted for faculty), which corresponds to at least moderate digital fatigue (Yglesias-Alva et al., 2025); and (iii) willingness to share fully anonymised screen-time analytics. Ten lecturers responded to the e-mail invitation, but two were excluded because their FDU-24 scores were below the threshold. All eight eligible participants provided written informed consent. Given that the institution employs approximately 180 lecturers, the final sample represents about 4% of the faculty body.

2.3. Intervention

During each B phase, lecturers uploaded compulsory course readings (≤10,000-word PDF) to ChatGPT-4o once per workday with the following prompt:

“Summarise this text in ≤200 words, keep headings, and add three discussion questions.”

The AI summary replaced the source text for lesson planning; students did not receive the AI output.

2.4. Measures

Table 1 summarizes the instruments, their sources, and reliability indices calculated in the current sample.

The FDU-24 exhibited excellent internal consistency in this study (α = 0.93; ω = 0.92) and has published validation evidence in Peruvian university populations, supporting its use in higher-education settings. For technology acceptance, the perceived usefulness (PU) and perceived ease of use (PEOU) short forms showed good internal consistency (PU α = 0.90; PEOU α = 0.87). These coefficients, together with prior validation, support both the reliability of our measures and their external validity for instructor populations in this context.

2.5. Procedure

Table 2 details the sequence of phases, duration and variables collected in each stage of the AB–AB design.

2.6. Statistical Analysis

Time series were graphed for level, trend, and immediacy of effect. Non-overlap between phases was quantified with Tau-U (Klingbeil et al., 2019), corrected for baseline trend. Effect magnitudes were interpreted as small (<0.20), moderate (0.20–0.60) or large (>0.60) per Parker et al. (2011). Paired-phase Cohen’s d supplemented Tau-U for Baseline 1 vs. Intervention 1 and Withdrawal vs. Intervention 2. Two-tailed α = 0.05. Analyses were run in R 4.4.0 using packages scan and singleCaseES.
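To make the non-overlap index concrete, the following Python sketch implements one common formulation of Tau-U with baseline-trend correction, (S_AB − S_A) / (n_A · n_B), where S_AB is the Kendall S statistic from all baseline-versus-intervention pairs and S_A is the Kendall S for trend within the baseline. It is an illustration only: the study's analyses were run in R, the function names here are hypothetical, the example data are invented, and the R packages may differ in details such as the denominator or the handling of ties.

```python
def kendall_s_between(phase_a, phase_b):
    """Pairs where a B value exceeds an A value, minus pairs where
    it is lower (ties contribute zero)."""
    return sum((b > a) - (b < a) for a in phase_a for b in phase_b)


def kendall_s_within(phase_a):
    """Kendall S for monotonic trend inside one phase: later-minus-earlier
    comparisons over all ordered pairs of observations."""
    n = len(phase_a)
    return sum(
        (phase_a[j] > phase_a[i]) - (phase_a[j] < phase_a[i])
        for i in range(n)
        for j in range(i + 1, n)
    )


def tau_u(phase_a, phase_b):
    """Tau-U (A vs. B, corrected for baseline trend), assumed here as
    (S_AB - S_A) / (n_A * n_B)."""
    s_ab = kendall_s_between(phase_a, phase_b)
    s_a = kendall_s_within(phase_a)
    return (s_ab - s_a) / (len(phase_a) * len(phase_b))


def magnitude(tau):
    """Label |Tau-U| with the cut-offs quoted in the text."""
    size = abs(tau)
    if size < 0.20:
        return "small"
    if size <= 0.60:
        return "moderate"
    return "large"


# Invented daily screen-time values (minutes/day) for one lecturer.
a1 = [420, 430, 410, 425, 415]
b1 = [300, 310, 290, 305, 295]
effect = tau_u(a1, b1)
print(round(effect, 2), magnitude(effect))
```

Negative values indicate that intervention-phase observations tend to fall below baseline observations, which is the direction of improvement for both screen time and FDU-24 scores.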

2.7. Ethical Considerations

The protocol was approved by the Institutional Research Ethics Committee of Universidad Continental (CIEI-UC; protocol UC-2025-041, approved 12 January 2025). All procedures followed the Declaration of Helsinki and Peru’s Personal Data Protection Law 29733. Lecturers could withdraw at any time without penalty. Screen-time logs were hashed, stored on encrypted drives, and will be deleted five years post-publication.

3. Results

3.1. Descriptive Statistics

Table 3 summarizes descriptive statistics across phases. At baseline (A1), the mean FDU-24 total was 65.0 ± 7.4 (SE = 2.62; 95% CI [58.8, 71.2]) and mean daily screen time was 420 ± 42 min/day (SE = 14.85; 95% CI [384.9, 455.1]). During the first intervention (B1), these dropped to 50.8 ± 7.6 (SE = 2.69; 95% CI [44.4, 57.2]) and 298 ± 36 min (SE = 12.73; 95% CI [267.9, 328.1]), respectively, representing −14.2 points in FDU-24 (−21.8%) and −122 min/day (−29.0%) relative to A1. In the withdrawal phase (A2), values rebounded toward baseline (64.5 ± 7.0, SE = 2.48; 95% CI [58.6, 70.4]; 400 ± 44 min, SE = 15.56; 95% CI [363.2, 436.8]) and decreased again upon re-introduction (B2: 48.3 ± 6.6, SE = 2.33; 95% CI [42.8, 53.8]; 297 ± 48 min, SE = 16.97; 95% CI [256.9, 337.1]), amounting to −16.2 points in FDU-24 (−25.1%) and −103 min/day (−25.8%) versus A2.
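The standard errors, confidence intervals, and percentage changes reported above follow from simple arithmetic, sketched below. The sketch assumes that SE = SD/√n with n = 8 lecturers and that the intervals are t-based with 7 degrees of freedom (critical value ≈ 2.365, hard-coded here). The text does not state these choices, so they are inferences, although they are consistent with the reported baseline figures. Function names are hypothetical.

```python
from math import sqrt

# Two-sided 95% critical value of Student's t with 7 degrees of freedom
# (n = 8 lecturers). Hard-coded so that the sketch needs only the stdlib.
T_CRIT_DF7 = 2.365


def summary_interval(mean, sd, n=8, t_crit=T_CRIT_DF7):
    """Return (standard error, lower bound, upper bound) of a t-based CI."""
    se = sd / sqrt(n)
    half_width = t_crit * se
    return se, mean - half_width, mean + half_width


def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return 100 * (after - before) / before


# Baseline (A1) FDU-24 total: mean 65.0, SD 7.4.
se, low, high = summary_interval(65.0, 7.4)
print(round(se, 2), round(low, 1), round(high, 1))

# Change from A1 to B1 for FDU-24 and for daily screen time.
print(round(percent_change(65.0, 50.8), 1))
print(round(percent_change(420, 298), 1))
```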

3.2. Within-Participant Effect Sizes

Single-case non-overlap indices were consistently large. The overall median Tau-U across participants was −0.79 (95% CI −0.88 to −0.64). By phase contrast, the median Tau-U was −0.84 (95% CI −0.95 to −0.66) for A1 → B1 and −0.74 (95% CI −0.86 to −0.56) for A2 → B2, indicating replicated reductions across A → B transitions. Complementary paired-phase standardized mean differences were also large: A1 → B1, d = −1.67 (FDU-24) and −2.22 (screen time); A2 → B2, d = −1.87 (FDU-24) and −1.50 (screen time). These magnitudes align with the visual non-overlap and phase-level contrasts.

Table 4 summarizes single-case non-overlap indices and phase-level effect sizes.

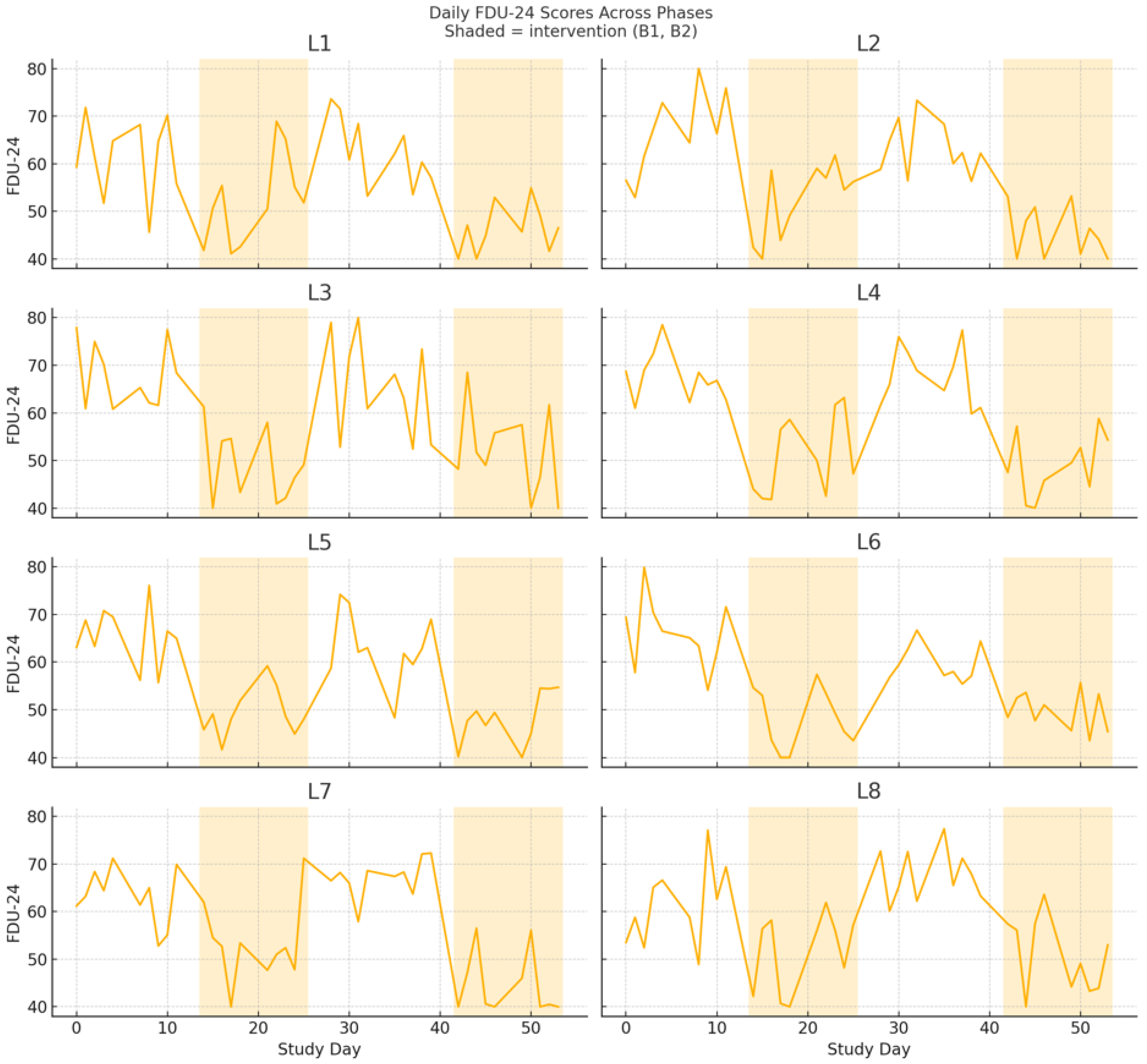

3.3. Individual Time-Series

Figure 1 shows immediate level changes at the start of each B phase for 7/8 lecturers (87.5%) within <2 days, followed by partial rebounds during A2 and renewed declines in B2. The replicated “on–off” control pattern is evident in both outcomes, with visibly lower medians and reduced within-phase dispersion during intervention phases.

3.4. Technology Acceptance

Post-intervention technology acceptance ratings were uniformly high: Perceived Usefulness (PU) = 6.2 ± 0.5, 95% CI [5.78, 6.62]; Perceived Ease of Use (PEOU) = 6.4 ± 0.4, 95% CI [6.07, 6.73] (1–7 scale). Every lecturer scored ≥5 on both subscales.

To contextualize the PU/PEOU patterns, we report brief, de-identified lecturer perceptions recorded during weekly check-ins and in the intervention logs. These included: “Short cycles helped me focus curation and cut late-night screen time” (L1); “Having ready-to-adapt summaries reduced ‘tab hopping’ and fatigue after classes” (L2); and “I reduced prep time from 90 to 45 min” (L3). These perceptions were consistent with higher perceived usefulness and ease of use and align with the hypothesized time-and-attention mechanism.

Table 5 summarizes post-intervention technology acceptance ratings.

3.5. Treatment Fidelity and Adverse Events

Protocol adherence averaged 96% (range 90–100%) across B phases based on weekly log audits. Sensitivity analyses (excluding first day of each phase to remove start-up transients) yielded comparable Tau-U and d magnitudes and did not alter conclusions. No adverse events were reported during weekly check-ins.

4. Discussion

Embedding a daily 15-min “ChatGPT micro-sprint” at the start of lesson-planning sessions, supplemented by a weekly 15-min check-in, cut instructors’ screen exposure by ≈122 min/day (≈10 h/week) and lowered multidimensional digital fatigue scores by ≈22–25%. The baseline was 420 min/day, so the reduction represents ~29% of initial exposure. Because the protocol itself requires only 1.5 h/week (15 min × 5 days + a 15-min check-in), every hour invested yields roughly a 7 h return, a time-efficiency ratio of about 7:1. These gains were corroborated by a Tau-U of −0.79 and Cohen’s d values between −1.5 and −2.2, effect sizes seldom reported in faculty well-being interventions. To our knowledge, this is the first experimental evidence that a generative-AI workflow can simultaneously reduce screen exposure and mitigate digital fatigue among university teachers, a dual benefit with direct implications for productivity and occupational health.

Our outcomes index time cost (daily screen time) and self-reported digital workload burden (FDU-24), but we did not directly evaluate the quality of lecturers’ work while using ChatGPT. Consequently, our findings should be interpreted as evidence of feasibility and workload reduction, not as proof of quality equivalence. Future studies should incorporate triangulated quality measures, such as (i) blinded rubric-based ratings of teaching artifacts (syllabi revisions, feedback given to students, and assessment prompts), (ii) structured classroom/online-session observations, (iii) learner and peer evaluations, and (iv) qualitative methods (think-aloud protocols, interviews, and diary studies) to trace how ChatGPT is used, where human oversight occurs, and whether any unintended degradation or improvement in instructional quality emerges.

Our ~10 h/week gain is more modest than both the 22.2 h/week savings reported by Gupta et al. (2024) and the five-fold reduction in faculty grading workload (≈150 h saved, or ≈80% less grading time once the AI workflow stabilised) documented by Sreedhar et al. (2025). This span suggests that workload relief scales with task complexity and the maturity of AI workflows. While prior work shows that generative AI lifts perceived efficiency and self-efficacy among higher-education faculty (Mah & Groß, 2024), empirical data on well-being outcomes remain scarce. Our findings extend a growing body of technostress research, in which excessive ICT demands erode job satisfaction and health (Marrinhas et al., 2023; Nascimento et al., 2025; Saleem & Malik, 2023; Yang et al., 2025), by demonstrating that the very technology often blamed for overload can also remove a demand (screen time) and add a resource (creative scaffolding) when deployed strategically. That pattern aligns with recent JD-R extensions that treat AI capabilities as a resource moderating techno-strain (Chuang et al., 2025).

By coupling reduced demands (shorter exposure, lower cognitive load) with augmented resources (automation, immediate feedback), generative AI seems to tilt the JD-R balance toward motivational pathways, echoing the systematic review by Belkina et al. (2025), in which workload relief is a recurrent benefit in higher education. Although fear of misuse predicts lower uptake (Verano-Tacoronte et al., 2025), instructors who move to daily GenAI use are already reporting lighter workloads (D2L, 2025), suggesting a potential fear-relief cycle worth testing in longitudinal designs.

Institutions could operationalise our findings in four complementary ways. First, a “micro-sprint” protocol: by reserving the opening 15 min of each planning meeting for a ChatGPT session, departments can release roughly ten hours of instructor time per week without adding screen burden (present study; qualitative perception of efficiency reported in Lee et al., 2024). Second, prompt-engineering clinics: professional-development workshops that teach cognitive off-loading prompts, rather than creative-writing tricks alone, would magnify the fatigue-reduction effect we observed (Diyab et al., 2025; Martin et al., 2025). Third, equity safeguards: because qualitative evidence documents high technostress among lecturers in Latin-American universities with limited digital infrastructure (Herrera-Sánchez et al., 2023), institutions could adopt campus-wide licenses that give lecturers free ChatGPT-4 access, an approach already implemented at Duke University (Duke OIT, 2024) and several U.S. state-system campuses (OpenAI, 2024; Palmer, 2025), and complement it with basic ergonomic-wellness training to mitigate screen-related strain (Buda & Kovács, 2024). Finally, well-being dashboards: learning-management systems should embed the FDU-24 scale, which was recently validated with university students and displayed values of α = 0.93 and ω = 0.92 in our faculty sample (Yglesias-Alva et al., 2025), to flag excessive after-hours activity and nudge lecturers toward AI-generated summaries as a healthier alternative. This aligns with UNESCO’s Guidance for Generative AI in Education and Research, which calls for immediate actions and long-term capacity-building to ensure a human-centred, teacher-supportive implementation of GenAI; our micro-sprint model operationalizes these recommendations in practice (UNESCO, 2023).

Strengths include a replicated AB–AB single-case design with staggered baselines, high treatment fidelity, and multidimensional workload/fatigue measurement, which strengthen internal validity. Notwithstanding these strengths, several limitations warrant being noted. First, we did not directly assess instructional quality or learner outcomes; therefore, the observed workload reductions should not be interpreted as evidence of quality equivalence. Second, the single-site, small-N lecturer sample (n = 8) limits precision and external validity. Third, the non-randomized AB–AB design with a fixed phase order and a short observation window restricts causal inference and durability claims; carry-over, maturation, and history effects cannot be fully excluded despite the on–off replication. Fourth, outcomes relied on self-report and device-based traces that may miss multi-device activity or misclassify apps; reactivity is also possible. Fifth, the study lacked blinding and an active-control AI condition, so expectancy/Hawthorne and novelty effects may contribute and constrain attribution to ChatGPT per se. Finally, we did not audit content integrity/quality of AI-assisted deliverables (e.g., blinded rubric ratings, originality checks, alignment with institutional policy). Accordingly, findings should be regarded as preliminary and hypothesis-generating.

Future work should include large, multi-site randomized trials that compare ChatGPT with non-AI support tools, incorporate ≥6-month follow-up, and assess cost–effectiveness. Studies should also model mediators (e.g., prompt complexity, prior AI experience) and technostress creators/inhibitors to pinpoint for whom and under what conditions generative AI delivers wellness gains, thereby advancing SDG 4.c on better-supported, well-qualified teachers (United Nations, 2015).

5. Conclusions

In a staggered single-case AB–AB design with eight lecturers, a chat-delivered, generative-AI-assisted short-cycle content-curation protocol produced immediate, replicated reductions in digital fatigue and daily screen time (large Tau-U and Cohen’s d), alongside high acceptance, 96% fidelity, and no adverse events. Theoretically, the intervention operationalizes a JD–R resource (time-and-attention); practically, it is low cost and readily scalable within routine faculty development. While preliminary, these signals offer a concrete, replicable pathway for institutions seeking to mitigate digital fatigue without adding workload.

Strengths include a replicated AB–AB design, high treatment fidelity, and multidimensional fatigue measurement; limitations include a small, single-site sample, reliance on self-logged analytics, and the absence of an active-control AI condition, which constrain generalizability and durability claims.

Next-step studies should: (i) integrate neuroergonomic/neuroscience markers of fatigue—e.g., oculometrics and attentional-lapse tasks, heart-rate variability, and, where feasible, non-invasive EEG—to triangulate self-report and test mechanisms; (ii) run cost–benefit or cost-effectiveness evaluations that monetize time saved against training, licensing, and governance costs (with sensitivity analyses at the course and department levels); (iii) conduct multi-site pragmatic trials (e.g., cluster RCTs with active controls) to assess generalizability, heterogeneity of effects, and dose–response; and (iv) examine long-term sustainability and policy alignment, embedding well-being metrics into quality-assurance and faculty-development cycles.

In sum, a concise “content-curation sprint” with generative AI can alleviate digital overload while preserving workload feasibility, offering a pragmatic pathway for institutions balancing pedagogical innovation with educator well-being.

Author Contributions

Conceptualization, R.C.D.-M. and V.T.C.B.; methodology, R.C.D.-M.; software, R.C.D.-M.; validation, R.C.D.-M., L.M.H.R. and H.R.G.; formal analysis, R.C.D.-M.; investigation, V.T.C.B., L.M.H.R., A.A.L., J.C.R.C., F.V.P., M.A.D.l.C.C., H.R.G. and A.M.G.M.; resources, V.T.C.B., L.M.H.R., A.A.L., J.C.R.C., F.V.P., M.A.D.l.C.C., H.R.G. and A.M.G.M.; data curation, R.C.D.-M. and A.A.L.; writing—original draft preparation, R.C.D.-M.; writing—review and editing, R.C.D.-M., V.T.C.B., L.M.H.R., A.A.L., J.C.R.C., F.V.P., M.A.D.l.C.C., H.R.G. and A.M.G.M.; visualization, R.C.D.-M.; supervision, R.C.D.-M.; project administration, R.C.D.-M.; funding acquisition, R.C.D.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of Universidad Continental (protocol code: UC-2025-041, date of approval: 12 January 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- American Academy of Ophthalmology. (2025). Computer vision syndrome (Digital eye strain)—EyeWiki. EyeWiki. Available online: https://eyewiki.org/Computer_Vision_Syndrome_(Digital_Eye_Strain) (accessed on 31 August 2025).

- Bailenson, J. N. (2021). Nonverbal overload: A theoretical argument for the causes of Zoom fatigue. Technology, Mind, and Behavior, 2(1).

- Bakker, A. B., Demerouti, E., & Sanz-Vergel, A. (2023). Job demands–resources theory: Ten years later. Annual Review of Organizational Psychology and Organizational Behavior, 10(1), 25–53.

- Baumeister, V. M., Kuen, L. P., Bruckes, M., & Schewe, G. (2021). The relationship of work-related ICT use with well-being, incorporating the role of resources and demands: A meta-analysis. Sage Open, 11(4), 21582440211061560.

- Belkina, M., Daniel, S., Nikolic, S., Haque, R., Lyden, S., Neal, P., Grundy, S., & Hassan, G. M. (2025). Implementing generative AI (GenAI) in higher education: A systematic review of case studies. Computers and Education: Artificial Intelligence, 8, 100407.

- Buda, A., & Kovács, K. (2024). The digital aspects of the wellbeing of university teachers. Frontiers in Education, 9, 1406141.

- Chuang, Y.-T., Chiang, H.-L., & Lin, A.-P. (2025). Insights from the job demands–resources model: AI’s dual impact on employees’ work and life well-being. International Journal of Information Management, 83, 102887.

- D2L. (2025). New research data reveals that faculty workload can drop with daily AI use. D2L. Available online: https://www.d2l.com/newsroom/tyton_partners_report_examines_ai_in_higher_education/ (accessed on 2 August 2025).

- Diyab, A., Frost, R. M., Fedoruk, B. D., & Diyab, A. (2025). Engineered prompts in ChatGPT for educational assessment in software engineering and computer science. Education Sciences, 15(2), 156.

- Duke OIT. (2024). ChatGPT Edu | Office of Information Technology. Available online: https://oit.duke.edu/service/chatgpt-edu/ (accessed on 2 August 2025).

- Fauville, G., Luo, M., Queiroz, A. C. M., Bailenson, J. N., & Hancock, J. (2021). Zoom exhaustion & fatigue scale. Computers in Human Behavior Reports, 4, 100119.

- Gupta, M. S., Kumar, N., & Rao, V. (2024, November 30). AI and teacher productivity: A quantitative analysis of time-saving and workload reduction in education. Proceedings, International Conference on Advancing Synergies in Science, Engineering, and Management (ASEM-2024), Saharanpur, India. Available online: https://www.researchgate.net/publication/387980511_AI_and_Teacher_Productivity_A_Quantitative_Analysis_of_Time-Saving_and_Workload_Reduction_in_Education (accessed on 1 August 2025).

- Herrera-Sánchez, M. J., Casanova-Villalba, C. I., Bravo Bravo, I. F., & Barba Mosquera, A. E. (2023). Estudio comparativo de las desigualdades en el tecnoestrés entre instituciones de educación superior en América Latina y Europa. Código Científico Revista de Investigación, 4(2), 1288–1303.

- Kaur, K., Gurnani, B., Nayak, S., Deori, N., Kaur, S., Jethani, J., Singh, D., Agarkar, S., Hussaindeen, J. R., Sukhija, J., & Mishra, D. (2022). Digital eye strain: A comprehensive review. Ophthalmology and Therapy, 11(5), 1655–1680.

- Klingbeil, D. A., Van Norman, E. R., McLendon, K. E., Ross, S. G., & Begeny, J. C. (2019). Evaluating Tau-U with oral reading fluency data and the impact of measurement error. Behavior Modification, 43(3), 413–438.

- Kratochwill, T. R., Mission, P., & Hagermoser, E. (2015). Single-case experimental designs. In The encyclopedia of clinical psychology (pp. 1–12). John Wiley & Sons, Ltd.

- Lee, D., Arnold, M., Srivastava, A., Plastow, K., Strelan, P., Ploeckl, F., Lekkas, D., & Palmer, E. (2024). The impact of generative AI on higher education learning and teaching: A study of educators’ perspectives. Computers and Education: Artificial Intelligence, 6, 100221.

- Li, B. J., & Yee, A. Z. H. (2023). Understanding videoconference fatigue: A systematic review of dimensions, antecedents and theories. Internet Research, 33(2), 796–819.

- Li, B. J., Zhang, H., & Montag, C. (2024). Too much to process? Exploring the relationships between communication and information overload and videoconference fatigue. PLoS ONE, 19(12), e0312376.

- Lillelien, K., & Jensen, M. T. (2025). Digital and digitized interventions for teachers’ professional well-being: A systematic review of work engagement and burnout using the job demands–resources theory. Education Sciences, 15(7), 799.

- Mah, D.-K., & Groß, N. (2024). Artificial intelligence in higher education: Exploring faculty use, self-efficacy, distinct profiles, and professional development needs. International Journal of Educational Technology in Higher Education, 21(1), 58.

- Marrinhas, D., Santos, V., Salvado, C., Pedrosa, D., & Pereira, A. (2023). Burnout and technostress during the COVID-19 pandemic: The perception of higher education teachers and researchers. Frontiers in Education, 8, 1144220.

- Marsh, E., Perez Vallejos, E., & Spence, A. (2024). Digital workplace technology intensity: Qualitative insights on employee wellbeing impacts of digital workplace job demands. Frontiers in Organizational Psychology, 2, 1392997.

- Martin, A. J., Collie, R. J., Kennett, R., Liu, D., Ginns, P., Sudimantara, L. B., Dewi, E. W., & Rüschenpöhler, L. G. (2025). Integrating generative AI and load reduction instruction to individualize and optimize students’ learning. Learning and Individual Differences, 121, 102723.

- Molino, M., Ingusci, E., Signore, F., Manuti, A., Giancaspro, M. L., Russo, V., Zito, M., & Cortese, C. G. (2020). Wellbeing costs of technology use during COVID-19 remote working: An investigation using the Italian translation of the technostress creators scale. Sustainability, 12(15), 5911.

- Nascimento, L., Correia, M. F., & Califf, C. B. (2025). Techno-eustress under remote work: A longitudinal study in higher education teachers. Education and Information Technologies, 30, 16633–16670.

- Oducado, R. M. F., Dequilla, M. A. C. V., & Villaruz, J. F. (2022). Factors predicting videoconferencing fatigue among higher education faculty. Education and Information Technologies, 27(7), 9713–9724.

- OECD. (2024). Education policy outlook 2024: Reshaping teaching into a thriving profession from ABCs to AI. OECD.

- OpenAI. (2024). Presentamos ChatGPT Edu. OpenAI. Available online: https://openai.com/es-ES/index/introducing-chatgpt-edu/ (accessed on 3 August 2025).

- Palmer, K. (2025). Faculty latest targets of big tech’s AI-ification of higher Ed. Inside Higher Ed. Available online: https://www.insidehighered.com/news/faculty-issues/learning-assessment/2025/08/01/faculty-are-latest-targets-higher-eds-ai (accessed on 3 August 2025).

- Parker, R. I., Vannest, K. J., Davis, J. L., & Sauber, S. B. (2011). Combining nonoverlap and trend for single-case research: Tau-U. Behavior Therapy, 42(2), 284–299.

- Pucker, A., Kerr, A., Sanderson, J., & Lievens, C. (2024). Digital eye strain: Updated perspectives. Clinical Optometry, 16, 233–246.

- Rafael Pantoja, M. (2025). Tecnoestrés y desempeño profesional en docentes de una universidad pública de Huaraz 2024 [Master’s thesis, Universidad Católica de Trujillo Benedicto XVI]. Available online: https://repositorio.uct.edu.pe/items/5eb28156-09db-4fce-878d-10a211b4df92 (accessed on 7 July 2025).

- Rauniar, R., Rawski, G., Yang, J., & Johnson, B. (2014). Technology acceptance model (TAM) and social media usage: An empirical study on Facebook. Journal of Enterprise Information Management, 27(1), 6–30.

- Romero-Rodríguez, J.-M., Hinojo-Lucena, F.-J., Kopecký, K., & García-González, A. (2023). Fatiga digital en estudiantes universitarios como consecuencia de la enseñanza online durante la pandemia COVID-19. Educación XX1, 26(2), 165–184.

- Saleem, F., & Malik, M. I. (2023). Technostress, quality of work life, and job performance: A moderated mediation model. Behavioral Sciences, 13(12), 1014.

- Sheppard, A. L., & Wolffsohn, J. S. (2018). Digital eye strain: Prevalence, measurement and amelioration. BMJ Open Ophthalmology, 3(1), e000146.

- Sreedhar, R., Chang, L., Gangopadhyaya, A., Shiels, P. W., Loza, J., Chi, E., Gabel, E., & Park, Y. S. (2025). Comparing scoring consistency of large language models with faculty for formative assessments in medical education. Journal of General Internal Medicine, 40(1), 127–134.

- UNESCO. (2023). Guidance for generative AI in education and research. UNESCO.

- UniSA. (2024). Uni sector scores poor report card when it comes to workplace health. Available online: https://unisa.edu.au/media-centre/Releases/2024/uni-sector-scores-poor-report-card-when-it-comes-to-workplace-health/ (accessed on 7 July 2025).

- United Nations. (2015). THE 17 GOALS | Sustainable development. Available online: https://sdgs.un.org/goals (accessed on 7 August 2025).

- Verano-Tacoronte, D., Bolívar-Cruz, A., & Sosa-Cabrera, S. (2025). Are university teachers ready for generative artificial intelligence? Unpacking faculty anxiety in the ChatGPT era. Education and Information Technologies.

- Ward, C. E., & Harunavamwe, M. (2025). The role of perceived organisational support on technostress and work–family conflict. SA Journal of Industrial Psychology, 51(0), a2218.

- WGU Labs. (2024). 2024 CIN faculty EdTech survey: EdTech and the evolving role of faculty. WGU Labs. Available online: https://www.wgulabs.org/posts/2024-cin-faculty-edtech-survey-edtech-and-the-evolving-role-of-faculty (accessed on 7 July 2025).

- Yang, D., Liu, J., Wang, H., Chen, P., Wang, C., & Metwally, A. H. S. (2025). Technostress among teachers: A systematic literature review and future research agenda. Computers in Human Behavior, 168, 108619.

- Yglesias-Alva, L. A., Estrada-Alva, L. A., Lizarzaburu-Montero, L. M., Miranda-Troncoso, A. E., Vera-Calmet, V. G., & Aguilar-Armas, H. M. (2025). Digital fatigue in Peruvian University students: Design and validation of a multidimensional questionnaire. Journal of Educational and Social Research, 15(3), 431.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).