Students’ Perceptions of Generative AI Image Tools in Design Education: Insights from Architectural Education

Abstract

1. Introduction

2. Methods

3. Results

3.1. Descriptive Overview of Student Responses

3.1.1. Ethical Responsibility of Using AI-Generated Images

3.1.2. Integration of AI-Generated Images in Design Education

3.1.3. Use of AI-Generated Images in the Design Process of Studio Projects

3.1.4. Impact of AI-Generated Images on Future Careers

3.2. Correlation Analysis

3.3. MANOVA Analysis

4. Discussion

4.1. Foundational Issues

4.1.1. Ethics and Disclosure

4.1.2. Authorship and Human-Centered Design Decisions

4.2. Contextual Insights

4.2.1. Job Security and Future Professional Roles

4.2.2. Tool Usability and Prompt Literacy

4.2.3. Detectability and Perceived Technological Trajectory

4.2.4. Reflection on GenAI Workshop

5. Limitations and Future Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GenAI | Generative Artificial Intelligence |
| IR | Instructor Responsibility to detect |
| DA | Design Authenticity |
| LN | Learning Necessity |
| EU | Excitement to Use |
| SA | Student Acknowledgment |
| IS | Institutional Support |
| ED | Ease to Detect |
| TT | Temporal Trend |
| SC | Student Competitiveness |
| FI | Future Impact |
| NFC | Necessity for Future Career |
| FJS | Future Job Security |
| EFU | Expectation for Future Use |
| N_CD | Never allowed Concept Design |
| A_CD | Acceptable with acknowledgment Concept Design |
| N_DD | Never allowed Design Development |
| A_DD | Acceptable with acknowledgment Design Development |
| N_FP | Never allowed Final Presentation |
| A_FP | Acceptable with acknowledgment Final Presentation |

Appendix A

- Male

- Female

- Non-binary/third gender

- Prefer to self-describe

- Prefer not to say

- White

- Black or African American

- American Indian or Alaska Native

- Asian

- Native Hawaiian or Pacific Islander

- Other (specify)

- Less than high school diploma

- High School degree or equivalent (e.g., GED)

- Bachelor’s degree (e.g., BA, BS)

- Master’s degree (e.g., MA, MS, MEd)

- Doctorate (e.g., PhD, EdD)

- Others (please specify)

- IARD (Interior Architecture)

- ARCH (Architecture)

- Others (please specify)

- 1st

- 2nd

- 3rd

- 4th

- 5th

- Others (please specify)

- Whenever a student uses AI-generated images in their work, they should acknowledge the use of an AI image-generated platform.

- It is the responsibility of teachers and professors to detect if AI-generated images were used in students’ work.

- I believe that my institution will be supportive of the usage of AI-generated images to assist students with their design.

- The use of AI-generated images in students’ work can be easily detected.

- The use of AI-generated images is unethical in the context of the authenticity of the design project.

- AI-generated images are hyped at the moment only because they are new and unique, but soon the hype will be over.

- AI-generated images will contribute to increasing the competitiveness of students who are not confident in creativity and design capability.

- I need to learn how to use AI-generated image platforms to improve my performance as a designer.

- I am excited about using AI-generated images as a future architectural designer.

- AI-generated images will critically affect architectural design disciplines in my future career.

- I will need to use AI-generated images to be competitive in my future career.

- I worry that AI-generated images will take my job in the future.

- In my future job, I will be able to outsource creative visualizing tasks to AI-generated images while I focus on critical problem-solving aspects.

- The use of AI-generated images in the CONCEPT DESIGN process as an inspiration tool should not be allowed in design, regardless of whether the student acknowledges it.

- Using AI-generated images in the CONCEPT DESIGN process, as an inspiration tool, could be acceptable if the student acknowledges it.

- The use of AI-generated images in DESIGN DEVELOPMENT, especially for developing material, lighting, and colors, should not be allowed in design, regardless of whether the student acknowledges it.

- Using AI-generated images in DESIGN DEVELOPMENT, especially for developing material, lighting, and colors, could be acceptable if the student acknowledges it.

- The use of AI-generated images in the PRESENTATION (such as in a final review) as a final rendering image should not be allowed in design, regardless of whether the student acknowledges it.

- Using AI-generated images in the PRESENTATION (such as in a final review) as a final rendering image could be acceptable if the student acknowledges it.

Appendix B

| Item | 1 Ethical Responsibility and Personal Attitudes | 2 Acknowledgment and Institutional Support | 3 Perceived Impact on Career and Future Use |
|---|---|---|---|
| IR * Instructor Responsibility to detect | 0.377 | −0.002 | −0.494 |
| DA * Design Authenticity | 0.156 | 0.615 | −0.543 |
| LN Learning Necessity | 0.583 | 0.251 | −0.411 |
| EU Excitement to Use | 0.266 | 0.269 | −0.622 |
| SA Student Acknowledgment | −0.347 | 0.569 | 0.377 |
| IS Institutional Support | −0.252 | 0.787 | 0.330 |
| ED Ease to Detect | 0.493 | 0.239 | 0.324 |
| TT * Temporal Trend | 0.536 | −0.101 | −0.035 |
| SC Student Competitiveness | 0.252 | 0.344 | 0.395 |
| FI Future Impact | 0.539 | −0.206 | 0.465 |
| NFC Necessity for Future Career | 0.445 | 0.186 | 0.476 |
| FJS Future Job Security | 0.314 | −0.152 | 0.531 |
| EFU Expectation for Future Use | 0.543 | 0.015 | 0.038 |

Note. ‘*’ indicates items that were reverse-coded for Cronbach’s alpha and Principal Component Analysis.
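The Cronbach’s alpha values reported for each category (α = 0.66, 0.72, 0.62, 0.78) depend on the reverse-coding described in the note above. The raw responses are not part of this article, so the following is only a minimal Python sketch on hypothetical toy data. It assumes a 1–5 Likert scale (so a reverse-keyed item x becomes 6 − x; the scale range is an assumption) and uses the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores).

```python
from statistics import variance

# Assumption: items are scored on a 1-5 Likert scale (not stated in the tables).
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_code(scores: list[int]) -> list[int]:
    """Recode a reverse-keyed item (marked '*') so that x becomes (MIN + MAX) - x."""
    return [SCALE_MIN + SCALE_MAX - s for s in scores]


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items; each inner list holds one item's scores
    for the same respondents, in the same order.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")
    item_variance_sum = sum(variance(item) for item in items)
    # Each respondent's total score across all items in the category.
    totals = [sum(item[r] for item in items) for r in range(n)]
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical toy responses from five respondents (not the study's data).
# item_b is worded in the opposite direction, so it is reverse-coded first.
item_a = [1, 2, 3, 4, 5]
item_b = [5, 4, 3, 2, 1]
print(cronbach_alpha([item_a, reverse_code(item_b)]))  # prints 1.0
```

Without reverse-coding, item_b would pull the total scores toward a constant and alpha would collapse, which is why starred items are recoded before alpha and PCA are computed.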


| Characteristic (n = 42) | n | % |
|---|---|---|
| Gender | | |
| Female | 35 | 83.3 |
| Male | 6 | 14.3 |
| Prefer not to say | 1 | 2.4 |
| Race | | |
| White | 36 | 85.7 |
| Hispanic or Latino | 3 | 7.1 |
| American Indian or Alaska Native | 2 | 4.8 |
| Asian | 1 | 2.4 |
| Major and Years | | |
| Architecture | 10 | 23.8 |
| Second years | 3 | 7.1 |
| Third years | 2 | 4.8 |
| Fourth years | 1 | 2.4 |
| Fifth years | 4 | 9.5 |
| Interior Architecture | 32 | 76.2 |
| First years | 6 | 14.3 |
| Second years | 10 | 23.8 |
| Third years | 3 | 7.1 |
| Fourth years | 13 | 31.0 |
| Age (Mean) | 21.78 | |

| Component | Initial Eigenvalues (Total) | % of Variance | Cumulative % |
|---|---|---|---|
| 1 Ethical Responsibility and Personal Attitudes | 2.24 | 17.21 | 35.16 |
| 2 Acknowledgment and Institutional Support | 1.74 | 13.40 | 48.57 |
| 3 Perceived Impact on Career and Future Use | 2.33 | 17.95 | 17.95 |
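The % of Variance and Cumulative % columns can be checked against the eigenvalues. Assuming the PCA was run on the correlation matrix of the 13 items listed in Appendix B (so the total variance equals 13), each component explains eigenvalue/13 of the variance, and the cumulative column accumulates in extraction order, that is, by decreasing eigenvalue. This is why Component 3 (eigenvalue 2.33) carries the first cumulative value, 17.95%. A minimal Python sketch of that arithmetic:

```python
# Eigenvalues as reported in the table above (rounded to two decimals).
EIGENVALUES = {
    "1 Ethical Responsibility and Personal Attitudes": 2.24,
    "2 Acknowledgment and Institutional Support": 1.74,
    "3 Perceived Impact on Career and Future Use": 2.33,
}
# Assumption: PCA on the correlation matrix of the 13 items in Appendix B,
# so the total variance to be explained equals the number of items.
N_ITEMS = 13


def variance_table(eigenvalues: dict[str, float], n_items: int) -> list[tuple[str, float, float]]:
    """Return (component, % of variance, cumulative %) in extraction order."""
    rows = []
    cumulative = 0.0
    # Components are extracted in order of decreasing eigenvalue.
    for name, eig in sorted(eigenvalues.items(), key=lambda kv: kv[1], reverse=True):
        pct = 100.0 * eig / n_items
        cumulative += pct
        rows.append((name, pct, cumulative))
    return rows


for name, pct, cum in variance_table(EIGENVALUES, N_ITEMS):
    print(f"{name}: {pct:.2f}% (cumulative {cum:.2f}%)")
```

Because the eigenvalues are rounded to two decimals, the recomputed percentages (17.92, 17.23, 13.38; cumulative 17.92, 35.15, 48.54) differ from the table by a few hundredths.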

Category 1 Ethical Responsibility and Personal Attitudes (α = 0.66)

| Item | Statement | M | SD | PCA loading |
|---|---|---|---|---|
| IR * Instructor Responsibility to detect | It is the responsibility of teachers (professors) to detect if AI-generated images were used in students’ work. | 2.88 | 0.97 | 0.54 |
| DA * Design Authenticity | The use of AI-generated images is unethical in the context of the authenticity of the design project. | 2.00 | 0.86 | 0.78 |
| LN Learning Necessity | I need to learn how to use AI-generated image platforms to improve my performance as a designer. | 3.62 | 1.15 | 0.70 |
| EU Excitement to Use | I am excited about using AI-generated images as a future architectural designer. | 4.12 | 0.94 | 0.71 |

Category 2 Acknowledgment and Institutional Support (α = 0.72)

| Item | Statement | M | SD | PCA loading |
|---|---|---|---|---|
| SA Student Acknowledgment | Whenever a student uses AI-generated images in their work, they should acknowledge the use of an AI image-generated platform. | 4.40 | 0.99 | 0.75 |
| IS Institutional Support | I believe that my institution will be supportive of the usage of AI-generated images to assist students with their design. | 3.98 | 1.02 | 0.89 |

Category 3 Perceived Impact on Career and Future Use (α = 0.62)

| Item | Statement | M | SD | PCA loading |
|---|---|---|---|---|
| ED Ease to Detect | The use of AI-generated images in students’ work can be easily detected. | 3.74 | 1.06 | 0.61 |
| TT * Temporal Trend | AI-generated images are hyped at the moment only because they are new and unique, but soon the hype will be over. | 2.45 | 0.94 | 0.41 |
| SC Student Competitiveness | AI-generated images will contribute to increasing the competitiveness of students who are not confident in creativity and design capability. | 3.64 | 1.12 | 0.46 |
| FI Future Impact | AI-generated images will critically affect architectural design disciplines in my future career. | 3.79 | 1.00 | 0.69 |
| NFC Necessity for Future Career | I will need to use AI-generated images to be competitive in my future career. | 3.36 | 1.14 | 0.65 |
| FJS Future Job Security | I worry that AI-generated images will take my job in the future. | 2.43 | 1.29 | 0.55 |
| EFU Expectation for Future Use | In my future job, I will be able to outsource creative visualizing tasks to AI-generated images while I focus on critical problem-solving aspects. | 3.74 | 0.94 | 0.47 |

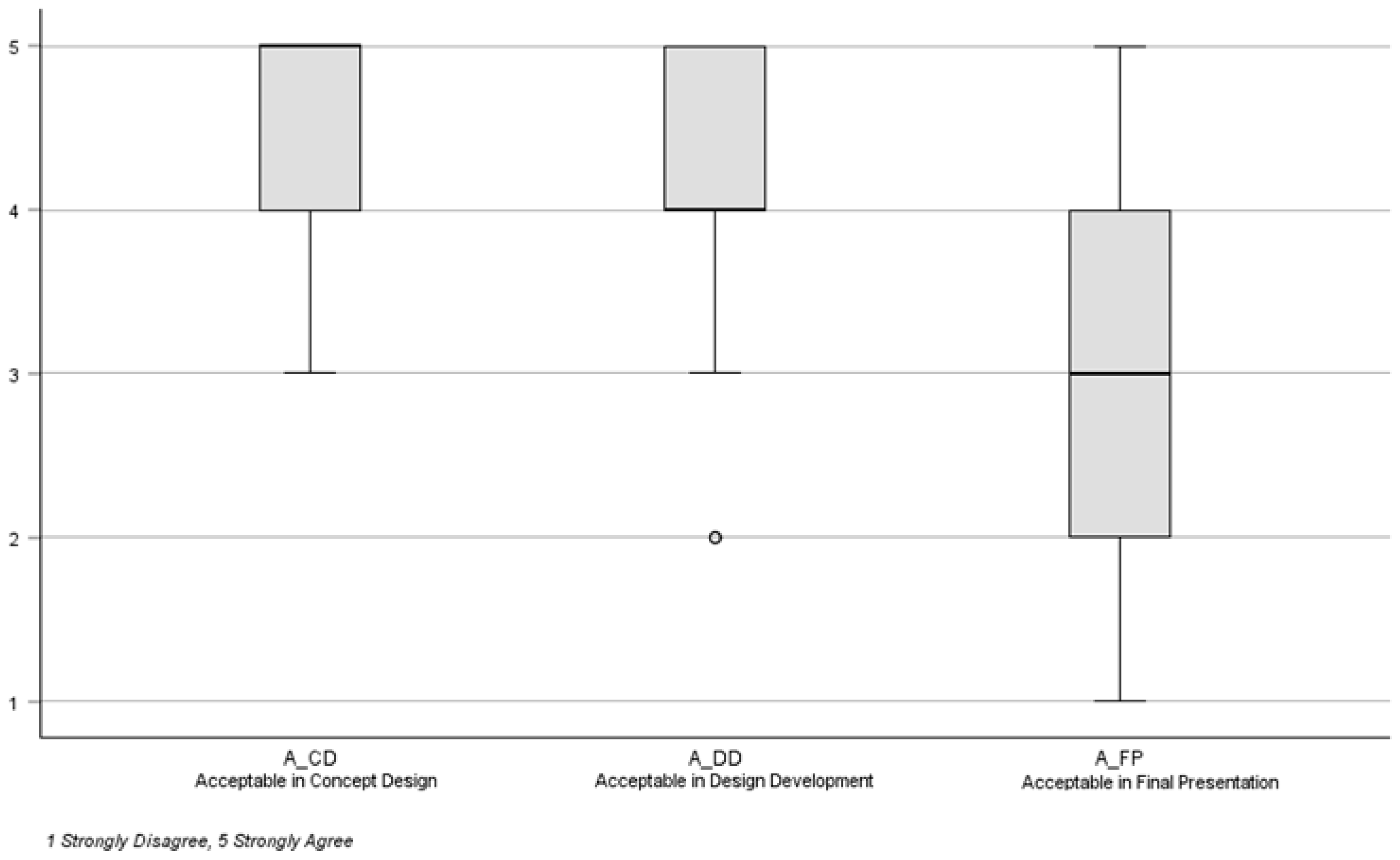

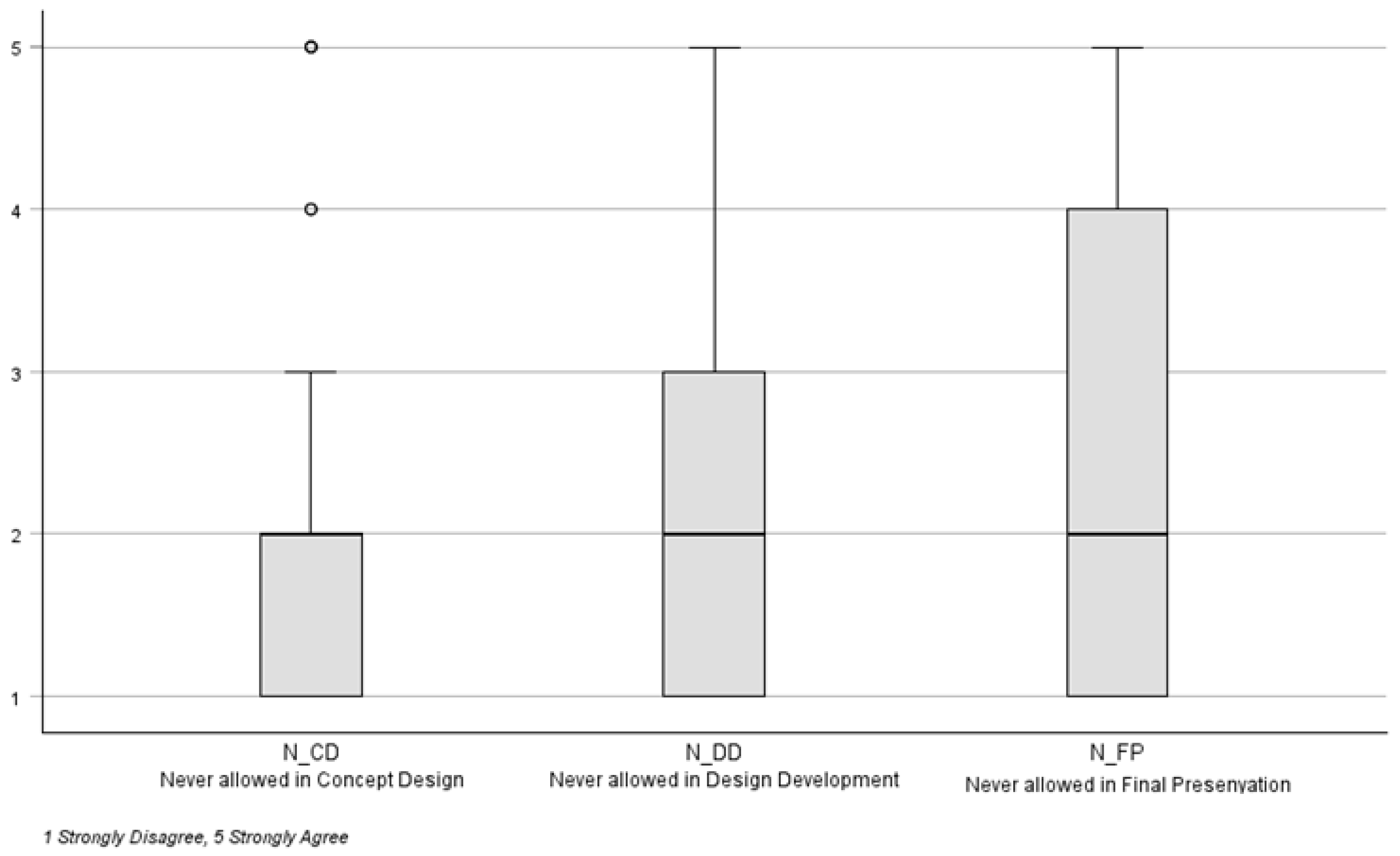

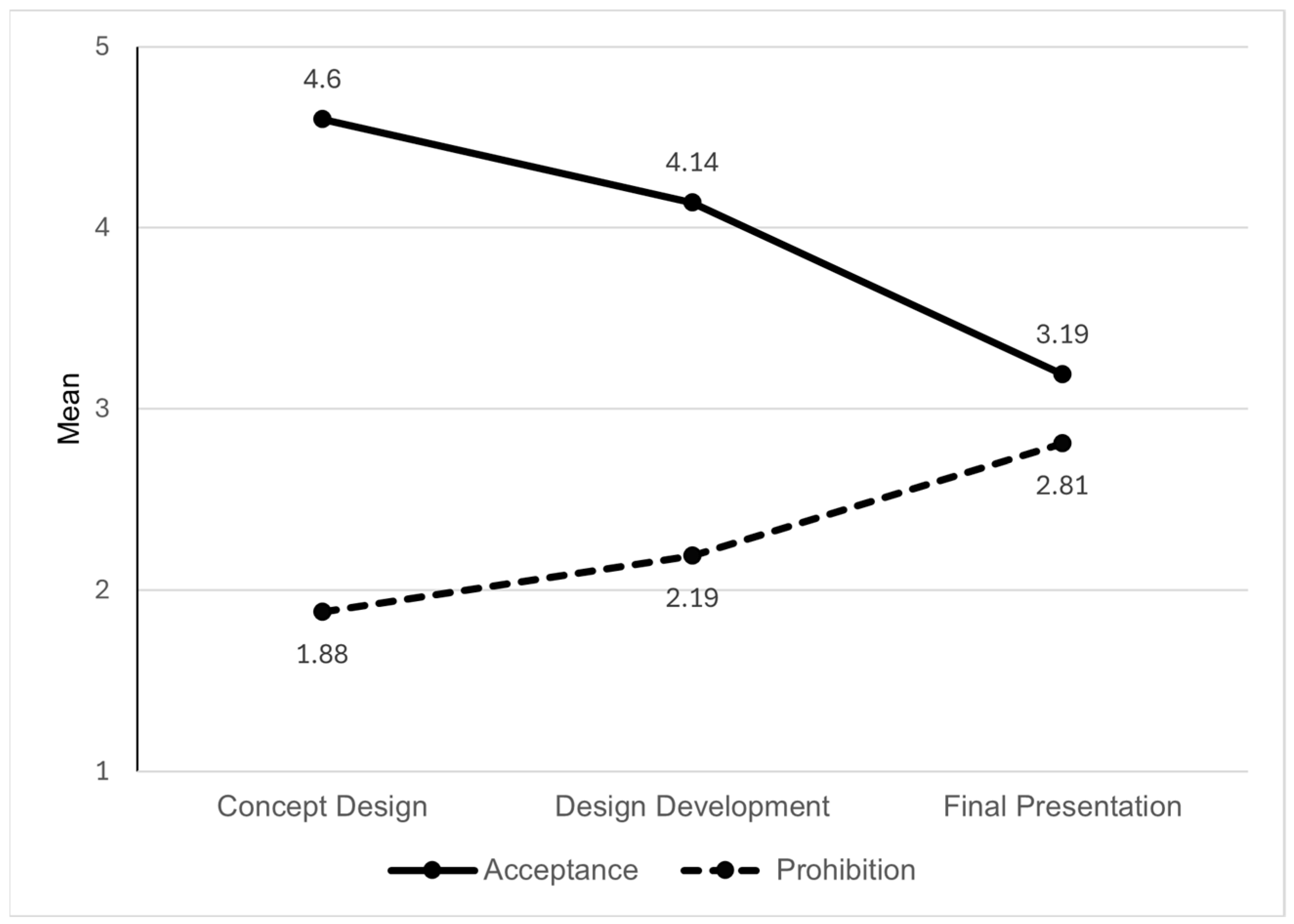

Category 4 Use of AI-generated images in the Design Process (α = 0.78)

| Item | Statement | M | SD | PCA loading |
|---|---|---|---|---|
| N_CD * Never allowed Concept Design | The use of AI-generated images in the CONCEPT DESIGN process as an inspiration tool should not be allowed in design, regardless of whether the student acknowledges it. | 1.88 | 1.17 | - |
| A_CD Acceptable with acknowledgment Concept Design | Using AI-generated images in the CONCEPT DESIGN process as an inspiration tool could be acceptable if the student acknowledges it. | 4.60 | 0.54 | - |
| N_DD * Never allowed Design Development | The use of AI-generated images in DESIGN DEVELOPMENT, especially for developing material, lighting, and colors, should not be allowed in design, regardless of whether the student acknowledges it. | 2.19 | 1.09 | - |
| A_DD Acceptable with acknowledgment Design Development | Using AI-generated images in DESIGN DEVELOPMENT, especially for developing material, lighting, and colors, could be acceptable if the student acknowledges it. | 4.14 | 0.81 | - |
| N_FP * Never allowed Final Presentation | The use of AI-generated images in the PRESENTATION (such as in a final review) as a final rendering image should not be allowed in design, regardless of whether the student acknowledges it. | 2.81 | 1.50 | - |
| A_FP Acceptable with acknowledgment Final Presentation | Using AI-generated images in the PRESENTATION (such as in a final review) as a final rendering image could be acceptable if the student acknowledges it. | 3.19 | 1.37 | - |

| Variables | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 DA | 0.265 |
| 3 LN | −0.372 * | −0.348 * |
| 4 EU | −0.198 | −0.424 ** | 0.359 * |
| 5 SA | 0.255 | 0.000 | −0.140 | −0.184 |
| 6 IS | 0.194 | −0.195 | −0.112 | −0.048 | 0.564 ** |
| 7 ED | −0.031 | 0.027 | 0.217 | 0.032 | 0.150 | 0.039 |
| 8 TT | 0.060 | −0.030 | −0.130 | −0.227 | 0.113 | 0.163 | −0.147 |
| 9 SC | 0.117 | −0.025 | 0.119 | −0.097 | −0.020 | 0.290 | 0.206 | −0.051 |
| 10 FI | 0.049 | 0.199 | 0.118 | −0.205 | −0.132 | −0.077 | 0.130 | −0.179 | 0.191 |
| 11 NFC | −0.027 | −0.025 | −0.080 | −0.131 | 0.020 | 0.195 | 0.240 | −0.154 | 0.254 | 0.473 ** |
| 12 FJS | 0.061 | 0.331 * | −0.002 | −0.143 | 0.052 | −0.047 | 0.405 ** | −0.043 | 0.209 | 0.262 | 0.142 |
| 13 EFU | −0.062 | −0.091 | 0.222 | 0.009 | −0.041 | −0.057 | 0.174 | −0.304 | −0.068 | 0.250 | 0.203 | 0.055 |
| 14 N_CD | 0.181 | 0.097 | −0.307 * | −0.163 | 0.190 | 0.059 | −0.359 * | 0.403 ** | −0.126 | −0.168 | −0.295 | 0.018 | 0.037 |
| 15 A_CD | −0.511 ** | −0.315 * | 0.373 * | 0.430 ** | −0.278 | −0.018 | −0.231 | −0.062 | −0.083 | −0.253 | −0.272 | −0.303 | −0.069 | −0.154 |
| 16 N_DD | 0.323 * | 0.420 ** | −0.312 * | −0.237 | 0.312 * | 0.158 | −0.167 | 0.104 | 0.077 | −0.029 | −0.213 | 0.184 | −0.046 | 0.535 ** | −0.320 * |
| 17 A_DD | −0.195 | −0.561 ** | 0.373 * | 0.391 * | −0.286 | −0.113 | 0.044 | 0.009 | −0.076 | −0.051 | 0.101 | −0.315 * | 0.178 | −0.110 | 0.299 | −0.693 ** |
| 18 N_FP | 0.370 * | 0.475 ** | −0.086 | −0.053 | 0.152 | −0.162 | −0.017 | −0.058 | −0.070 | −0.141 | −0.215 | 0.232 | −0.227 | 0.125 | −0.276 | 0.426 ** | −0.336 * |
| 19 A_FP | −0.296 | −0.543 ** | 0.203 | 0.266 | −0.293 | 0.021 | 0.018 | −0.144 | 0.045 | 0.120 | 0.205 | −0.352 * | 0.287 | −0.229 | 0.271 | −0.616 ** | 0.568 ** | −0.779 ** |
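The asterisks in the correlation matrix are not defined in this excerpt. Assuming Pearson correlations with n = 42 (df = 40) and the common convention * p < 0.05, ** p < 0.01 (two-tailed), each coefficient r is tested with t = r·√(n − 2)/√(1 − r²). The sketch below hard-codes standard two-tailed critical t values for df = 40 (2.021 and 2.704, an assumption taken from ordinary t tables). These correspond to |r| ≥ 0.304 and |r| ≥ 0.393, which matches how the coefficients above are marked: for example, −0.304 (EFU with TT) is unmarked, while −0.307 (N_CD with LN) carries one asterisk.

```python
import math

N = 42       # respondents, from the demographics table
DF = N - 2   # degrees of freedom for testing a correlation
# Assumption: two-tailed critical t values for df = 40 from standard t tables.
T_CRIT_05 = 2.021
T_CRIT_01 = 2.704


def t_statistic(r: float, n: int = N) -> float:
    """t statistic for testing H0: rho = 0 given a sample correlation r."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def significance_marker(r: float) -> str:
    """Return '**', '*', or '' under the assumed p < .01 / p < .05 thresholds."""
    t = abs(t_statistic(r))
    if t >= T_CRIT_01:
        return "**"
    if t >= T_CRIT_05:
        return "*"
    return ""


def critical_r(t_crit: float, df: int = DF) -> float:
    """Smallest |r| that reaches a given critical t: r = t / sqrt(t^2 + df)."""
    return t_crit / math.sqrt(t_crit * t_crit + df)


print(f"|r| >= {critical_r(T_CRIT_05):.3f} for p < .05, >= {critical_r(T_CRIT_01):.3f} for p < .01")
for r in (0.331, -0.304, 0.391, 0.403):
    print(r, significance_marker(r) or "(not significant)")
```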

| Independent Variables | Dependent Variables | Type III Sum of Squares | df (Model, Error) | Mean Square | F-Value | p-Value | R Squared |
|---|---|---|---|---|---|---|---|
| IR Instructor’s Responsibility to detect | A_CD | 4.693 | 4, 37 | 1.173 | 5.846 | <0.001 | 0.387 |
| | A_DD | 2.990 | 4, 37 | 0.747 | 1.145 | 0.351 | 0.110 |
| | A_FP | 7.228 | 4, 37 | 1.807 | 0.965 | 0.438 | 0.095 |
| DA Design Authenticity | A_CD | 1.482 | 3, 38 | 0.494 | 1.765 | 0.170 | 0.122 |
| | A_DD | 8.733 | 3, 38 | 2.911 | 6.008 | 0.002 | 0.322 |
| | A_FP | 26.707 | 3, 38 | 8.902 | 6.797 | <0.001 | 0.349 |
| | N_CD | 1.580 | 3, 38 | 0.527 | 0.365 | 0.779 | 0.028 |
| | N_DD | 10.245 | 3, 38 | 3.415 | 3.395 | 0.028 | 0.211 |
| | N_FP | 34.301 | 3, 38 | 11.434 | 7.469 | <0.001 | 0.371 |
| LN Learning Necessity | A_CD | 2.675 | 4, 37 | 0.669 | 2.620 | 0.050 | 0.221 |
| | A_DD | 3.893 | 4, 37 | 0.973 | 1.549 | 0.208 | 0.143 |
| | A_FP | 6.379 | 4, 37 | 1.595 | 0.842 | 0.508 | 0.083 |
| EU Excitement to Use | A_CD | 2.667 | 3, 38 | 0.889 | 3.573 | 0.023 | 0.220 |
| | A_DD | 4.413 | 3, 38 | 1.471 | 2.459 | 0.078 | 0.163 |
| | A_FP | 5.524 | 3, 38 | 1.841 | 0.986 | 0.410 | 0.072 |
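The columns of the table above are linked. Mean Square is the Type III sum of squares divided by the model df, and, assuming R Squared is the share of each dependent variable’s variance explained by the factor, SS_model/(SS_model + SS_error), it can be recovered from F and the two df values alone as R² = F·df_model/(F·df_model + df_error). A minimal Python sketch that recomputes a few rows as a consistency check:

```python
def mean_square(ss_model: float, df_model: int) -> float:
    """Model mean square: Type III sum of squares divided by model degrees of freedom."""
    return ss_model / df_model


def r_squared_from_f(f: float, df_model: int, df_error: int) -> float:
    """R^2 = SS_model / (SS_model + SS_error), rewritten in terms of F.

    Since F = (SS_model / df_model) / (SS_error / df_error),
    SS_error / SS_model = df_error / (F * df_model), hence
    R^2 = F * df_model / (F * df_model + df_error).
    """
    return f * df_model / (f * df_model + df_error)


# Rows copied from the table above: (label, SS, df_model, df_error, F, reported R^2).
ROWS = [
    ("IR -> A_CD", 4.693, 4, 37, 5.846, 0.387),
    ("DA -> A_DD", 8.733, 3, 38, 6.008, 0.322),
    ("DA -> N_FP", 34.301, 3, 38, 7.469, 0.371),
    ("EU -> A_CD", 2.667, 3, 38, 3.573, 0.220),
]

for label, ss, df1, df2, f, r2 in ROWS:
    print(f"{label}: MS = {mean_square(ss, df1):.3f}, "
          f"R^2 from F = {r_squared_from_f(f, df1, df2):.3f} (reported {r2})")
```

For example, the IR → A_CD row gives 5.846 × 4/(5.846 × 4 + 37) ≈ 0.387, matching the reported value.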

How Do You Feel About Incorporating AI Image Generators into Your Design Process?

| Categories | Quotes |
|---|---|
| Intend to use in concept design at the early design stage (n = 31) | “It allows for a lot more experimentation in such a short time. This could really help kick-start a project.” “I feel like it is extremely helpful because my brain is only so limited and AI is unlimited.” “I think it is a great start to a project with brainstorming and seeing an idea highly rendered.” |
| Recognize its contribution to inspiration and confidence (n = 15) | “I feel like AI will be a great resource for concept development, just as places like Pinterest help with inspiration.” “I think this is an amazing tool for launching a project. It will help get my creativity going.” “It helps newer designers feel more confident about coming up with ideas.” “Certain tasks are sped up with AI tools, and it gives confidence to put more detail into the project.” |
| Intend not to use in the final stage of the design process (n = 7) | “I would use it in preliminary stages, but I would want my work to be my own later.” “I don’t feel I would use it for a final project.” “I may try to use it as a brainstorming tool, but I am still hesitant to use it past the conceptual design stage.” “I believe that AI should be avoided in final rendering and technical design. Given the current state of AI, it does not understand many design rules and the human condition in space.” |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huh, M.B.; Miri, M.; Tracy, T. Students’ Perceptions of Generative AI Image Tools in Design Education: Insights from Architectural Education. Educ. Sci. 2025, 15, 1160. https://doi.org/10.3390/educsci15091160

Huh MB, Miri M, Tracy T. Students’ Perceptions of Generative AI Image Tools in Design Education: Insights from Architectural Education. Education Sciences. 2025; 15(9):1160. https://doi.org/10.3390/educsci15091160

Chicago/Turabian Style: Huh, Michelle Boyoung, Marjan Miri, and Torrey Tracy. 2025. "Students’ Perceptions of Generative AI Image Tools in Design Education: Insights from Architectural Education" Education Sciences 15, no. 9: 1160. https://doi.org/10.3390/educsci15091160

APA Style: Huh, M. B., Miri, M., & Tracy, T. (2025). Students’ Perceptions of Generative AI Image Tools in Design Education: Insights from Architectural Education. Education Sciences, 15(9), 1160. https://doi.org/10.3390/educsci15091160