1. Introduction

We live in a rapidly changing world driven by advanced technology and an increased flow of information, where users of educational services seek ever more effective and flexible learning methods. These methods must enable them to perceive, absorb, and apply specific topics or skills within a short period of time. Consequently, numerous questions arise regarding the relevance of higher education and its capacity to meet the needs of learners. It is essential to reconsider and update teaching and learning methods to adapt to current conditions while aligning with the generational characteristics of students. This leads to questions such as the following: How does the modern individual find the information they need? What are the channels for obtaining the necessary information? How much time is dedicated to learning—the perception, processing, and interpretation of information? What are the generational characteristics of today’s learners? Are the capabilities of digital environments adequately utilized in university education?

These questions touch not only on the type, content, and volume of educational materials, but also on the tools used for effective perception and interpretation. This presents several challenges for higher education in the Republic of Bulgaria and its traditional learning formats, calling for a fundamental shift in teaching approaches. Although the COVID-19 pandemic accelerated the adoption and development of e-learning and its associated tools, most of these were discontinued once the situation normalized. While this was partially due to limitations in academic curricula, this was not the sole reason. Contemporary education demands flexible frameworks for structuring content and dynamic teaching methods that align with learners’ expectations of educational services. There is a need for active work on teaching methods and tools, including specific learning units that integrate a variety of instruments for fast assimilation and interpretation of information at a convenient moment for the learner.

In this context, it is advisable to draw upon the experience and best practices of microlearning by systematizing the opportunities for applying well-established instructional tools, including digital tools, in higher education. Microlearning, also known as “microteaching” or “micro educational units,” is a learning process that segments information into small, specific, and easily digestible units (Hug, 2006). This approach focuses on delivering short, concentrated content aimed at achieving a clearly defined learning outcome.

Torgerson and Iannone (2020) define microlearning as a set of tools and principles already employed by training content creators, such as “just-in-time” learning and refresher modules. These educational units share one essential characteristic: they can be quickly absorbed.

Tipton (2017) makes an important clarification: breaking down instructional content into smaller units is not intended to make learning superficial or to merely fit the format, but rather to support deeper understanding and improved knowledge retention.

According to Blueoceanbrain.com (Blue Ocean Brain, 2025), for microlearning to be effective, it must be naturally integrated into students’ curricula and daily tasks. The same source adds that such systems should be based on how people learn and retain information and must be adaptable to help learners achieve specific, concrete goals. Educational expert Thalheimer (2017) defines microlearning as any relatively short learning interaction, ranging from a few seconds to approximately 20 min, that may include a combination of the following: presentation of new content, review of prior material, hands-on exercises, task assignments, feedback on independent work, consultations, and more.

Kapp and Defelice (2019) further elaborate on the definition of microlearning by outlining its key elements: the unit is short in duration, with a clearly defined beginning and end; it targets a specific and measurable learning outcome; it can take various forms and lengths, such as a short video lesson, text document, work instruction, or infographic; it requires voluntary and active learner engagement; it includes a specific learner action (whether cognitive or physical); and it must be consciously designed, rather than being a mechanical segmentation of longer content into smaller parts.

In their study “Online Microlearning and Student Engagement in Computer Games Higher Education”, McKee and Ntokos (2022) report that microlearning techniques, such as segmented lecture recordings, can help maintain higher education students’ engagement and confidence with the instructional content. This approach proves particularly effective in online and distance education, as it addresses the challenge of sustaining students’ focus and attention. The study finds that a video length of 5–8 min is associated with the greatest increase in learners’ confidence and engagement.

Another study, presented in the article “The Effectiveness of Microlearning to Improve Students’ Learning Ability”, compares the effectiveness of traditional teaching methods with microlearning in the field of information technology at the elementary school level. The results indicate that microlearning methods led to an 18% improvement in learning outcomes compared to traditional methods, and students demonstrated a high level of motivation and interest in the microlearning tools used (Mohammed et al., 2018, p. 37).

Microlearning supports the retention of previously acquired knowledge by leveraging psychological principles related to memory and learning. The psychologist Ebbinghaus (1885) conducted some of the earliest research on memory and spaced learning. His well-known “forgetting curve” illustrates how learners tend to forget more than 50% of the learned material within 20 min after a lesson. If the information is not reviewed, the share of material retained falls further, to 40% within nine hours and to 24% after 31 days.

Murre and Dros (2015) successfully replicated Ebbinghaus’s forgetting curve. In their study, a participant spent 70 h learning information at spaced intervals, resulting in retention levels comparable to those reported in Ebbinghaus’s original findings. Additional studies have confirmed that memory reactivation through periodic review, a widely applied technique in microlearning, helps enhance long-term information retention. MacLeod et al. (2018) demonstrated that memory reactivation strengthens long-term memory by triggering cellular reconsolidation.

With the help of mobile devices and apps, learners can study at their own pace and from any location. The ability to move backward and forward through lessons allows them to increase their retention rate by revisiting previous content in shorter sessions. The review process reinforces neural connections in the brain and facilitates the transfer of knowledge from short-term to long-term memory. Kang (2016) also notes that findings from cognitive and educational psychology indicate that spacing out repetitions of material over time significantly improves long-term retention.
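The spacing principle described above can be illustrated with a minimal Python sketch. The function name `review_dates` and the gaps of 1, 3, 7, 14, and 30 days are illustrative assumptions, not values taken from Kang (2016) or the other cited studies; the sketch only shows how short review sessions might be scheduled at widening intervals after a first study session.

```python
from datetime import date, timedelta


def review_dates(first_study, gaps_in_days=(1, 3, 7, 14, 30)):
    """Return review dates at widening gaps, each counted from the first study day.

    The default gaps are hypothetical and chosen only for illustration.
    """
    return [first_study + timedelta(days=gap) for gap in gaps_in_days]


# Example: a microlearning unit first studied on 1 January 2025.
for review_day in review_dates(date(2025, 1, 1)):
    print(review_day.isoformat())
```

Each gap is measured from the first session rather than from the previous review, which keeps the schedule easy to read; a platform could equally adjust the gaps to the learner's performance.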

Microlearning enables the learning environment to adapt to the limited attention span of individuals, particularly in the context of mobile learning systems (Hamzah, 2021). Attention is affected by a variety of factors, including learner-specific characteristics such as behavior, competencies, demographics, prior knowledge, literacy, needs, preferences, and learning skills, as well as technological parameters such as network conditions, device capabilities, and platform functionality. Adaptation aims to optimize the learning process by delivering content tailored to the user’s needs, providing appropriate learning materials and activities based on the learner’s current knowledge and performance. By aligning educational content with individual needs, preferences, and contextual factors, adaptive learning systems can better engage users and improve knowledge retention and learning efficiency.

Microlearning helps to avoid the phenomenon of mental fatigue, also known as central nervous system fatigue or central fatigue (Shail, 2019). Mental fatigue leads to a decline in cognitive processes such as planning, response inhibition, executive attention, sustained attention, goal-directed attention, alternating attention, divided attention, and conflict-monitoring selective attention (Slimani & Znazen, 2015, 2018). According to Ishii et al. (2014, p. 469), mental fatigue represents a potential deterioration in cognitive function and is considered one of the most significant contributors to accidents in modern society. Microlearning employs the conceptual model of neuronal regulation and advocates for the prevention of overstimulation or cognitive overload through temporally spaced instructional sessions.

The issue of enhancing the effectiveness of learners’ perception and interpretation of information has been explored from various perspectives; however, there is a growing need to investigate this issue more thoroughly within the context of design education. The specific nature of design education necessitates the use of numerous visual tools, as visual thinking is characteristic of designers—this is a process in which problems are explored from multiple perspectives through observation, and subsequently perceived and interpreted via visualization (sketching, drafting, three-dimensional modeling, etc.). Alongside the emergence of new digital tools that enable faster and more realistic visualization of ideas, sketching remains the most convenient, efficient, and cost-effective tool for rapidly illustrating designers’ concepts. Sketching is a fundamental skill for designers, not as a linear process, but as a way of thinking. These specificities in design education require the skillful integration of diverse educational tools that guide and enhance the processes of observation, perception, and interpretation of new knowledge.

The discussion above highlights the necessity of conducting research focused on the development of specific instructional units that integrate a variety of tools and methods for the rapid acquisition and interpretation of targeted information. On the one hand, these instructional units must be aligned with the particularities of design education, and on the other, they must correspond to the behavioral characteristics and expectations of contemporary learners. According to Zlatanova-Pazheva (2024, pp. 138–139), as of 2024, the role of children in society is increasingly filled by Generation Alpha and Generation Z, cohorts born after the widespread adoption of the Internet. “They grow up with constant access to the Internet and participation in social media and therefore perceive these technologies as an integral part of life. For this reason, Generation Z is often referred to as ‘the first digital generation.’ Generation Z is constantly connected to the Internet and their social media profiles via their mobile phones. They use these devices to study, search for information, chat, shop, take photos, listen to music and podcasts, and more.” (Zlatanova-Pazheva, 2024, p. 78).

It is no coincidence that various institutions offering intensive courses—often conducted remotely and employing flexible learning options—are in high demand among modern learners, regardless of their age or interests.

An excellent example of such an educational model in the Republic of Bulgaria is the learning platform Ucha.se (2025), which, according to its own data, had over 1,169,550 users as of 26 May 2025. The educational content on the platform is aligned with Bulgaria’s national educational standards and the official curriculum from grades 1 through 12.

The numerous awards the platform has received over the years, along with its extensive user base, reflect public recognition of the effectiveness of the educational methods and tools it employs—such as video lessons, quizzes, and revision with mind maps. All content is accessible online and structured according to the principles of microlearning. It allows students to progress at their own pace and incorporates various approaches, including gamification, interactivity, engagement, and personalization of the learning process. For example, learners earn points while studying, which helps them develop their profiles and earn badges; they can also compare their activity with that of their friends, monitor their learning habits, and identify areas for improvement.

The example of the educational platform Ucha.se (2025) illustrates how the integration of digital technologies into education is transforming teaching and learning methods to better align with learners’ cognitive dispositions.

According to Laurillard (2012), digital education creates new opportunities for teaching and learning through models based on simulations, visual metaphors, and adaptive content, elements that are particularly valuable in the field of design. Laurillard proposes a teaching framework that emphasizes the role of digital environments and adaptive technologies as tools for personalizing learning and enhancing student engagement.

Research on design education highlights the importance of interdisciplinary approaches and constructivist learning models, in which knowledge is actively constructed by the learner. Kolb (1984) developed an experiential learning model, which is widely applicable in design education as it supports students in developing critical thinking and creative problem-solving skills (Kolb, 1984, p. 21). Similarly, Schön (1983) introduced the concept of the “reflective practitioner,” describing how professionals, including designers, learn through reflection on their own practice. Both experiential learning and reflective practice are extensively applied in design teaching.

Contemporary pedagogy emphasizes the importance of constructive alignment, that is, the coherence between intended learning outcomes, teaching methods, and assessment, as a key factor for the effective acquisition of new knowledge (Biggs & Tang, 2007). According to Biggs and Tang (2007), this alignment is essential for meaningful learning, particularly in disciplines that demand high levels of autonomy and creativity. In this context, the role of the educator shifts from a transmitter of knowledge to a facilitator of learning, encouraging students to take an active role in constructing their own understanding (Biggs & Tang, 2007).

There is a growing interest in the use of visual and conceptual tools such as mind maps, visual diagrams, storyboarding, sketchnoting, and prototyping, which facilitate the processes of perception, interpretation, and application of knowledge. Novak and Cañas (2008) emphasize that these tools support conceptual understanding by building connections between new and existing information.

From the perspective of learning theory in the digital age, Siemens (2005) proposes connectivism as a model in which learning occurs through the creation of networks of knowledge and links between various sources of information. This is particularly relevant for design students, who must navigate large volumes of visual, textual, and interactive content from diverse digital channels.

Salmon (2011) emphasizes the role of e-moderation and online collaboration as key factors for enhancing knowledge acquisition in digital learning environments. These approaches encourage active student participation and knowledge construction within a social context, a principle that aligns closely with project-based design education. This is further supported by the findings of Ivanova et al. (2024).

There is also considerable interest in the influence of cognitive and motivational factors on learning in design education. Research indicates that elements such as visual thinking, visual literacy, and spatial perception play a significant role in acquiring new concepts in this field (McKim, 1972). These factors not only facilitate content comprehension but also support the creative processing and application of knowledge within the context of real-world projects. Furthermore, approaches that incorporate gamification, collaborative learning, and the use of digital tools have demonstrated a positive impact on student engagement and deeper understanding of the material (Boud et al., 2013). Ivanova et al. also describe such motivational strategies, including gamification and collaborative learning, in training conducted during the 2020–2021 academic year in a fully virtual environment. In that setting, assistants introduced a new tool, the online interactive whiteboard Mural, which catalyzed this innovative approach to collaborative work. An additional benefit of using such a tool was the increased transparency of the educational process for all students (Ivanova et al., 2024, pp. 38–41).

Research by Clark and Mayer (2016) on multimedia learning demonstrates that the integration of visual and verbal representations of information, when applied appropriately, leads to improved cognitive processing and a higher level of comprehension. This is particularly relevant in design education, where visual metaphors and diagrams support the interpretation of abstract concepts. Mayer (2009) further confirms that well-designed multimedia can reduce cognitive load and facilitate learning.

In this context, architectural visualization emerges as a discipline-specific application of multimedia learning, directly supporting the educational process in design fields. It serves as a fundamental tool in architectural design, communication, and presentation. It enables clearer and more realistic envisioning of proposed projects, bridging conceptual thinking with visual output (Singh, 2024). More precisely, architectural visualization refers to the process of creating visual representations of architectural designs using digital tools. These representations may include static images, animations, or interactive 3D environments that help communicate spatial, material, and atmospheric qualities of a design before it is built (Wang & Schnabel, 2009, pp. 15–26).

The research is theoretically grounded in the Cognitive Theory of Multimedia Learning (Mayer, 2001), which emphasizes the role of dual coding (visual and verbal) in facilitating effective knowledge acquisition. This principle directly informed the design of the experimental phase, in which live demonstration, video tutorials, and their combination were tested for their impact on learning performance.

Additionally, the study draws on constructivist learning theory, which posits that learners actively build knowledge through experience and interaction. This pedagogical framework supports the inclusion of project-based and microlearning formats that encourage autonomy, engagement, and contextual understanding—particularly in design education where tacit knowledge and visual reasoning are essential.

These frameworks guided the research design and helped translate theoretical principles into specific instructional strategies, making the study not only empirically valid but also pedagogically meaningful.

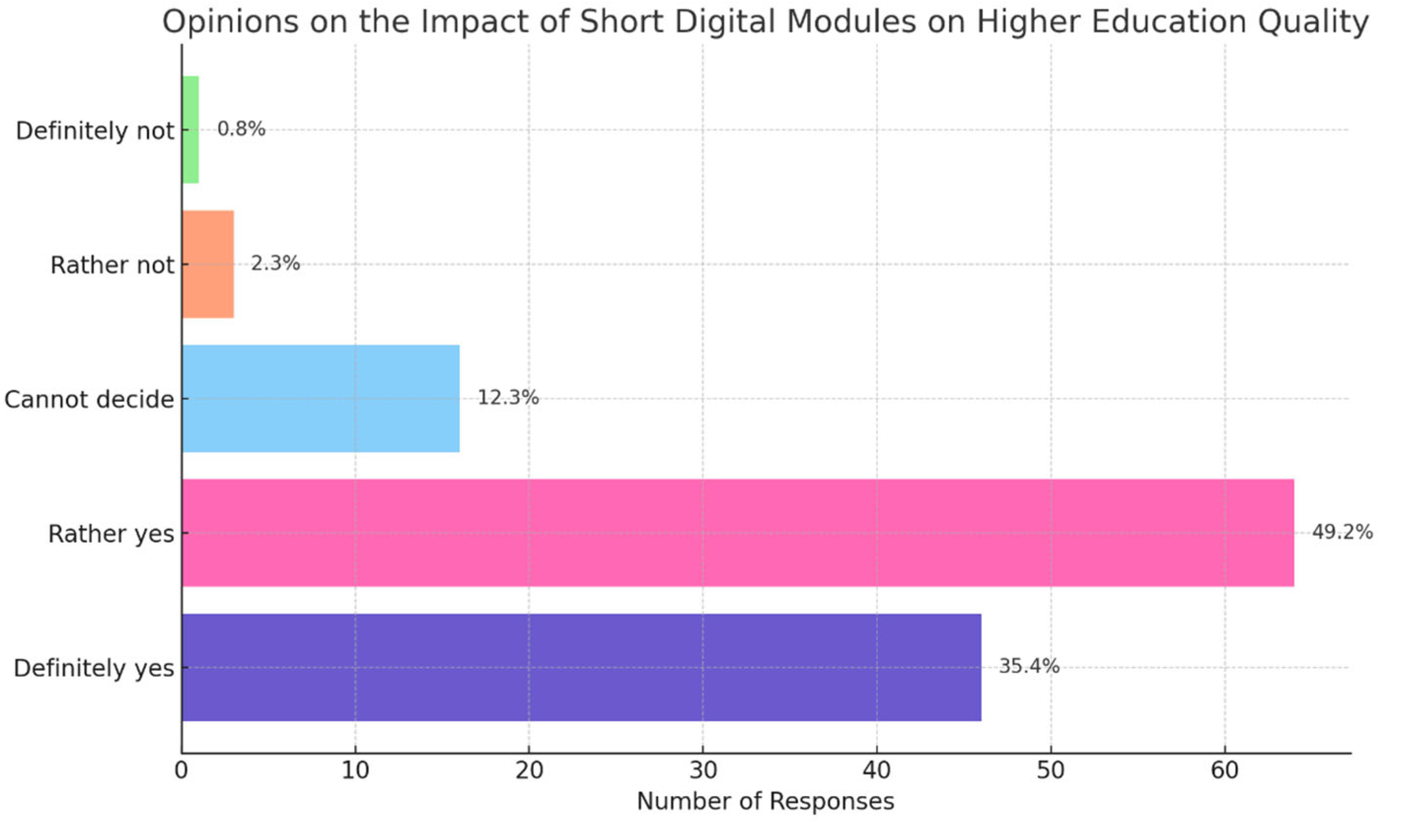

The present study aims to explore the possibilities for more effective acquisition and interpretation of new knowledge by design students using multimedia learning. The research is based on both quantitative and qualitative data collected from a survey of 130 respondents, as well as an experiment conducted with students in a real educational setting. The focus is placed on identifying successful pedagogical practices and approaches that support deeper understanding and application of knowledge within design education, specifically in the field of architectural visualization.

2. Materials and Methods

2.1. Research Design

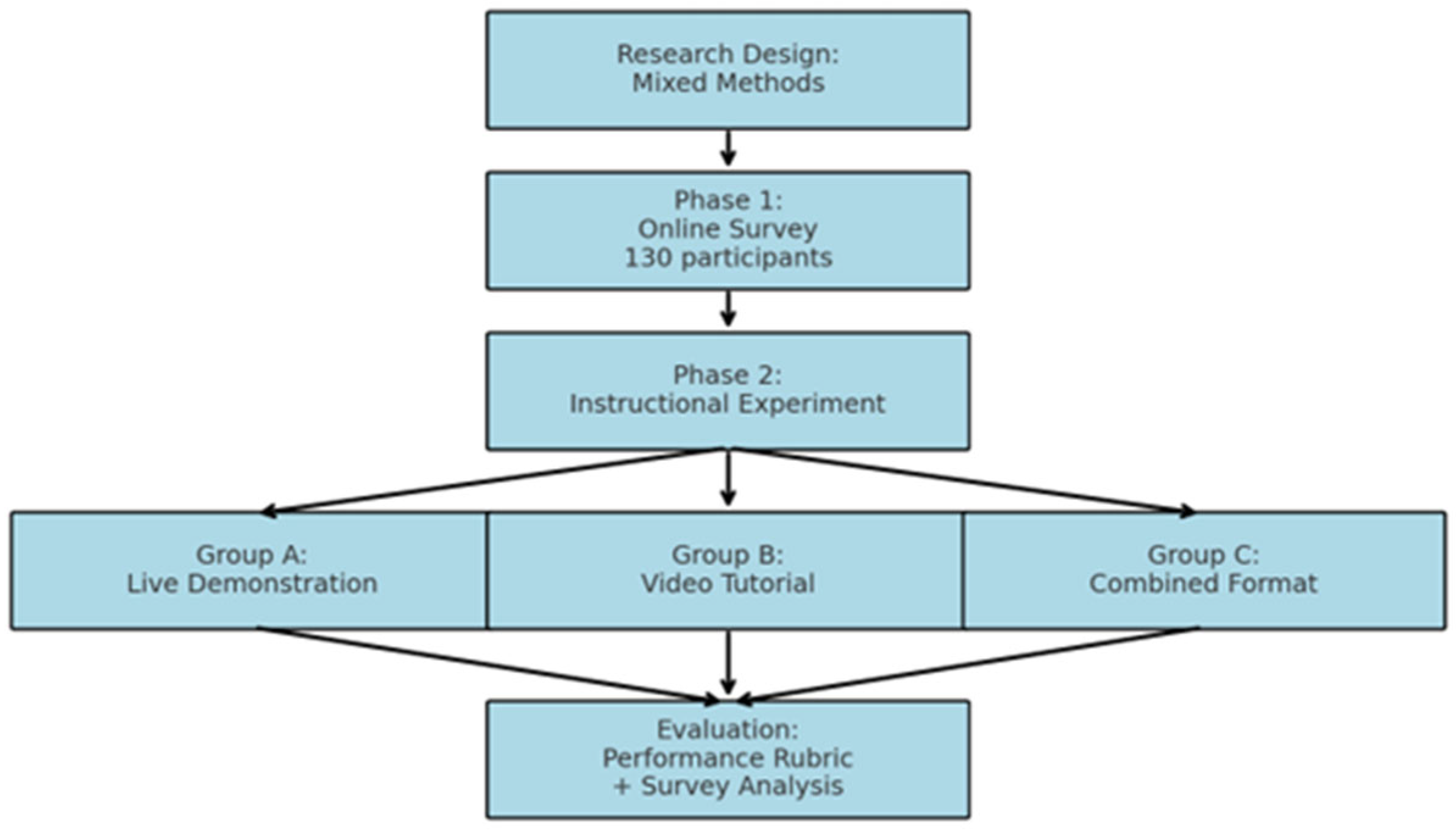

This study employed a mixed-methods research design, combining both qualitative and quantitative approaches to explore the effectiveness of multimedia and microlearning strategies in architectural visualization education. The research was conducted in two main phases: a survey phase and an experimental training phase.

In the survey phase, data were collected via an online questionnaire aimed at analyzing the learning habits, preferences, and perceptions of students and faculty members in design-related programs. This phase sought to provide contextual background for the experimental intervention.

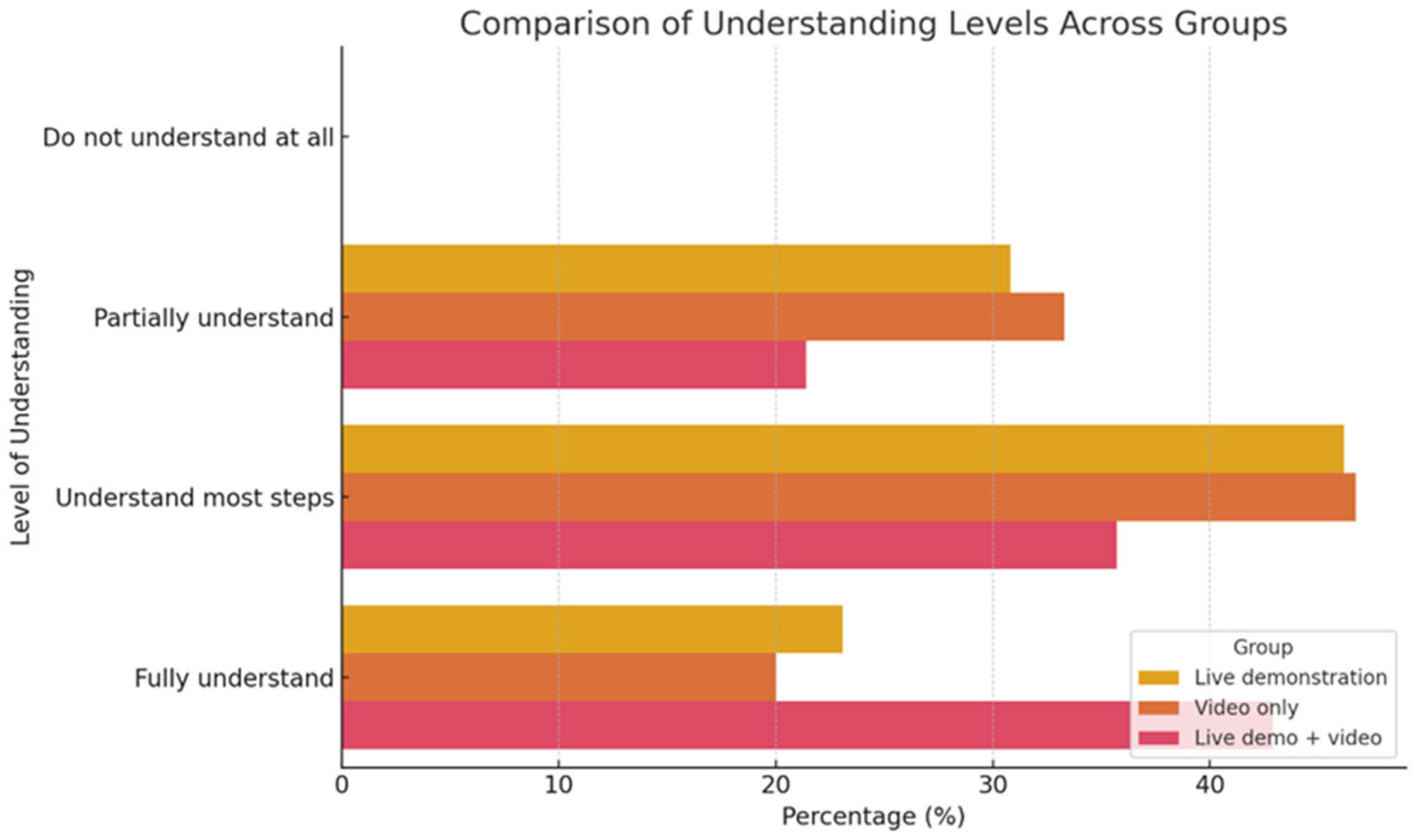

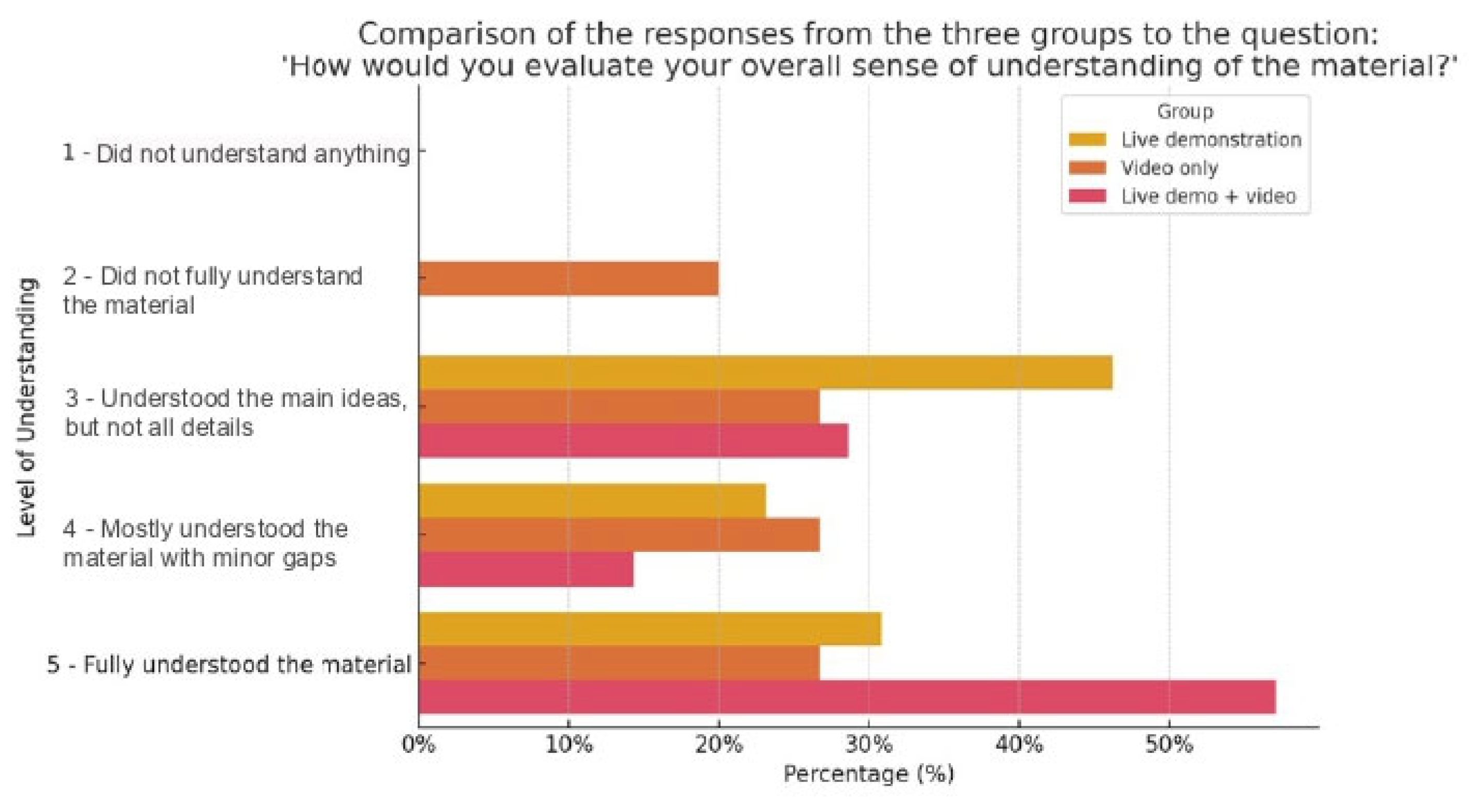

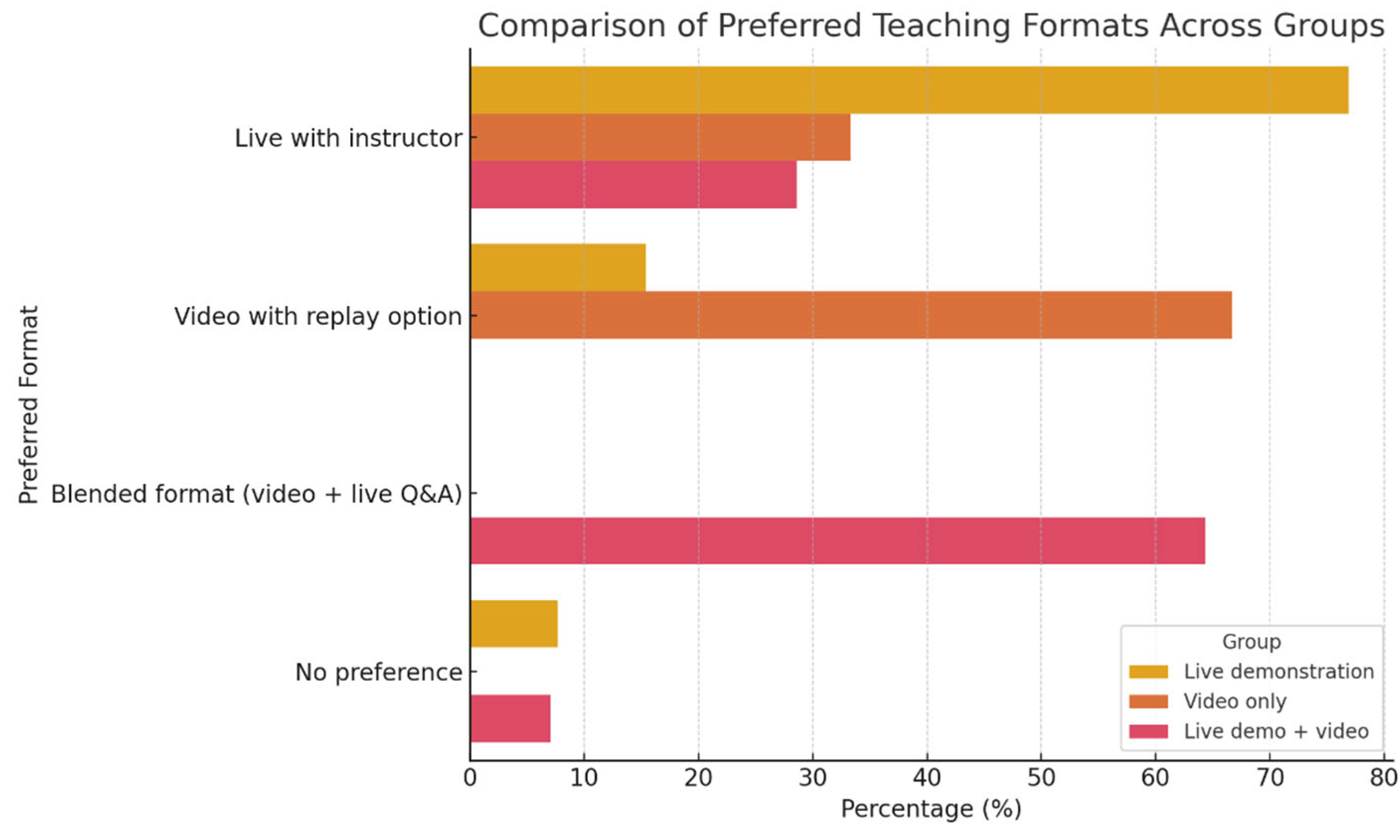

The experimental phase involved a controlled pedagogical intervention with three groups of students, each exposed to a different instructional method: (1) traditional live demonstration, (2) pre-recorded video tutorial, and (3) a combination of both. This design enabled comparison of the instructional methods in terms of learner understanding, confidence, and performance outcomes.

2.2. Participants

The study involved a total of 130 participants, all of whom were students enrolled in design-related academic programs at eight Bulgarian universities. Participants were selected through voluntary response sampling and were informed of the study’s objectives and their rights as participants.

The sample included students from various academic years and specializations in architecture, interior design, and landscape architecture. The diversity of the sample ensured a broad representation of design learners with different levels of experience and familiarity with digital tools.

For the experimental phase, participants were randomly assigned into three groups:

Group A received instruction through live demonstration only;

Group B engaged with a video tutorial;

Group C experienced a combined approach integrating both live and video-based instruction.

The composition of each group was balanced to ensure comparable size and background characteristics, enabling fair analysis of the learning outcomes.
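One way such an assignment could be carried out is sketched below in Python. The function `assign_groups`, the participant identifiers, and the fixed seed are hypothetical and are not taken from the study. The sketch shuffles the participants and deals them out in turn, which equalizes group sizes but does not by itself balance background characteristics; for that, the same procedure would have to be applied separately within each program or academic year.

```python
import random


def assign_groups(participant_ids, labels=("A", "B", "C"), seed=None):
    """Randomly split participants into groups whose sizes differ by at most one."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)

    groups = {label: [] for label in labels}
    # Deal the shuffled participants out to the groups in round-robin order.
    for index, participant in enumerate(shuffled):
        groups[labels[index % len(labels)]].append(participant)
    return groups


# Hypothetical example with 30 anonymized participant numbers.
groups = assign_groups(range(1, 31), seed=42)
for label, members in groups.items():
    print(label, len(members))
```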

2.3. Procedure

The study was conducted in two main phases: a preliminary survey and a controlled instructional experiment.

In the first phase, an online survey was distributed to students and academic staff across eight Bulgarian universities. The survey aimed to gather insights into participants’ learning habits, preferred formats, and familiarity with digital educational tools. The data served to inform the instructional strategies tested in the second phase.

In the second phase, students were divided into three experimental groups. All groups received instruction on the same design topic—creating a simple architectural visualization task—under different learning conditions. The sessions were conducted in a controlled academic setting with equivalent time allocation:

Group A attended a live demonstration by an instructor.

Group B watched a pre-recorded video tutorial.

Group C participated in a combined format involving both a live session and the same video material.

Following the instructional session, all participants were asked to complete the same practical task individually. Their results were then evaluated through expert judgment, which does not involve predefined quantitative criteria or scales.

2.4. Instruments and Tools

The instruments used in this study included:

Online Questionnaire—developed using Google Forms and distributed via academic mailing lists and course platforms. The questionnaire comprised both closed and open-ended questions addressing learning preferences, frequency of multimedia usage, and attitudes toward digital learning environments. Demographic data such as study program and academic year were also collected.

Instructional Materials—created specifically for the experimental phase. These included:

A live demonstration plan delivered by an instructor in real time.

A video tutorial recorded in advance and made accessible to participants online.

A task brief outlining the learning objective and final outcome expected from all groups.

Assessment Rubric—a standardized evaluation form developed to assess the student submissions. The rubric focused on three main criteria:

Understanding of the task,

Confidence in execution, and

Performance Quality of the final visualization product.

Expert evaluators used this rubric to assess all submissions anonymously.

2.5. Data Analysis

The data collected from both phases of the study were analyzed using descriptive and comparative statistical methods, as well as qualitative content analysis (Figure 1).

Quantitative data from the online questionnaire and the rubric-based evaluations were processed using Microsoft Excel (Version 2024) and SPSS (Version 29). Descriptive statistics (means, percentages, and standard deviations) were calculated to summarize participant demographics and preferences. Comparative analysis was used to examine differences in task performance across the three instructional groups.
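The descriptive step can be illustrated with a short Python sketch that uses only the standard library rather than Excel or SPSS. The scores, the scoring scale, and the function name `summarize_by_group` are hypothetical and serve only to show how group sizes, means, and standard deviations might be tabulated for the three instructional groups; the sketch does not reproduce the study's data or its comparative procedure.

```python
from statistics import mean, stdev


def summarize_by_group(scores_by_group):
    """Return the size, mean, and sample standard deviation of each group's scores."""
    summary = {}
    for group, scores in scores_by_group.items():
        summary[group] = {
            "n": len(scores),
            "mean": mean(scores),
            # stdev needs at least two values; fall back to 0.0 otherwise.
            "sd": stdev(scores) if len(scores) > 1 else 0.0,
        }
    return summary


# Hypothetical scores for one criterion, invented for illustration.
hypothetical_scores = {"A": [3, 4, 5], "B": [2, 3, 4], "C": [4, 5, 5]}
for group, stats in summarize_by_group(hypothetical_scores).items():
    print(group, stats["n"], round(stats["mean"], 2), round(stats["sd"], 2))
```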

Qualitative data from open-ended survey responses were analyzed thematically. Responses were grouped into categories based on recurring keywords and concepts, allowing the researchers to identify common attitudes and perceptions regarding learning formats and digital tools.
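A simplified Python sketch of keyword-based grouping is given below. The theme names, keyword lists, and sample responses are hypothetical and were not drawn from the survey; in practice, thematic coding relies on researcher judgment, and substring matching of this kind would at most serve as a first pass.

```python
from collections import Counter

# Hypothetical themes and keywords, chosen only for illustration.
THEME_KEYWORDS = {
    "video": ("video", "tutorial", "recording"),
    "live": ("live", "demonstration", "instructor"),
    "flexibility": ("pace", "anytime", "flexible"),
}


def code_response(text, theme_keywords=THEME_KEYWORDS):
    """Return the set of themes whose keywords occur in a response."""
    lowered = text.lower()
    return {
        theme
        for theme, keywords in theme_keywords.items()
        if any(keyword in lowered for keyword in keywords)
    }


def theme_counts(responses, theme_keywords=THEME_KEYWORDS):
    """Count how many responses touch on each theme."""
    counts = Counter()
    for response in responses:
        counts.update(code_response(response, theme_keywords))
    return counts


sample_responses = [
    "I prefer video tutorials I can pause",
    "A live demonstration lets me ask questions",
    "Videos at my own pace",
]
print(theme_counts(sample_responses))
```

A response can fall under several themes at once, so the counts may sum to more than the number of responses.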

The findings from both analyses were then triangulated to ensure validity and consistency between quantitative outcomes and qualitative insights. Emphasis was placed on interpreting results through the lens of pedagogical relevance for contemporary design education.