1. Introduction

The rapid advancement of general artificial intelligence technologies is driving profound transformations in education and reshaping the nature of educational activities. “Search-as-learning” is becoming an efficient learning approach for students, marking a shift from traditional modes of knowledge acquisition toward more intelligent and autonomous methods. General large language models (LLMs), represented by ChatGPT (Shafik, 2023), have demonstrated broad application potential in knowledge-based question answering, personalized tutoring, educational assessment, and learning support, particularly in higher education, where the cultivation of self-directed learning skills is emphasized. These models offer diverse possibilities for providing personalized learning support to university students (Zawacki-Richter et al., 2019; Baidoo-Anu & Ansah, 2023). Existing research based on student self-reports has shown that LLMs can improve the learning experience, enhance motivation, develop autonomous learning skills, and boost academic performance (Ng et al., 2024). However, despite the disruptive impact of AI technologies on education, the “generalization advantages” of LLMs have not yet been fully translated into reliable “domain-specific depth” in educational settings. It remains unresolved how models like ChatGPT4 can improve answer accuracy and provide substantive support for students’ self-directed learning in technology-embedded learning environments.

From the perspective of question understanding, LLMs sometimes fail to accurately interpret complex, ambiguous, or conceptually mixed questions posed by students. Additionally, their semantic representations, trained on general corpora, are prone to misinterpretation or redundancy when dealing with domain-specific content, particularly in course-related question answering, which increases students’ cognitive load in identifying and filtering information (Yang et al., 2022). From the perspective of answer generation, LLMs inherently suffer from hallucinated content and factual inconsistencies. These limitations in semantic precision, logical coherence, and domain depth result in responses that are often irrelevant or incorrect during knowledge-based interactions. Furthermore, regarding learning support strategies, although LLMs hold promise in facilitating students’ self-regulated learning, their effectiveness is highly dependent on students’ questioning abilities and dialogic strategies, leading to significant individual differences in real-world scenarios (Lin, 2024). These findings reveal the current limitations of LLMs in terms of answer accuracy, knowledge alignment, and interactive guidance.

To address these challenges, this study proposes an intelligent tutoring model that integrates knowledge graphs with large language models (KG-CQ). The core innovation lies in the integration of structured course knowledge to explicitly augment LLMs. This mechanism not only improves the model’s semantic understanding and answer accuracy for course content but also enhances its capacity to perceive, identify, and guide student inquiry intentions, thereby providing more effective support for self-directed and personalized learning.

This research focuses on three key aspects: the intelligent construction and evaluation of a course knowledge graph for the Data Structures (C Language) course in the field of Educational Technology; the enhancement and verification of KG-CQ’s capabilities in question answering and knowledge extraction; and the evaluation of its application effectiveness. Specifically, the study employs knowledge graph construction methodologies and organizes extensive real-world course data and knowledge resources to build a domain-specific knowledge base. This, in turn, supports LLMs in overcoming inherent limitations, such as cognitive rigidity, interpretive bias, divergent association, and black-box behavior, during response generation. It also enhances their contextual understanding and knowledge cognition abilities, enabling them to accurately interpret student intentions and needs. Moreover, by leveraging the emergent knowledge capabilities of LLMs, the model improves the performance and effectiveness of domain-specific knowledge question answering. This research provides methodological guidance for constructing domain-oriented LLM-based Q&A systems, practical reference for applying knowledge-graph-enhanced LLMs in educational scenarios, and strong support for enabling students to conduct efficient and precise self-directed learning.

This study aims to address the following research questions:

RQ1: How can the construction of a course knowledge graph–integrated question-answering mechanism enhance an LLM’s understanding of domain-specific content and improve its performance in terms of accuracy, specialization, and personalized support?

RQ2: In real course applications, can the KG-CQ model effectively promote students’ self-directed learning outcomes?

2. Related Works

2.1. Research on Intelligent Tutoring Models Based on Constructivist Learning Theory

Constructivist learning theory posits that knowledge is not passively received but actively constructed by the learner through interaction with others and the environment within specific contexts (Cobern, 1993). Guided by this concept, autonomous inquiry, contextual experience, and resource integration have become key pathways to effective learning. The emphasis of constructivism on learner agency and the construction of meaning provides a solid theoretical foundation for the design of AI-supported learning environments, particularly in fostering students’ self-directed learning abilities.

In recent years, numerous studies have attempted to integrate constructivist learning theory with artificial intelligence technologies, aiming to develop intelligent tutoring models that promote self-directed learning and stimulate students’ interest and motivation. For instance, Abu Bakar et al. (2024) proposed an Intelligent Neural Mechanism-Based Math Problem Solving Tutoring System (IN-MP-STS), which simulates students’ cognitive processes to enhance their autonomous construction ability during problem solving. Kim and Adlof (2024), from a constructivist perspective, explored strategies for integrating ChatGPT into educational settings, emphasizing that educational systems should focus on four key dimensions (context, collaboration, dialogue, and constructiveness) to foster students’ engagement and initiative in learning.

These studies indicate that tutoring systems driven by constructivist principles not only prioritize the accurate delivery of content but also emphasize the interactivity and generativity of the learning process. Building on this foundation, the intelligent tutoring model proposed in this study—integrating a knowledge graph with a large language model—enhances the model’s capacity for knowledge organization and logical expression through structured knowledge graphs. Coupled with the interactive feedback mechanisms of large language models, this approach further stimulates students’ problem awareness and inquiry motivation, thereby more effectively supporting their knowledge construction and self-directed learning in complex course contexts.

2.2. Limitations of Large Language Models in Course-Based Question Answering

Large language models have demonstrated strong performance in open-ended question-answering tasks due to their powerful capabilities in language understanding and generation. However, since they are primarily trained on large-scale general-purpose corpora, their outputs often exhibit several limitations when applied to specialized course content—namely, limited timeliness of knowledge, insufficient adaptability to domain-specific contexts, and semantic interpretation biases. These issues are particularly prominent in course-based question-answering scenarios, where student questions often involve complex structures, overlapping concepts, or context-dependent elements, all of which place higher demands on the model’s precision in comprehension and logical reasoning.

Researchers have attempted to address these challenges by introducing structured knowledge and reasoning mechanisms. For instance, C. Zhong et al. (2025) proposed Interdisciplinary-QG, an automated interdisciplinary test question-generation framework based on GPT-4. This framework integrates knowledge graph-enhanced retrieval-augmented generation and chain-of-thought reasoning techniques and employs a structured BRTE (Background–Role–Task–Example) prompt template, which significantly improves the accuracy and interdisciplinary coherence of generated questions. X. Zhong and Zhan (2024) developed an intelligent tutoring system (ITS) for programming education based on information tutoring feedback (ITF), which provides real-time guidance and feedback for self-directed learners during problem-solving tasks, thereby enhancing their computational thinking skills. Similarly, W. Zhong et al. (2025) introduced InnoChat, an innovative-thinking training system based on GPT-4 that employs a dual-path enhancement mechanism involving both logic chain fine-tuning and prompt optimization to improve the model’s capability in fostering creative thinking.

Although these improvement strategies have shown effectiveness in specific educational scenarios, relying solely on prompt engineering and supervised fine-tuning remains insufficient to fully overcome the inherent challenges faced by general-purpose LLMs in course-based question answering, such as fragmented knowledge representation, logical discontinuities, and limited interpretability. In contrast, knowledge graphs represent knowledge entities and their relationships through graph structures, which can significantly enhance a QA model’s semantic organization, logical coherence, and interpretability.

Therefore, this study proposes an intelligent tutoring model that integrates knowledge graphs with large language models. While preserving the strengths of LLMs in language generation, this model incorporates structured knowledge support to improve domain specificity, coherence, and instructional capability in educational question-answering scenarios. Ultimately, it aims to better support students’ personalized learning pathways and promote sustained, high-quality self-directed learning.

3. Concept Model and Implementation

3.1. The Conceptual Model

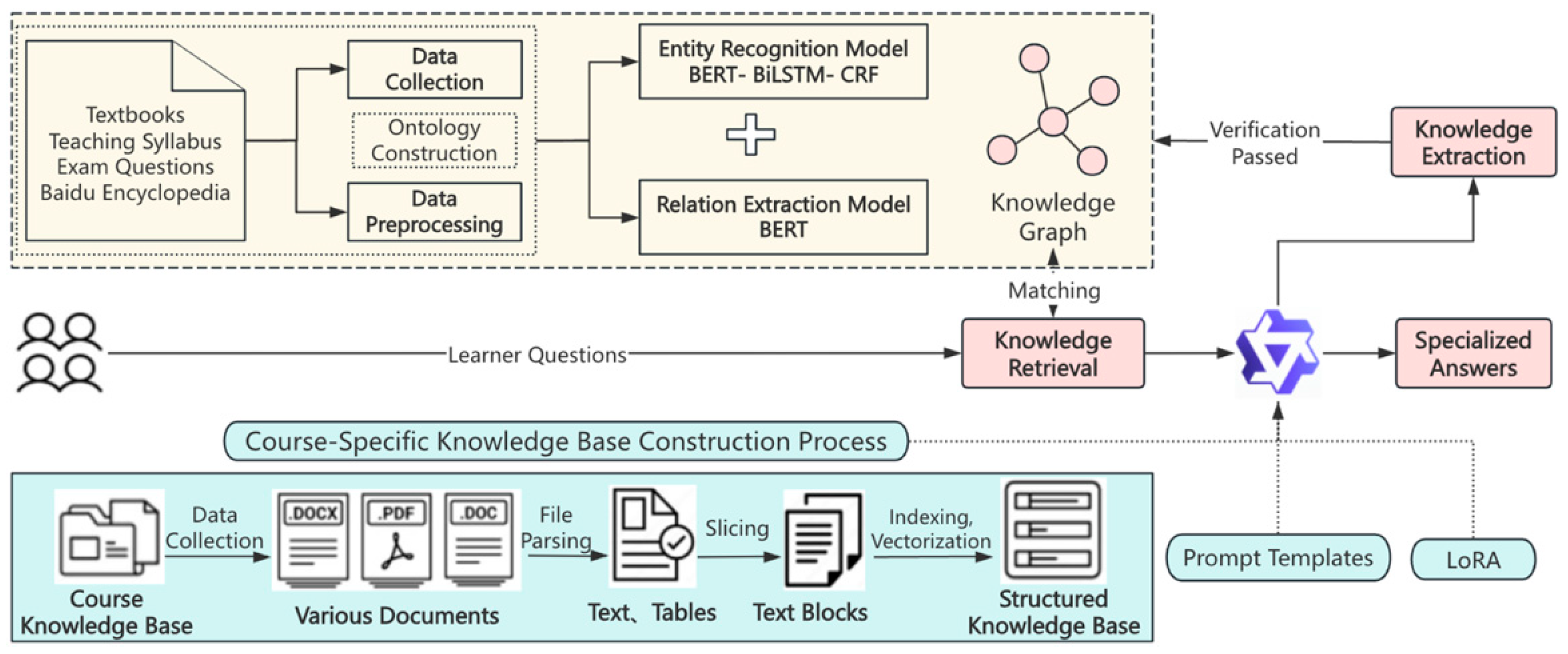

This study proposes the KG-CQ model, which aims to explore a question-answering paradigm that integrates a knowledge graph with a large language model, thereby enhancing the model’s semantic understanding of student queries and its ability to match specialized knowledge. The model is designed to provide more accurate and professional answers while also stimulating students’ awareness of questioning and inquiry motivation. Ultimately, it supports students in constructing knowledge and developing cognition more effectively during self-directed learning. The overall architecture of the model consists of four components, as shown in Figure 1: Knowledge Graph, Knowledge Retrieval, Specialized Question Answering, and Knowledge Extraction. These components work collaboratively to form a closed loop of “Understanding–Generation–Extraction.”

Knowledge Graph: Focusing on the target course Data Structures (C Language), a course knowledge graph is constructed and stored in the Neo4j graph database, forming a complete knowledge framework for the course. This provides foundational knowledge support and traceability for subsequent question-answering tasks and enhances the model’s ability to understand the course context and domain-specific knowledge. A portion of the knowledge graph is shown in Figure 2.

Knowledge Retrieval: The learner’s input questions are parsed to accurately identify course-related entities and relations, which are then matched with nodes in the knowledge graph. The matching results are converted into structured query statements using Cypher queries and embedded into a prompt template. This guides the large language model to focus on the precise course domain when generating answers, thereby reducing irrelevant and redundant content and laying a foundation for professional and accurate responses.

Specialized Question Answering: Course knowledge is transformed into a standardized vector-based knowledge base. A semantic retrieval mechanism is built using the LangChain framework (Topsakal & Akinci, 2023) and vector database technology, providing domain-specific knowledge support for the large language model. Additionally, LoRA fine-tuning is introduced to further optimize the model’s understanding of course concepts, logical relations, and typical questions. This component enhances the stability and interpretability of the model when answering complex course questions, offering students responses that are semantically clear and logically coherent, thereby supporting autonomous learning.

Knowledge Extraction Module: Structured knowledge is automatically extracted from text using the large language model, enabling dynamic updates to the course knowledge graph. Newly extracted triples are validated and then automatically written into the Neo4j graph database. This module increases both the breadth and timeliness of the knowledge base during the question-answering process, contributing to the long-term accumulation of course knowledge and continuously supporting students with comprehensive and accurate information for self-directed learning.

Base Model Selection: Based on the SuperCLUE benchmark (Wang et al., 2019), which emphasizes Chinese language capability in evaluating large language models, this study selects Qwen2-7B, released by Alibaba in June 2024, as the base model. Qwen2-7B is a large language model with 7 billion parameters, demonstrating strong performance in text generation and knowledge reasoning, making it well-suited for the comparative experiments conducted in this study.

Evaluation Metrics: This study adopts precision, recall, accuracy, and F1-score as evaluation metrics. Accuracy measures the proportion of correctly predicted samples among all samples and reflects the overall correctness of predictions. Precision refers to the proportion of true positives among all samples predicted as positive, indicating the reliability of the model’s predictions. Recall refers to the proportion of true positives among all actual positives, reflecting the model’s sensitivity. The F1-score is the harmonic mean of precision and recall, used to comprehensively evaluate model performance in scenarios with imbalanced positive and negative samples, especially when both precision and recall are critical.

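The formulas are as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

In this context, TP refers to the number of correctly predicted entities; FP denotes the number of samples predicted as positive that are actually negative; FN indicates the number of samples predicted as negative that are actually positive; and TN represents the number of correctly predicted non-entity instances.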
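The four metrics can be computed directly from the confusion counts. The following is a minimal sketch; the `Confusion` class, the `safe_div` helper, and the example counts are illustrative assumptions and do not come from the study's data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Counts obtained by comparing predictions with gold annotations."""
    tp: int
    fp: int
    fn: int
    tn: int


def safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def metrics(c: Confusion) -> dict[str, float]:
    """Compute accuracy, precision, recall, and F1 from confusion counts."""
    precision = safe_div(c.tp, c.tp + c.fp)
    recall = safe_div(c.tp, c.tp + c.fn)
    accuracy = safe_div(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative counts only; they are not results from the study.
example = metrics(Confusion(tp=80, fp=10, fn=20, tn=90))
```

Guarding the divisions matters in practice: a model that predicts no positives at all would otherwise raise a division-by-zero error when precision is computed.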

3.2. Implementation

3.2.1. Knowledge Graph

Data Collection and Preprocessing: The data sources include course materials (digital textbooks, lesson plans, and exercise sets) and open resources (Baidu Encyclopedia, MOOC platforms). OCR tools and Python 3.9.21 are used for text extraction and processing, and manual verification is conducted to ensure data quality and consistency.

Course Ontology Construction: Following the Stanford seven-step ontology development method (Noy & McGuinness, 2001), the course knowledge ontology is categorized into three types of entities (Structure, Algorithm, and Concept) and five types of relations (Predecessor, Successor, Sibling, Related, and Synonym).

Entity Recognition Model: A combined BERT + BiLSTM + CRF model is employed to implement entity recognition under the BIO (Begin, Inside, Outside) tagging scheme, improving the accuracy of course entity parsing and structural localization.
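To make the BIO scheme concrete, the sketch below decodes a tagged token sequence into entity spans. It covers only the decoding step that follows the tagger, not the BERT + BiLSTM + CRF model itself. The tag labels (`B-Structure`, `I-Algorithm`, and so on) are an assumed naming that combines the BIO prefixes with the ontology's entity types, and the example sentence is hypothetical.

```python
def decode_bio(tokens: list[str], tags: list[str]) -> list[tuple[str, str]]:
    """Collect (entity_text, entity_type) spans from a BIO-tagged sequence."""
    spans = []
    current_tokens = []
    current_type = None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always opens a new span, closing any open one first.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [token], tag[2:]
        elif tag.startswith("I-") and current_tokens and tag[2:] == current_type:
            current_tokens.append(token)
        else:
            # "O" or an inconsistent I- tag closes any open span.
            if current_tokens:
                spans.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [], None
    if current_tokens:
        spans.append((" ".join(current_tokens), current_type))
    return spans


# Hypothetical tagger output for one student question.
tokens = ["Is", "a", "binary", "tree", "sorted", "by", "quick", "sort", "?"]
tags = ["O", "O", "B-Structure", "I-Structure", "O", "O", "B-Algorithm", "I-Algorithm", "O"]
entities = decode_bio(tokens, tags)
```

In this sketch, an `I-` tag that does not continue an open span of the same type is discarded rather than repaired, which is one of several possible conventions.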

Relation Extraction Model: Based on a BERT-based relation classification model, specific tagging and concatenation strategies are used to extract semantic relations between entities. A softmax classifier is used for relation identification, supporting subsequent tasks such as graph retrieval, answer traceability, and semantic inference.
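The final classification step can be illustrated as follows: a softmax turns the classifier's raw scores (logits) for the five relation types into probabilities, and the most probable type is selected. This is a sketch of that step only. The logits are hypothetical values, and the order of `RELATION_TYPES` is an assumption rather than the label order used in the study.

```python
import math

RELATION_TYPES = ["Predecessor", "Successor", "Sibling", "Related", "Synonym"]


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_relation(logits: list[float]) -> tuple[str, float]:
    """Return the most probable relation type and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return RELATION_TYPES[best], probs[best]


# Hypothetical logits for one entity pair; a trained model would produce these.
label, confidence = classify_relation([0.2, 2.5, -1.0, 0.7, 0.1])
```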

3.2.2. Knowledge Retrieval

An entity extractor (MatchNode) and a relation searcher (SearchNode) are designed, along with the construction of Cypher query templates. These components parse course and entity information from student questions and embed the query results into the prompt input of the large language model. This ensures that the model can accurately understand the questions, achieving precise retrieval and effective responses to provide professional answers to students.
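The retrieval flow described above can be sketched as follows. The function names mirror MatchNode and SearchNode, but their bodies, the substring-matching strategy, the `name` node property, the entity vocabulary, and the sample rows are all assumptions made for illustration. No database connection is opened: the sketch only builds a parameterized Cypher query and formats rows such as a Neo4j driver might return.

```python
# Hypothetical node names; a real system would load these from the graph.
KNOWN_ENTITIES = ["binary tree", "stack", "queue", "linked list", "quick sort"]

# Parameterized template: $name and $limit are bound at execution time.
CYPHER_TEMPLATE = (
    "MATCH (n {name: $name})-[r]->(m) "
    "RETURN n.name AS source, type(r) AS relation, m.name AS target "
    "LIMIT $limit"
)


def match_node(question: str, vocabulary: list[str]) -> list[str]:
    """Return known entity names mentioned in the question, longest first."""
    text = question.lower()
    hits = [name for name in vocabulary if name in text]
    return sorted(hits, key=len, reverse=True)


def search_node(entity: str, limit: int = 10) -> tuple[str, dict]:
    """Build the Cypher query and parameters for an entity's outgoing relations."""
    return CYPHER_TEMPLATE, {"name": entity, "limit": limit}


def facts_to_prompt(question: str, rows: list[dict]) -> str:
    """Embed retrieved triples into the prompt text passed to the LLM."""
    facts = "\n".join(f"- {r['source']} -[{r['relation']}]-> {r['target']}" for r in rows)
    return f"Known course facts:\n{facts}\n\nQuestion: {question}"


question = "What type of data structure is a stack?"
entities = match_node(question, KNOWN_ENTITIES)
query, params = search_node(entities[0])
# Stand-in for the rows a graph driver would return for this query.
rows = [{"source": "stack", "relation": "Related", "target": "linear list"}]
prompt = facts_to_prompt(question, rows)
```

Binding the entity name as a query parameter, rather than interpolating it into the query string, keeps student input from altering the query itself.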

3.2.3. Specialized Question Answering

Course Knowledge Base: Authoritative data, domain-specific websites, and expert knowledge are collected and converted into unified document objects. These are then segmented and embedded into a vector space and stored in a vector database to support semantic retrieval.
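The segment, embed, and retrieve steps can be illustrated with a toy example. This sketch deliberately swaps in a bag-of-words vector and an in-memory list for the embedding model and vector database used in the study, and it does not call LangChain. The chunk sizes, function names, and sample notes are all assumptions.

```python
import math
import re
from collections import Counter


def split_text(text: str, chunk_size: int = 12, overlap: int = 4) -> list[str]:
    """Split text into chunks of chunk_size words that share overlap words."""
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_size]) for i in range(0, last_start, step)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts, standing in for a dense vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


# Hypothetical course notes.
notes = (
    "A stack is a linear structure that follows last in first out order. "
    "A queue is a linear structure that follows first in first out order. "
    "A binary tree is a hierarchical structure where each node has at most two children."
)
chunks = split_text(notes, chunk_size=13, overlap=0)
top = retrieve("How many children can a binary tree node have?", chunks)
```

Word-count vectors only match on shared vocabulary, so a production system would rely on learned embeddings to retrieve paraphrased questions as well.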

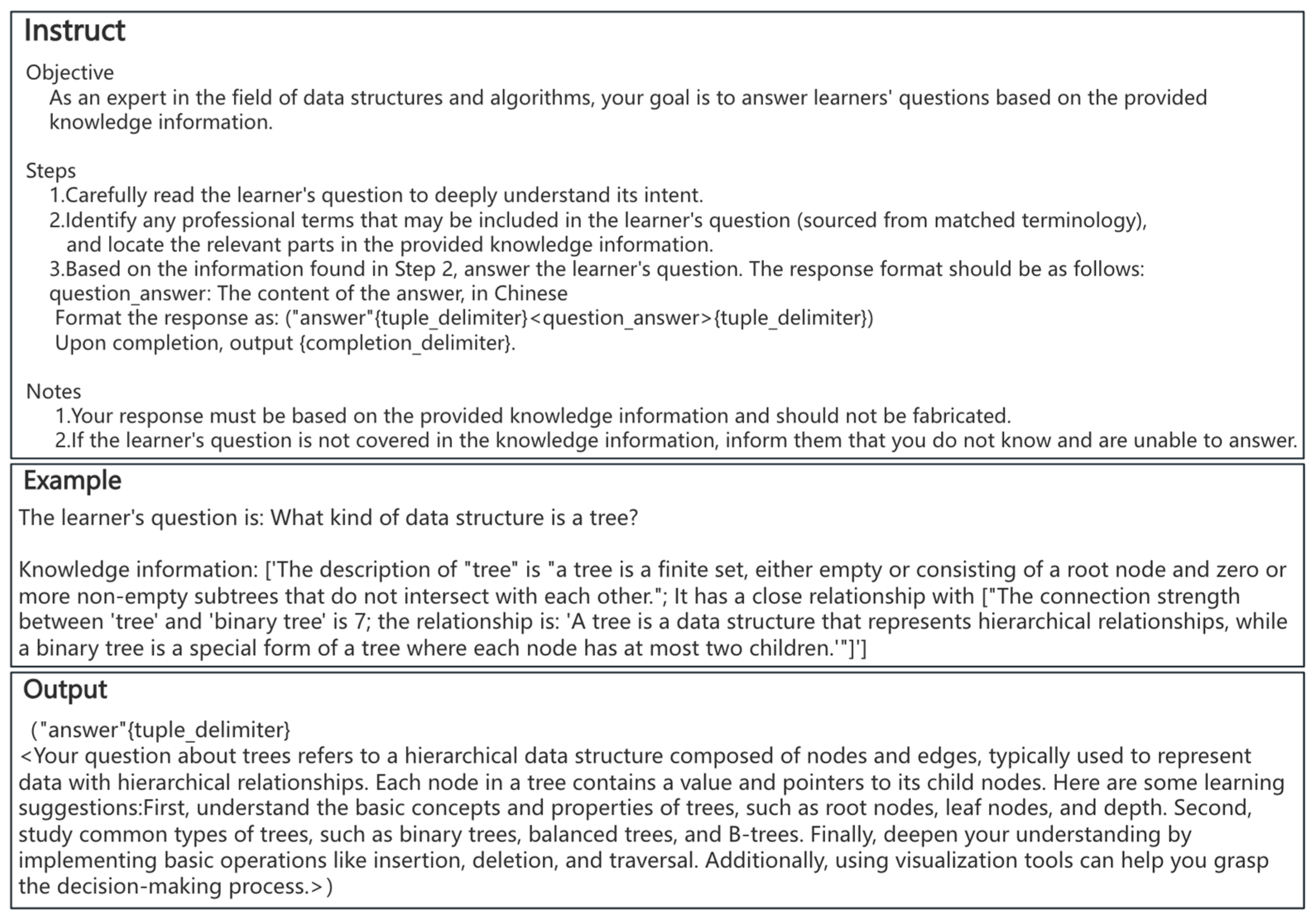

Prompt Template: The design of the prompt template in this study follows several principles, including role setting, background description, task definition, and output formatting. The constructed prompt follows the formula:

$$\text{Prompt} = \text{Role} + \text{Instruct} + \text{Examples} + \text{Output}$$

Here, Role is fixed during use to define the model’s role; Instruct provides specific task-related instructions; Examples offer guidance through illustrative cases; and Output defines the output format.

As shown in Figure 3, the role in the task description is set as that of an expert in the field of data structures. The background involves extracting entities from learners’ questions and locating corresponding information in the knowledge graph; if no match is found, no answer is provided. This ensures a focus on key content. The entity and relation categories based on the course ontology are used to clarify the standards for knowledge extraction. Examples are included to guide task execution, and the output follows a predefined format to enhance the professionalism, accuracy, and interpretability of the model’s answers.
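A prompt built from the Role, Instruct, Examples, and Output components might be assembled as in the following sketch. The `CoursePrompt` class, its field wording, and the JSON output format are hypothetical; the prompt actually used in the study is the one shown in Figure 3.

```python
from dataclasses import dataclass, field


@dataclass
class CoursePrompt:
    """Hypothetical container for the four prompt components."""
    role: str
    instruct: str
    examples: list[str] = field(default_factory=list)
    output: str = ""

    def render(self, question: str) -> str:
        """Concatenate Role, Instruct, Examples, and Output, then the question."""
        parts = [
            f"Role: {self.role}",
            f"Instruct: {self.instruct}",
            "Examples:\n" + "\n".join(f"- {e}" for e in self.examples),
            f"Output: {self.output}",
            f"Question: {question}",
        ]
        return "\n\n".join(parts)


# All wording below is illustrative, not the study's prompt.
template = CoursePrompt(
    role="You are an expert in the field of data structures.",
    instruct=("Extract the entities in the learner's question and look them up in "
              "the knowledge graph. If no match is found, do not answer."),
    examples=['Question: "What is a stack?" -> entity: stack (Structure)'],
    output="Return JSON with the keys 'entities' and 'answer'.",
)
prompt = template.render("How does a queue differ from a stack?")
```

Keeping the role fixed in the template while the question varies per call matches the description of Role as fixed during use.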

Model Fine-Tuning: The LoRA method is used for efficient parameter tuning to adapt the large language model to the Q&A tasks in the Data Structures (C Language) course. This approach simulates parameter variations via low-rank decomposition, updating only specific matrices (A and B) to enable efficient model training with a minimal number of parameters. The corresponding formula is as follows:

$$W = W_0 + \Delta W = W_0 + BA$$

where $W_0$ denotes the parameters of the large language model, and $\Delta W$ represents the parameter change during full fine-tuning, which LoRA approximates by the product of the low-rank matrices $B$ and $A$.
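The low-rank update can be illustrated numerically with a toy weight matrix. This sketch uses plain Python lists and arbitrary values for A and B. It is not the training code used in the study, and it omits the scaling factor that LoRA implementations usually apply to BA.

```python
def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def add(x, y):
    """Element-wise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]


d, k, r = 4, 4, 1                       # toy sizes; real layers are far larger
W0 = [[1.0 if i == j else 0.0 for j in range(k)] for i in range(d)]  # frozen weights
B = [[0.5], [0.0], [0.0], [0.0]]        # d x r, trainable (arbitrary values)
A = [[0.0, 2.0, 0.0, 0.0]]              # r x k, trainable (arbitrary values)

delta_W = matmul(B, A)                  # d x k update with rank at most r
W = add(W0, delta_W)                    # effective weight W0 + BA

full_params = d * k                     # entries updated by full fine-tuning
lora_params = d * r + r * k             # entries trained by LoRA
```

With d = k = 4 and r = 1 the saving is modest (8 trained values instead of 16), but the count grows as r(d + k) rather than d·k, so the gap widens quickly for large layers and small ranks.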

3.2.4. Knowledge Extraction

The large language model is used to extract entity and relation information from text and convert it into structured triples. Data cleaning rules and anomaly detection mechanisms are applied to improve the stability of the extraction process. The extracted triples are written into the knowledge graph, enabling dynamic updates to the knowledge base.
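Cleaning rules of this kind might look like the sketch below, which normalizes extracted triples, drops malformed or out-of-ontology ones, and removes duplicates before they are written to the graph. The specific rules, the dictionary keys (`head`, `relation`, `tail`), and the sample output are assumptions; the study does not list its actual rules.

```python
RELATION_TYPES = {"Predecessor", "Successor", "Sibling", "Related", "Synonym"}


def clean_triples(raw: list[dict]) -> list[tuple[str, str, str]]:
    """Keep well-formed, in-ontology, non-duplicate (head, relation, tail) triples."""
    seen = set()
    cleaned = []
    for item in raw:
        head = str(item.get("head", "")).strip().lower()
        tail = str(item.get("tail", "")).strip().lower()
        relation = str(item.get("relation", "")).strip().capitalize()
        if not head or not tail or head == tail:
            continue                      # missing field or self-loop
        if relation not in RELATION_TYPES:
            continue                      # relation outside the course ontology
        triple = (head, relation, tail)
        if triple in seen:
            continue                      # duplicate extraction
        seen.add(triple)
        cleaned.append(triple)
    return cleaned


# Hypothetical LLM output, including typical noise.
raw_output = [
    {"head": "Stack", "relation": "related", "tail": "Linear list"},
    {"head": "stack ", "relation": "Related", "tail": "linear list"},   # duplicate
    {"head": "queue", "relation": "Contains", "tail": "node"},          # unknown relation
    {"head": "tree", "relation": "Sibling", "tail": ""},                # missing tail
]
triples = clean_triples(raw_output)
```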

3.3. Comprehensive Performance Evaluation and Analysis of the Intelligent Course Q&A Model

3.3.1. Accuracy Analysis of the Model

This study collected and compiled 100 multiple-choice questions covering various knowledge points from the Data Structures (C Language) course. These questions were categorized into three levels of difficulty: easy, medium, and hard. Different models were tested on this set of questions, and their answering accuracies were recorded to objectively evaluate their performance. The experimental results are presented in Table 1 (with the values in parentheses representing the number of correct answers and the total number of questions).

As shown in Table 1, the KG-CQ model achieved a 14% improvement in average accuracy compared to the base model. However, due to significant differences in training data and corpora, the model’s performance on easy and medium questions was inferior to that of ChatGPT4, one of the most powerful current language models. Nevertheless, its accuracy on hard questions was on par with ChatGPT4, demonstrating that an intelligent Q&A model specifically designed for course content can effectively improve the accuracy of answering complex questions. This suggests that the model has strong domain adaptability and can help students better understand and master course content.

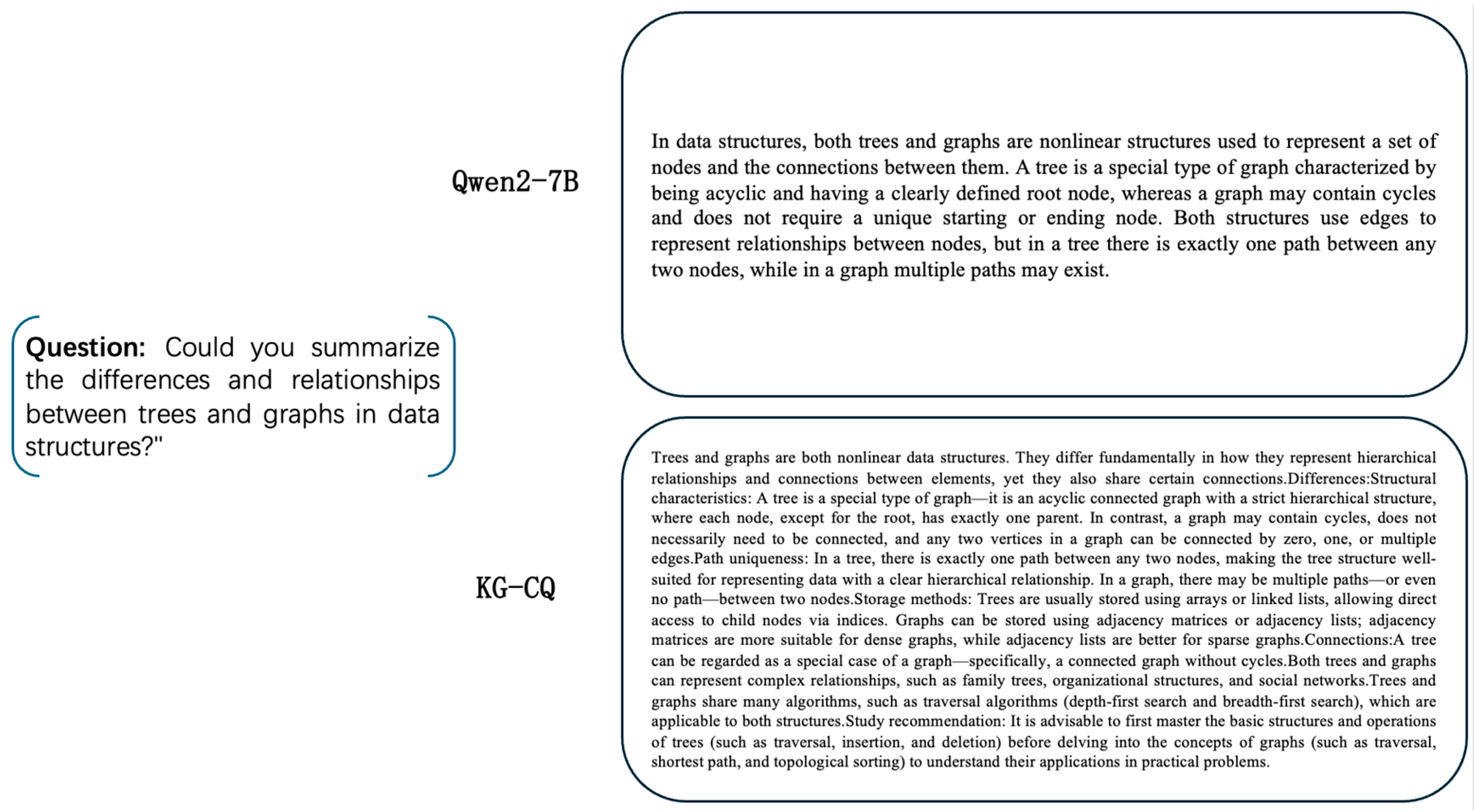

3.3.2. Demonstration of Answering Performance

The study compares the Qwen2-7B model with the KG-CQ model to illustrate the differences in question comprehension and the professionalism of their responses. As shown in Figure 4, the KG-CQ model developed in this study can provide highly relevant and professional answers in the context of course guidance, offering learners targeted learning recommendations based on course content. In contrast, the Qwen2-7B model delivers comparatively less effective responses.

3.3.3. Comparison of Knowledge Extraction Performance

To evaluate the KG-CQ model’s ability to understand and extract knowledge, a dataset containing 2916 questions was constructed, including 2500 course-related questions and 416 non-course-related questions. Two graduate students majoring in Educational Technology were invited to independently annotate the entities and relationships within the questions. The model’s extraction results were then compared with the annotations to verify its knowledge extraction performance and ensure the objectivity and reliability of the experimental results.

Comparison of Entity Recognition Performance

The entity recognition performance of different models was compared before and after incorporating the course knowledge graph as input. Initially, only the question text was input to evaluate each model’s ability to recognize course-related entities. Subsequently, the course knowledge graph was introduced as a knowledge base to support the model in identifying entities based on the content of the graph, thereby enhancing its understanding of course semantics. The recognition targets correspond to the three types of entities defined earlier. For example, in the question “What type of data structure is a stack?”, the model needs to identify key nodes such as “stack” and “data structure” that exist within the knowledge graph.

As shown in Table 2, after the introduction of the knowledge graph, the KG-CQ model effectively matched and identified existing course entities within the graph, further enhancing its semantic understanding capability. Compared to other models, the KG-CQ model demonstrated more stable performance, accurately recognizing key course entities and avoiding common issues, such as inconsistent recognition or missing entities, observed in general-purpose models.

Figure 5 further illustrates the overall performance of the KG-CQ model in the entity extraction task, validating its ability to perform accurate entity recognition.

Comparison of Relation Recognition Performance

The relation extraction performance of three models (Qwen2-7B, ChatGPT4, and KG-CQ) was compared across multiple test questions, focusing on the five types of relations defined earlier. The question numbers (①–⑮) and detailed results are presented in Table 3.

The results indicate that the other two models demonstrated unstable performance in relation extraction, often exhibiting errors in relation classification and confusion among relation types, leading to lower accuracy. In contrast, the KG-CQ model, leveraging the structured knowledge of the knowledge graph, showed greater stability and accuracy in most relation extraction tasks. This suggests that the model possesses strong relational reasoning capabilities and a deeper understanding of the structure of course knowledge.

Figure 6 further illustrates the overall performance of the KG-CQ model in relation extraction tasks, validating its effectiveness in extracting course-specific knowledge.

3.3.4. Ablation Study

An ablation study systematically removes or modifies individual components of a model to measure each component’s contribution to overall performance.

To verify the effectiveness and necessity of incorporating the knowledge graph and course-specific knowledge base in model construction, this study conducted an ablation experiment. The tested models included the base model Qwen2-7B, a model with only the knowledge graph, a model with only the course-specific knowledge base, and the full KG-CQ model. The detailed results are shown in Table 4.

As shown in Table 4, the KG-CQ model achieved higher accuracy than the models with only the knowledge graph or the course-specific knowledge base. This indicates that the KG-CQ model not only enhances knowledge alignment and reasoning ability but also improves the understanding of students’ question intentions and the accuracy of responses. These findings demonstrate the model’s potential and practical value in supporting self-directed learning scenarios.

4. Experiment

4.1. Participants

The participants in this study were 30 students majoring in Educational Technology at H University. All participants had completed the Data Structures (C Language) course and possessed a basic understanding of the course content. The students were randomly assigned to two groups: an experimental group and a control group, with 15 participants in each.

4.2. Procedure

After the course concluded, all participants were required to complete a set of designated post-class exercises. The study was designed to compare the experimental and control groups: students in the experimental group were allowed to use the KG-CQ model as an aid during problem solving, while the control group completed the exercises without model assistance. This setup aimed to evaluate the practical application value of the KG-CQ model in post-class practice and its support for students’ self-directed learning. The specific application process is illustrated in Figure 7.

During the preparation phase, based on the Data Structures (C Language) course syllabus, 50 multiple-choice questions covering various knowledge points were selected and categorized by difficulty into three levels: easy (22 questions), medium (18 questions), and hard (10 questions). All questions were reviewed by professional instructors to ensure scientific accuracy and appropriate difficulty distribution. Additionally, multiple rounds of testing and debugging were conducted on the KG-CQ model to ensure its functionality and answer accuracy prior to the formal experiment.

In the implementation phase, students in the experimental group were allowed to use the intelligent Q&A model during the exercises. When encountering unfamiliar problems, they could query relevant knowledge points or obtain solution suggestions through the model. The number of correct answers for each student in the experimental group was recorded to calculate the average accuracy. The frequency of model use and total answering time were also tracked. After the exercises, selected students from the experimental group participated in interviews to gather in-depth feedback on the model’s functions, ease of use, and perceived learning support. The interview content was transcribed into textual data for further analysis.

Students in the control group completed the same set of questions under identical conditions but without access to the intelligent Q&A model. They relied solely on traditional learning resources such as textbooks and class notes. Their number of correct answers and total answering time were recorded for comparative analysis with the experimental group.

5. Results

5.1. Test Scores

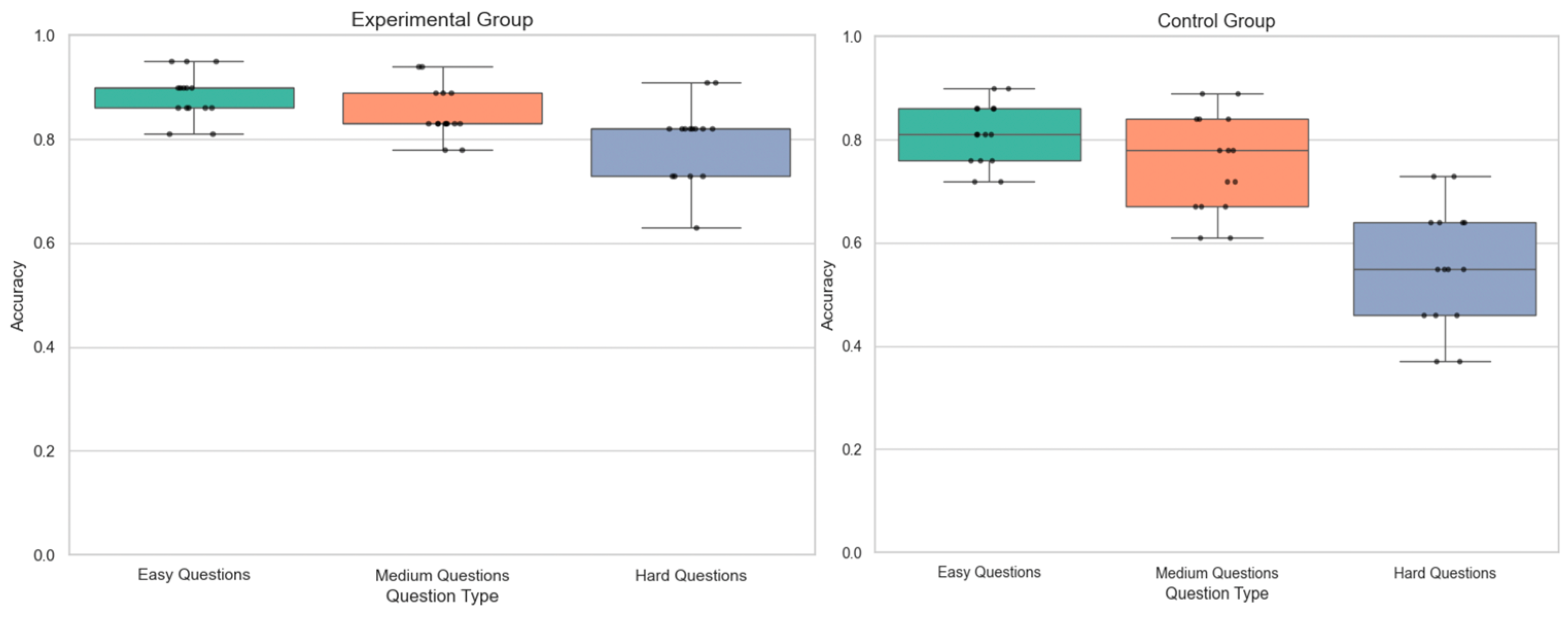

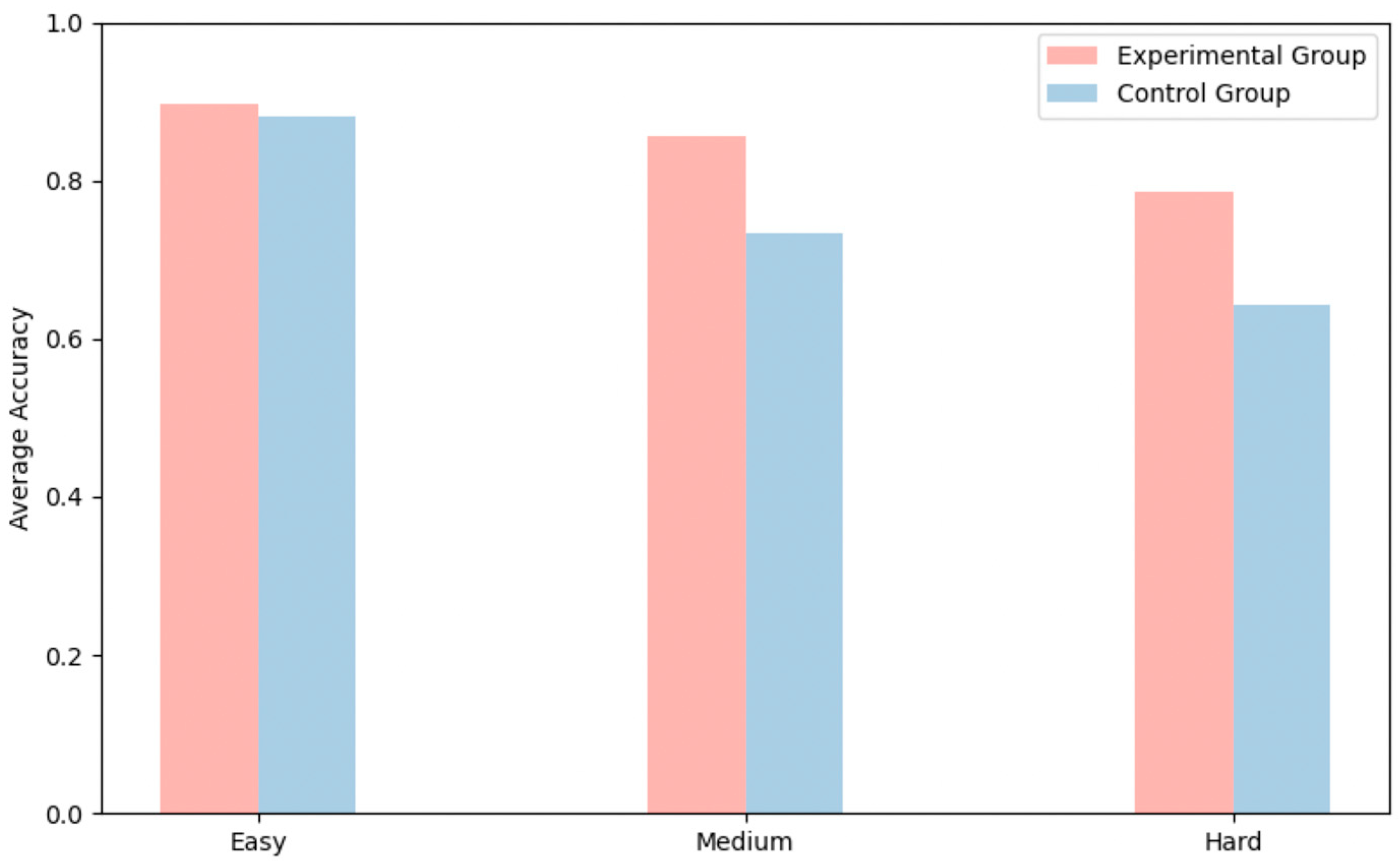

A total of 1500 responses were collected from 30 students in the experimental and control groups. As shown in Table 5, the average accuracy rates for the experimental group on easy, medium, and hard questions were 0.899, 0.861, and 0.771, respectively, with an overall average accuracy of 0.844 and a variance of approximately 0.0021. This indicates that the students in the experimental group achieved a relatively high level of accuracy with low variability, demonstrating stable performance.

In contrast, the control group achieved average accuracy rates of 0.897, 0.766, and 0.628 for easy, medium, and hard questions, respectively, with an overall average accuracy of 0.764 and a variance of approximately 0.0031. Compared with the experimental group, the control group exhibited not only a lower average accuracy but also greater variability, suggesting more pronounced differences in performance across different question types and among individual students.
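As a quick arithmetic check, the reported overall averages (0.844 and 0.764) agree with the unweighted mean of the three per-difficulty accuracies, not with a mean weighted by the number of questions per level. The sketch below reproduces both calculations. The per-level accuracies and question counts are taken from the text; the conclusion about which averaging was used is an inference, not something the study states.

```python
from statistics import mean

# Per-difficulty accuracies reported in the text.
experimental = {"easy": 0.899, "medium": 0.861, "hard": 0.771}
control = {"easy": 0.897, "medium": 0.766, "hard": 0.628}
# Question counts per difficulty, from the Procedure section.
counts = {"easy": 22, "medium": 18, "hard": 10}


def unweighted(acc: dict[str, float]) -> float:
    """Plain mean of the three per-level accuracies."""
    return mean(acc.values())


def weighted(acc: dict[str, float]) -> float:
    """Mean weighted by the number of questions at each level."""
    total = sum(counts.values())
    return sum(acc[level] * counts[level] for level in counts) / total


exp_unweighted = round(unweighted(experimental), 3)
ctl_unweighted = round(unweighted(control), 3)
exp_weighted = round(weighted(experimental), 3)
ctl_weighted = round(weighted(control), 3)
```

Because easy questions are the most numerous, the question-weighted averages come out higher (about 0.860 and 0.796) than the unweighted ones.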

5.2. Self-Directed Learning Ability

This study employed students’ total test scores as the primary experimental indicator to evaluate changes in their self-directed learning ability. The measurement scale comprised three dimensions—learning motivation, learning strategies, and self-management—consisting of 15 items rated on a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree). The scale demonstrated good internal consistency, with a Cronbach’s α coefficient of 0.87. Validity testing indicated that all items reached a statistically significant level. The overall SDL score ranged from 1 to 5, and in the experimental group, the mean score increased from 3.21 before the intervention to 3.87 after the intervention.
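Cronbach’s α for a Likert scale can be computed from the item variances and the variance of the total scores, as in the following sketch. The ratings are hypothetical (5 respondents, 4 items) and are not the study’s 15-item data; sample variance is assumed.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[int]]) -> float:
    """responses[i][j] is respondent i's rating on item j."""
    k = len(responses[0])
    item_vars = [variance(col) for col in zip(*responses)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings on a 1-5 Likert scale.
ratings = [
    [4, 4, 5, 4],
    [3, 3, 3, 2],
    [5, 4, 5, 5],
    [2, 2, 3, 2],
    [4, 3, 4, 4],
]
alpha = cronbach_alpha(ratings)
```

Values close to 1 indicate that the items vary together across respondents; the reported coefficient of 0.87 falls in the range commonly described as good internal consistency.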

For data analysis, a paired-sample t-test was conducted to assess the effectiveness of the knowledge graph-integrated large language model-based intelligent tutoring system (KG-CQ) in enhancing students’ SDL ability. The Shapiro–Wilk test confirmed that all measurement data approximated a normal distribution (p > 0.05), meeting the assumptions for statistical analysis. As shown in Table 6, the experimental group’s post-intervention SDL scores were significantly higher than those measured prior to the intervention (t = 3.128, p = 0.004). These findings suggest that the KG-CQ model not only fosters students’ learning initiative but also substantially improves their overall self-directed learning ability.
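The paired-sample t statistic is the mean of the per-student differences divided by its standard error. The sketch below computes it for hypothetical pre/post scores from five students; these are not the study’s data, and the function name is illustrative.

```python
import math
from statistics import mean, stdev


def paired_t(before: list[float], after: list[float]) -> tuple[float, int]:
    """Return the paired-sample t statistic and its degrees of freedom."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical pre/post SDL scores for five students.
pre = [3.0, 3.2, 3.4, 2.8, 3.6]
post = [3.4, 3.8, 3.6, 3.4, 4.0]
t_stat, dof = paired_t(pre, post)
```

Converting t into a p-value requires the t-distribution with n − 1 degrees of freedom, which the Python standard library does not provide; a statistics package such as SciPy (`scipy.stats.ttest_rel`) returns both values.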

5.3. Application Effect of the Intelligent Course Question-Answering Model

5.3.1. Support of the Model for Students’ Self-Directed Learning

As shown in Figure 8, after using the KG-CQ model to support learning, students in the experimental group achieved relatively high accuracy on easy questions, with small fluctuations and stable performance. For medium and hard questions, although the accuracy slightly decreased and the variability increased, the overall stability remained. This indicates that the model played a positive role in helping students independently address problems of varying difficulty levels.

In contrast, the accuracy of the control group students declined across all question types, with significant individual differences. Particularly for difficult questions, the range of fluctuations increased considerably, reflecting that students, in the absence of model support, faced clear limitations in their ability to learn autonomously when tackling challenging tasks. Therefore, the introduction of the KG-CQ model not only effectively improved the overall performance of students in the experimental group on questions of varying difficulty but also enhanced the stability of their learning process. More importantly, the model significantly promoted the development of students’ self-directed learning abilities, enabling them to better cope with various learning challenges. This fully demonstrates the model’s important role in improving learning outcomes and supporting students’ independent learning.

5.3.2. Accuracy Analysis

As shown in Figure 9, the average answer accuracy of students in the experimental group supported by the KG-CQ model was significantly higher than that of the control group who did not use the model. This indicates that the knowledge graph-enhanced question-answering model effectively improves students’ ability to understand and apply knowledge. The model helps students quickly locate relevant nodes in the knowledge graph and access accurate and well-structured knowledge content, thereby facilitating self-directed learning. Students in the experimental group also reported that the model provided procedural guidance and knowledge pathways during the question-answering process, which made it easier to trace back knowledge and significantly improved learning efficiency. In contrast, control group students often relied on traditional information retrieval methods when encountering difficult problems. This approach was time-consuming and labor-intensive and often led to comprehension difficulties due to disorganized information or fragmented knowledge, resulting in greater fluctuations in answer accuracy.

Figure 10 further shows that the mean score of students in the experimental group was 80.47, with a standard deviation of 3.1 and a variance of 9.63. In comparison, the mean score of the control group was 66.33, with a standard deviation of 6.14 and a variance of 37.67. The overall performance of the experimental group was more stable, indicating that the model played a positive role in enhancing students’ self-directed learning and deep understanding.
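The stability comparison can be made explicit with a short calculation on the figures reported above. The sketch below takes only the reported means and variances as input; the coefficient of variation (SD divided by the mean) is an added illustrative measure, not one reported in the study.

```python
import math

# Means and variances as reported for Figure 10.
reported = {
    "experimental": {"mean": 80.47, "variance": 9.63},
    "control": {"mean": 66.33, "variance": 37.67},
}

results = {}
for group, stats in reported.items():
    sd = math.sqrt(stats["variance"])  # SD is the square root of the variance
    cv = sd / stats["mean"]            # relative spread (illustrative addition)
    results[group] = {"sd": sd, "cv": cv}
    print(f"{group}: SD = {sd:.2f}, CV = {cv:.3f}")
```

The recomputed standard deviations round to the reported 3.1 and 6.14, and the relative spread of the control group is more than twice that of the experimental group, which is the sense in which the experimental group's performance was more stable.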

5.3.3. Practicality Analysis

Statistical results show that students in the experimental group used the model an average of approximately 15 times throughout the testing process, mainly focusing on medium and hard questions. Most students believed that, compared with traditional learning methods, the model had significant advantages in knowledge localization, clarity of problem-solving paths, and answering efficiency, thereby effectively improving both accuracy and learning outcomes. Particularly during post-class review or self-testing sessions, the model provided targeted feedback and supplementary knowledge, which helped enhance students’ self-directed learning ability.

In addition, as shown in Table 7, the average response time of the experimental group was lower than that of the control group across all question types, with the most significant difference observed in hard questions. This result indicates that the intelligent course Q&A model can provide timely knowledge hints and problem-solving guidance, helping students reduce the time spent searching for relevant knowledge and solve problems more efficiently, thus demonstrating a high level of practicality.

5.3.4. Student Interviews

User Experience with the Model: Interviews with 10 students revealed that they generally found the KG-CQ model to be functionally clear and easy to operate, aligning with their learning habits and offering efficient support for self-directed learning without increasing their cognitive load. They also provided suggestions for improvement, such as enhancing the visualization of the knowledge graph and adding real-time discussion functions to improve human–computer interaction.

Support for Learning in Educational Technology: Student Wang mentioned that the model helped quickly reinforce content that was not fully understood in class and supported targeted pre-class preparation. Student Zhang stated that the model, combined with the course knowledge graph, could provide precise answers and learning suggestions based on specific questions, reducing inefficient information retrieval and improving learning focus and efficiency. Student Li noted that the model sparked their interest and curiosity in knowledge, and the interactive Q&A process strengthened their learning initiative and persistence.

In summary, the model not only demonstrated strong practicality in supporting question answering but also effectively enhanced students’ self-directed learning abilities and cognitive development.

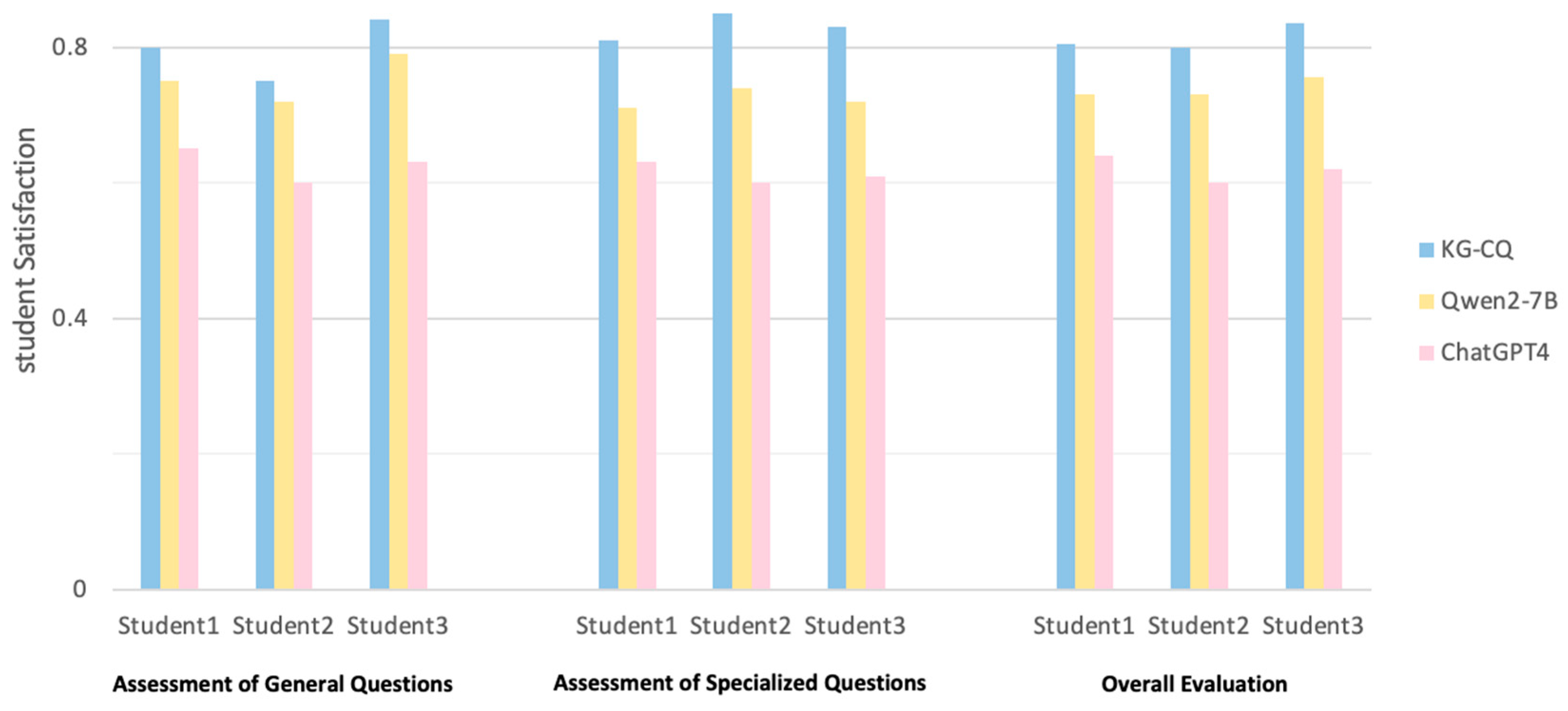

5.3.5. Student Satisfaction

A total of 200 questions were collected and divided into two categories: 100 general course questions and 100 specialized course questions. (General questions are common within the course and require less specialized knowledge, while specialized questions demand a certain degree of disciplinary knowledge for reasoning and analysis.) These questions were tested using three different models: ChatGPT4, Qwen2-7B, and the KG-CQ model developed in this study. The generated answers from each model were collected and organized.

Subsequently, three graduate students majoring in Educational Technology were invited to evaluate the model outputs on four dimensions: “accuracy in understanding the question,” “completeness of the answer content,” “clarity of logical expression,” and “support for self-directed learning.” Each rater conducted the evaluation independently based on their learning perspective and academic background, using a binary satisfaction scoring system (satisfied/unsatisfied). To check the consistency of the scoring results, Fleiss’ kappa coefficient was used to examine the inter-rater agreement among the three evaluators. The coefficient was 0.82, indicating a high level of consistency and reliability among raters.
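The agreement statistic used here can be computed directly from a table of rating counts. The following is a minimal sketch of the standard Fleiss’ kappa formula; the function name and the six example items are hypothetical and do not reproduce the study's ratings.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa; counts[i][j] = number of raters placing item i in category j."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item needs the same number of ratings")
    n_cats = len(counts[0])
    # Share of all ratings that fall into each category.
    p_cat = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_cats)
    ]
    # Observed agreement for each item, then averaged over items.
    p_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(p_item) / n_items
    # Agreement expected by chance (undefined if all ratings share one category).
    p_expected = sum(p * p for p in p_cat)
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings: 6 answers, 3 raters, columns = [satisfied, unsatisfied].
ratings = [[3, 0], [3, 0], [0, 3], [2, 1], [0, 3], [3, 0]]
kappa = fleiss_kappa(ratings)
print(f"Fleiss' kappa = {kappa:.3f}")
```

Kappa corrects the raw agreement rate for the agreement expected by chance, which matters with a binary scale because two categories alone already produce substantial chance agreement.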

As shown in Figure 11, the KG-CQ model achieved significantly higher student satisfaction on both types of questions compared to the other models. This may be attributed to the KG-CQ model’s ability to deeply analyze questions, retrieve relevant knowledge from the knowledge base, and generate comprehensive, detailed, and professional answers based on the designed prompt templates, while also offering corresponding learning suggestions. As a result, the final answers were more widely accepted by students.

6. Discussion

This study focuses on two core questions: “How can the integration of a course knowledge graph into a question-answering mechanism enhance the large language model’s understanding of specialized course content?” and “Can the constructed KG-CQ model effectively improve students’ self-directed learning outcomes in practical applications?” Experimental results show that the KG-CQ model outperforms both traditional learning methods and general large language models across multiple indicators, confirming its feasibility and effectiveness in professional course Q&A scenarios and demonstrating its positive impact on enhancing students’ self-directed learning abilities. It should be noted that the experimental group’s performance in this study was achieved with the support of the tool; therefore, further research is needed to examine students’ transfer learning ability in contexts without such tool support.

Regarding the first research question, by constructing a question-answering mechanism integrated with a course knowledge graph, the KG-CQ model not only strengthens the large language model’s semantic understanding and knowledge tracing capabilities within specialized course content but also significantly improves its overall performance in terms of answer accuracy, domain specificity, and personalized support. This provides an effective pathway to address issues such as fragmented knowledge and hallucinated responses in large models. As Bender et al. pointed out, large language models tend to produce semantic misunderstandings and information distortion when handling domain-specific knowledge, affecting the accuracy and reliability of professional Q&A (Bender et al., 2021). The KG-CQ model, leveraging the knowledge traceability and node associations of the Neo4j graph database, effectively achieves the precise recall of question-related entities and domain-specific knowledge, thereby significantly reducing the risk of hallucinated content and biased answers. Compared with the Q&A framework proposed by Ma et al., which combines semantic matching and answer localization reasoning, the KG-CQ model deeply integrates the knowledge graph with the large language model, enhancing the accuracy and contextual matching of answers in specialized domains (Ma et al., 2020). It also draws on structured retrieval, chain-of-thought reasoning, and vector database construction to improve the semantic focus and logical coherence of generated content. Comparative tests with Qwen2-7B and ChatGPT4 further demonstrate that the KG-CQ model excels in handling difficult questions and high-level reasoning tasks, validating the reinforcing role of course knowledge graphs in semantic understanding and demonstrating the scalability of large language models in specialized domains. In addition, compared to approaches that rely solely on prompt engineering or fine-tuning (Lu et al., 2025), KG-CQ provides stronger interpretability and traceability in knowledge structuring and dynamic updates. Its knowledge extraction module forms a closed loop from generation to storage, enabling the model to gradually accumulate course knowledge through usage and continuously optimize subsequent Q&A performance. This offers a viable pathway for the sustainable optimization of large language models in educational contexts and provides valuable insights into addressing the issues of knowledge fragmentation and the black-box nature of current research (Hu & Wang, 2024).
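The retrieval-then-generation idea discussed above can be illustrated with a deliberately simplified sketch. It is not the KG-CQ implementation: an in-memory list of triples stands in for the Neo4j database, entity matching is plain substring search, and all names (`TRIPLES`, `retrieve_facts`, `build_prompt`) and the example course facts are hypothetical.

```python
# Hypothetical course triples (head, relation, tail); a stand-in for a graph database.
TRIPLES = [
    ("instructional design", "includes", "learner analysis"),
    ("instructional design", "includes", "objective setting"),
    ("learner analysis", "precedes", "objective setting"),
    ("ADDIE model", "is_a", "instructional design model"),
]


def retrieve_facts(question, triples):
    """Return triples whose head or tail entity is mentioned in the question."""
    q = question.lower()
    return [t for t in triples if t[0].lower() in q or t[2].lower() in q]


def build_prompt(question, triples):
    """Ground the model's answer in retrieved course facts via a prompt template."""
    facts = retrieve_facts(question, triples)
    lines = [f"- {h} {r.replace('_', ' ')} {t}" for h, r, t in facts]
    context = "\n".join(lines) if lines else "- (no matching course knowledge found)"
    return (
        "Answer using only the course knowledge below and cite the facts you use.\n"
        f"Course knowledge:\n{context}\n"
        f"Question: {question}"
    )


prompt = build_prompt("What does learner analysis involve?", TRIPLES)
print(prompt)
```

Because the prompt lists the exact facts retrieved for a question, each statement in the generated answer can be traced back to a node or edge in the graph, which is the traceability property contrasted here with prompt-only or fine-tuning approaches.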

As for the second research question, the KG-CQ model, through structured knowledge support in practical applications, actively guides students to acquire and integrate course knowledge. It not only significantly improves their performance on medium- and high-difficulty questions but also effectively promotes the development of self-directed learning awareness and self-regulation abilities. While enhancing learning outcomes, it also provides strong support for students to deeply understand complex knowledge and cultivate higher-order thinking skills. The average accuracy of the experimental group (0.84) was significantly higher than that of the control group (0.76), with the most notable improvement observed in medium and hard questions. Cen et al., in their design and validation of a process-oriented computational thinking gamified intelligent tutoring system, proposed combining machine learning algorithms with process-based behavioral analysis to dynamically assess and guide the development of learners’ computational thinking (Cen et al., 2025). Their findings verified that semantic support and procedural feedback can effectively reduce students’ information-filtering burden and improve the goal-orientation and efficiency of learning. Correlation analysis further revealed a strong positive correlation between model usage frequency and accuracy on hard questions (r = 0.90), indicating the KG-CQ model’s advantages in supporting the comprehension of complex knowledge and higher-order thinking. After the Shapiro–Wilk test supported the normality assumption, the paired-sample t-test showed a significant improvement in the experimental group’s self-directed learning ability after the intervention (t = 3.128, p = 0.004), while no significant change was observed in the control group. This finding aligns with constructivist learning theory (Cobern, 2012), suggesting that under the dual influence of structured knowledge support and interactive feedback mechanisms, students are able to actively construct knowledge in complex situations and enhance their self-regulated learning capacity. Interview results were also consistent with the quantitative findings. Most students reported that the KG-CQ model not only provided efficient support for knowledge localization and problem-solving paths but also offered strong assistance for self-directed learning during pre-class preparation and post-class review. This is in line with the key elements of contextuality, collaboration, and generativity emphasized by Kharroubi and ElMediouni and by Kara et al. (Kharroubi & ElMediouni, 2024; Kara et al., 2024).
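The correlation between usage frequency and hard-question accuracy mentioned above is a Pearson coefficient, which can be sketched as follows. The function name and the five usage/accuracy pairs are hypothetical and are included only to show the calculation, not to reproduce the reported r = 0.90.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("xs and ys must have the same length (at least 2)")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Co-variation of the two variables around their means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical data: model uses per student and accuracy on hard questions.
usage_counts = [8, 12, 15, 18, 22]
hard_accuracy = [0.55, 0.60, 0.70, 0.72, 0.85]

r = pearson_r(usage_counts, hard_accuracy)
print(f"r = {r:.2f}")
```

A coefficient close to 1 indicates that students who used the model more often also tended to score higher on hard questions; it describes association only and does not by itself establish that usage caused the improvement.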

Nevertheless, this study has several limitations. First, the knowledge graph in the KG-CQ model currently relies primarily on structured text, and its adaptability and automation in interdisciplinary courses remain limited. Future work could extend the application of the model to interdisciplinary and multilingual contexts and incorporate real-time collaborative visualization features to better support complex learning scenarios. Second, the sample size in this study was relatively small. Although the experimental results were statistically significant, the model’s generalizability across different institutions and academic backgrounds requires further verification. Future research could employ larger and more diverse samples to enhance external validity. In addition, the measurement and analysis of students’ self-directed learning ability in this study were not fully comprehensive. Subsequent studies should provide a more complete description of the scale, including reliability and validity information, and introduce between-group change estimates to more accurately assess improvements in self-directed learning.

7. Conclusions

This study proposed an intelligent assistant model (KG-CQ) that integrates a knowledge graph with a large language model. It effectively addresses the two core questions raised at the beginning of the research. First, through the incorporation of structured course knowledge, the model significantly enhances the large language model’s understanding and semantic interpretation of specialized course content, markedly improving the accuracy of knowledge matching for student questions and validating the model’s high precision in entity recognition and relation extraction. Second, in practical course applications, the KG-CQ model not only substantially improves students’ answer accuracy—particularly for medium- and high-difficulty questions—but also promotes the development of higher-order thinking and self-directed learning abilities. Interview and satisfaction data further confirm the model’s positive impact on stimulating learning motivation and improving learning efficiency.

Although general large language models have demonstrated tremendous potential in empowering educational practices, there remains a long way to go in realizing this goal. This study still has certain limitations, including a relatively small sample size, reliance on the quality of the knowledge graph, and the current inability of the question-answering tasks to cover more complex knowledge structures. Future research could further validate the generalizability of the KG-CQ model using larger samples, interdisciplinary knowledge graphs, and diverse application scenarios. Moreover, integrating mechanisms such as multimodal input and personalized recommendation could further enhance the model’s support for complex reasoning and semantic understanding, thereby continuously exploring feasible pathways for the deep integration of artificial intelligence technologies into education and for promoting self-directed learning and personalized development among students.

Author Contributions

Conceptualization, Z.Z. and G.W.; methodology, G.W.; software, G.W.; validation, Z.Z., G.W. and S.Q.; formal analysis, G.W. and S.Q.; investigation, G.W. and S.Q.; resources, Z.Z.; data curation, G.W.; writing—original draft preparation, G.W.; writing—review and editing, Z.Z., G.W. and S.Q.; visualization, Z.Z., G.W. and S.Q.; supervision, Z.Z.; project administration, Z.Z., G.W. and S.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China [62277018; 62237001] and the Project of Teaching Quality and Teaching Reform in Undergraduate Universities of Guangdong Province: Research and Practice on a Multi-Agent Empowered “Cross-Innovation Integration” Talent Training Model [2024-602].

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of South China Normal University (SCNU-SITE-2025-202506001, 7 May 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data is not available, as the individuals who completed the test questions and participated in the interviews agreed to provide their responses only under the condition that the information would be kept strictly confidential.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Abu Bakar, M. A., Ab Ghani, A. T., & Abdullah, M. L. (2024). An intelligent mathematics problem-solving tutoring system framework: A conceptual of merging of fuzzy neural networks and neuroscience mechanistic. International Journal of Online & Biomedical Engineering, 20(5), 44–65.

- Baidoo-Anu, D., & Ansah, L. O. (2023). Education in the era of generative artificial intelligence (AI): Understanding the potential benefits of ChatGPT in promoting teaching and learning. Journal of AI, 7(1), 52–62.

- Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021, March 3–10). On the dangers of stochastic parrots: Can language models be too big? 2021 ACM Conference on Fairness, Accountability, and Transparency (pp. 610–623), Toronto, ON, Canada.

- Cen, Z., Zheng, L., & Zhan, Z. (2025, March 14–16). Design and performance validation of a computational thinking gamified intelligent tutoring system focusing on thinking process. 2025 14th International Conference on Educational and Information Technology (ICEIT) (pp. 181–187), Guangzhou, China.

- Cobern, W. W. (1993). Constructivism. Journal of Educational and Psychological Consultation, 4(1), 105–112.

- Cobern, W. W. (2012). Contextual constructivism: The impact of culture on the learning and teaching of science. In The practice of constructivism in science education (pp. 51–69). Routledge.

- Hu, S., & Wang, X. (2024, August). Foke: A personalized and explainable education framework integrating foundation models, knowledge graphs, and prompt engineering. In China national conference on big data and social computing (pp. 399–411). Springer Nature Singapore.

- Kara, A., Ergulec, F., & Eren, E. (2024). The mediating role of self-regulated online learning behaviors: Exploring the impact of personality traits on student engagement. Education and Information Technologies, 29(17), 23517–23546.

- Kharroubi, S., & ElMediouni, A. (2024). Conceptual review: Cultivating learner autonomy through self-directed learning & self-regulated learning: A socio-constructivist exploration. International Journal of Language and Literary Studies, 6(2), 276–296.

- Kim, M., & Adlof, L. (2024). Adapting to the future: ChatGPT as a means for supporting constructivist learning environments. TechTrends, 68(1), 37–46.

- Lin, X. (2024). Exploring the role of ChatGPT as a facilitator for motivating self-directed learning among adult learners. Adult Learning, 35(3), 156–166.

- Lu, Y., Zhou, Y., Li, J., Wang, Y., Liu, X., He, D., Liu, F., & Zhang, M. (2025, February 25–27). Knowledge editing with dynamic knowledge graphs for multi-hop question answering. AAAI Conference on Artificial Intelligence (Vol. 39, No. 23, pp. 24741–24749), Philadelphia, PA, USA.

- Ma, X., Zhu, Q., Zhou, Y., & Li, X. (2020, February 7–12). Improving question generation with sentence-level semantic matching and answer position inferring. AAAI Conference on Artificial Intelligence (Vol. 34, No. 05, pp. 8464–8471), New York, NY, USA.

- Ng, D. T. K., Tan, C. W., & Leung, J. K. L. (2024). Empowering student self-regulated learning and science education through ChatGPT: A pioneering pilot study. British Journal of Educational Technology, 55(4), 1328–1353.

- Noy, N. F., & McGuinness, D. L. (2001). Knowledge Systems Laboratory Stanford University. Available online: http://www.ksl.stanford.edu/people/dlm/papers/ontology-tutorial-noy-mcguinness-abstract.html (accessed on 12 May 2025).

- Shafik, W. (2023). Introduction to chatgpt. In Advanced applications of generative AI and natural language processing models (pp. 1–25). IGI Global Scientific Publishing.

- Topsakal, O., & Akinci, T. C. (2023, July 10–12). Creating large language model applications utilizing langchain: A primer on developing llm apps fast. International Conference on Applied Engineering and Natural Sciences (Vol. 1, No. 1, pp. 1050–1056), Konya, Turkey.

- Wang, A., Pruksachatkun, Y., Nangia, N., Singh, A., Michael, J., Hill, F., Levy, O., & Bowman, S. R. (2019, December 8–14). SuperGLUE: A stickier benchmark for general-purpose language understanding systems. 33rd International Conference on Neural Information Processing Systems (pp. 3266–3280), Vancouver, BC, Canada.

- Yang, H., Kim, H., Lee, J. H., & Shin, D. (2022). Implementation of an AI chatbot as an English conversation partner in EFL speaking classes. ReCALL, 34(3), 327–343.

- Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education–where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 39.

- Zhong, C., Ye, F., Wang, Z., Jigeer, A., & Zhan, Z. (2025, March 14–16). Interdisciplinary-QG: An LLM-based framework for generating high-quality interdisciplinary test questions with knowledge graphs and chain-of-thought reasoning. 2025 14th International Conference on Educational and Information Technology (ICEIT) (pp. 68–78), Guangzhou, China.

- Zhong, W., Ye, F., Zhong, C., Cai, R., & Zhan, Z. (2025, April 18–20). InnoChat: A heuristic teaching large model for innovation thinking training based on logical chains and prompt tuning. 7th International Conference on Computer Science and Technologies in Education (pp. 68–82), Wuhan, China.

- Zhong, X., & Zhan, Z. (2024). An intelligent tutoring system for programming education based on informative tutoring feedback: System development, algorithm design, and empirical study. Interactive Technology and Smart Education, 22(1), 3–24.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).