Abstract

Artificial intelligence (AI) holds considerable promise to transform education, from personalizing learning to enhancing teaching efficiency, yet it simultaneously introduces significant concerns regarding ethical implications and responsible implementation. This exploratory survey investigated the perceptions of 376 teachers, university students, and future educators from the University of Salerno area concerning AI integration in education. Data were collected via a comprehensive digital questionnaire, divided into sections on personal data, AI’s perceived impact, its usefulness, and specific applications in education. Descriptive and inferential statistical analyses, including mean, mode, standard deviation, and 95% confidence intervals, were applied to the Likert scale responses. Results indicated a general openness to AI as a supportive tool for personalized learning and efficiency. However, significant reservations emerged regarding AI’s capacity to replace the human role. For instance, 69% of participants disagreed that AI tutors could match human feedback efficiency, and strong opposition was found against AI replacing textbooks (81% disagreement) or face-to-face lessons (87% disagreement). Conversely, there was an overwhelming consensus on the necessity of careful and conscious AI use (98% agreement). Participants also exhibited skepticism regarding AI’s utility for younger learners (e.g., 80% disagreement for ages 0–6), while largely agreeing on its benefit for adult learning. Strong support was observed for AI’s role in providing simulations and virtual labs (89% agreement) and developing interactive educational content (94% agreement). This study underscores a positive inclination towards AI as an enhancement tool, balanced by a strong insistence on preserving human interaction in education, highlighting the need for thoughtful integration and training.

1. Introduction

Artificial intelligence (AI) is broadly seen by future educators as a significant opportunity to transform education, although they simultaneously recognize the inherent challenges and limitations associated with its implementation. The potential for AI in the educational sector is vast and continuously expanding, with its benefits increasingly supported by scientific evidence in recent years.

Among the numerous potential benefits of AI in education are the following:

- The capability to design personalized learning pathways, tailoring educational content to individual student needs and providing real-time feedback (Tapalova et al., 2022; Chen et al., 2020);

- The improvement of teaching methodologies through the deployment of intelligent tutoring systems and the development of automated assessments, which collectively enhance the efficiency and accessibility of educational interventions (Zadorina et al., 2024; Kamalov et al., 2023; Chen et al., 2020);

- The provision of support in managing administrative practices and processes, thereby freeing up educators’ time and allowing them to focus more on core teaching responsibilities (Zadorina et al., 2024; Chen et al., 2020).

Despite the growing awareness of the advantages that AI can offer, there is also a critical understanding that AI cannot fundamentally replace the human role of teachers, as its intelligence is inherently distinct from human intelligence (Cope et al., 2020). From an ethical and social perspective, the implementation of AI introduces additional concerns, such as data privacy and equity in access to technologies (Kamalov et al., 2023; Tapalova et al., 2022). Consequently, it is imperative to implement robust security measures to prevent the misuse of AI technologies within educational settings (Kamalov et al., 2023). These concerns highlight the critical need for established ethical frameworks, such as those proposed by UNESCO’s guidance on AI in education (UNESCO, 2023), which emphasize principles of fairness, transparency, and accountability to ensure the responsible development and deployment of AI in educational settings. UNESCO’s AI competency frameworks aim to help students and educators to understand the intricacies of AI, focusing on a human-centered approach to promote responsible AI usage and integrate AI techniques into educational curricula. This initiative raises critical questions for education systems, including how to adapt these frameworks to unique cultural contexts, provide support for educators, help students to navigate ethical dilemmas, and promote ethical AI design and usage.

While there is generally a positive outlook regarding AI’s potential to enhance educational processes, there are also significant concerns and notable gaps in the existing literature that warrant addressing. Teachers, despite largely holding a favorable view of AI in education and acknowledging its potential to reduce workloads and foster professional development, frequently express concerns regarding ethical and privacy issues. They emphasize the necessity of a balanced approach that maximizes benefits while rigorously protecting stakeholders’ rights (Uygun, 2024; Xue & Wang, 2022; Gocen & Aydemir, 2020). Similarly, students and future educators perceive AI as a valuable tool for personalized learning, capable of offering adaptive learning paths and real-time feedback that can significantly improve learning outcomes. However, the current implementation of AI is still perceived to be in the experimental stages, which discourages carefree and confident adoption by these groups (‘Alam et al., 2024). Common challenges identified include ethical issues, the urgent need for clear legal frameworks governing AI adoption in education (Ahmad et al., 2022), and the potential for AI to fundamentally alter the roles of teachers and educational institutions (Gocen & Aydemir, 2020; ‘Alam et al., 2024; Kamalov et al., 2023). Specifically, there is a conspicuous lack of comprehensive discussion on how to effectively integrate AI into educational curricula, an aspect deemed crucial for its successful implementation (Xue & Wang, 2022).

While these studies acknowledge the “what” of AI’s potential and challenges, they often lack the “how” and “why” from a theoretical standpoint. The integration of technology into education can be conceptualized, for instance, through the Substitution, Augmentation, Modification, Redefinition (SAMR) model (Hamilton et al., 2016), which offers educators a practical framework organized into four stages of increasingly innovative technology use:

- At the Substitution stage, technology directly replaces traditional tools without significant functional change—for example, using a word processor instead of paper and pencil or Nearpod replacing physical handouts with digital versions;

- The Augmentation stage involves technology enhancing the learning process beyond traditional methods, adding value, such as a word processor with spelling and grammar checks or Nearpod providing interactive activities and real-time feedback through quizzes and polls;

- In the Modification stage, technology significantly redesigns learning tasks, transforming the experience—for example, students collaborating on shared documents with multimedia elements or Nearpod facilitating collaborative activities and dynamic discussions;

- The highest level, Redefinition, sees technology enabling new learning experiences that were previously inconceivable, completely transforming the task. An example is creating multimedia presentations with interactive elements or Nearpod’s Virtual Reality (VR) Field Trips and 3D models, which offer immersive, hands-on learning.

By progressing through these stages, tools like Nearpod can enhance student learning and create transformative educational experiences.

Similarly, understanding the factors influencing the adoption and use of AI tools necessitates theoretical frameworks such as the Unified Theory of Acceptance and Use of Technology (UTAUT; Venkatesh et al., 2003). UTAUT explains technology acceptance through four constructs: performance expectancy, effort expectancy, social influence, and facilitating conditions. Proposed by Venkatesh et al. (2003), it is a comprehensive model that integrates key constructs from multiple parent theories, including the Technology Acceptance Model (TAM), to provide a holistic understanding of technology acceptance. UTAUT predicts the actual use of technology from behavioral intention, which in turn depends on these four constructs, moderated by factors such as age, gender, experience, and voluntariness of use:

- Performance expectancy refers to the degree to which an individual believes that using the system will help them to attain gains in job performance;

- Effort expectancy is defined as the degree of ease associated with the use of the system;

- Social influence is the degree to which an individual perceives that important others believe that they should use the new system;

- Facilitating conditions refers to the belief that organizational and technical infrastructure exists to support the system’s use.

UTAUT has shown significant predictive power, accounting for 70 percent of the variance in use intention, making it a robust framework for understanding technology adoption. Its extended version, UTAUT2 (Venkatesh et al., 2012), further enhances this by incorporating hedonic motivation, price value, and habit, particularly for consumer technology adoption.

The existing literature, while recognizing the growing interest in AI in education and touching upon its potential benefits and associated concerns, demonstrates significant gaps. More specifically, there is a lack of a comprehensive and comparative analysis of the perceptions held by different key stakeholders within a specific educational context, such as that of the University of Salerno. Current research frequently presents a polarized view of AI adoption and lacks a nuanced understanding of how teachers, university students, and future educators perceive the potential benefits and risks across diverse educational applications, including individual learning, support for frontal teaching, and assessment. Furthermore, there is limited exploration of the specific concerns and preferences of these diverse groups regarding the integration of AI tools in education, particularly concerning ethical implications, the evolving role of educators, and the practical aspects of curriculum integration. By examining these perceptions through the lens of technology acceptance (UTAUT) and integration (SAMR) theories, this study aims to provide a more robust understanding of the practical and theoretical implications of AI in education.

To address these identified gaps, the present research seeks to investigate the following research question:

- How do teachers, university students, and future educators within the specific territorial context of the University of Salerno perceive the potential benefits and risks of AI in various educational contexts, and are there significant differences in their opinions regarding the integration and application of AI in education?

This study, guided by the research question, therefore aims to provide a more detailed and balanced understanding of these diverse perspectives. By doing so, it intends to offer valuable insights for the conscious and responsible integration of AI into the future of education within this specific setting.

In light of the posed research question, the following study hypotheses can be outlined.

Hypothesis 1.

Participants from the educational world (teachers, university students, and future educators) in the territorial context of the University of Salerno will generally exhibit openness and a positive perception towards the potential of artificial intelligence as a support tool in the field of education.

Hypothesis 2.

Participants will largely agree on the potential of AI in personalizing learning and increasing teaching efficiency, but will be more skeptical about its ability to replace human interaction.

Hypothesis 3.

A strong consensus will emerge among participants regarding the need for a cautious, conscious, and responsible approach to the integration of AI in education, underlining the importance of knowledge and training in this area.

2. Materials and Methods

2.1. The Research Methodology

The adopted research methodology was structured in a systematic manner to ensure reliable and valid results. It began with the design of a comprehensive questionnaire, which adhered to established principles of clarity and brevity (Krosnick & Presser, 2010). The questions were meticulously crafted to minimize ambiguity and ensure inclusivity, reflecting best practices in educational research design (Cohen et al., 2017).

Before the questionnaire was fully administered, it was pilot-tested with a small group of participants (university students and future educators), selected on a voluntary basis from among those most easily accessible to the author (students participating in his “Evaluation of e-Learning” course at the University of Salerno). The purpose of this pre-test was to identify any ambiguities in the questions, verify the clarity of the language, and ensure that the response options (4-point Likert scale) were clearly understood and that the questionnaire was not excessively long. Thirteen participants were involved. Their observations were collected in a focus group, in which each participant provided specific suggestions on the questions. These observations were used to improve the wording of the questions and to eliminate some redundant items.

After this pilot phase, the questionnaire was submitted to two more experienced colleagues (a full professor and an associate professor of experimental pedagogy) to assess the instrument’s content validity, ensuring that the questions were relevant to the research objectives and that their wording was impartial and non-biased. The experts’ recommendations and suggested modifications were incorporated into the final questionnaire, thus contributing to its methodological robustness.

The questionnaire was then translated and distributed digitally using Google Forms, an efficient tool for anonymous data collection and real-time analysis (Anderson & Shattuck, 2012). Participants included a diverse group of teachers, students, and future educators from the University of Salerno area, representing a range of educational and professional contexts. The sampling approach was guided by convenience sampling principles (Etikan et al., 2016), allowing access to varied perspectives while recognizing potential limitations in generalizability. Upon collection, data analysis employed both descriptive and inferential statistical techniques to derive meaningful insights into participants’ opinions. The analysis was aimed at identifying trends and patterns, providing a robust foundation for drawing conclusions. The methodology also incorporated ethical considerations, ensuring confidentiality and voluntary participation. Participants were informed of the study’s objectives and were required to give consent for their data to be used exclusively for research purposes.

This comprehensive approach, combining principles of inclusivity, digital efficiency, and ethical rigor, underscores the validity of the findings while demonstrating a commitment to methodological excellence.

2.2. The Questionnaire

The questionnaire was designed following published guidelines (Krosnick & Presser, 2010). It is divided into four sections. Section A (Table A1 in Appendix A) collects personal data about the participants. Section B (Table A2 in Appendix A) measures the participants’ perceptions of the impact of AI. Section C (Table A3 in Appendix A) measures their perceptions of the usefulness of AI. Finally, Section D (Table A4 in Appendix A) measures their perceptions of the use of AI in education.

In Sections B, C, and D, the participants’ agreement is recorded on a four-point Likert scale. The first level (Yes) and the second (More Yes than No) indicate positive perceptions. In contrast, the third level (More No than Yes) and the last (No) indicate negative perceptions. The four-point Likert scale was intentionally chosen without a neutral midpoint to encourage participants to express a clear directional stance regarding their perceptions of AI, thereby eliciting more definitive opinions. This methodological decision aimed to reduce “fence sitting” and enhance the clarity of the collected data (Brown & Maydeu-Olivares, 2011).
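To make the link between the verbal response levels and the figures reported later more concrete, the following minimal sketch shows one way the four levels could be coded numerically and summarized as a percentage of agreement. The numeric coding (4 for “Yes” down to 1 for “No”) is an assumption that appears consistent with the means and modes reported in the Results, and the function names and sample responses are purely illustrative.

```python
# Assumed coding (not stated explicitly in the text): 4 = "Yes" ... 1 = "No".
LIKERT_CODES = {
    "Yes": 4,
    "More Yes than No": 3,
    "More No than Yes": 2,
    "No": 1,
}


def encode(responses):
    """Map verbal Likert labels to their assumed numeric codes."""
    return [LIKERT_CODES[label] for label in responses]


def agreement_share(codes):
    """Fraction of responses in the two positive levels (codes 3 and 4)."""
    positive = sum(1 for code in codes if code >= 3)
    return positive / len(codes)


# Hypothetical responses to a single item, for illustration only.
sample = ["Yes", "Yes", "More Yes than No", "More No than Yes", "No"]
codes = encode(sample)
print(codes)                   # [4, 4, 3, 2, 1]
print(agreement_share(codes))  # 0.6
```

Under this coding, the “agreement” percentages quoted for an item correspond to the combined share of the two positive levels, and the “disagreement” percentages to the remainder.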

2.3. The Tools for the Survey and Data Analysis

The questionnaire was translated and administered in digital form through the use of Google Forms. This platform has the advantage of allowing for completely anonymous data collection and of connecting the tracked responses to a spreadsheet (Google Sheet), which then facilitated the data analysis and processing operations.

The collected data were processed by using Microsoft Excel and its “Data Analysis” add-on. The analysis was divided into two main phases to provide a comprehensive understanding of the participants’ perceptions.

As measures of central tendency for each questionnaire item (in Sections B, C, and D), the mean and mode were calculated to identify the central tendency of opinions and the most common responses.

The standard deviation was used to quantify the dispersion of responses around the mean, providing an indication of the variability or homogeneity of opinions. A higher standard deviation (e.g., as noted for B10) indicates the greater divergence of opinions among participants.

The 95% confidence intervals reported in the Results indicate the range within which the true population mean is likely to lie. For all items, the half-width of the 95% confidence interval (the value that Excel reports as the “confidence level”) is approximately 0.1, which indicates that the item means are estimated with good precision and suggests a certain level of homogeneity in the participants’ responses.
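The descriptive measures described above can be sketched in a few lines of code. The example below is an illustration rather than the procedure actually run in Excel: it uses a normal approximation for the 95% interval (Excel’s tool may rely on Student’s t distribution, which gives nearly the same value for a sample of this size), and the function names are hypothetical.

```python
import math
import statistics


def half_width_from_summary(sd, n, confidence=0.95):
    """Half-width of a confidence interval for a mean (normal approximation)."""
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return z * sd / math.sqrt(n)


def describe(codes, confidence=0.95):
    """Mean, mode, sample standard deviation, and CI half-width for one item."""
    sd = statistics.stdev(codes)  # sample standard deviation (n - 1 denominator)
    return {
        "mean": statistics.mean(codes),
        "mode": statistics.mode(codes),
        "sd": sd,
        "ci_half_width": half_width_from_summary(sd, len(codes), confidence),
    }


# Hypothetical coded responses (1-4) for one item, for illustration only.
print(describe([4, 4, 3, 2, 1]))

# With a standard deviation near 1.0 and the 376 respondents of this study,
# the half-width comes out at roughly 0.1.
print(round(half_width_from_summary(1.0, 376), 3))  # 0.101
```

This also shows why a half-width of about 0.1 is expected here: on a four-point scale the standard deviation can hardly exceed values around 1, so with 376 respondents the interval is necessarily narrow.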

2.4. The People

The research described here is an exploratory study, aimed at investigating the perceptions of and opinions on AI in a heterogeneous sample of subjects. To this end, the goal was to gather the perspectives of individuals from the educational field regarding the use of artificial intelligence. Therefore, an effort was made to involve as many participants as possible with diverse backgrounds and experiences, united by an interest in AI. It is important to note that the sample was selected through convenience sampling, taking advantage of existing professional relationships with the participants (Etikan et al., 2016). While this method has limitations in terms of the generalizability of the results and is not associated with strict scientific rigor, it is suitable for exploratory studies that aim to capture a variety of perspectives on the phenomenon of AI in education. The collected opinions can thus be considered as representing some of the many points of view on this topic, and caution should be exercised when extending these findings to a broader population beyond the specific territorial context of the University of Salerno. Specifically, the sample was composed of students of the University of Salerno, high school teachers, school technical and administrative staff, and participants in the specialization courses held at the University of Salerno. The students and the post-graduate participants from the University of Salerno were selected from among those attending courses at the Department of Humanities, Philosophy, and Education. High school teachers and technical and administrative staff were selected from among those who had taken part in training courses on AI held in institutes in the provinces of Avellino and Salerno.

Participation in the research was voluntary, after presenting the objectives of the study and guaranteeing the anonymity and confidentiality of the data collected.

Although convenience sampling has limitations in terms of the generalizability of the results, it is suitable for exploratory studies that aim to capture a variety of perspectives on a given phenomenon (Denzin & Lincoln, 2018). The diversification of participants’ backgrounds helps to mitigate potential biases resulting from sample selection (Creswell & Poth, 2018). Data were collected through the questionnaire, with items seeking to encourage the free and articulated expression of participants’ opinions.

3. Results

The results are organized around the structure of the questionnaire. Section 3.1 presents general data about the participants; Section 3.2 presents data on the impact of AI; Section 3.3 presents data about the usefulness of AI; and Section 3.4 presents data about the use of AI in education.

Beyond the general descriptive statistics presented in Section 3.1, a more in-depth inferential analysis was conducted to identify significant differences in perceptions among the diverse participant groups (teachers, university students, and future educators), addressing the core objective of this study. This comparative approach allows for a nuanced understanding of how professional roles, prior experiences, and demographic factors might influence opinions on AI’s impact, usefulness, and application in education. While the initial descriptive data highlight overall trends, comparing responses across these distinct cohorts and exploring potential correlations with variables such as age and educational qualification provides critical insights into the underlying reasons for the observed agreements or disagreements. The following sub-sections will therefore integrate these comparative findings where relevant, offering more analytical depth to the presented results.

3.1. General Data About the Participants

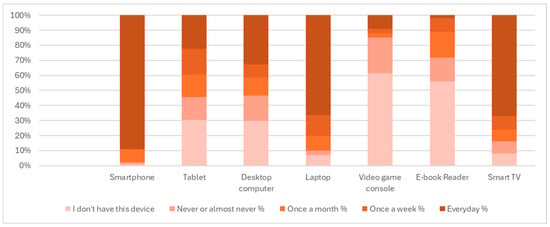

Section A of the administered questionnaire aimed at collecting personal data about the participants. A total of 376 people completed the questionnaire. As shown in Table 1, most of them (78%) are women. Table 2 shows how their age is distributed among the defined ranges: 16% between 18 and 22, 13% between 23 and 27, 15% between 28 and 32, 14% between 33 and 37, 21% between 38 and 45, 13% between 46 and 55, and 7% older than 56. As shown in Table 3, their educational level is high: 43% of them have a master’s degree and 37% a high school diploma. As shown in Table 4, 21% of the participants are permanent teachers, 3% are employees in public administration, 6% are technicians or freelancers, 6% are private company employees, 22% are internship students, 29% are university students, and the remaining 13% are unemployed or other. As shown in Figure 1, some individuals still do not have a smartphone (1%) and others have one but do not use it every day (about 10%). The rest (89%) use a smartphone every day. As shown in Figure 2, for work or study activities, the most used device is a laptop (89%), followed by a smartphone (61%).

Table 1.

Gender of participants.

Table 2.

Distribution of participants’ ages.

Table 3.

Qualifications of participants.

Table 4.

Main occupations of participants.

Figure 1.

Percentages regarding (A5) How much do you use the following devices?

Figure 2.

Percentages regarding (A6) What devices do you use to study or work?

3.2. The Impact of AI

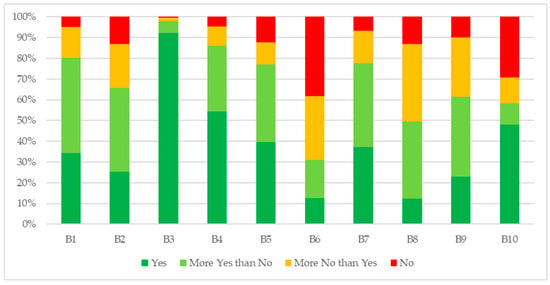

Section B of the administered questionnaire aimed at measuring the perceptions of participants about the impact of AI. All answers are given in Table 5, Figure 3, and Table 6.

Table 5.

The agreement of the participants about the impact of AI.

Figure 3.

Percentages related to the agreement of the participants about the impact of AI.

Table 6.

The agreement of the participants about the impact of AI as per their role.

Overall, the findings in this section indicate a generally positive perception of AI, strongly supporting Hypothesis 1, which posited an openness to AI as a support tool. For instance, the perceptions of AI’s beneficial impact on teaching (B2, Mean = 2.8) and of its utility in improving student performance through adaptive online environments (B4, Mean = 3.4) align with this positive predisposition. Participants also agreed almost unanimously that AI requires careful and conscious use (question B3). This overwhelming consensus, with 98% agreement, directly supports Hypothesis 3, which anticipated a strong consensus on the need for a cautious, conscious, and responsible approach to the integration of AI in education. The results are also consistent with a key aspect of Hypothesis 2, which suggested that participants would largely agree on the potential of AI in personalizing learning and increasing teaching efficiency. Specifically, most participants agreed that AI improves student performance (question B4, Mean = 3.4) and is useful to recommend content and facilitate learning (question B5, Mean = 3.0).

The other aspect of Hypothesis 2, skepticism towards AI replacing human interaction, is also strongly supported by the data. Human feedback is widely perceived as still more effective than AI-generated feedback (question B6), with 69% of participants disagreeing that AI tutors could match the efficiency of human feedback. Additionally, perceptions of the importance of humans compared with computers in teaching (question B10) were polarized, with 48% answering “Yes” and 10% answering “More Yes than No”, highlighting a strong emphasis on the human role.

As detailed in Table 6, further analysis of the responses, particularly for statements revealing varied opinions, reveals distinct perspectives across participant groups. For instance, while a strong consensus emerged on the necessity of careful and conscious AI use (B3), indicated by a mean of 3.9 and a mode of 4.0, a more granular examination could reveal how different stakeholder groups (e.g., permanent teachers versus university students) weigh the ethical implications and the need for robust security measures, given that such concerns are highlighted in the literature.

Regarding the perceived efficiency of AI tutors compared to human feedback (B6), where 69% of participants disagreed that AI tutors could match the efficiency of human feedback, a comparative analysis revealed that teachers, who are directly responsible for providing feedback, and educators both exhibited statistically significantly weaker skepticism (Mean = 2.2 and 2.5, respectively; St. Dev. = 1.0) than university students (Mean = 1.2, St. Dev. = 0.5). This reinforces the widespread opinion that human feedback remains more effective. Similarly, opinions on the importance of humans versus computers in teaching (B10) were polarized. Both teachers and educators (Mean = 3.4 and 3.3, respectively; St. Dev. = 1.1 and 1.0) strongly agreed on human importance, in contrast to students (Mean = 1.3, St. Dev. = 0.5), highlighting a potential protective stance towards the human role in education. Such detailed comparisons directly address the study’s aim of identifying differences in opinions among key educational stakeholders.
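As an illustration of how a difference between two group means of this kind can be examined from summary statistics alone, the sketch below computes a Welch t statistic. This is an assumption made for illustration: the text does not state which inferential test was used, and a rank-based test might be preferred for ordinal Likert data. The group sizes are hypothetical, loosely derived from the occupational percentages in Section 3.1, and are not reported per group in the text.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic and approximate degrees of freedom from summaries."""
    var_a = sd_a ** 2 / n_a  # squared standard error of group A's mean
    var_b = sd_b ** 2 / n_b  # squared standard error of group B's mean
    t_stat = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    dof = (var_a + var_b) ** 2 / (
        var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1)
    )
    return t_stat, dof


# Means and standard deviations for B6 as reported above;
# the group sizes (79 teachers, 109 students) are HYPOTHETICAL.
t, df = welch_t(2.2, 1.0, 79, 1.2, 0.5, 109)
print(round(t, 2), round(df, 1))
```

A large absolute value of the statistic relative to its degrees of freedom would be in line with the significant difference between teachers and students described above, although the exact figure depends on the assumed group sizes.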

3.3. The Usefulness of AI

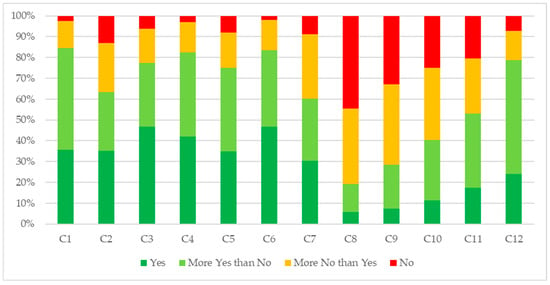

Section C of the administered questionnaire aimed at measuring the perceptions of participants about the usefulness of AI. All answers are given in Figure 4, Table 7, and Table 8.

Figure 4.

Percentages related to the agreement of the participants about the usefulness of AI.

Table 7.

The agreement of the participants about the usefulness of AI.

Table 8.

The agreement of the participants about the usefulness of AI as per their role.

The results from this section further support Hypothesis 1, demonstrating a broad consensus on AI’s usefulness for improving learning in formal contexts (C1, Mean = 3.2), for acquiring new skills (C6, Mean = 3.3), and particularly for adult learning (C12, Mean = 3.0). A significant portion of the participants agree on the utility of AI for homework and individual study (question C2), but there is a larger percentage expressing doubt.

A large percentage of participants see AI as useful in transforming in-person courses into distance ones (question C3). Most participants agree on the utility of AI as additional support for in-person learning (question C4). Again, many participants believe that AI can support distance learning (question C5).

There is uncertainty about AI’s effectiveness in workplace learning (question C7).

The data regarding age groups further reinforce Hypothesis 2’s emphasis on skepticism towards AI for younger learners, with 80% disagreement for ages 0–6 (C8) and persistent doubt for ages 7–10 (C9) and 11–14 (C10). This contrasts with the strong agreement on AI’s utility for adult learning (C12, Mean = 3.0).

As detailed in Table 8, a closer look at the perceived usefulness of AI across learning contexts and age groups reveals differences among participant groups. Overall skepticism regarding AI’s utility for younger learners is strong (ages 0–6 in C8 and 7–10 in C9), with 80% disagreement for ages 0–6, whereas agreement increases for adult learning (C12). A cross-group analysis showed that doubt about AI’s effectiveness for children was present in every group and differed significantly between groups (e.g., for teachers, Mean = 2.1 and St. Dev. = 0.9; for educators, Mean = 2.0 and St. Dev. = 0.9; for students, Mean = 1.3 and St. Dev. = 0.7). This finding underscores a critical understanding that AI may not fundamentally replace the human role, especially for younger learners, who require more nuanced social and emotional interaction.

Furthermore, while the general utility of AI in improving learning in formal contexts (C1) and acquiring new skills (C6) is widely acknowledged, investigating whether teachers and students held similar or differing views on AI’s ability to assist with homework (C2) or transform course delivery (C3, C4, C5) showed nuances. For instance, university students expressed statistically significantly higher agreement on AI’s usefulness for individual study and homework (on C2, Mean = 3.1 and St. Dev. = 1.2) compared to both teachers (Mean = 2.7 and St. Dev. = 1.0) and educators (Mean = 2.8 and St. Dev. = 0.9), possibly reflecting their direct experience with personalized learning paths and real-time feedback systems.

3.4. The Use of AI in Education

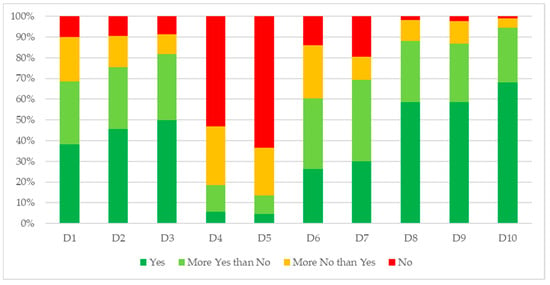

Finally, Section D of the administered questionnaire aimed at measuring the perceptions of participants about the use of AI in education. All answers are given in Figure 5, Table 9, and Table 10.

Figure 5.

Percentages related to the agreement of the participants about the use of AI in education.

Table 9.

The agreement of the participants about the use of AI in education.

Table 10.

The agreement of the participants about the use of AI in education as per their role.

The data in this section strongly reinforce Hypothesis 1, revealing significant participant endorsement for AI as an enhancing tool for individual study (D1, 69% agreement), supporting face-to-face lessons (D2, Mean = 3.1), and improving overall learning experiences (D3, 82% agreement), while only 19% of participants believe that AI should replace textbooks, reflecting a strong preference for traditional learning materials over AI substitution (question D4). Moreover, it is especially valued for developing interactive content (D10, 94% agreement) and providing simulations and virtual labs (D8, 89% agreement).

A critical aspect of Hypothesis 2, concerning skepticism towards AI replacing human interaction, is powerfully affirmed by the strong opposition to AI replacing textbooks (D4), with 81% disagreement, and the even stronger rejection of AI replacing face-to-face lessons (D5), with 87% disagreement.

Opinions are divided on AI’s role in evaluation (question D6).

A high percentage of participants are in favor of AI creating customized assessment rubrics, indicating a positive outlook on AI’s ability to personalize learning criteria (question D7). A significantly high percentage of participants support AI providing simulations and virtual labs, highlighting a strong belief in AI’s capability to offer hands-on learning experiences (question D8). Similarly, most participants endorse AI’s role in creating immersive learning environments, suggesting high confidence in AI-enhanced reality tools (question D9). Finally, an overwhelming 94% are in favor of AI developing interactive educational content, reflecting strong approval of AI’s potential to make learning engaging and dynamic (question D10).

As detailed in Table 10, further disaggregation of the data regarding the direct use of AI in education provides a richer understanding of stakeholder preferences and reservations, directly addressing this study’s core research question. The strong opposition to replacing textbooks (D4) and face-to-face lessons (D5) with AI, with 81% and 87% disagreement, respectively, was shared by all three groups (e.g., D5, for teachers, Mean = 1.6 and St. Dev. = 0.8; for educators, Mean = 1.8 and St. Dev. = 0.9; for students, Mean = 1.1 and St. Dev. = 0.3). This indicates a robust preference for preserving human interaction and traditional learning materials in the educational context, aligning with the overall finding that participants have significant reservations about AI replacing the human role.

Conversely, for areas of strong consensus, such as AI’s role in providing simulations and virtual labs (D8, 89% agreement), creating immersive environments (D9), and developing interactive educational content (D10, 94% agreement), a comparative analysis confirmed that this overwhelming support was consistent across all participant groups, with no statistically significant differences observed. This widespread endorsement suggests the collective recognition of AI’s potential to enhance learning through dynamic and engaging materials and experiences. Finally, the mixed opinions on AI’s role in developing assessment tests (e.g., D6, for teachers, Mean = 3.1 and St. Dev. = 0.8; for educators, Mean = 3.0 and St. Dev. = 0.9; for students, Mean = 1.9 and St. Dev. = 0.9) and customized rubrics (e.g., D7, for teachers, Mean = 3.2 and St. Dev. = 0.8; for educators, Mean = 3.3 and St. Dev. = 0.8; for students, Mean = 4.0 and St. Dev. = 0.0) were found to be more divided among teachers, suggesting their ongoing deliberation about the fairness and efficacy of AI in evaluation, while students showed slightly higher openness to AI-generated rubrics, possibly seeing it as a way to personalize learning criteria.

3.5. Differences Among the Groups of Participants

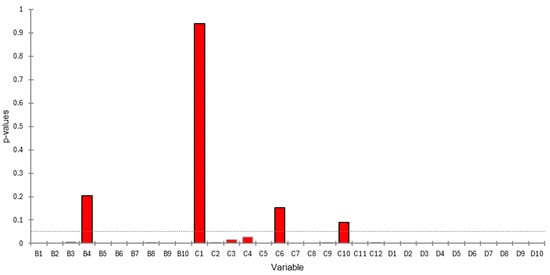

Beyond these descriptive statistics, a non-parametric approach was employed to identify statistically significant differences in the Likert-scale responses among the participant groups (teachers, university students, and future educators). In particular, a Kruskal–Wallis H-test was conducted on the questionnaire items to determine whether there were overall significant differences across the three groups. The test indicated statistically significant differences for most items (Figure 6). This analytical approach allowed for a better understanding of how the participants’ role influences perceptions regarding AI integration in education.

As shown in Figure 6, the p-values calculated by the Kruskal–Wallis test allow us to identify the questionnaire items where there is the greatest agreement between the three groups. Specifically, for item C1, a p-value greater than 0.9 indicates that the responses provided by the three groups are essentially the same. For items B4, C6, and C10, differences are present but minimal. Finally, for the other items, low p-values indicate that there are substantial differences between the points of view of the three groups involved.

Figure 6.

The p-values (Kruskal–Wallis) calculated for the three groups of participants on the questionnaire’s items.
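For readers who wish to reproduce this kind of comparison, the following is a minimal sketch of the Kruskal–Wallis H statistic for three groups of coded Likert responses. It is not the analysis script used in this study: the function name, the toy data, and the use of the chi-square approximation for the p-value (which, for three groups and therefore two degrees of freedom, reduces to p = exp(−H/2)) are assumptions made for illustration.

```python
import math
from collections import Counter


def kruskal_wallis_three_groups(a, b, c):
    """Return (H, p) for three independent samples of ordinal codes.

    H includes the standard correction for ties, which matters for
    Likert data because only a few distinct values occur.
    """
    groups = [list(a), list(b), list(c)]
    pooled = [x for g in groups for x in g]
    n = len(pooled)

    # Assign each distinct value the average of the ranks it occupies.
    counts = Counter(pooled)
    rank_of = {}
    first_rank = 1
    for value in sorted(counts):
        ties = counts[value]
        rank_of[value] = first_rank + (ties - 1) / 2
        first_rank += ties

    # Uncorrected H from the per-group rank sums.
    rank_term = sum(sum(rank_of[x] for x in g) ** 2 / len(g) for g in groups)
    h = 12.0 / (n * (n + 1)) * rank_term - 3 * (n + 1)

    # Tie correction; it is zero only when every response is identical.
    correction = 1 - sum(t**3 - t for t in counts.values()) / (n**3 - n)
    if correction == 0:
        return 0.0, 1.0
    h = max(h / correction, 0.0)  # guard against tiny negative rounding error

    # Chi-square survival function with 2 degrees of freedom (assumed approximation).
    p = math.exp(-h / 2)
    return h, p


# Hypothetical coded answers (1 = No ... 4 = Yes) to a single item.
teachers = [3, 3, 4, 2, 3, 4]
educators = [3, 2, 3, 3, 4, 3]
students = [1, 2, 1, 1, 2, 1]
h, p = kruskal_wallis_three_groups(teachers, educators, students)
print(f"H = {h:.2f}, p = {p:.4f}")
```

A p-value close to 1 corresponds to items where the three groups answered in essentially the same way, while a small p-value signals that at least one group differs. The chi-square approximation is rough for very small groups, so an exact or permutation-based p-value may be preferable in that case.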

4. Discussion

Although the opinions were collected on a Likert scale, a numerical value from 1 to 4 was assigned to each response in order to calculate the mean, mode, standard deviation, and 95% confidence interval.

The first analysis looks at the 95% confidence interval, which indicates the range of values in which the true population mean is likely to be found. A narrow confidence interval indicates a precise estimate of the mean. A wide interval indicates a less precise estimate, due to greater variability in the data or a reduced sample size (Cumming, 2014). For all items of the questionnaire, the reported half-width of the 95% confidence interval is always the same: 0.1. Such a narrow interval indicates a precise estimate of the mean and suggests some homogeneity in the participants’ responses.
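The reported value of 0.1 can be sanity-checked against the sample size. The sketch below assumes that the value is the half-width of a normal-approximation 95% interval for the mean (z = 1.96) and that all 376 participants answered every item; the helper name `ci95_margin` is hypothetical, and the standard deviations are taken from the range reported for the questionnaire items.

```python
import math


def ci95_margin(std_dev, n, z=1.96):
    """Half-width of a normal-approximation 95% confidence interval for a mean."""
    return z * std_dev / math.sqrt(n)


n = 376  # sample size stated in the abstract (assumed to apply to every item)
for sd in (0.6, 1.0, 1.3):
    margin = ci95_margin(sd, n)
    print(f"St. Dev. = {sd}: margin = {margin:.3f} (rounded: {round(margin, 1)})")
```

Under these assumptions, standard deviations between 0.6 and 1.3 all yield a margin that rounds to 0.1 at one decimal place, which is consistent with the same value being reported across items.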

The mean provides the average value of the responses, and the standard deviation indicates the dispersion of the responses around the mean (Norman, 2010).
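These descriptive measures can be computed directly from the coded responses. The following is a minimal sketch, assuming the coding No = 1, More No Than Yes = 2, More Yes Than No = 3, Yes = 4 (consistent with the high mean reported for B3, but not stated explicitly in the text), the sample standard deviation, and agreement defined as the share of responses coded 3 or 4. The `describe` helper and the toy responses are hypothetical.

```python
from statistics import mean, multimode, stdev

# Assumed numerical coding of the four response options.
LIKERT_CODES = {
    "No": 1,
    "More No Than Yes": 2,
    "More Yes Than No": 3,
    "Yes": 4,
}


def describe(responses):
    """Summarize one questionnaire item from its textual responses."""
    codes = [LIKERT_CODES[r] for r in responses]
    agree = sum(code >= 3 for code in codes)
    return {
        "mean": round(mean(codes), 1),
        "mode": multimode(codes),          # list, since ties are possible
        "st_dev": round(stdev(codes), 1),  # sample standard deviation
        "agreement_pct": round(100 * agree / len(codes)),
    }


# Toy data for a single item (not taken from the survey).
sample = (
    ["Yes"] * 5
    + ["More Yes Than No"] * 3
    + ["More No Than Yes"]
    + ["No"]
)
print(describe(sample))
```

Reporting the mode alongside the mean is useful for polarized items, where a mean near the midpoint can hide two opposing clusters of responses.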

Moving beyond descriptive statistics, the inferential analysis allowed for a deeper exploration of the research question, specifically identifying significant differences in perceptions among teachers, university students, and future educators regarding AI integration in education. This section will systematically evaluate the study’s hypotheses by integrating these group-specific findings, offering a more nuanced and analytical understanding of the results. As broadly indicated in the Conclusions, all three hypotheses were confirmed by the collected data.

Regarding Hypothesis 1, which posited a general openness and positive perception towards AI as a support tool, the study indeed found substantial acceptance and a positive predisposition, thereby strongly supporting this hypothesis.

This openness is reflected in the generally positive perception of AI’s impact on teaching (B2, Mean = 2.8), strong agreement on its potential to improve student performance through adaptive online environments (B4, Mean = 3.4), and overwhelming support for its use in developing interactive educational content (D10, 94% agreement) and providing simulations and virtual labs (D8, 89% agreement). However, this openness was not uniform across all applications or participant groups. For instance, while there was a strong consensus on the necessity of careful AI use (B3) among all participants, the degree of openness towards AI replacing human elements varied considerably by role. As observed in Table 6, university students consistently displayed lower skepticism towards AI’s efficiency in providing feedback (B6) compared to teachers and educators (students Mean = 1.2, St. Dev. = 0.5 vs. teachers Mean = 2.2, St. Dev. = 1.0 and educators Mean = 2.5, St. Dev. = 1.0). This suggests that students may perceive AI as a more direct and efficient tool for their individual learning processes, aligning with previous research recognizing AI as valuable for personalized learning and real-time feedback.

Hypothesis 2, predicting agreement on AI’s potential for personalized learning and efficiency but skepticism towards replacing human interaction, was strongly supported, with nuanced group differences. The agreement on AI’s potential for personalized learning is evidenced by the positive perceptions of adaptive online environments (B4, Mean = 3.4) and content recommendation systems (B5, Mean = 3.0), as well as its perceived usefulness for individual study (D1, 69% agreement) and improving learning (D3, 82% agreement). Crucially, the widespread opinion that human feedback remains more effective than AI-generated feedback (B6, 69% disagreement) was reinforced, although with statistically significant differences between teachers and educators (Mean = 2.2 and 2.5, respectively, St. Dev. = 1.0) and university students (Mean = 1.2, St. Dev. = 0.5). Similarly, the polarized opinions on the importance of humans versus computers in teaching (B10) clearly show teachers and educators holding a stronger protective stance towards the human role (teachers Mean = 3.4, St. Dev. = 1.1; educators Mean = 3.3, St. Dev. = 1.0) compared to students (Mean = 1.3, St. Dev. = 0.5). This directly aligns with the critical understanding in the literature that AI cannot fundamentally replace the human role of teachers. Furthermore, while AI’s utility for adult learning (C12) received strong consensus, a cross-group analysis, detailed in Table 8, revealed that teachers and educators expressed significantly higher levels of doubt about AI’s effectiveness for younger learners (ages 0–6 in C8 and 7–10 in C9) than students (e.g., C8: teachers Mean = 2.1, St. Dev. = 0.9 vs. students Mean = 1.3, St. Dev. = 0.7). This particular finding reinforces the idea that younger learners require more nuanced social and emotional interaction that AI may not provide.

Delving into the practical application of AI in education, as per Hypothesis 2, resistance to replacing traditional elements was evident across the board, further supporting this hypothesis, but with varying degrees among groups. For instance, the strong opposition to AI replacing textbooks (D4, 81% disagreement) and face-to-face lessons (D5, 87% disagreement) was particularly pronounced among teachers and educators compared to students. Teachers and educators exhibited statistically significantly stronger resistance to these substitutions (e.g., D5: teachers Mean = 1.6, St. Dev. = 0.8; educators Mean = 1.8, St. Dev. = 0.9 vs. students Mean = 1.1, St. Dev. = 0.3). This underscores a robust preference for preserving human interaction and traditional learning materials in the educational context, which is a consistent theme in the overall findings. Conversely, the overwhelming support for AI’s role in providing simulations and virtual labs (D8), creating immersive environments (D9), and developing interactive educational content (D10) was remarkably consistent across all participant groups, with no statistically significant differences. This widespread endorsement points to the collective recognition of AI’s potential to enhance learning through dynamic and engaging materials, aligning with the “Augmentation” or even “Redefinition” stages of technology integration as per the SAMR model.

Hypothesis 3, which anticipated a strong consensus on the need for a cautious, conscious, and responsible approach to AI integration, was unequivocally confirmed. The high agreement on the necessity of careful and conscious AI use (B3, Mean = 3.9, Mode = 4.0), with 98% agreement among participants, reflects a collective awareness of the ethical and implementation concerns raised in the literature, such as data privacy and equity. The mixed opinions on AI’s role in developing assessment tests (D6) and customized rubrics (D7) were found to be more divided among teachers and educators (e.g., D6: teachers Mean = 3.1, St. Dev. = 0.8; educators Mean = 3.0, St. Dev. = 0.9) compared to students (Mean = 1.9, St. Dev. = 0.9). This suggests ongoing deliberation by teaching professionals about the fairness and efficacy of AI in evaluation, whereas students show slightly higher openness, potentially seeing it as a way to personalize learning criteria. This aligns with the “Performance Expectancy” and “Effort Expectancy” constructs of the Unified Theory of Acceptance and Use of Technology (UTAUT), where the perceived effectiveness and ease of use influence acceptance. The emphasis on careful use also implies recognition of the need for “Facilitating Conditions”, such as adequate training and infrastructure, for successful AI adoption.

Analyzing Table 5 on the impact of artificial intelligence, several salient aspects emerge, supported by the statistical data provided.

General recognition of the positive potential of AI is evident, although with different nuances. For statement B1, the mean of 3.1 and the mode of 3.0 indicate a predominantly positive perception, with most participants agreeing or agreeing more than not. Similarly, for B2, the mean of 2.8 and the mode of 3.0 suggest a tendency towards approval, although with a higher standard deviation (1.0) than B1 (0.8), indicating greater dispersion in opinions. A strong consensus is recorded on the importance of the conscious and responsible use of AI (B3), as evidenced by the very high mean of 3.9, the mode of 4.0, and the very low standard deviation of 0.4. The adaptability of online learning environments to the needs of users is perceived as an improving factor for student performance (B4), with a mean of 3.4 and a mode of 4.0, supported by a standard deviation of 0.8. The usefulness of content recommendation systems to personalize learning (B5) receives moderate approval, with a mean of 3.0 and a mode of 4.0, but a standard deviation of 1.0 signals significant variability in the responses. There is strong disagreement on the ability of automated tutors to match the effectiveness of human feedback (B6), with a low mean of 2.1 and a mode of 1.0, accompanied by a standard deviation of 1.0, indicating a widespread belief in the superiority of human feedback. The intention to use AI in education is conditional on its proven effectiveness (B7), with a mean of 3.1 and a mode of 3.0, suggesting evidence-based caution. The standard deviation of 0.9 indicates moderate variability. There is no widespread belief that AI improves the learning experience for students (B8), with a mean of 2.5 and a mode of 2.0, tending towards disagreement, and a standard deviation of 0.9. A slight positive trend emerges regarding the ability of AI to improve study paths (B9), with a mean of 2.7 and a mode of 3.0, as well as a standard deviation of 0.9. 
Finally, opinions on the importance of humans versus computers in teaching (B10) appear polarized, despite a mean of 2.8, suggesting a slight bias towards human importance, and a mode of 4.0. The high standard deviation of 1.3 highlights the strong disagreement among participants on this aspect.

In summary, the data in Table 5 reveal an openness to AI as a complementary tool in education, but with strong reservations about replacing the human role, especially with regard to feedback and the overall learning experience. The high standard deviation found in some statements highlights the diversity of opinions among participants.

These results highlight a generally positive perception of AI’s potential in education, with a strong emphasis on the need for careful and conscious use (Ghosh, 2025). However, there is still skepticism about the effectiveness of AI tutors compared to human tutors (Henkel et al., 2025) and the overall improvement in the learning experience (Gökçearslan et al., 2024).

Analyzing Table 7 on the usefulness of artificial intelligence, further salient aspects emerge, supported by the statistical data provided.

A general consensus on the usefulness of AI systems to improve learning in formal contexts (C1) is indicated by the mean of 3.2 and the mode of 3.0, with a standard deviation of 0.7, suggesting the moderate dispersion of opinions, which are generally positive. The usefulness of AI for homework or individual study (C2) presents a less defined picture, with a mean of 2.9 and a mode of 4.0 but a high standard deviation of 1.0, which indicates significant variability in perceptions. Strong approval is found regarding the usefulness of AI in assisting in the transformation of in-person courses into distance courses (C3), as highlighted by the mean of 3.2 and the mode of 4.0, with a standard deviation of 0.9, suggesting a good degree of agreement. The perception of AI as an additional support for in-person learning (C4) is predominantly positive, with a mean of 3.2 and a mode of 4.0, supported by a standard deviation of 0.8. Moreover, regarding additional support for distance learning (C5), participants show a generally favorable opinion, with a mean of 3.0 and a mode of 3.0, as well as a standard deviation of 0.9. A high degree of agreement emerges on the usefulness of AI in acquiring new skills (C6), with a mean of 3.3 and a mode of 4.0, accompanied by a standard deviation of 0.8. The effectiveness of AI in improving training in the workplace (C7) is perceived in a more uncertain way, with a mean of 2.8 and a mode of 2.0, as well as a standard deviation of 1.0, indicating considerable dispersion in the responses. Strong skepticism prevails regarding the usefulness of AI for learning in children aged 0–6 years (C8), with a low mean of 1.8 and a mode of 1.0, as well as a standard deviation of 0.9, indicating widespread disagreement. Similar doubts are expressed for the learning of students aged 7–10 years (C9), with a mean of 2.0 and a mode of 2.0, as well as a standard deviation of 0.9, indicating a general lack of confidence in the usefulness of AI for this age group. 
Moreover, for the age group 11–14 years (C10), opinions tend towards disagreement or uncertainty, with a mean of 2.6 and a mode of 3.0, as well as a standard deviation of 0.9. For the learning of students aged 15–20 (C11), a slight increase in the perception of usefulness is observed, with a mean of 2.5 and a mode of 3.0, as well as a standard deviation of 1.0, suggesting more mixed opinions. Finally, a strong consensus emerges on the usefulness of AI for adult learning (over 20 years) (C12), with a mean of 3.0 and a mode of 3.0, as well as a standard deviation of 0.8, indicating a predominantly positive perception.

In summary, the data in Table 7, reporting the responses to Section C of the questionnaire, show that participants tend to find AI systems more useful for older students, with increasing skepticism about their usefulness as the age of students decreases, especially for younger children. AI is generally seen as useful for formal and distance learning contexts and for the acquisition of new skills, while doubts remain about its effectiveness for workplace training (Anderson & Dron, 2011; Zawacki-Richter et al., 2019) and, in particular, for early childhood education (Papadakis et al., 2022). The findings suggest that participants perceive AI tools as more effective as the age of the learners increases. Future studies could explore cultural or demographic factors influencing these perceptions and evaluate the long-term impacts of AI integration across educational stages.

Finally, analyzing Table 9 on the use of AI in education, other salient aspects emerge, supported by the statistical data provided.

A strong consensus is expressed regarding the usefulness of AI for individual study at home (D1), with a mean of 3.0 and a mode of 4.0, indicating a predominantly positive perception. The standard deviation of 1.0 suggests moderate variability in the responses. AI is seen as a potentially useful tool to support face-to-face lessons (D2), with a mean of 3.1 and a mode of 4.0. The standard deviation of 1.0 highlights a certain degree of dispersion in opinions. The majority of participants agree that AI can improve their learning (D3), with a mean of 3.2 and a mode of 4.0. The standard deviation of 0.9 indicates moderate variability. Clear disagreement emerges regarding the replacement of textbooks with AI (D4), with a very low mean of 1.7 and a mode of 1.0. The standard deviation of 0.9 confirms the strong opposition to this idea. Marked opposition is also found towards the replacement of face-to-face lessons with AI (D5), with a mean of 1.5 and a mode of 1.0. The standard deviation of 0.8 underlines the widespread rejection of this possibility. Opinions on the use of AI to develop assessment tests with various types of questions (D6) are more divided, with a mean of 2.7 and a mode of 3.0. The standard deviation of 1.0 indicates significant variability in the answers. A predominantly favorable opinion emerges regarding the creation of personalized assessment rubrics based on specific student learning criteria via AI (D7), with a mean of 2.8 and a mode of 3.0. The standard deviation of 1.1 suggests some dispersion of opinions. A strong consensus is found on the usefulness of AI to provide simulations and virtual laboratories for hands-on learning experiences (D8), with a high mean of 3.4 and a mode of 4.0. The low standard deviation of 0.7 indicates a high level of agreement. Similarly, the creation of immersive learning environments through augmented and virtual reality via AI (D9) receives strong support, with a mean of 3.4 and a mode of 4.0. The standard deviation of 0.8 highlights a good level of agreement. Finally, a very strong consensus is found on the development of interactive educational content, such as animated videos or e-learning modules, via AI (D10), with a mean of 3.6 and a mode of 4.0. The very low standard deviation of 0.6 indicates almost unanimous agreement.

In summary, the data in Table 9 show strong support for using AI as a complementary and enhancing tool for learning and teaching, especially for self-study, supporting lectures, improving learning, providing hands-on experiences through simulations and virtual reality, creating personalized rubrics, and developing interactive content. In contrast, there is strong resistance to replacing traditional elements of education, such as textbooks and lectures, with AI. Opinions on AI for the development of assessment tests appear more uncertain (Luckin et al., 2016; Williamson et al., 2020).

These results emphasize AI’s capacity to personalize learning and create dynamic, engaging materials (Hwang et al., 2020). Similarly, the high approval for AI-driven simulations and virtual labs suggests that immersive technologies (e.g., VR/AR) significantly enhance hands-on learning, particularly when powered by AI’s adaptive capabilities (Merchant et al., 2014). Meanwhile, a critique of techno-solutionism emerges, which cautions against overestimating AI’s ability to replicate the socio-emotional benefits of human teachers (Selwyn, 2019). Similarly, educators and learners often resist AI applications that threaten to depersonalize education, preferring hybrid models that retain human oversight (Zawacki-Richter et al., 2019).

It is crucial to acknowledge the limitations of this exploratory study to properly contextualize its findings. The sample was selected using convenience sampling principles, leveraging existing professional relationships. While this approach allowed for access to diverse perspectives within the University of Salerno area and is suitable for exploratory studies aiming to capture varied viewpoints, it inherently limits the generalizability of the results. Consequently, the collected perceptions should be considered representative of the specific territorial context and participant demographics studied, and caution should be exercised when extending these findings to a broader population. Future research could address this by employing more robust, probability-based sampling methods to enhance the external validity and by conducting longitudinal studies to evaluate the long-term impacts of AI integration across different educational stages and regions. This study serves as a foundational step, providing valuable insights that can inform more extensive and targeted investigations into the complex interplay of AI and human elements in education.

5. Conclusions

This study undertook a survey on the knowledge, beliefs, and perceptions of teachers, trainers, university students, and future educators in the area surrounding the University of Salerno regarding the use of artificial intelligence in education. Guided by the research questions, aimed at understanding the opinions of different key figures in the education system, this survey represents a first step to answering the following question: what do current and future educators think about the use of artificial intelligence in education? The presented survey aimed to gather the knowledge and opinions of the various actors involved in the educational field on the concept of AI, in order to identify areas of consensus and dissent, as well as to assess the need for targeted training interventions.

All hypotheses posed in the Introduction seem to be confirmed by the collected data. In particular, regarding Hypothesis 1, general recognition of the positive potential of AI has been noted, and the data reveal openness to AI as a complementary tool in education, the substantial acceptance of AI, and a positive predisposition towards its informed and responsible use in education. Regarding Hypothesis 2, the data showed strong reservations about replacing the human role, especially with regard to feedback and the overall learning experience, since there is strong disagreement on the ability of automated tutors to match the effectiveness of human feedback. Regarding Hypothesis 3, the study recorded a strong consensus on the importance of the conscious and responsible use of AI. Specific training and professional development interventions to spread knowledge, increase awareness, and contribute to the careful and effective use of AI in the educational field seem to be the best ways to achieve this.

The added value of this study lies in its comprehensive and comparative analysis of perceptions held by diverse key stakeholders (teachers, university students, and future educators) within the specific territorial context of the University of Salerno. This approach directly addresses a notable gap in the existing literature, which lacked a nuanced understanding of how these groups perceive AI’s benefits and risks across various educational applications, including individual learning, support for face-to-face teaching, and assessment. By examining these perceptions through the theoretical lenses of the Unified Theory of Acceptance and Use of Technology (UTAUT) and the SAMR model, this research provides a more robust understanding of the practical and theoretical implications of AI in education, offering insights into the “how” and “why” of technology adoption and transformation that extend beyond mere descriptive observations.

The inferential analysis further highlighted significant differences in opinions among the participant groups, particularly reinforcing the understanding that AI is largely favored as an enhancement tool rather than a replacement for human interaction. For instance, there was an overwhelming consensus across all participant groups on the necessity of careful and conscious AI use (98% agreement), underscoring a collective awareness of the ethical implications and the need for robust security measures. While strong overall skepticism persisted regarding AI tutors matching human feedback efficiency (69% disagreement), university students exhibited statistically significantly weaker skepticism (Mean = 1.2, St. Dev. = 0.5) compared to teachers (Mean = 2.2, St. Dev. = 1.0) and educators (Mean = 2.5, St. Dev. = 1.0), indicating their potential perception of AI as a more direct and efficient tool for individual learning processes. Similarly, the polarized opinions on the importance of humans versus computers in teaching (B10) clearly showed teachers and educators holding a stronger protective stance towards the human role (teachers Mean = 3.4; educators Mean = 3.3) compared to students (Mean = 1.3), aligning with the critical understanding that AI cannot fundamentally replace the human role of teachers.

Furthermore, the study confirmed the strong opposition to AI replacing textbooks (81% disagreement) and face-to-face lessons (87% disagreement). This resistance was statistically significantly stronger among teachers and educators (e.g., D5 for teachers: Mean = 1.6; for educators: Mean = 1.8) compared to students (Mean = 1.1), emphasizing a robust preference for preserving traditional learning materials and human interaction in education. Conversely, there was overwhelming and consistent support across all participant groups for AI’s role in providing simulations and virtual labs (89% agreement), creating immersive learning environments through augmented and virtual reality, and developing interactive educational content such as animated videos or e-learning modules (94% agreement). These findings strongly align with the “Augmentation” and “Redefinition” stages of technology integration within the SAMR model, pointing to the collective recognition of AI’s potential to significantly enhance learning experiences. Additionally, teachers and educators expressed significantly higher levels of doubt about AI’s effectiveness for younger learners (ages 0–6 in C8 and 7–10 in C9) compared to students (e.g., C8: teachers Mean = 2.1 vs. students Mean = 1.3), reinforcing the idea that younger learners require more nuanced social and emotional interaction that AI may not provide. While opinions on AI’s role in developing assessment tests and customized rubrics were mixed, they were more divided among teachers and educators (e.g., D6 for teachers: Mean = 3.1) compared to students (Mean = 1.9), suggesting ongoing deliberation by teaching professionals about the fairness and efficacy of AI in evaluation.

It is crucial to acknowledge the limitations of this exploratory study to properly contextualize its findings. The sample was selected using convenience sampling principles, leveraging existing professional relationships. While this approach allowed for access to diverse perspectives within the University of Salerno area and is suitable for exploratory studies aiming to capture varied viewpoints, it inherently limits the generalizability of the results. Consequently, the collected perceptions should be considered representative of the specific territorial context and participant demographics studied, and caution should be exercised when extending these findings to a broader population beyond the University of Salerno. This sampling method introduces potential bias that may affect the external validity of the findings.

Regarding a future research agenda, further studies could address these limitations by employing more robust, probability-based sampling methods to enhance the external validity. Additionally, longitudinal studies are recommended to evaluate the long-term impacts of AI integration across different educational stages and regions. Future research could also delve deeper into the cultural or demographic factors that might influence these perceptions, providing a more comprehensive understanding. This study serves as a foundational step, providing valuable insights that can inform more extensive and targeted investigations into the complex interplay of AI and human elements in education.

Funding

This research received no external funding.

Institutional Review Board Statement

In accordance with the guidelines of the American Psychological Association (APA), participants were asked to give informed consent after being told the nature of the survey and that its objectives were exclusively for research purposes. Their participation was voluntary, and confidentiality and anonymity were guaranteed, since the data were collected in digital form without requesting the identity of the participants. Therefore, the data were collected in compliance with the European General Data Protection Regulation (GDPR, Regulation (EU) 2016/679): they were gathered anonymously from EU citizens, and the anonymization is irreversible, so the participants cannot be identified in any way.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A

Table A1.

Section A, used to collect general data of participants.

| # | Questions (and Possible Answers) |
|---|---|
| A1 | Gender (Woman; Man; I prefer not to answer) |
| A2 | How old are you? (18–22; 23–27; 28–32; 33–37; 38–45; 46–55; over 55) |
| A3 | Qualification of studies (Lower secondary (Middle School); Upper secondary (High School); First level degree; Master’s degree; PhD; Other) |
| A4 | Profession (Permanent teacher; PA employee; Technician/Freelancer; Private company employee; Other; Student in the TFA SOS course; Student) |
| A5 | How much do you use the following devices? (I don’t have this device; Never or almost never; Once a month; Once a week; Every day) |
| | Smartphones |
| | Tablets |
| | Desktop computer |
| | Laptop |
| | Video game console |
| | E-book Reader |
| | Smart TVs |
| A6 | What devices do you use to study or work? (multiple-choice answers on the following devices) |
| | Smartphones |
| | Tablets |
| | Desktop computer |
| | Laptop |
| | Video game console |
| | E-book Reader |
| | Smart TVs |

Table A2.

Section B, used to determine agreement of participants about the impact of AI.

| # | How Much Do You Agree with the Following Statements? (Yes; More Yes Than No; More No Than Yes; No) |
|---|---|
| B1 | The development of artificial intelligence in everyday devices (e.g., smartphones, cars, refrigerators, televisions, etc.) is advantageous |
| B2 | The impact of artificial intelligence on teaching is beneficial |
| B3 | Artificial intelligence is a technology that requires careful and conscious use |
| B4 | Having an online learning environment that can adapt to user needs improves student performance |
| B5 | The use of content recommendation systems to provide individualized learning materials to each student facilitates the learning path |
| B6 | Artificial/automatic tutors reach the same level of efficiency as human tutors in providing feedback in a short time on students’ work |
| B7 | I would use artificial intelligence if it proved effective in the teaching/learning process |
| B8 | Artificial intelligence provides a better learning experience for students |
| B9 | Artificial intelligence can improve study paths |
| B10 | Humans are as important as computers in teaching |

Table A3.

Section C, used to determine agreement of participants about the usefulness of AI.

| # | Artificial Intelligence Systems Are Useful to… (Yes; More Yes Than No; More No Than Yes; No) |
|---|---|
| C1 | Improve learning in formal education contexts (school and university) |
| C2 | Help with homework or individual study |
| C3 | Assist in the transformation of an in-person course into a distance one |
| C4 | Provide additional support for in-person learning |
| C5 | Provide additional support for distance learning |
| C6 | Acquire new skills |
| C7 | Improve workplace training |
| C8 | Improve children’s learning (ages 0–6 years) |
| C9 | Improve student learning (7–10 years) |
| C10 | Improve student learning (11–14 years) |
| C11 | Improve student learning (15–20 years) |
| C12 | Improve adult learning (over 20 years) |

Table A4.

Section D, used to determine agreement of participants about the use of AI in education.

| # | I Would Use Artificial Intelligence in Education to… (Yes; More Yes Than No; More No Than Yes; No) |
|---|---|
| D1 | Enable individual study at home |
| D2 | Support the frontal lesson (face to face) |
| D3 | Improve learning |
| D4 | Replace textbooks |
| D5 | Replace the frontal lesson (face to face) |
| D6 | Develop assessment tests that include various types of questions (multiple choice, true/false, short answer) |
| D7 | Create customized assessment rubrics based on students’ specific learning criteria |
| D8 | Provide simulations and virtual labs for hands-on learning experiences |
| D9 | Create immersive learning environments through augmented and virtual reality |
| D10 | Develop interactive educational content such as animated videos or e-learning modules |
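The four-level response scale used in Sections B to D lends itself to a simple numeric summary per item. The sketch below shows one way this could be done. The 1 to 4 coding, the normal-approximation confidence interval, the definition of "agreement" as the two affirmative options, and the sample responses are assumptions made for illustration. They are not taken from the study's analysis and the data are not real.

```python
import math
import statistics

# Assumed numeric coding of the four response options (not specified in the questionnaire).
SCALE = {"No": 1, "More No Than Yes": 2, "More Yes Than No": 3, "Yes": 4}


def summarize(responses):
    """Return descriptive statistics for one questionnaire item."""
    scores = [SCALE[r] for r in responses]
    n = len(scores)
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)            # sample standard deviation
    half_width = 1.96 * sd / math.sqrt(n)    # normal-approximation 95% CI (assumption)
    agreement = sum(s >= 3 for s in scores) / n  # share answering "Yes" or "More Yes Than No"
    return {
        "n": n,
        "mean": mean,
        "mode": statistics.mode(scores),
        "sd": sd,
        "ci95": (mean - half_width, mean + half_width),
        "agreement": agreement,
    }


# Made-up responses to a single item, for demonstration only.
responses = (
    ["Yes"] * 5
    + ["More Yes Than No"] * 3
    + ["More No Than Yes"] * 1
    + ["No"] * 1
)
summary = summarize(responses)
```

Treating the four ordinal options as equally spaced numbers is a modelling choice. Reporting the agreement share alongside the mean keeps the summary interpretable without that assumption.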

References

- ‘Alam, G., Wiyono, B., Burhanuddin, B., Muslihati, M., & Mujaidah, A. (2024). Artificial intelligence in education world: Opportunities, challenges, and future research recommendations. Fahima, 3(2), 223–234. [Google Scholar] [CrossRef]

- Ahmad, S., Alam, M., Rahmat, M., Mubarik, M., & Hyder, S. (2022). Academic and administrative role of Artificial Intelligence in education. Sustainability, 14(3), 1101. [Google Scholar] [CrossRef]

- Anderson, T., & Dron, J. (2011). Three generations of distance education pedagogy. The International Review of Research in Open and Distributed Learning, 12(3), 80–97. [Google Scholar] [CrossRef]

- Anderson, T., & Shattuck, J. (2012). Design-based research: A decade of progress in education research? Educational Researcher, 41(1), 16–25. [Google Scholar] [CrossRef]

- Brown, A., & Maydeu-Olivares, A. (2011). Item response modeling of forced-choice questionnaires. Educational and Psychological Measurement, 71(3), 460–502. [Google Scholar] [CrossRef]

- Chen, L., Chen, P., & Lin, Z. (2020). Artificial Intelligence in education: A review. IEEE Access, 8, 75264–75278. [Google Scholar] [CrossRef]

- Cohen, L., Manion, L., & Morrison, K. (2017). Research methods in education (8th ed.). Routledge. [Google Scholar]

- Cope, B., Kalantzis, M., & Searsmith, D. (2020). Artificial intelligence for education: Knowledge and its assessment in AI-enabled learning ecologies. Educational Philosophy and Theory, 53, 1229–1245. [Google Scholar] [CrossRef]

- Creswell, J. W., & Poth, C. N. (2018). Qualitative inquiry & research design: Choosing among five approaches. Sage Publications. [Google Scholar]

- Cumming, G. (2014). The new statistics: Why and how. Psychological Science, 25(1), 7–29. [Google Scholar] [CrossRef] [PubMed]

- Denzin, N. K., & Lincoln, Y. S. (Eds.). (2018). The SAGE handbook of qualitative research. Sage Publications. [Google Scholar]

- Etikan, I., Musa, S. A., & Alkassim, R. S. (2016). Comparison of convenience sampling and purposeful sampling. American Journal of Theoretical and Applied Statistics, 5(1), 1–4. [Google Scholar] [CrossRef]

- Ghosh, K. (2025). Recommending personalized video lecture augmentations with tagged community question answers. International Journal of Artificial Intelligence in Education. [Google Scholar] [CrossRef]

- Gocen, A., & Aydemir, F. (2020). Artificial intelligence in education and schools. Research on Education and Media, 12, 13–21. [Google Scholar] [CrossRef]

- Gökçearslan, Ş., Tosun, C., & Erdemir, Z. G. (2024). Benefits, challenges, and methods of Artificial Intelligence (AI) chatbots in education: A systematic literature review. International Journal of Technology in Education, 7(1), 19–39. [Google Scholar] [CrossRef]

- Hamilton, E. R., Rosenberg, J. M., & Akcaoglu, M. (2016). The substitution, augmentation, modification, and redefinition (SAMR) model: A critical review and suggestions for its use. TechTrends, 60(5), 433–441. [Google Scholar] [CrossRef]

- Henkel, O., Horne-Robinson, H., Hills, L., Roberts, B., & McGrane, J. (2025). Supporting literacy assessment in West Africa: Using state-of-the-art speech models to assess oral reading fluency. International Journal of Artificial Intelligence in Education, 35(1), 282–303. [Google Scholar] [CrossRef]

- Hwang, G.-J., Xie, H., Wah, B. W., & Gašević, D. (2020). Vision, challenges, roles and research issues of Artificial Intelligence in Education. Computers and Education: Artificial Intelligence, 1, 100001. [Google Scholar] [CrossRef]

- Kamalov, F., Calonge, D., & Gurrib, I. (2023). New era of Artificial Intelligence in education: Towards a sustainable multifaceted revolution. Sustainability, 15(16), 12451. [Google Scholar] [CrossRef]

- Krosnick, J. A., & Presser, S. (2010). Question and questionnaire design. In P. V. Marsden, & J. D. Wright (Eds.), Handbook of survey research (2nd ed., pp. 263–313). Emerald Group Publishing Limited. [Google Scholar]

- Luckin, R., Holmes, W., Griffiths, M., & Forcier, L. B. (2016). Intelligence unleashed: An argument for AI in education. Pearson. [Google Scholar]

- Merchant, Z., Goetz, E. T., Cifuentes, L., Keeney-Kennicutt, W., & Davis, T. J. (2014). Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: A meta-analysis. Computers & Education, 70, 29–40. [Google Scholar] [CrossRef]

- Norman, G. (2010). Likert scales, levels of measurement and the “Laws” of statistics. Advances in Health Sciences Education, 15(5), 625–632. [Google Scholar] [CrossRef]

- Papadakis, S., Vaiopoulou, J., & Kalogiannakis, M. (2022). The impact of robotics and AI on early childhood education. Early Childhood Education Journal, 50(7), 1211–1222. [Google Scholar] [CrossRef]

- Selwyn, N. (2019). Should robots replace teachers? AI and the future of education. Polity Press. [Google Scholar]

- Tapalova, O., Zhiyenbayeva, N., & Gura, D. (2022). Artificial Intelligence in education: AIEd for personalised learning pathways. Electronic Journal of e-Learning, 20(5), 639–653. [Google Scholar] [CrossRef]

- UNESCO. (2023). Guidance for generative AI in education and research. Available online: https://www.unesco.org/en/articles/guidance-generative-ai-education-and-research (accessed on 2 May 2025).

- Uygun, D. (2024). Teachers’ perspectives on Artificial Intelligence in education. Advances in Mobile Learning Educational Research, 4(1), 931–939. [Google Scholar] [CrossRef]

- Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478. [Google Scholar] [CrossRef]

- Venkatesh, V., Thong, J. Y. L., & Xu, X. (2012). Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Quarterly, 36(1), 157–178. [Google Scholar] [CrossRef]

- Williamson, B., Bayne, S., & Shay, S. (2020). The datafication of teaching in higher education: Critical issues and perspectives. Teaching in Higher Education, 25(4), 351–365. [Google Scholar] [CrossRef]

- Xue, Y., & Wang, Y. (2022). Artificial intelligence for education and teaching. Wireless Communications and Mobile Computing, 2022(6), 1–10. [Google Scholar] [CrossRef]

- Zadorina, O., Hurskaya, V., Sobolyeva, S., Grekova, L., & Vasylyuk-Zaitseva, S. (2024). The role of Artificial Intelligence in creation of future education: Possibilities and challenges. Futurity Education, 4(2), 163–185. [Google Scholar] [CrossRef]

- Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on Artificial Intelligence applications in higher education—Where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 1–27. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).