1. Introduction

Generative artificial intelligence (GenAI) tools and chatbots, such as ChatGPT, are a new and growing innovation in education, although empirical evidence for their value as teaching and learning tools remains limited (Crompton & Burke, 2023; Montenegro-Rueda et al., 2023; Laupichler et al., 2022). Nonetheless, some studies point to engagement benefits: for example, Wang et al. (2018) found that AI-driven, gamified learning platforms significantly increase student engagement. Other recent engagement-focused studies include Mirdad et al. (2024), Nguyen et al. (2024), and Bognár and Khine (2025).

Nguyen et al. (2024) distinguished GenAI from a broader set of techniques and technologies, such as machine learning, and proposed use cases including interactive teaching aids and improved accessibility in learning. Mirdad et al. (2024) examined the correlations between AI usage, student engagement, and other AI-related aspects of education. Bognár and Khine (2025) analysed a large-scale student survey to characterise large-language-model-based learning support and its impact on student engagement.

Taken together, these studies suggest that GenAI applications in higher education teaching and learning could support learning engagement and, through it, skill development, but more evidence of their influence is needed. To build a comprehensive understanding of GenAI’s impact on teaching and learning, with a particular focus on engagement, we identified three domains in which to examine current trends in GenAI and its contributions: (I) learning engagement, (II) learning efficiency and skill development, and (III) the strengths and weaknesses of AI within higher education platforms.

1.1. Engagement in Learning Through the Application of GenAI Tools

GenAI-assisted teaching and learning offer an exciting opportunity to aid students in their learning and skill development. Technology-enhanced teaching and learning is increasingly prevalent in higher education, and several studies of student perceptions of technology in teaching have reported very positive outcomes (Crompton & Burke, 2023; Jump, 2011), including increased enjoyment of learning (Farah & Maybury, 2009).

There are multiple potential barriers to student engagement in higher education, and Hennessy and Murphy (2025) reported that undergraduate students feel more comfortable engaging with technology than speaking in class or engaging with peers. However, the integration of GenAI in higher education is still in its infancy (Southworth et al., 2023; Laupichler et al., 2022), and much of the literature in the field predates the emergence of easily accessible GenAI tools such as ChatGPT, which was released on 30 November 2022.

Furthermore, Johnston et al. (2024) showed that awareness and use of GenAI among higher education students are already extensive. A few early studies, such as Crown et al. (2010), identified artificial assistants as a source of engagement, and Zawacki-Richter et al. (2019) systematically reviewed AI applications, including their role in engagement, a few years before the emergence of ChatGPT.

Zawacki-Richter et al. (2019) described engagement within AI-driven education as “…drawing on Intelligent Tutoring Systems (ITS), intelligent virtual reality (IVR) is used to engage and guide students in authentic virtual reality and game-based learning environments”. We therefore identified a knowledge gap in this rapidly emerging area of education and designed this study to promote engagement in learning through the application of GenAI tools. The following section explores GenAI tools for learning efficiency and skill development.

1.2. GenAI Tools for Learning Efficiency and Skill Development

As Crompton and Burke (2023) noted, GenAI has brought a “concomitant proliferation of new AI tools available”. While Crompton and Burke (2023) reported on the benefits of GenAI to both educators and students in higher education, research on the development or application of these tools for learning engagement and/or efficiency is lacking. With respect to AI tools for skill development, Crompton and Burke’s (2023) systematic review revealed research gaps specifically concerning new tools such as ChatGPT, which was the GenAI tool most widely used by our cohort at the start of our study. Another recent literature review (Wang et al., 2024) breaks down AI-integrated education models by category, research topic, and method, and presents detailed bibliometric analyses.

This study focused on ChatGPT to explore the application of, and engagement with, GenAI in teaching and learning. It set out to support skills including problem solving, critical thinking, and creativity. The rationale for integrating GenAI tools to support problem-solving tasks was that students may experience cognitive load or fatigue during research (Sweller, 1988), or fixation and blocks in initiating ideas during creative idea generation (Jansson et al., 1991). With respect to cognitive load, GenAI tools can support research during problem solving by returning refined information in response to effective prompts. This support may be especially valuable given the domain-specific, knowledge-based nature of problem solving (Sweller, 1988).

GenAI rendering tools could provide further problem-solving support by aiding communication during ideation, thus fostering students’ creative confidence. Using GenAI tools during design thinking could therefore support engagement, learning efficiency, and cognate skills. The rationale for integrating GenAI tools to support critical thinking stemmed from the question of whether critical thinking can be enhanced in the generative AI wave (Spector & Ma, 2019).

Ennis (1962) described critical thinking as “the correct assessing of statements” along logical, criterial, and pragmatic dimensions. The notion of critical thinking is open to interpretation and is often approached differently by experts in different higher education contexts (Behar-Horenstein & Niu, 2011). Others link critical thinking with awareness of one’s own skills and their use in overcoming an intellectual task (Walker & Finney, 1999). Whether critical thinking is treated as general or discipline-specific (Ennis, 1989; Renaud & Murray, 2008), higher education plays the primary role in developing students’ broad range of critical thinking skills (Bezanilla et al., 2019). Indeed, critical thinking is a key programme learning outcome in many higher education courses. Furthermore, strong critical thinking skills are associated with selection for excellence programmes (Leest & Wolbers, 2020).

In the context of GenAI tools for learning engagement, learning efficiency, and skill development, we set out to use GenAI, namely ChatGPT, to give students a practical, emerging approach to problem solving (e.g., Zhang & Aslan, 2021; Montenegro-Rueda et al., 2023) that develops their creativity and critical thinking. Interactions between GenAI and students are a key element in enhancing teaching and learning and need to be represented in curricula at both general and specialised levels. Interest in using GenAI tools across a range of teaching and learning environments is growing. This study therefore set out to engage students through a hands-on GenAI experience that supported their learning efficiency and skill development, creating an environment in which students could uncover the effectiveness (strengths and limitations) of GenAI tools for themselves in a supported educational context.

1.3. Effectiveness (Strengths and Limitations) of GenAI Tools in an Educational Context

As outlined earlier, Crompton and Burke (2023) revealed research gaps specifically concerning new GenAI tools such as ChatGPT. This study set out to explore the application of, and engagement with, GenAI, specifically ChatGPT, in higher education teaching and learning. GenAI tools in higher education have a range of strengths and limitations. The strengths reported in research studies include personalised learning opportunities (Pane et al., 2017); assessment support, namely feedback (Shute, 2008); accessibility of GenAI platforms compared with tutor access (Holstein et al., 2018); breadth of educational resources (Liu et al., 2016); and catering for learning diversity (Melo-Lopez et al., 2025).

In terms of limitations, GenAI tools raise concerns about equity and environmental sustainability in their product sector. In addition, GenAI tools lack empathy (or the human touch) (Laurillard, 2012), raise privacy concerns (Slade & Prinsloo, 2013), can reproduce bias (Eubanks, 2018), impose high resource costs (World Economic Forum, 2020b), present technical and access issues (Luckin et al., 2016), require educator training (Zawacki-Richter et al., 2019), and offer limited scope relative to the breadth of educational activities and needs (Holmes et al., 2019).

1.4. Aims of the Research Study

The previous sections identified gaps in the application of, and engagement with, GenAI, specifically ChatGPT, in higher education teaching and learning. Using a pragmatic research framework, our study focuses on the integration and evaluation of GenAI tools to improve student engagement, efficiency, and skill development. It was implemented in diverse teaching and learning contexts: a student literature review exercise in Physiology, a creative design thinking process in Initial Teacher Education, and structural design and analysis problems in Civil Engineering. In line with the study aims, the preceding sections reviewed existing research on GenAI in higher education, exploring engagement in learning through the application of GenAI tools; GenAI tools for learning engagement, learning efficiency, and skill development; and the effectiveness (strengths and limitations) of GenAI tools in an educational context.

This multidisciplinary research study set out with three aims:

To support student engagement in learning through the application of GenAI tools;

To explore GenAI tools for learning engagement, learning efficiency, and skill development;

To promote awareness and evaluation of the effectiveness (strengths and limitations) of GenAI tools in an educational context.

To address the research gaps identified above in line with these aims, we organise the remainder of the paper as follows.

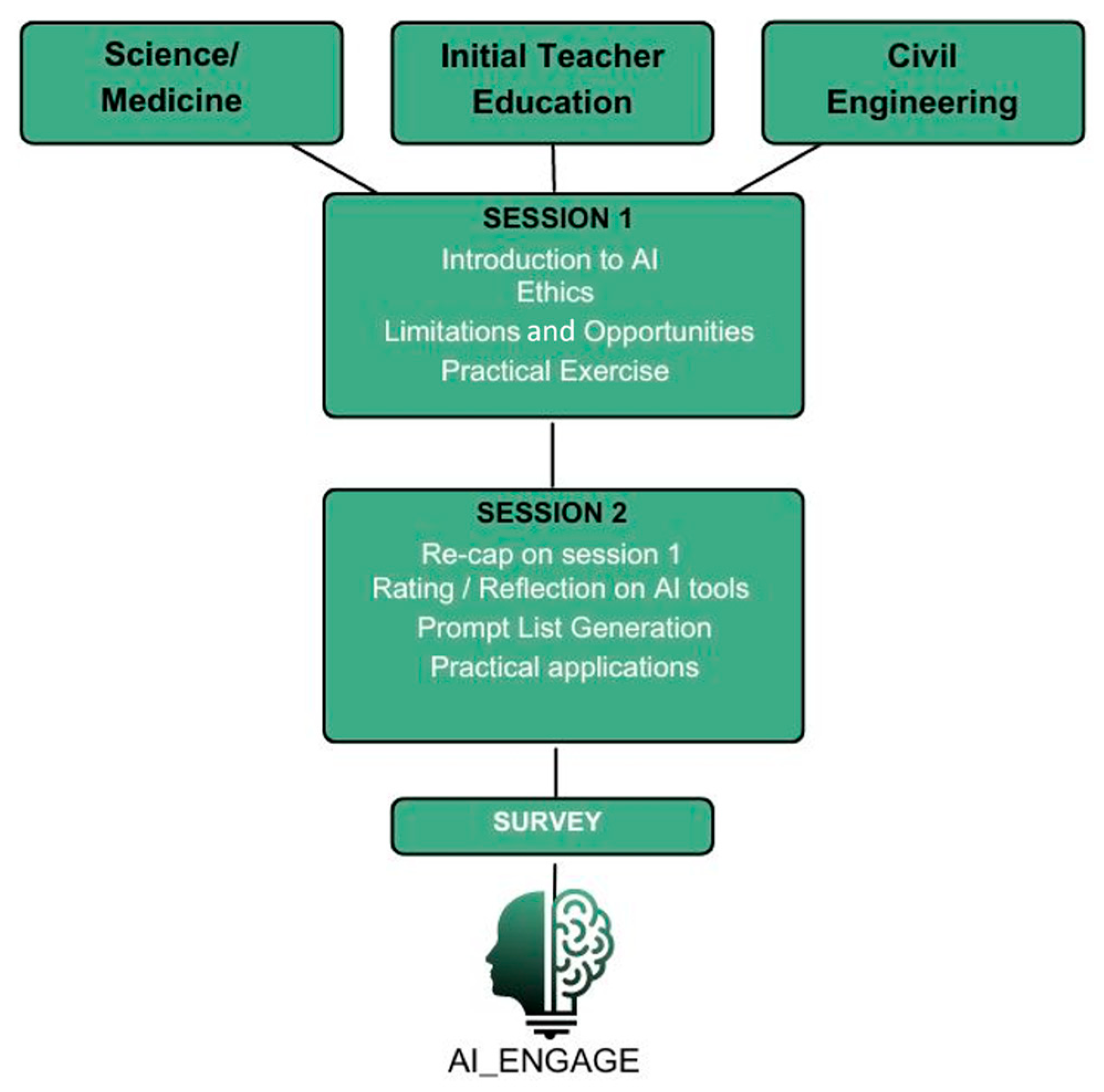

Section 2 presents a streamlined methodology for the three subject areas in which the AI-focused interventions (AI-ENGAGE, Figure 1) took place. Section 3 presents the results, particularly the student feedback gathered from the intervention sessions incorporating AI tools within each student cohort. Section 4 discusses the results, and Section 5 presents the conclusions.

2. Methodology

In this section, we describe the tools, materials, and approaches that define our research design and its implementation. The following subsections summarise our research framework, sample, research methods, design, and data analysis.

2.1. Research Framework

This research study was structured around a pragmatic research framework that focused on the integration and evaluation of generative AI (GenAI) tools to improve student engagement, efficiency, and skill development. The pragmatic research framework was supported by a methodological approach using exploratory mixed methods that combined qualitative (thematic analysis) and quantitative (descriptive statistics) methods.

2.2. Sample

This research study involved year 3 and year 4 undergraduate students from the Physiology, Initial Teacher Education, and Civil Engineering disciplines. Before the study commenced, the university research ethics committee granted ethical approval (2023_09_06_EHS) for the study in the three modules. All participants were informed of the purpose of the research and of the consent procedure. To preserve anonymity, demographic information was not collected in the surveys; however, relevant statistics can be derived from course records. All the modules are taught at university level. The Initial Teacher Education cohort is in the 3rd year of its programme, and the Physiology and Civil Engineering cohorts are in their 4th year. Based on our observations, the student profiles did not vary greatly. No exclusion criteria were applied; the survey remained open to all class participants willing to complete it.

2.3. Research Methods

The survey was completed by all three module cohorts following the AI-focused interventions, and a maximum of 129 individual survey responses were recorded. The data is presented for the whole merged cohort (i.e., the responses from the three disciplines are combined) to maximise participant numbers and so support sounder conclusions. Individual cohort analysis is also provided for certain quantitative questions to show the degree of agreement between cohorts. The Engineering cohort contributed the majority of responses (maximum n = 69 out of a class of 114 (60%)), the Initial Teacher Education cohort contributed fewer (maximum n = 44 out of a class of 56 (78%)), and the Physiology cohort contributed the fewest (maximum n = 16 out of a class of 25 (64%)). These participation numbers reflect the different class sizes.
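As a quick arithmetic check on the participation figures above, the following Python sketch recomputes each cohort’s response rate from the reported maximum responses and class sizes. It shows that the whole-number percentages correspond to truncating the exact rates (e.g., 44/56 ≈ 78.6%, reported as 78%) rather than rounding them, and that the three cohorts together account for the 129 responses. The function name `response_rate` is illustrative only.

```python
# Maximum survey responses and class sizes per cohort, as reported in the text above.
cohorts = {
    "Civil Engineering": (69, 114),
    "Initial Teacher Education": (44, 56),
    "Physiology": (16, 25),
}


def response_rate(responses: int, class_size: int) -> float:
    """Return responses as a percentage of the class size."""
    return 100 * responses / class_size


total_responses = sum(r for r, _ in cohorts.values())
total_class = sum(c for _, c in cohorts.values())

for name, (r, c) in cohorts.items():
    rate = response_rate(r, c)
    # int() drops the fractional part, reproducing the whole-number rates in the text
    print(f"{name}: {r}/{c} = {rate:.1f}% (truncated: {int(rate)}%)")

print(f"All cohorts: {total_responses}/{total_class} = "
      f"{response_rate(total_responses, total_class):.1f}%")
```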

To support the study aims, the data is presented in the subsections of Section 3 as quantitative and qualitative results addressing engagement effectiveness with GenAI in education; GenAI engagement to enhance learning and skills; future use of AI tools by students at university; prior experience of GenAI; usefulness of GenAI in education; limitations of AI in education; and ethical concerns about using GenAI tools. Intervention details are presented in Appendix A.

2.4. Design

Each module engaged students in its standard teaching and learning activities (those used in previous years). The GenAI tool (ChatGPT 3.5) was intended to provide additional teaching and learning support and was expected to be used intermittently (when needed) during the module. In each of the three disciplines, students received two separate sessions focusing on theoretical and practical issues relating to GenAI use in education. After the key teaching and learning activities concluded, in the final weeks of the module, participants (students) were invited to complete a voluntary 20 min online survey on the Qualtrics platform to capture their experience of, and engagement with, GenAI tools in the module (Figure 1). A detailed description of the content of the sessions in each discipline is included in Appendix A. The online survey was optional and anonymous and did not affect module assessment. The survey data was collected, stored, and analysed using the Qualtrics platform. The full survey is available as a Supplemental file.

We considered the limitations of this study, which could include variations in institutional culture, language semantics, and AI tool availability and/or access. In addition, GenAI policies (or their absence) could affect educators’ implementation of the study and students’ participation in it across our three research sites.

2.5. Data Analysis

The qualitative data was analysed inductively using Braun and Clarke’s thematic analysis, which involves identifying and analysing themes within qualitative data without imposing pre-existing coding frameworks or theoretical concepts. Although a pragmatic research framework guided this study, we acknowledge its exploratory nature. The inductive coding proceeded as follows: familiarisation with the data by reading and re-reading it; generating initial codes; searching for themes by grouping codes into potential themes based on patterns emerging from the data; reviewing the themes to refine and validate them against the dataset; and, finally, defining and naming the themes to represent new insights in areas with limited prior research. To represent these qualitative codes quantitatively, the research team assigned numerical values to coded segments of data to allow statistical analysis or visualisation. These values were frequency counts, each representing how often a code appeared in the dataset.
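The frequency-count step described above can be illustrated with a minimal Python sketch, assuming that each coded segment is stored as a single code label. The labels and counts below are hypothetical placeholders, not the study’s data. Each code’s percentage is taken relative to the total number of coded segments, which matches how the pie-chart percentages in Section 3 relate to their stated totals of coded responses (e.g., 189 for prior experience).

```python
from collections import Counter

# Hypothetical coded segments (illustrative only, NOT study data):
# each entry is the code assigned to one segment of a free-text answer.
coded_segments = [
    "useful", "helpful", "useful", "unreliable",
    "convenient", "useful", "helpful", "other",
]


def code_frequencies(segments: list[str]) -> dict[str, tuple[int, float]]:
    """Map each code to (count, percentage of all coded segments), most frequent first."""
    counts = Counter(segments)
    total = sum(counts.values())
    return {
        code: (n, round(100 * n / total, 1))
        for code, n in counts.most_common()
    }


for code, (n, pct) in code_frequencies(coded_segments).items():
    print(f"{code:<12}{n:>3}{pct:>7.1f}%")
```

Expressing counts against the number of coded segments, rather than the number of respondents, keeps the percentages summing to 100% even when one answer yields several codes.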

Figure 1. Schematic detailing the structure of the AI-ENGAGE methodology. Three separate disciplines (Physiology, Initial Teacher Education, and Civil Engineering) each had two distinct AI-focused intervention sessions of similar format, followed by a common survey (Qualtrics platform).

3. Results

3.1. Engagement Effectiveness with GenAI in Education

A main aim of this study was to assess the effectiveness of AI-focused interventions in specific areas relating to engagement (Figure 2).

Students were asked to respond to the following three questions: Did AI tool(s) affect engagement with your peers in the module (2A)? Did AI tool(s) affect engagement with your educator(s)/tutor(s) in the module (2B)? Did AI tool(s) affect engagement with your subject/discipline (2C)? Responses to each of the five options were merged across the three cohorts and expressed as percentages for engagement with peers (2A; n = 117), educators/tutors (2B; n = 116), and subject/discipline (2C; n = 117).

In terms of GenAI’s effect on engagement, 67% of students reported a “some” to “very good” effect on engagement with their peers (Figure 2A), 66% with their educators (Figure 2B), and 74% with their subject/discipline (Figure 2C). These student-reported data suggest that our GenAI interventions had a positive effect on student engagement with peers, educators, and the subject/discipline, with the greatest effect on engagement with the subject/discipline. Nevertheless, 15–17% of students reported that GenAI had “no effect” on their engagement with peers (17%), educators (17%), or their subject/discipline (15%).

To examine how student responses differ across the disciplines’ interventions, Figure 3 presents the discipline-specific responses; cohort sample sizes range from 13 to 60. Regardless of sample size, each cohort most frequently rated GenAI’s contribution to engagement (with peers, educators, and subject) as a “Good Effect”. Apart from this modal response, however, the overall distribution patterns differ between cohorts. A notable proportion of students, around 15–20%, also stated that GenAI had “No Effect” on engagement with these various agents. Although the interventions differed in numerous parameters, certain features of the survey outcomes are common across cohorts.

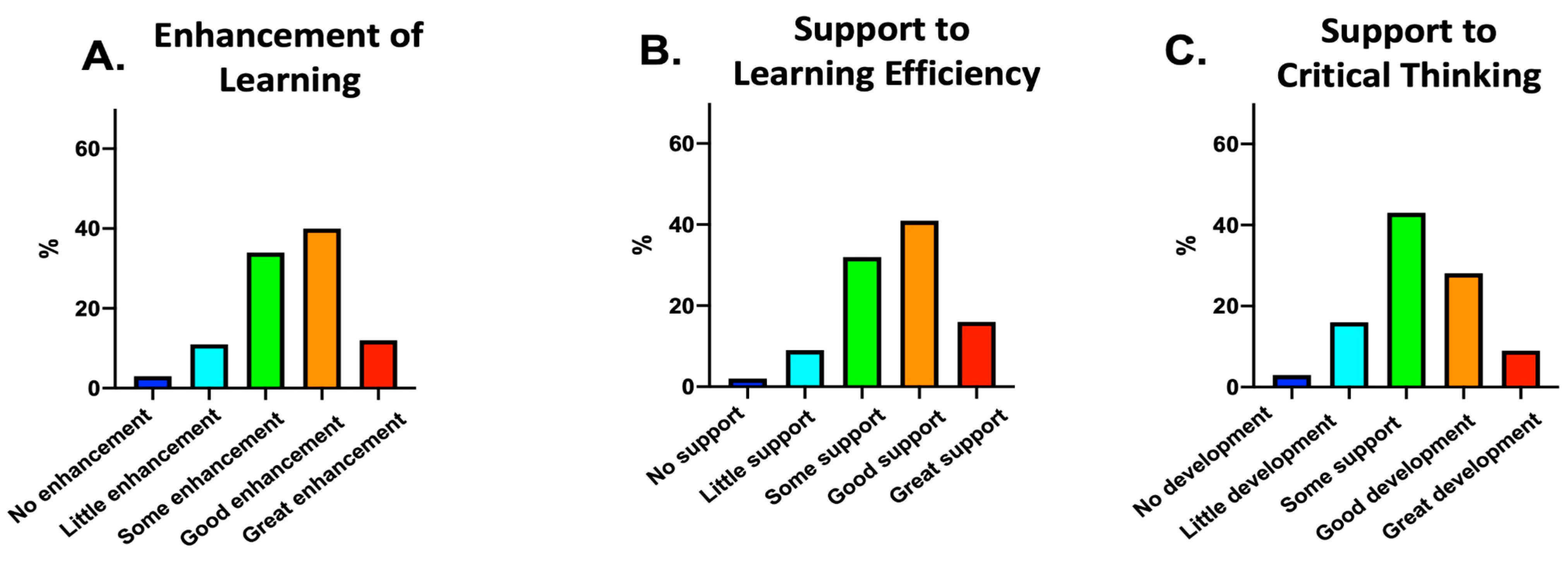

3.2. AI Engagement to Enhance Learning and Skills

A main aim of this study was to assess the effectiveness of GenAI interventions in enhancing learning and supporting learning efficiency and skills (Figure 4).

Students were asked to respond to the following three questions: Did AI tool(s) enhance your learning (4A)? Did AI tool(s) support your learning efficiency (4B)? Did AI tool(s) support the development of your critical thinking (4C)? Responses to each of the five options were merged across the three cohorts and expressed as percentages for learning enhancement (4A; n = 116), support for learning efficiency (4B; n = 116), and support for the development of critical thinking (4C; n = 116).

A total of 86% of students reported at least some enhancement of learning (Figure 4A), 89% reported at least some support for learning efficiency (Figure 4B), and 80% reported at least some development of critical thinking (Figure 4C). These data are encouraging, suggesting that our GenAI interventions had a positive effect on student learning, with the greatest effect on support for learning efficiency.

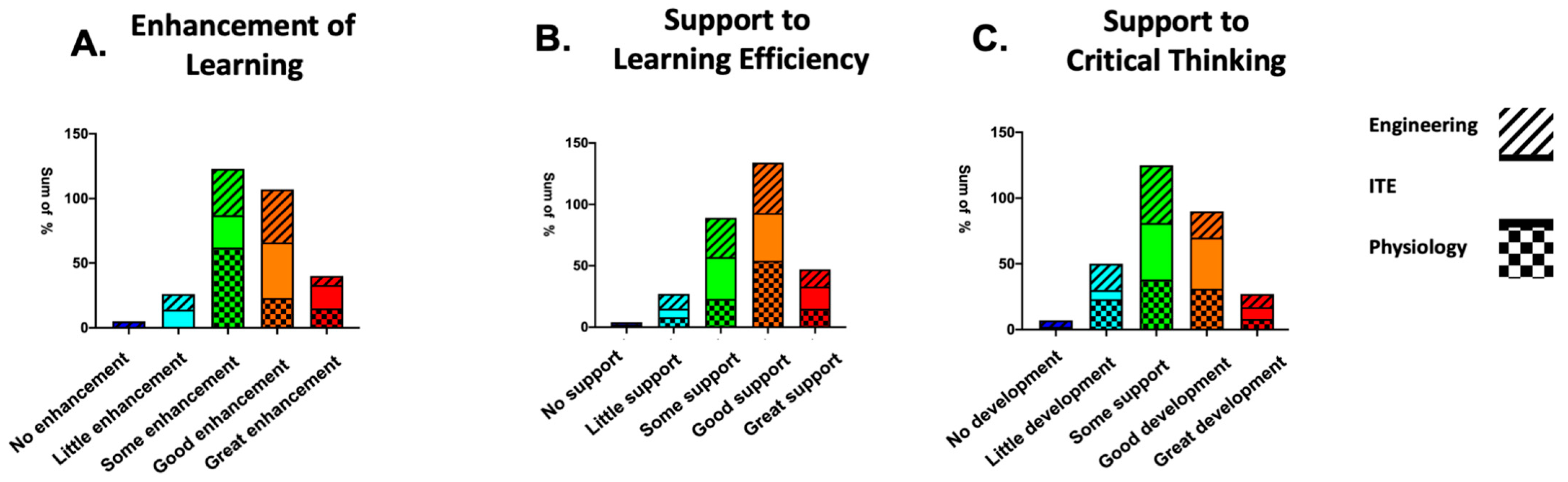

When the student cohorts are analysed separately, the distributions in Figure 5 are observed for learning enhancement, support for learning efficiency, and support for critical thinking. Physiology, Initial Teacher Education, and Civil Engineering students provided 13, 44, and 59 responses to these questions, respectively. Physiology students most frequently reported “Some” enhancement of learning, whereas Initial Teacher Education and Civil Engineering students most frequently reported “Good” enhancement. For learning efficiency, the most frequent response in all cohorts was “Good support”. Likewise, all cohorts most frequently rated support for critical thinking as “Some Development”. Certain features of the distributions depend on factors other than discipline, as discussed further below.

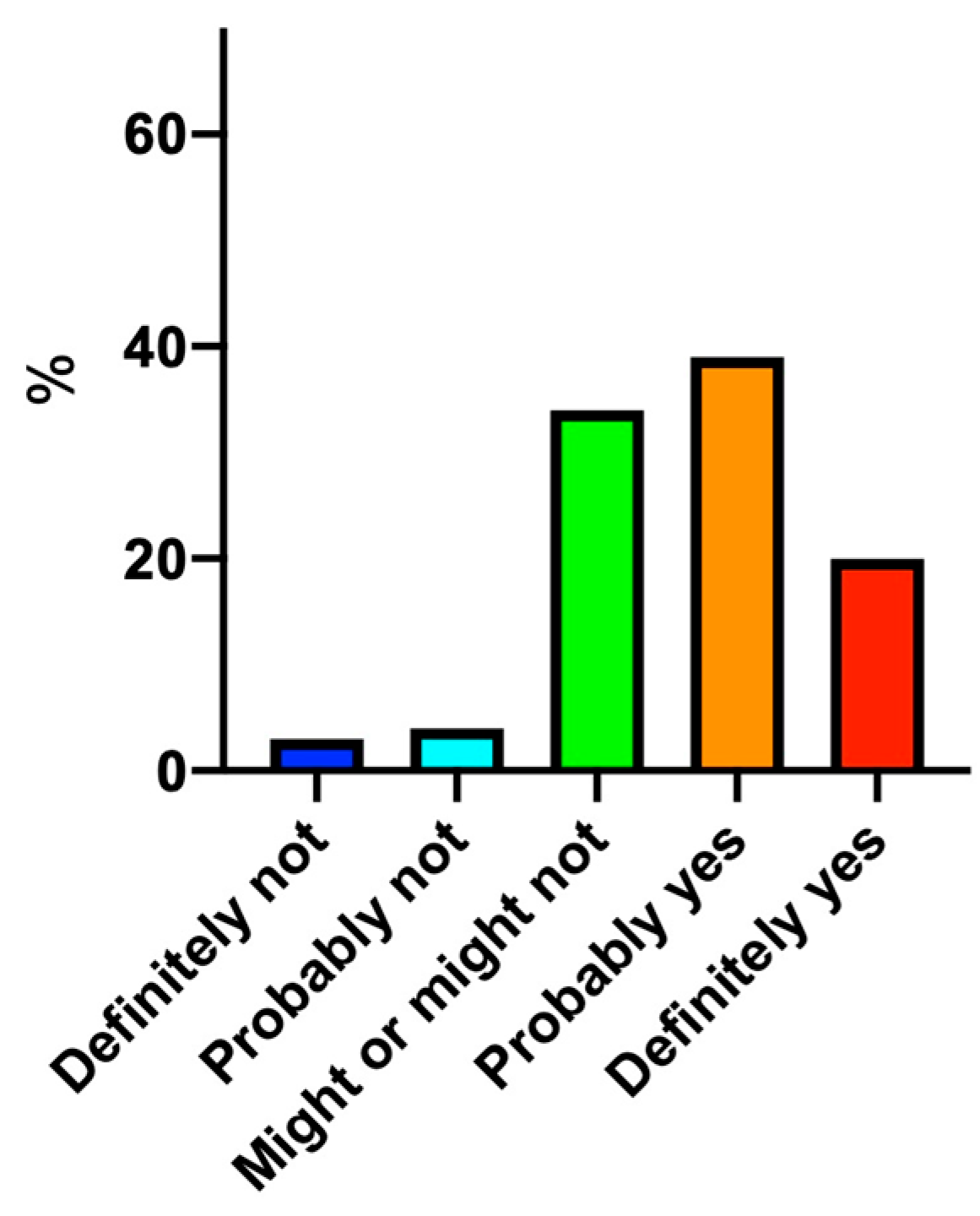

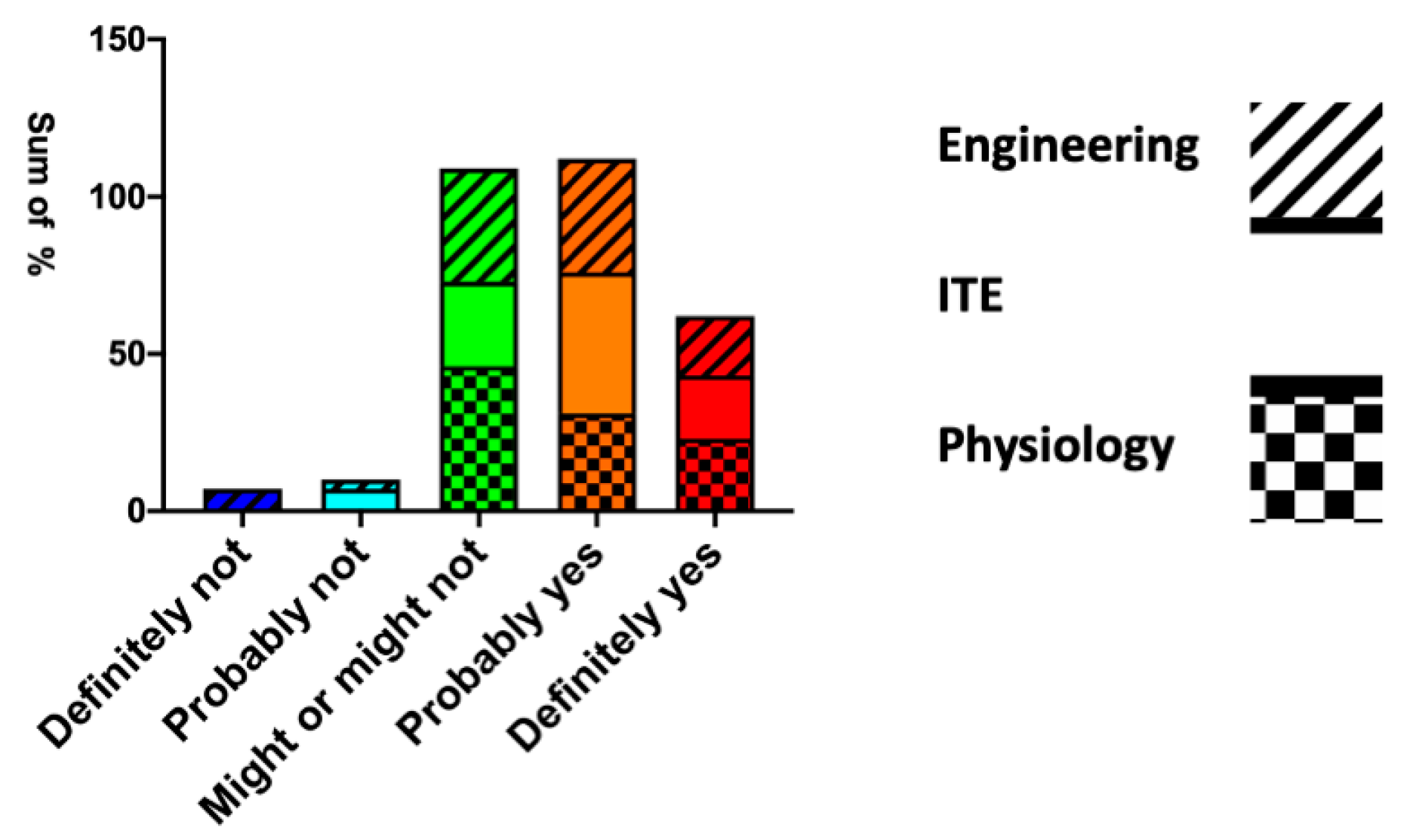

3.3. Future Use of GenAI Tools by Students in University

To determine whether students intend to use AI tools in their university course work in the future, we asked them specifically, “Will you use AI tool(s) for your University course work?” (Figure 6).

Responses to this question (6A) were recorded as percentages for each of the five options, merged across the three cohorts (n = 116). A total of 59% of students reported that they would probably or definitely use GenAI tools for their university course work, a further 34% reported that they might or might not, and only 7% reported that they would probably not or definitely not do so (Figure 6).

A final discipline-specific observation concerns whether students are willing to use GenAI again in the future (Figure 7). The most frequent response for Physiology is “Might or Might Not”, whereas Initial Teacher Education students are more optimistic, most frequently responding “Probably Yes”. Civil Engineering responses are split similarly between these two options. In summary, the distributions for all cohorts are skewed toward the positive end of the scale, favouring reuse of GenAI resources. These responses are discussed further below, particularly their limitations arising from the diversity within the survey and the intervention features.

3.4. Prior Experience of GenAI

We next asked students about their prior experience with GenAI (Figure 8).

Students were asked to respond to the following question: “Prior to engaging in this module, please choose at least one word and less than five words to describe your feelings about your AI experience in the context of your University Course?” Coded responses from the three cohorts were merged and are presented as a pie chart (189 coded responses).

Although these qualitative data were summarised only descriptively, the most frequent specific codes represent positive experiences, including “useful” (13.8%), “helpful” (10.6%), “convenient” (6.9%), “good” (5.8%), “efficient” (3.2%), “interesting” (3.2%), and “wonderful” (2.1%). In contrast, some negative words or statements were recorded, including “inexperienced” (4.8%), “unreliable” (4.8%), and “confused” (2.1%). One minority response within the “other” code was “cheating” (1.6%) (Figure 8). The following quotations illustrate distinct qualitative codes:

“Very convenient and can help me with my course to a great extent” (code: convenient).

“AI can be used in university education to quickly complete the retrieval and sorting of information, improve the efficiency of users’ work and study” (code: efficient).

“It can often be useful but also unreliable and can provide inaccurate information” (code: useful; reliability).

“Felt like cheating” (code: cheating).

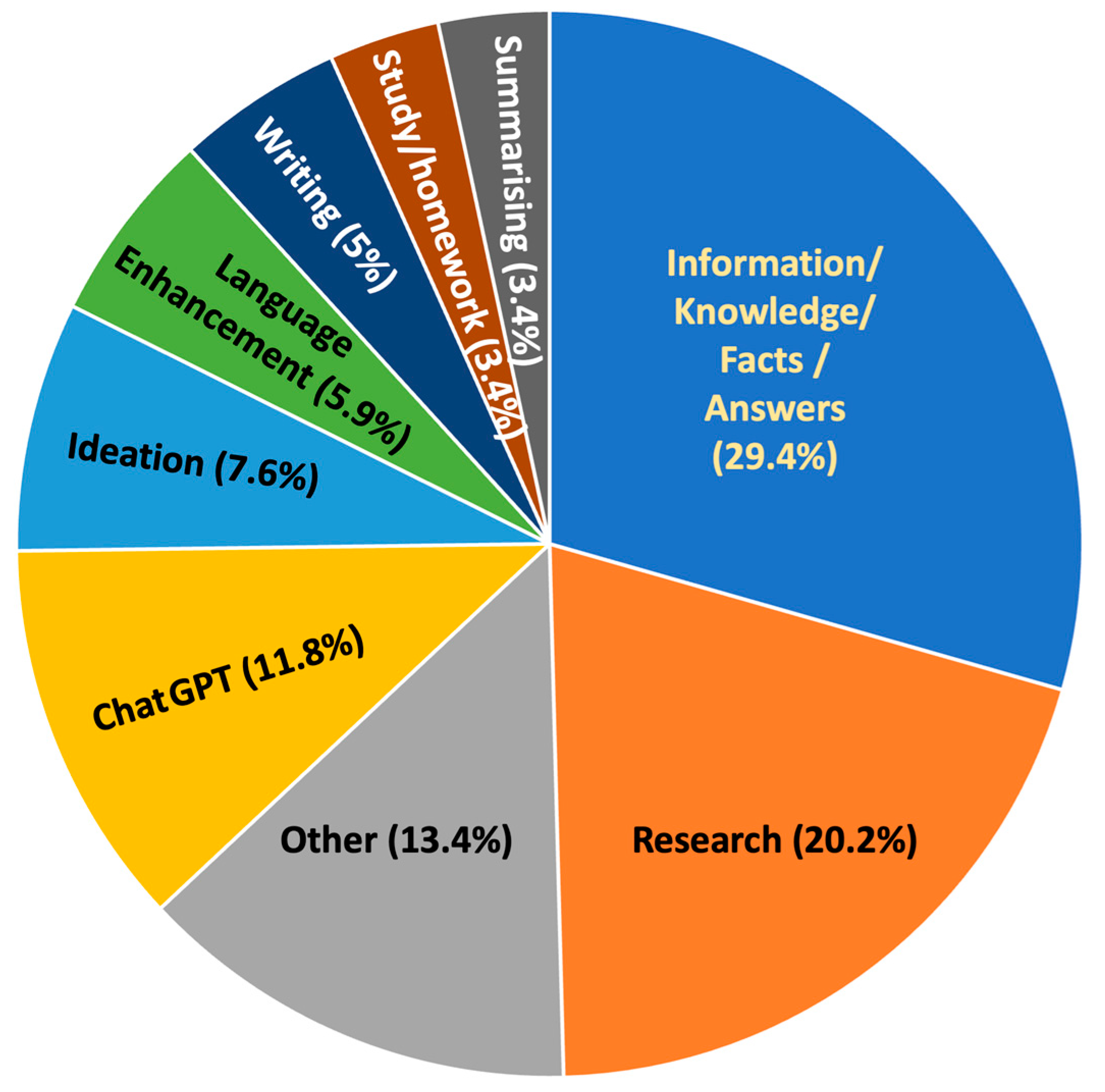

3.5. Usefulness of GenAI in Education

Students were surveyed to determine the usefulness of GenAI tools in education (Figure 9).

Students were asked to respond to the following question: “In the module, what aspect of using the AI tool(s) did you find most useful?” Figure 9 presents the coded responses for the three merged cohorts as a pie chart (119 coded responses).

The most useful aspect related to “information”, accounting for 29.4% of coded responses, followed by “research” (20.2%). The next most frequent code named a specific tool, “ChatGPT” (11.8%). The following quotations evidence statements by students depicting distinct qualitative codes.

A further 7.6% of coded responses indicated that the GenAI tool was useful for “ideation”, and 5.9% indicated “language enhancement”. Other codes, each accounting for 5% or fewer of responses, included “writing” (5%), “study/homework” (3.4%), and “summarising” (3.4%). With respect to less popular uses, the following quotes illustrate statements by students depicting the respective qualitative codes:

One student encapsulated the holistic usefulness of GenAI tools in education in the following statement: “University, staff and students should embrace and take advantage of these new technologies and breakthroughs and not shy away from them. Should be used more as an educational resource to help students grow as they would from other resources” (Figure 9).

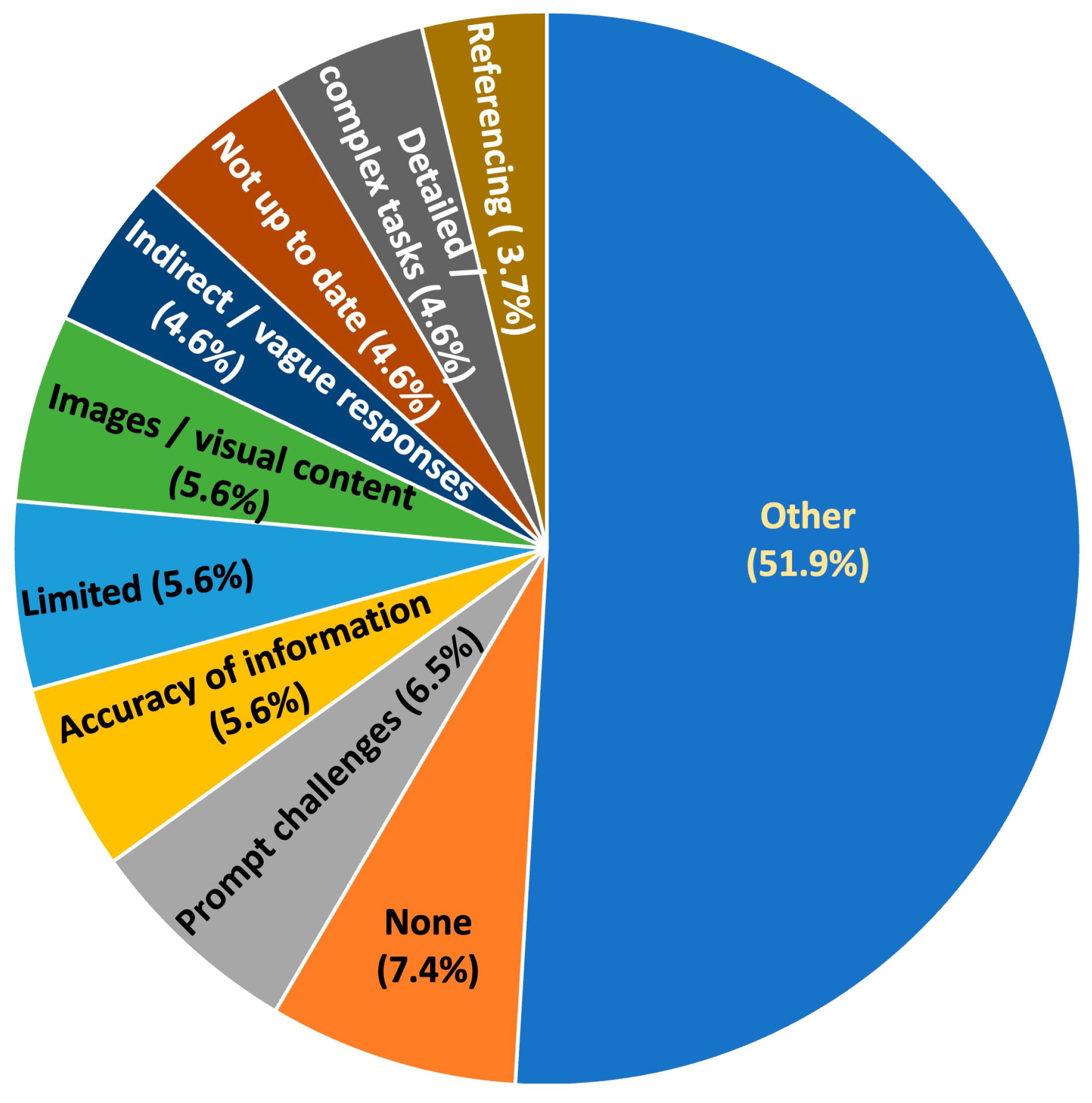

3.6. Limitations of GenAI in Education

We next asked students about their perceived limitations of AI in education (Figure 10).

Students were asked to respond to the following question: “In the module, what aspect of using the AI tool(s) did you find limiting/restrictive?” Figure 10 presents the coded responses for the three merged cohorts as a pie chart (108 coded responses).

Notably, many responses to this question were distinct and individual, so 51.9% were coded as “other”. This highlights a particular diversity of opinion about what students found most limiting or restrictive (Figure 10). The remaining codes were diverse, including “none” (7.4%), “prompt challenges” (6.5%), “accuracy of information” (5.6%), “images/visual content” (5.6%), “limited” (5.6%), “detailed/complex tasks” (4.6%), “indirect/vague responses” (4.6%), “not up to date” (4.6%), and “referencing” (3.7%). The following quotations illustrate the most frequent qualitative codes for the limitations of GenAI in students’ education:

“Had to be very specific with questions you ask” (code: prompt challenges).

“AI giving you inaccurate information” (code: accuracy of information).

“No visual content” (code: images/visual content).

“Sometimes it would not tell me the answer directly.” (code: indirect/vague responses).

“Research only to 2021” (code: not up to date).

With respect to less frequent limitations (fewer than three responses), the following quotes illustrate statements by students depicting the respective qualitative codes:

3.7. Ethical Concerns Using GenAI Tool(s)

Given the rapid emergence of GenAI tools and potential concerns about their ethical use, we asked students about their ethical concerns regarding GenAI.

Students were asked to respond to the following question: “What are your ethical concerns about using AI tools?” Coded responses from the three cohorts were merged and are presented as a pie chart (90 coded responses).

The most frequent specific responses concerned “plagiarism” (28.9%), “none” (24.4%), and “reliability/trust” (10%). Examples of students’ statements reflecting these ethical concerns are as follows:

Other codes that arose for this question included “suppression of thought” (5.6%), “human factor” (4.4%), “correct use” (3.3%), “intellectual property” (3.3%), “self-awareness” (3.3%), “fairness” (2.2%), “loss of jobs” (2.2%), “data privacy” (2.2%), “bias” (1.1%), “cheating” (1.1%), and “illegal activity” (1.1%). The following quotations illustrate student statements depicting these ethical concerns and the respective qualitative codes:

“Reduces the use of human interaction and thinking” (code: suppression of thought).

“We should embrace new technologies although we may lose the ‘human touch’” (code: human factor).

“Might help to do something illegal” (code: illegal activity).

“AI should be used in right ways” (code: correct use).

“Job opportunities in the future may be more limited” (code: loss of jobs).

Figure 11 summarises the coded responses.

The following section discusses our study findings in terms of their implications for teaching and learning of and for AI in higher education.

4. Discussion

4.1. Engagement Effectiveness with AI in Education

GenAI tools are actively being leveraged within the higher education sector for a variety of uses, including the promotion of student engagement, e.g., the “Cara” 24 h virtual assistant programme (Walsh, 2023). Our data provide threefold support for GenAI as an engagement strategy, covering engagement with peers, educators, and the subject/discipline. Based on the modal responses, the survey findings indicate that GenAI had a moderately positive effect on engagement with all three. On the other hand, respondents rarely rated GenAI’s contribution to engagement as a “very good effect”, suggesting room for improvement in the delivery of GenAI content within the testbed modules and, more broadly, in the higher education sector. This argument is strengthened by the fact that some respondents rated the contribution to engagement as “no effect”. This proportion might be reduced by more closely tailoring the GenAI activities used in the modules. Nevertheless, the majority of students favour GenAI as a source of engagement, although we believe this depends heavily on the intervention delivered during the module.

4.2. AI Engagement to Enhance Learning and Skills

AI’s advantage as a support may be more pronounced for novices than for expert designers. Novice designers, and especially managers, are generally trained only briefly in design thinking through business design approaches, whereas expert designers have been trained over years, most probably in environments where trainers play a crucial part in fostering productive, criticism-based conversation. Nevertheless, there is also an argument that GenAI tools are best leveraged by those with expert knowledge, e.g., through more refined prompt generation.

The survey results demonstrate positive contributions from the GenAI activities to learning enhancement and efficiency. The most frequent responses for learning enhancement and learning efficiency indicate a “good” level of enhancement and a “good” level of support, respectively. In both cases the response distributions are weighted towards the positive end of the scale. Few respondents rated GenAI’s contribution to learning enhancement or efficiency as “no enhancement/support”, which supports GenAI as a tool for learning enhancement and efficiency. GenAI’s contribution to critical thinking, on the other hand, was rated slightly less positively. The modal response indicates “some support” in developing critical thinking skills through the course and the GenAI intervention. Nevertheless, it is striking that 80% of students reported at least some development of critical thinking. Those opposing the use of AI tools fear that they may suppress original or critical thought and promote “intellectual laziness” (Zelihic, 2024). Our data suggest that this is not the case, or at least that students do not recognise it. Taken together, our findings suggest that supported GenAI exercises are useful both as technology tools and for promoting critical thinking approaches to complex problems.
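The modal ratings and the “at least some support” proportion discussed above are simple descriptive statistics on an ordered (Likert-type) scale. The sketch below shows how such figures might be computed in Python. The five scale labels, the helper names `mode_label` and `share_at_least`, and the response counts are all hypothetical illustrations. They are not the AI_ENGAGE survey data.

```python
from collections import Counter

# Hypothetical five-point scale, ordered from least to most positive.
# Labels and counts are illustrative only, not the study's survey data.
SCALE = ["no support", "little support", "some support", "good support", "very good support"]


def mode_label(responses):
    """Return the most frequent label; ties resolve to the less positive label."""
    counts = Counter(responses)
    # max() keeps the first maximal item it meets, i.e. the earliest in SCALE.
    return max(SCALE, key=lambda label: counts[label])


def share_at_least(responses, threshold):
    """Fraction of responses at or above `threshold` on the ordered scale."""
    cutoff = SCALE.index(threshold)
    hits = sum(1 for r in responses if SCALE.index(r) >= cutoff)
    return hits / len(responses)


# Invented example: 20 responses to a critical-thinking item.
example = (["no support"] * 2 + ["little support"] * 2 + ["some support"] * 8
           + ["good support"] * 6 + ["very good support"] * 2)

print(mode_label(example))
print(share_at_least(example, "some support"))
```

For this invented set, the mode is “some support” and 16 of the 20 responses (0.8) are at “some support” or above. This mirrors the shape of the statements in the text without reproducing the study’s data.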

4.3. Future Use of AI Tools by Students in University

Digital literacy is a key skill for navigating and thriving in today’s technology-driven society. Our data strongly suggest that students will continue to engage with and use GenAI tools within their higher education courses (Figure 6), gaining exposure to current and developing digital skills. Digital literacy is a good predictor of one’s ability to distinguish between facts and misinformation (World Economic Forum, 2024). It is therefore encouraging that students intend to continue engaging with GenAI tools, which may help protect them from job displacement and facilitate successful job transitions (World Economic Forum, 2024). In parallel, the third-level education system must evolve to work in harmony with students’ continued and increasing use of GenAI tools. The World Economic Forum’s document on the future of learning states that “students, teachers and administrators must receive necessary training and upskilling opportunities oriented to their needs to help them make the most productive use of AI systems”. It adds that “equity and inclusion considerations…central to the design of programmes”. Delcker et al. (2024) support this view, arguing that educators and higher education institutions are responsible for creating safe learning environments that foster points of contact with AI and opportunities to engage actively with it. The findings from this study can inform the creation and maintenance of creative, safe learning environments that provide such points of contact with AI.

4.4. Prior Experience of GenAI

Regarding prior experience with GenAI, this study’s data consist mainly of positive statements (Figure 8). The main negative descriptor was “unreliable”, a perception that existed prior to any experience of GenAI in education. Although many educators and students do not yet use GenAI, an abundance of positives is evident. These include a focus on skills, technology equity, reduced cognitive load, and social and emotional learning. Moreover, GenAI experience prior to third-level education adds considerably to these positives. Examples include cognate skills for technology and associated sectors, learning effectiveness and efficiency, and a competitive edge in technology sectors.

GenAI-assisted learning presents an exciting opportunity to aid students in their learning. Technology-enhanced learning is increasingly prevalent in higher education, and several studies surveying student perceptions of the use of technology in teaching reported very positive outcomes (Jump, 2011), including increased enjoyment in learning (Farah & Maybury, 2009).

4.5. Usefulness of GenAI in Education

In this study, one student encapsulated the holistic usefulness of GenAI tools in education in the following statement: “University, staff and students should embrace and take advantage of these new technologies and breakthroughs and not shy away from them. Should be used more as an educational resource to help students grow as they would from other resources”. Many teachers fear that GenAI will diminish their role in education or even replace them. Rather than replacing teachers, GenAI can support their work. It can help them design learning experiences that empower learners to be creative, think, solve real-world problems, and collaborate effectively, which GenAI systems cannot provide on their own. Tools such as ChatGPT can clearly be valuable resources, but only when used in combination with human expertise and collaboration. Education needs to offer opportunities that mimic the world of work, emphasising the value of multidisciplinary teams. GenAI can serve as a collaborator, supporting student learning through engagement, efficiency, and skill development (Gottweis et al., 2025).

GenAI facilitates “space” in the learning experience by enabling the development of key 21st-century skills (critical thinking and creative thinking). In this research study, the GenAI tool (ChatGPT) allowed teaching strategies to be adjusted. It also provided timely interventions to support struggling students through contextual learning tasks. In this regard, the design process, design tools and frameworks, and GenAI play similar roles. Each offloads some of the cognitive load to external media, freeing up innovators’ memory for synthesising insights and generating abductive hypotheses. The learning began with an introduction to the GenAI tool that addressed ethical misconceptions, such as its use as a tool for cheating. Applying the GenAI tool to support learning in various contexts (Physiology, Education, and Engineering) enabled students to learn without fear of judgement.

4.6. The Limitations of GenAI in Education

The limitations of GenAI in education span economic, environmental, and societal issues (UNESCO, 2019). With respect to economic issues, GenAI incurs costs due to its resource requirements (Staton & Murgia, 2023). Implementing GenAI in education requires significant investment in policy, infrastructure, training, and sustainability. This could further limit equity of access for underfunded educational institutions and their communities. Further societal issues include data privacy, reduced human interaction, bias, inequality, empathy, and ethical concerns, as discussed in the next section (Luckin, 2017; Schmelzer, 2024; World Economic Forum, 2020a).

However, approximately 30% of participants in this study identified “information” as the most useful aspect of GenAI in education. While GenAI lacks the ability to think critically and to be genuinely creative, using it in education may afford students more time to develop these skills. Developing these higher-order cognitive skills may thus help offset the societal and economic drawbacks described above. At the same time, we must apply both skills to ensure that GenAI and associated technologies do not further increase demand on data centres, which pose a serious threat to our planet.

4.7. Ethical Issues Using GenAI Tool(s)

Discussions of ethical issues relating to GenAI in education have increased significantly since the abrupt release of ChatGPT in November 2022. Ethical concerns can relate to:

(i) the generation of the large language models, e.g., “digital sweatshops” (Bartholomew, 2023);

(ii) bias in the datasets used to train the models and how that bias might affect human biases (Vicente & Matute, 2023);

(iii) accessibility, given the emergence of more and more paid subscriptions (De la Torre & Frazee, 2024);

(iv) accuracy (Alkaissi & McFarlane, 2023); and

(v) whether students can be trusted to use AI tools appropriately rather than substituting content generated by GenAI tools for original work (Cotton et al., 2023).

Some of these issues appeared in our survey when students were asked about their prior experience of GenAI (Figure 8), with 4.8% of responses coded as “unreliable”. Responses to this question were nonetheless generally positive, with terms such as “useful” and “helpful” cited frequently. In a question directly addressing ethical concerns, 28.9% of students cited “plagiarism” and 10% cited “reliability/trust”. Students also mentioned “suppression of thought” (5.6%) and “human factors” (4.4%) (Figure 11). Thus, our student population raised several of the ethical issues reported in the literature. Interestingly, 24.4% of students reported no ethical concerns (“none”). This is a sizable proportion, given that all student groups received at least basic information on the ethics of GenAI in session 1. Notably, students in the Physiology cohort identified as relatively GenAI-naive while also having more pronounced ethical concerns. It is therefore possible that the more experienced or proficient users become with GenAI, the less concerned they are about ethical issues. Taken together, these data support introducing training and education in the ethical use of GenAI within our universities.

4.8. Limitations and Future Directions

This study examined AI-focused interventions led by three individual educators in three distinct disciplines at three higher education locations. We consider this primarily advantageous for assessing the generalisability of our findings. However, distinct institutional cultures regarding the use of GenAI tools may have influenced the results. For example, access to ChatGPT in the Engineering cohort based in China required the use of a virtual private network (VPN). The study was also carried out only in Irish-affiliated higher education courses; however, we believe our findings are broadly generalisable to higher education.

This study focused on the free version of ChatGPT (GPT-3.5), which was the tool students most commonly used prior to our study in Autumn 2023. Since then, several other GenAI tools have emerged or become more popular, e.g., Microsoft Copilot, Google Gemini, Claude, GPT-4o, DALL-E, and Midjourney. These tools may remedy some of the challenges of ChatGPT 3.5 or introduce new ones. For example, they may widen educational inequality, as some of them require monthly paid subscriptions.

In addition to the limitations expressed above, the samples collected from the three cohorts varied in size. Of the 129 participants in total, 69 were from the module taught in China, while the remaining 60 responses were gathered from two different institutions in Ireland. Correspondingly, the Engineering cohort (69 respondents) was considerably larger than the ITE (44 respondents) and Physiology (16 respondents) cohorts. Response rates, and therefore how well each sample represents overall classroom opinion, also varied, ranging from approximately 60% to 79%. These variations indicate limitations in the size and composition of the sampling pool. Nevertheless, discipline-specific comparisons show significant and similar trends across various questions.
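The cohort response rates behind this range can be recomputed from the enrolment and response figures reported in Appendix A (Physiology 16/25, ITE 44/56, Engineering 69/114). The short Python sketch below assumes that the class size stated for each module is the appropriate denominator. The helper name `response_rate` is illustrative.

```python
# Survey responses and class sizes as reported in Appendix A.
cohorts = {
    "Physiology": (16, 25),
    "ITE": (44, 56),
    "Engineering": (69, 114),
}


def response_rate(responses, enrolled):
    """Percentage of enrolled students who completed the survey."""
    return 100 * responses / enrolled


for name, (responses, enrolled) in cohorts.items():
    print(f"{name}: {responses}/{enrolled} = {response_rate(responses, enrolled):.1f}%")

total_responses = sum(r for r, _ in cohorts.values())
total_enrolled = sum(e for _, e in cohorts.values())
print(f"Overall: {total_responses}/{total_enrolled} = "
      f"{response_rate(total_responses, total_enrolled):.1f}%")
```

Run as written, this prints rates of 64.0% (Physiology), 78.6% (ITE), and 60.5% (Engineering). The overall rate is 66.2% (129/195).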

Our study was carried out in a single university trimester in Autumn 2023. It will be interesting to assess the long-term impact of GenAI interventions on student learning as the use of GenAI tools increases in both taught and research-focused university courses. As GenAI tools become more widely used to promote engagement in the university setting, academic staff will need to be flexible in grading and assessing material generated in collaboration with GenAI tools. This includes providing appropriate instruction on the ethical use and citation of GenAI tools for academic integrity purposes.

5. Conclusions

Engagement in teaching and learning, which was empirically measured and fostered in this study, can help inform higher education systems and structures. It can also encourage approaches that address AI-related matters of learner engagement, efficiency, skill development, and ethics.

The present study could inform other similar studies. In addition, future studies could include the perspectives of other stakeholders (e.g., educators, leadership or management committees, other higher education community members, and industry professionals). A more inclusive approach that considers diverse voices and perspectives could enrich students’ understanding of their own reality and of the influence of external/contextual forces. Future studies could also report the data for each subject/discipline separately or comparatively.

Our data indicate that teacher-led introductions to GenAI tools have positive effects on student engagement with peers, with educators, and, most notably, with the subject being studied. Students also reported very positive effects on learning enhancement, learning efficiency, and skill development. Our data were collected from diverse teaching and learning contexts (different subjects, institutions, and educators). Taken together, they support the use of teacher-led GenAI-focused interventions, specifically with ChatGPT, in third-level education. This diversity in delivery is a particular strength of this study. Approaches like this can inform understanding of the opportunities, limitations, and ethical considerations of AI in education.

Author Contributions

Conceptualization, K.L., E.O. and E.P.C.; Methodology, K.L., E.O. and E.P.C.; Formal analysis, K.L., E.O. and E.P.C.; Investigation, K.L., E.O. and E.P.C.; Resources, K.L., E.O. and E.P.C.; Data curation, K.L., E.O. and E.P.C.; Writing—original draft, K.L., E.O. and E.P.C.; Writing—review & editing, K.L., E.O. and E.P.C.; Visualization, K.L., E.O. and E.P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This research was conducted in accordance with EHS Research Ethics, with approval received under code EHS 2023-09-02 on 2 September 2023.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| GenAI | Generative Artificial Intelligence |
| ChatGPT | Chat Generative Pre-Trained Transformer |
| ITE | Initial Teacher Education |

Appendix A. Intervention Details

Appendix A.1. Physiology

The GenAI intervention in Physiology took place at University College Dublin in trimester 1 of the academic year 2023/2024. A final (4th) year undergraduate module was identified for the intervention: PHYS40170 Fundamentals of Physiological Research (25 students). During this module, students develop a number of research-related skills designed to support their participation in a 12-week research study in trimester 2 of the academic year. A key deliverable of PHYS40170 is the preparation and submission of a literature review on a topic aligned with the student’s research study. In the first part of this module, students were encouraged to develop their literature review in the traditional manner, e.g., through literature searches in PubMed. In weeks 4 and 5 of the trimester, the students received 2 × 50 min tutorial-style sessions, which constituted their “AI intervention”.

Session 1:

- (i) A brief introduction to generative AI.

- (ii) An introduction to relevant ethical considerations associated with generative AI.

- (iii) Discussions on potential limitations and opportunities associated with generative AI, particularly in the context of writing a literature review (one of the main objectives of the module).

- (iv) A large part of the class time was devoted to a practical exercise in which students explored the responses of ChatGPT 3.5 to prompts relating to four key themes associated with writing a literature review: (1) content summarisation, (2) content generation, (3) content ideation, and (4) language enhancement.

- (v) The class concluded with a peer–peer discussion of student experiences during the practical exercise.

Session 2:

- (i) A brief recap of material covered in the first session.

- (ii) Further reflection on the opportunities and limitations associated with using generative AI in the context of a literature review. Reflection points included “did the AI tool perform the requested task?”, “how would students rate the quality of the work performed by the AI tool?”, “is the quality of the work performed by the AI tool of University standard?”, and whether the students “have any ethical concerns regarding the exercise”.

- (iii) Generating a “prompt list” of useful/helpful prompts for engaging with GenAI.

- (iv) Exploration of, and a practical exercise employing, other online tools (e.g., Litmaps) to support a literature review.

Approximately 1 week after session 2, students were invited to participate in the AI_ENGAGE survey hosted on the Qualtrics platform, where responses were recorded from 16 out of 25 students.

Appendix A.2. Initial Teacher Education

The GenAI intervention in Initial Teacher Education took place at the University of Limerick in semester 1 (Autumn) of the academic year 2023/2024. A third-year undergraduate module was identified for the intervention, WT4025 Wood Design and Technology 2 (56 students). During this module, through the design for context activity, students experience the complexities of designing with wood through a series of tests and experiments that examine the suitability of the material for the end-use environment.

A key deliverable of WT4025 is for student teachers to communicate the development of conceptual and practical design skills through the realisation of creative and innovative solutions to a global challenge (problem). In the first part (6 weeks) of this module, students were encouraged to integrate knowledge and skills with qualities of cooperative enquiry and reflective thought to develop design solutions. In the latter part (6 weeks) of this module, students develop craft and wood processing skills/techniques through an iterative reflective approach of design and realisation.

The 12-week semester engaged students in weekly 4 h experiential learning sessions (labs) that facilitated enquiry and reflective thought through a design thinking framework. The “GenAI intervention” was introduced to help students manage cognitive load during design thinking (problem solving).

Session 1:

- (i) A brief introduction to generative AI.

- (ii) An introduction to relevant ethical considerations associated with generative AI.

- (iii) Discussions on potential limitations and opportunities associated with generative AI, particularly in the context of design thinking.

- (iv) A large proportion of the lab time was devoted to a practical activity/task. Students first applied their existing knowledge or thought process (head). They then completed a “norm” task, using a familiar, habitual search tool such as Google to research global challenges (need finding) and support problem framing. Because the search tool typically returned an overload of information, students then engaged in an investigation, critically reflecting on the relevance of the research.

Session 2:

- (i) A quick recap of the first session.

- (ii) An overview of how to write effective prompts for generative AI.

- (iii) A large proportion of the lab time was devoted to a practical activity/task in which students engaged with ChatGPT using specific prompts to obtain targeted information.

- (iv) Students critically reflected on their own responses and on those from Google and ChatGPT, assessing their suitability (validity) and factualness (reliability).

- (v) The session concluded with a peer–peer discussion of student experiences during the practical exercise.

Students used ChatGPT whenever they needed or wanted it in the subsequent design thinking tasks of the module. After the key teaching and learning activities, towards the final weeks of the module, students were invited to participate in the AI_ENGAGE survey hosted on the Qualtrics platform, and 44 of the 56 students responded. This post-intervention survey captured their experience of using, and engaging with, GenAI tools in the module.

Appendix A.3. Civil Engineering

The final AI intervention linked to this research study took place in Civil Engineering in trimester 1, 2023/2024, as part of one of UCD’s joint international collaborations. This joint undergraduate degree programme is offered by the UCD School of Civil Engineering and the Chang’an University College of Transportation in Xi’an, China. The intervention was appended to the computer-aided design/modelling exercises of the 4th (final) year undergraduate module CVEN 4008W Bridge Engineering, which had a class size of 114 students. One aim of this course is to give students the technical know-how for modelling, simulation, and early-stage design decision-making of bridge structures using computer platforms. AI was introduced as a novel feature supporting this scheme, and students performed two exercises representing different decision/knowledge needs of bridge design and analysis. Although this is a joint degree programme, the class was taught face-to-face in China, which allowed the instructor to make use of the interactive classroom environment. One lecture, consisting of two sessions, was allocated to the exercises, and the session contents were as follows.

Session 1:

- (i) A brief introduction to generative AI.

- (ii) Examples of GenAI applications, with benchmark platforms that relate to engineering problems, i.e., text-to-text (ChatGPT), text-to-image (Midjourney), and text/image-to-video narrative (D-ID).

- (iii) Use case: a synthetic interview with a bridge contractor, followed by a discussion of the ethical and reliability/trustworthiness concerns of GenAI.

- (iv) An introduction to the two AI exercises, which addressed bridge analysis/design activities at different stages.

Session 2:

- (i) Introduction to Exercise 1, Preliminary Bridge Design Decisions. This included a quick review of bridge structural forms and the bridge types suited to different span lengths. The specific exercise was to regenerate, via ChatGPT prompts, the chart of feasible bridge type vs. span published by the Institution of Structural Engineers. Two question structures were introduced (i.e., “what bridge type is feasible for span X m?” vs. “what span range is feasible for Y bridge types?”), and their effectiveness was compared.

- (ii) Introduction to Exercise 2, Numerical Modelling of Bridges. This included a quick review of the computer assignment covering a single-span bridge’s behaviour via finite element analysis and the open-source modelling platform OpenSees. Given the key bridge parameters, ChatGPT was asked to develop an OpenSees model with two different boundary conditions. The model was compared with those developed in class, and the accuracy of the boundary conditions was checked live in class.

- (iii) A closing discussion on what went wrong and what went right in bridge design and analysis with generative AI support.
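One way to sanity-check the two boundary conditions of a generated single-span model is to compare it against a closed-form hand calculation. The sketch below is not the OpenSees model used in class. It assumes a prismatic Euler–Bernoulli beam under a uniformly distributed load w and uses the textbook midspan deflections: 5wL⁴/(384EI) for simple supports and wL⁴/(384EI) for fixed-fixed supports. The span, load, modulus and second moment of area values, and the helper name `midspan_deflection`, are hypothetical.

```python
def midspan_deflection(w, L, E, I, supports):
    """Midspan deflection (m) of a prismatic beam under uniform load w (N/m).

    Classical Euler-Bernoulli results:
      simply supported: 5 w L^4 / (384 E I)
      fixed-fixed:        w L^4 / (384 E I)
    """
    coefficients = {"simply_supported": 5.0, "fixed_fixed": 1.0}
    if supports not in coefficients:
        raise ValueError(f"unsupported boundary condition: {supports}")
    return coefficients[supports] * w * L**4 / (384.0 * E * I)


# Hypothetical single-span girder: 20 m span, 10 kN/m load,
# steel E = 200 GPa, second moment of area I = 0.05 m^4.
w, L, E, I = 10e3, 20.0, 200e9, 0.05
ss = midspan_deflection(w, L, E, I, "simply_supported")
ff = midspan_deflection(w, L, E, I, "fixed_fixed")

print(f"simply supported: {ss * 1000:.2f} mm")
print(f"fixed-fixed:      {ff * 1000:.2f} mm")
print(f"ratio:            {ss / ff:.1f}")
```

Whatever the parameter values, the simply supported case should deflect five times as much as the fixed-fixed case under this loading. A generated model that departs markedly from that ratio would merit a closer look at how its supports were defined.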

Students were invited to participate in the AI_ENGAGE survey, and 69 responses were received.

References

- Alkaissi, H., & McFarlane, S. I. (2023). Artificial hallucinations in ChatGPT: Implications in scientific writing. Cureus, 15(2), e35179. [Google Scholar] [CrossRef] [PubMed]

- Bartholomew, J. (2023, August 29). Q&A: Uncovering the labor exploitation that powers AI. Columbia Journalism Review. Available online: https://www.cjr.org/tow_center/qa-uncovering-the-labor-exploitation-that-powers-ai.php (accessed on 22 April 2025).

- Behar-Horenstein, L. S., & Niu, L. (2011). Teaching critical thinking skills in higher education: A review of the literature. Journal of College Teaching & Learning, 8(2). [Google Scholar] [CrossRef]

- Bezanilla, M. J., Fernández-Nogueira, D., Poblete, M., & Galindo-Domínguez, H. (2019). Methodologies for teaching-learning critical thinking in higher education: The teacher’s view. Thinking Skills and Creativity, 33, 100584. [Google Scholar] [CrossRef]

- Bognár, L., & Khine, M. S. (2025). The shifting landscape of student engagement: A pre-post semester analysis in AI-enhanced classrooms. Computers and Education: Artificial Intelligence, 8, 100395. [Google Scholar] [CrossRef]

- Cotton, D. R. E., Cotton, P. A., & Shipway, J. R. (2023). Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innovations in Education and Teaching International, 61(2), 228–239. [Google Scholar] [CrossRef]

- Crompton, H., & Burke, D. (2023). Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education, 20, 22. [Google Scholar] [CrossRef]

- Crown, S., Fuentes, A., Jones, R., Nambiar, R., & Crown, D. (2010, June 20–23). Ann G. Neering: Interactive chatbot to motivate and engage engineering students. 2010 Annual Conference & Exposition (pp. 15–181), Louisville, KY, USA. [Google Scholar]

- De la Torre, A., & Frazee, J. (2024). A call to action to address inequity in AI access. Inside higher education, opinion, view. Available online: https://www.insidehighered.com/opinion/views/2024/04/04/call-action-address-inequity-ai-access-opinion#:~:text=Put%20simply%2C%20students%20who%20are,tool%E2%80%94and%20they%20do%20not (accessed on 22 April 2025).

- Delcker, J., Heil, J., Ifenthaler, D., Seufert, S., & Spirgi, L. (2024). First-year students AI-competence as a predictor for intended and de facto use of AI-tools for supporting learning processes in higher education. International Journal of Educational Technology in Higher Education, 21, 18. [Google Scholar] [CrossRef]

- Ennis, R. H. (1962). A concept of critical thinking. Harvard Educational Review, 32(1), 81–111. [Google Scholar]

- Ennis, R. H. (1989). Critical thinking and subject specificity: Clarification and needed research. Educational Researcher, 18(3), 4–10. [Google Scholar] [CrossRef]

- Eubanks, V. (2018). Automating inequality: How high-tech tools profile, police, and punish the poor. St. Martin’s Press. [Google Scholar]

- Farah, C. S., & Maybury, T. (2009). Implementing digital technology to enhance student learning of pathology. European Journal Dentistry Education, 13(3), 172–178. [Google Scholar] [CrossRef] [PubMed]

- Gottweis, J., Weng, W. H., Daryin, A., Tu, T., Palepu, A., Sirkovic, P., Myaskovsky, A., Weissenberger, F., Rong, K., Tanno, R., Saab, K., Popovici, D., Blum, J., Zhang, F., Chou, K., Hassidim, A., Gokturk, B., Vahdat, A., Kohli, P., … Natarajan, V. (2025). Towards an AI co-scientist. arXiv, arXiv:2502.18864. [Google Scholar]

- Hennessy, A., & Murphy, K. (2025). Barriers to student engagement: A focus group study on student engagement of first-year computing students. Irish Educational Studies, 44, 183–200. [Google Scholar] [CrossRef]

- Holmes, W., Bialik, M., & Fadel, C. (2019). Artificial intelligence in education: Promises and implications for teaching and learning. Center for Curriculum Redesign. [Google Scholar]

- Holstein, K., McLaren, B. M., & Aleven, V. (2018, April 21–26). The classroom as a dashboard: Co-designing wearable cognitive augmentation for K-12 teachers. 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada. [Google Scholar]

- Jansson, D. G., & Smith, S. M. (1991). Design fixation. Design Studies, 12(1), 3–11. [Google Scholar] [CrossRef]

- Johnston, H., Wells, R. F., & Shanks, E. M. (2024). Student perspectives on the use of generative artificial intelligence technologies in higher education. International Journal of Education Integration, 20, 2. [Google Scholar] [CrossRef]

- Jump, L. (2011). Why university lecturers enhance their teaching through the use of technology: A systematic review. Learning, Media and Technology, 36(1), 55–68. [Google Scholar] [CrossRef]

- Laupichler, M. C., Aster, A., Schirch, J., & Raupach, T. (2022). Artificial intelligence literacy in higher and adult education: A scoping literature review. Computers and Education: Artificial Intelligence, 3, 100101. [Google Scholar] [CrossRef]

- Laurillard, D. (2012). Teaching as a design science: Building pedagogical patterns for learning and technology. Routledge. [Google Scholar]

- Leest, B., & Wolbers, M. H. J. (2020). Critical thinking, creativity and study results as predictors of selection for and successful completion of excellence programmes in Dutch higher education institutions. European Journal of Higher Education, 11(1), 29–43. [Google Scholar] [CrossRef]

- Liu, D., Huang, R., & Wosinski, M. (2016). Smart learning in smart cities. Lecture Notes in Educational Technology. Springer. [Google Scholar]

- Luckin, R. (2017). Towards artificial intelligence-based assessment systems. Nature Human Behaviour. [Google Scholar]

- Luckin, R., Holmes, W., Griffiths, M., & Forcier, L. B. (2016). Intelligence unleashed: An argument for AI in education. Pearson. [Google Scholar]

- Melo-López, V. A., Basantes-Andrade, A., Gudiño-Mejía, C. B., & Hernández-Martínez, E. (2025). The Impact of Artificial Intelligence on Inclusive Education: A Systematic Review. Education Sciences, 15(5), 539. [Google Scholar] [CrossRef]

- Mirdad, K., Daeli, O. P. M., Septiani, N., Ekawati, A., & Rusilowati, U. (2024). Optimizing student engagement and performance usingai-enabled educational tools. Journal of Computer Science and Technology Application, 1(1), 53–60. [Google Scholar]

- Montenegro-Rueda, M., Fernández-Cerero, J., Fernández-Batanero, J. M., & López-Meneses, E. (2023). Impact of the Implementation of ChatGPT in Education: A Systematic Review. Computers, 12(8), 153. [Google Scholar] [CrossRef]

- Nguyen, A., Kremantzis, M., Essien, A., Petrounias, I., & Hosseini, S. (2024). Enhancing student engagement through artificial intelligence (AI): Understanding the basics, opportunities, and challenges. Journal of University Teaching and Learning Practice, 21(6), 1–13. [Google Scholar] [CrossRef]

- Pane, J. F., Steiner, E., Baird, M. D., & Hamilton, L. S. (2017). Continued progress: Promising evidence on personalized learning. Santa RAND Corporation. [Google Scholar]

- Renaud, R. D., & Murray, H. G. (2008). A comparison of a subject-specific and a general measure of critical thinking. Thinking Skills and Creativity, 3(2), 85–93. [Google Scholar] [CrossRef]

- Schmelzer, R. (2024, May 28). How AI is changing the future of higher education. Forbes Magazine. Available online: https://www.forbes.com/sites/ronschmelzer/2024/05/28/how-ai-is-shaping-the-future-of-education/?sh=327cd2ecc9ec (accessed on 22 April 2025).

- Shute, V. J. (2008). Focus on formative feedback. Review of Educational Research, 78(1), 153–189. [Google Scholar] [CrossRef]

- Slade, S., & Prinsloo, P. (2013). Learning analytics: Ethical issues and dilemmas. American Behavioral Scientist, 57(10), 1510–1529. [Google Scholar] [CrossRef]

- Southworth, J., Migliaccio, K., Glover, J., Glover, J., Reed, D., McCarty, C., Brendemuhl, J., & Thomas, A. (2023). Developing a model for AI Across the curriculum: Transforming the higher education landscape via innovation in AI literacy. Computers and Education: Artificial Intelligence, 4, 100127. [Google Scholar] [CrossRef]

- Spector, J. M., & Ma, S. (2019). Inquiry and critical thinking skills for the next generation: From artificial intelligence back to human intelligence. Smart Learning Environments, 6(1), 8. [Google Scholar] [CrossRef]

- Staton, B., & Murgia, M. (2023, May 21). The AI revolution already transforming education. Financial Times. Available online: https://www.ft.com/content/47fd20c6-240d-4ffa-a0de-70717712ed1c (accessed on 22 April 2025).

- Sweller, J. (1988). Cognitive load during problem solving: Effects on learning. Cognitive Science, 12, 257–285. [Google Scholar] [CrossRef]

- UNESCO. (2019). Artificial intelligence in education: Challenges and opportunities for sustainable development. UNESCO. [Google Scholar]

- Vicente, L., & Matute, H. (2023). Humans inherit artificial intelligence biases. Scientific Reports, 13(1), 15737. [Google Scholar] [CrossRef]

- Walker, P., & Finney, N. (1999). Skill development and critical thinking in higher education. Teaching in Higher Education, 4(4), 531–547.

- Walsh, J. (2023, November 30). Three ways AI can support student success and well-being. Times Higher Education. Available online: https://www.timeshighereducation.com/campus/three-ways-ai-can-support-student-success-and-wellbeing (accessed on 22 April 2025).

- Wang, F., Hannafin, M. J., & Zhang, Z. (2018). Gamified learning environments: What we know and what we need to know. Educational Technology Research and Development, 66(3), 1–20.

- Wang, S., Wang, F., Zhu, Z., Wang, J., Tran, T., & Du, Z. (2024). Artificial intelligence in education: A systematic literature review. Expert Systems with Applications, 252, 124167.

- World Economic Forum. (2020a). Ethical AI: A framework for education and society. World Economic Forum.

- World Economic Forum. (2020b). Schools of the future: Defining new models of education for the fourth industrial revolution. World Economic Forum.

- World Economic Forum. (2024). Shaping the future of learning: The role of AI in education 4.0. World Economic Forum.

- Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education—Where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 39.

- Zelihic, M. (2024, February 8). AI’s impact on the learning environment at work. Forbes Magazine. Available online: https://www.forbes.com/sites/majazelihic/2024/02/08/ais-impact-on-the-learning-environment-at-work/ (accessed on 22 April 2025).

- Zhang, K., & Aslan, A. B. (2021). AI technologies for education: Recent research & future directions. Computers and Education: Artificial Intelligence, 2, 100025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).