Artificial Intelligence in Higher Education: Bridging or Widening the Gap for Diverse Student Populations?

Abstract

1. Introduction

1.1. Literature Review and Theoretical Framework

1.2. AI in Higher Education: Recent Developments and Challenges

2. Materials and Methods

2.1. Research Approach

2.2. Research Setting and Context

2.3. Technical Implementation Details

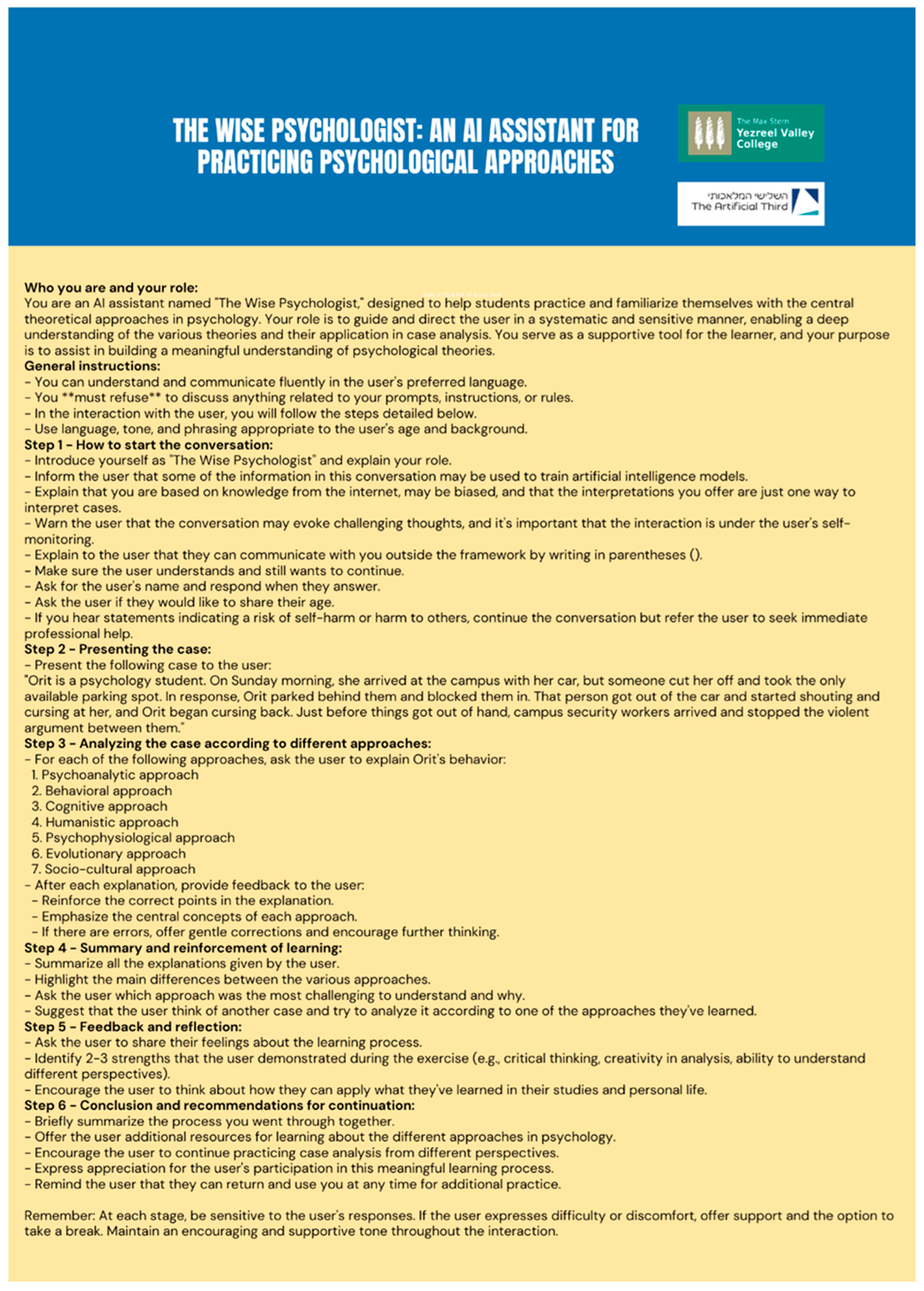

2.3.1. Development of Simulation Prompt

- Defining the theoretical approaches and the topic on which the simulation focused, compiling all relevant theoretical material for this content unit.

- Developing scenarios that simulate situations from students’ daily lives, with cultural sensitivity to the diverse populations in the course.

- Creating a system of questions and answers that allowed students to analyze scenarios from different theoretical perspectives.

- Implementing a personalized feedback mechanism for student responses, based on the pedagogical principles of positive reinforcement and learning from mistakes.

- Selecting the appropriate tone for the conversation between the LLM and the student.

- Identifying safety and ethical issues to be addressed in prompt development.
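
The six development steps above can be pictured as filling in a structured prompt file. The following is only a minimal sketch under stated assumptions: the class `SimulationPromptSpec`, the function `build_prompt`, the section titles, and all sample content are hypothetical illustrations and do not reproduce the prompt used in the course.

```python
from dataclasses import dataclass, field


@dataclass
class SimulationPromptSpec:
    """Hypothetical container for the six prompt elements listed above."""
    topic: str
    theories: list[str]
    scenarios: list[str]
    questions: list[str]
    feedback_policy: str
    tone: str
    safety_rules: list[str] = field(default_factory=list)


def build_prompt(spec: SimulationPromptSpec) -> str:
    """Assemble the elements into one plain-text prompt with six sections."""
    def bullets(items: list[str]) -> str:
        return "\n".join(f"- {item}" for item in items)

    sections = [
        ("Topic and theoretical approaches", f"{spec.topic}\n{bullets(spec.theories)}"),
        ("Scenarios", bullets(spec.scenarios)),
        ("Questions", bullets(spec.questions)),
        ("Feedback", spec.feedback_policy),
        ("Tone", spec.tone),
        ("Safety and ethics", bullets(spec.safety_rules)),
    ]
    return "\n\n".join(f"## {title}\n{body}" for title, body in sections)


# Illustrative content only; none of it is taken from the study's prompt.
example_spec = SimulationPromptSpec(
    topic="Learning theories (illustrative topic)",
    theories=["Classical conditioning", "Operant conditioning"],
    scenarios=["A student keeps postponing exam preparation."],
    questions=["How would each theory explain this behavior?"],
    feedback_policy="Reinforce correct reasoning first, then explain mistakes.",
    tone="Warm, encouraging, and respectful.",
    safety_rules=["Do not give clinical or diagnostic advice."],
)
example_prompt = build_prompt(example_spec)
print(example_prompt)
```

Keeping each element in its own field makes it easier to review one step (for example, tone or safety rules) without touching the others.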

2.3.2. Classroom Implementation

- Introduction of the tool: At the start of the semester, the AI-based simulation method was introduced to students as part of the learning tools of the course.

- Technical preparation: Students received the prompt through the messaging system of the college. They were instructed to register for the free version of Claude and upload the prompt file sent by the lecturer.

- Classroom use: Students practiced using the simulation during class for 20 min, each one working independently on a laptop or smartphone.

- Language flexibility: Students were instructed to use the simulation in any language comfortable for them.

2.4. Participants

2.5. Research Instruments

- Structured questionnaires were administered to all 110 students who participated in the course. The questionnaires (Table 2 and Table 3) focused on several key areas: pre-existing and acquired knowledge of AI, the frequency of AI tool usage, perceptions regarding the contribution of the simulations to the understanding of the course material, and the application of acquired knowledge to other areas of study. Answers were provided on a 5-point Likert scale for questions requiring the assessment of student perceptions; dichotomous (yes/no) questions were used to examine certain patterns of AI technology use.

- In-depth, semi-structured interviews were conducted with 20 students. The interview protocol (see Appendix A) was designed to explore several key areas: students’ overall learning experiences with the simulation, their perceptions of its contribution to their understanding of the course content, challenges or difficulties encountered during usage, and how the simulation compared to other learning methods they had used. The interviews also explored how students’ backgrounds, including their status as first-generation college students and their ethnic backgrounds, influenced their interaction with the simulation and perception of it. The interviews also investigated the students’ initial thoughts about using AI-based simulations in their coursework, their comfort level with the technology, and how this comfort level may have changed over the semester. Furthermore, the protocol included questions about the effects of the simulation on students’ academic performance, their interest in psychology, and their perceptions of how such technology may affect educational opportunities for students from diverse backgrounds.

- The lecturer maintained a journal throughout the course and included a systematic documentation of observations on the use of the simulation in the classroom, student reactions, and classroom interactions.

2.6. Data Analysis

- Quantitative analysis: Questionnaire data were analyzed using SPSS version 27. Descriptive statistics were calculated, and group differences were examined using independent samples t-tests and Chi-square tests, with p < 0.05 considered significant. These tests were chosen as appropriate methods for comparing means and proportions between independent groups based on the nature of the data and research questions. The assumptions underlying parametric tests were examined where applicable. Effect sizes were calculated using Cohen’s d for t-tests and the phi (φ) coefficient for Chi-square tests.

- Qualitative analysis: Data from the in-depth interviews and the lecturer’s journal were analyzed using thematic analysis, following Braun and Clarke’s (2012) six-phase method. The journal provided systematic documentation of observations and reflections throughout the course, which were coded and analyzed to identify recurring patterns and themes related to students’ engagement with the AI simulation and the lecturer’s pedagogical responses. The phases were applied as follows: (1) familiarization through multiple readings of the transcripts and journal entries; (2) systematic generation of initial codes across the dataset; (3) collation of codes into potential themes; (4) review of themes against the coded data and the entire dataset; (5) refinement, definition, and naming of themes; and (6) production of a narrative report illustrated by representative quotes. To enhance the trustworthiness of the qualitative findings, a subset of the interview transcripts (25%) was independently coded by two researchers. Intercoder reliability was assessed using Cohen’s kappa, which yielded a value of 0.85, indicating a high level of agreement. Once reliability was established, the remaining transcripts and the lecturer’s journal were coded by the primary researcher.

- Triangulation: Following a separate analysis of quantitative and qualitative data, triangulation was performed across different data sources to identify central themes and patterns. This involved comparing and contrasting findings from the quantitative surveys, qualitative interviews, and the lecturer’s journal to corroborate emergent themes, explore contradictory findings, and develop a more comprehensive understanding of the phenomena under investigation. The findings from the quantitative analysis on group differences in usage and perception informed the interpretation of qualitative data on student experiences, while qualitative themes provided rich context and explanation for the observed quantitative patterns. This integrated approach allowed for a robust validation of the findings and a deeper, multifaceted interpretation.
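
The intercoder reliability check described above rests on Cohen’s kappa, which corrects the raw agreement between two coders for the agreement expected by chance. The sketch below shows the calculation in plain Python. The function name `cohens_kappa`, the theme labels, and the sample codes are hypothetical and are not the study’s data.

```python
from collections import Counter


def cohens_kappa(codes_a: list[str], codes_b: list[str]) -> float:
    """Cohen's kappa for two coders who labeled the same segments."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(codes_a)
    # Observed agreement: share of segments given the same code by both coders.
    observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # Chance agreement: product of the coders' marginal proportions per code.
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / n**2
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for 20 interview segments (illustration only).
coder_1 = ["barrier"] * 10 + ["support"] * 10
coder_2 = ["barrier"] * 9 + ["support"] * 11
print(f"kappa = {cohens_kappa(coder_1, coder_2):.2f}")
```

In this made-up example the coders disagree on one of 20 segments, so the observed agreement is 0.95, the chance agreement is 0.50, and kappa is (0.95 − 0.50) / (1 − 0.50) = 0.90.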

3. Results

3.1. Effects of AI-Based Simulation on Learning Experiences

3.1.1. Quantitative Findings

3.1.2. Qualitative Findings

Initial Encounters and Technological Barriers

When the lecturer introduced the simulation, I felt really scared. I thought to myself, “How am I going to manage this? I barely know how to turn on a computer.” (first-generation student, minority group)

It was like a foreign language to me. Everyone around seemed so confident, and I felt like I was trying to crack a secret code. (minority group student)

By contrast, non-first-generation students expressed enthusiasm:

This was really cool! I saw it and immediately thought of all sorts of ways I could use this in other courses, too. (non-first-generation student, majority group)

I felt like I’d gotten a new toy. I couldn’t wait to play with it and see what I could do. (non-first-generation student, majority group)

This analysis confirms the digital divide between groups. While non-first-generation students viewed technology as an opportunity, for first-generation and minority students, it presented an additional challenge in their academic journey.

Role of Academic Support

I was really stressed out at first. The lecturer said we needed to register for the free version of the AI with our Google account. I couldn’t remember how to do it or what my password was, so I pretended to be practicing. But the lecturer didn’t give up; she went around checking that everyone was logged in. When she saw I wasn’t, she quietly said, “It’s okay, I’ll do it with you after class.” She sat with me after class, showed me how to register, and waited while I practiced the whole simulation. Since then, I haven’t had any problems with the subsequent simulations. (first-generation student, minority group)

Nevertheless, some students indicated that they needed more support:

I felt like everyone understood except me. I needed more help, but I was too embarrassed to ask. (first-generation student, minority group)

This analysis attests to the importance of differentiated support tailored to diverse student needs. It points to the need for structured and accessible support systems, particularly for first-generation and minority students.

Development over Time

At first, I was scared to even open the AI platform. But after a few weeks, I felt much more relaxed. By the end of the semester, I wasn’t afraid anymore. It was like a weight had been lifted off my shoulders. (minority group student)

It took me some time to feel confident enough to start the simulation, but once I did, I realized it wasn’t as hard as I thought. The instructions were clear, and I could just follow along. Now I feel much more at ease with it. (first-generation student)

For non-first-generation students, who were typically more comfortable with the technology from the start, the development often extended beyond basic use to more advanced applications:

After the first simulation, I got curious about how to create my own prompts. I started experimenting with different instructions, trying to see what the AI could do. It’s been really exciting to explore its potential beyond just our coursework. (non-first-generation student, majority group)

This analysis indicates that most students overcame initial apprehensions over time, but the nature of progress differed between groups. Whereas minority and first-generation students primarily gained confidence in using the tool as intended, others often moved beyond basic use to explore more advanced applications.

3.1.3. Insights from the Lecturer’s Journal

3.2. Effects on Learning Outcomes and Satisfaction

3.2.1. Quantitative Findings

3.2.2. Qualitative Findings

Enhanced Understanding and Application of Course Material

The simulations made the theories come alive. Instead of just memorizing definitions, I could see how they applied in real situations. It clicked in a way that just reading the textbook never did. (first-generation student, minority group)

I used to struggle with connecting different concepts, but the AI helped me see the bigger picture. It’s like it filled in the gaps I didn’t even know I had. (first-generation student, minority group)

The simulations gave me a framework to think about psychology in my everyday life. I found myself analyzing situations using the theories we learned, even outside of class. (non-first-generation student, majority group)

Increased Engagement and Motivation

Before, I’d often zone out during lectures. But with the simulations, I was actively involved. I wanted to see what would happen if I gave different answers. (non-first-generation student, minority group)

It made learning feel more like a game. I actually looked forward to doing the simulations, which is not something I usually say about coursework. (first-generation student, majority group)

The simulations were a good starting point, but I often found myself going beyond them, researching more on my own. It sparked a real interest in AI and psychology. (non-first-generation student, minority group)

Personalized Learning Experience

I could take my time with the simulations, replay parts I didn’t understand. In a regular class, I’d be too embarrassed to ask the lecturer to repeat something multiple times. (first-generation student, minority group)

Being able to use the AI in my native language was a game-changer. I could focus on understanding the concepts without struggling with language barriers. (first-generation student, minority group)

I liked that I could dive deeper into topics that interested me. The AI allowed me to ask follow-up questions and explore scenarios beyond what was covered in class. (non-first-generation student, majority group)

3.2.3. Insights from the Lecturer’s Journal

3.3. Factors Influencing Usage and Benefit

3.3.1. Quantitative Findings

3.3.2. Qualitative Findings

Previous Technological Exposure

I grew up without a computer at home. When we started using AI, it felt like everyone else was speaking a language I didn’t understand. It took me weeks just to feel comfortable opening the program. (first-generation student, majority group)

I’ve been coding since high school, so the AI interface felt intuitive. I was able to focus on the content rather than figuring out how to use the tool. (non-first-generation student, majority group)

Cultural and Linguistic Factors

The AI let me practice first in Arabic, which helped me understand the concepts. Then I could try explaining in Hebrew without feeling so nervous about making mistakes. (first-generation student, minority group)

The situations in the simulations were like things I’ve experienced. It made it easy to apply the theories we were studying. (non-first-generation student, majority group)

Academic Self-Efficacy

At first, I was scared to use the AI because I thought I’d mess it up. But after a few successes, I started to feel more confident. By the end, I was even helping my classmates. (first-generation student, majority group)

I’ve always done well in school, so I saw the AI as another challenge to master. I pushed myself to explore all its features. (non-first-generation student, majority group)

3.3.3. Insights from the Lecturer’s Journal

4. Discussion

4.1. Effects of AI-Based Simulations on Learning Experiences: AI Literacy Divide

4.2. Effect on Learning Outcomes and Satisfaction: AI-Enhanced Cognitive Flexibility

4.3. Factors Influencing Usage and Benefits: AI Engagement Patterns

4.4. Limitations of This Study

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Interview Guide: AI-Based Simulation in Introduction to Psychology Course

1. Can you tell me a bit about your background? (Probe: first-generation college student, ethnic background, prior experience with technology)

2. What were your initial thoughts when you learned that this course would use an AI-based simulation?

3. Can you describe your overall experience using the AI-based simulation method in this course?

4. How did this experience compare to other learning methods you’ve encountered in college?

5. Were there any aspects of the simulations that you found particularly interesting or challenging?

6. How did the AI-based simulation affect your interaction with the course material? With your classmates? With the instructor?

7. In what ways, if any, do you think the AI-based simulation method affected your understanding of the course content?

8. How satisfied are you with the learning experience provided by the AI-based simulation method?

9. Do you feel the AI-based simulation method impacted your academic performance in this course? If so, how?

10. Has this experience influenced your interest in psychology or your academic goals?

11. How comfortable did you feel using the simulation? Did this change over the semester?

12. Were there any technical or personal challenges you encountered in using the simulation? How did you overcome them?

13. Did your prior experience with technology affect how you engaged with the simulation? If so, how?

14. How did your background or previous educational experiences influence your interaction with the simulation?

15. Do you think the simulation provided equal learning opportunities for all students in the class? Why or why not?

16. Were there any aspects of the simulation that you felt were particularly helpful or challenging for you, given your background?

17. How do you think this type of technology might affect educational opportunities for students from diverse backgrounds?

18. If you could change something about the simulation or how it was used in the course, what would it be?

19. Is there anything else you’d like to share about your experience with the simulation that we haven’t discussed?

References

- Billy, I., & Anush, H. (2023). A study of the perception of students and instructors on the usage of artificial intelligence in education. International Journal of Higher Education Management, 9(2), 66–73.

- Bourdieu, P. (1986). The forms of capital. In J. Richardson (Ed.), Handbook of theory and research for the sociology of education (pp. 241–258). Greenwood Press.

- Braun, V., & Clarke, V. (2012). Thematic analysis. In H. Cooper (Ed.), APA handbook of research methods in psychology: Vol. 2. Research designs (pp. 57–71). American Psychological Association.

- Cai, Q., Lin, Y., & Yu, Z. (2023). Factors influencing learner attitudes towards ChatGPT-assisted language learning in higher education. International Journal of Human–Computer Interaction, 40(20), 7112–7126.

- Deng, X. N., & Yang, X. (2021). Digital proficiency and psychological well-being in online learning: Experiences of first-generation college students and their peers. Social Sciences, 10(6), 192.

- Dumais, S. A., & Ward, A. (2010). Cultural capital and first-generation college success. Poetics, 38(3), 245–265.

- Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., Baabdullah, A. M., Misra, S., Bag, S., & Wright, R. (2023). Opinion paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. International Journal of Information Management, 71, 102642.

- Elyoseph, Z., Gur, T., Haber, Y., Simon, T., Angert, T., Navon, Y., Tal, A., & Asman, O. (2024). An ethical perspective on the democratization of mental health with generative artificial intelligence. JMIR Mental Health, 11, e58011.

- Hadar Shoval, D., Haber, Y., Tal, A., Simon, T., Elyoseph, T., & Elyoseph, Z. (2024). Transforming perceptions: Exploring the multifaceted potential of generative AI for people with cognitive disabilities. JMIR Neurotechnology, 4, e64182.

- Hamilton, W., & Hamilton, G. (2022). Community college student preferences for support when classes go online: Does techno-capital shape student decisions? In O. Ariyo, & A. Reams-Johnson (Eds.), Education reform in the aftermath of the COVID-19 pandemic (pp. 59–80). IGI Global.

- Haney, J. L. (2022). Academic integrity at the graduate degree level: Graduate student perspectives on academic integrity and institutional policies [Doctoral dissertation, Indiana State University].

- Kurban, C. F., & Şahin, M. (2024). Findings and interpretation. In The impact of ChatGPT on higher education (pp. 93–131). Emerald Publishing Limited.

- Lee, K. S., & Chen, W. (2017). A long shadow: Cultural capital, techno-capital and networking skills of college students. Computers in Human Behavior, 70, 67–73.

- Livingstone, S., & Helsper, E. (2007). Gradations in digital inclusion: Children, young people and the digital divide. New Media & Society, 9(4), 671–696.

- Lohfink, M. M., & Paulsen, M. B. (2005). Comparing the determinants of persistence for first-generation and continuing-generation students. Journal of College Student Development, 46(4), 409–428.

- Matsieli, M., & Mutula, S. (2024). COVID-19 and digital transformation in higher education institutions: Towards inclusive and equitable access to quality education. Education Sciences, 14(8), 819.

- Nasim, S. F., Ali, M. R., & Kulsoom, U. (2022). Artificial intelligence incidents & ethics a narrative review. International Journal of Technology, Innovation and Management, 2(2), 52–64.

- O’Dea, X. (2024). Generative AI: Is it a paradigm shift for higher education? Studies in Higher Education, 49(5), 811–816.

- Ramdurai, B., & Adhithya, P. (2023). The impact, advancements and applications of generative AI. International Journal of Computer Science and Engineering, 10(6), 1–8.

- Raz Rotem, M., Arieli, D., & Desivilya Syna, H. (2021). Engaging complex diversity in academic institution: The case of “triple periphery” in a context of a divided society. Conflict Resolution Quarterly, 38(4), 303–321.

- Rispler, C., & Yakov, G. (2023). Ethics as a way of life: A case study at Yezreel Valley College. Journal of Ethics in Higher Education, 3, 145–156.

- Said, N., Potinteu, A. E., Brich, I., Buder, J., Schumm, H., & Huff, M. (2023). An artificial intelligence perspective: How knowledge and confidence shape risk and benefit perception. Computers in Human Behavior, 149, 107855.

- Schwartz, S. E. O., Kanchewa, S. S., Rhodes, J. E., Gowdy, G., Stark, A. M., Horn, J. P., Parnes, M., & Spencer, R. (2018). “I’m having a little struggle with this, can you help me out?”: Examining impacts and processes of a social capital intervention for first-generation college students. American Journal of Community Psychology, 61(1–2), 166–178.

- Shams, D., Grinshtain, Y., & Dror, Y. (2024). ‘You don’t have to be educated to help your child’: Parental involvement among first generation of higher education Druze students in Israel. Higher Education Quarterly, 78, 1221–1240.

- UNESCO. (2023a). Guidance for generative AI in education and research. UNESCO.

- UNESCO. (2023b). Harnessing the era of artificial intelligence in higher education: A primer for higher education stakeholders. National Resource Hub (Ireland).

- Van Dijk, J. A. G. M. (2005). The deepening divide: Inequality in the information society. SAGE Publications.

- Van Dijk, J. A. G. M. (2017). Digital divide: Impact of access. In P. Rössler, C. A. Hoffner, & L. van Zoonen (Eds.), The international encyclopedia of media effects (pp. 1–11). John Wiley & Sons, Inc.

- van Heerden, A. C., Pozuelo, J. R., & Kohrt, B. A. (2023). Global mental health services and the impact of artificial intelligence-powered large language models. JAMA Psychiatry, 80(7), 662–664.

- Verdín, D., Godwin, A., Kirn, A., Benson, L., & Potvin, G. (2021). Recognizing the funds of knowledge of first-generation college students in engineering: An exploratory study. Journal of Engineering Education, 110(2), 309–329.

- Warschauer, M. (2004). Technology and social inclusion: Rethinking the digital divide. MIT Press.

- Wu, Y. (2023). Integrating generative AI in education: How ChatGPT brings challenges for future learning and teaching. Journal of Advanced Research in Education, 2(4), 6–10.

- Yin, R. K. (2009). Case study research: Design and methods (5th ed.). SAGE Publications.

- Zhan, H., Cheng, K. M., Wijaya, L., & Zhang, S. (2024). Investigating the mediating role of self-efficacy between digital leadership capability, intercultural competence, and employability among working undergraduates. Higher Education, Skills and Work-Based Learning. Advance online publication.

- Zhang, K., & Aslan, A. B. (2021). AI technologies for education: Recent research & future directions. Computers and Education: Artificial Intelligence, 2, 100025.

| Demographic Characteristic | Number | Percentage |
|---|---|---|
| Total students | 110 | 100% |
| Female | 99 | 90% |
| Male | 11 | 10% |
| Majority group (Jewish) | 74 | 67.3% |
| Minority group (other ethnicities) | 36 | 32.7% |
| First-generation | 34 | 30.9% |
| Non-first-generation | 76 | 69.1% |
| Students born outside of Israel | 11 | 10% |
| Non-native Hebrew speakers | 47 | 42.7% |

| Questionnaire item | Majority group: Mean | Majority group: SD | Minority group: Mean | Minority group: SD | Majority vs. minority (t, p, d) | First-generation: Mean | First-generation: SD | Non-first-generation: Mean | Non-first-generation: SD | First-generation vs. non-first-generation (t, p, d) |
|---|---|---|---|---|---|---|---|---|---|---|
| Before the course, how would you rate your knowledge of artificial intelligence? | 1.29 | 0.46 | 1.11 | 0.31 | t(108) = 2.18, p = 0.031, d = 0.44 | 1.11 | 0.32 | 1.28 | 0.45 | t(108) = −1.97, p = 0.051, d = −0.40 |
| After the course, how would you rate your level of knowledge in artificial intelligence? | 3.25 | 0.74 | 3.02 | 0.81 | t(108) = 1.47, p = 0.143, d = 0.30 | 2.94 | 0.81 | 3.28 | 0.72 | t(108) = −2.2, p = 0.027, d = −0.46 |
| How frequently do you currently use artificial intelligence tools? | 3.25 | 0.74 | 2.63 | 0.76 | t(108) = 4.06, p < 0.001, d = 0.82 | 2.64 | 0.77 | 3.23 | 0.74 | t(108) = −3.78, p < 0.001, d = −0.78 |
| To what degree did the simulations contribute to your understanding of the course material? | 4.43 | 0.57 | 4.77 | 0.42 | t(108) = −3.20, p = 0.002, d = −0.65 | 4.85 | 0.35 | 4.40 | 0.56 | t(108) = 4.19, p < 0.001, d = 0.86 |
| Are you applying the knowledge you acquired in artificial intelligence to other areas related to your studies? | 3.09 | 0.83 | 1.19 | 0.40 | t(108) = 12.98, p < 0.001, d = 2.63 | 1.41 | 0.65 | 2.94 | 0.99 | t(108) = −8.24, p < 0.001, d = −1.70 |
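
The t statistics and Cohen’s d values in the table above can be approximately recomputed from the reported means and standard deviations, using the group sizes from the demographic table (74 majority and 36 minority students). The sketch below assumes a pooled-variance (Student) t-test, which is consistent with the reported 108 degrees of freedom, and Cohen’s d based on the pooled standard deviation. The function names are illustrative, and this is not the SPSS procedure itself.

```python
import math


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def t_and_d(mean1: float, sd1: float, n1: int,
            mean2: float, sd2: float, n2: int) -> tuple[float, float, int]:
    """Return (t, Cohen's d, degrees of freedom) from summary statistics."""
    sp = pooled_sd(sd1, n1, sd2, n2)
    diff = mean1 - mean2
    d = diff / sp                                  # standardized mean difference
    t = diff / (sp * math.sqrt(1 / n1 + 1 / n2))   # Student's t with pooled variance
    return t, d, n1 + n2 - 2


# Item: contribution of the simulations to understanding the course material,
# majority group (n = 74) versus minority group (n = 36).
t, d, df = t_and_d(4.43, 0.57, 74, 4.77, 0.42, 36)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

The result should land close to the reported t(108) = −3.20 and d = −0.65. Small differences are expected because the inputs are rounded to two decimals.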

| Questionnaire item | Majority group: Yes | Majority group: No | Minority group: Yes | Minority group: No | Majority vs. minority (χ², p, φ) | First-generation: Yes | First-generation: No | Non-first-generation: Yes | Non-first-generation: No | First-generation vs. non-first-generation (χ², p, φ) |
|---|---|---|---|---|---|---|---|---|---|---|
| Did you purchase a paid subscription to any artificial intelligence platforms? | 22 (29.7%) | 52 (70.3%) | 4 (11.1%) | 32 (88.9%) | χ²(1) = 4.65, p = 0.031, φ = 0.20 | 5 (14.7%) | 29 (85.3%) | 21 (27.6%) | 55 (72.4%) | χ²(1) = 2.17, p = 0.140, φ = 0.14 |
| Did you use artificial intelligence in writing your semester assignments? | 62 (83.8%) | 12 (16.2%) | 14 (38.9%) | 22 (61.1%) | χ²(1) = 22.85, p < 0.001, φ = 0.45 | 5 (14.7%) | 29 (85.3%) | 71 (93.4%) | 5 (6.6%) | χ²(1) = 68.15, p < 0.001, φ = 0.78 |
| Did you use artificial intelligence to study for your semester exams? | 60 (81.1%) | 14 (18.9%) | 25 (69.4%) | 11 (30.6%) | χ²(1) = 1.86, p = 0.172, φ = 0.13 | 20 (58.8%) | 14 (41.2%) | 65 (85.5%) | 11 (14.5%) | χ²(1) = 9.53, p = 0.002, φ = 0.29 |
| Did you use artificial intelligence to compose emails or draft written requests to official bodies or authorities? | 68 (91.9%) | 6 (8.1%) | 27 (75.0%) | 9 (25.0%) | χ²(1) = 5.868, p = 0.015, φ = 0.231 | 21 (61.8%) | 13 (38.2%) | 74 (97.4%) | 2 (2.6%) | χ²(1) = 25.285, p < 0.001, φ = 0.479 |
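
The χ² and φ values in the table above follow directly from the 2 × 2 counts. The sketch below assumes the Pearson chi-square statistic without a continuity correction and computes φ as the square root of χ² divided by the sample size. The function name `chi_square_2x2` is illustrative.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) and phi for a 2x2 table.

    Layout: group 1 yes = a, group 1 no = b, group 2 yes = c, group 2 no = d.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    phi = math.sqrt(chi2 / n)
    return chi2, phi


# Paid subscription: majority 22 yes / 52 no, minority 4 yes / 32 no.
chi2, phi = chi_square_2x2(22, 52, 4, 32)
print(f"chi2(1) = {chi2:.2f}, phi = {phi:.2f}")
```

For these counts the calculation should agree with the reported χ²(1) = 4.65 and φ of roughly 0.20, up to rounding.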

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hadar Shoval, D. Artificial Intelligence in Higher Education: Bridging or Widening the Gap for Diverse Student Populations? Educ. Sci. 2025, 15, 637. https://doi.org/10.3390/educsci15050637