1. Introduction

Language learning has undergone substantial transformation due to the rapid development of artificial intelligence (AI), which has made AI-assisted language applications (hereafter AiLA) effective tools for improving English and other language proficiencies. AiLA includes a range of digital platforms, such as online teaching platforms, translation services, speech recognition software, writing aids, and language learning applications. These technologies use real-time analysis, feedback, and AI-driven customization to adjust to the needs of learners and offer focused assistance (Chiu et al., 2023; Crompton & Burke, 2023). Even though AI has significantly transformed educational settings, questions remain regarding its effectiveness, particularly whether the duration of use, familiarity, satisfaction, and frequency of engagement with AiLA contribute to improved English learning outcomes.

According to earlier research, technology by itself cannot ensure learning success; rather, its efficacy depends on user engagement, cognitive processing, and meaningful pedagogical integration (Bax, 2003). The Zone of Proximal Development (ZPD) (Vygotsky, 1979) and constructivism (Piaget & Cook, 1952) are helpful theories for understanding how AiLA might scaffold learning through tailored exercises, adaptive feedback, and real-world language engagement (Chapelle, 2009; Godwin-Jones, 2018). However, learning results might not change if students engage with AiLA passively without receiving systematic guidance (Stockwell, 2012). This raises an important question: does students’ exposure to AiLA affect their learning outcomes when learning English?

According to a recent study on AI in education, harnessing the advantages of technology requires user motivation, engagement, and digital literacy (H. Lee, 2024). Consequently, students who lack structured learning strategies or digital skills may struggle, while others may find AiLA very helpful (Pérez-Paredes & Zhang, 2022; Uzun, 2024). Moreover, students’ preference for particular features does not always translate into improved language skills, because satisfaction with AI-based tools does not in itself produce tangible learning gains (Bernacki et al., 2020; Hubbard, 2021). These conflicting results highlight the need for more research into whether AiLA usage, familiarity, and satisfaction predict English learning outcomes.

This implies that there are still gaps in understanding how students in tertiary education use AiLA. Existing literature provides insights into AI-driven language learning; however, many studies explore general perceptions of AI-based learning (Y.-J. Lee et al., 2024; Losi et al., 2024; Ma & Wang, 2020; Qiao & Zhao, 2023; Yunina, 2023). This study addresses these gaps by investigating the relationship between students’ use of AiLA and their self-reported perceptions of improvement in English learning. It is important to note, however, that this study uses Likert-scale survey responses to measure students’ self-perceived learning improvement rather than direct evaluations of English language skills. The data therefore represent subjective perceptions rather than objectively measured language gains. This distinction is essential for interpreting the results, because self-reported improvement may not match actual gains in competence. Accordingly, four (4) research questions are formulated in this study.

Is there a significant correlation between the duration of AiLA usage and English learning outcomes?

Is there a significant correlation between satisfaction with AiLA and improvements in English learning outcomes?

What is the impact of familiarity with AiLA on measurable learning outcomes in English proficiency?

To what extent does the frequency of using AiLA affect students’ English learning outcomes?

This study draws on pedagogical paradigms including constructivism and the Zone of Proximal Development (ZPD) and adds to the expanding debate on AI’s role in education. The results offer educators useful insights into how AiLA affects English language acquisition, helping them decide how best to incorporate it into the curriculum. By understanding its effects, educators may design more engaging lessons that encourage participation and provide scaffolded support, which can improve language learning in the long run.

2. Literature Review

2.1. Categorizing AiLAs for Language Learning

The term AiLAs is used in this study to refer to AI tools, independent of their initial aim, that facilitate language acquisition through adaptive features.

Table 1 shows the AiLAs surveyed in the current study. Tools featuring, for example, real-time feedback (Grammarly) or voice interaction (Siri) are linked to the primary design aim of each tool and to its language learning value, to shed light on how they are pedagogically repurposed. In contrast with conventional digital board games (Ali et al., 2018), web-based platforms (Ali et al., 2022a), or standalone devices (Ali et al., 2020), AiLAs provide intelligent, dynamic, and responsive support that goes beyond static learning tools. With AI-driven scaffolding and feedback, even typical technology-based tasks, such as student-made movies (Ali et al., 2022b), can be greatly improved.

Table 1 shows the AI language learning tools evaluated by students in the current study.

2.1.1. AI-Assisted Language Applications (AiLAs)

In this work, we coined the term AI-assisted language applications, or AiLAs, to describe AI-driven applications intended to improve language acquisition. These apps use AI’s capacity to customize learning experiences to help users build both receptive (reading and listening) and productive (speaking and writing) skills. AiLAs can enhance language acquisition and engagement by analyzing individual needs in real time and creating adaptive learning paths (Chiu et al., 2023; Crompton & Burke, 2023).

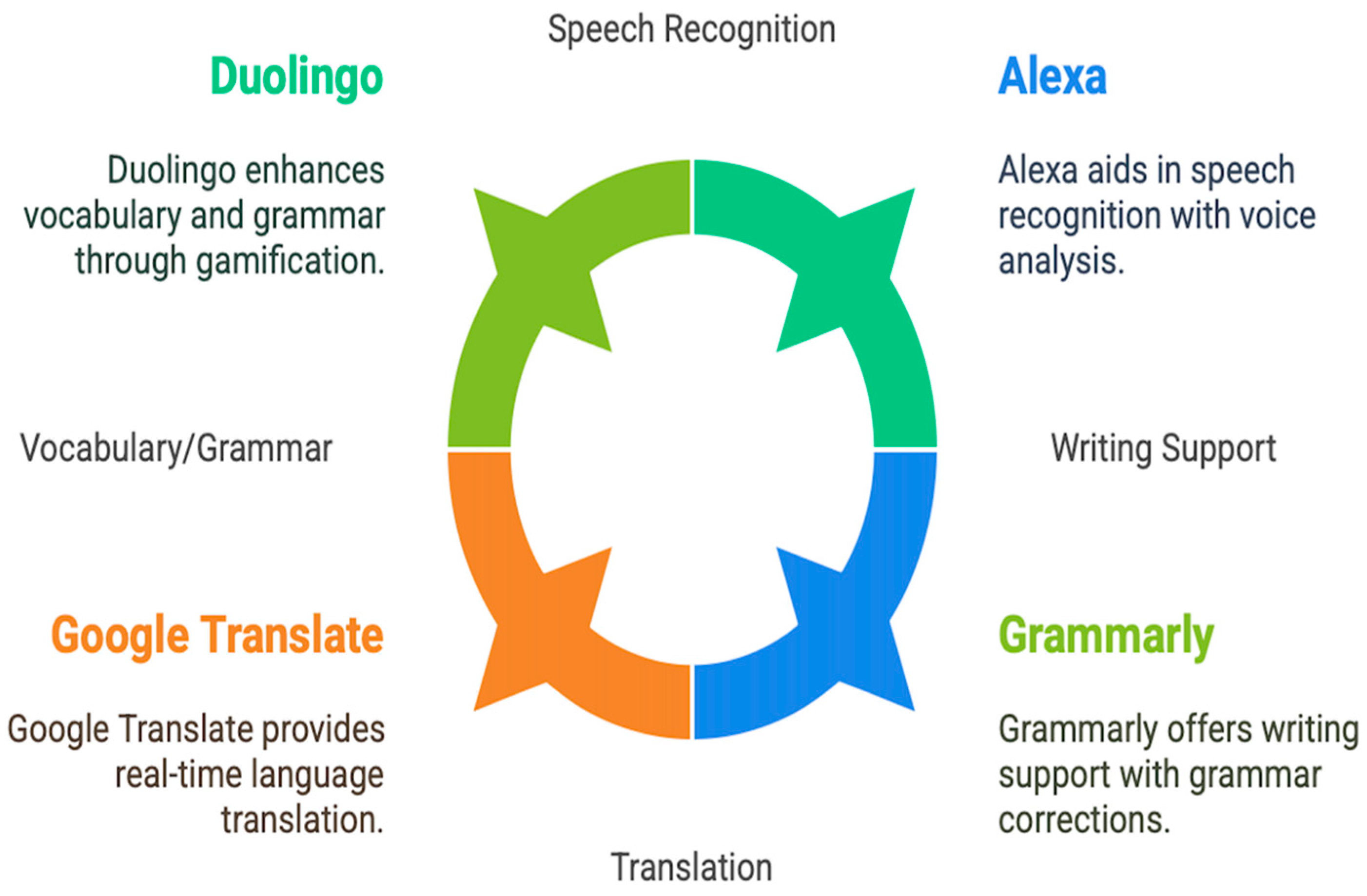

Scheme 1 divides AiLAs into five main categories, all of which improve language acquisition by utilizing AI-powered interaction, feedback, and personalization. They are the following:

Language Learning Apps (e.g., Duolingo, Babbel, and Rosetta Stone): Enhance vocabulary and grammar through gamification and adaptive instruction.

Speech Recognition Software (e.g., Siri and Alexa): Use AI-powered voice analysis to assist with conversational skills and pronunciation.

Language Translation Tools (e.g., Google Translate): Offer real-time audio and text translation to support language comprehension.

Writing Support Tools (e.g., Grammarly, Quillbot, and ChatGPT): Provide sentence rewording, grammar corrections, and writing improvements.

2.1.2. The Roles of AI in Language Learning

The roles AI plays in language learning reflect broader technological advancement. AI can make the language learning process more personalized, efficient, and engaging, so understanding its impact is vital to grasping its full potential for promoting language learning (Arani, 2024; Zakaria et al., 2024).

Moreover, the ability of AI to promote self-regulated learning, which contributes to its effectiveness as a learning assistant, essentially defines its role in language learning.

Qiao and Zhao (2023) emphasize that the effectiveness of AI in language learning stems from its capacity to support self-regulated learning, allowing learners to set their own goals and adapt their learning strategies. AiLA can analyze learners’ strengths and weaknesses, which permits a personalized learning experience. It also provides recommendations on what to improve in underdeveloped skills and how, which helps learners adjust their learning strategies accordingly. With this AI support, learners have greater control over their learning process, which serves as a primary motivator for achieving better learning outcomes (Moybeka et al., 2023).

Another role of AI in language learning is to support collaboration among learners. Avsheniuk et al. (2024) highlight that this collaboration exposes learners to real-world language use and encourages meaningful interactions among them. They further argue that AiLA can connect learners from around the world based on their preferences and levels, creating opportunities for authentic language exchange. Some AiLAs integrate collaborative tools such as group activities and multiplayer games that encourage learners to work in teams, share their ideas, and ultimately improve their communication skills (Ng et al., 2021).

Additionally, AI-assisted language learning serves as a learner’s virtual assistant. AI advancement, especially AiLAs acting as conversational agents, has altered the English language teaching landscape. A study by Zuhdy Idham et al. (2024) revealed that AI chatbots provided platforms for learners to practice the language through interactive conversations that the chatbots prepared. For instance, learners engage in conversations based on real scenarios such as ordering food for lunch, which helps them apply language skills in a real-world context.

Fitria (2021) found that AI-powered applications such as Grammarly assisted learners and significantly improved their writing skills. The application’s success lay in its use of advanced AI algorithms to analyze text and provide real-time feedback on various aspects of writing, including grammar, punctuation, style, and tone. This immediate feedback helped learners identify and correct errors as they wrote, leading to improved writing quality over time.

2.1.3. Understanding Learning Outcomes in Learning

Language learning involves both basic and higher-order thinking skills; hence, a well-structured learning framework should be integrated to optimize learning outcomes. Bloom’s Taxonomy (Bloom, 1956) is a relevant guide for structuring such a framework. In its widely used revised form, the taxonomy structures learning aims into six levels: remembering, understanding, applying, analyzing, evaluating, and creating. These levels progress from lower-order thinking skills (LOTS), such as recall and understanding, to higher-order thinking skills (HOTS), including analysis, evaluation, and creation, allowing learners to grow gradually from the most basic to more complex language skills. This progression suits language learning, which involves not just mastering vocabulary and grammar but also developing the ability to analyze, evaluate, and create with the language. Bloom’s Taxonomy therefore provides a framework that can help learners optimize their language learning outcomes.

Learning outcomes can also be discussed in the context of evaluating educational effectiveness. Douglass et al. (2012) created a framework to evaluate educational effectiveness and claimed that learning that follows this framework may elevate learning outcomes. The framework emphasizes three (3) aspects.

This categorization is essential for defining clear educational objectives and ensuring that assessments align with these objectives.

2. Learner self-evaluation of educational effectiveness: Self-assessment encourages students to reflect on their own learning, identify their strengths and weaknesses, and set personal goals for improvement.

3. Input–process–outcome model: The model delineates three critical stages in the educational process: inputs (the resources, curriculum, and instructional strategies), processes (the methods of teaching and learner engagement), and outcomes (the results of teaching and learning).

2.1.4. The Importance of Learning Outcomes in Learning English

Mahajan and Singh (2017) argued that identifying learning outcomes can enhance English language learning by establishing a well-organized approach to teaching and learning. They added that such an approach must have clear objectives, since objectives guide effective instruction. Moreover, clear objectives provide educators with a roadmap for their instruction (Habók & Magyar, 2018). By defining what students should know and be able to do by the end of a lesson or course, educators can plan their teaching more effectively. This clarity helps in selecting relevant materials and activities that align with the intended learning outcomes, making the learning process more focused and effective.

The importance of learning outcomes in learning English should also be seen from the learners’ perspective. This perspective is pivotal for ensuring that learners know how to achieve the targeted learning outcomes, and one significant aspect of it is their motivation to reach those outcomes. A study by Peng (2021) supported the idea that motivated learners show deep engagement with the language, leading to improved learning outcomes. Similarly, motivated students who actively use the language have been argued to achieve better learning results (Masruddin & Al Hamdany, 2023). This is because motivation is a powerful catalyst in the English language learning process, significantly enhancing learners’ ability to acquire and master new skills. When learners are motivated, they approach the language with enthusiasm and dedication, which facilitates the attainment of targeted learning outcomes.

2.1.5. The Role of AI in Addressing Challenges in Language Learning

One significant challenge in achieving learning outcomes is poor instructional design in language learning classrooms. Traditional pedagogical approaches may not suit diverse learners and digital natives, because outdated teaching methods fail to engage learners effectively, leading to suboptimal learning outcomes (Gautam & Gautam, 2021). When lessons are not structured to accommodate diverse learners, those learners disengage, their motivation wanes, and teachers have difficulty achieving the desired learning outcomes. Tertiary learners in particular need creative and purposeful instructional strategies to motivate them to achieve learning outcomes (Khanshan & Yousefi, 2020). Incorporating technology through differentiated instructional strategies has been shown to increase students’ motivation and attention (Krishan & Al-Rsa’I, 2023). AI, in particular, has the potential to address these challenges through tailored pedagogical approaches and interactive platforms, which help to increase students’ engagement in learning activities (Wei, 2023; Yang & Kyun, 2022). When learners feel that they belong in the learning experience, they are more likely to achieve targeted learning outcomes.

Another significant challenge at the tertiary level is limited infrastructure to meet current technological needs. Tadesse and Muluye (2020) argue that this issue worsened particularly after the onset of the pandemic, when many learners were unable to access learning materials due to a lack of infrastructure. Additionally, many educators lacked both the technology and the knowledge and skills needed to use it for language learning. This rapid transition forced unprepared educators to deliver online pedagogies, which resulted in ineffective instructional delivery and poor learning outcomes (Liu, 2023). To address this challenge, educational institutions and internet service providers could collaborate to subsidize internet costs so that online learning resources are accessible to all.

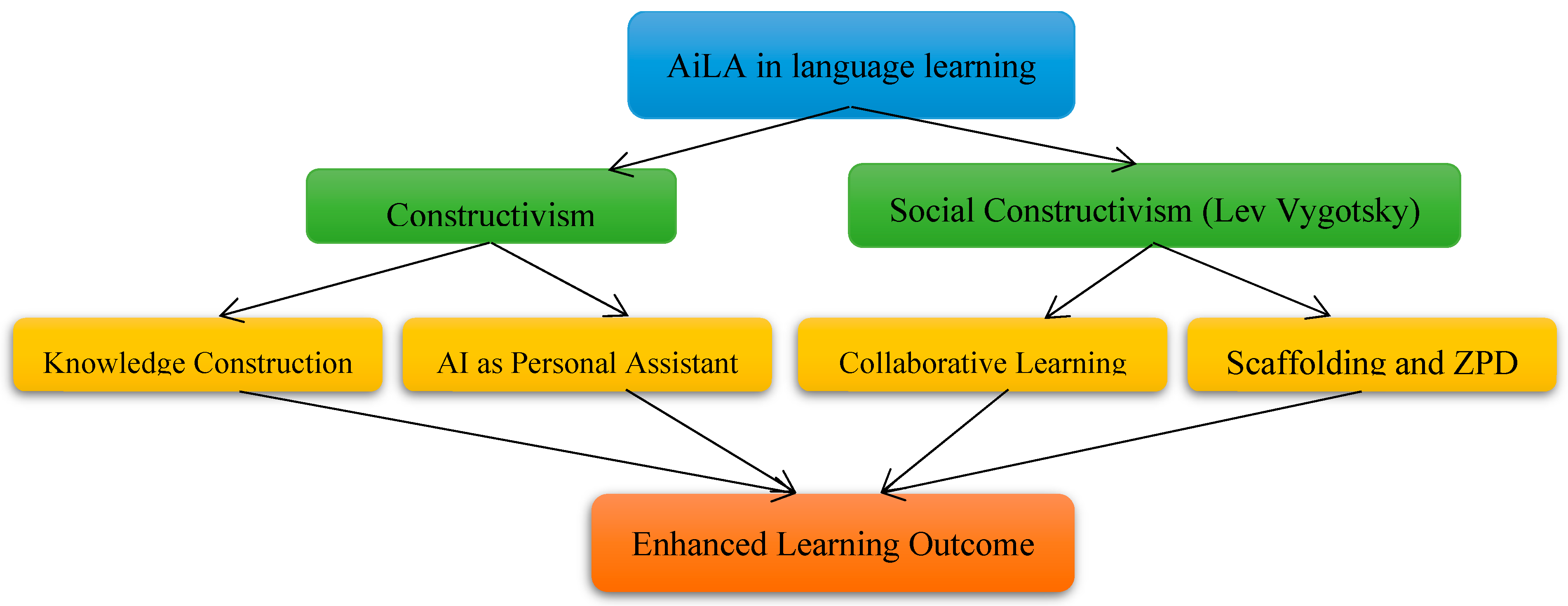

2.1.6. Theories of AiLA in Learning Outcomes of English Language Learning

The integration of AiLA in language learning has led to the emergence of various theoretical frameworks that clarify the mechanisms through which learning outcomes are enhanced. Constructivism, a theory developed by Jean Piaget, holds that students actively construct knowledge through experience (Vygotsky & Cole, 1978), and AiLAs support this idea. In particular, speech recognition software (Alexa, Siri) and language applications (Duolingo, Babbel) allow for interactive and self-paced learning. According to Lev Vygotsky’s social constructivism, the ZPD and interaction with others are essential elements of learning (Vygotsky & Cole, 1978). These elements are reflected in the scaffolding and adaptive learning offered by writing support tools such as Grammarly, Quillbot, and ChatGPT, as well as by translation systems such as Google Translate.

Moreover, AI has been described as a personal assistant that provides autonomous practice and immediate feedback, making learning more engaging and personalized (De la Vall & Araya, 2023; Dizon & Tang, 2020). The effectiveness of AiLAs in language learning is also shaped by collaborative learning, which is grounded in social constructivism. AI platforms can therefore serve as collaborative learning assistants that scaffold learners within their ZPD, the range between what they can do independently and what they can achieve with guidance (Huang et al., 2023). By providing adaptive scaffolding aligned with learners’ ZPD, AiLAs can facilitate deeper learning and engagement, making it more likely that learning outcomes are achieved.

The theoretical framework shown in Figure 1 proposes that AiLA enhances learning outcomes by promoting language acquisition through constructivism and social constructivism. AiLA functions as a personal assistant that facilitates knowledge construction, consistent with Jean Piaget’s constructivist theory, which emphasizes that learners actively develop knowledge through engagement. Lev Vygotsky’s social constructivism, in turn, emphasizes the value of collaboration and scaffolding within the ZPD.

3. Methodology

This section describes the research methodology employed in this study, including the components required to ensure a thorough and organized investigation. The research design, the study context and sample, and the validity and reliability of the research instrument are addressed. The data collection and analysis techniques are also described in detail, giving a clear account of how the study was carried out and how the findings were reached.

3.1. Research Design

This study used a quantitative research approach, gathering data through a survey on learning outcomes when students use AiLA to learn English. Quantitative research is suited to evaluating the efficacy of AiLA in language acquisition because it enables objective measurement of variables and systematic data collection (Creswell, 2014). The survey approach was selected for its effectiveness in collecting data from a large number of participants in a short time (Dörnyei, 2003). Furthermore, surveys provide uniformity and consistency in data collection while allowing researchers to identify trends and patterns in students’ learning experiences (Bryman, 2016). This method adds to our understanding of technology-enhanced language learning by offering insight into how AiLA affects learning outcomes.

3.2. The Context of the Study

In terms of linguistic context, the participants spoke Malay as their first language and English as their second language. Based on institutional assessments, their English proficiency levels (grades B to A) fell between the Independent User (B1) and Proficient User (C1) levels of the Common European Framework of Reference for Languages (CEFR). They were therefore in an intermediate-to-advanced band, which ensured relative homogeneity in baseline proficiency despite some slight variation within this range. This relatively consistent proficiency background allowed for a more focused exploration of their experiences with AI-assisted language applications. The participants used a variety of AiLAs on their own to aid their learning, such as Google Translate for vocabulary help, Grammarly for writing correction, Quillbot for paraphrasing, and ChatGPT for conversation practice; these resources were not formally included in the course curriculum. Accordingly, the study’s emphasis on perceived learning improvements rather than actual course outcomes reflects its goal of documenting students’ varying subjective experiences with AiLA.

This study conceptualized learning improvements through four key dimensions: (1) overall self-evaluated proficiency growth, (2) applied linguistic skill development, (3) increased communication confidence, and (4) progress toward self-identified language goals. Rather than reflecting institutional curricular aims, these dimensions arose from learners’ autonomous or directed interactions with AiLA inside and outside the classroom. By emphasizing learner viewpoints, this approach highlights the complementary roles of AI in formal and informal learning settings.

3.3. Research Instrument

This study employs a questionnaire as its research instrument, comprising five domains that investigate AiLA use from multiple perspectives: demographic data, learning outcomes, attitudes, self-efficacy, and test anxiety. For a more focused study, however, only the learning outcomes variable is used to ascertain whether AiLA predicts students’ learning outcomes in English. This variable is measured on a 5-point Likert scale ranging from “Strongly Agree” to “Strongly Disagree,” with ten (10) items in total. The construct “perceived English learning improvement” includes nine items that measure four facets: (1) Goal Attainment, in which participants assess whether the AI tools help them reach their own goals; (2) Skill Proficiency, which records self-reported improvements in vocabulary retention, grammar/syntax mastery, and overall comprehension; (3) Communication Confidence, which assesses increased self-assurance in using English; and (4) Proficiency Self-assessment, which reflects overall judgments of progress. The four facets and the nine items are as follows:

3.4. Validity and Reliability of Research Instrument

To ensure the questionnaire’s appropriateness, relevance, and clarity, content and face validity were established (Fraenkel et al., 2012; Yagmale, 2003). Face validity evaluates how well items subjectively reflect the intended constructs (Fraenkel et al., 2012), whereas content validity ensures an accurate representation of the constructs (Checkoway, 2004; Chiwaridzo et al., 2017). Before distribution, the questionnaire was checked for technical flaws, accuracy, and clarity. The principal author asked three (3) of her colleagues to confirm content validity, and she checked face validity. The reliability of the measured items is shown in Table 2, with a Cronbach’s alpha of 0.947.

Pallant (2005) states that values above 0.70 are acceptable, although values of 0.80 or higher are preferable. The questionnaire’s high reliability score therefore supports its suitability for data collection.
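For readers who want to reproduce a reliability figure like the one in Table 2, Cronbach’s alpha for k items is α = k/(k − 1) · (1 − Σσᵢ²/σ²_total). The sketch below is a minimal Python version of that formula, assuming each respondent’s answers have already been coded 1 to 5. The coding map (including the “Neutral” midpoint label), the function names, and the toy data are hypothetical and not taken from the study; the bands in `pallant_band` simply restate the Pallant (2005) thresholds quoted above.

```python
from statistics import pvariance

# Hypothetical coding of the 5-point scale; the midpoint label is an assumption.
LIKERT = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def cronbach_alpha(rows):
    """Cronbach's alpha for rows of item scores (one row per respondent)."""
    k = len(rows[0])
    if k < 2 or any(len(row) != k for row in rows):
        raise ValueError("need at least two items and equal-length rows")
    # Variance of each item across respondents.
    item_vars = [pvariance([row[j] for row in rows]) for j in range(k)]
    # Variance of respondents' total scores.
    total_var = pvariance([sum(row) for row in rows])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    # Population and sample variances give the same ratio, so either may be used.
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def pallant_band(alpha):
    """Interpretation bands as quoted from Pallant (2005) in the text."""
    if alpha >= 0.80:
        return "preferred"
    if alpha > 0.70:
        return "acceptable"
    return "below acceptable"


# Toy answers from three hypothetical respondents to two items.
answers = [
    ["Strongly Disagree", "Disagree"],
    ["Disagree", "Strongly Disagree"],
    ["Neutral", "Neutral"],
]
scores = [[LIKERT[a] for a in row] for row in answers]
print(round(cronbach_alpha(scores), 3))  # 0.667
print(pallant_band(0.947))               # preferred
```

With only three toy respondents the alpha value is purely illustrative; the reported 0.947 falls in the preferred band.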

3.5. Data Collection Procedures

Five (5) essential steps made up the structured approach to data collection, which ensured methodical data collection and analysis. They included data recording, data transfer, distribution of the survey, response gathering, and statistical analysis.

Table 3 shows the data collection procedures used in this study.

3.6. Data Analysis Procedures

The data were examined using appropriate statistical techniques. Pearson correlation was used for Research Questions 1 and 2, while multiple regression analysis and ANOVA were used for Research Questions 3 and 4. The strength of each relationship was interpreted using the effect size guidelines of Cohen et al. (2007), with the sign of each coefficient indicating its direction. Table 4 shows the interpretation of the correlation values used in the data analysis.
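The correlation step and the effect-size reading can be sketched as follows. Because the content of Table 4 is not reproduced here, the cut-offs below (0.10, 0.30, and 0.50 for small, medium, and large) are an assumption based on commonly used conventions; they do label the r = 0.402 reported for Research Question 1 as medium. The function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation for two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 pairs")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant variable has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


def describe_r(r):
    """Label strength (assumed 0.10/0.30/0.50 cut-offs) and direction (sign)."""
    size = abs(r)
    if size >= 0.50:
        strength = "large"
    elif size >= 0.30:
        strength = "medium"
    elif size >= 0.10:
        strength = "small"
    else:
        strength = "negligible"
    direction = "positive" if r > 0 else "negative" if r < 0 else "none"
    return strength, direction


print(describe_r(0.402))   # ('medium', 'positive')
print(describe_r(-0.068))  # ('negligible', 'negative')
```

Keeping strength and direction separate mirrors the distinction between the magnitude of a coefficient and its sign.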

4. Findings and Discussion

4.1. Research Question 1: The Relationship Between the Duration of AiLA Usage and English Learning Outcomes

The relationship between the length of time spent using AiLA and gains in English learning outcomes was examined using the Pearson product-moment correlation coefficient. Preliminary analyses were performed to ensure that the assumptions of normality, linearity, and homoscedasticity were not violated. The findings showed a medium positive correlation between the two variables (r = 0.402, n = 55, p = 0.002), suggesting that longer AiLA use is associated with higher learning outcomes.

Table 5 shows the results of the Pearson correlation between duration and learning outcomes of using AiLA.
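A reported coefficient can be cross-checked against its p-value, since under the null hypothesis t = r·√(n − 2)/√(1 − r²) follows a t distribution with n − 2 degrees of freedom. The sketch below is a standard-library-only illustration of that test. It integrates the t density numerically with Simpson’s rule instead of calling a statistics package, and its function names are hypothetical. With r = 0.402 and n = 55 it gives t ≈ 3.20 and a two-tailed p of roughly 0.002, consistent with the reported value. The same check with r = 0.037 gives p ≈ 0.79, in line with the p = 0.787 reported for Research Question 2.

```python
import math


def t_pdf(t, df):
    """Student's t density; log-gamma keeps the constant stable for large df."""
    log_c = (
        math.lgamma((df + 1) / 2)
        - math.lgamma(df / 2)
        - 0.5 * math.log(df * math.pi)
    )
    return math.exp(log_c) * (1 + t * t / df) ** (-(df + 1) / 2)


def two_tailed_p(t, df, steps=20_000):
    """P(|T| >= |t|), integrating the density over [0, |t|] with Simpson's rule."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 <= T <= |t|)
    return max(0.0, 1 - 2 * central_half)


def correlation_test(r, n):
    """t statistic and two-tailed p-value for a Pearson r from n pairs."""
    df = n - 2
    t = r * math.sqrt(df) / math.sqrt(1 - r * r)
    return t, two_tailed_p(t, df)


t, p = correlation_test(0.402, 55)
print(f"t = {t:.2f}, p = {p:.3f}")
```

Small discrepancies in the third decimal are expected because the published r values are themselves rounded.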

AiLAs have many positive impacts on language acquisition, highlighting their potential to enhance learners’ proficiency over time (Vadivel et al., 2023). While no study explicitly defines an optimal duration of use for maximizing learning outcomes, Chaudhary et al. (2024) suggest that increased engagement with AI tools contributes significantly to better learning outcomes, reporting a strong correlation between frequent use of such tools and enhanced learning outcomes. The ability to practice language in context improves retention and contributes to the development of language skills (Kolegova & Levina, 2024).

These studies support the idea that increased exposure to and practice with AiLA fosters long-term language acquisition, helping students achieve better learning outcomes. For tertiary-level students with easy access to AiLA tools, using them may help to improve their language skills and learning experiences. The present finding is also consistent with constructivism, since AiLA tools facilitate active, context-rich engagement that enables students to build knowledge through exposure and practice. Piaget and Cook (1952) held that experience and interaction are the foundations of learning, and the present findings are in line with this view. Nevertheless, to fully embody constructivist principles, AiLA tools may need to include more social and collaborative components.

4.2. Research Question 2: The Relationship Between Satisfaction with AiLA and Improvements in English Learning Outcomes

The results showed a very weak Pearson correlation (r = 0.037) between learning outcomes and satisfaction with AiLA, indicating that the two variables have very little in common. The significance value (p = 0.787) further shows that this relationship is not statistically significant, suggesting that satisfaction with AiLA does not meaningfully predict learning outcomes. These findings, based on 55 participants, imply that factors other than satisfaction with the AiLA tool itself may be affecting learning outcomes, as shown in Table 6.

Although this study found a weak correlation between satisfaction with AiLA and learning outcomes, other studies indicate that factors such as the reliability of AI tools (Fakhri et al., 2024), perceived ease of use (Kashive et al., 2021), and the versatility and multifaceted utility of AI tools (Pavlenko & Syzenko, 2024) contribute to high learner satisfaction and, eventually, to learning outcomes. Students may be satisfied with the immediate feedback and engagement that AiLA provides, but this does not necessarily translate into actual learning gains. In this study, satisfaction was not a strong predictor of improved learning outcomes; other factors, such as reliability, ease of use, and versatility, may play more significant roles in achieving them.

Constructivism provides a useful framework for understanding the weak relationship between satisfaction with AiLA tools and learning outcomes. Constructivism holds that knowledge is constructed through meaningful engagement and interaction with tools and environments, making learning an active process. Although satisfaction with AiLA tools may reflect favorable user experiences such as ease of use or instant feedback, constructivism suggests that deep learning outcomes depend on the extent to which students interact with and internalize the content, not on how satisfied they are with the tool itself. The weak relationship may also be explained by constructivism’s emphasis on social interaction and individual differences in learning, which AiLA tools might not adequately address. Therefore, although AiLA tools can encourage active learning, their potential to produce meaningful learning outcomes may depend more on their ability to enable deeper cognitive engagement and to adapt flexibly to a wide range of learners than on how well they elicit satisfaction.

4.3. Research Question 3: The Impact of Familiarity with AiLA on Measurable Learning Outcomes in English Proficiency

Overall, the multiple regression analysis for this research question shows that learning outcomes in English proficiency are not significantly affected by students’ familiarity with AiLA. The Pearson correlation between learning outcomes (Mean LO) and AiLA familiarity is very weak (r = −0.068), indicating a minimal negative relationship. Additionally, its p-value of 0.312, which is higher than the conventional cutoff of 0.05, indicates that the relationship is not statistically significant. The regression analysis likewise shows no predictive relationship, with an unstandardized coefficient for learning outcomes (Mean LO) of −0.062 and a p-value of 0.623. According to the model’s R² of 0.005, learning outcomes account for only 0.5% of the variance in AiLA familiarity. The adjusted R² is negative (−0.014), indicating that the model does not fit the data well. The ANOVA findings further confirm that the regression model is not statistically significant, with an F-statistic of 0.244 and a significance value of 0.623. Overall, the results imply that students’ English proficiency outcomes are not substantially affected by their familiarity with AiLA. These results are shown in Tables 7, 8, 9, and 10.
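Because a single unstandardized coefficient is reported, the model behind these tables appears to have one predictor, in which case R², adjusted R², and the ANOVA F follow directly from the correlation. The sketch below assumes exactly that, plus n = 55 as in the earlier research questions (the sample size is not restated in this section); the function name is hypothetical. With r = −0.068 it gives R² ≈ 0.005, adjusted R² ≈ −0.014, and F ≈ 0.246, close to the reported 0.244 given that r is rounded. Since F(1, n − 2) equals t², the regression p also matches the two-tailed correlation test (about 0.62), which suggests that the correlation p of 0.312 is a one-tailed value. With r = 0.037 the same function gives the adjusted R² of about −0.017 quoted in Section 4.4.

```python
def one_predictor_fit(r, n):
    """R², adjusted R², and F(1, n - 2) for a regression with one predictor."""
    if n < 3 or not -1 < r < 1:
        raise ValueError("need n >= 3 and |r| < 1")
    r2 = r * r  # with one predictor, R² is the squared Pearson r
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - 2)  # n - k - 1 with k = 1 predictor
    f_stat = (r2 / 1) / ((1 - r2) / (n - 2))  # regression df = 1, residual df = n - 2
    return r2, adj_r2, f_stat


r2, adj_r2, f_stat = one_predictor_fit(-0.068, 55)
print(round(r2, 3), round(adj_r2, 3), round(f_stat, 3))  # 0.005 -0.014 0.246
```

A negative adjusted R² simply means the penalty for the predictor outweighs the tiny share of variance it explains.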

These findings suggest that incorporating AiLAs into language instruction requires a pedagogically sound strategy. Instructors should create planned activities that encourage participation and active learning rather than merely making students more accustomed to AI tools (Reinders & Benson, 2017). According to Bax (2003), technology by itself cannot ensure better learning results; rather, its efficacy depends on its capacity to promote cognitive engagement and on meaningful pedagogical integration. AiLAs can be especially advantageous when they align with constructivist learning principles, which prioritize learner autonomy, cooperation, and active participation (Chapelle, 2009; Godwin-Jones, 2018). Studies suggest that AiLAs work best when they offer interactive learning opportunities, scaffolding, and adaptive feedback (Chen et al., 2020; Warschauer, 2011). However, students who lack appropriate instructional support may use the technology passively, which would limit their ability to improve their language skills (Garrett, 2009; Stockwell, 2012).

In addition, learners’ attitudes, motivation, and digital literacy influence how effective AiLAs are. Research indicates that students who hold more favorable attitudes toward technology and show greater self-efficacy tend to benefit more from digital learning resources (Zheng & Warschauer, 2017). Conversely, learners who lack digital literacy may find AiLAs difficult to use and navigate, which could result in poorer learning outcomes (Pérez-Paredes & Zhang, 2022; Uzun, 2024). How AiLAs are integrated into the curriculum is another important factor. Research highlights the need for clear instructional techniques to support students’ effective use of technology-enhanced language learning (TELL) (Hubbard, 2021; Levy, 2017). Access to AiLA without structured instruction risks fostering surface-level participation rather than deep learning (Bernacki et al., 2020). Therefore, to increase the effectiveness of integrating artificial intelligence into language classes, instructors need to provide activities that promote deep language processing, such as group projects, interactive debates, and real-world applications (Dörnyei & Ryan, 2015).

4.4. Research Question 4: The Impact of AiLA Satisfaction on Students’ English Outcomes

The results show that learning outcomes (Mean LO) are not significantly affected by user satisfaction with AiLA. The Pearson correlation between the two variables is 0.037, with a non-significant p-value of 0.393, indicating little to no association. The regression analysis similarly shows a negligible effect, with an unstandardized coefficient for satisfaction of 0.035 and a p-value of 0.787, indicating no meaningful predictive value. The model’s R² of 0.001 and its negative adjusted R² (−0.017) show that satisfaction accounts for almost none of the variation in learning outcomes. The ANOVA results (F = 0.074, p = 0.787) confirm that the model is not statistically significant. In summary, students’ satisfaction with AiLA has little to no influence on their learning outcomes. These findings are presented in Tables 11, 12, 13, 14, and 15.
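The correlation p-values reported for familiarity (0.312) and satisfaction (0.393) are almost exactly half the corresponding regression and ANOVA p-values (0.623 and 0.787). In a one-predictor model, the two-tailed test of Pearson’s r and the t test of the slope are the same test, so this pattern is consistent with the correlation p-values being one-tailed (SPSS, for instance, labels the correlation table in its regression output “Sig. (1-tailed)”). This is an inference, because the test direction is not stated in this section. The standard-library sketch below computes both p-values from r and n by integrating the Student t density with Simpson’s rule; the function names are hypothetical, and in practice a statistics package would normally be used instead.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm) * (1 + x * x / df) ** (-(df + 1) / 2)


def t_two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """P(|T| >= |t|), via Simpson's rule on the density over [0, |t|]."""
    b = abs(t)
    if b == 0.0:
        return 1.0
    if steps % 2:  # Simpson's rule needs an even number of intervals
        steps += 1
    h = b / steps
    total = t_pdf(0.0, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 <= T <= |t|) by symmetry of T
    return max(0.0, 1.0 - 2.0 * central_half)


def correlation_test(r: float, n: int) -> dict:
    """Test of Pearson's r; identical to the slope t test in a
    one-predictor regression. Illustrative sketch only."""
    if n <= 2 or not -1 < r < 1:
        raise ValueError("need n > 2 and -1 < r < 1")
    df = n - 2
    t = r * math.sqrt(df / (1 - r * r))
    p_two = t_two_tailed_p(t, df)
    return {
        "t": t,
        "F": t * t,                 # ANOVA F for the one-predictor model
        "p_two_tailed": p_two,      # same as the slope and ANOVA p-value
        "p_one_tailed": p_two / 2,  # directional test, observed sign assumed
    }
```

Calling `correlation_test(0.037, n)` with the study’s actual sample size should yield an F close to the reported 0.074, and, if the one-tailed reading is right, a two-tailed p close to 0.787 whose half matches the reported 0.393.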

The results show that user satisfaction with AiLA has no discernible impact on learning outcomes, indicating that satisfaction with the tool alone is not sufficient to improve language ability. This is consistent with research emphasizing that AI-powered educational tools must be intentionally integrated into pedagogy, beyond improvements to the user experience, to facilitate meaningful learning (Shoeibi et al., 2023). Vygotsky’s ZPD highlights the significance of guided learning, in which students receive appropriate scaffolding to close the gap between their current abilities and their potential development. Research on AI in education likewise suggests that successful engagement and information retention depend more on tailored learning experiences than on technological convenience alone (Lye, 2021).

Furthermore, studies on e-learning systems indicate that although user satisfaction may affect the adoption of a tool, it does not necessarily lead to better learning outcomes unless the technology offers structured and flexible support (Idkhan & Idris, 2023). Therefore, rather than functioning only as a passive language tool, AiLA should be designed in line with ZPD principles, providing interactive learning opportunities, scaffolding, and adaptive feedback that actively involve students in the learning process (Huang et al., 2023; Luckin & Cukurova, 2019).

5. Conclusions

The purpose of this study was to investigate how AiLA usage, familiarity, and satisfaction predict English learning outcomes. The results show that while longer AiLA usage is somewhat associated with better learning outcomes, familiarity and satisfaction with AiLA do not significantly affect language proficiency. Pedagogically, the results indicate that AI tools should be carefully integrated into language instruction through interactive, structured formats (such as scaffolded group projects or guided practice sessions) rather than used as passive supplements. Educators need intentional lesson designs that position AI as an active learning partner, and developers should prioritize pedagogically informed features, such as collaborative interfaces and adaptive feedback systems, to make these tools dynamic platforms for guided language development.

This study does, however, have certain limitations. Relying solely on quantitative data may not adequately capture the breadth of learners’ experiences and cognitive engagement with AiLA. In addition, the study was restricted to a particular sample, which may limit the generalizability of the findings to other language learning environments. To address these constraints, future studies should incorporate qualitative methods, such as case studies and interviews, to examine learners’ perceptions, challenges, and interactions with AiLA. Longitudinal studies could also shed more light on the long-term effects of AiLA on language development and clarify whether it can serve as a powerful and dynamic teaching aid.

Author Contributions

Conceptualization, Z.A. and S.K.B.; methodology, Z.A.; software, S.N.A.M.; validation, Z.A., S.K.B. and S.Z.M.; formal analysis, Z.A.; investigation, S.N.A.M.; resources, S.K.B.; data curation, S.N.A.M.; writing—original draft preparation, Z.A.; writing—review and editing, S.K.B.; visualization, S.Z.M.; supervision, Z.A.; project administration, S.K.B.; funding acquisition, S.K.B. All authors have read and agreed to the published version of the manuscript.

Funding

We extend our sincere gratitude to Universiti Malaysia Pahang Al-Sultan Abdullah and Multimedia University for their support through the matching grant (RDU233202 UMPSA and MMUE/230077).

Institutional Review Board Statement

The study was approved by the Research Ethics Committee of Multimedia University (approval number: EA0192025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy regulations.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ali, Z., Bakar, N., Ahmad, W., & Saputra, J. (2022a). Evaluating the use of web-based games on students’ vocabulary retention. International Journal of Data and Network Science, 6(3), 711–720.

- Ali, Z., Mohamad Ghazali, M. A. I., Ismail, R., Muhammad, N. N., Zainal Abidin, N. A., & Abdul Malek, N. (2018). Digital Board Game: Is there a need for it in language learning among tertiary level students? MATEC Web of Conferences, 150, 05026.

- Ali, Z., Mohamed Anuar, A. M., Mansor, N. A., Abdul Halim, K. N., & Sivabalan, K. (2020). A preliminary study on the uses of gadgets among children for learning purposes. Journal of Physics: Conference Series, 1529(5), 52055.

- Ali, Z., Mohd Ali, A. Z., Shamsul Harbi, H., Jariyah, S. A., Nor, A. N. M., & Sahar, N. S. (2022b). Help me to find a job: An analysis of students’ delivery strategies in video resume. Asian Journal of University Education (AJUE), 18(2), 489–498.

- Arani, S. M. N. (2024). Navigating the future of language learning: A conceptual review of AI’s role in personalized learning. Computer-Assisted Language Learning Electronic Journal, 25(3), 1–22.

- Avsheniuk, N., Lutsenko, O., Svyrydiuk, T., & Seminikhyna, N. (2024). Empowering language learners’ critical thinking: Evaluating ChatGPT’s role in English course implementation. Arab World English Journal, 1(1), 210–224.

- Bax, S. (2003). CALL—Past, present and future. System, 31(1), 13–28.

- Bernacki, M. L., Greene, J. A., & Crompton, H. (2020). Mobile technology, learning, and achievement: Advances in understanding and measuring the role of mobile technology in education. Contemporary Educational Psychology, 60, 101827.

- Bloom, B. S. (Ed.). (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook I: Cognitive domain. Longmans, Green & Co.

- Bryman, A. (2016). Social research methods (5th ed.). Oxford University Press.

- Chapelle, C. A. (2009). The relationship between second language acquisition theory and computer-assisted language learning. The Modern Language Journal, 93, 741–753.

- Chaudhary, A. A., Arif, S., Calimlim, F. J. R., Khan, S. Z., & Sadia, A. (2024). The impact of AI-powered educational tools on student engagement and learning outcomes at higher education level. Available online: https://www.researchgate.net/publication/382559080 (accessed on 12 February 2025).

- Checkoway, B. (2004). Research methods in occupational epidemiology (2nd ed.). Oxford University Press.

- Chen, L., Wang, X., & Qin, Z. (2020, October 17–18). The application of artificial intelligence in English teaching and learning. International Conference on Social Sciences and Big Data Application (pp. 159–163), Xi’an, China.

- Chiu, T. K. F., Xia, Q., Zhou, X., Chai, C. S., & Cheng, M. (2023). Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Computers and Education: Artificial Intelligence, 4, 100118.

- Chiwaridzo, M., Naidoo, N., & Ferguson, G. D. (2017). Content validity and test-retest reliability of a low back pain questionnaire in Zimbabwean adolescents. Archives of Physiotherapy, 7(1), 3.

- Cohen, L., Manion, L., & Morrison, K. (2007). Research methods in education. Routledge.

- Creswell, J. W. (2014). The selection of a research approach. In Research design: Qualitative, quantitative, and mixed methods approaches (4th ed., pp. 3–23). SAGE.

- Crompton, H., & Burke, D. (2023). Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education, 20(1), 22.

- De la Vall, R. R. F., & Araya, F. G. (2023). Exploring the benefits and challenges of AI-language learning tools. International Journal of Social Sciences and Humanities Invention, 10(01), 7569–7576.

- Dizon, G., & Tang, D. (2020). Intelligent personal assistants for autonomous second language learning: An investigation of Alexa. The JALT Call Journal, 16(2), 107–120.

- Dörnyei, Z. (2003). Questionnaires in second language research: Construction, administration, and processing. Lawrence Erlbaum Associates.

- Dörnyei, Z., & Ryan, S. (2015). The psychology of the language learner revisited. Routledge.

- Douglass, J. A., Thomson, G., & Zhao, C. M. (2012). The learning outcomes race: The value of self-reported gains in large research universities. Higher Education, 64(3), 317–335.

- Fakhri, M. M., Ahmar, A. S., Isma, A., Rosidah, R., & Fadhilatunisa, D. (2024). Exploring generative AI tools frequency: Impacts on attitude, satisfaction, and competency in achieving higher education learning goals. EduLine: Journal of Education and Learning Innovation, 4(1), 196–208.

- Fitria, T. N. (2021). Grammarly as AI-powered English writing assistant: Students’ alternative for writing English. Metathesis: Journal of English Language, Literature, and Teaching, 5(1), 65.

- Fraenkel, J. R., Wallen, N. E., & Hyun, H. H. (2012). How to design and evaluate research in education. McGraw-Hill.

- Garrett, N. (2009). Computer-assisted language learning trends and issues revisited: Integrating innovation. The Modern Language Journal, 93, 719–740.

- Gautam, D. K., & Gautam, P. K. (2021). Transition to online higher education during COVID-19 pandemic: Turmoil and way forward to developing country of South Asia-Nepal. Journal of Research in Innovative Teaching and Learning, 14(1), 93–111.

- Godwin-Jones, R. (2018). Second language writing online: An update. Language Learning & Technology, 22(1), 1–15.

- Habók, A., & Magyar, A. (2018). The effect of language learning strategies on proficiency, attitudes and school achievement. Frontiers in Psychology, 8, 2358.

- Huang, X., Zou, D., Cheng, G., Chen, X., & Xie, H. (2023). Trends, research issues and applications of artificial intelligence in language education. Educational Technology & Society, 26(1), 112–131.

- Hubbard, P. (2021). Evaluating CALL research: The state of the art. Available online: https://www.apacall.org/ (accessed on 12 February 2025).

- Idkhan, A. M., & Idris, M. M. (2023). The impact of user satisfaction in the use of e-learning systems in higher education: A CB-SEM approach. International Journal of Environment, Engineering and Education, 5(3), 100–110.

- Kashive, N., Powale, L., & Kashive, K. (2021). Understanding user perception toward artificial intelligence (AI) enabled e-learning. The International Journal of Information and Learning Technology, 38(1), 1–19.

- Khanshan, S. K., & Yousefi, M. H. (2020). The relationship between self-efficacy and instructional practice of in-service soft disciplines, hard disciplines and EFL teachers. Asian-Pacific Journal of Second and Foreign Language Education, 5(1), 1.

- Kolegova, I., & Levina, I. (2024). Using artificial intelligence as a digital tool in foreign language teaching. Bulletin of the South Ural State University. Series “Education. Educational Sciences”, 16(1), 102–110.

- Krishan, I. Q., & Al-Rsa’I, M. S. (2023). The effect of technology-oriented differentiated instruction on motivation to learn science. International Journal of Instruction, 16(1), 961–982.

- Lee, H. (2024). Perceptions, integration, and learning needs of ChatGPT among EFL teachers. Korea TESOL Journal, 20(1), 3–19.

- Lee, Y.-J., Davis, R. O., & Lee, S. O. (2024). University students’ perceptions of artificial intelligence-based tools for English writing courses. Online Journal of Communication and Media Technologies, 14(1), e202412.

- Levy, D. (2017). Online, blended and technology-enhanced learning: Tools to facilitate community college student success in the digitally-driven workplace. Contemporary Issues in Education Research (CIER), 10, 255.

- Liu, M. (2023). Exploring the application of artificial intelligence in foreign language teaching: Challenges and future development. SHS Web of Conferences, 168, 03025.

- Losi, M., Trinh, N., & Alharbi, S. (2024). Investigating EFL learners’ perceptions of using AI to enhance English vocabulary acquisition based on the Technology Acceptance Model. Frontiers in Language Studies, 4(1), 1–14.

- Luckin, R., & Cukurova, M. (2019). Designing educational technologies in the age of AI: A learning sciences-driven approach. British Journal of Educational Technology, 50(6), 2824–2838.

- Lye, L. (2021). Investigating the effects of mobile apps on language learning outcomes: A study on Duolingo. University of Florida.

- Ma, Q., & Wang, L. (2020). Modeling students’ perceptions of artificial intelligence-assisted language learning. Computer Assisted Language Learning, 33(3), 1–23.

- Mahajan, M., & Singh, M. K. S. (2017). Importance and benefits of learning outcomes. IOSR Journal of Humanities and Social Science, 22(03), 65–67.

- Masruddin, M., & Al Hamdany, M. Z. (2023). Students’ motivation in learning English in Islamic higher education. FOSTER: Journal of English Language Teaching, 4(4), 199–207.

- Moybeka, A. M. S., Syariatin, N., Tatipang, D. P., Mushthoza, D. A., Putu, N., Dewi, J. L., & Tineh, S. (2023). Artificial intelligence and English classroom: The implications of AI toward EFL students’ motivation. Jurnal Edumaspul, 7(2), 2444–2454.

- Ng, L. L., Azizie, R. S., & Chew, S. Y. (2021). Factors influencing ESL players’ use of vocabulary learning strategies in massively multiplayer online role-playing games (MMORPG). The Asia-Pacific Education Researcher, 31, 369–381.

- Pallant, J. (2005). SPSS survival guide: A step by step guide to data analysis using SPSS for windows (3rd ed.). University Press.

- Pavlenko, O., & Syzenko, A. (2024). Using ChatGPT as a learning tool: A study of Ukrainian students’ perceptions. Arab World English Journal, 1(1), 252–264.

- Peng, C. (2021). The academic motivation and engagement of students in English as a foreign language classes: Does teacher praise matter? Frontiers in Psychology, 12, 778174.

- Pérez-Paredes, P., & Zhang, D. (2022). Mobile assisted language learning: Scope, praxis and theory. Porta Linguarum Revista Interuniversitaria De Didáctica De Las Lenguas Extranjeras, 11–25.

- Piaget, J., & Cook, M. (1952). The origins of intelligence in children (Vol. 8). International Universities Press.

- Qiao, Y., & Zhao, J. (2023). Artificial intelligence-based language learning: Illuminating the impact on speaking skills and self-regulation in Chinese EFL context. Frontiers in Psychology, 14, 1255594.

- Reinders, H., & Benson, P. (2017). Research agenda: Language learning beyond the classroom. Language Teaching, 50(4), 561–578.

- Shoeibi, N., Sánchez, R. T., & Garcia-Penalvo, F. J. (2023). Evaluating the effectiveness of human-centered AI systems in education. AI Systems in Education, 155–186. Available online: https://repositorio.grial.eu/server/api/core/bitstreams/1ebbcd3f-f5d6-4d18-842b-1029a745b9f7/content (accessed on 28 December 2024).

- Stockwell, G. (2012). Computer-assisted language learning: Diversity in research and practice. Cambridge University Press.

- Tadesse, S., & Muluye, W. (2020). The impact of COVID-19 pandemic on education system in developing countries: A review. Open Journal of Social Sciences, 8(10), 159.

- Uzun, L. (2024). Exploring the intersection of technology and EAP in the digital age. In Teaching English for academic purposes: Theory into practice (pp. 345–378). Springer.

- Vadivel, B., Shaban, A. A., Ahmed, Z. A., & Saravanan, B. (2023). Unlocking English proficiency: Assessing the influence of AI-powered language learning Apps on young learners’ language acquisition. International Journal of English Language, Education and Literature Studies (IJEEL), 2, 8.

- Vygotsky, L. S. (1979). Mind in society: The development of higher psychological processes. Harvard University Press.

- Vygotsky, L. S., & Cole, M. (1978). Mind in society: Development of higher psychological processes. Harvard University Press.

- Warschauer, M. (2011). Learning in the cloud. Teachers College Press.

- Wei, L. (2023). Artificial intelligence in language instruction: Impact on English learning achievement, L2 motivation, and self-regulated learning. Frontiers in Psychology, 14, 1261955.

- Yagmale, F. (2003). Content validity and its estimation. Journal of Medical Education, 3, 25–27. Available online: https://www.sid.ir/en/Journal/ViewPaper.aspx?ID=33688 (accessed on 12 February 2025).

- Yang, H., & Kyun, S. (2022). The current research trend of artificial intelligence in language learning: A systematic empirical literature review from an activity theory perspective. Australasian Journal of Educational Technology, 38(5), 180–210.

- Yunina, D. (2023). Students’ perception of the impact of AI generative tools in learning the English language. International Journal of Language Education, 7(2), 45–60.

- Zakaria, N., Lim, G. F. C., Jalil, N. A., Anuar, N. N. A. N., & Aziz, A. A. (2024). The Implementation of personalised learning to teach English in Malaysian low-enrolment schools. SHS Web of Conferences, 182, 01011.

- Zheng, B., & Warschauer, M. (2017). Epilogue: Second language writing in the age of computer-mediated communication. Journal of Second Language Writing, 36, 61–67.

- Zuhdy Idham, A., Muhammadiyah Barru, U., & Abd Rajab, I. (2024). Navigating the transformative impact of artificial intelligence on English language teaching: Exploring challenges and opportunities. Jurnal Edukasi Saintifik, 4(1), 8–14.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).