Generative Artificial Intelligence in Education: Insights from Rehabilitation Sciences Students

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Participants

2.3. Survey Development

2.4. Data Management and Analysis

3. Results

3.1. Participants

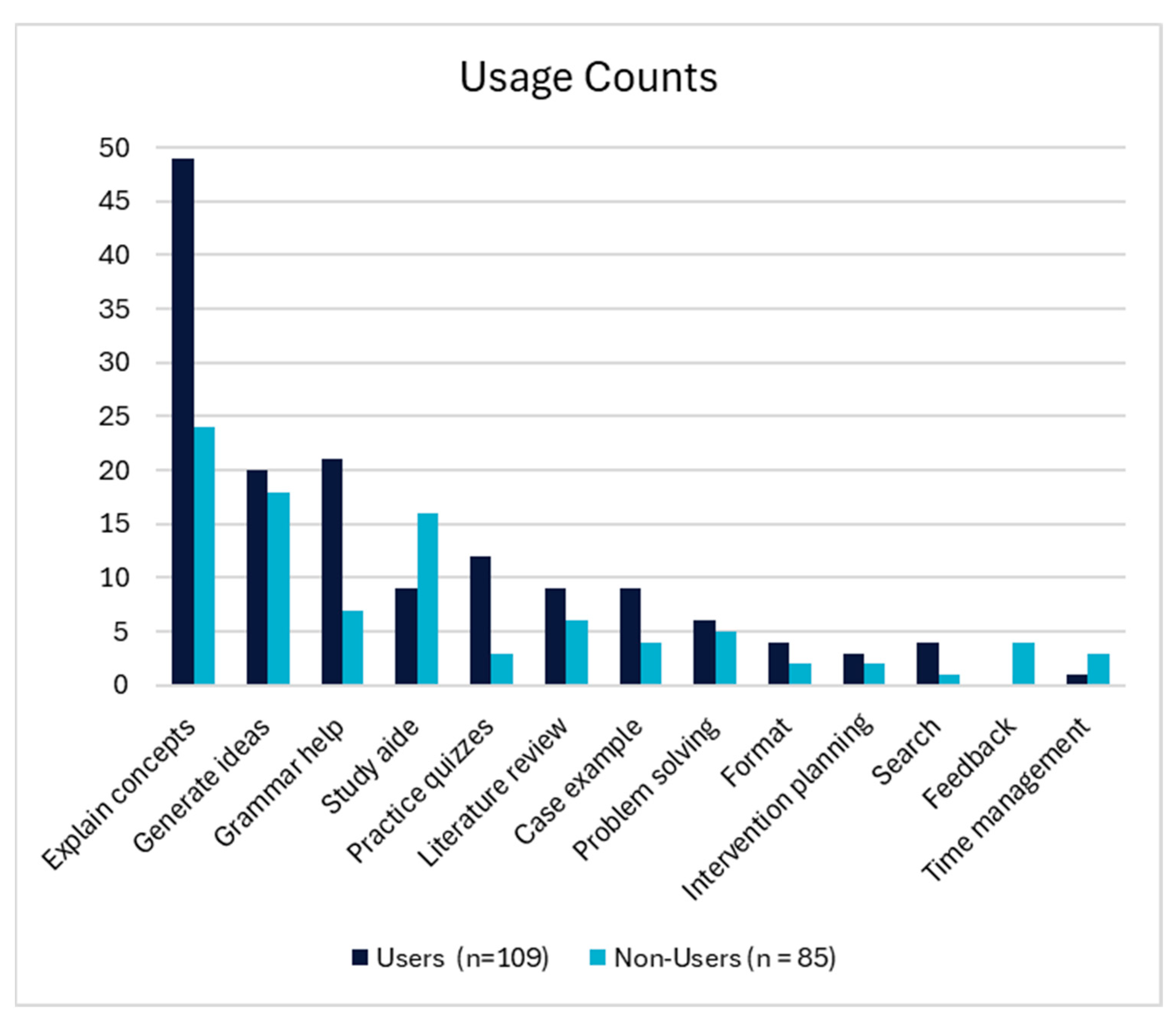

3.2. Use of GenAI

3.3. Perceptions of GenAI

3.4. Facilitators and Knowledge of GenAI

4. Discussion

5. Limitations

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | All | Users | Non-Users |
|---|---|---|---|
| Use of AI n (%) | N = 196 | n = 110 | n = 86 |
| All the time | 2 (1.0%) | 2 (1.8%) | - |
| Most of the time | 10 (5.1%) | 10 (9.1%) | - |
| Some of the time | 98 (50.0%) | 98 (89.1%) | - |
| None of the time | 86 (43.9%) | - | 86 (100%) |
| Age mean (SD) | N = 169 | n = 96 | n = 73 |
| | 23.0 (2.7) | 22.9 (2.5) | 23.1 (2.8) |
| Gender Identity n (%) | N = 175 | n = 100 | n = 75 |
| Man | 28 (16%) | 18 (18%) | 10 (13.3%) |
| Woman | 142 (81.1%) | 80 (80%) | 62 (82.7%) |
| Non-binary/third gender | 2 (1.1%) | 0 (0%) | 2 (2.7%) |
| Prefer not to say | 3 (1.7%) | 2 (2.0%) | 1 (1.3%) |
| Race and Ethnicity n * | | | |
| White | 132 | 69 | 63 |
| Black or African American | 12 | 8 | 4 |
| American Indian/Alaskan Native | 0 | 0 | 0 |
| Asian | 22 | 14 | 8 |
| Native Hawaiian/Pacific Islander | 0 | 0 | 0 |
| Hispanic/Latino/Spanish | 11 | 8 | 3 |
| Arabic/Middle Eastern | 1 | 1 | 0 |
| Prefer not to say | 6 | 4 | 2 |
| Other | 1 | 1 | 0 |
| Annual Family Income Growing Up n (%) | N = 175 | n = 98 | n = 77 |
| Less than $50,000 | 14 (8.0%) | 9 (9.2%) | 5 (6.5%) |
| $50,000–$99,999 | 48 (27.4%) | 18 (18.4%) | 20 (26.0%) |
| $100,000–$149,999 | 39 (22.3%) | 26 (26.5%) | 13 (16.9%) |
| Greater than $150,000 | 35 (20.0%) | 17 (17.3%) | 18 (23.4%) |
| Prefer not to answer | 12 (7.4%) | 6 (6.1%) | 7 (9.1%) |
| I am unaware | 26 (14.9%) | 12 (12.2%) | 14 (18.2%) |

| Question Domain * | All N = 192 | Users n = 109 | Non-Users n = 83 | Chi-Square p-Value |
|---|---|---|---|---|
| Accomplishes tasks (%) | | | | |
| Agree/Strongly Agree | 69.3% | 73.4% | 63.8% | 0.156 |
| Disagree/Strongly Disagree | 30.7% | 26.7% | 36.1% | |
| Improves performance (%) | | | | |
| Agree/Strongly Agree | 51.0% | 66.1% | 31.3% | <0.001 |
| Disagree/Strongly Disagree | 49.0% | 34.0% | 68.7% | |
| Increases productivity (%) | | | | |
| Agree/Strongly Agree | 55.7% | 63.3% | 45.8% | 0.015 |
| Disagree/Strongly Disagree | 44.3% | 36.7% | 54.2% | |
| Enhances effectiveness (%) | | | | |
| Agree/Strongly Agree | 52.6% | 66.9% | 33.7% | <0.001 |
| Disagree/Strongly Disagree | 47.4% | 33.1% | 66.3% | |
| Makes school easier (%) | | | | |
| Agree/Strongly Agree | 67.2% | 67.0% | 67.5% | 0.942 |
| Disagree/Strongly Disagree | 32.8% | 33.0% | 32.5% | |
| Is useful (%) | | | | |
| Agree/Strongly Agree | 76.6% | 91.7% | 56.6% | <0.001 |
| Disagree/Strongly Disagree | 23.4% | 8.2% | 43.3% | |
| Helps my ability to learn (%) | | | | |
| Agree/Strongly Agree | 59.9% | 76.1% | 38.5% | <0.001 |
| Disagree/Strongly Disagree | 40.1% | 23.8% | 61.5% | |
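
The chi-square p-values in the table above can be approximately reproduced from the reported percentages. The following is a minimal sketch under stated assumptions, not the authors' analysis code: cell counts are reconstructed by rounding each percentage times its group size (an approximation), the test is assumed to be an uncorrected Pearson chi-square on a 2×2 table, and the function names `counts_from_percent` and `chi_square_2x2` are hypothetical.

```python
import math


def counts_from_percent(pct_agree, n):
    """Reconstruct (agree, disagree) counts from a reported percentage.

    This is an approximation: the published table gives percentages only.
    """
    agree = round(pct_agree / 100 * n)
    return agree, n - agree


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, the upper-tail probability of the
    # chi-square distribution equals erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# "Makes school easier": 67.0% of 109 users and 67.5% of 83 non-users agreed.
users = counts_from_percent(67.0, 109)      # assumed counts: (73, 36)
non_users = counts_from_percent(67.5, 83)   # assumed counts: (56, 27)

chi2, p = chi_square_2x2(*users, *non_users)
print(f"chi2 = {chi2:.4f}, p = {p:.3f}")
```

Under these assumptions the computed p-value rounds to 0.942, which agrees with the value reported for the "Makes school easier" row. Rows whose percentages do not sum to exactly 100% may reconstruct less cleanly.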

| Question Domain * | All N = 181 | Users n = 103 | Non-Users n = 78 | Chi-Square p-Value |
|---|---|---|---|---|
| Learning to use is easy (%) | | | | |
| Agree/Strongly Agree | 87.8% | 92.3% | 82.0% | 0.038 |
| Disagree/Strongly Disagree | 12.2% | 7.8% | 18.0% | |
| Easy to get it to do intention (%) | | | | |
| Agree/Strongly Agree | 70.7% | 81.5% | 56.4% | <0.001 |
| Disagree/Strongly Disagree | 29.3% | 18.4% | 43.6% | |
| Interaction is clear and understandable (%) | | | | |
| Agree/Strongly Agree | 79.0% | 88.4% | 66.7% | <0.001 |
| Disagree/Strongly Disagree | 21.0% | 11.7% | 33.3% | |
| Flexible to interact with (%) a | | | | |
| Agree/Strongly Agree | 77.8% | 84.4% | 69.3% | 0.016 |
| Disagree/Strongly Disagree | 22.2% | 15.7% | 30.8% | |
| Easy to become skillful in GenAI (%) | | | | |
| Agree/Strongly Agree | 77.3% | 79.6% | 74.4% | 0.403 |
| Disagree/Strongly Disagree | 22.7% | 20.4% | 25.6% | |
| Easy to use (%) b | | | | |
| Agree/Strongly Agree | 88.3% | 96.0% | 78.2% | <0.001 |
| Disagree/Strongly Disagree | 11.7% | 4.0% | 21.8% | |

| Question Domain * | All N = 180 | Users n = 102 | Non-Users n = 78 | Chi-Square p-Value |
|---|---|---|---|---|
| Peers I respect use GenAI for school (%) a | | | | |
| Agree/Strongly Agree | 74.9% | 88.1% | 57.7% | <0.001 |
| Disagree/Strongly Disagree | 25.1% | 11.9% | 42.3% | |
| Faculty support use of GenAI for school (%) | | | | |
| All | 1.7% | 2.0% | 1.3% | 0.083 |
| Most | 7.2% | 11.8% | 1.3% | |
| Some | 53.3% | 52.9% | 53.8% | |
| None | 3.9% | 3.9% | 3.8% | |
| Unsure | 33.9% | 29.4% | 39.7% | |
| I have the resources I need to use GenAI for school (%) | | | | |
| Agree/Strongly Agree | 74.4% | 82.4% | 64.1% | 0.005 |
| Disagree/Strongly Disagree | 25.6% | 17.6% | 35.9% | |
| GenAI provides accurate information (%) a,b | | | | |
| All of the time | 1.1% | 1.0% | 1.3% | 0.847 |
| Most/Some/None of the time | 98.9% | 99.0% | 98.7% | |
| I am responsible for the accuracy of GenAI (%) a,b | | | | |
| Fully responsible | 74.7% | 69.3% | 81.8% | 0.057 |
| Share responsibility/Unsure | 25.3% | 30.7% | 18.2% | |
| I know how to indicate the use of GenAI (%) a,b | | | | |
| Yes | 41.0% | 50.5% | 28.6% | 0.003 |
| No | 59.0% | 49.5% | 71.4% | |
| I indicate when I use GenAI (%) c | | | | |
| All of the time | 41.7% | 24.0% | 65.3% | <0.001 |
| Most/Some/None of the time | 58.3% | 76.0% | 34.7% | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dekerlegand, R.; Bell, A.; Clancy, M.J.; Pletcher, E.R.; Pollen, T. Generative Artificial Intelligence in Education: Insights from Rehabilitation Sciences Students. Educ. Sci. 2025, 15, 380. https://doi.org/10.3390/educsci15030380