1. Introduction

Computational thinking (CT) is increasingly recognized as a vital skill set within STEM (Science, Technology, Engineering, and Mathematics) education, providing students with the cognitive tools necessary for problem-solving in a technology-driven world. In Engineering and computing education, CT is integral to understanding complex systems, formulating algorithms, and addressing real-world challenges systematically. Despite its growing importance, measuring and assessing CT skills remains complex and often subjective, particularly when examining students’ self-perceptions versus objectively measured abilities in STEM (

Liu et al., 2023;

Muñoz et al., 2023;

Syafe’i et al., 2023). It is believed that, regardless of their chosen field, students must equip themselves with essential problem-solving skills for the future, such as abstraction, decomposition, and algorithmic thinking.

Undoubtedly, CT is a recent problem-solving skill that offers a multifaceted approach, integrating mathematical, Engineering, and scientific thinking. As a vital foundation for fostering innovative problem-solving and creative thinking abilities, CT empowers individuals to break down complex problems, recognize patterns, and devise systematic solutions. By developing these competencies, students enhance their ability to address challenges within computing and become better prepared to navigate the evolving technological landscape across various fields (

Hunsaker, 2020;

Liang et al., 2013;

Yeni et al., 2024). The term “computational thinking” was introduced by (

Wing, 2008) to highlight that thinking like a computer scientist can benefit everyone, not just Computer Science majors. It is defined as “the thought processes involved in formulating problems and designing their solutions so that these solutions can be effectively executed by an information processing agent including computers, robots, and humans” (

Wing, 2009).

Currently, CT is an emerging discipline that emphasizes structured and algorithmic approaches to problem-solving. It involves breaking down complex problems into smaller, more manageable components, identifying patterns and relationships, and developing abstract models to address and solve these problems computationally. Recognized as a key multidisciplinary skill of the digital age (

Czerkawski & Lyman, 2015), CT has gained prominence as technology increasingly influences all aspects of life, driving the need for professionals capable of tackling complex challenges using computational methods. Researchers have explored CT as a framework of concepts and thought processes that enable problem formulation and solution development across various disciplines. It is often described as a cognitive strategy akin to thinking like a computer scientist, even for those outside the field (

Riley & Hunt, 2014). Moreover, CT is closely linked to competencies such as problem-solving, system modeling, and problem formulation, highlighting its relevance in fostering critical and analytical thinking across diverse domains (

Denning & Tedre, 2019;

A. Sullivan & Bers, 2019;

Wing, 2008).

In education, CT serves as a tool to enhance critical thinking and technical proficiency. Applying structured problem-solving processes empowers students to create computational artifacts like computer programs and system designs. Educators and policymakers increasingly prioritize the integration of CT into curricula to prepare students for the demands of a rapidly evolving technological landscape (

Agbo et al., 2019;

Tekdal, 2021). Moreover, CT nurtures essential skills such as systematic problem-solving, data analysis, and creative thinking, capabilities that are highly valued in programming, mathematics, and other fields (

Angevine et al., 2017;

Fields et al., 2021;

Tang et al., 2020). These connections underscore CT’s critical role in equipping students with the competencies to excel in a technology-driven world. In addition, integrating CT into education systems is essential for equipping students with future-ready skills (

Grover & Pea, 2013). As a result, CT has been incorporated into the Next Generation Science Standards (NGSS) in the United States and embedded within STEM curricula at the K-12 level (

Tang et al., 2020). Although many countries, including Finland, Norway, South Korea, Israel, Poland, New Zealand, Portugal, and Estonia, have integrated CT into their educational programs (

Junpho et al., 2022;

Karalar & Alpaslan, 2021;

Machuqueiro & Piedade, 2024;

Tikva & Tambouris, 2021), there are only a few studies that target CT analysis among students in Latin America, and most existing research focuses on primary education (

Castro et al., 2021;

Paucar-Curasma et al., 2022;

Ríos Félix et al., 2020). This gap calls for further work to establish a solid foundation for studying CT in higher education in Mexico.

Recent studies on CT skill assessment often use either self-assessment tools or objective tests using gamification across primary, college and vocational levels (

Chen et al., 2023;

Hermans et al., 2024;

National Research Council et al., 2011;

Relkin et al., 2020;

Wilensky & Reisman, 2006). A study by (

El-Hamamsy et al., 2023) has introduced the competent Computational Thinking Test (CTT), validated for longitudinal studies among primary students. In contrast, (

Ghosh et al., 2024) developed ACE, a tool assessing higher cognitive levels in visual programming domains. Additionally, (

Zapata-Cáceres et al., 2020) designed and validated a beginner-focused CT test, emphasizing its applicability and content validity. Recent contributions like (

Relkin et al., 2020) and (

Clarke-Midura et al., 2021) have focused on unplugged assessments targeting early childhood and kindergarten education, respectively. Because these instruments mostly target pre-college learners and rely on either self-assessment or objective testing alone, a gap remains in comparing students’ self-perceptions of their CT abilities with their objective performance, particularly in Engineering and computing education at higher levels.

Therefore, this study aims to address the above gaps using a multi-method approach to assess self-perceived and objectively assessed CT skills. The research integrates the Computational Thinking Scale (CTS) adopted from (

Tsai et al., 2021), which measures students’ self-assessments, with the Computational Thinking Test (CTT), an objective measure of their CT competencies. In addition, it analyzes students’ perception of CT competencies in their academic and career lives through qualitative assessment. Through this triple assessment, this study explores the alignment between self-perception and actual performance while examining how demographic factors such as program of study, gender, and prior experience influence students’ CT abilities. Through this method, the research addresses a significant gap in assessing CT skills by adopting a multi-dimensional assessment approach, integrating the CTT, the CTS, and qualitative insights in Latin America.

This study provides a critical foundation for understanding CT in Latin America, which future intervention-based research can build upon to create targeted educational strategies. Overall, this study addresses three key research questions: How aligned are students’ self-perceived CT skills (via CTS) with their objectively assessed CT skills (via CTT)? How do demographic factors (e.g., program of study, gender, and age) influence these skills? Lastly, how do students perceive the relevance of CT skills to their academic experiences and future careers? These questions aim to uncover critical insights into the relationship between perception and performance, demographic influences, and the practical value of CT in STEM education.

Contributions

This study contributes in multiple ways. These contributions provide valuable insights for STEM educators and curriculum developers and pave the way for a more effective integration of CT skills into Engineering and computing education.

Design and Validation of a CT Assessment Instrument: An instrument was designed and validated to assess undergraduate students’ CT skills and perceptions, specifically within Engineering and computing education contexts. Unlike prior CT-focused tools that primarily target pre-college education, this instrument addresses college-level students’ unique requirements and skills in STEM programs.

Cross-Verification Methodology: This study presents valuable insights by comparing students’ self-perceived CT skills with their objectively assessed CT test performance and with qualitative insights obtained via open-ended questions.

Exploration of Demographic and Academic Influences: In addition, it examines how factors like program of study, gender, and age influence both self-perceived and objectively assessed CT skills, contributing to an understanding of diversity in CT competencies.

Qualitative Insights: It also incorporates qualitative insights to explore students’ perceptions of CT’s importance and applicability in their academic experiences and future Engineering careers, bridging theoretical knowledge and practical applications.

Future Research Gap: This study lays a foundation for future investigations into the factors influencing CT development and the design of interventions to enhance CT education across diverse populations in STEM.

The rest of this article is organized as follows:

Section 2 presents a detailed theoretical framework of CT and the tools and methods deployed to assess CT in the literature. This is followed by

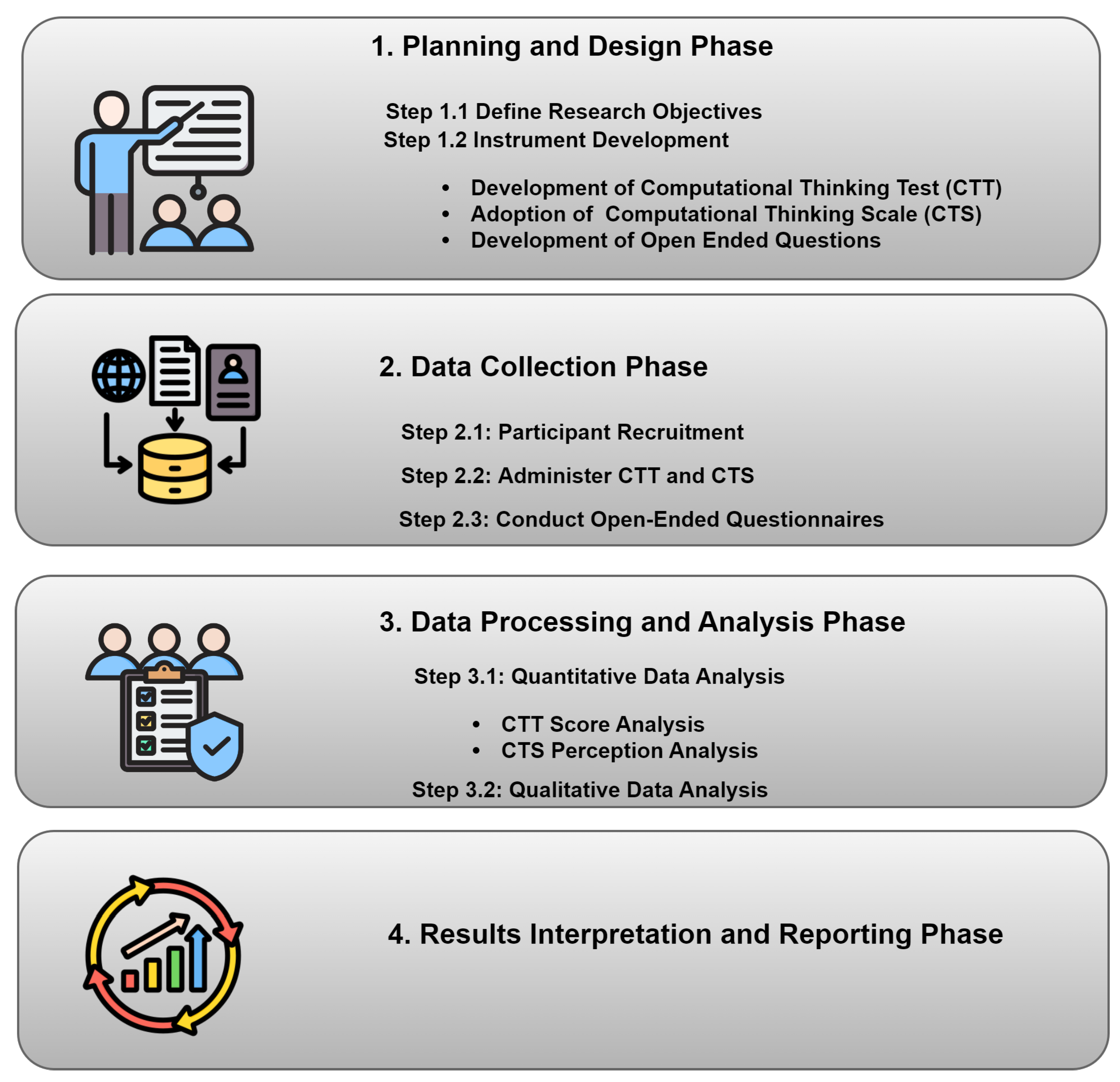

Section 3, which provides an overview of each step executed in this methodology, including the experimental setting, participant details, instrument design, and data analysis. Next,

Section 4 provides a detailed analysis of the results of the CTT, CTS, and qualitative data, and

Section 5 contains a discussion of the work. Further,

Section 7 presents this work’s limitations and future development; and lastly, the conclusion is given in

Section 8.

4. Results

The results section presents a detailed data analysis, highlighting key findings across all study components of the deployed instrument. Performance on the CTT and comparison by demographic factors such as age, gender, program, and institution are presented in

Section 4.1. The CTS results following reliability testing, factor analysis, and quantitative comparisons based on program and gender are discussed in detail in

Section 4.2. Additionally, the results obtained via thematic analysis of qualitative data are discussed in

Section 4.3.

4.1. Quantitative Analysis of CTT

To evaluate the internal consistency of the CTT across key factors such as pattern recognition, decomposition, abstraction, and algorithmic thinking, we used Cronbach’s alpha, a widely recognized measure for assessing the reliability of scales and questionnaires in related studies (

Field, 2013;

Piedade & Dorotea, 2023). A higher Cronbach’s alpha, typically above 0.7, indicates strong internal consistency (

Buyukozturk, 2002;

Field, 2013;

Korkmaz & Bai, 2019). In this study, we conducted both descriptive and reliability analyses to assess students’ performance and the instrument’s reliability.
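As a minimal sketch of how Cronbach’s alpha is obtained from item-level scores, the standard-library Python function below applies the usual formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The function name `cronbach_alpha` and the small response matrix are hypothetical and serve only as an illustration; they are not the study’s data or analysis code.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Return Cronbach's alpha for a respondents-by-items score matrix.

    scores: list of rows, one per respondent, each a list of item scores.
    """
    k = len(scores[0])                      # number of items
    items = list(zip(*scores))              # transpose to per-item columns
    item_variances = [variance(col) for col in items]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical binary (correct/incorrect) responses: 5 students x 4 items.
example_scores = [
    [1, 1, 1, 0],
    [1, 0, 1, 0],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 0, 1, 0],
]

alpha = cronbach_alpha(example_scores)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Under the 0.7 guideline cited above, a value returned by such a function would be read as acceptable when it reaches or exceeds that threshold.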

In addition, Item Response Theory (IRT) was employed to evaluate the CTT items using Python-based tools. A two-parameter logistic (2PL) model was fitted to estimate item parameters, including difficulty and discrimination, using the pyirt library in Python. The discrimination coefficients ranged from 0.85 to 1.10, with an average close to 1.0, indicating that the items effectively differentiate between individuals with varying levels of computational thinking ability. Difficulty levels spanned from −2.5 to 2.3, ensuring that the test captures a broad spectrum of abilities. Model fit was assessed using metrics such as RMSEA (0.04), CFI (0.96), and SRMR (0.03), all of which indicated a good fit.
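To make the roles of the two item parameters concrete, the sketch below evaluates the standard 2PL item response function, P(correct | θ) = 1 / (1 + exp(−a(θ − b))), where a is discrimination and b is difficulty. It is plain Python written for illustration and does not use or reproduce the pyirt API; the function name `prob_correct_2pl` and the two example items are hypothetical, with parameter values chosen only to fall at the ends of the ranges reported above.

```python
import math


def prob_correct_2pl(theta, discrimination, difficulty):
    """2PL item response function: P(correct | theta) for one item."""
    return 1.0 / (1.0 + math.exp(-discrimination * (theta - difficulty)))


# Illustrative items at the ends of the reported parameter ranges
# (discrimination 0.85 to 1.10, difficulty -2.5 to 2.3); the specific
# pairings are hypothetical, not estimated items from the study.
items = {
    "easy item": (0.85, -2.5),
    "hard item": (1.10, 2.3),
}

for label, (a, b) in items.items():
    for theta in (-2.0, 0.0, 2.0):
        p = prob_correct_2pl(theta, a, b)
        print(f"{label}: theta={theta:+.1f} -> P(correct)={p:.2f}")
```

When ability equals difficulty, the probability of a correct response is 0.5; a larger discrimination makes the curve steeper around that point, which is why values near 1.0 are read as items that separate ability levels reasonably well.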

First, an overall quantitative analysis of the CTT was performed to check its validity and reliability and to examine students’ overall scores. A group-wise performance analysis was then conducted, and its results are presented in

Table 5. The overall analysis results revealed varied performance and reliability across different factors. Students excelled in pattern recognition (

, Cronbach’s

), demonstrating robust and consistent abilities in analyzing and resolving computational problems. In contrast, performance on decomposition remained moderate (

, Cronbach’s

), suggesting challenges in breaking down complex problems into simpler components. For abstraction (

, Cronbach’s

) and algorithmic thinking (

, Cronbach’s

), students demonstrated fair performance, though abstraction exhibited higher variability, reflecting inconsistency in identifying patterns or relevant details. The overall instrument (

, Cronbach’s

) showed good reliability, confirming its effectiveness in measuring CT constructs.

Table 5 presents comparisons of mean scores and standard deviations for CT competencies across various demographic categories, focusing on abstraction, algorithmic thinking, decomposition, and pattern recognition. Analysis reveals that participants under 20 exhibit lower overall performance, although they demonstrate notable strengths in pattern recognition. In contrast, participants aged 20 and above show superior proficiency across all assessed competencies. Gender differences indicate that boys outperform girls in all areas; however, the observed differences are relatively minor. Institutional analysis suggests that students from public institutions achieve higher scores than those from private institutions, particularly in algorithmic thinking and pattern recognition. Furthermore, an examination of academic programs reveals that Computer Science students excel in abstraction and pattern recognition, while Engineering students tend to score lower across all competencies assessed.

4.2. Quantitative Analysis of CTS

This section analyzes students’ CTS data to assess their perceived CT skills across demographic factors, including gender, age, and academic program. Differences between groups are examined using means, standard deviations, t-tests, and effect sizes (Cohen’s d). This study adopted the CT scale from (

Tsai et al., 2021) to measure and assess CT skills. The scale can be adapted to assess domain-specific CT skills in STEM fields by adjusting its terminology, and it is suitable for secondary and higher-level participants. It has five factors: abstraction (four items), decomposition (three items), algorithmic thinking (four items), evaluation (four items), and generalization (four items).

Multiple studies on CT analysis report a t-value and a p-value. The t-value expresses the difference between two group means in units of its standard error, and the p-value is the probability of observing a difference at least that large if the groups did not actually differ. Both come from a t-test, which compares the means of two groups to determine if they are statistically different (

De la Hoz Serrano et al., 2024;

Hsu et al., 2022). In addition, Cohen’s d is a measure of effect size that expresses the difference between two group means in standard deviation units (

Karalar & Alpaslan, 2021). It indicates the practical significance of the difference, that is, how large the effect is, in a way that is largely independent of sample size.
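The two quantities described above can be sketched with the Python standard library as follows. The sketch assumes the equal-variance (Student) form of the independent-samples t-test with a pooled standard deviation; the source does not state which variant was used. The function names and the two small score lists are hypothetical. Turning the t-value into a p-value additionally requires the t distribution, for which a statistics package such as SciPy (`scipy.stats.ttest_ind`) would typically be used.

```python
from math import sqrt
from statistics import mean, variance


def pooled_sd(x, y):
    """Pooled standard deviation of two independent samples."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return sqrt(pooled_var)


def cohens_d(x, y):
    """Effect size: mean difference in pooled standard deviation units."""
    return (mean(x) - mean(y)) / pooled_sd(x, y)


def student_t(x, y):
    """Equal-variance t statistic: mean difference over its standard error."""
    nx, ny = len(x), len(y)
    standard_error = pooled_sd(x, y) * sqrt(1 / nx + 1 / ny)
    return (mean(x) - mean(y)) / standard_error


# Hypothetical factor scores for two groups (not data from the study).
group_a = [4, 5, 6]
group_b = [1, 2, 3]

print(f"t = {student_t(group_a, group_b):.2f}")
print(f"d = {cohens_d(group_a, group_b):.2f}")
```

The t statistic grows with sample size for a fixed mean difference, whereas Cohen’s d does not, which is why the two are reported together.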

4.2.1. Reliability and Validity of the Adopted CTS

A Confirmatory Factor Analysis (CFA) was conducted following established guidelines using the SmartPLS 3 software. CFA is a statistical technique employed to test the construct validity of a scale and assess how well the data fit the hypothesized model. This approach is appropriate for verifying predefined factor structures and ensuring alignment between the theoretical framework and the observed data. Several parameters were used to assess the reliability and validity of the CTS. Cronbach’s alpha was calculated to evaluate internal reliability, reflecting the interrelatedness of test items (

Hair et al., 2021;

Rosli & Saleh, 2023). Composite Reliability (CR) and Average Variance Extracted (AVE) were also computed for each factor, with factor loadings ≥0.7, AVE > 0.5, and both Cronbach’s alpha and CR ≥ 0.7 deemed acceptable thresholds (

Hair et al., 2017,

2011). The results of CFA presented in

Table 6 collectively confirm the reliability and validity of the CTS.
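For readers unfamiliar with these indices, the sketch below shows how AVE and CR are commonly computed from a factor’s standardized loadings: AVE is the mean of the squared loadings, and CR is (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The function names and the loading values are hypothetical and are not taken from Table 6; SmartPLS reports these indices directly, so this is only an illustration of the arithmetic.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one factor."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR from standardized loadings, with error variance 1 - loading^2."""
    sum_loadings_sq = sum(loadings) ** 2
    error_variance = sum(1 - l * l for l in loadings)
    return sum_loadings_sq / (sum_loadings_sq + error_variance)


# Hypothetical standardized loadings for a four-item factor.
example_loadings = [0.8, 0.8, 0.8, 0.8]

ave = average_variance_extracted(example_loadings)
cr = composite_reliability(example_loadings)

# Thresholds quoted in the text: AVE > 0.5 and CR >= 0.7.
print(f"AVE = {ave:.2f}, acceptable: {ave > 0.5}")
print(f"CR  = {cr:.2f}, acceptable: {cr >= 0.7}")
```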

The results provided valuable insights into the adequacy of the CTS factor structure and its suitability for measuring CT skills. The CFA indicated that the chi-squared value was

, with a chi-squared/df ratio of

, which is within the acceptable range (typically below 3.00), suggesting a reasonable model fit (

Schumacker & Lomax, 2016). Additionally, the Standardized Root Mean Square Residual (SRMR) was 0.076, which is below the 0.08 cutoff commonly taken to indicate a good fit (

Byrne, 2013). The Normed Fit Index (NFI) was 0.935, which exceeds the commonly accepted threshold of 0.90, further supporting the model’s goodness of fit (

Hu & Bentler, 1999). These results suggest that the CTS factor structure is robust for assessing CT skills.

4.2.2. Gender-Wise Comparison of CT Skills

Table 7 compares perceived CT skills by gender across all skill dimensions. Perceived CT skills were broadly similar across genders. Both boys and girls scored comparably in abstraction (

p = 0.37) and other factors, with no statistically significant differences observed. A minor difference in decomposition approached significance but was inconclusive. Girls scored slightly higher than boys in abstraction and decomposition, whereas boys scored slightly higher in generalization. Since the p-values are greater than the conventional significance threshold of 0.05, the t-values indicate that there is no statistically significant difference between boys’ and girls’ perceived CT skills for any of the factors (abstraction, decomposition, algorithmic thinking, generalization, and evaluation).

4.2.3. Age-Wise Comparison of Students’ Perceived CT Skills

Table 8 analyzes CT skills across two age groups: those under 20 and those 20 and above. The analysis showed minimal differences in perceived CT skills between age groups, with no significant differences in most factors. However, older students (20 and above) scored significantly higher in decomposition (

= 0.92,

= 0.87,

t-value = 2.54 and

p = 0.01), suggesting that they perceive themselves as more capable in that skill, potentially due to increased experience. Other factors, such as abstraction and algorithmic thinking, showed no significant age-related differences. Overall, age did not substantially affect perceived CT skills, with the only notable variation between the two age groups found in decomposition.

4.2.4. Program-Wise Comparison of Students’ Perceived CT Skills

The analysis in

Table 9 reveals differences in CT skills between Computer Science and Engineering students. Computer Science students demonstrated better skills in abstraction, with a higher mean score than Engineering students. Significant differences were found between Computer Science and Engineering students in abstraction (

p = 0.02) and decomposition (

p = 0.001). Other CT factors, such as algorithmic thinking and generalization, showed no significant variation by program according to the t-values in the table. Although both groups performed similarly in algorithmic thinking, generalization, and evaluation, Computer Science students had slightly higher scores in these areas as well. These findings suggest that Computer Science students have a stronger foundation in specific CT sub-skills, particularly abstraction and decomposition, than their Engineering peers.

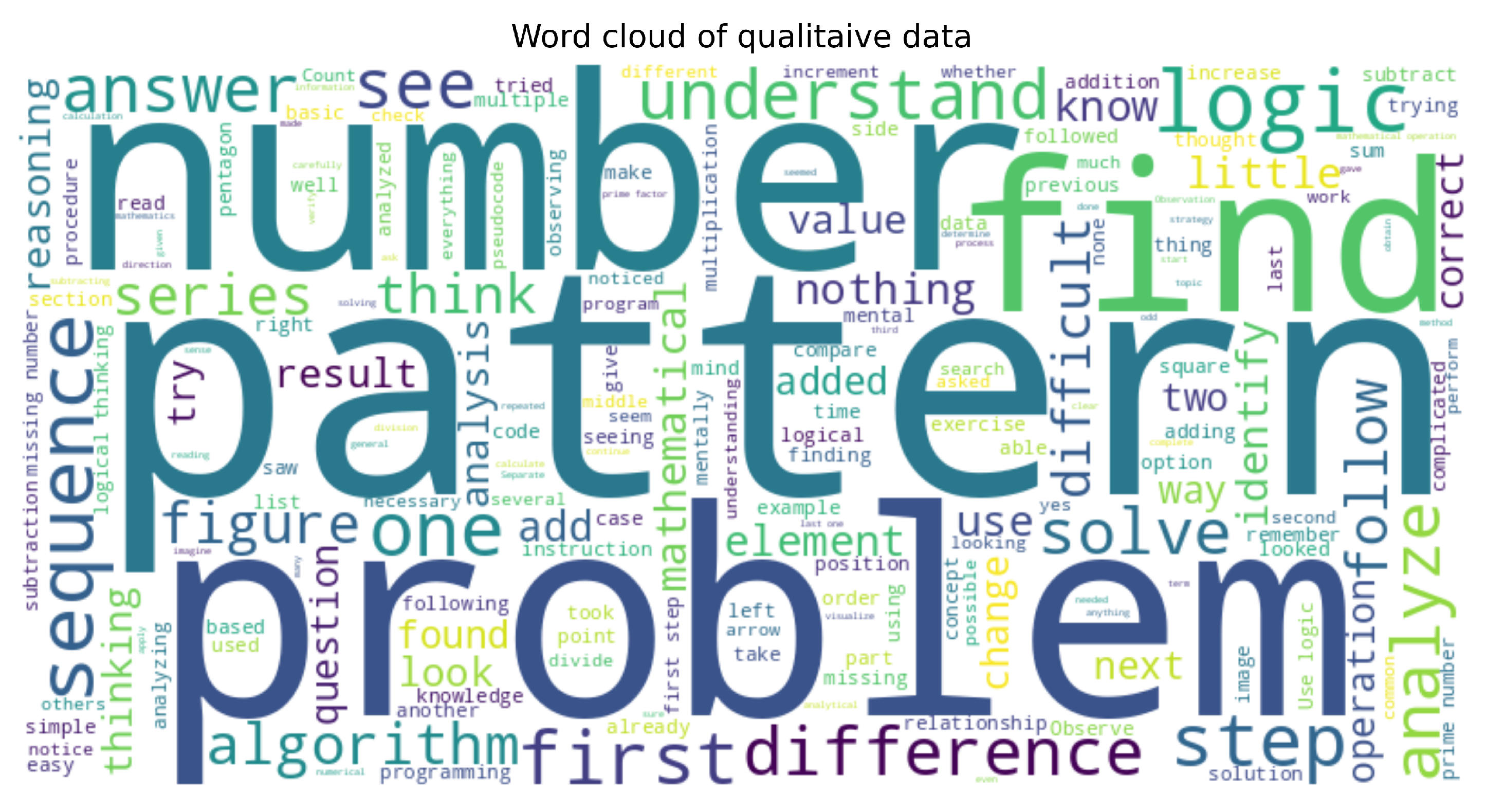

4.3. Qualitative Analysis

The qualitative data consist of students’ opinions, with 196 student records analyzed using the content analysis method (

Moretti et al., 2011;

Yilmaz & Yilmaz, 2023). This approach facilitated the identification of significant themes, patterns, and insights within the data, allowing for a thorough investigation and understanding of the students’ experiences and viewpoints. Four researchers were involved in this analysis to extract sub-themes from the data, focusing on the strategies students used to solve computational problems and the cognitive processes involved in applying CT skills to the given problems. The analysis uncovered a spectrum of insights reflecting both positive and negative aspects. A word cloud generated from students’ opinions about CT competencies is shown in

Figure 2.

Identifying key themes and sub-themes in students’ responses revealed deeper insights into their experiences with CT. From the analysis, multiple prominent themes emerged, such as strategies for problem-solving, challenges in applying CT skills, and the perceived value of CT in real-life and academic contexts. For example, under problem-solving strategies, several students described using decomposition (“I broke the problem into smaller parts to manage it better”) and visualization techniques (“I imagined the structure in my mind before coding”). However, challenges such as difficulty with algorithmic thinking and terminology gaps were also highlighted, with one student noting, “I often struggle to understand the steps required for effective algorithms.” Beyond these challenges, students emphasized the relevance of CT skills in their academic and career pursuits, with comments like, “These skills are essential for creating efficient algorithms and solving real-world problems.” This deeper exploration not only enriches the understanding of CT competencies but also uncovers areas where targeted educational interventions could improve student outcomes.

The students employed different strategies to tackle pattern recognition problems using CT techniques. Some explicitly used logical and systematic reasoning, while others took a decomposition approach, breaking down a problem into smaller tasks. A few students were uncertain about the terminology for the strategy they applied, indicating a potential gap in understanding CT competencies. Notably, one student highlighted using mental visualization to improve problem-solving. The top 20 perceptions were selected based on the key themes and sub-themes that students reported as applications of CT, reflecting their opinions about the importance and usefulness of CT skills in their academic careers, as detailed in

Table 10. The perceptions also align with existing studies in the literature (

Angevine et al., 2017;

Lye & Koh, 2014;

Yu et al., 2019).

According to

Table 10, students often reported that CT skills enhance their problem-solving abilities by improving their capacity to recognize structures, analyze them, and make coherent decisions. They also mentioned the practical applicability of these competencies in various real-life scenarios and their importance in developing algorithms. Additionally, students highlighted that increased CT competencies could lead them to more efficient and effective solutions in academic and real-life contexts.

In contrast, some participants reported difficulty in identifying patterns, and many found the task time-consuming. Some faced challenges in applying decomposition to break problems down into manageable parts and in applying algorithmic thinking steps to solve them. Some students admitted to struggling to simplify their thought processes, resulting in more complicated solutions.

Apart from this, some participants reported a lack of confidence in their CT skills and a need for more mathematical and computational knowledge to solve algorithmic thinking problems. Many participants admitted limited familiarity with CT and a lack of foundational knowledge of how to apply CT concepts to academic tasks, which led to difficulties in using CT competencies correctly. Nevertheless, participants recognized the relevance of CT skills in everyday life for addressing diverse challenges and improving outcomes through enhanced visualization. They also noted the potential of CT skills to enhance Engineering proficiency in solving complex problems. These findings highlight the importance of comprehensive support and education in CT competencies to develop practical problem-solving skills among students. Addressing pattern recognition and algorithmic thinking challenges requires tailored interventions to improve students’ cognitive abilities and computational knowledge. By bridging gaps in understanding and providing adequate training, educators can empower students to navigate complex problem-solving tasks confidently and proficiently.

5. Discussion

The results from this study offer valuable insights into students’ CT abilities across different demographic categories.

Table 11 summarizes the key findings of each component (the CTT, the CTS, and the qualitative part) and triangulates them. In the overall CTT scores, students demonstrated relatively strong skills in pattern recognition but struggled more with tasks requiring the decomposition of complex problems, with mean (

,

) values, respectively. Performance variations on the CTT across groups defined by age, gender, institution, and academic program are also noteworthy. Older students tended to perform better in abstraction and algorithmic thinking, possibly due to increased experience and maturity.

Studies addressing students’ CT skills have identified various factors influencing these skills, with gender, program, and age among the most commonly examined variables. The effects of gender on CT skills, in particular, remain a topic of debate. Some studies have reported no significant difference in the CT skills, while others suggest slight differences based on gender (

Gülbahar et al., 2019). For instance, in (

Sirakaya, 2020), the authors examined gender differences in CT skills with a sample of 722 Turkish secondary school students. The

t-test results indicated no significant difference in the mean CT skills scores between girls and boys (t(719) = −0.98,

p = 0.33). In contrast, other work reported a significant difference in favor of female students (t(43748) = 7.42,

p < 0.01) (

Gülbahar et al., 2019).

Gender is a prominent variable in CT studies, necessitating a comprehensive discussion to understand its implications fully. Numerous studies have identified gender-based differences in CT performance, frequently attributing these disparities to social, cultural, and educational influences (

Atmatzidou & Demetriadis, 2016;

De la Hoz Serrano et al., 2024;

Grover, 2017). For example,

Cheryan et al. (

2017) highlight that the prevalent stereotype associating computing with male-dominated environments can dissuade girls from engaging in these fields, potentially leading to lower performance in CT-related tasks. Similarly,

Denner et al. (

2012) observed that while boys tend to excel in technical CT tasks, girls often show stronger capabilities in collaboration and problem decomposition. This suggests that gender differences in CT are multifaceted and context-dependent.

However, contrasting these findings,

Espino and González (

2016) argue that both genders possess equal potential to develop CT skills and acquire related information-processing abilities. Parallel to this, another study (

Espino & González, 2016) found that gender differences in CT assessment were minimal. Although boys slightly outperformed girls in abstraction and decomposition tasks, girls demonstrated a marginally higher proficiency in algorithmic thinking. These results indicate that while gender may influence specific aspects of CT, the overall effect is relatively minor. Moreover, the findings support the notion that with appropriate instructional strategies, both boys and girls can achieve comparable levels of proficiency in CT. When compared to similar studies (

Mindetbay et al., 2019;

Tsai et al., 2021;

Wu & Su, 2021), the present study corroborates the trend that gender differences in CT are present but not substantial.

In the current study, boys outperformed girls on the CTT across various skill areas, including algorithmic thinking and pattern recognition, although the gaps in actual performance were not substantial. In contrast, the CTS shows no significant gender differences in perceived CT skills. Both boys and girls report similar levels of competence across all skill dimensions, with girls scoring slightly higher in abstraction and decomposition and boys scoring slightly higher in generalization. These differences were not statistically significant, indicating that gender does not play an essential role in how students perceive their computational thinking abilities.

Similarly, the institutional analysis of overall CTT scores indicates that students from public institutions outperform those from private institutions, especially in algorithmic thinking and pattern recognition. This suggests that institutional factors, such as curriculum structure or resources, may shape students’ computational thinking skills. Furthermore, an examination of academic programs shows that Computer Science students outperform Engineering students in specific CT sub-skills, particularly abstraction and pattern recognition. On the CTS, Computer Science students likewise report stronger skills, with significant differences between Computer Science and Engineering students in abstraction (p = 0.02) and decomposition (p = 0.001). They also scored slightly higher in algorithmic thinking, generalization, and evaluation, although these differences were not significant.

These findings suggest that while both groups perform similarly in some CT dimensions, Computer Science students have a stronger foundation in others, particularly abstraction and decomposition, which could be attributed to their program’s focus on these critical aspects of computational thinking. The curriculum and pedagogical approaches in Computer Science may emphasize abstract reasoning and problem-solving techniques more than Engineering programs, which are often more oriented toward applied, hands-on learning. This pattern is consistent with the close connection between CT concepts and the field of Computer Science, as several studies highlight how CT’s core principles map directly to the domain. According to (

Weintrop et al., 2016), CT is integral to Computer Science curricula, where programs are designed to nurture cognitive skills such as abstraction, decomposition, and algorithmic thinking. These skills are vital for tasks like coding and problem-solving. Conversely, Engineering programs prioritize practical applications, such as designing and building systems, where problem-solving often emphasizes tangible, real-world outcomes over abstract reasoning.

Several studies corroborate this distinction, underscoring the alignment between CT concepts and Computer Science principles (

Barr & Stephenson, 2011;

Grover & Pea, 2013). For instance, studies (

Grover, 2017;

Grover & Pea, 2013) stress that CT’s foundational elements, such as abstraction, algorithmic thinking, and decomposition, are deeply embedded in Computer Science education and are typically more integrated into its curriculum than in Engineering programs, which tend to focus on applied problem-solving. Similarly,

Lye and Koh (

2014) highlight that, by concentrating on practical applications and physical systems, Engineering programs may place less emphasis on developing abstract cognitive skills. The work of (

Barr & Stephenson, 2011) further reinforces this perspective, discussing how CT directly maps to the Computer Science discipline, thereby fostering more robust development of these skills among students in Computer Science programs.

Regarding age, the CTT analysis shows that participants under 20 perform lower overall but excel in pattern recognition, whereas those aged 20 and older demonstrate superior competency across all areas, suggesting that age and experience enhance computational thinking (CT). The CTS results reveal minimal differences in perceived CT skills, except that older students scored significantly higher in decomposition ( = 0.92, = 0.87, t = 2.54, p = 0.01). Factors such as abstraction and algorithmic thinking showed no significant differences, indicating that age has a limited impact on perceived CT skills overall. In conclusion, the findings underscore the importance of CT as a foundational skill across disciplines. While certain demographic factors, such as age and academic program, influence CT abilities, there remains significant potential to improve and cultivate these skills, particularly through targeted educational interventions. Future research and curriculum development should continue to address the challenges in decomposition and abstraction while also exploring ways to integrate CT across diverse fields of study.
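As a rough illustration of the kind of two-group comparison behind the age results (for example, the decomposition contrast reported with t = 2.54), the following Python sketch computes Welch’s unequal-variance t statistic and its approximate degrees of freedom. The text does not state which t-test variant was used, so Welch’s form is an assumption here; the function name `welch_t` and the score lists are hypothetical and are not data from this study.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Return (t, df) for Welch's unequal-variance two-sample t-test."""
    na, nb = len(a), len(b)
    va, vb = variance(a), variance(b)  # sample variances (n - 1 denominator)
    se2 = va / na + vb / nb            # squared standard error of the mean difference
    t = (mean(a) - mean(b)) / sqrt(se2)
    # Welch-Satterthwaite approximation to the degrees of freedom
    df = se2 ** 2 / ((va / na) ** 2 / (na - 1) + (vb / nb) ** 2 / (nb - 1))
    return t, df


# Hypothetical self-rated decomposition scores; NOT data from this study.
older = [4.0, 3.5, 4.5, 4.0, 3.0, 4.5]
younger = [3.0, 3.5, 2.5, 4.0, 3.0, 3.5]

t, df = welch_t(older, younger)
print(f"t = {t:.2f}, df = {df:.1f}")
```

A positive t here means the first group has the higher mean. Obtaining a p-value additionally requires the t distribution’s cumulative distribution function, which a statistics package would normally supply.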

7. Limitations and Future Work

This study has several limitations, including a limited sample drawn from only two universities in Mexico, which may affect the generalizability of the findings to broader educational contexts. Additionally, the focus on specific CT sub-competencies (abstraction, decomposition, algorithmic thinking, and pattern recognition), without considering other relevant dimensions such as creativity and critical thinking, narrows the scope of the analysis. The reliance on self-reported data through the CTS introduces potential biases in students’ perceptions of their abilities. Furthermore, this study does not evaluate the long-term impact of CT education on students’ problem-solving skills or career outcomes.

One of the most critical next steps is to design and implement experimental interventions targeting specific CT areas. For instance, focusing on areas where students demonstrate weaknesses, such as algorithmic thinking or debugging, we plan to develop and test teaching interventions incorporating problem-based learning and interactive exercises. These interventions will be rigorously evaluated to assess their effectiveness in improving students’ CT skills and understanding. Future research could also examine how integrating CT activities into classrooms influences students’ learning outcomes.

In addition, future research should expand the sample size and include diverse institutions and disciplines to enhance the representativeness of the findings. It should also incorporate more comprehensive assessments that cover additional CT competencies, integrate objective performance measures alongside self-reports, and explore the long-term effects of CT education. Longitudinal studies tracking students’ CT skill development and its impact on their professional careers, as well as research on the role of emerging technologies like AI-driven learning tools in CT education, will provide valuable insights for refining instructional practices and fostering effective CT education across a wide range of academic fields.

8. Conclusions

This study addresses key questions about CT skills by exploring the alignment of students’ self-perceptions with objective assessments, the influence of demographic factors, and the relevance of CT to academic and career contexts. The findings provide significant insights and emphasize the need for targeted interventions and policy reforms. Firstly, the results highlight a considerable misalignment between students’ self-reported confidence and CT performance, particularly in decomposition and algorithmic thinking. This discrepancy calls for integrating reflective practices and feedback mechanisms to help students develop more accurate self-assessments and better align their perceptions with their abilities.

Secondly, related to the study’s research question, demographic analyses reveal notable variations in CT skills by age, gender, and program. Computer Science students outperformed their Engineering peers in CT competencies, while public institution students performed better than those from private institutions. In addition, gender differences were negligible in some CT competencies but notable in others. These findings underscore the importance of equitable resource allocation, cross-disciplinary curriculum reforms, and inclusive teaching strategies to address these disparities. Lastly, students recognized the value of CT skills in their academic and professional pursuits, though perceptions of relevance varied by discipline. To ensure broader applicability, CT education must be contextualized to meet the diverse needs of students across academic programs.

The findings suggest the need for tailored educational interventions to strengthen CT integration across disciplines. Additionally, discrepancies between students’ self-reported confidence and actual performance indicate a need for strategies to align perceptions with abilities, fostering a more realistic understanding of their skills. In conclusion, this research contributes to the growing body of knowledge on CT education by presenting a validated assessment framework that informs curriculum development and instructional strategies. By addressing the gaps reported in this study, educators and policymakers can better equip students with the critical problem-solving skills necessary to thrive in a technology-driven world.