Abstract

During group activities, instructors expect that students will ask each other questions. Therefore, in this study, we looked at the nature and role of peer-to-peer questions during an in-class activity. During the activity, students worked collaboratively to respond to five prompts about an acid–base neutralization reaction. We examined the questioning behavior in groups and the nature and types of questions asked. We then looked specifically at the content questions, analyzing how they varied by prompt, as well as the level of those content questions using Bloom’s taxonomy. Finally, we looked at the role that the peer-to-peer questions played as the students completed the activity. The results revealed that the students broadly asked each other social questions, process questions, and content questions, with content questions being the most frequently posed. The prompts that required students to make a prediction, sketch a graph, and explain their reasoning elicited most of the content questions asked. Furthermore, most of the peer-to-peer content questions asked across the five prompts ranked at the two lowest levels of Bloom’s taxonomy. Finally, the posed peer-to-peer questions were found to play many roles in the discussion, including initiating and sustaining conversations, seeking consensus, challenging each other, and promoting social metacognition. The implications for instruction and research are discussed.

1. Introduction

() noted that “the evidence that good teaching has taken place is reflected more in the kinds of questions students ask than the abundance of past answers they can produce” (p. 550). Questioning is central to guiding inquiry in science and is one of the science and engineering practices (SEPs) identified in the Next Generation Science Standards (NGSS) (). Questioning plays a vital role in facilitating meaningful student learning experiences.

Questions in an educational setting can originate from students to teachers, teachers to students, students to other students, or even from a text to students. Integrating carefully crafted questions into the classroom can increase student motivation to learn, promote productive discussions, and support knowledge construction (; ). In fact, questions can serve a variety of functions, including confirming expectations, solving problems, and filling gaps in knowledge and understanding (). Student questions can provide feedback to teachers because they can reveal student conceptual understanding, as well as the nature and quality of their ideas (). Student questions can also influence the curriculum, especially when they highlight areas of student interest (; ). Despite the known benefits, research has shown that students seldom ask questions, and if they do, the questions are often low-level and require little to no cognitive processing ().

Much of the extant research on student questions has been conducted in K-12 settings, looking, among other things, at the nature of the questions students asked during inquiry laboratories or during argumentation (e.g., ; ; ; ). At the college level, research around student questions has mostly focused on written questions asked by students after reading a textbook chapter () and students’ written questions based on lecture material (). Unfortunately, there is limited research looking at the peer-to-peer questions that arise spontaneously during a small-group in-class activity. Therefore, this study seeks to add to the existing research by answering the following four research questions:

- What was the nature of questioning behavior within student groups?

- What types of questions did students ask?

- What was the nature of the content questions asked in the student groups?

- What roles or functions did peer-to-peer questions play in completing the collaborative task?

2. Literature Review

Questions are central to meaningful learning, and using questioning techniques in the classroom can have many benefits for students. Research shows that intentionally delivered questions can increase student motivation to learn the content, promote lively and productive discussions, and guide students in their knowledge construction (; ). Questioning is a fundamental aspect of learning, particularly in collaborative contexts. Classroom discussions among students can stimulate questions that encourage relevant problem-solving strategies, thus fostering collaborative knowledge building and productive discourse (). Teachers play a key role in encouraging questions in the classroom (). When teachers intentionally create collaborative learning environments that elicit student questions and promote peer discussions, students are more likely to generate their own questions during discourse ().

In a traditional science classroom, it is usually the instructor who poses the questions for students to answer (). However, research has shown that providing opportunities for students to generate their own questions enables them to focus on the key aspects of the content and determine their level of understanding (). Therefore, a central goal of science education is to help students develop the ability to ask questions (; ; ), since asking questions is fundamental to both science and scientific inquiry. Indeed, the ability to ask good scientific questions is an important aspect of scientific literacy () and plays a central role in scientific discourse, especially through eliciting explanations, evaluating evidence, and clarifying doubts ().

The type of questions posed influences the knowledge produced and, consequently, the depth of understanding (). Student-generated questions are an important aspect of both self- and peer assessment (). Furthermore, intellectually challenging questions lead to student engagement and learning (). Peer-to-peer questions during group collaborative activities help promote productive discussions, which in turn lead to the co-construction of knowledge (). Nevertheless, the ability to generate higher-order questions must be cultivated; it is not an inherent skill for all students. In fact, according to (), students do not naturally ask these types of questions. Thus, it is essential that teachers provide students with collaborative tasks in which they can question one another.

Furthermore, the tasks teachers use to engage their students can shape the questions that students generate. For instance, tasks that ask students to follow specific procedures often lead to factual questions, whereas open-ended tasks can encourage curiosity-driven questions (). This is particularly important because the types of questions students pose can yield different learning outcomes. Explanation-seeking questions foster deeper understanding and are more effective for knowledge construction than fact-based questions (; ). Research shows that students generating factual questions primarily build basic knowledge, while those posing explanation-seeking questions tend to develop more comprehensive explanations of the content (). Therefore, to encourage questions that seek deeper understanding, it is essential that instructors engage their students in open-ended learning tasks.

In addition, peer-to-peer questioning can significantly contribute to social learning dynamics and the overall effectiveness of group interactions. According to (), students who engage in questioning each other create an interactive environment that supports peer learning. () discovered that peer interactions of this nature not only helped students understand complex concepts but also increased the sense of community among the students. This research informs our study by highlighting the benefits of peer-to-peer questioning, specifically the increase in group cohesion and collective problem solving when students work in collaborative settings.

The research has proposed many ways of classifying cognitive questions. Most are based on Bloom’s taxonomy (). Bloom’s taxonomy has six categories: remember, understand, apply, analyze, evaluate, and create (1956). Remembering is the lowest level and requires that students recall or retrieve material already learned, while creating is the highest level. Therefore, questions increasingly demand higher-order thinking as one moves up the categories from remembering towards creating.

3. Theoretical Framework

This study is grounded in the theories of both social constructivism and social metacognition. Under social constructivism, knowledge and understanding of the world are developed by individuals, and meanings are developed in coordination with others. Social constructivists believe that meaningful learning occurs when individuals are engaged in social activities such as interaction and collaboration. Learning is therefore a social process, and meaningful learning occurs when individuals are engaged in a social activity that happens within a community (). The development of ideas in this context is a collaborative process in which students work together and evaluate each other’s ideas. Indeed, constructivist classrooms entail group work, dialogue, and shared norms (). It can be argued that a collaborative environment, in which students work together—such as during problem-solving activities—is essential for building knowledge (). In the current study, students worked collaboratively to complete the assigned in-class activity. As they worked together, they proposed and shared ideas, asked each other questions, and answered their peers’ questions.

Social metacognition, also called socially shared metacognition, involves awareness and control of other learners’ thinking. In a group setting, such as during collaborative learning, students share ideas with their peers, invite others to critique and evaluate their ideas, evaluate their peers’ ideas, and assess, change, and use each other’s strategies (; ). Metacognition at the individual level involves being aware of and controlling one’s own thinking. Individual and social metacognition affect each other: social metacognition aids individual metacognition, and individual metacognition supports social metacognition. Indeed, social metacognition distributes the metacognitive demands among group members, thus making visible each person’s metacognition and improving individual metacognition (). According to Vygotsky, in a social classroom setting, students receive feedback that can help them monitor, evaluate, and adjust their performance (). Therefore, social metacognition allows students to help each other evaluate metacognitive strategies and learn new ones ().

Metacognition involves three skills: planning, monitoring, and evaluating. Planning involves assessing the problem at hand and the resources available, such as time. Monitoring at the individual and group level involves keeping track of one’s own understanding, such as by checking with peers to see if one had the correct idea or monitoring someone else’s statements for correctness. It can also involve a student checking to make sure the group has a shared understanding or a student asking their peers for more information beyond a stated response. Evaluation occurs at the levels of both the self and the group so that a student can assess their own thinking or solution as well as that of the group ().

4. Materials and Methods

4.1. Research Setting

This study was conducted within a General Chemistry (II) course for non-majors at a medium-sized research-intensive university in the midwestern United States. Students enrolled in this course are expected to have previously taken General Chemistry (I). This three-credit course was delivered through three weekly 50 min sessions over the 15-week semester. Instruction in the course consisted of a mixture of direct instruction (lecture), small-group discussions, whole-class discussions, and in-class collaborative activities, such as the one used in this study. Furthermore, clicker questions were used as formative assessments during each class meeting. The clicker questions were usually accompanied by small-group (turn-to-your-neighbor) as well as whole-class discussions. The activity that is the focus of this study, illustrated in Figure 1, was completed after finishing the chapter ‘Aqueous Ionic Equilibrium’, in which we covered the activity’s concepts of neutralization, titrations, and pH curves (pH-metric titrations). Previously in the course, we also covered the concept of electrolytes. The concept of acid–base neutralization was first introduced in the General Chemistry (I) course. In this course, students completed a laboratory activity in which they reacted an acid with a base while using an indicator to monitor and determine the end point of the neutralization reaction.

Figure 1.

Collaborative activity instructions and questions ().

4.2. Data Collection

In this study, we sought to characterize and analyze peer-to-peer questioning among students during an in-class collaborative group activity. At the end of the unit on ‘Aqueous Ionic Equilibrium’, the students were assigned an in-class collaborative activity that addressed acid–base neutralization. The activity described a scenario involving the reaction between an acid and a base. The students were asked to work in self-selected groups of 2–4 to complete the activity shown in Figure 1. They recorded their conversations during the activity and shared the audio files with the instructor. The students had 20 min to complete the activity. There were 183 students who consented to take part in the study, comprising 63 groups.

It is worth noting that in the laboratory course, students completed a similar exercise in which they determined whether a reaction would occur when two solutions were mixed. Part of that process involved writing complete and net ionic equations, which required understanding and applying the solubility rules.

4.3. Data Analysis

In this study, both oral and written data were collected. All audio files of the student conversations were transcribed verbatim, and the transcribed data were subsequently coded and analyzed alongside the written responses. Transcription was followed by fact checking (), which involved listening to the recordings while also reading transcripts to ensure accuracy. Corrections were made where necessary. During transcription, we listened to each student speaking in turn, making sure that we separated each speaker’s vocalizations accurately. Each speaker was assigned a number based on the order in which they spoke (the first speaker was assigned a 1, the second speaker was assigned a 2, etc.). Written worksheets and the transcripts were anonymized by replacing student names with pseudonyms. We ensured that each group’s transcript was separated before analysis began.

Coding of the data started with first identifying all inquiries in the student transcripts that could be classified as peer-to-peer questions. These inquiries were then quantified to obtain a sense of how many total peer-to-peer questions were asked across all the groups. We identified and counted instances when questions were asked within each group’s transcript with 100% inter-rater agreement. Next, the team of three researchers co-developed codes that were informed by patterns emerging from the data as well as previous research. We tallied up occurrences of each coded category and looked for emergent patterns. The analysis focused on the types of questions asked, whether certain prompts elicited more content questions than others, the levels of content questions asked based on Bloom’s taxonomy, and the role that the questions played as students worked on completing the collaborative activity.
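The tallying step described above can be illustrated with a minimal Python sketch. The data, the function names `tally_categories` and `proportions`, and the `(group_id, category)` layout are all hypothetical and chosen for illustration only; they are not the study's data or the authors' actual procedure.

```python
from collections import Counter

# Hypothetical coded questions as (group_id, category) pairs.
# Illustrative values only; these are NOT data from the study.
coded_questions = [
    (1, "content"), (1, "process"), (1, "content"),
    (2, "social"), (2, "content"), (2, "process"),
]


def tally_categories(coded):
    """Count how many questions fall into each coded category."""
    return Counter(category for _, category in coded)


def proportions(counts):
    """Convert raw category counts into proportions of the total."""
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}


counts = tally_categories(coded_questions)
shares = proportions(counts)
```

The same tally could be grouped by prompt or by Bloom's level by swapping the second element of each pair for the relevant code.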

To establish consistency in coding, the three researchers read through the transcripts from four groups and analyzed the student questions for the type of question, the number of content questions asked in each prompt, the level of content questions asked for each prompt (based on Bloom’s taxonomy), and the role that the questions played during student collaborations as they completed the activity. We determined the type of questions asked by examining the objective of the question or the type of information the question sought. We then focused on content questions, first analyzing which prompts elicited more content questions. We expected that tasks students were familiar with, such as balancing an equation, would elicit fewer content questions. We further analyzed the content questions using Bloom’s taxonomy to ascertain the level and proportion of content questions asked for each prompt. Finally, we looked at the role each question played by looking at attributes such as what the question sought from peers or how it contributed to the overall problem-solving process. The average inter-rater agreement for the four transcripts was 94%. Differences were resolved through discussion. Two researchers then divided up and coded the remaining dataset.
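The text does not state how the agreement figure was calculated. One common approach is simple percent agreement: the share of questions to which two coders assigned the same code, averaged across transcripts. The sketch below assumes that approach; the function name `percent_agreement` and the example codes are hypothetical. Simple percent agreement does not correct for chance agreement, unlike statistics such as Cohen's kappa.

```python
def percent_agreement(codes_a, codes_b):
    """Percentage of items to which two coders assigned the same code.

    Assumes both lists are aligned so that position i refers to the same
    question. This is simple percent agreement, which may or may not be
    the statistic used in the study.
    """
    if len(codes_a) != len(codes_b):
        raise ValueError("Both coders must code the same number of items.")
    if not codes_a:
        raise ValueError("At least one coded item is required.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


# Hypothetical codes for four questions from one transcript.
coder_1 = ["content", "process", "social", "content"]
coder_2 = ["content", "process", "content", "content"]

agreement = percent_agreement(coder_1, coder_2)  # 3 of 4 codes match
```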

5. Results

The goals of this study were threefold: to characterize the peer-to-peer questions, to analyze patterns in those questions, and to identify the roles or functions that these questions played during the collaborative activity. A total of 959 questions were asked across all 63 groups, an average of approximately 15 questions per group. The number of questions asked within a group ranged from 2 to 44.
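As a quick check of the reported average, the short sketch below recomputes it from the two totals given in the text. The variable names are illustrative.

```python
# Totals as reported in the text.
total_questions = 959
n_groups = 63

# Mean number of questions per group, rounded to one decimal place.
mean_per_group = round(total_questions / n_groups, 1)
print(mean_per_group)  # 15.2
```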

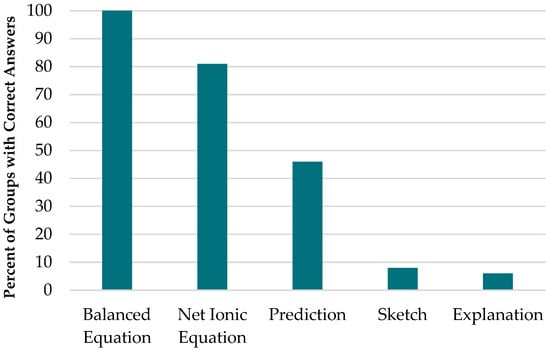

Furthermore, as shown in Figure 2, the analysis of the correctness of their submitted responses indicated that students did relatively better on algorithmic prompts or tasks with which they were familiar than on tasks that required the transfer of knowledge or reasoning, which is what we expected.

Figure 2.

Percentage of groups with correct responses for each of the five prompts.

5.1. What Was the Nature of Questioning Behavior Within Groups?

To answer this question, we looked at patterns in the asking of questions within each group. In particular, we sought to determine whether all group members asked at least one question. Promisingly, in 53 of the 63 groups, every group member asked at least one question. In each of the remaining 10 groups, all but one member asked a question: 4 were two-member groups in which only one member asked questions, 4 were three-member groups in which two members asked questions, and 2 were four-member groups in which three members asked questions.
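The group counts above can be cross-checked with a short calculation. The sketch assumes that all 183 consenting students belonged to the 63 analyzed groups, as the Data Collection section suggests. The student-level figure it derives is an inference from the reported numbers, not a result stated by the authors.

```python
# Figures reported in the text.
total_groups = 63
total_students = 183  # assumption: all of these belong to the 63 groups

# (group size, members who asked a question, number of such groups)
partial_groups = [(2, 1, 4), (3, 2, 4), (4, 3, 2)]

# Groups in which at least one member asked no questions.
groups_partial = sum(n for _, _, n in partial_groups)

# Groups in which every member asked at least one question.
groups_full = total_groups - groups_partial

# Students who asked no questions (one per partial group in this breakdown).
non_askers = sum((size - askers) * n for size, askers, n in partial_groups)

# Derived, not reported: students who asked at least one question.
askers_total = total_students - non_askers
```

Under these assumptions, the breakdown is consistent with the 53 groups reported in the text and implies that 173 of the 183 students asked at least one question.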

5.2. What Types of Questions Did Students Ask?

The students’ peer-to-peer questions fell into three main categories: social, process, and content. Below, each category of questions is described, and a sample excerpt is used to illustrate the question type. Within the following excerpts, all the questions are italicized, and representative questions are both italicized and bolded.

5.2.1. Social Questions

The social questions were unrelated to the assigned task and to the content of acid–base neutralization, and they did not help students understand the activity better. The bolded questions in the excerpt below are examples of ‘social’ questions.

- S1: C’mon. I’m the calligrapher. All right, if you have one, I’ll take it.

- S2: Calligrapher, do you want a pen? Do you want a fountain pen?

- S1: If you have one, I’ll take it.

- S2: I do have like not but like you played with before.

- S1: I’ll draw something pretty.

- S2: Like a smiley face?

- S1: Yeah, like or whatever you want really.

- S3: Oh, really?

- S1: Yeah.

- S3: Like your doodle?

- S1: Yeah.

- S2: I’m sure he’d appreciate that. Garfield? You should draw Garfield.

- S1: I will. I will draw Garfield.

- S2: Garfield cool?

- S3: No.

5.2.2. Process Questions

The process questions were those inquiries that sought out what was required in the activity; what a specific prompt was asking of students; or how to complete a process such as balancing an equation or sketching a graph. The excerpt below has a bolded example of a ‘process’ question.

- S1: Net ionic equation.

- S2: I’m gonna look something up.

- S2: Oh, is it adding the plus and minuses and aqueous? Yeah.

- S1: Which ones how do we know if it’s aqueous or not?

- S2: I don’t know how we know that though.

- S1: Well should we just write everything spaced out like that and then have the same on each side like that?

- S2: Well, that makes sense. Is that kind of like what they have?

- S1: I’ll just do that.

5.2.3. Content Questions

The content questions inquired about the activity’s subject matter, which included acid–base neutralization and conductivity. In the excerpt below, the bolded questions are examples of content questions asked within a group.

- S4: Is that electronegativity?

- S2: Did we even talk about it?

- S1: OK, it’s like the stronger the acid or the stronger the base, the higher the conductivity?

- S4: Wouldn’t it become more electrically conductive, since they’re splitting off into electrons?

- S1: But won’t it decrease since you’re neutralizing it?

- S2: Yeah, if.

- S4: Oh yeah, that’s true.

- S2: It’s weak electrical conductivity. It’s a weak acid or base.

- S1: Yeah, it’s like you’re getting closer to seven, which is neutral pH.

- S3: Yeah.

- S2: And sodium hydroxide, that’s a strong base, right?

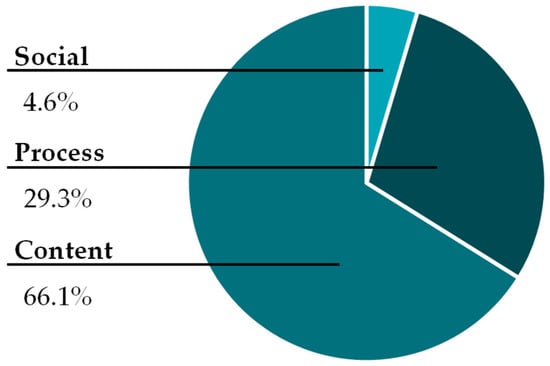

Figure 3 shows the relative proportions of each type of question posed. Most of the peer-to-peer questions were content questions, with social questions being the fewest.

Figure 3.

Proportion of the categories of questions asked.

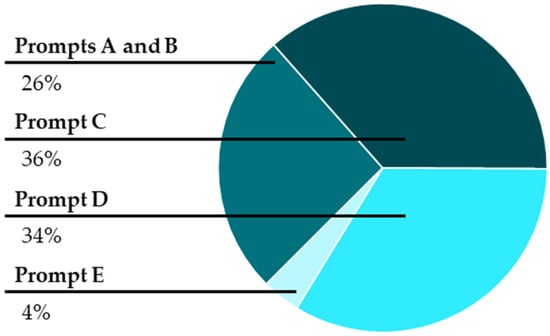

Due to their prevalence, we chose to further analyze the content questions to uncover which of the activity prompts elicited the greatest number of questions and their Bloom’s taxonomy classification. We assumed the prompts that were algorithmic in nature, such as writing and balancing an equation, would elicit fewer questions because we expected most students would already know how to perform these tasks. Conversely, we expected that the prompts that asked students to make a prediction, sketch a graph, or explain their reasoning would elicit the most content questions.

Figure 4 shows the proportion of content questions elicited by each prompt. Prompts A and B, which required writing and balancing of equations, elicited almost one-fourth of all the content questions. However, as expected, prompts C and D, which were the most challenging for the students, elicited most of the content questions.

Figure 4.

Percentage of content questions elicited by each of the activity’s prompts.

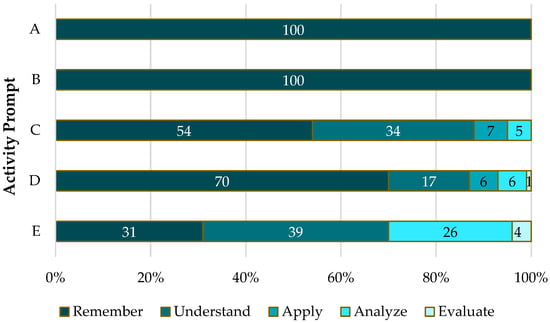

Furthermore, we analyzed the types of content questions that students asked using Bloom’s taxonomy (). We expected that the algorithmic-type prompts (such as writing and balancing an equation) would elicit questions at lower levels of Bloom’s taxonomy, while prompts asking for predictions, sketching (creating), and explaining would elicit questions at higher levels of Bloom’s taxonomy. Figure 5 shows the proportions of content questions asked at each of the levels of Bloom’s taxonomy. Unfortunately, across all prompts, the largest proportion of the questions posed were at the two lowest levels of Bloom’s taxonomy. However, it is encouraging to see that some of the peer-to-peer questions ranked high in Bloom’s taxonomy.

Figure 5.

Proportion of content questions asked, ranked using Bloom’s taxonomy.

5.3. What Roles or Functions Did the Peer-to-Peer Questions Play in Completing the Collaborative Task?

We found that the peer-to-peer questions helped to initiate and promote conversations and engagement in the activity, elicit additional ideas, challenge ideas raised in groups, express one’s ideas or doubts, seek consensus, and promote social metacognition. Each of these roles/functions is described below, with illustrative examples provided.

5.3.1. Initiate and Promote Conversations and Engagement

We noted that in many groups, conversations started with a question from one of the group members. As in the excerpt below, the questions were followed by responses, which led to more questions and responses. In this way, the peer-to-peer questions both initiated and sustained conversations and therefore helped keep students engaged in their collaborative activity. The excerpt below is from a group in which a total of 44 peer-to-peer questions were asked.

- S1: Isn’t hydrochloric acid just HCl?

- S2: Yeah, just HCl.

- S3: Write a balanced equation for the acid base reaction.

- S2: Then you get water at the end too, right?

- S3: Yeah. It’s NaOH + HCl.

- S2: What is it supposed to equal?

- S1: Water and NaCl.

- S2: It is sodium chloride and water.

- S3: And then this is our balanced equation. And write a net ionic equation for neutralize.

- S2: So, the net ionic equation. Isn’t that just the hydrogens and the OH? Then we get the water.

- S3: Would you cancel out the water?

- S2: So, I’m not sure.

5.3.2. Express One’s Ideas

We noted that in some cases the students expressed their ideas as questions. It could be that these students were unsure of their ideas, or they were seeking confirmation of their ideas from their peers. In the bolded question below, the student seems to be commenting on their peer’s statement but phrases their comment in the form of a question.

- S2: And then I think it’s supposed to be add base. For our prediction, I think the conductivity would start positive because of the H pluses, right?

- S1: Yeah.

- S2: So, start out positive charge from the H plus of the acid and then is slowly neutralized with the strong base.

- S1: So, then the conductivity would become more negative?

- S2: Yeah, then the conductivity is negative as excess strong base is added.

5.3.3. Elicit Additional Ideas

The students asked questions to elicit additional ideas from their peers. For example, in the bolded question below, the student posed a question to see if their peers had any more ideas to add.

- S1: To make it more or to have a higher level of electrical conductivity? Yes.

- You’re going to write about excess NaOH?

- S2: So, anything else?

- S1: I think we’re good. We’ve got a bit. I think we’re at the equivalence point.

- S2: Should we go at the equivalence point? Would it be zero?

- S1: I don’t know if it’s like zero because I don’t know how it’s measured. But I’d say it’s like neutral.

- S2: Can conductivity be neutral?

- S1: Yeah, I think so. This or maybe it is zero. I think either way it gets your point across.

5.3.4. Challenge Ideas Raised by Peers

Our results showed that the students challenged their peers’ ideas by asking questions. The bolded question below is an example where a student offered a counter idea by posing a question.

- S4: Is that electronegativity?

- S2: Did we even talk about it?

- S1: OK, it’s like the stronger the acid or the stronger the base, the higher the conductivity?

- S4: Wouldn’t it become more electrically conductive, since they’re splitting off into electrons?

- S1: But won’t it decrease since you’re neutralizing it?

- S2: Yeah, if.

- S4: Oh yeah, that’s true.

- S2: It’s weak electrical conductivity. It’s a weak acid or base.

- S1: Yeah, it’s like you’re getting closer to seven, which is neutral pH.

- S3: Yeah.

- S2: And sodium hydroxide, that’s a strong base, right?

5.3.5. Seek Consensus

One of the ways that students checked for consensus was through asking questions to ensure that the whole group agreed. In the excerpt below, the bolded question illustrates a student checking whether or not their group agreed on the final response.

- S1: So, to make a balanced equation, is it like NaOH plus HCl are those the two things we’re working with?

- S2: That’s what I understood.

- S1: OK, so what do we make with that?

- S2: So, can you just go ok, so if you’re making them an acid and base this is your, this is your acid.

- S1: So, would it be NaCl and H2O?

- S2: That’s what I would say.

- S3: OK, are we in the first part?

- S1: That’s what we did. Ok, do we think that’s right?

- S2: I think so.

5.3.6. Promote Social Metacognition

Our results show that the peer-to-peer questions promoted social metacognition. We found that students in groups exhibited the metacognitive skills of monitoring and evaluation. For example, in the excerpt below, the bolded question shows a student checking with their peers to see if their reasoning is right.

- S1: (Reads aloud the question from assignment about predicting conductivity)

- S4: It would become less conductive conductivity would go lower, right?

- S2: Yes.

- S4: Because you are adding a base. Is that right?

- S2: Yea I think so.

- S1: Base was added I think the, yeah it would go down. Is what you said?

- S3: What did you say?

- S1: Okay, if the base is added.

- S2: The conductivity goes down.

- S1: The conductivity will go down.

6. Discussion and Conclusions

This study sought to characterize peer-to-peer questions, analyze patterns in those questions, and explore the role that the questions played during the course of completing a collaborative activity. In a classroom setting where students are collaborating on an assigned activity, peer-to-peer questions are a form of student interaction as well as a way for students to engage with the activity (). Such student engagement has cognitive, behavioral, and emotional dimensions (). More specifically, asking questions is considered to be a form of behavioral engagement (). As expected, student engagement in this study, as characterized by question frequency, varied across groups, with 2–44 questions posed per group. In most of the groups (53 of 63), every member asked at least one question. Therefore, through the lens of peer-to-peer questions, most students were engaged in the collaborative activity.

Moreover, from our results, one key role that the peer-to-peer questions played was that of initiating and sustaining conversations within the groups. In the sample excerpt used to illustrate this role, the conversation in that group started with a question, followed by conversations interspersed with questions.

As indicated above, this study identified three broad categories of peer-to-peer questions: social, process, and content questions. Social questions were the least commonly asked questions, while content questions were the most commonly asked. This result differs from the extant research in which most of the questions asked were procedural questions (). It is worth noting here that the context of each activity may affect this finding. While social questions are not relevant to the subject matter of the activity, we suggest that they play a role in the social dynamics of a group. For example, students who engage in side talk would seem to be more ‘free’ or open with each other and are therefore more likely to contribute to the activity. Process questions help students obtain clarification on the various aspects of the activity, such as understanding the meaning of specific prompts or how to complete each part of the activity. Content questions, which were the majority of the questions in this study, could point to a number of things, such as gaps in what students know or what they are thinking about ().

When students enter a course, the instructors for the course assume the students possess a basic level of prior process and content knowledge. In this collaborative activity within General Chemistry II, we presumed that all students would be fluent in writing and balancing equations. Students were expected to transfer their understanding of acid–base neutralization to the scenario in the activity. We expected that there would be fewer or no content questions involving writing and balancing equations. At the same time, we expected content questions for the prompts involving predicting, sketching a graph, and explaining reasoning. Interestingly, there were still many content questions asked about the ‘familiar concepts’, providing evidence for the need to review relevant material covered in prior courses.

Some of the prompts in the collaborative activity required mere recall while others required conceptual understanding. The classification of the content questions revealed that most of the student content questions were at the lower levels of Bloom’s taxonomy. As we anticipated, all the content questions posed while the students were actively writing and balancing equations were at the remember level of Bloom’s taxonomy.

However, contrary to what we expected, the prompts that asked students to predict, create, and explain elicited more questions at the lower levels of Bloom’s taxonomy than at the higher levels. The right kinds of questions could lead students to appropriate discussions, which in turn could lead them towards correct responses. When students ask lower-level questions, this may be a sign of a lack of conceptual understanding. It also provides further evidence that spontaneously generated student questions often tend to be lower-level questions. This finding lends support to the notion that there is a link between the level or type of question asked and the possible level of knowledge construction that occurs ().

Students were not explicitly instructed to ask each other questions. It is therefore encouraging that they did so anyway, and that these peer-to-peer questions played a role during the collaborative activity. A number of students phrased their contributions as questions. While we cannot infer intent, the questions allowed students to run their ideas by their peers to confirm accuracy. In this way, these students were able to both contribute to the group and check their own ideas. Student statements phrased as questions could also be a form of self-questioning, which is considered a metacognitive skill that helps students engage in metacognition ().

Peer-to-peer questions were instrumental in promoting social metacognition. Two metacognitive skills evident in the student conversations were monitoring and evaluation (). In the example excerpt shown above (see Section 5.3.6), student questions such as ‘does that make sense’, ‘does that look okay’, and ‘did we do that correctly’ allowed the students to examine their thinking and their resulting solutions. At the same time, such questions also helped ensure there was consensus within the group, particularly when students asked questions such as ‘are we on the same page’ or ‘do we all agree’.

7. Implications for Research and Teaching

Our results add to what is already known in science education about the benefits of student-centered instructional strategies such as collaborative learning. As part of the problem-solving process within their collaborative groups, the students asked each other questions that ultimately helped them complete the assigned activity. This occurred in the social setting created through the assigned activity. The focus on student peer-to-peer questions illuminated the fact that questions are a way through which students engage with both the assigned task and each other. Unlike in lecture settings, where students seldom ask questions, our study shows that the students in a group activity were engaged in asking many peer-to-peer questions. These peer-to-peer questions were beneficial because they not only supported student problem-solving efforts but also supported student social metacognition. Thus, for these benefits to be realized, it is necessary that we create environments that support students working together.

While our results showed that most students asked their peers at least one question during the activity, there were groups in which some students did not ask any questions. Furthermore, the number of questions asked varied widely across groups (2–44 questions), with an average of 15 questions per group. This leaves us to wonder why only two questions were asked in one group while 44 were asked in another.

Future research should therefore explore the causes of this variation in the number of questions posed. Are these results due to factors such as gender, societal or classroom culture, group composition, student major, or year in college? Furthermore, in this study students were instructed to work with those who sat next to them. The groups were not pre-assigned; nevertheless, the students could not choose their group from the entire class roster. Could this factor have had any impact on the questioning behavior within the groups? How would the number and type of questions change across a semester if students consistently worked within the same group? How would the results differ if students were first instructed about the different types of questions and the levels of Bloom’s taxonomy before engaging in group work? Would one instructional period on how to engage in questioning be enough, or would it take multiple instructional periods throughout the semester to enhance student questioning behavior? Each of these questions warrants future study in order to better understand how best to support students in posing effective peer-to-peer questions.

We did not anticipate students having many content questions about writing and balancing equations because this is covered both in high school chemistry classes and first-semester general chemistry. Moreover, these content questions were at the lowest level of Bloom’s taxonomy. Therefore, another area of future research could be whether students pose higher-level questions when solving familiar tasks or whether familiar tasks only elicit recall questions. It is interesting to note that students were most successful in these tasks and yet they had questions about the prompts’ subject matter. As noted above, student questions can be a source of feedback for teachers (). One key takeaway from our results is that instructors should review relevant prior course material to ensure that all students have the fundamental knowledge to be successful in the course.

The results from our study revealed that students struggled to answer questions that required an explanation of their predictions, the sketching of a graph, or an explanation of why they drew their graphs the way they did. One likely explanation for this observation is a lack of conceptual understanding of acid–base neutralization and conductometry. The assigned activity required students to transfer their knowledge of acid–base neutralization to the context of conductometry, and a lack of conceptual understanding would have made this transfer difficult. This could also explain why there were very few peer-to-peer questions at the higher levels of Bloom’s taxonomy. Future research could systematically study the connection between student conceptual understanding and the nature of student questions.

Our results showed that most of the posed content questions were at the two lowest levels of Bloom’s taxonomy. Furthermore, we observed that most student responses to prompts that required higher-order thinking were incorrect, as shown in Figure 2 above. Did the fact that most of the content questions posed while answering these prompts were at these two lowest levels affect the students’ ability to come up with correct responses? We hypothesize that posing questions at higher levels of Bloom’s taxonomy helps students construct knowledge at correspondingly higher levels. Therefore, a future study should use Bloom’s taxonomy to explore whether there is a causal relationship between the levels of the questions posed and the resulting correctness of the group responses.
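As a first step toward such a study, the coded questions for each group could be cross-tabulated against the correctness of that group's response. The following is only a minimal sketch under stated assumptions, not the analysis used in this study: the record layout, the function names, the numeric coding of Bloom's levels (1 = lowest, 6 = highest), the 50% threshold, and all of the data are hypothetical and serve purely as illustration.

```python
from collections import Counter

# Assumption: Bloom's levels are coded as integers 1 (lowest) to 6 (highest),
# so the "two lowest levels" discussed in the text are 1 and 2.
LOWER_LEVELS = {1, 2}


def share_lower_level(levels):
    """Return the fraction of a group's content questions coded at levels 1 or 2."""
    if not levels:
        return 0.0
    return sum(1 for level in levels if level in LOWER_LEVELS) / len(levels)


def cross_tabulate(groups):
    """Count groups by (mostly lower-level questions?, group response correct?)."""
    table = Counter()
    for group in groups:
        # Assumption: "mostly lower-level" means more than half of the questions.
        mostly_lower = share_lower_level(group["levels"]) > 0.5
        table[(mostly_lower, group["correct"])] += 1
    return table


# Hypothetical records, invented for illustration only (not data from this study).
hypothetical_groups = [
    {"levels": [1, 1, 2], "correct": False},
    {"levels": [4, 5, 1], "correct": True},
    {"levels": [1, 2], "correct": False},
    {"levels": [3, 4], "correct": True},
]

table = cross_tabulate(hypothetical_groups)
for (mostly_lower, correct), count in sorted(table.items()):
    print(f"mostly lower-level={mostly_lower}, correct={correct}: {count} group(s)")
```

A table of this kind can only describe an association between question level and correctness. Establishing the causal relationship raised above would require a study design that manipulates or controls the level of the questions posed.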

Author Contributions

Conceptualization: T.M.D., K.G. and J.N.; Methodology: T.M.D., K.G., S.M. and J.N.; Transcribing: T.M.D., K.G., S.M. and J.N.; Formal analysis: T.M.D., K.G., S.M. and J.N.; Writing – original draft preparation: T.M.D., K.G., S.M. and J.N.; Writing – review and editing: T.M.D., K.G. and J.N.; Administration and supervision: J.N. All authors have read and agreed to the published version of the manuscript.

Funding

Funding to support Grieger was provided by the National Science Foundation (NSF funding number 2411805). Funding to support Miller’s summer REU research was provided by the National Science Foundation (NSF funding numbers DUE1560142 and DUE 1852045).

Institutional Review Board Statement

The study was approved under Protocol No. IRB0004625 by the Institutional Review Board of North Dakota State University on 9 January 2023.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are not available due to privacy restrictions.

Acknowledgments

This study was supported in part by the National Science Foundation (2411805, DUE1560142, and DUE 1852045). The authors wish to thank the students who participated in this project and Kristina Caton from the NDSU Center for Writers for her help with revising the manuscript for clarity.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Adams, P. (2006). Exploring social constructivism: Theories and practicalities. Education, 34(3), 243–257.

- Anderson, N. J. (2004). Metacognitive reading strategy awareness of ESL and EFL learners. The CATESOL Journal, 16(1), 11–27.

- Biddulph, F., & Osborne, R. (1982). Some issues relating to children’s questions and explanations: Working Paper no. 106. University of Waikato.

- Black, P., Harrison, C., Lee, C., Marshall, B., & Wiliam, D. (2002). Working inside the black box: Assessment for learning in the classroom. King’s College London.

- Bloom, B. S. (1956). Bloom’s taxonomy of educational objectives, handbook 1: Cognitive domain. David McKay Company.

- Blosser, P. E. (2000). How to ask the right questions. NSTA Press.

- Bybee, R. W. (2000). Teaching science as inquiry. In J. Minstrell, & E. H. van Zee (Eds.), Inquiring into inquiry learning and teaching in science (pp. 20–46). American Association for the Advancement of Science.

- Carner, R. L. (1963). Levels of questioning. Education, 83, 546–550.

- Chin, C., Brown, D. E., & Bruce, B. C. (2002). Student-generated questions: A meaningful aspect of learning in science. International Journal of Science Education, 24(5), 521–549.

- Chin, C., & Osborne, J. (2008). Students’ questions: A potential resource for teaching and learning science. Studies in Science Education, 44(1), 1–39.

- Chiu, M. M., & Kuo, S. W. (2009). From metacognition to social metacognition: Similarities, differences and learning. Journal of Education Research, 3(4), 1–19.

- Crawford, T., Kelly, G. J., & Brown, C. (2000). Ways of knowing beyond facts and laws of science: An ethnographic investigation of student engagement in scientific practices. Journal of Research in Science Teaching, 37(3), 237–258.

- Dogan, F., & Yucel-Toy, B. (2022). Students’ question asking process: A model based on the perceptions of elementary school students and teachers. Asia Pacific Journal of Education, 42(4), 786–801.

- Erdogan, I. (2017). Turkish elementary students’ classroom discourse: Effects of structured and guided inquiry experiences that stimulate student questions and curiosity. International Journal of Environmental and Science Education, 12(5), 1111–1137.

- Fredricks, J. A., Blumenfeld, P. C., & Paris, A. H. (2004). School engagement: Potential of the concept, state of the evidence. Review of Educational Research, 74(1), 59–109.

- Goos, M., Galbraith, P., & Renshaw, P. (2002). Socially mediated metacognition: Creating collaborative zones of proximal development in small group problem solving. Educational Studies in Mathematics, 49(2), 193–223.

- Hakkarainen, K. (2003). Progressive inquiry in a computer-supported biology class. Journal of Research in Science Teaching, 40(10), 1072–1088.

- Halmo, S. M., Bremers, E. K., Fuller, S., & Stanton, J. D. (2022). “Oh, that makes sense”: Social metacognition in small-group problem solving. CBE—Life Sciences Education, 21(3), ar58.

- Harper, K. A., Etkina, E., & Lin, Y. (2003). Encouraging and analyzing student questions in a large physics course: Meaningful patterns for instructors. Journal of Research in Science Teaching, 40(8), 776–791.

- Lai, M., & Law, N. (2013). Questioning and the quality of knowledge constructed in a CSCL context: A study on two grade-levels of students. Instructional Science, 41(3), 597–620.

- Marbach-Ad, G., & Sokolove, P. G. (2000). Can undergraduate biology students learn to ask higher level questions? Journal of Research in Science Teaching, 37(8), 854–870.

- Millar, R., & Osborne, J. (1998). Beyond 2000: Science education for the future. King’s College London.

- Naibert, N., Vaughan, E. B., Lamberson, K. M., & Barbera, J. (2022). Exploring student perceptions of behavioral, cognitive, and emotional engagement at the activity level in general chemistry. Journal of Chemical Education, 99(3), 1358–1367.

- NGSS Lead States. (2013). Next generation science standards: For states, by states. National Academies Press.

- Nyachwaya, J. M. (2016). General chemistry students’ conceptual understanding and language fluency: Acid–base neutralization and conductometry. Chemistry Education Research and Practice, 17(3), 509–522.

- Nystrand, M., Wu, L. L., Gamoran, A., Zeiser, S., & Long, D. A. (2003). Questions in time: Investigating the structure and dynamics of unfolding classroom discourse. Discourse Processes, 35(2), 135–198.

- Penuel, W. R., Yarnall, L., Koch, M., & Roschelle, J. (2004). Meeting teachers in the middle: Designing handheld computer-supported activities to improve student questioning. In Embracing diversity in the learning sciences (pp. 404–409). Routledge.

- Powell, K. C., & Kalina, C. J. (2009). Cognitive and social constructivism: Developing tools for an effective classroom. Education, 130(2), 241–250.

- Rosenshine, B., Meister, C., & Chapman, S. (1996). Teaching students to generate questions: A review of the intervention studies. Review of Educational Research, 66(2), 181–221.

- Smith, C. L., Maclin, D., Houghton, C., & Hennessey, M. G. (2000). Sixth-grade students’ epistemologies of science: The impact of school science experiences on epistemological development. Cognition and Instruction, 18(3), 349–422.

- Tiffany, G., Grieger, K., Johnson, K., & Nyachwaya, J. (2023). Characterizing students’ peer–peer questions: Frequency, nature, responses and learning. Chemistry Education Research and Practice, 24(3), 852–867.

- Tracy, S. J. (2013). Qualitative research methods: Collecting evidence, crafting analysis, communicating impact. Wiley-Blackwell.

- van Aalst, J. (2009). Distinguishing knowledge-sharing, knowledge-construction, and knowledge-creation discourses. International Journal of Computer-Supported Collaborative Learning, 4(3), 259–287.

- Van De Bogart, K. L., Dounas-Frazer, D. R., Lewandowski, H. J., & Stetzer, M. R. (2017). Investigating the role of socially mediated metacognition during collaborative troubleshooting of electric circuits. Physical Review Physics Education Research, 13(2), 020116.

- van Zee, E. H., Iwasyk, M., Kurose, A., Simpson, D., & Wild, J. (2001). Student and teacher questioning during conversations about science. Journal of Research in Science Teaching, 38(2), 159–190.

- Vygotsky, L. S. (1978). Mind in society: Development of higher psychological processes. Harvard University Press.

- Watts, M., Gould, G., & Alsop, S. (1997). Questions of understanding: Categorizing pupils’ questions in science. School Science Review, 79(286), 57–63.

- Webb, N. M. (2009). The teacher’s role in promoting collaborative dialogue in the classroom. British Journal of Educational Psychology, 79(1), 1–28.

- Woodward, C. (1992). Raising and answering questions in primary science: Some considerations. Evaluation & Research in Education, 6(2–3), 145–153.

- Yu, F.-Y. (2009). Scaffolding student-generated questions: Design and development of a customizable online learning system. Computers in Human Behavior, 25(5), 1129–1138.

- Zeegers, Y., & Elliott, K. (2019). Who’s asking the questions in classrooms? Exploring teacher practice and student engagement in generating engaging and intellectually challenging questions. Pedagogies: An International Journal, 14(1), 17–32.

- Zhang, J., Scardamalia, M., Lamon, M., Messina, R., & Reeve, R. (2007). Socio-cognitive dynamics of knowledge building in the work of 9- and 10-year-olds. Educational Technology Research and Development, 55(2), 117–145.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).