AI and Eye Tracking Reveal Design Elements’ Impact on E-Magazine Reader Engagement

Abstract

1. Introduction

1.1. Eye-Tracking Case Studies: Emotional Responses to E-Magazine Content

1.2. Discrepancy Between Traditional and AI Eye Tracking in Reading Behaviour

1.2.1. Intelligible Background Speech

1.2.2. Neuromarketing Methodologies and Implications for Reading Interventions

- RQ 1. How do content structure and background colour contrast in e-magazine articles affect readers’ sustained attention levels?

- RQ 2. To what extent do structured content and high background contrast reduce cognitive demand compared with unstructured content and low background contrast in e-magazine articles?

- RQ 3. What are the differences in emotional responses elicited by e-magazine articles with structured content and high background contrast versus those with unstructured content and low background contrast?

- RQ 4. Are the effects of content structure and background colour contrast on reader engagement, cognitive processing, and emotional responses consistent across different article subjects?

- RQ 5. Which specific aspects of content structure (e.g., headings, paragraphing, bullet points) have the greatest impact on reader engagement and cognitive processing?

- RQ 6. How does the interaction between content structure and background colour contrast influence the overall reader experience in e-magazine articles?

2. Materials and Methods

2.1. Research Methods

2.1.1. Research Methods for Attention Analysis

2.1.2. Research Methods for Emotional Analysis

2.2. Testing on Human Studies—Setup

2.3. Testing with AI Eye Tracking—Setup

3. Attentional Processing Results

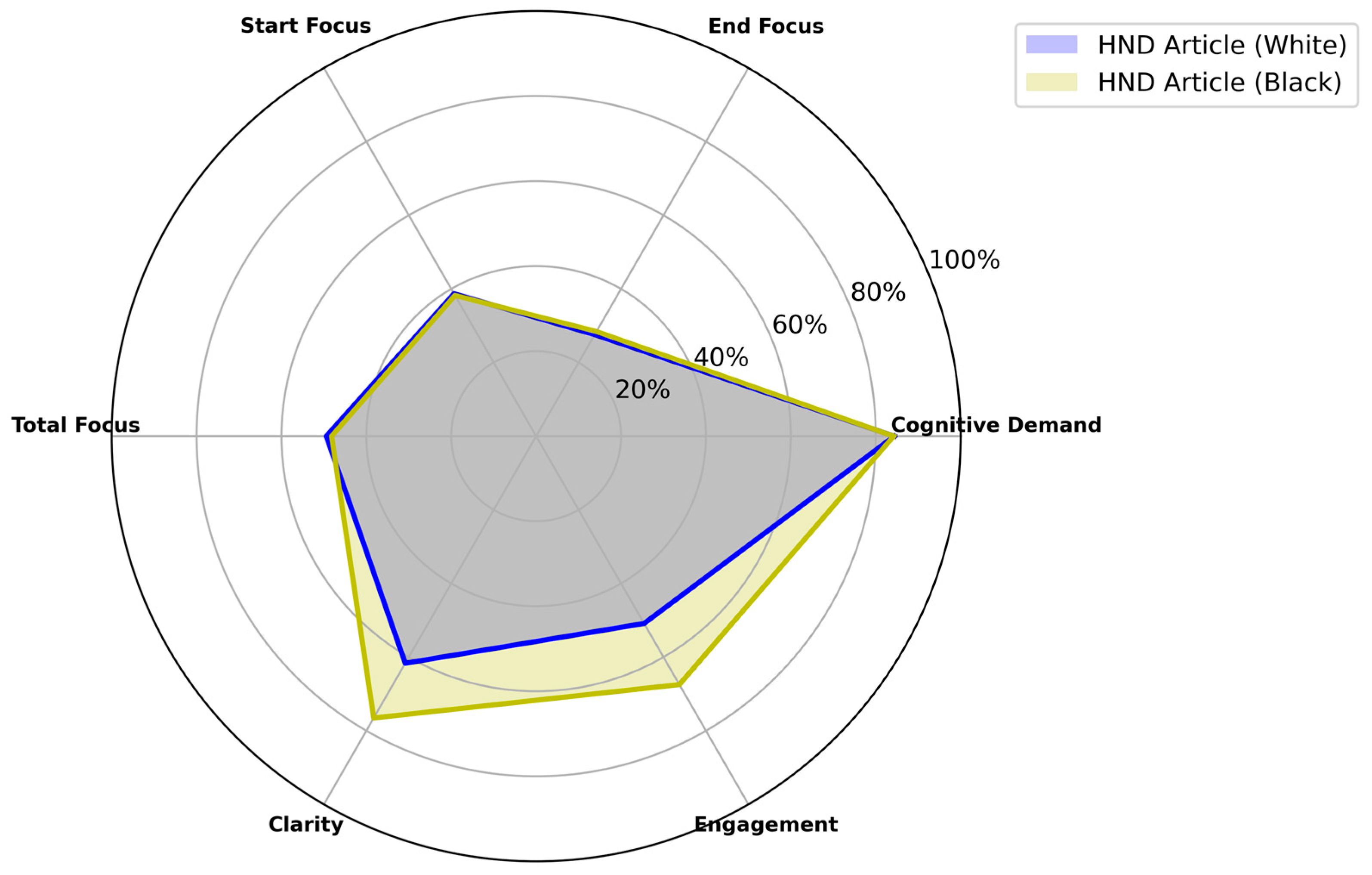

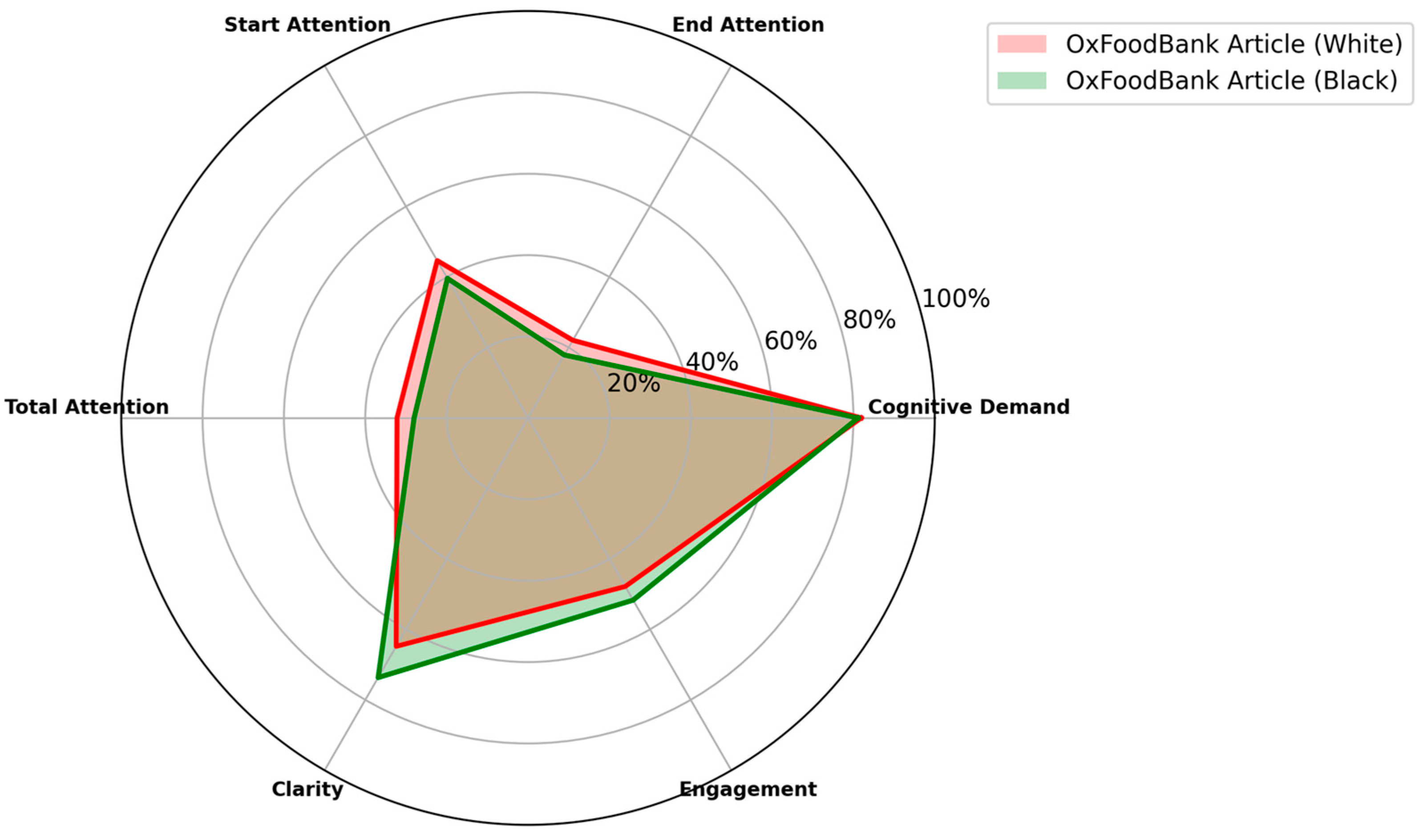

3.1. A Comparative Analysis of Key Attention Metrics Between the HND and OxFoodBank Articles

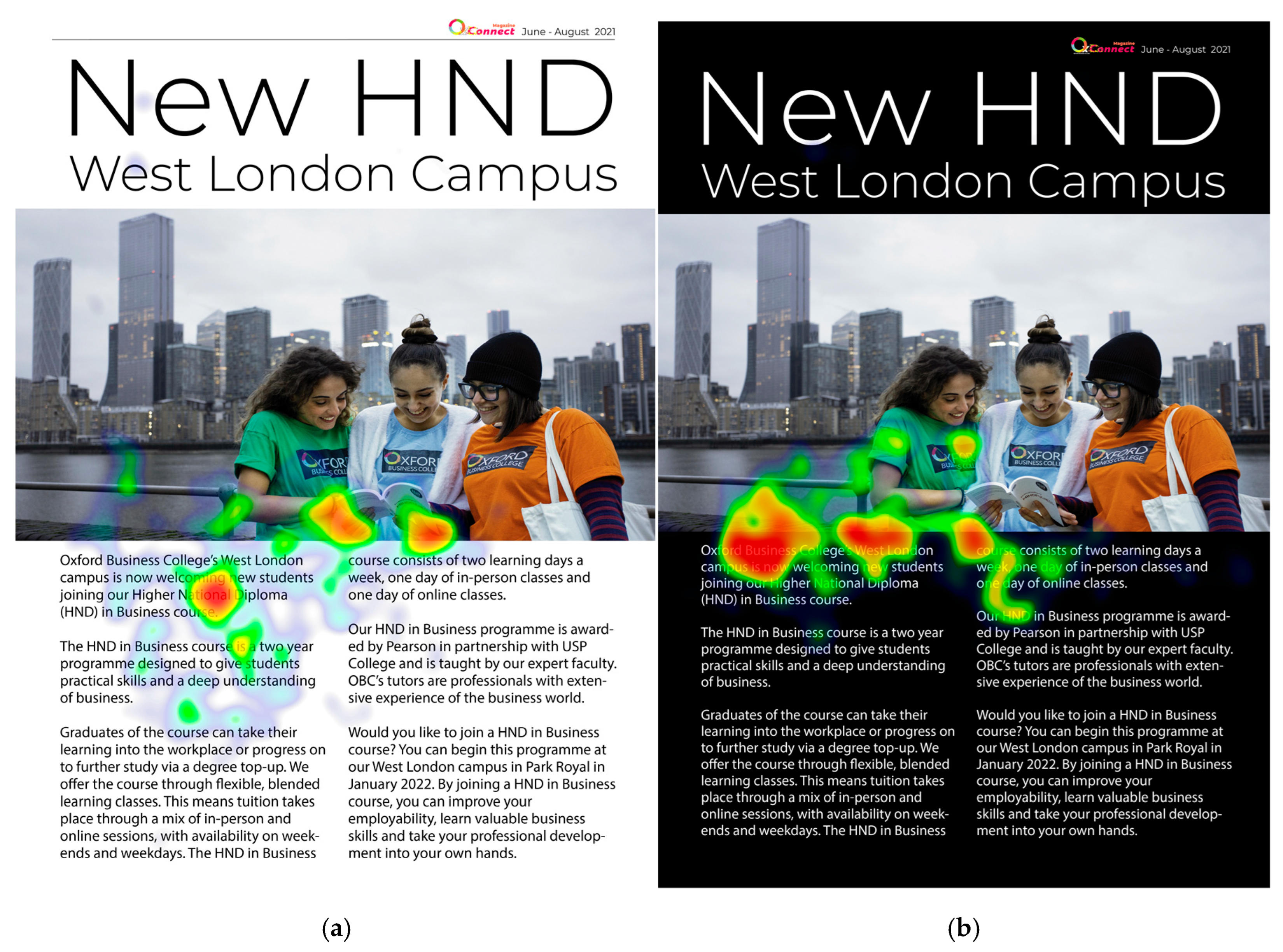

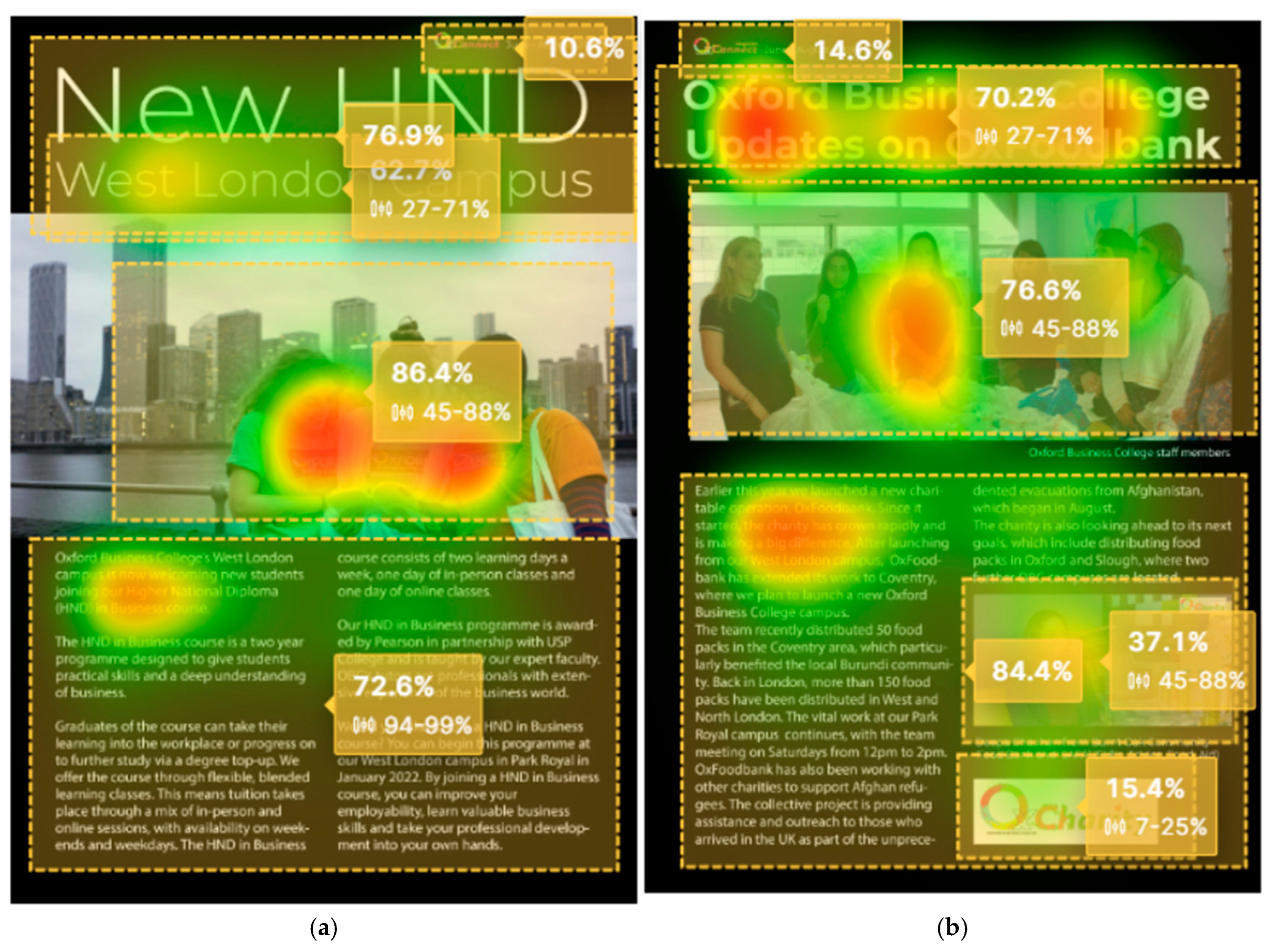

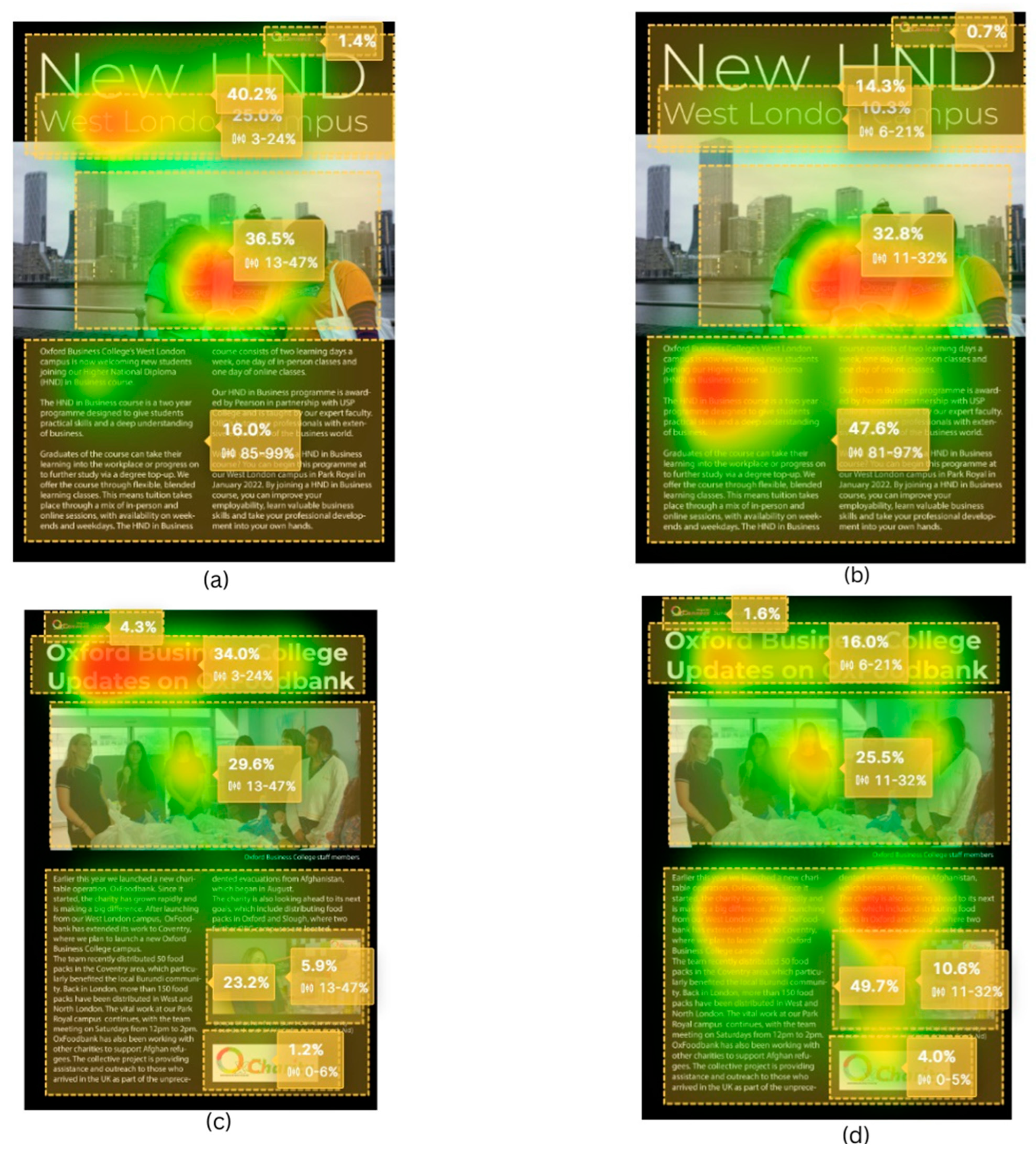

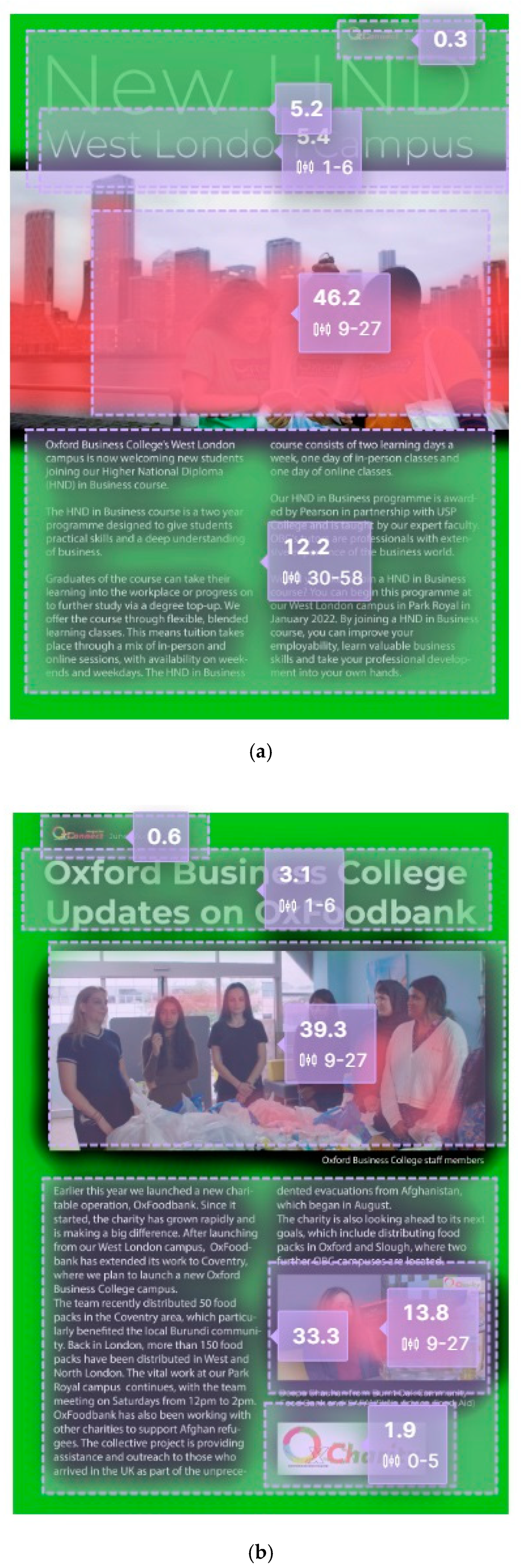

3.2. Reading Patterns in HND and OxFoodBank Articles with White Background

3.3. Reading Patterns in HND and OxFoodBank Articles with Black Background

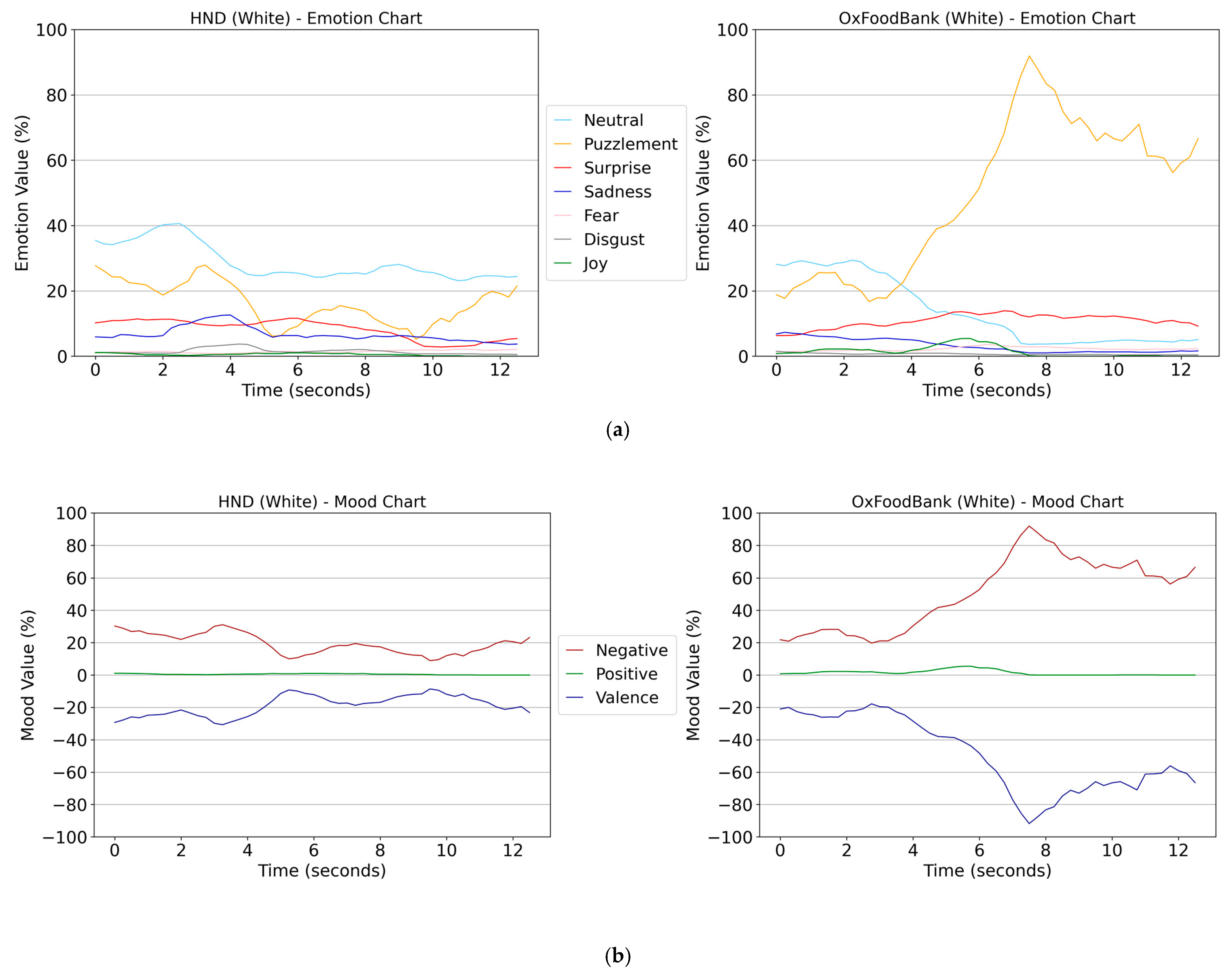

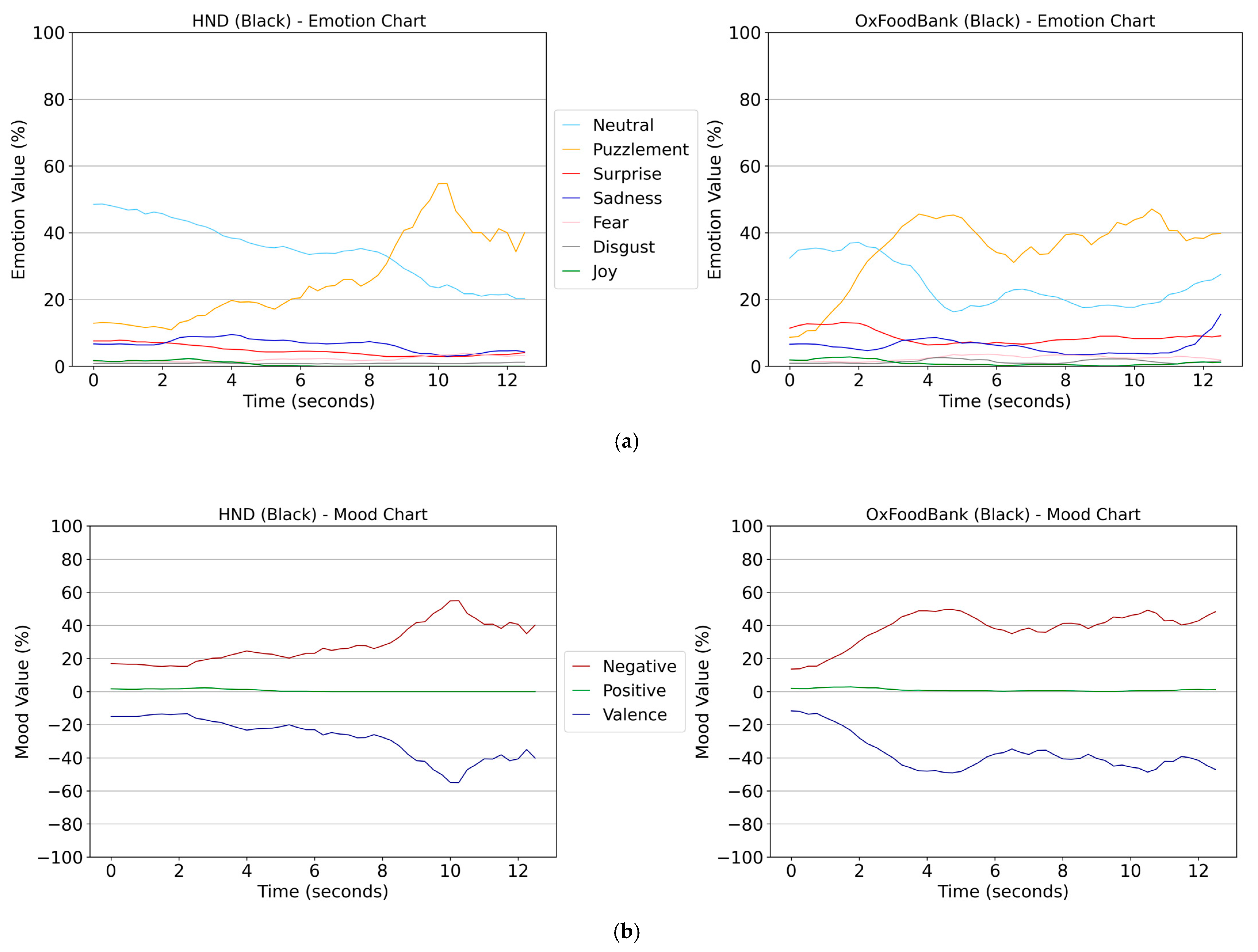

4. Emotional Processing Results

5. Discussion

6. Proposed Additional Neuromarketing Analyses for Enhanced Insights

- Electroencephalography (EEG) Analysis: EEG measurements would enable a more direct assessment of cognitive load and attention. This method could elucidate the neural correlates of engagement and cognitive demand during reading. Metrics such as frontal theta power and alpha-band suppression could be analysed to quantify cognitive workload and attentional processes.

- Galvanic Skin Response (GSR): GSR measurements would allow physiological arousal to be assessed. This metric could provide supplementary data on emotional engagement and stress responses during reading, complementing the existing eye-tracking and emotional-response data.

- Heart Rate Variability (HRV): Measuring HRV would enable cognitive load and emotional states to be assessed. Changes in HRV can indicate shifts in cognitive effort and emotional engagement, providing an additional layer of physiological data against which the eye-tracking findings could be corroborated.

- Fixation-Related Potentials (FRPs): Co-registering EEG with eye tracking would enable the analysis of FRPs, that is, EEG responses time-locked to the onset of individual fixations. This technique could reveal the cognitive processes occurring during specific fixations, offering a more temporally precise understanding of information processing.

- Text Linguistics Analysis: A detailed linguistic analysis of the articles would examine factors such as sentence complexity, vocabulary difficulty, and coherence. This could help explain differences in cognitive demand and engagement between the articles.

- Individual Differences Analysis: Investigating how individual differences in reading ability, prior knowledge, and cognitive style influence engagement and comprehension would be beneficial. This could involve administering standardised tests and correlating the results with eye-tracking and neurophysiological data.

- Multimodal Data Fusion: Machine learning techniques could be used to integrate data from multiple modalities (eye tracking, EEG, GSR, etc.). This approach could provide a more comprehensive understanding of the cognitive and emotional processes involved in reading and comprehension.

- Semantic Gaze Mapping: Semantic gaze mapping techniques would enable analysis of how readers’ gaze patterns relate to specific semantic content within the articles. This could show which types of information attract and hold attention most effectively.
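The semantic gaze mapping idea in the last item can be illustrated with a minimal Python sketch. It assumes that fixations have already been detected (for example by the eye tracker's own software) and that each semantic region of a page, such as a heading or a block of body text, can be approximated by an axis-aligned rectangle. The class and function names (`Fixation`, `SemanticAOI`, `map_gaze_to_semantics`), the coordinates, and the fixation values are all hypothetical; this is not the study's pipeline or the API of any eye-tracking product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Fixation:
    x: float            # horizontal screen position, pixels
    y: float            # vertical screen position, pixels
    onset_ms: float     # fixation start, relative to page onset
    duration_ms: float


@dataclass(frozen=True)
class SemanticAOI:
    label: str          # semantic category, e.g. "heading" or "body text"
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, fix: Fixation) -> bool:
        return self.left <= fix.x <= self.right and self.top <= fix.y <= self.bottom


def map_gaze_to_semantics(fixations, aois):
    """Summarise fixations per semantic label.

    Each fixation is assigned to the first AOI (in list order) that contains it;
    fixations outside every AOI go to "outside". Returns dwell time, fixation
    count and time to first fixation (TTFF) for each label that was fixated.
    """
    dwell = defaultdict(float)
    count = defaultdict(int)
    first_onset = {}
    for fix in sorted(fixations, key=lambda f: f.onset_ms):
        label = next((a.label for a in aois if a.contains(fix)), "outside")
        dwell[label] += fix.duration_ms
        count[label] += 1
        first_onset.setdefault(label, fix.onset_ms)
    return {
        label: {"dwell_ms": dwell[label], "fixations": count[label], "ttff_ms": first_onset[label]}
        for label in dwell
    }


# Hypothetical page layout and fixation sequence.
aois = [
    SemanticAOI("heading", 0, 0, 800, 80),
    SemanticAOI("body text", 0, 81, 800, 600),
]
fixations = [
    Fixation(100, 40, 0, 250),
    Fixation(300, 200, 260, 180),
    Fixation(900, 300, 450, 120),   # x = 900 lies outside both AOIs
    Fixation(120, 50, 580, 200),
]
summary = map_gaze_to_semantics(fixations, aois)
for label, stats in summary.items():
    print(label, stats)
```

Keeping the semantic labels on the rectangles, rather than in the gaze log, means the same fixation data could be re-summarised under a different content coding (for example, headings versus bullet points) without re-recording.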

7. Recommendations for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

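| Abbreviation | Definition |
|---|---|
| OBC | Oxford Business College |
| PREDICT | AI eye-tracking software for predicting human behaviour |
| HND | E-magazine article analysed in this study (see Appendix B) |
| OxFoodBank | E-magazine article analysed in this study (see Appendix B) |
| CD | Cognitive demand |
| AOI | Area of interest |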

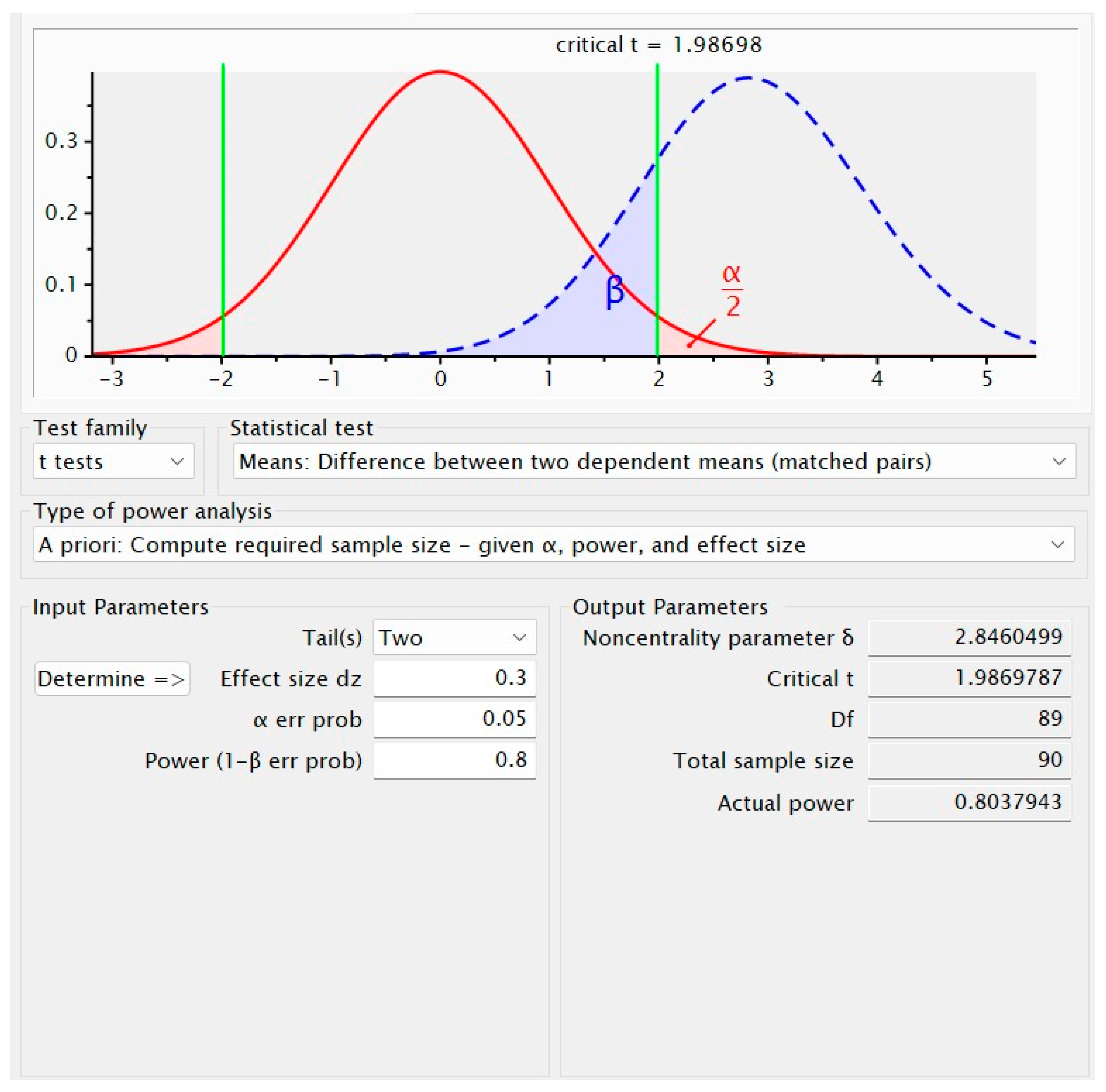

Appendix A. G*Power Software Results

Appendix B. Selected Articles for Research


| | Mean Difference | T-Statistic | p-Value | Cohen’s d | Effect Size Interpretation |
|---|---|---|---|---|---|
| Emotions | | | | | |
| Neutral | 0.1514 | 18.89 | 0.0000 * | 2.65 | Large |
| Puzzlement | −0.3296 | −8.33 | 0.0000 * | −1.17 | Large |
| Surprise | −0.0252 | −4.51 | 0.0000 * | −0.63 | Large |
| Sadness | 0.0352 | 11.69 | 0.0000 * | 1.64 | Large |
| Fear | −0.0079 | −8.61 | 0.0000 * | −1.21 | Large |
| Disgust | 0.0082 | 6.94 | 0.0000 * | 0.97 | Large |
| Joy | −0.0093 | −4.62 | 0.0000 * | −0.65 | Large |
| Mood | | | | | |
| Positive | −0.0093 | −4.62 | 0.0000 * | −0.65 | Large |
| Negative | −0.3130 | −8.27 | 0.0000 * | −1.16 | Large |
| Valence | 0.3035 | 7.90 | 0.0000 * | 1.11 | Large |

| | Mean Difference | T-Statistic | p-Value | Cohen’s d | Effect Size Interpretation |
|---|---|---|---|---|---|
| Emotions | | | | | |
| Neutral | 0.1008 | 10.93 | 0.0000 * | 1.53 | Large |
| Puzzlement | −0.0918 | −5.79 | 0.0000 * | −0.81 | Large |
| Surprise | −0.0413 | −19.59 | 0.0000 * | −2.74 | Large |
| Sadness | 0.0044 | 1.24 | 0.2220 | 0.17 | Small |
| Fear | −0.0034 | −2.72 | 0.0089 * | −0.38 | Medium |
| Disgust | −0.0051 | −6.22 | 0.0000 * | −0.87 | Large |
| Joy | −0.0035 | −4.72 | 0.0000 * | −0.66 | Large |
| Mood | | | | | |
| Positive | −0.0035 | −4.72 | 0.0000 * | −0.66 | Large |
| Negative | −0.0993 | −6.82 | 0.0000 * | −0.96 | Large |
| Valence | 0.0961 | 6.43 | 0.0000 * | 0.90 | Large |

| | Mean Difference | T-Statistic | p-Value | Cohen’s d | Effect Size Interpretation |
|---|---|---|---|---|---|
| Emotions | | | | | |
| Neutral | −0.0587 | −7.53 | 0.0000 * | −1.05 | Large |
| Puzzlement | −0.0972 | −4.03 | 0.0002 * | −0.56 | Large |
| Surprise | 0.0358 | 11.92 | 0.0000 * | 1.67 | Large |
| Sadness | 0.0027 | 1.34 | 0.1864 | 0.19 | Small |
| Fear | −0.0073 | −10.66 | 0.0000 * | −1.49 | Large |
| Disgust | 0.0059 | 4.64 | 0.0000 * | 0.65 | Large |
| Joy | −0.0012 | −1.06 | 0.2951 | −0.15 | Small |
| Mood | | | | | |
| Positive | −0.0012 | −1.06 | 0.2951 | −0.15 | Small |
| Negative | −0.0919 | −4.08 | 0.0002 * | −0.57 | Large |
| Valence | 0.0907 | 3.90 | 0.0003 * | 0.55 | Large |

| | Mean Difference | T-Statistic | p-Value | Cohen’s d | Effect Size Interpretation |
|---|---|---|---|---|---|
| Emotions | | | | | |
| Neutral | −0.1093 | −13.97 | 0.0000 * | −1.96 | Large |
| Puzzlement | 0.1406 | 4.73 | 0.0000 * | 0.66 | Large |
| Surprise | 0.0197 | 3.58 | 0.0008 * | 0.50 | Large |
| Sadness | −0.0281 | −7.69 | 0.0000 * | −1.08 | Large |
| Fear | −0.0029 | −4.37 | 0.0001 * | −0.61 | Large |
| Disgust | −0.0074 | −8.51 | 0.0000 * | −1.19 | Large |
| Joy | 0.0046 | 1.84 | 0.0711 | 0.26 | Medium |
| Mood | | | | | |
| Positive | 0.0046 | 1.84 | 0.0711 | 0.26 | Medium |
| Negative | 0.1217 | 4.24 | 0.0001 * | 0.59 | Large |
| Valence | −0.1167 | −4.00 | 0.0002 * | −0.56 | Large |
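Each of the four tables reports, per emotion and mood measure, a mean difference, a t statistic, a p-value, and Cohen’s d; the test and the variant of d are not stated here. If they are paired-samples t-tests with d computed on the difference scores (often written d_z), the two statistics are linked by t = d_z × √n. The reported rows are consistent with that reading, since t/d is roughly 7.1 in every row, but this is an inference rather than something the tables state. The Python sketch below is an illustration under that assumption, not the authors' analysis code: it computes both quantities from hypothetical paired scores using only the standard library. The helper names `paired_t_and_dz` and `dz_from_t` are hypothetical; p-values would additionally need the t distribution (for example via `scipy.stats.ttest_rel`).

```python
import math
from statistics import mean, stdev


def paired_t_and_dz(before, after):
    """Paired-samples t statistic and Cohen's d_z for two matched samples.

    Differences are taken as after - before. d_z is the mean difference
    divided by the sample standard deviation of the differences, and t is
    the mean difference divided by its standard error, so t == d_z * sqrt(n).
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [y - x for x, y in zip(before, after)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)  # sample SD with n - 1 in the denominator
    d_z = mean_diff / sd_diff
    t = mean_diff / (sd_diff / math.sqrt(n))
    return mean_diff, t, d_z


def dz_from_t(t, n):
    """Recover d_z from a reported paired t statistic and the number of pairs."""
    return t / math.sqrt(n)


# Hypothetical per-participant scores for one measure under two conditions.
condition_a = [0.40, 0.35, 0.50, 0.45, 0.30]
condition_b = [0.55, 0.45, 0.60, 0.62, 0.41]

mean_diff, t, d_z = paired_t_and_dz(condition_a, condition_b)
print(f"mean difference = {mean_diff:.4f}, t = {t:.2f}, d_z = {d_z:.2f}")
```

With the study's actual number of pairs, `dz_from_t` applied to a reported t value should reproduce the tabulated d if the d_z assumption holds.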

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Šola, H.M.; Qureshi, F.H.; Khawaja, S. AI and Eye Tracking Reveal Design Elements’ Impact on E-Magazine Reader Engagement. Educ. Sci. 2025, 15, 203. https://doi.org/10.3390/educsci15020203

Šola HM, Qureshi FH, Khawaja S. AI and Eye Tracking Reveal Design Elements’ Impact on E-Magazine Reader Engagement. Education Sciences. 2025; 15(2):203. https://doi.org/10.3390/educsci15020203

Chicago/Turabian Style: Šola, Hedda Martina, Fayyaz Hussain Qureshi, and Sarwar Khawaja. 2025. "AI and Eye Tracking Reveal Design Elements’ Impact on E-Magazine Reader Engagement" Education Sciences 15, no. 2: 203. https://doi.org/10.3390/educsci15020203

APA Style: Šola, H. M., Qureshi, F. H., & Khawaja, S. (2025). AI and Eye Tracking Reveal Design Elements’ Impact on E-Magazine Reader Engagement. Education Sciences, 15(2), 203. https://doi.org/10.3390/educsci15020203