Aligning the Operationalization of Digital Competences with Perceived AI Literacy: The Case of HE Students in IT Engineering and Teacher Education

Abstract

1. Introduction

2. Related Work

2.1. AI Literacy

2.2. Objective Assessment of AI Literacy Among Higher Education Students

2.3. Self-Assessment of AI Literacy Among Higher Education Students

2.4. Research on AI Literacy Among Pre-Service Teachers and IT/Computer Science Engineers

2.5. Digital Competence Framework for Citizens (DigComp) and Its Relationship with AI Literacy

3. Methodology

4. Results and Discussion

4.1. Pedagogical Implications

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Male | Female | |||||

|---|---|---|---|---|---|---|

| AI Literacy Construct | Items | α | M | SD | M | SD |

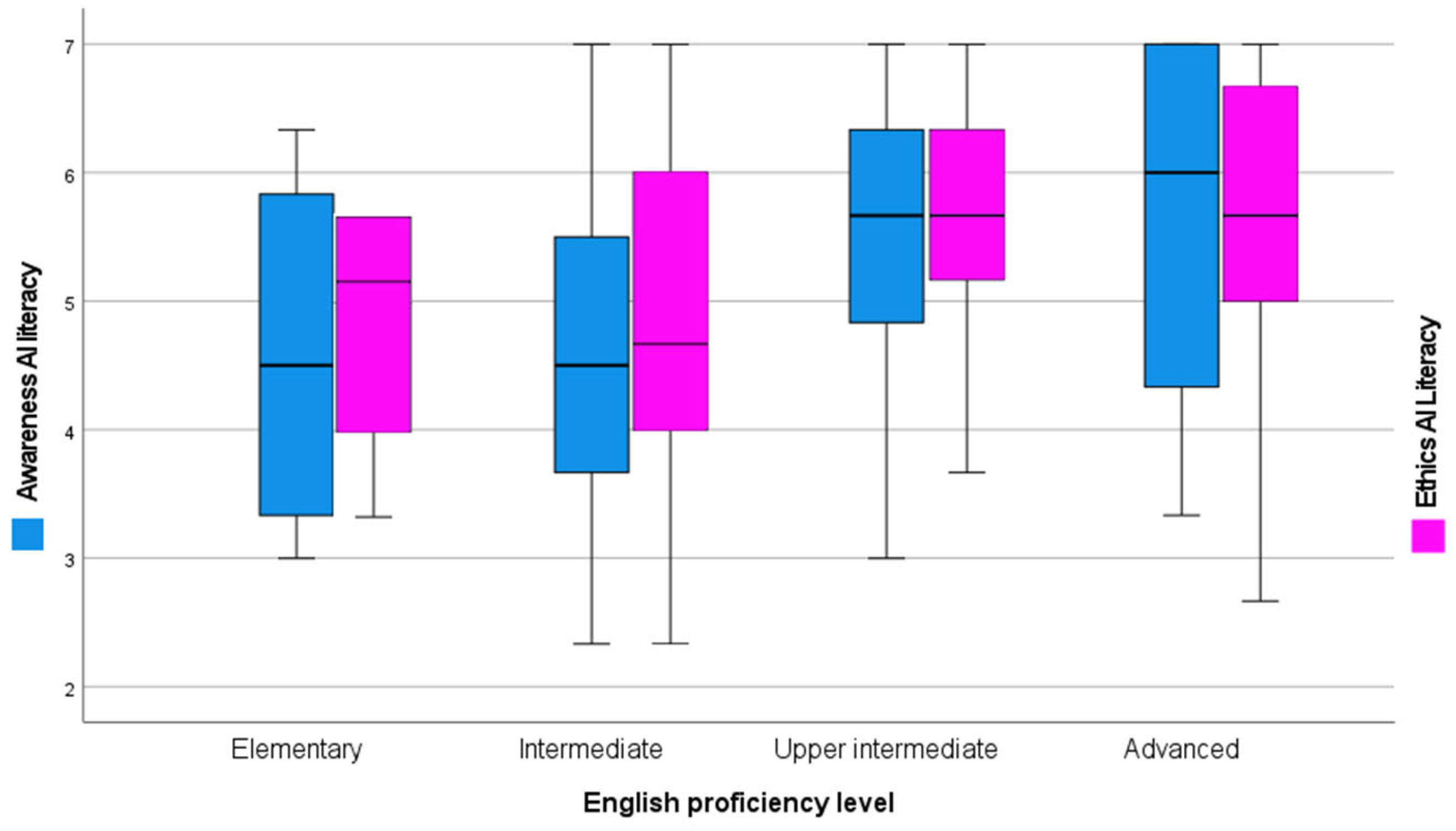

| Awareness | 3 | 0.769 | 5.16 | 1.54 | 5.32 | 1.64 |

| Usage | 3 | 0.788 | 5.29 | 1.48 | 5.53 | 1.50 |

| Evaluation | 3 | 0.631 | 5.04 | 1.52 | 5.23 | 1.54 |

| Ethics | 3 | 0.715 | 5.29 | 1.48 | 5.59 | 1.48 |
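
The α column in the table above reports internal consistency for each three-item construct. As a minimal sketch of how such a coefficient is conventionally obtained, the standard Cronbach's alpha formula is $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)$, where $k$ is the number of items, $s_i^2$ the variance of item $i$, and $s_T^2$ the variance of the respondents' sum scores. The function name `cronbach_alpha` and the response data below are hypothetical illustrations, not the authors' code or data.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of k lists, one per item, each holding one score per respondent.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("every item needs the same number of respondents")
    # Sum score of each respondent across the k items.
    totals = [sum(col[r] for col in items) for r in range(n)]
    # Sum of the sample variances of the individual items.
    item_var_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical 7-point responses from five respondents to a three-item construct.
responses = [
    [5, 6, 4, 7, 3],
    [5, 7, 4, 6, 2],
    [6, 6, 3, 7, 4],
]
alpha = cronbach_alpha(responses)
```

With only three items per construct, alpha tends to be lower than for longer scales, which is worth keeping in mind when reading values such as 0.631 for Evaluation.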

| Male | Female | |||

|---|---|---|---|---|

| Digital Competence and Skill | M | SD | M | SD |

| Digital signal processing | 5.01 | 2.46 | 5.84 | 3.26 |

| Computer architecture | 5.00 | 2.32 | 5.92 | 2.48 |

| Programming | 6.51 | 2.40 | 7.36 | 2.20 |

| Software development | 3.37 | 2.50 | 2.48 | 2.35 |

| 3D modeling | 3.79 | 2.35 | 4.72 | 3.19 |

| Computer animation | 3.65 | 2.51 | 4.24 | 3.39 |

| Teamwork/communication | 2.87 | 2.40 | 4.24 | 3.09 |

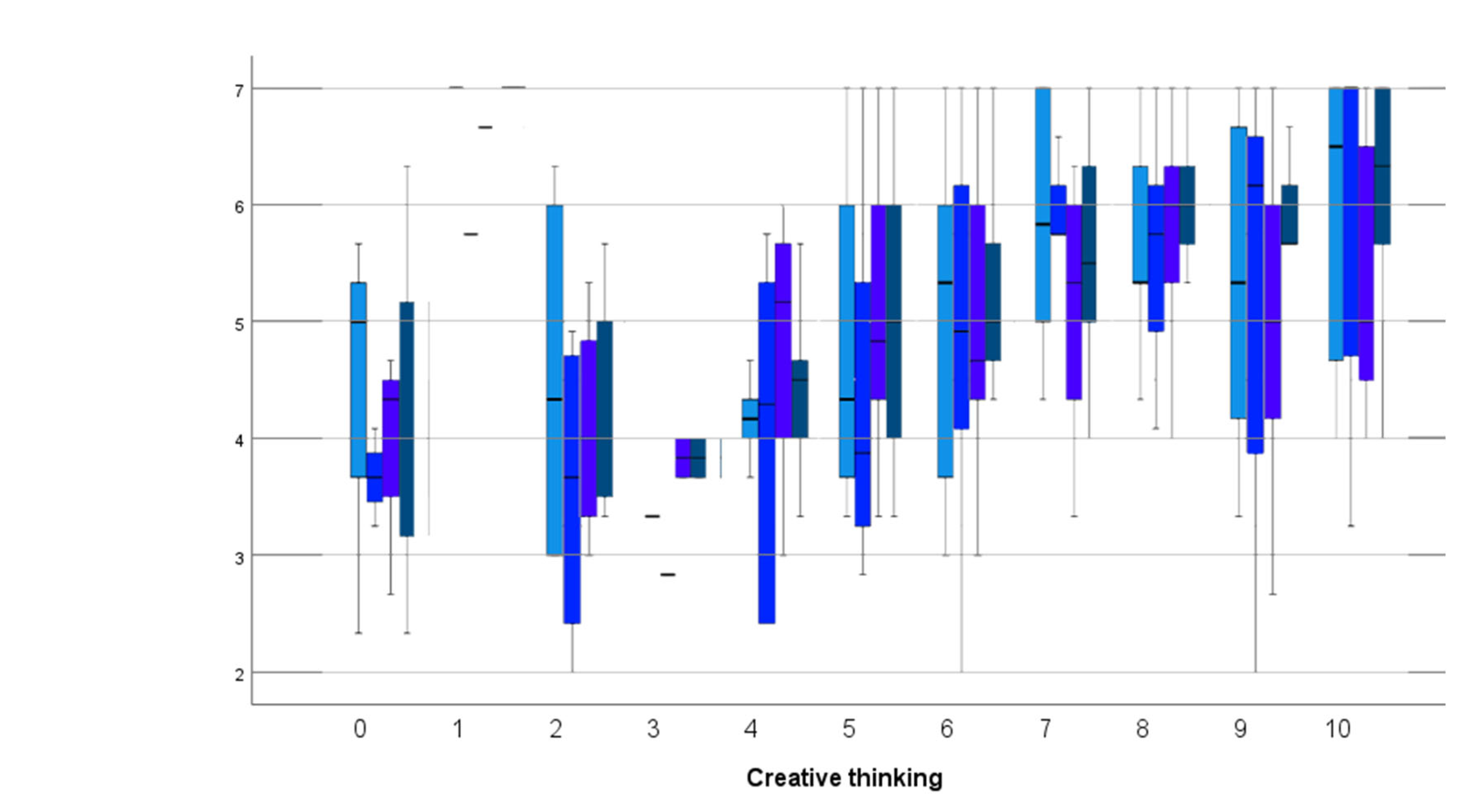

| Creative thinking | 6.09 | 2.49 | 7.12 | 2.31 |

| Digital design | 4.99 | 2.64 | 6.00 | 3.19 |

| Project management | 5.40 | 2.47 | 6.48 | 2.96 |

| Digital Competence and Skill | AI Literacy: Awareness | AI Literacy: Usage | AI Literacy: Evaluation | AI Literacy: Ethics |
|---|---|---|---|---|
| Digital signal processing | 0.35 ** | 0.29 ** | 0.34 ** | 0.29 ** |
| Computer architecture | 0.30 ** | 0.17 | 0.30 ** | 0.30 ** |
| Programming | 0.32 ** | 0.28 ** | 0.24 * | 0.28 ** |
| Software development | 0.10 | −0.13 | 0.04 | 0.10 |
| 3D modeling | 0.08 | −0.09 | 0.09 | 0.16 |
| Computer animation | 0.11 | −0.10 | 0.09 | 0.08 |
| Teamwork/communication | −0.02 | −0.13 | 0.06 | 0.05 |
| Creative thinking | 0.36 ** | 0.44 ** | 0.27 ** | 0.43 ** |
| Digital design | 0.33 ** | 0.20 | 0.21 * | 0.27 ** |
| Project management | 0.13 | 0.05 | 0.13 | 0.14 |
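
The coefficients above pair each self-rated digital competence with an AI literacy construct and flag some values with asterisks. The sketch below shows one way such a table could be produced. It rests on several assumptions that this section does not confirm: that the coefficients are Pearson correlations, that `*` and `**` follow the common p < 0.05 and p < 0.01 convention, and that a Fisher z approximation to the p-value is acceptable. The helper names and the sample size of 100 are hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist, mean


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def approx_p_value(r, n):
    """Approximate two-sided p-value via the Fisher z transform.

    Requires n > 3 and |r| < 1; an exact test would use the t distribution.
    """
    z = atanh(r) * sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def stars(p):
    """Assumed convention: '**' for p < 0.01, '*' for p < 0.05."""
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


# Hypothetical ratings: one competence score and one construct score per respondent.
competence = [1, 2, 3, 4, 5]
construct = [2, 1, 4, 3, 5]
r = pearson_r(competence, construct)

# Flag a coefficient for a hypothetical sample of 100 respondents.
flag = stars(approx_p_value(0.35, 100))
```

Because the flag depends on the sample size as much as on the coefficient, the same value of r can be flagged in one study and not in another.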


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aleksić, V.; Mandić, M.; Ivanović, M. Aligning the Operationalization of Digital Competences with Perceived AI Literacy: The Case of HE Students in IT Engineering and Teacher Education. Educ. Sci. 2025, 15, 1582. https://doi.org/10.3390/educsci15121582
