Mapping Collaborations in STEM Education: A Scoping Review and Typology of In-School–Out-of-School Partnerships

Abstract

1. Introduction

2. Cooperation in STEM Education

3. The Present Study

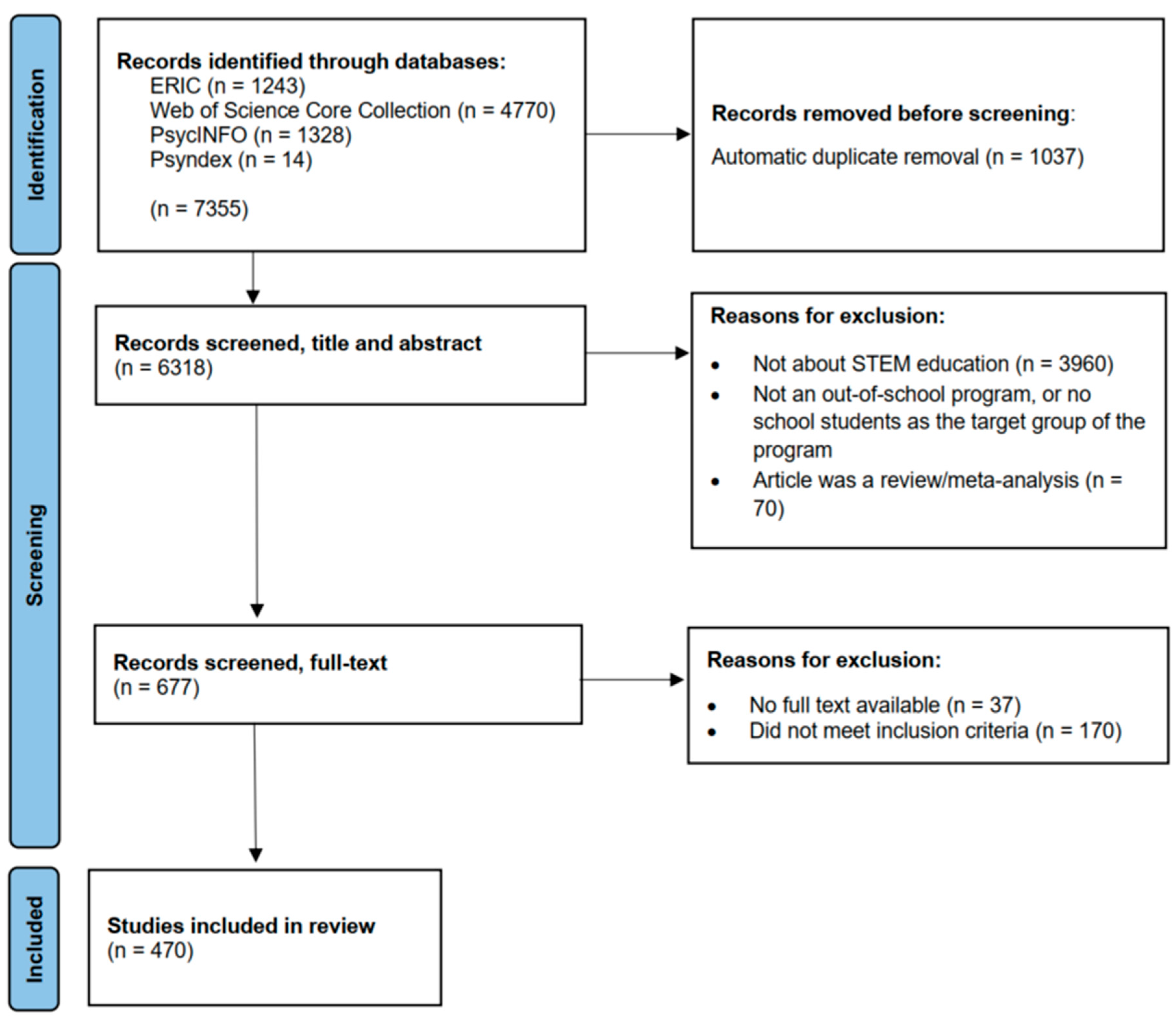

4. Method

4.1. Literature Search and Inclusion Criteria

- (a) The article had to be about STEM education, in whole or in part.

- (b) The article had to be about an out-of-school program and target school students as its primary audience.

- (c) The article needed to be a primary study.
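The three criteria above can be read as a conjunctive screening rule. The following is a minimal sketch under that assumption; the `Article` record and its field names are hypothetical and are not taken from the authors' coding scheme.

```python
from dataclasses import dataclass


@dataclass
class Article:
    # Hypothetical screening flags a coder would set while reading an article.
    about_stem_education: bool     # criterion (a): STEM education, in whole or in part
    out_of_school_program: bool    # criterion (b), first half
    targets_school_students: bool  # criterion (b), second half
    primary_study: bool            # criterion (c)


def meets_inclusion_criteria(article: Article) -> bool:
    """Return True only if criteria (a), (b), and (c) all hold (assumed conjunctive)."""
    return (
        article.about_stem_education
        and article.out_of_school_program
        and article.targets_school_students
        and article.primary_study
    )


# Illustrative records, not real data from the review.
included = Article(True, True, True, True)
university_sample = Article(True, True, False, True)
print(meets_inclusion_criteria(included), meets_inclusion_criteria(university_sample))
```

Treating criterion (b) as two separate flags makes it easier to record why an article was excluded, for example when the program is out of school but the participants are university students.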

4.2. Data Extraction and Coding

4.3. Collaboration Typology Development and Coding

5. Results

5.1. Overview of Included Programs

5.2. Program Characteristics

5.2.1. Type of Program

5.2.2. Subject Areas

5.2.3. Location and Timing

5.2.4. Duration

5.3. Characteristics of the Reviewed Studies

5.3.1. Research Designs and Article Types

5.3.2. Measured Outcomes

5.3.3. Target Groups and Sample Sizes

5.3.4. Geographic Distribution

5.4. Collaboration Patterns

5.4.1. Reporting of Collaboration

5.4.2. Types of Collaboration

5.5. Variation in Collaboration Patterns

5.5.1. Program Setting

5.5.2. Program Duration and Type

5.5.3. Subject Areas

5.5.4. Research and Target Group Characteristics

6. Discussion

6.1. Limitations

6.2. Directions for Future Research

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Search Strings (Carried Out on 28 June 2024)

- Web of Science

- ((TI = ((STEM OR STEAM OR STREAM OR STEMM OR iSTEM OR SMET OR pSTEM OR science OR technology OR engineering OR mathematics OR math OR maths OR biology OR chemistry OR physics OR computer) AND (out-of-school OR informal OR nonformal OR after-school OR (summer NEAR/2 camp) OR (summer NEAR/2 program) OR (optional NEAR/2 experience) OR workshop OR (outreach NEAR/2 activity) OR museum OR zoo OR science NEAR/2 center OR aquarium OR botanic garden OR club OR science fair OR exhibition OR field trip OR extracurricular OR fab lab OR makerspace OR out-of classroom) AND (program OR intervention OR initiative OR offering OR cooperation OR collaboration)))) OR AB = ((STEM OR STEAM OR STREAM OR STEMM OR iSTEM OR SMET OR pSTEM OR science OR technology OR engineering OR mathematics OR math OR maths OR biology OR chemistry OR physics OR computer) AND (out-of-school OR informal OR nonformal OR after-school OR (summer NEAR/2 camp) OR (summer NEAR/2 program) OR (optional NEAR/2 experience) OR workshop OR (outreach NEAR/2 activity) OR museum OR zoo OR science NEAR/2 center OR aquarium OR botanic garden OR club OR science fair OR exhibition OR field trip OR extracurricular OR fab lab OR makerspace OR out-of classroom) AND (program OR intervention OR initiative OR offering OR cooperation OR collaboration))

- ERIC

- ((TI = ((STEM OR STEAM OR STREAM OR STEMM OR iSTEM OR SMET OR pSTEM OR science OR technology OR engineering OR mathematics OR math OR maths OR biology OR chemistry OR physics OR computer) AND (out-of-school OR informal OR nonformal OR after-school OR (summer NEAR/2 camp) OR (summer NEAR/2 program) OR (optional NEAR/2 experience) OR workshop OR (outreach NEAR/2 activity) OR museum OR zoo OR science NEAR/2 center OR aquarium OR botanic garden OR club OR science fair OR exhibition OR field trip OR extracurricular OR fab lab OR makerspace OR out-of classroom) AND (program OR intervention OR initiative OR offering OR cooperation OR collaboration)))) OR AB = ((STEM OR STEAM OR STREAM OR STEMM OR iSTEM OR SMET OR pSTEM OR science OR technology OR engineering OR mathematics OR math OR maths OR biology OR chemistry OR physics OR computer) AND (out-of-school OR informal OR nonformal OR after-school OR (summer NEAR/2 camp) OR (summer NEAR/2 program) OR (optional NEAR/2 experience) OR workshop OR (outreach NEAR/2 activity) OR museum OR zoo OR science NEAR/2 center OR aquarium OR botanic garden OR club OR science fair OR exhibition OR field trip OR extracurricular OR fab lab OR makerspace OR out-of classroom) AND (program OR intervention OR initiative OR offering OR cooperation OR collaboration))

- PsycINFO/PSYNDEX

- TI ((STEM OR STEAM OR STREAM OR STEMM OR iSTEM OR SMET OR pSTEM OR science OR technology OR engineering OR mathematics OR math OR maths OR biology OR chemistry OR physics OR computer) AND (out-of-school OR informal OR nonformal OR after-school OR (summer camp) OR (summer program) OR (optional experience) OR workshop OR (outreach activity) OR museum OR zoo OR science center OR aquarium OR botanic garden OR club OR science fair OR exhibition OR field trip OR extracurricular OR fab lab OR makerspace OR out-of classroom) AND (program OR intervention OR initiative OR offering OR cooperation OR collaboration)) OR AB ((STEM OR STEAM OR STREAM OR STEMM OR iSTEM OR SMET OR pSTEM OR science OR technology OR engineering OR mathematics OR math OR maths OR biology OR chemistry OR physics OR computer) AND (out-of-school OR informal OR nonformal OR after-school OR (summer camp) OR (summer program) OR (optional experience) OR workshop OR (outreach activity) OR museum OR zoo OR science center OR aquarium OR botanic garden OR club OR science fair OR exhibition OR field trip OR extracurricular OR fab lab OR makerspace OR out-of classroom) AND (program OR intervention OR initiative OR offering OR cooperation OR collaboration))
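All three search strings share one structure: a block of subject terms, a block of setting terms, and a block of program terms, each joined internally with OR, combined with AND, and applied to both the title and abstract fields. The sketch below illustrates that composition with abbreviated term lists; the helper names are hypothetical, and the output only approximates the Web of Science/ERIC field syntax shown above.

```python
# Abbreviated term lists for illustration; the full lists are in the strings above.
SUBJECT_TERMS = ["STEM", "STEAM", "science", "mathematics"]
SETTING_TERMS = ["out-of-school", "informal", "(summer NEAR/2 camp)", "museum"]
PROGRAM_TERMS = ["program", "intervention", "cooperation", "collaboration"]


def or_block(terms):
    """Join terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(field_tags=("TI", "AB")):
    """AND the three concept blocks, then apply the result to each search field."""
    blocks = (SUBJECT_TERMS, SETTING_TERMS, PROGRAM_TERMS)
    core = " AND ".join(or_block(terms) for terms in blocks)
    return " OR ".join(f"{tag} = ({core})" for tag in field_tags)


query = build_query()
print(query)
```

Building the string from lists keeps the title and abstract clauses identical and makes unbalanced parentheses less likely when terms are added or removed.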

| 1 | This refers to, for instance, an abstract that mentions students without enough context to determine whether they are school or university students. We included such articles, checked the full texts, and then excluded them if the students turned out to be university students. |

References

- Akkerman, S. F., & Bakker, A. (2011). Boundary crossing and boundary objects. Review of Educational Research, 81(2), 132–169.

- Allen, P. J., Chang, R., Gorrall, B. K., Waggenspack, L., Fukuda, E., Little, T. D., & Noam, G. G. (2019). From quality to outcomes: A national study of afterschool STEM programming. International Journal of STEM Education, 6(1), 37.

- Anderson-Butcher, D., Bates, S., Lawson, H. A., Childs, T. M., & Iachini, A. L. (2022). The community collaboration model for school improvement: A scoping review. Education Sciences, 12(12), 918.

- Archer, L., DeWitt, J., Osborne, J., Dillon, J., Willis, B., & Wong, B. (2013). ‘Not girly, not sexy, not glamorous’: Primary school girls’ and parents’ constructions of science aspirations. Pedagogy, Culture & Society, 21(1), 171–194.

- Archer, L., Freedman, E., Nag Chowdhuri, M., DeWitt, J., Garcia Gonzalez, F., & Liu, Q. (2025). From STEM learning ecosystems to STEM learning markets: Critically conceptualising relationships between formal and informal STEM learning provision. International Journal of STEM Education, 12(1), 22.

- Banerjee, P., Graham, L., & Given, G. (2024). A systematic literature review identifying inconsistencies in the inclusion of subjects in research reports on STEM workforce skills in the UK. Cogent Education, 11(1), 2288736.

- Becher, T., & Trowler, P. (2002). Academic tribes and territories: Intellectual enquiry and the cultures of disciplines (2nd ed.). Open University Press.

- Bell, P., Lewenstein, B., Shouse, A. W., & Feder, M. A. (Eds.). (2009). Learning science in informal environments: People, places, and pursuits. National Academies Press.

- Beller, E. M., Glasziou, P. P., Altman, D. G., Hopewell, S., Bastian, H., Chalmers, I., Gøtzsche, P. C., Lasserson, T., & Tovey, D. (2013). PRISMA for Abstracts: Reporting systematic reviews in journal and conference abstracts. PLoS Medicine, 10(4), e1001419.

- Braund, M., & Reiss, M. (2006). Towards a more authentic science curriculum: The contribution of out-of-school learning. International Journal of Science Education, 28(12), 1373–1388.

- Breiner, J. M., Harkness, S. S., Johnson, C. C., & Koehler, C. M. (2012). What is STEM? A discussion about conceptions of STEM in education and partnerships. School Science and Mathematics, 112(1), 3–11.

- Bryan, J., & Griffin, D. (2010). A multidimensional study of school-family-community partnership involvement: School, school counselor, and training factors. Professional School Counseling, 14(1), 75–86.

- Burke, L. E. C.-A., & Navas Iannini, A. M. (2021). Science engagement as insight into the science identity work nurtured in community-based science clubs. Journal of Research in Science Teaching, 58(9), 1425–1454.

- Bybee, R. W. (2010). Advancing STEM education: A 2020 vision. Technology and Engineering Teacher, 70(1), 30–35.

- Campbell, M., McKenzie, J. E., Sowden, A., Katikireddi, S. V., Brennan, S. E., Ellis, S., Hartmann-Boyce, J., Ryan, R., Shepperd, S., Thomas, J., Welch, V., & Thomson, H. (2020). Synthesis without meta-analysis (SWiM) in systematic reviews: Reporting guideline. BMJ, 368, l6890.

- Chen, C., Hardjo, S., Sonnert, G., Hui, J., & Sadler, P. M. (2023). The role of media in influencing students’ STEM career interest. International Journal of STEM Education, 10(1), 56.

- Chiu, T. K. F., Li, Y., Ding, M., Hallström, J., & Koretsky, M. D. (2025). A decade of research contributions and emerging trends in the International Journal of STEM Education. International Journal of STEM Education, 12(1), 12.

- Clark, M. A., & Breman, J. C. (2009). School counselor inclusion: A collaborative model to provide academic and social-emotional support in the classroom setting. Journal of Counseling & Development, 87(1), 6–11.

- Crane, V., Chen, M., Bitgood, S., Serrel, B., Thompson, D., Nicholson, H., Weiss, F., & Campbell, P. (1994). Informal science learning: What the research says about television, science museums, and community-based projects. Science Press.

- Davis, K., Fitzgerald, A., Power, M., Leach, T., Martin, N., Piper, S., Singh, R., & Dunlop, S. (2023). Understanding the conditions informing successful STEM clubs: What does the evidence base tell us? Studies in Science Education, 59(1), 1–23.

- De Jong, L., Meirink, J., & Admiraal, W. (2022). School-based collaboration as a learning context for teachers: A systematic review. International Journal of Educational Research, 112, 101927.

- Denton, M., & Borrego, M. (2021). Funds of knowledge in STEM education: A scoping review. Studies in Engineering Education, 1(2), 71–92.

- Dierking, L. D., & Falk, J. H. (2003). Optimizing out-of-school time: The role of free-choice learning. New Directions for Youth Development, 2003(97), 75–88.

- Dou, R., Villa, N., Cian, H., Sunbury, S., Sadler, P. M., & Sonnert, G. (2025). Unlocking STEM identities through family conversations about topics in and beyond STEM: The contributions of family communication patterns. Behavioral Sciences, 15(2), 106.

- Durlak, J. A., & DuPre, E. P. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41(3–4), 327–350.

- Eccles, J. S., Barber, B. L., Stone, M., & Hunt, J. (2003). Extracurricular activities and adolescent development. Journal of Social Issues, 59(4), 865–889.

- English, L. D. (2017). Advancing elementary and middle school STEM education. International Journal of Science and Mathematics Education, 15(1), 5–24.

- Epstein, J. L., Sanders, M. G., Sheldon, S. B., Simon, B. S., Clark Salinas, K., Rodriguez Jansorn, N., van Voorhis, F. L., Martin, C. S., Thomas, B. G., Greenfeld, M. D., Hutchins, D. J., & Williams, K. J. (2018). School, family, and community partnerships: Your handbook for action (4th ed.). SAGE Publications Company.

- Falk, J., & Dierking, L. (2010). The 95 percent solution: School is not where most Americans learn most of their science. American Scientist, 98(6), 486–493.

- Fallik, O., Rosenfeld, S., & Eylon, B.-S. (2013). School and out-of-school science: A model for bridging the gap. Studies in Science Education, 49(1), 69–91.

- Falloon, G., Stevenson, M., Hatisaru, V., Hurrell, D., & Boden, M. (2024). Principal leadership and proximal processes in creating STEM ecosystems: An Australian case study. Leadership and Policy in Schools, 23(2), 180–202.

- Fan, X., & Chen, M. (2001). Parental involvement and students’ academic achievement: A meta-analysis. Educational Psychology Review, 13(1), 1–22.

- Feldman, A. F., & Matjasko, J. L. (2005). The role of school-based extracurricular activities in adolescent development: A comprehensive review and future directions. Review of Educational Research, 75(2), 159–210.

- Fischer, N., Theis, D., & Züchner, I. (2014). Narrowing the gap? The role of all-day schools in reducing educational inequality in Germany. International Journal for Research on Extended Education, 2(1), 79–96.

- Foster, K. M., Bergin, K. B., McKenna, A. F., Millard, D. L., Perez, L. C., Prival, J. T., Rainey, D. Y., Sevian, H. M., VanderPutten, E. A., & Hamos, J. E. (2010). Science education: Partnerships for STEM education. Science, 329(5994), 906–907.

- Godec, S., Archer, L., & Dawson, E. (2022). Interested but not being served: Mapping young people’s participation in informal STEM education through an equity lens. Research Papers in Education, 37(2), 221–248.

- Gupta, R., Voiklis, J., Rank, S. J., La Dwyer, J. D. T., Fraser, J., Flinner, K., & Nock, K. (2020). Public perceptions of the STEM learning ecology—Perspectives from a national sample in the US. International Journal of Science Education, Part B, 10(2), 112–126.

- He, L., Murphy, L., & Luo, J. (2016). Using social media to promote STEM education: Matching college students with role models. In B. Berendt, B. Bringmann, É. Fromont, G. Garriga, P. Miettinen, N. Tatti, & V. Tresp (Eds.), Machine learning and knowledge discovery in databases (pp. 79–95). Springer International Publishing.

- Hofstein, A., & Rosenfeld, S. (1996). Bridging the gap between formal and informal science learning. Studies in Science Education, 28(1), 87–112.

- Honey, M., Pearson, G., & Schweingruber, H. (Eds.). (2014). STEM integration in K-12 education. National Academies Press.

- Itzek-Greulich, H., & Vollmer, C. (2017). Emotional and motivational outcomes of lab work in the secondary intermediate track: The contribution of a science center outreach lab. Journal of Research in Science Teaching, 54(1), 3–28.

- Jaggy, A.-K., Wagner, W., Fütterer, T., Göllner, R., & Trautwein, U. (2025). Teaching quality in STEM education: Differences between in- and out-of-school contexts from the perspective of gifted students. International Journal of STEM Education, 12(1), 53.

- Jeynes, W. (2012). A meta-analysis of the efficacy of different types of parental involvement programs for urban students. Urban Education, 47(4), 706–742.

- Jones, A. L., & Stapleton, M. K. (2017). 1.2 million kids and counting: Mobile science laboratories drive student interest in STEM. PLoS Biology, 15(5), e2001692.

- Krishnamurthi, A., Ballard, M., & Noam, G. G. (2014). Examining the impact of afterschool STEM programs. Available online: http://files.eric.ed.gov/fulltext/ED546628.pdf (accessed on 14 January 2025).

- Li, Y., Wang, K., Xiao, Y., Froyd, J. E., & Nite, S. B. (2020). Research and trends in STEM education: A systematic analysis of publicly funded projects. International Journal of STEM Education, 7(1), 17.

- Li, Y., Wang, K., Xiao, Y., & Wilson, S. M. (2022). Trends in highly cited empirical research in STEM education: A literature review. Journal for STEM Education Research, 5(3), 303–321.

- Mahoney, J. L., Larson, R. W., & Eccles, J. S. (Eds.). (2005). Organized activities as contexts of development: Extracurricular activities, after-school and community programs. Lawrence Erlbaum.

- Maltese, A. V., & Tai, R. H. (2011). Pipeline persistence: Examining the association of educational experiences with earned degrees in STEM among U.S. students. Science Education, 95(5), 877–907.

- Margherio, C., Doten-Snitker, K., Williams, J., Litzler, E., Andrijcic, E., & Mohan, S. (2020). Cultivating strategic partnerships to transform STEM education. In K. White, A. Beach, N. Finkelstein, C. Henderson, S. Simpkins, L. Slakey, M. Stains, G. Weaver, & L. Whitehead (Eds.), Transforming institutions: Accelerating systemic change in higher education (pp. 177–188). Pressbooks.

- Maschke, S., & Stecher, L. (2018). Non-formale und informelle Bildung. In A. Lange, H. Reiter, S. Schutter, & C. Steiner (Eds.), Handbuch Kindheits- und Jugendsoziologie (pp. 149–163). Springer VS Wiesbaden.

- McHugh, M. L. (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282.

- McMullen, J. M., George, M., Ingman, B. C., Pulling Kuhn, A., Graham, D. J., & Carson, R. L. (2020). A systematic review of community engagement outcomes research in school-based health interventions. The Journal of School Health, 90(12), 985–994.

- Moehlman, A. H. (2012). Comparative educational systems. Literary Licensing, LLC.

- Moore, E. M., Hock, A., Bevan, B., & Taylor, K. H. (2022). Measuring STEM learning in after-school summer programs: Review of the literature. Journal of Youth Development, 17(2), 75–105.

- Mu, G. M., Gordon, D., Xu, J., Cayas, A., & Madesi, S. (2023). Benefits and limitations of partnerships amongst families, schools and universities: A systematic literature review. International Journal of Educational Research, 120, 102205.

- Munn, Z., Peters, M. D. J., Stern, C., Tufanaru, C., McArthur, A., & Aromataris, E. (2018). Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC Medical Research Methodology, 18(1), 143.

- Murawski, W. W., & Lochner, W. W. (2011). Observing co-teaching: What to ask for, look for, and listen for. Intervention in School and Clinic, 46(3), 174–183.

- National Academies of Sciences, Engineering, and Medicine. (2016). Promising practices for strengthening the regional STEM workforce development ecosystem. The National Academies Press.

- Noam, G. G., Biancarosa, G., & Dechausay, N. (2002). Afterschool education: Approaches to an emerging field. Harvard Education Press.

- Noam, G. G., & Tillinger, J. R. (2004). After-school as intermediary space: Theory and typology of partnerships. New Directions for Youth Development, 2004(101), 75–113.

- Penuel, W. R., Allen, A.-R., Coburn, C. E., & Farrell, C. (2015). Conceptualizing research–practice partnerships as joint work at boundaries. Journal of Education for Students Placed at Risk, 20(1–2), 182–197.

- Sahin, A., Ayar, M. C., & Adiguzel, T. (2014). STEM related after-school program activities and associated outcomes on student learning. Educational Sciences: Theory & Practice, 14(1), 309–322.

- Salame, A. H., Tengku Shahdan, T. S., Kayode, B. K., & Pek, L. S. (2025). Enhancing STEM education in rural schools through play activities: A scoping review. International Journal on Studies in Education, 7(1), 103–124.

- Scott-Little, C., Hamann, M. S., & Jurs, S. G. (2002). Evaluations of after-school programs: A meta-evaluation of methodologies and narrative synthesis of findings. The American Journal of Evaluation, 23(4), 387–419.

- So, W. W. M., Zhan, Y., Chow, S. C. F., & Leung, C. F. (2018). Analysis of STEM activities in primary students’ science projects in an informal learning environment. International Journal of Science and Mathematics Education, 16(6), 1003–1023.

- Staus, N., Riedinger, K., & Storksdieck, M. (2023). Informal STEM learning. In R. J. Tierney, F. Rizvi, & K. Ercikan (Eds.), International Encyclopedia of Education (pp. 244–250). Elsevier.

- Stoeger, H., Heilemann, M., Debatin, T., Hopp, M. D. S., Schirner, S., & Ziegler, A. (2021). Nine years of online mentoring for secondary school girls in STEM: An empirical comparison of three mentoring formats. Annals of the New York Academy of Sciences, 1483, 153–173.

- Sutton, J., & Austin, Z. (2015). Qualitative research: Data collection, analysis, and management. The Canadian Journal of Hospital Pharmacy, 68(3), 226–231.

- Torres, C. A., Arnove, R. F., & Misiaszek, L. (Eds.). (2022). Comparative education: The dialectic of the global and the local (5th ed.). Rowman & Littlefield.

- Tricco, A. C., Lillie, E., Zarin, W., O’Brien, K. K., Colquhoun, H., Levac, D., Moher, D., Peters, M. D. J., Horsley, T., Weeks, L., Hempel, S., Akl, E. A., Chang, C., McGowan, J., Stewart, L., Hartling, L., Aldcroft, A., Wilson, M. G., Garritty, C., … Straus, S. E. (2018). PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Annals of Internal Medicine, 169(7), 467–473.

- UNESCO. (2021). Engineering for sustainable development: Delivering on the sustainable development goals. UNESCO. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000375644/PDF/375644eng.pdf.multi (accessed on 14 January 2025).

- Vadeboncoeur, J. A. (2006). Engaging young people: Learning in informal contexts. Review of Research in Education, 30(1), 239–278.

- Vangrieken, K., Dochy, F., Raes, E., & Kyndt, E. (2015). Teacher collaboration: A systematic review. Educational Research Review, 15, 17–40.

- Walker, S., & Bond, C. (2025). Strength in partnership: A systematic review of key characteristics underpinning home-school collaboration. European Journal of Special Needs Education, 1–19.

- Wang, M.-T., & Degol, J. L. (2017). Gender gap in science, technology, engineering, and mathematics (STEM): Current knowledge, implications for practice, policy, and future directions. Educational Psychology Review, 29(1), 119–140.

- Watters, J. J., & Diezmann, C. M. (2016). Engaging elementary students in learning science: An analysis of classroom dialogue. Instructional Science, 44(1), 25–44.

- Xia, X., Bentley, L. R., Fan, X., & Tai, R. H. (2025). STEM outside of school: A meta-analysis of the effects of informal science education on students’ interests and attitudes for STEM. International Journal of Science and Mathematics Education, 23(4), 1153–1181.

| Collaboration Type | Description | Subcategories | Examples |
|---|---|---|---|
| Personnel | An exchange of personnel between in-school and out-of-school actors. | People; training, professional development | Teachers are involved in teaching in an informal learning space. Teachers receive professional development as part of the out-of-school program. |
| Infrastructural | Infrastructural resources are shared between in-school and out-of-school actors. | Location; transportation; equipment/material; scheduling; food | An out-of-school program takes place in the school building. An out-of-school initiative provides laptops for the school students who participate. An out-of-school program is scheduled to fit the school’s calendar. |
| Curricular | The out-of-school curriculum is aligned with some in-school standards. | Curriculum | The summer camp addresses mathematical concepts aligned with the state’s mathematics standards. |
| Recruitment | Recruitment of participants for the out-of-school program is done in collaboration with the in-school program. | School; school administration; teachers; school visit; flyer; community | Schools or the school administration are responsible for recommending participants to the out-of-school program providers. Teachers choose one student in their class to participate in an out-of-school program. Flyers are distributed at school to recruit participants. |
| Didactics | The didactical methods for the out-of-school program are developed in collaboration with the in-school program. | Didactics | The out-of-school intervention program was co-developed by a teacher. |
| Funding | Financial resources are shared between out-of-school and in-school actors. | General funding; compensation; scholarships | General funding for an out-of-school program came from a university grant. Teachers received compensation from the out-of-school program provider. |
| General collaboration | Collaborations and partnerships on a general level between in-school and out-of-school partners were mentioned. | Industry; university; researchers; various organizations | A cybersecurity company partnered with schools in the district to realize the out-of-school program. The university’s physics faculty worked collaboratively with the school to conduct the out-of-school program. |

| Program Type | Frequency |
|---|---|
| After-school/extra-curricular/out-of-school program | 104 |
| Summer/vacation/holiday program | 80 |
| Camp | 44 |
| Workshop | 37 |
| (STEM) club | 27 |
| Makerspace, laboratory | 26 |
| Outreach program | 24 |
| STEM program | 14 |
| Enrichment program | 13 |
| Field trip | 12 |
| Other | 77 |

| Subject Area | Frequency |
|---|---|
| STEM | 117 |
| Science | 108 |
| Mathematics | 64 |
| Computer science | 39 |
| Engineering | 30 |
| STEAM | 23 |
| Biology | 19 |
| Robotics | 16 |
| Technology | 13 |
| Chemistry | 11 |
| Physics | 10 |
| Other | 122 |

| Location | Frequency | Timing | Frequency | School Days | Frequency |
|---|---|---|---|---|---|
| Out of school | 257 | After school | 263 | Yes | 148 |
| Within school | 75 | During school | 47 | No | 147 |
| Hybrid | 35 | Both | 17 | Both | 34 |
| Online | 11 | No information | 142 | No information | 140 |
| Online+ | 30 | | | | |
| No information | 61 | | | | |

| Research Design | Frequency |
|---|---|
| Case study | 155 |
| Pre-post test without control group | 106 |
| Experimental design | 54 |
| Descriptive | 48 |
| Phenomenological design | 23 |
| Ethnographic design | 16 |
| Correlational study | 13 |
| Other | 40 |

| Measured Outcome | Frequency |
|---|---|
| Engagement and interest | 197 |
| Knowledge and skills | 184 |
| Attitudes and perceptions | 179 |
| Identity and self-efficacy | 140 |
| Social–emotional factors | 71 |
| Other | 68 |

| Sample Country | Frequency | Author Country | Frequency |
|---|---|---|---|
| United States | 276 | United States | 235 |
| United Kingdom | 20 | United Kingdom | 18 |
| Turkey | 19 | Turkey | 17 |
| Australia | 9 | Spain | 8 |
| Germany | 9 | Australia | 7 |
| Spain | 8 | Canada | 7 |
| Israel | 7 | Germany | 6 |
| Canada | 6 | Israel | 6 |
| China | 6 | Italy | 5 |
| Other | 63 | Other | 50 |
| No information | 46 | No information | 39 |

| Collaboration Type | Frequency |
|---|---|
| Personnel | 147 |
| Infrastructure | 104 |
| Recruitment | 87 |
| Curriculum | 71 |
| Funding | 27 |
| Didactics | 16 |
| General (not specified) | 31 |

| Gap Area | Observed Pattern | N | Educational Significance |
|---|---|---|---|
| Medical programs | No collaboration reported | 8 | Healthcare education typically involves cross-sector settings (e.g., hospitals and schools). A lack of collaboration suggests a missed opportunity for institutional integration. |
| Long-term (>1 year) programs | Collaboration inconsistently reported | 23 | Long-term collaboration is central to sustainability and system change, but it remains under-theorized and empirically underdeveloped. |
| Didactic collaboration in computer science | Rarely documented | <5 | As computer science rapidly expands in schools, co-designed didactics are expected under modern instructional design frameworks (e.g., CT integration). |
| Engineering with funding collaboration | Rarely described | <5 | Engineering programs frequently require external resources and industry input. The absence of funding partnerships highlights implementation blind spots. |
| Programs in low- and middle-income countries (LMICs) | Underrepresented in the dataset | <10 | STEM equity frameworks (e.g., UNESCO, 2021) emphasize global inclusion; however, most research is concentrated in high-income countries. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ziegler, A.; Shiani, M.; Wengler, D.; Stoeger, H. Mapping Collaborations in STEM Education: A Scoping Review and Typology of In-School–Out-of-School Partnerships. Educ. Sci. 2025, 15, 1513. https://doi.org/10.3390/educsci15111513