Bridging Accessibility Gaps in Dyslexia Intervention: Non-Inferiority of a Technology-Assisted Approach to Dyslexia Instruction

Abstract

1. Introduction

1.1. Dyslexia Intervention

1.2. Traditional Approaches to Teacher Training

1.3. Rise of Technology-Assisted Interventions

1.4. Rationale for the Study

2. Methods

2.1. Substudy 1: Effects of the Instruction

2.1.1. Participants

2.1.2. Procedure

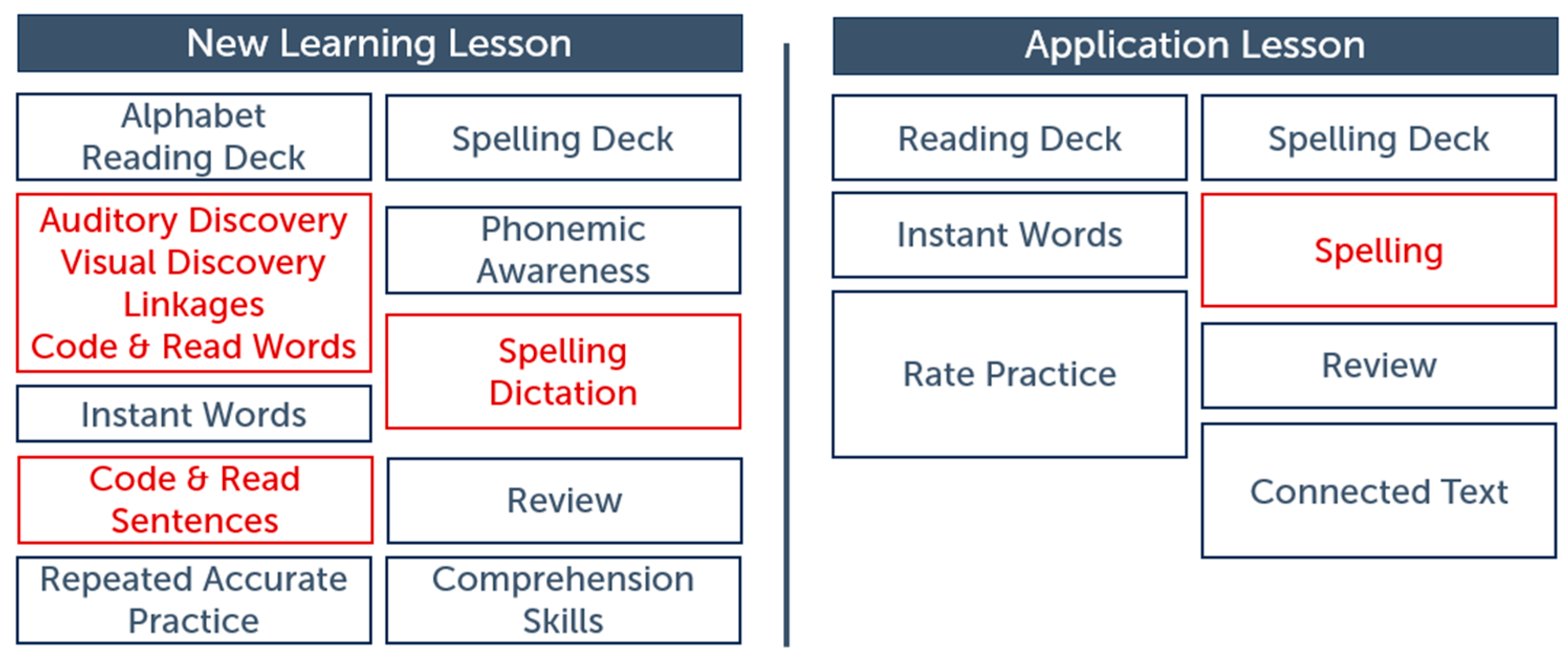

Traditional Dyslexia Instruction

Technology-Assisted Dyslexia Instruction

2.1.3. Measures

2.1.4. Data Analysis

2.2. Substudy 2: Factors Related to Scalability

2.2.1. Participants

2.2.2. Fidelity

2.2.3. Procedure

2.2.4. Measures

2.2.5. Data Analysis

3. Results

3.1. Substudy 1

3.1.1. Aim 1: Effect of Traditional Intervention

3.1.2. Aim 2: Non-Inferiority Comparison in Clinical Setting

3.2. Substudy 2

3.2.1. Aim 3: Effectiveness in a Routine Setting

3.2.2. Aim 4: Comparative Growth Across Skills in a Routine Setting

4. Discussion

4.1. Effect of Instruction

4.2. Non-Inferiority of a Technology-Assisted Approach to Intervention

4.3. Evidence of Scalability in a Routine Setting

4.3.1. Fidelity of Implementation

4.3.2. Heterogeneity of Student Profiles

4.4. Rate of Skill Development

4.5. Practical Implications

4.6. Limitations and Future Directions

4.7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Academic Language Therapy Association. (2024, January). What is a CALT? Available online: https://www.altaread.org/about/what-is-calt/ (accessed on 14 August 2025).

- Al Otaiba, S., McMaster, K., Wanzek, J., & Zaru, M. W. (2023). What we know and need to know about literacy interventions for elementary students with reading difficulties and disabilities, including dyslexia. Reading Research Quarterly, 58(2), 313–332.

- Arel-Bundock, V., Greifer, N., & Heiss, A. (2024). How to interpret statistical models using marginaleffects for R and Python. Journal of Statistical Software, 111(9), 1–32.

- Avrit, K., Allen, C., Carlsen, K., Gross, M., Pierce, D., & Rumsey, M. (2006). Take flight: A comprehensive intervention for students with dyslexia. Texas Scottish Rite Hospital for Children.

- Barnes, M. A., Clemens, N. H., Simmons, D., Hall, C., Fogarty, M., Martinez-Lincoln, A., Vaughn, S., Simmons, L., Fall, A.-M., & Roberts, G. (2024). A randomized controlled trial of tutor- and computer-delivered inferential comprehension interventions for middle school students with reading difficulties. Scientific Studies of Reading, 28(4), 411–440.

- Beck, I. L., McKeown, M. G., Sandora, C., Kucan, L., & Worthy, J. (1996). Questioning the author: A yearlong classroom implementation to engage students with text. The Elementary School Journal, 96(4), 385–414.

- Benner, G. J., Nelson, J. R., Stage, S. A., & Ralston, N. C. (2011). The influence of fidelity of implementation on the reading outcomes of middle school students experiencing reading difficulties. Remedial and Special Education, 32(1), 79–88.

- Boucher, A. N., Bhat, B. H., Clemens, N. H., Vaughn, S., & O’Donnell, K. (2024). Reading interventions for students in grades 3–12 with significant word reading difficulties. Journal of Learning Disabilities, 57(4), 203–223.

- Bureau of Labor Statistics, U.S. Department of Labor. (2025). Occupational outlook handbook. Special Education Teachers. Available online: https://www.bls.gov/ooh/education-training-and-library/kindergarten-and-elementary-school-teachers.htm (accessed on 14 August 2025).

- Capin, P., Cho, E., Miciak, J., Roberts, G., & Vaughn, S. (2021). Examining the reading and cognitive profiles of students with significant reading comprehension difficulties. Learning Disability Quarterly, 44(3), 183–196.

- Castles, A., Rastle, K., & Nation, K. (2018). Ending the reading wars: Reading acquisition from novice to expert. Psychological Science in the Public Interest, 19(1), 5–51.

- Catts, H. W., & Petscher, Y. (2022). A cumulative risk and resilience model of dyslexia. Journal of Learning Disabilities, 55(3), 171–184.

- Chambers, B., Slavin, R. E., Madden, N. A., Abrami, P., Logan, M. K., & Gifford, R. (2011). Small-group, computer-assisted tutoring to improve reading outcomes for struggling first and second graders. The Elementary School Journal, 111(4), 625–640.

- Cheung, A., & Slavin, R. (2013). Effects of educational technology applications on reading outcomes for struggling readers: A best-evidence synthesis. Reading Research Quarterly, 48(3), 277–299.

- Cheung, A., & Slavin, R. (2016). How methodological features affect effect sizes in education. Educational Researcher, 45(5), 283–292.

- Cho, E., Capin, P., Roberts, G., Roberts, G. J., & Vaughn, S. (2019). Examining sources and mechanisms of reading comprehension difficulties: Comparing English learners and non-English learners within the simple view of reading. Journal of Educational Psychology, 111(6), 982–1000.

- Clemens, N. H., Solari, E., Kearns, D. M., Fien, H., Nelson, N. J., Stelega, M., Burns, M., St. Martin, K., & Hoeft, F. (2021). They say you can do phonemic awareness instruction “in the dark”, but should you? A critical evaluation of the trend toward advanced phonemic awareness training. Available online: https://osf.io/preprints/psyarxiv/ajxbv_v1 (accessed on 14 March 2022).

- Connor, C. M., May, H., Sparapani, N., Hwang, J. K., Adams, A., Wood, T. S., Siegal, S., Wolfe, C., & Day, S. (2022). Bringing assessment-to-instruction (A2i) technology to scale: Exploring the process from development to implementation. Journal of Educational Psychology, 114(7), 1495–1532.

- Cox, A. R. (1985). Alphabetic phonics: An organization and expansion of Orton-Gillingham. Annals of Dyslexia, 35(1), 187–198.

- Erbeli, F., Rice, M., Xu, Y., Bishop, M. E., & Goodrich, J. M. (2024). A meta-analysis on the optimal cumulative dosage of early phonemic awareness instruction. Scientific Studies of Reading, 28(4), 345–370.

- Fletcher, J. M., Lyon, G. R., Fuchs, L. S., & Barnes, M. A. (2018). Learning disabilities: From identification to intervention (2nd ed.). Guilford Publications.

- Fortson, K., Verbitsky-Savitz, N., Kopa, E., & Gleason, P. (2012). Using an experimental evaluation of charter schools to test whether nonexperimental comparison group methods can replicate experimental impact estimates (NCEE Technical Methods Report 2012-4019). National Center for Education Evaluation and Regional Assistance, Institute of Education Sciences, U.S. Department of Education. Available online: https://ies.ed.gov/ncee/2025/01/20124019-pdf (accessed on 14 August 2025).

- Francis, D. A., Caruana, N., Hudson, J. L., & McArthur, G. M. (2019). The association between poor reading and internalising problems: A systematic review and meta-analysis. Clinical Psychology Review, 67, 45–60.

- Fuchs, D., & Fuchs, L. S. (2006). Introduction to response to intervention: What, why, and how valid is it? Reading Research Quarterly, 41(1), 93–99.

- Fuchs, L. S., Fuchs, D., & Malone, A. S. (2017). The taxonomy of intervention intensity. Teaching Exceptional Children, 50(1), 35–43.

- Georgiou, G. K., Martinez, D., Vieira, A. P. A., Antoniuk, A., Romero, S., & Guo, K. (2022). A meta-analytic review of comprehension deficits in students with dyslexia. Annals of Dyslexia, 72(2), 204–248.

- Georgiou, G. K., Parrila, R., & McArthur, G. (2024). Dyslexia and mental health problems: Introduction to the special issue. Annals of Dyslexia, 74(1), 1–3.

- Gersten, R., Haymond, K., Newman-Gonchar, R., Dimino, J., & Jayanthi, M. (2020). Meta-analysis of the impact of reading interventions for students in the primary grades. Journal of Research on Educational Effectiveness, 13(2), 401–427.

- Greenberg, J., McKee, A., & Walsh, K. (2014). Teacher prep review: A review of the nation’s teacher preparation programs. SSRN.

- Hall, C., Dahl-Leonard, K., Cho, E., Solari, E. J., Capin, P., Conner, C. L., Henry, A. R., Cook, L., Hayes, L., Vargas, I., Richmond, C. L., & Kehoe, K. F. (2023). Forty years of reading intervention research for elementary students with or at risk for dyslexia: A systematic review and meta-analysis. Reading Research Quarterly, 58(2), 285–312.

- Hansen, B. B. (2004). Full matching in an observational study of coaching for the SAT. Journal of the American Statistical Association, 99(467), 609–618.

- Hansen, B. B., & Klopfer, S. (2006). Optimal full matching and related designs via network flows. Journal of Computational and Graphical Statistics, 15(3), 609–627.

- Hudson, A., Koh, P. W., Moore, K. A., & Binks-Cantrell, E. (2020). Fluency interventions for elementary students with reading difficulties: A synthesis of research from 2000–2019. Education Sciences, 10(3), 52.

- Hulleman, C. S., & Cordray, D. S. (2009). Moving from the lab to the field: The role of fidelity and achieved relative intervention strength. Journal of Research on Educational Effectiveness, 2(1), 88–110.

- Irwin, V., Wang, K., Jung, J., Kessler, E., Tezil, T., Alhassani, S., Filbey, A., Dilig, R., & Bullock Mann, F. (2024). Report on the condition of education 2024 (NCES 2024-144). U.S. Department of Education, National Center for Education Statistics. Available online: https://nces.ed.gov/pubsearch/pubsinfo.asp?pubid=2024144 (accessed on 14 August 2025).

- Klingner, J. K., & Vaughn, S. (1998). Promoting reading comprehension, content learning, and English acquisition through Collaborative Strategic Reading (CSR). The Reading Teacher, 52(7), 738–747.

- Lane, H. B., Pullen, P. C., Eisele, M. R., & Jordan, L. (2002). Preventing reading failure: Phonological awareness assessment and instruction. Preventing School Failure: Alternative Education for Children and Youth, 46(3), 101–110.

- Leite, W. (2017). Practical propensity score methods using R. Sage Publications.

- Lenth, R. (2025). emmeans: Estimated marginal means, aka least-squares means (R package version 1.11.0). R Project.

- Madden, N. A., & Slavin, R. E. (2017). Evaluations of technology-assisted small-group tutoring for struggling readers. Reading & Writing Quarterly, 33(4), 327–334.

- McMahan, K. M., Oslund, E. L., & Odegard, T. N. (2019). Characterizing the knowledge of educators receiving training in systematic literacy instruction. Annals of Dyslexia, 69(1), 21–33.

- McMaster, K. L., Fuchs, D., & Fuchs, L. S. (2006). Research on peer-assisted learning strategies: The promise and limitations of peer-mediated instruction. Reading & Writing Quarterly, 22(1), 5–25.

- McMaster, K. L., Kendeou, P., Kim, J., & Butterfuss, R. (2023). Efficacy of a technology-based early language comprehension intervention: A randomized control trial. Journal of Learning Disabilities, 57(3), 139–152.

- Melby-Lervåg, M., Lyster, S. A., & Hulme, C. (2012). Phonological skills and their role in learning to read: A meta-analytic review. Psychological Bulletin, 138(2), 322–352.

- Miciak, J., Ahmed, Y., Capin, P., & Francis, D. J. (2022). The reading profiles of late elementary English learners with and without risk for dyslexia. Annals of Dyslexia, 72(2), 276–300.

- Middleton, A. E., Davila, M., & Frierson, S. L. (2024). English learners with dyslexia benefit from English dyslexia intervention: An observational study of routine intervention practices. Frontiers in Education, 9, 1495043.

- Middleton, A. E., Farris, E. A., Ring, J. J., & Odegard, T. N. (2022). Predicting and evaluating treatment response: Evidence toward protracted response patterns for severely impacted students with dyslexia. Journal of Learning Disabilities, 55(4), 272–291.

- Moll, K., Gangl, M., Banfi, C., Schulte-Körne, G., & Landerl, K. (2020). Stability of deficits in reading fluency and/or spelling. Scientific Studies of Reading, 24(3), 241–251.

- National Center for Education Statistics. (2023). Teacher openings in elementary and secondary schools. Condition of education. U.S. Department of Education, Institute of Education Sciences. Available online: https://nces.ed.gov/programs/coe/indicator/tls (accessed on 14 August 2025).

- National Center on Improving Literacy. (2025, May). Dyslexia by the numbers. Available online: https://www.stateofdyslexia.org (accessed on 14 August 2025).

- National Institute of Child Health and Human Development (NICHD). (2000). Report of the national reading panel. Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction. U.S. Government Printing Office.

- Nguyen, T.-L., Collins, G. S., Spence, J., Daurès, J.-P., Devereaux, P. J., Landais, P., & Le Manach, Y. (2017). Double-adjustment in propensity score matching analysis: Choosing a threshold for considering residual imbalance. BMC Medical Research Methodology, 17, 78.

- Nye, B. D., Graesser, A. C., & Hu, X. (2014). AutoTutor and family: A review of 17 years of natural language tutoring. International Journal of Artificial Intelligence in Education, 24(4), 427–469.

- O’Connor, M., Geva, E., & Koh, P. W. (2019). Examining reading comprehension profiles of grade 5 monolinguals and English language learners through the lexical quality hypothesis lens. Journal of Learning Disabilities, 52(3), 232–246.

- Odegard, T. N., Farris, E. A., Middleton, A. E., Rimrodt-Frierson, S. L., & Washington, J. A. (in press). Recent trends in dyslexia legislation. In C. M. Okolo, N. Patton Terry, & L. E. Cutting (Eds.), Handbook of learning disabilities (3rd ed.). Guilford.

- O’Donnell, C. L. (2008). Defining, conceptualizing, and measuring fidelity of implementation and its relationship to outcomes in K–12 curriculum intervention research. Review of Educational Research, 78(1), 33–84.

- Ogle, D. M. (1986). K-W-L: A teaching model that develops active reading of expository text. The Reading Teacher, 39(6), 564–570.

- Palincsar, A. S., & Brown, A. L. (1984). Reciprocal teaching of comprehension-fostering and comprehension-monitoring activities. Cognition and Instruction, 1(2), 117–175.

- Piasta, S. B., Farley, K. S., Mauck, S. A., Soto Ramirez, P., Schachter, R. E., O’Connell, A. A., Justice, L. M., Spear, C. F., & Weber-Mayrer, M. (2020). At-scale, state-sponsored language and literacy professional development: Impacts on early childhood classroom practices and children’s outcomes. Journal of Educational Psychology, 112(2), 329–343.

- Pinheiro, J. C., & Bates, D. M. (2000). Mixed-effects models in S and S-PLUS. Springer.

- Pinheiro, J. C., Bates, D. M., & R Core Team. (2023). nlme: Linear and nonlinear mixed effects models (R package version 3.1-163). Available online: https://CRAN.R-project.org/package=nlme (accessed on 1 June 2024).

- Porter, S. B., Odegard, T. N., McMahan, M., & Farris, E. A. (2022). Characterizing the knowledge of educators across the tiers of instructional support. Annals of Dyslexia, 72(1), 79–96.

- Regtvoort, A., Zijlstra, H., & van der Leij, A. (2013). The effectiveness of a 2-year supplementary tutor-assisted computerized intervention on the reading development of beginning readers at risk for reading difficulties: A randomized controlled trial. Dyslexia, 19(4), 256–280.

- Reis, A., Araujo, S., Morais, I. S., & Faisca, L. (2020). Reading and reading-related skills in adults with dyslexia from different orthographic systems: A review and meta-analysis. Annals of Dyslexia, 70(3), 339–368.

- Ring, J. J., Avrit, K. J., & Black, J. L. (2017). Take Flight: The evolution of an Orton Gillingham-based curriculum. Annals of Dyslexia, 67(3), 383–400.

- Ring, J. J., & Black, J. L. (2018). The multiple deficit model of dyslexia: What does it mean for identification and intervention? Annals of Dyslexia, 68(2), 104–125.

- Rosenbaum, P. R. (1991). A characterization of optimal designs for observational studies. Journal of the Royal Statistical Society: Series B (Methodological), 53(3), 597–610.

- Solari, E. J., Petscher, Y., & Folsom, J. S. (2014). Differentiating literacy growth of ELL students with LD from other high-risk subgroups and general education peers: Evidence from grades 3–10. Journal of Learning Disabilities, 47(4), 329–348.

- Solari, E. J., Terry, N. P., Gaab, N., Hogan, T. P., Nelson, N. J., Pentimonti, J. M., Petscher, Y., & Sayko, S. (2020). Translational science: A road map for the science of reading. Reading Research Quarterly, 55, S347–S360.

- Stein, B. N., Solomon, B. G., Kitterman, C., Enos, D., Banks, E., & Villanueva, S. (2021). Comparing technology-based reading intervention programs in rural settings. The Journal of Special Education, 56(1), 14–24.

- Texas Education Agency. (2024). Dyslexia handbook: Procedures concerning dyslexia and related disorders. Available online: https://tea.texas.gov/academics/special-student-populations/dyslexia-and-related-disorders (accessed on 14 August 2025).

- Torgesen, J. K., Wagner, R. K., Rashotte, C. A., Herron, J., & Lindamood, P. (2010). Computer-assisted instruction to prevent early reading difficulties in students at risk for dyslexia: Outcomes from two instructional approaches. Annals of Dyslexia, 60(1), 40–56.

- U.S. Department of Education. (2004). Individuals with disabilities education improvement act, 20 U.S.C. § 1400. Available online: https://sites.ed.gov/idea/statute-chapter-33/subchapter-i/1400 (accessed on 14 August 2025).

- Varghese, C., Bratsch-Hines, M., Aiken, H., & Vernon-Feagans, L. (2021). Elementary teachers’ intervention fidelity in relation to reading and vocabulary outcomes for students at risk for reading-related disabilities. Journal of Learning Disabilities, 54(6), 484–496.

- Wagner, R. K., Torgesen, J. K., & Rashotte, C. A. (1999). Comprehensive test of phonological processing. Pro-Ed.

- Wagner, R. K., Torgesen, J. K., Rashotte, C. A., & Pearson, N. A. (2013). Comprehensive test of phonological processing (2nd ed.). Pro-Ed.

- Wagner, R. K., Zirps, F. A., Edwards, A. A., Wood, S. G., Joyner, R. E., Becker, B. J., Liu, G., & Beal, B. (2020). The prevalence of dyslexia: A new approach to its estimation. Journal of Learning Disabilities, 53(5), 354–365.

- Walker, J. (2019). Non-inferiority statistics and equivalence studies. BJA Education, 19(8), 267–271.

- Wanzek, J., Stevens, E. A., Williams, K. J., Scammacca, N., Vaughn, S., & Sargent, K. (2018). Current evidence on the effects of intensive early reading interventions. Journal of Learning Disabilities, 51(6), 612–624.

- Wanzek, J., & Vaughn, S. (2007). Research-based implications from extensive early reading interventions. School Psychology Review, 36(4), 541–561.

- Wanzek, J., & Vaughn, S. (2008). Response to varying amounts of time in reading intervention for students with low response to intervention. Journal of Learning Disabilities, 41(2), 126–142.

- Wanzek, J., Vaughn, S., Wexler, J., Swanson, E. A., Edmonds, M., & Kim, A.-H. (2006). A synthesis of spelling and reading interventions and their effects on the spelling outcomes of students with LD. Journal of Learning Disabilities, 39(6), 528–543.

- Wechsler, D. (2003). Wechsler intelligence scale for children (4th ed.). Psychological Corporation.

- Wechsler, D. (2009). Wechsler individual achievement test (3rd ed.). Psychological Corporation.

- Wechsler, D. (2011). Wechsler abbreviated scale of intelligence (2nd ed.). Psychological Corporation.

- What Works Clearinghouse. (2022). Procedures and standards handbook (Version 5). Available online: https://ies.ed.gov/ncee/wwc/Docs/referenceresources/Final_WWC-HandbookVer5.0-0-508.pdf (accessed on 21 September 2023).

- Wiederholt, J. L., & Bryant, B. R. (2012). Gray oral reading test—Fifth edition: Examiner’s manual. Pro-Ed.

- Wilson, B. A., & Felton, R. H. (2004). Word identification and spelling test (WIST). Pro-Ed.

- Woodcock, R. W. (2011). Woodcock reading mastery test (3rd ed.). WRMT-III. Pearson.

- Youman, M., & Mather, N. (2013). Dyslexia laws in the USA. Annals of Dyslexia, 63(2), 133–153.

Lesson Emphasis

| Activity Component | Traditional | Technology-Assisted |
|---|---|---|
| Preparation | Therapist provides review and preparation of target content for decoding sentences on the whiteboard (e.g., diacritical marking formulas for the target concept and other relevant GPCs, affixes, and syllable division patterns). | Avatar narrates while a simulated whiteboard presented on the monitor builds target decoding concepts, diacritic formulas, and other content to be applied in the practice sentences. |
| Monitoring and Feedback | Therapist monitors student engagement and understanding prior to assigning practice items, using Socratic questioning and clarification as needed. | Educator monitors student engagement and understanding prior to assigning practice items, repeating the presentation of concepts and providing additional clarification and guidance as needed. |
| Practice | Therapist assigns practice items to each child based on diagnostic teaching principles. While students complete the activity in their student workbook, the Therapist monitors for accuracy and provides immediate corrective feedback. | Educator assigns practice items to each child. While students complete the activity in their student workbook, the Educator monitors for accuracy by checking student responses against an answer key and provides corrective feedback. |
| Review and Activity Closure | Therapist concludes the activity by reviewing the target new learning concept for the lesson. | Educator concludes the activity by reviewing the target new learning concept for the lesson. |

| | TRAD (n = 69) | TECH (n = 13) | Test |
|---|---|---|---|
| Age (y;m) | 9;7 (1;6) | 9;4 (1;1) | t(80) = 0.58, ns |
| Gender (%F) | 49.3 | 53.8 | χ²(1) = 0.09, ns |
| Race/Ethnicity (%) | | | χ²(3) = 1.75, ns |
| White | 60.9 | 75.0 | |
| Black | 11.6 | 0.0 | |
| Hispanic | 17.4 | 16.7 | |
| Other | 10.1 | 8.3 | |
| Maternal Education (% High) | 49.3 | 53.8 | χ²(1) = 0.09, ns |
| ADHD (%) | 33.3 | 30.8 | χ²(1) = 0.03, ns |
| SLI (%) | 8.7 | 0.0 | χ²(1) = 1.22, ns |
| Full Scale IQ * | 101.1 (8.5) | 102.5 (8.5) | t(75) = −0.54, ns |

| Measure | Diagnosis n | Diagnosis M (SD) | Pre-Test n | Pre-Test M (SD) | Mid-Test n | Mid-Test M (SD) | Post-Test n | Post-Test M (SD) |
|---|---|---|---|---|---|---|---|---|
| TRAD | | | | | | | | |
| PA | 69 | 86.25 (8.58) | 65 | 86.52 (8.66) | 69 | 95.55 (9.8) | 69 | 100.43 (12.13) |
| Word Reading | 64 | 75.95 (9.91) | 64 | 73.89 (8.33) | 69 | 79.12 (7.79) | 69 | 85.28 (9.58) |
| Spelling | 66 | 77.91 (10.8) | 64 | 76.91 (10.02) | 69 | 78.3 (7.87) | 69 | 79.83 (8.27) |
| Reading Rate | 56 | 72.14 (10.21) | 64 | 73.12 (10.40) | 69 | 77.68 (10.34) | 69 | 82.75 (11.23) |
| TECH | | | | | | | | |
| PA | 10 | 84.20 (7.76) | 13 | 79.38 (8.43) | 13 | 91.00 (10.82) | 13 | 98.46 (12.06) |
| Word Reading | 9 | 79.78 (8.33) | 13 | 73.38 (6.36) | 13 | 82.12 (10.17) | 13 | 88.15 (11.99) |
| Spelling | 10 | 77.70 (7.41) | 13 | 75.46 (7.02) | 13 | 76.85 (7.70) | 13 | 79.38 (7.75) |
| Reading Rate | 8 | 72.50 (9.63) | 13 | 74.23 (9.75) | 13 | 78.84 (7.94) | 13 | 81.15 (9.16) |

| Predictors | Phonological Awareness (Null) | Phonological Awareness (Conditional) | Word Reading (Null) | Word Reading (Conditional) | Spelling (Null) | Spelling (Conditional) | Oral Reading Rate (Null) | Oral Reading Rate (Conditional) |
|---|---|---|---|---|---|---|---|---|
| Fixed Effects | | | | | | | | |
| (Intercept) | 91.8 (0.98) *** | 91.14 (1.46) *** | 78.65 (0.87) *** | 76.23 (1.29) *** | 78.19 (0.94) *** | 76.45 (1.26) *** | 76.75 (1.08) *** | 71.97 (1.56) *** |
| Age | | −0.01 (0.05) | | −0.12 (0.05) * | | −0.19 (0.04) *** | | −0.05 (0.06) |
| Maternal Education | | 0.53 (1.87) | | 3.99 (1.69) * | | 3.06 (1.61) + | | 7.79 (2.06) ** |
| Waitlist Duration | | 4.51 (2.62) + | | −1.42 (2.28) | | −0.83 (2.28) | | −2.41 (2.74) |
| Waitlist Period | | 3.61 (2.12) + | | −2.36 (0.71) ** | | −0.98 (0.93) | | 0.40 (0.96) |
| Intervention Year 1 | | 9.07 (1.08) *** | | 5.4 (0.76) *** | | 1.53 (0.91) + | | 4.75 (0.99) *** |
| Intervention Year 2 | | 4.86 (1.40) *** | | 6.24 (0.9) *** | | 1.45 (0.74) * | | 5.01 (0.86) *** |
| Waitlist * Contrast1 | | 1.25 (5.52) | | −2.75 (2.03) | | −4.93 (2.62) + | | −3.64 (2.76) |
| Waitlist * Contrast2 | | −1.95 (2.93) | | −2.98 (2.09) | | −0.48 (2.52) | | −0.58 (2.75) |
| Waitlist * Contrast3 | | −1.45 (3.65) | | 2.38 (2.34) | | −1.99 (1.92) | | −1.92 (2.25) |
| Random Effects | | | | | | | | |
| σ² | 157.28 | 14.11 | 59.77 | 5.84 | 33.70 | 6.99 | 2.64 | 7.93 |
| τ₀₀ subject | 23.60 | 56.58 | 37.00 | 46.82 | 52.09 | 46.42 | 2.47 | 66.83 |
| τ₁₁ subject.Contrast1 | | 222.20 | | 17.40 | | 38.96 | | 32.01 |
| τ₁₁ subject.Contrast2 | | 45.96 | | 25.05 | | 38.32 | | 46.25 |
| τ₁₁ subject.Contrast3 | | 100.50 | | 41.26 | | 21.64 | | 33.23 |
| Model Fit | | | | | | | | |
| AIC | 2097.79 | 1976.56 | 1932.98 | 1779.89 | 1843.54 | 1809.14 | 1922.68 | 1812.49 |
| BIC | 2108.49 | 2051.41 | 1943.73 | 1855.14 | 1854.31 | 1884.55 | 1933.34 | 1887.10 |
| Marginal R² | | 0.28 | | 0.27 | | 0.17 | | 0.25 |
| Conditional R² | | 0.92 | | 0.94 | | 0.92 | | 0.94 |
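The Null Model columns above carry only variance components. Assuming each null model is an intercept-only model with a random intercept per student (an assumption; the specification is not spelled out here), the intraclass correlation ICC = τ₀₀ / (τ₀₀ + σ²) gives the share of outcome variance lying between students. The minimal sketch below computes it from the printed null-model values; these ICCs are derived for illustration and are not statistics reported by the authors.

```python
"""Intraclass correlation (ICC) from a random-intercept null model.

Sketch only: assumes each "Null Model" column is an intercept-only model
with a random intercept per student, so ICC = tau00 / (tau00 + sigma2).
"""


def icc(tau00: float, sigma2: float) -> float:
    """Proportion of total variance that lies between students."""
    if tau00 < 0 or sigma2 < 0 or tau00 + sigma2 == 0:
        raise ValueError("variance components must be non-negative and not both zero")
    return tau00 / (tau00 + sigma2)


# (tau00, sigma2) for each null model, copied from the table above.
null_models = {
    "Phonological Awareness": (23.60, 157.28),
    "Word Reading": (37.00, 59.77),
    "Spelling": (52.09, 33.70),
    "Oral Reading Rate": (2.47, 2.64),
}

for outcome, (tau00, sigma2) in null_models.items():
    print(f"{outcome}: ICC = {icc(tau00, sigma2):.2f}")
```

With the printed components this gives about 0.13, 0.38, 0.61, and 0.48, respectively.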

| Covariate | TECH (Unweighted) | TRAD (Unweighted) | Std. Mean Diff. (Unweighted) | TECH (Weighted) | TRAD (Weighted) | Std. Mean Diff. (Weighted) |
|---|---|---|---|---|---|---|
| distance | 0.30 | 0.13 | 0.91 | 0.30 | 0.28 | 0.08 |
| Age | −2.55 | −0.02 | −0.20 | −2.55 | −4.28 | 0.13 |
| Maternal Education | 0.54 | 0.56 | −0.04 | 0.54 | 0.54 | 0.01 |
| White | 0.77 | 0.62 | 0.36 | 0.77 | 0.83 | −0.14 |
| Black | 0.00 | 0.12 | −0.40 | 0.00 | 0.03 | −0.09 |
| Hispanic | 0.15 | 0.16 | −0.02 | 0.15 | 0.10 | 0.16 |
| Other Race | 0.08 | 0.10 | −0.10 | 0.08 | 0.05 | 0.10 |
| PA | 79.38 | 86.31 | −0.82 | 79.38 | 79.74 | −0.04 |
| Word Reading | 73.38 | 73.43 | −0.01 | 73.38 | 72.27 | 0.18 |
| Spelling | 75.46 | 76.65 | −0.17 | 75.46 | 76.44 | −0.14 |
| Reading Rate | 74.23 | 72.65 | 0.16 | 74.23 | 73.72 | 0.05 |
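The standardized mean differences in the balance table above are consistent with the ATT convention: the TECH-minus-TRAD mean difference divided by the TECH (treated) group's standard deviation. Taking the TECH SDs from the Pre-Test columns of the descriptive table above (Diagnosis, Pre-Test, Mid-Test, Post-Test), the continuous covariates reproduce the printed unweighted values. The minimal sketch below shows that arithmetic; the standardizer is an inference from the numbers, not a specification stated in this section.

```python
"""Standardized mean differences (SMDs) for a TECH-vs-TRAD balance table.

Sketch, assuming the ATT convention: the treated-minus-control mean
difference is divided by the treated (TECH) group's standard deviation.
"""


def smd(mean_treated: float, mean_control: float, sd_treated: float) -> float:
    if sd_treated <= 0:
        raise ValueError("treated-group SD must be positive")
    return (mean_treated - mean_control) / sd_treated


# (TECH mean, TRAD mean) from the Unweighted columns; TECH SD taken from the
# TECH Pre-Test column of the descriptive table.
rows = {
    "PA": (79.38, 86.31, 8.43),
    "Word Reading": (73.38, 73.43, 6.36),
    "Spelling": (75.46, 76.65, 7.02),
    "Reading Rate": (74.23, 72.65, 9.75),
}

for name, (m_tech, m_trad, sd_tech) in rows.items():
    print(f"{name}: SMD = {smd(m_tech, m_trad, sd_tech):.2f}")
```

This prints −0.82, −0.01, −0.17, and 0.16, matching the unweighted column. The weighted column follows the same formula with the weighted TRAD means substituted, agreeing up to rounding of the printed means.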

| | TRAD (n = 78) | TECH (n = 79) | Test |
|---|---|---|---|
| Age (y;m) | 8;8 (1;2) | 8;6 (1;0) | t(155) = 0.73, ns |
| Grade (median) | 4 | 3 | |
| Sex (%F) | 52.6 | 54.4 | χ²(1) = 0.09, ns |
| Race/Ethnicity (%) | | | χ²(3) = 11.61, p = 0.009 |
| White | 55.1 | 30.4 | |
| Black | 12.8 | 20.3 | |
| Hispanic | 20.5 | 39.2 | |
| Other | 11.5 | 10.1 | |
| SES (% Eligible FRL) | 41.0 | 58.2 | χ²(1) = 4.64, p = 0.03 |
| ELL (%) | 9.0 | 27.8 | χ²(1) = 9.28, p = 0.002 |
| ADHD (%) | 12.8 | 3.8 | χ²(1) = 4.20, p = 0.04 |
| SLI (%) | 9.0 | 12.7 | χ²(1) = 0.55, ns |
| Full Scale IQ * | 105.4 (13.0) | 92.9 (12.3) | |
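The group-comparison columns of the two demographic tables (TRAD n = 69 / TECH n = 13, and TRAD n = 78 / TECH n = 79) report χ² tests without naming the variant. Back-calculating cell counts from the printed percentages and group sizes (an assumption; e.g., 49.3% of 69 ≈ 34), a Pearson χ² without continuity correction reproduces the printed Gender statistic in the first table (0.09) and the ELL statistic in the second (9.28). A minimal sketch:

```python
"""Pearson chi-square (no continuity correction) for a 2 x 2 group comparison.

Sketch: cell counts are back-calculated from reported percentages and group
sizes, which is an assumption rather than raw data from the study.
"""


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> float:
    """Rows are groups (TRAD, TECH); columns are (has trait, lacks trait)."""
    observed = [[a, b], [c, d]]
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    return chi2


# Gender (%F): 34 of 69 TRAD (49.3%) vs 7 of 13 TECH (53.8%).
gender = pearson_chi2_2x2(34, 69 - 34, 7, 13 - 7)
# ELL: 7 of 78 TRAD (9.0%) vs 22 of 79 TECH (27.8%).
ell = pearson_chi2_2x2(7, 78 - 7, 22, 79 - 22)

print(f"Gender: chi2(1) = {gender:.2f}")
print(f"ELL:    chi2(1) = {ell:.2f}")
```

The loop mirrors the textbook definition, the sum of (observed − expected)² / expected over all four cells; a Yates-corrected version would subtract 0.5 from each absolute deviation before squaring and would give smaller values.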

| Measure | Pre-Test TRAD n | Pre-Test TRAD M (SD) | Pre-Test TECH n | Pre-Test TECH M (SD) | Mid-Test TRAD n | Mid-Test TRAD M (SD) | Mid-Test TECH n | Mid-Test TECH M (SD) | Post-Test TRAD n | Post-Test TRAD M (SD) | Post-Test TECH n | Post-Test TECH M (SD) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PA | 78 | 91.35 (14.69) | 79 | 86.54 (14.42) | 78 | 97.01 (14.11) | 77 | 91.09 (13.34) | 65 | 99.17 (12.89) | 64 | 92.29 (13.47) |
| WR | 78 | 83.81 (13.1) | 79 | 78.23 (11.86) | 78 | 85.35 (14.86) | 77 | 79.62 (12.58) | 66 | 89.27 (13.26) | 64 | 86.26 (14.61) |
| SP | 78 | 76.25 (9.79) | 79 | 71.57 (7.49) | 77 | 79.57 (11.82) | 77 | 74.53 (10.47) | 66 | 83.25 (14.38) | 64 | 80.19 (15.38) |
| RATE | 78 | 83.78 (13.53) | 79 | 77.97 (11.69) | 78 | 85.71 (12.34) | 77 | 82.40 (11.08) | 65 | 88.38 (11.46) | 64 | 83.91 (10.59) |

| Covariate | TECH (Unweighted) | TRAD (Unweighted) | Std. Mean Diff. (Unweighted) | TECH (Weighted) | TRAD (Weighted) | Std. Mean Diff. (Weighted) |
|---|---|---|---|---|---|---|
| distance | 0.56 | 0.45 | 0.81 | 0.56 | 0.56 | 0.01 |
| Age | −0.67 | 0.85 | −0.13 | −0.67 | −2.20 | 0.13 |
| FRL | 0.58 | 0.41 | 0.35 | 0.58 | 0.62 | −0.07 |
| White | 0.70 | 0.76 | −0.13 | 0.70 | 0.67 | 0.07 |
| Black | 0.23 | 0.14 | 0.21 | 0.23 | 0.27 | −0.09 |
| Hispanic | 0.43 | 0.24 | 0.38 | 0.43 | 0.41 | 0.05 |
| Other | 0.08 | 0.10 | −0.10 | 0.08 | 0.07 | 0.03 |
| PA | 86.54 | 91.35 | −0.33 | 86.54 | 86.41 | 0.01 |
| Word Reading | 78.23 | 83.81 | −0.47 | 78.23 | 80.38 | −0.18 |
| Spelling | 71.57 | 76.25 | −0.63 | 71.57 | 71.94 | −0.05 |
| Reading Rate | 77.97 | 83.62 | −0.48 | 77.97 | 80.67 | −0.23 |
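The Weighted columns in the balance tables depend on how matched sets are turned into weights. The reference list cites optimal full matching (Hansen, 2004; Hansen & Klopfer, 2006), but this section does not state the weighting rule, so the following is a minimal sketch of one common ATT rule for subclass-based matching: each TECH unit keeps weight 1, and each TRAD unit in subclass s gets (TECH count in s) / (TRAD count in s). The subclass labels and scores are hypothetical toy values, not study data.

```python
"""ATT weights from full-matching subclasses, on hypothetical toy data.

Treated (TECH) units keep weight 1. Each control (TRAD) unit in subclass s
gets n_treated[s] / n_control[s]; controls in a subclass with no treated
units get weight 0. Rescaling all control weights by a constant would not
change any weighted mean.
"""
from collections import Counter


def att_weights(subclass: list[int], treated: list[bool]) -> list[float]:
    """Return one ATT weight per unit, given its subclass and group."""
    n_treated = Counter(s for s, t in zip(subclass, treated) if t)
    n_control = Counter(s for s, t in zip(subclass, treated) if not t)
    return [1.0 if t else n_treated[s] / n_control[s] for s, t in zip(subclass, treated)]


def weighted_mean(values: list[float], weights: list[float]) -> float:
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical: subclass 1 holds one TECH and two TRAD students,
# subclass 2 holds two TECH and one TRAD student.
subclass = [1, 1, 1, 2, 2, 2]
treated = [True, False, False, True, True, False]
pretest = [80.0, 84.0, 88.0, 70.0, 74.0, 76.0]

weights = att_weights(subclass, treated)
tech_scores = [x for x, t in zip(pretest, treated) if t]
trad_scores = [x for x, t in zip(pretest, treated) if not t]
trad_weights = [w for w, t in zip(weights, treated) if not t]

print("weights:", weights)
print(f"TECH mean: {sum(tech_scores) / len(tech_scores):.2f}")
print(f"TRAD mean, unweighted: {sum(trad_scores) / len(trad_scores):.2f}")
print(f"TRAD mean, weighted:   {weighted_mean(trad_scores, trad_weights):.2f}")
```

On the toy data the weights are [1.0, 0.5, 0.5, 1.0, 1.0, 2.0], and the weighted TRAD mean (79.33) sits closer to the TECH mean (74.67) than the unweighted one (82.67), which is the kind of shift the Weighted columns are meant to show.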

| Predictors | Phonological Awareness (Null) | Phonological Awareness (Cond.) | Word Reading (Null) | Word Reading (Cond.) | Spelling (Null) | Spelling (Cond.) | Oral Reading Rate (Null) | Oral Reading Rate (Cond.) |
|---|---|---|---|---|---|---|---|---|
| Fixed Effects | | | | | | | | |
| (Intercept) | 92.36 (1.05) *** | 96.77 (1.45) *** | 83.16 (1.03) *** | 85.91 (1.44) *** | 76.98 (0.88) *** | 78.48 (1.25) *** | 83.21 (0.88) *** | 85.18 (1.23) *** |
| Age | | −0.08 (0.07) | | −0.22 (0.07) ** | | −0.02 (0.05) | | −0.19 (0.06) ** |
| Black | | −13.33 (2.63) *** | | −5.38 (2.57) * | | −2.68 (1.86) | | −2.98 (2.23) |
| Other/Multiple Race | | −6.34 (3.35) + | | −3.66 (3.27) | | −0.66 (2.37) | | −2.12 (2.84) |
| Hispanic | | −1.84 (1.24) | | −1.56 (1.21) | | −2.06 (0.88) * | | −1.09 (1.05) |
| SES (low) | | −1.59 (1.16) | | −2.25 (1.13) * | | −1.12 (0.82) | | −2.59 (0.99) ** |
| Treatment Group | | −3.64 (1.9) + | | −3.32 (1.9) + | | −2.90 (1.68) + | | −2.91 (1.61) + |
| Intervention Year 1 | | 5.66 (1.22) *** | | 1.55 (0.72) * | | 3.32 (0.72) *** | | 2.69 (0.84) ** |
| Intervention Year 2 | | 0.85 (1.12) | | 2.92 (1.07) ** | | 2.43 (0.92) ** | | 1.82 (0.83) * |
| Group * Year 1 | | −0.95 (1.72) | | −0.05 (1.02) | | −0.37 (1.02) | | 1.70 (1.18) |
| Group * Year 2 | | −0.89 (1.59) | | 2.70 (1.52) + | | 2.08 (1.31) | | −0.62 (1.18) |
| Random Effects | | | | | | | | |
| σ² | | 13.58 | | 9.69 | | 6.79 | | 8.12 |
| τ₀₀ student | | 121.47 | | 122.36 | | 99.16 | | 87.92 |
| τ₁₁ student:Year 1 | | 85.16 | | 20.16 | | 25.69 | | 35.43 |
| τ₁₁ student:Year 2 | | 57.33 | | 55.20 | | 41.23 | | 28.39 |
| Model Fit | | | | | | | | |
| AIC | 3310.58 | 3310.58 | 3286.19 | 3168.39 | 3249.10 | 3022.20 | 3158.41 | 3069.99 |
| BIC | 3384.18 | 3384.18 | 3298.46 | 3242.029 | 3261.37 | 3095.81 | 3170.66 | 3143.47 |
| Marginal R² | | 0.24 | | 0.22 | | 0.14 | | 0.23 |
| Conditional R² | | 0.94 | | 0.95 | | 0.95 | | 0.94 |
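In the mixed-model table above (the one with Treatment Group and Group * Year terms), the interaction rows are easiest to read as differences in growth. Assuming treatment coding with TRAD = 0 and TECH = 1 (consistent with the negative Treatment Group coefficients and the lower TECH pre-test means, but not stated in this section), TECH's growth in a period is the Intervention Year coefficient plus the matching Group * Year coefficient, and a normal-approximation Wald interval on the interaction shows how precisely that difference is estimated. A minimal sketch for the Word Reading conditional model:

```python
"""Reading Group x Year interactions as TECH-minus-TRAD slope differences.

Sketch, assuming treatment coding (TRAD = 0, TECH = 1): the TECH slope for a
period is the main-effect slope plus the interaction, and the interaction
itself is the TECH-minus-TRAD difference in slopes.
"""
from statistics import NormalDist


def tech_slope(b_year: float, b_interaction: float) -> float:
    """TECH growth in a period under TRAD = 0 / TECH = 1 coding."""
    return b_year + b_interaction


def wald_ci(estimate: float, se: float, level: float = 0.95) -> tuple[float, float]:
    """Two-sided normal-approximation confidence interval."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * se, estimate + z * se


# Word Reading, conditional model: (Year coefficient, interaction, interaction SE).
word_reading = {
    "Year 1": (1.55, -0.05, 1.02),
    "Year 2": (2.92, 2.70, 1.52),
}

for period, (b_year, b_int, se_int) in word_reading.items():
    lo, hi = wald_ci(b_int, se_int)
    print(
        f"{period}: TRAD slope {b_year:.2f}, TECH slope {tech_slope(b_year, b_int):.2f}, "
        f"difference 95% CI [{lo:.2f}, {hi:.2f}]"
    )
```

For Year 2 this gives a TECH slope of 5.62 and a difference interval of roughly [−0.28, 5.68], in line with the marginal (+) flag on that row. A standard error for the TECH slope itself would need the covariance between the two coefficients, which is not printed, so only its point estimate is computed. For a non-inferiority reading, the lower limit of such an interval would be compared with a pre-specified margin, which this section does not state.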

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Middleton, A.E.; Avrit, K.J.; Zielke, M.; DeFries, E.; Davila, M.; Frierson, S.L. Bridging Accessibility Gaps in Dyslexia Intervention: Non-Inferiority of a Technology-Assisted Approach to Dyslexia Instruction. Educ. Sci. 2025, 15, 1460. https://doi.org/10.3390/educsci15111460