Exploring the Impact of Different Assistance Approaches on Students’ Performance in Engineering Lab Courses

Abstract

1. Introduction

2. Literature Review and Motivations

2.1. Literature Review

2.2. Motivations

- The development of automated grading and feedback systems aimed at reducing instructors’ workload and improving post-class efficiency.

- The deployment of LLMs in classroom teaching as a replacement or supplement for traditional teaching assistants, often accompanied by comparative evaluations.

- The construction of expert-tuned LLMs to improve output accuracy and contextual relevance in discipline-specific applications.

- Domain-Specific Demands: Prior work has largely focused on tasks such as writing, translation, and programming, where abundant datasets and pretrained knowledge bases enable generic LLMs to perform effectively. In contrast, STEM lab courses often require specialized domain knowledge and context-aware problem-solving. This calls for both rigorous evaluation of generic LLMs and the development of expert-tuned models tailored to specific STEM domains.

- Binary Treatment Design: Most previous studies have adopted a binary experimental design, comparing classrooms with LLM assistance against those without. However, in real-world STEM education, especially within lab-based courses, instructional support is rarely singular. It often involves a combination of teaching assistants, peer collaboration, and technological aids. Therefore, it is critical to explore a more comprehensive set of assistance configurations to understand the nuanced impact of LLM integration.

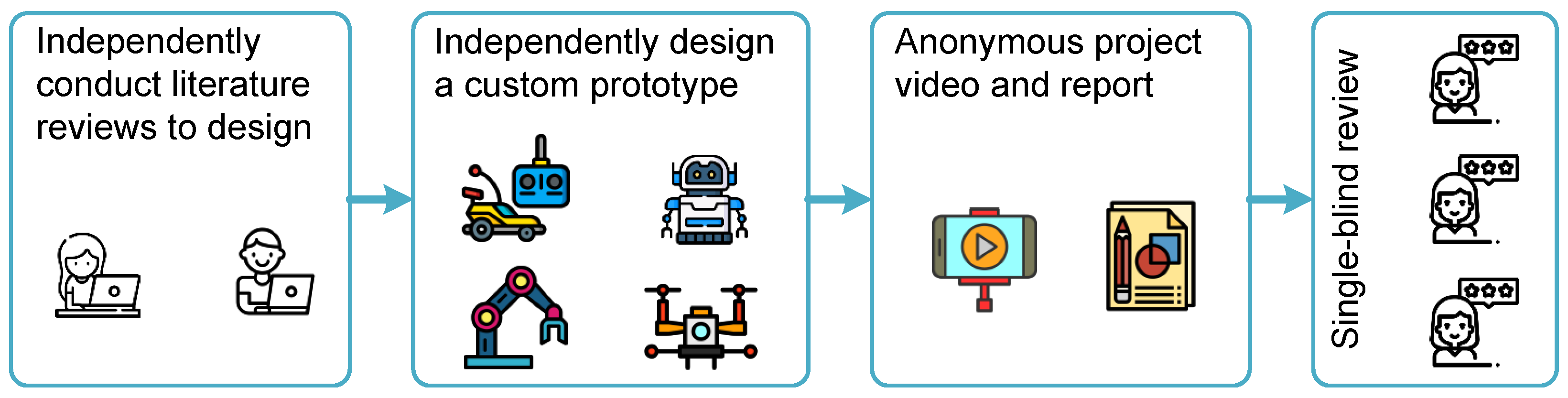

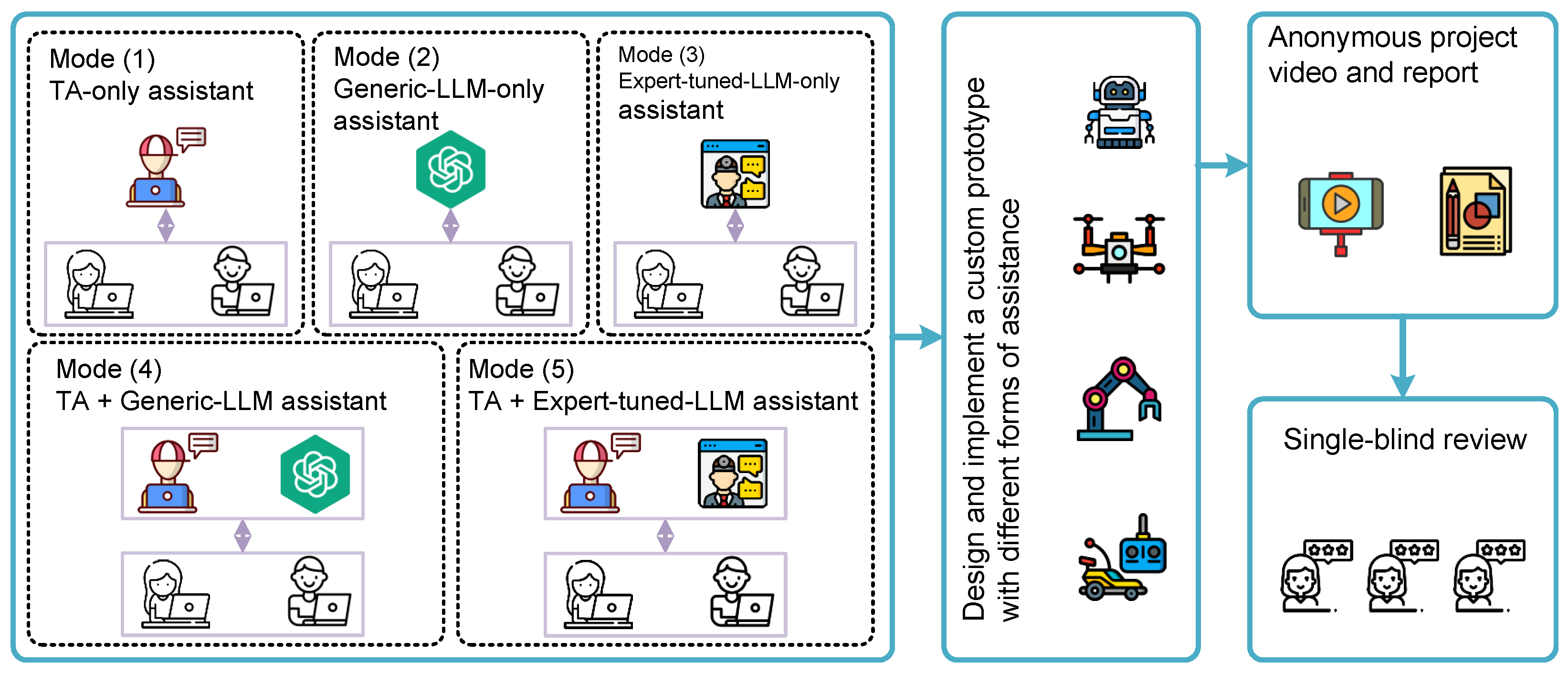

3. Methodology

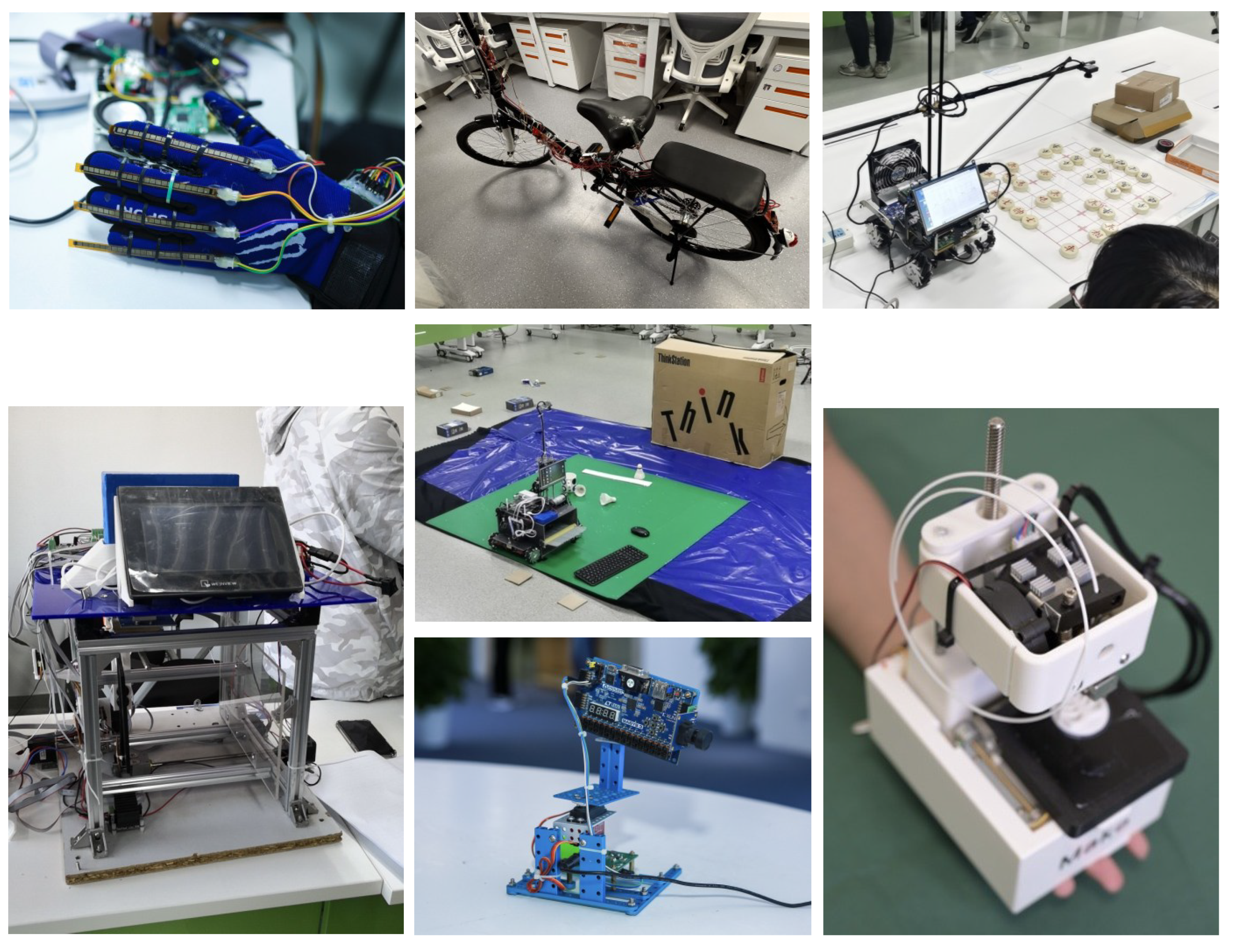

3.1. Background of Lab Course

3.2. Method Overview

3.3. Framework of Redesign Course

3.4. Expert-Tuned LLM Design

3.5. Participants

- Every Tuesday, from 2:00–4:00 PM, for students in the TA-only assistant mode;

- Every Wednesday, from 2:00–4:00 PM, for students in the TA + Generic-LLM assistant mode;

- Every Thursday, from 2:00–4:00 PM, for students in the TA + Expert-tuned-LLM assistant mode.

3.6. Data Collection

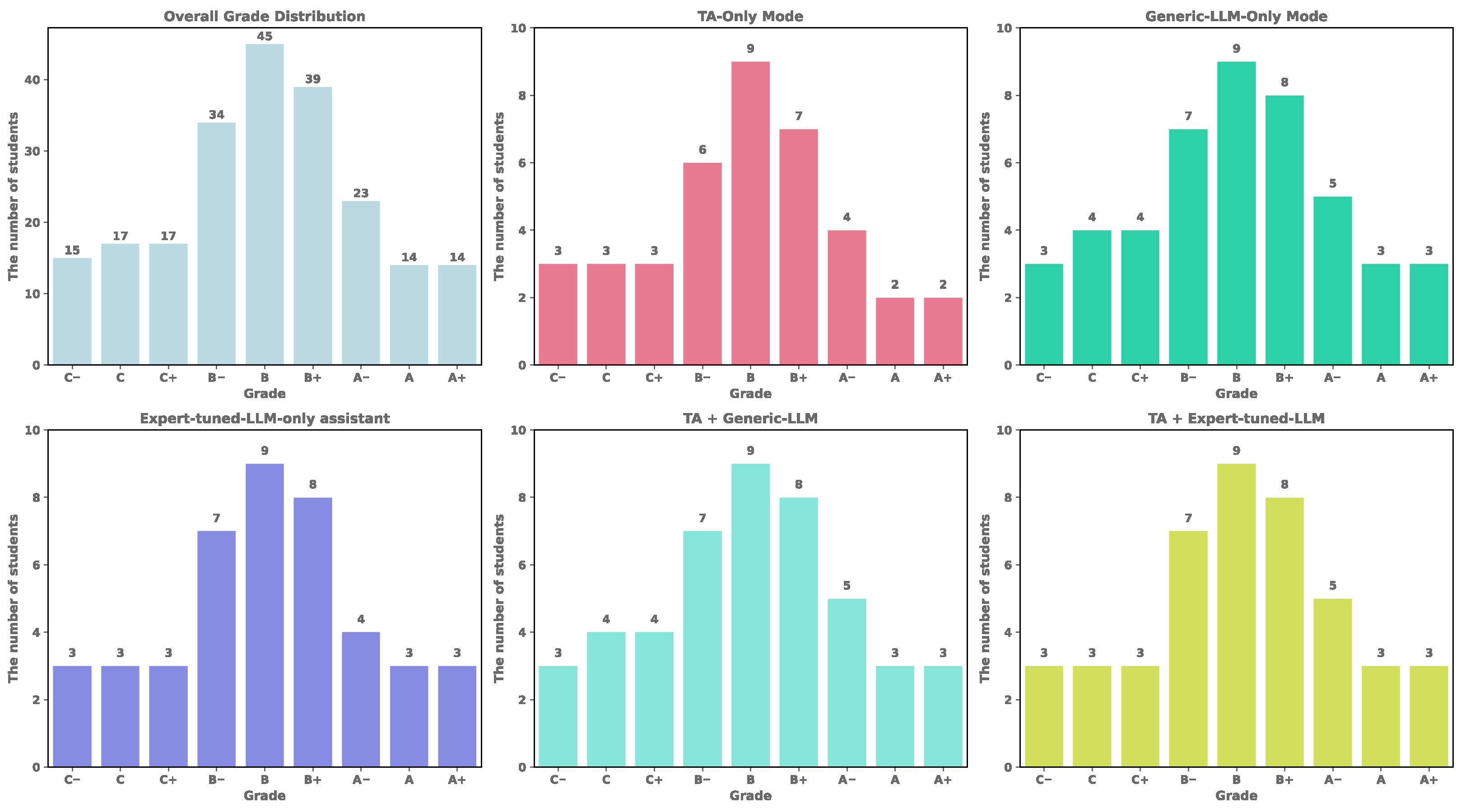

4. Results and Data Analysis

4.1. Results for Proposed LLM

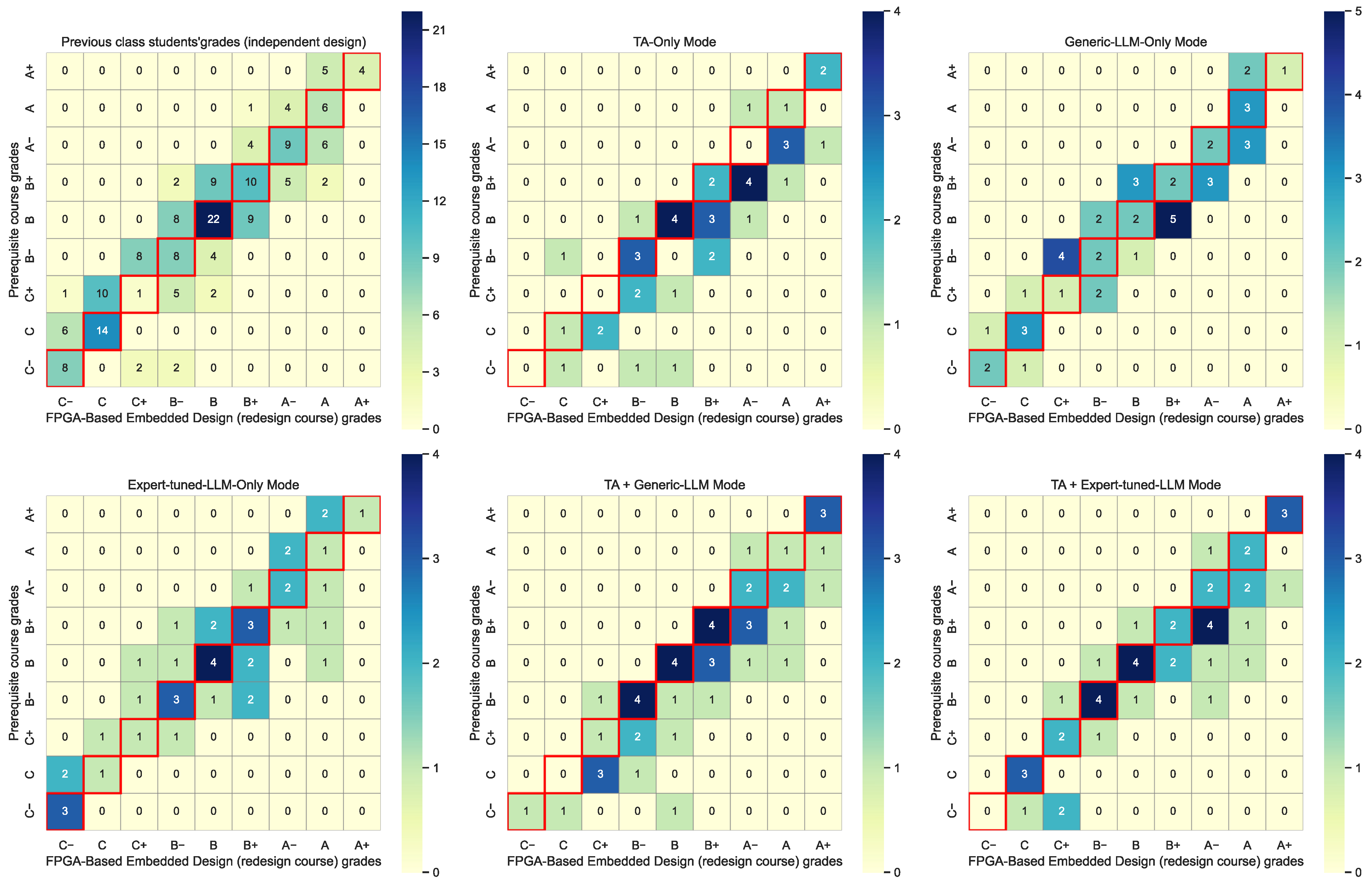

4.2. Results for Student Grades

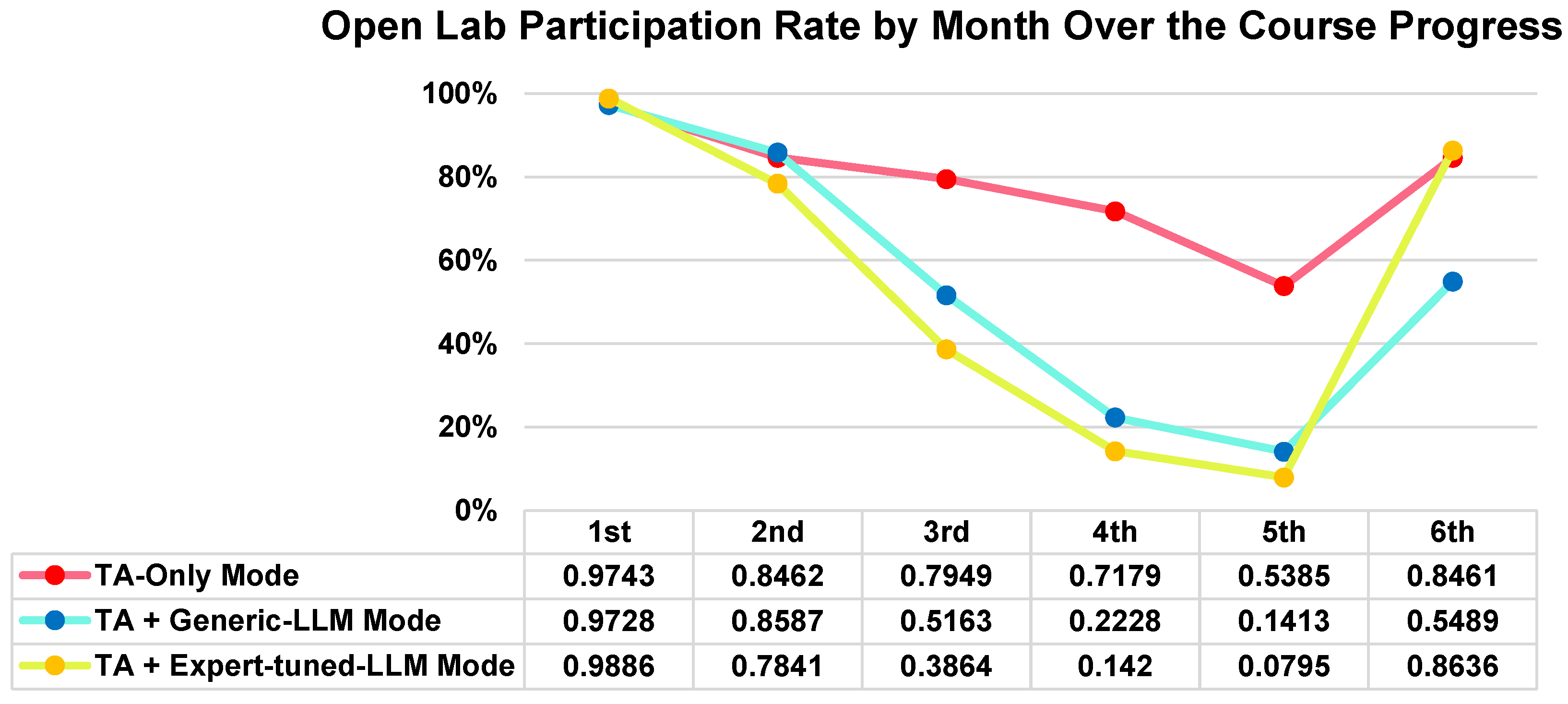

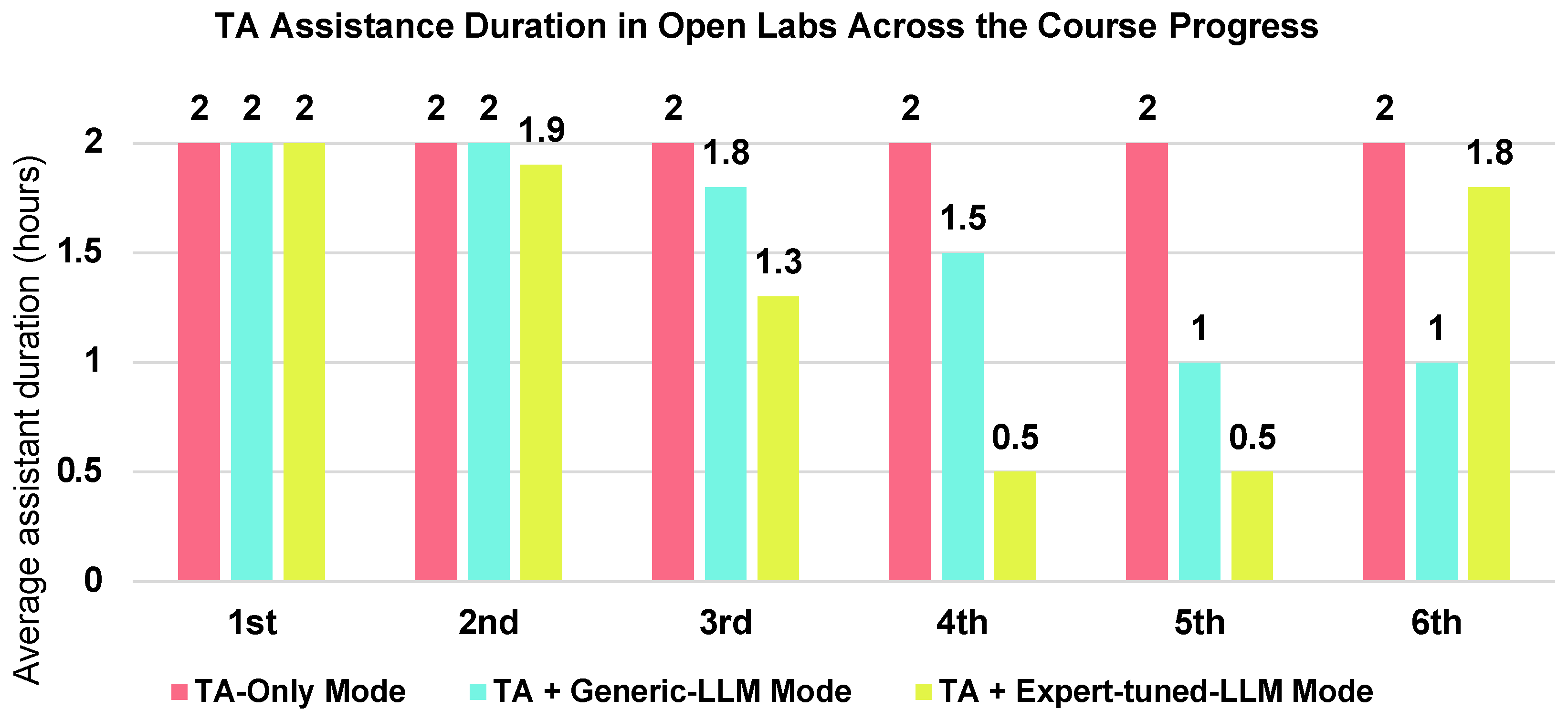

4.3. TA-Collected Observations

5. Conclusions, Limitations, and Future Works

5.1. Conclusions

- The Generic-LLM-only mode yielded limited benefits: Compared to the baseline of prior self-directed learning cohorts, this mode did not lead to statistically significant improvements in student performance. This suggests that in specialized scientific and engineering domains, the unguided use of general-purpose language models may not produce meaningful learning gains.

- Human TA involvement yielded significant benefits: All TA participation modes led to notable improvements in student performance, with a consistent upward trend in grades and a marked reduction in the risk of score decline. These results strongly affirm the enduring value of human expertise in lab-based instructional settings, particularly in guiding complex, hands-on learning tasks.

- Expert-tuned LLMs outperform generic LLMs in domain-specific contexts: The expert-tuned LLM demonstrated superior performance in both HDL code generation accuracy and student learning outcomes, even in the absence of direct TA involvement. This highlights the critical importance of domain-specific optimization for educational AI tools, particularly in technical fields like hardware design.

- Synergistic Effects in Hybrid Modes: The TA + LLM modes achieved the best balance between performance improvement and learner autonomy. Early-stage guidance from human TAs appeared to foster more effective and independent use of LLMs in later phases of the course. This highlights the pedagogical advantage of structured hybrid learning environments where human guidance and AI support are strategically integrated.

5.2. Limitations

- The study focused exclusively on an FPGA-based embedded design course within electronics-related majors, which may limit generalizability to other STEM domains or non-laboratory courses.

- Although the tested generic LLMs differed only slightly in performance on the course tasks (as shown in Table 6), it would be preferable to control for all variables by restricting students to a single generic LLM.

- While attendance logs and TA working hours provide objective measures of engagement and workload, the study does not capture qualitative aspects of student-TA or student-LLM interactions (such as the complexity of questions or the presence of hallucinations in model responses).

- Cross-condition contamination may have occurred. Students in the LLM-only modes may have informally consulted human TAs, while those assigned to the expert-tuned LLM condition may have accessed generic-LLMs. Although such behaviors were discouraged, they cannot be entirely ruled out.

- Although this study included 218 students, the data was collected within a single academic term. Longer-term experimentation and multi-semester data collection would help reduce statistical noise and provide more stable observations. Such longitudinal studies could also uncover finer-grained distinctions among the three TA-support models.

5.3. Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| EE | Electrical and Electronics |
| EDA | Electronic Design Automation |
| FPGA | Field-Programmable Gate Array |
| GPU | Graphics Processing Unit |
| HDL | Hardware Description Languages |
| IP | Intellectual Property |
| JSON | JavaScript Object Notation |
| KS | Kolmogorov–Smirnov |
| LLM | Large Language Model |
| RAG | Retrieval-Augmented Generation |
| STEM | Science, Technology, Engineering and Mathematics |
| TA | Teaching Assistant |
| TVD | Total Variation Distance |


| Assistance Modes | Features |
|---|---|
| Mode (1) Teaching Assistant (TA) only | Addressing practical engineering issues and writing-related questions. TA does not respond to any inquiries regarding LLM usage, even if students privately use LLM. |
| Mode (2) Generic LLM only | No human TA is involved. Students complete their designs solely with the assistance of publicly available generic LLMs (such as ChatGPT, DeepSeek, Gemini, and Grok), but cannot access the expert-tuned model. |
| Mode (3) Expert-tuned LLM only | No human TA is involved. Students complete their designs solely with the assistance of the expert-tuned LLM, although it is not possible to fully prevent students from privately using generic LLMs. |
| Mode (4) TA + Generic LLM | TAs do not directly assist with practical engineering or writing issues. Their contribution is confined to helping students enhance or adjust prompts used in generic LLM interactions. |
| Mode (5) TA + Expert-tuned LLM | TAs do not directly assist with practical engineering or writing issues. Their contribution is confined to helping students enhance or adjust prompts used in expert-tuned LLM interactions. |

| Integrated Circuit Design and Integrated Systems | Microelectronics Science and Engineering | Electronic Science and Technology | Total |
|---|---|---|---|
| 83 | 69 | 66 | 218 |

| Grade | Score Ranges |
|---|---|
| A+ | >95 |
| A | 90–95 |
| A− | 85–89 |
| B+ | 80–84 |
| B | 75–79 |
| B− | 70–74 |
| C+ | 65–69 |
| C | 60–64 |
| C− | <60 |

| Assistant Modes | Number of Students | TVD | KS-Test: KS | KS-Test: p |
|---|---|---|---|---|
| TA-Only | 39 | 0.0331 | 0.0879 | 0.9371 |
| Generic-LLM-Only | 46 | 0.0231 | 0.0718 | 0.9800 |
| Expert-tuned-LLM-Only | 43 | 0.0289 | 0.0669 | 0.9929 |
| TA + Generic-LLM | 46 | 0.0231 | 0.0734 | 0.9755 |
| TA + Expert-tuned-LLM | 44 | 0.0221 | 0.0730 | 0.9801 |
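
The TVD and KS columns above summarize how far a group's grade distribution sits from a reference distribution. The sketch below shows one way such values could be computed with the Python standard library. It is an illustration under assumptions, not the authors' procedure: the function names, the choice of reference sample, and the example scores are all hypothetical, and the p-value is not computed here (a statistics package such as SciPy's `scipy.stats.ks_2samp` could supply it).

```python
from bisect import bisect_right
from collections import Counter


def total_variation_distance(sample_a, sample_b):
    """TVD between the empirical distributions of two samples of
    discrete values (for example, letter grades)."""
    freq_a, freq_b = Counter(sample_a), Counter(sample_b)
    n_a, n_b = len(sample_a), len(sample_b)
    support = set(freq_a) | set(freq_b)
    # Half the L1 distance between the two relative-frequency vectors.
    return 0.5 * sum(abs(freq_a[v] / n_a - freq_b[v] / n_b) for v in support)


def ks_statistic(sample_a, sample_b):
    """Two-sample KS statistic: the largest gap between the two
    empirical cumulative distribution functions."""
    sorted_a, sorted_b = sorted(sample_a), sorted(sample_b)
    points = sorted(set(sorted_a) | set(sorted_b))
    return max(
        abs(bisect_right(sorted_a, x) / len(sorted_a)
            - bisect_right(sorted_b, x) / len(sorted_b))
        for x in points
    )


# Hypothetical data, invented for illustration only.
group_grades = ["A", "A", "B", "B"]
reference_grades = ["A", "B", "B", "B"]
group_scores = [72, 78, 81, 85, 90]
reference_scores = [70, 78, 81, 85, 88, 93]

print("TVD:", total_variation_distance(group_grades, reference_grades))
print("KS:", ks_statistic(group_scores, reference_scores))
```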

| Types of Data | |
|---|---|
| Expert-tuned LLM | HDL code generation pass rate |
| Student grades | Previous class students’ course grades and prerequisite course grades |
| | 218 students’ course grades and prerequisite course grades |
| TA perspective | Relationship between student participation and course progress |

| Models | Pass@1 | Pass@5 | Pass@10 |
|---|---|---|---|
| Ours | 60.7% | 78.5% | 88.4% |
| ChatGPT-4o | 47.9% | 75.2% | 78.7% |
| DeepSeek | 27.9% | 32.2% | 55.6% |
| Gemini 1.5 Pro | 47.9% | 65.9% | 79.3% |
| Grok 1.5 | 42.9% | 56.6% | 58.8% |
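
Pass@k values like those above are often computed with the unbiased estimator used in code-generation benchmarks: for a task with n generated samples of which c pass, pass@k = 1 − C(n − c, k) / C(n, k), averaged over tasks. The sketch below assumes that estimator. Whether the reported figures were obtained this way is not stated in this section, and the function names and sample counts are hypothetical.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for one task.

    n: samples generated for the task
    c: samples that passed verification
    k: evaluation budget (1 <= k <= n)
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: every size-k draw contains a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results, k):
    """Average pass@k over tasks; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical per-task results (n samples, c passing), for illustration only.
tasks = [(10, 5), (10, 0), (10, 10)]
for k in (1, 5, 10):
    print(f"pass@{k} = {mean_pass_at_k(tasks, k):.3f}")
```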

The six rightmost columns give the proportion of students by score change range (%).

| Assistant Modes | Max | Min | Mean | Std. | <−10 | [−10, −5) | [−5, 0) | [0, 5) | [5, 10) | ≥10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Previous class (independent) | 25.70 | −10.00 | −0.21 | 4.78 | 0.00 | 10.73 | 44.63 | 37.85 | 4.52 | 2.26 |
| TA-only | 45.40 | −9.60 | 4.11 | 8.58 | 0.00 | 2.56 | 17.95 | 48.72 | 20.51 | 10.26 |
| Generic-LLM-only | 25.50 | −6.80 | 0.44 | 5.18 | 0.00 | 6.52 | 43.48 | 43.48 | 4.35 | 2.17 |
| Expert-tuned-LLM-only | 13.00 | −9.80 | 0.07 | 5.40 | 0.00 | 18.60 | 37.21 | 27.91 | 11.63 | 4.65 |
| TA + Generic-LLM | 16.40 | −6.90 | 4.19 | 4.73 | 0.00 | 2.17 | 8.70 | 50.00 | 28.26 | 10.87 |
| TA + Expert-tuned-LLM | 22.10 | −3.80 | 3.74 | 5.92 | 0.00 | 0.00 | 22.73 | 47.73 | 18.18 | 11.36 |
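
A summary of this shape can be produced from per-student score changes by taking basic statistics and counting how many changes fall into each half-open range. The sketch below is a minimal illustration with assumptions: each score change is taken to be a student's course score minus their prerequisite score, the standard deviation is the sample (n − 1) version, and the function name and input values are hypothetical.

```python
from math import inf
from statistics import mean, stdev

# Half-open ranges [low, high) matching the table's column labels.
RANGES = [
    ("<-10", -inf, -10.0),
    ("[-10, -5)", -10.0, -5.0),
    ("[-5, 0)", -5.0, 0.0),
    ("[0, 5)", 0.0, 5.0),
    ("[5, 10)", 5.0, 10.0),
    (">=10", 10.0, inf),
]


def summarize_changes(changes):
    """Return max, min, mean, sample std, and the percentage of
    score changes falling into each range."""
    n = len(changes)
    shares = {
        label: round(100.0 * sum(low <= c < high for c in changes) / n, 2)
        for label, low, high in RANGES
    }
    return {
        "max": max(changes),
        "min": min(changes),
        "mean": mean(changes),
        "std": stdev(changes),  # assumption: sample standard deviation
        "shares": shares,
    }


# Hypothetical score changes, invented for illustration only.
example = [-12.0, -7.0, -1.0, 0.0, 3.0, 6.0, 10.0, 25.0]
summary = summarize_changes(example)
print(summary["mean"], round(summary["std"], 2))
print(summary["shares"])
```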

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Z.; Yang, B.; Huang, S. Exploring the Impact of Different Assistance Approaches on Students’ Performance in Engineering Lab Courses. Educ. Sci. 2025, 15, 1443. https://doi.org/10.3390/educsci15111443
