Artificial Intelligence Performance in Introductory Biology: Passing Grades but Poor Performance at High Cognitive Complexity

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.2. Grading Outputs

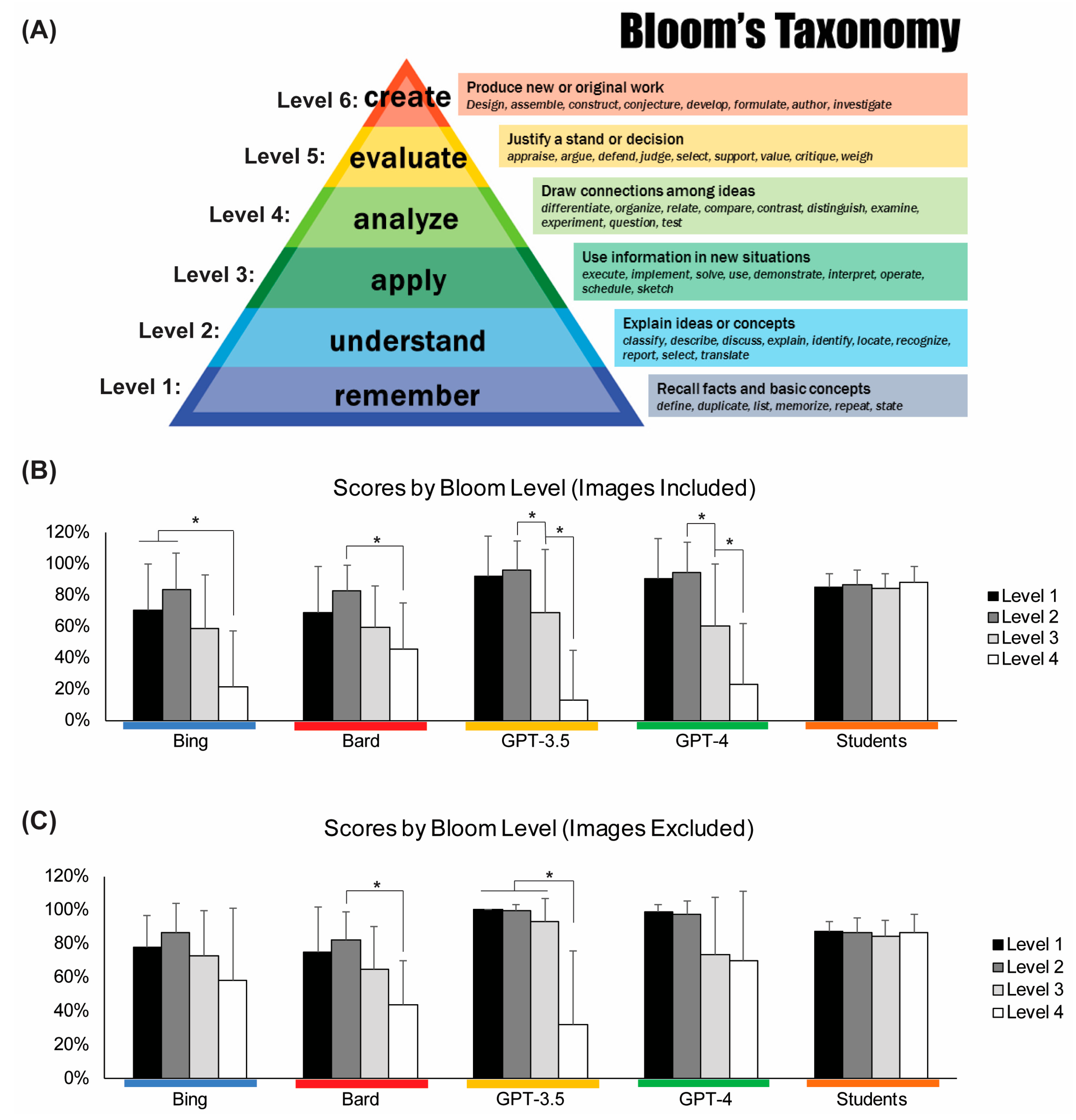

2.3. Bloom’s Taxonomy

2.4. Statistical Analysis

3. Results

3.1. Performance by Assessment Type

3.2. Performance by Bloom’s Taxonomy Level

3.3. Final Paper

3.4. Final Grades and Rankings

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Bloom’s Taxonomy Level | Sample Input/Prompt | Sample Output | Entity and Score Received |
|---|---|---|---|
| 1 | “Your body produces a liter of mucus per day secreted from a variety of cell types. One of the major components of mucus is mucin, a protein with many chemical modifications. Explain the processes of going from MUC1 (the gene that codes for mucin) to the secretion of this protein outside of the cell. Answer this question based on what we have learned in class, NOT looking up mucin production from external sources!” | “Sure, I can help you with that. The MUC1 gene is transcribed into mRNA, which is then translated into a protein called mucin. Mucin is a large complex protein that is made up of many different amino acids. It is also heavily glycosylated, which means that it has many sugar molecules attached to it. The mucin protein is then processed in the Golgi apparatus. The Golgi apparatus is a series of membrane-bound compartments that are responsible for processing and exporting proteins. In the Golgi apparatus the mucin protein is cleaved into smaller pieces and the sugars are attached to the protein. The processed mucin protein is then processed into vesicles. These vesicles are then transported into the plasma membrane, where they fuse with the membrane and release the mucin protein outside of the cell. The secretion of mucin is a complex process that involves many different steps. The MUC1 gene, mRNA, protein synthesis, Golgi apparatus, vesicles, and plasma membrane all play a role in the secretion of mucin. Here is a diagram of the process of mucin secretion: DNA → mRNA → Protein synthesis → Golgi apparatus → Vesicles → Plasma membrane → Mucin secretion The following are some of the key steps in the process of mucin secretion: Mucin is a very important protein. It plays a role in a variety of functions, including:” | Bard, 95% |
| 2 | “Why is complementary base pairing critical for replication?” | “Complementary base pairing is critical for DNA replication because it allows the two strands of DNA to be separated and used as templates to build two new strands with complementary bases. The hydrogen bonds between the bases hold the two strands of DNA together and serve as a way for DNA to replicate. The pairing of complementary bases in DNA means that the information contained within each strand is redundant. I hope this helps. Let me know if you have any other questions.” | Bing, 100% |
| 3 | “Given that codons consist of three nucleotides, how many amino acids could the genetic code support?” | “The genetic code is the set of rules that determines how the nucleotide sequence of a gene is translated into the corresponding sequence of amino acids in a protein. Since codons consist of three nucleotides, there are 64 possible combinations (4^3) of nucleotides to form codons.” | GPT-3.5, 80% |
| 4 | “Assuming the promoter and terminator lay just outside of the sequence above and also assuming there are no introns (no splicing occurs), what is the sequence of the RNA?” | “To determine the sequence of RNA transcribed from the given DNA sequence “CTACGCTACAGTTTAAGCCAGGATAGCAGACGGCATATAAATTACGG,” we need to identify the complementary RNA bases. RNA is synthesized using complementary base pairing with the DNA template strand, where thymine (T) is replaced by uracil (U) in RNA. The template strand is: CTACGCTACAGTTTAAGCCAGGATAGCAGACGGCATATAAATTACGG Using the rules of complementary base pairing and replacing T with U, the corresponding RNA sequence is: GAUGCGAUGUCAAAACGGUCCUAUCGUCUGCCGUAUAUAAUUACGCC Therefore, the sequence of the RNA transcribed from the given DNA sequence is “GAUGCGAUGUCAAAACGGUCCUAUCGUCUGCCGUAUAUAAUUACGCC.”” | GPT-4.0, 70% |
| 5 | “Keeping ethical standards in mind, which model organism should you choose to study the role of four chambers in heart function? Which model organism should you choose to study contracting heart tissue?” | “Ethical standards for animal research are important to ensure the humane care and welfare of the animals used, as well as the scientific validity and social responsibility of the research. Some of the general principles that guide ethical animal research are replacement, reduction, refinement, and respect. To study the role of four chambers in heart function, you should choose a model organism that has a similar heart structure to humans, but also has a lower ethical cost. One possible option is Chicken, which has a four-chambered heart with two atria and two ventricles, but is considered less sentient and more replaceable than mammals. To study contracting heart tissue, you should choose a model organism that has a simple heart structure that can be easily manipulated and observed, but also has a lower ethical cost. One possible option is Tunicate, which has a single layer of contracting cells that forms a primitive heart, but is considered less sentient and more replaceable than vertebrates.” | Bing, 0% |
| 6 | “GTACGTCATGCCGTACTGTCCATCTGGCTGCCTGAT” “Design primers (6 base pairs in length) to amplify this gene. Report your primers in 5′ to 3′ orientation. How large would your PCR product be?” | “To amplify the gene from the start codon to the stop codon, you will need to design 6 bp forward and reverse primers to amplify the gene. Here are some guidelines for designing your PCR primers: Forward primer: GTCATG Reverse primer: AGCTCA The product size will be ~27 bp. I hope this helps!” | Bing, 25% |
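Several of the sample items above (the Level 3 codon count, the Level 4 transcription, and the Level 6 primer design) reduce to short, checkable procedures. The Python sketch below is illustrative only and is not the course's answer key. It reproduces the 4^3 = 64 codon count; it transcribes the Level 4 sequence assuming that sequence is the template strand written 3′→5′ (the TAC near its start pairs with an AUG start codon); and it picks 6-nt flanking primers assuming the entire 36-nt Level 6 sequence is the target and is written 5′→3′. The function names `codon_count`, `transcribe_template`, `reverse_complement`, and `flanking_primers` are hypothetical.

```python
# Illustrative checks for three sample items (hypothetical helpers, not the course's answer key).

# Base that RNA polymerase places opposite each DNA template base (U replaces T in RNA).
TEMPLATE_TO_RNA = {"A": "U", "T": "A", "G": "C", "C": "G"}
# DNA base pairing, used for reverse complements.
DNA_PAIR = {"A": "T", "T": "A", "G": "C", "C": "G"}


def codon_count(alphabet_size: int = 4, codon_length: int = 3) -> int:
    """Distinct codons when each of `codon_length` positions can be any of `alphabet_size` bases."""
    return alphabet_size ** codon_length


def transcribe_template(template_3_to_5: str) -> str:
    """RNA written 5'->3', assuming the input template strand is written 3'->5'."""
    return "".join(TEMPLATE_TO_RNA[base] for base in template_3_to_5.upper())


def reverse_complement(dna_5_to_3: str) -> str:
    """Complementary DNA strand, reversed so it also reads 5'->3'."""
    return "".join(DNA_PAIR[base] for base in reversed(dna_5_to_3.upper()))


def flanking_primers(target_5_to_3: str, length: int = 6) -> tuple[str, str, int]:
    """Forward and reverse primers (both 5'->3') at the ends of the target, plus product size in bp."""
    forward = target_5_to_3[:length].upper()
    reverse = reverse_complement(target_5_to_3[-length:])
    # Both primers sit at the ends of the target, so the product spans the whole target.
    return forward, reverse, len(target_5_to_3)


if __name__ == "__main__":
    print(codon_count())  # 64
    print(transcribe_template("CTACGCTACAGTTTAAGCCAGGATAGCAGACGGCATATAAATTACGG"))
    # GAUGCGAUGUCAAAUUCGGUCCUAUCGUCUGCCGUAUAUUUAAUGCC
    print(flanking_primers("GTACGTCATGCCGTACTGTCCATCTGGCTGCCTGAT"))
    # ('GTACGT', 'ATCAGG', 36)
```

Under these assumptions, the transcribed string can be compared position by position with the GPT-4 sample output in the table, and the two diverge at several positions. Practical PCR primers are usually much longer (roughly 18 to 25 nt); the 6-nt length here only follows the exercise.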

References

- Abbas, M., Jam, F. A., & Khan, T. I. (2024). Is it harmful or helpful? Examining the causes and consequences of generative AI usage among university students. International Journal of Educational Technology in Higher Education, 21(1), 10–22.

- Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching and assessing: A revision of Bloom’s Taxonomy of educational objectives: Complete edition. Longman. ISBN 978-0801319037.

- Annepaka, Y., & Partha, P. (2025). Large language models: A survey of their development, capabilities, and applications. Knowledge and Information Systems, 67, 2967–3022.

- Baillifard, A., Gabella, M., Lavenex, P. B., & Martarelli, C. S. (2025). Effective learning with a personal AI tutor: A case study. Education and Information Technologies, 30(1), 297–312.

- Bandi, A., Adapa, P. V. S. R., & Kuchi, Y. E. V. P. K. (2023). The power of generative AI: A review of requirements, models, input–output formats, evaluation metrics, and challenges. Future Internet, 15(8), 260.

- Bertolini, R., Finch, S. J., & Nehm, R. H. (2021). Testing the impact of novel assessment sources and machine learning methods on predictive outcome modeling in undergraduate biology. Journal of Science Education and Technology, 30(2), 193–209.

- Bhayana, R., Bleakney, R. R., & Krishna, S. (2023). GPT-4 in radiology: Improvements in advanced reasoning. Radiology, 307(5), 230987.

- Chelli, M., Descamps, J., Lavoué, V., Trojani, C., Azar, M., Deckert, M., Raynier, J., Clowez, G., Boileau, P., & Ruetsch-Chelli, C. (2024). Hallucination rates and reference accuracy of ChatGPT and Bard for systematic reviews: Comparative analysis. Journal of Medical Internet Research, 26, e53164.

- Hanshaw, G., & Sullivan, C. (2025). Exploring barriers to AI course assistant adoption: A mixed-methods study on student non-utilization. Discover Artificial Intelligence, 5, 178.

- Hatzius, J. (2023). The potentially large effects of artificial intelligence on economic growth (Briggs/Kodnani). Goldman Sachs, 1, 268–296.

- Hoskins, S. G., Lopatto, D., & Stevens, L. M. (2011). The C.R.E.A.T.E. Approach to primary literature shifts undergraduates’ self-assessed ability to read and analyze journal articles, attitudes about science, and epistemological beliefs. CBE—Life Sciences Education, 10(4), 368.

- Jumper, J., Evans, R., Pritzel, A., Green, T., Figurnov, M., Ronneberger, O., Tunyasuvunakool, K., Bates, R., Žídek, A., Potapenko, A., Bridgland, A., Meyer, C., Kohl, S. A. A., Ballard, A. J., Cowie, A., Romera-Paredes, B., Nikolov, S., Jain, R., Adler, J., … Back, T., et al. (2021). Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873), 583–589.

- Katz, D. M., Bommarito, M. J., Gao, S., & Arredondo, P. (2024). GPT-4 passes the bar exam. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 382(2270), 20230254.

- Khlaif, Z. N., Mousa, A., Hattab, M. K., Itmazi, J., Hassan, A. A., Sanmugam, M., & Ayyoub, A. (2023). The potential and concerns of using AI in scientific research: ChatGPT performance evaluation. JMIR Medical Education, 9, e47049.

- Khosravi, T., Rahimzadeh, A., Motallebi, F., Vaghefi, F., Mohammad Al Sudani, Z., & Oladnabi, M. (2024). The performance of GPT-3.5 and GPT-4 on genetics tests at PhD-level: GPT-4 as a promising tool for genomic medicine and education. Journal of Clinical and Basic Research, 8(4), 22–26. Available online: https://jcbr.goums.ac.ir/article-1-476-en.html (accessed on 7 October 2025).

- Kipp, M. (2024). From GPT-3.5 to GPT-4.o: A leap in AI’s medical exam performance. Information, 15(9), 543.

- Koć-Januchta, M. M., Schönborn, K. J., Tibell, L. A. E., Chaudhri, V. K., & Heller, H. C. (2020). Engaging with biology by asking questions: Investigating students’ interaction and learning with an artificial intelligence-enriched textbook. Journal of Educational Computing Research, 58(6), 1190–1224.

- Kozeracki, C. A., Carey, M. F., Colicelli, J., & Levis-Fitzgerald, M. (2006). An intensive primary-literature–based teaching program directly benefits undergraduate science majors and facilitates their transition to doctoral programs. CBE—Life Sciences Education, 5(4), 340–347.

- Lahat, A., Sharif, K., Zoabi, N., Patt, Y. S., Sharif, Y., Fisher, L., Shani, U., Arow, M., Levin, R., & Klang, E. (2024). Assessing generative pretrained transformers (GPT) in clinical decision-making: Comparative analysis of GPT-3.5 and GPT-4. Journal of Medical Internet Research, 26, e54571.

- Lin, J., & Ngiam, K. Y. (2023). How data science and AI-based technologies impact genomics. Singapore Medical Journal, 64(1), 59–66.

- Liu, M., Okuhara, Y., Chang, X., Shirabe, R., Nishiie, Y., Okada, H., & Kiuchi, T. (2024). Performance of ChatGPT across different versions in medical licensing examinations worldwide: Systematic review and meta-analysis. Journal of Medical Internet Research, 26, e60807.

- Martínez, E. (2025). Re-evaluating GPT-4’s bar exam performance. Artificial Intelligence and Law, 33, 581–604.

- Melisa, R., Ashadi, A., Triastuti, A., Hidayati, S., Salido, A., Ero, P. E. L., Marlini, C., Zefrin, Z., & Al Fuad, Z. (2025). Critical thinking in the age of AI: A systematic review of AI’s effects on higher education. Educational Process International Journal, 14, e2025031.

- Michel-Villarreal, R., Vilalta-Perdomo, E., Salinas-Navarro, D. E., Thierry-Aguilera, R., & Gerardou, F. S. (2023). Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Education Sciences, 13(9), 856.

- Moharreri, K., Ha, M., & Nehm, R. H. (2014). EvoGrader: An online formative assessment tool for automatically evaluating written evolutionary explanations. Evolution: Education and Outreach, 7(1), 15.

- Mukhiddinov, M., & Kim, S.-Y. (2021). A systematic literature review on the automatic creation of tactile graphics for the blind and visually impaired. Processes, 9(10), 1726.

- Nasution, N. E. A. (2023). Using artificial intelligence to create biology multiple choice questions for higher education. Agricultural and Environmental Education, 2(1), em002.

- Nazari, N., Shabbir, M. S., & Setiawan, R. (2021). Application of artificial intelligence powered digital writing assistant in higher education: Randomized controlled trial. Heliyon, 7(5), e07014.

- Nikolopoulou, K. (2024). Generative artificial intelligence in higher education: Exploring ways of harnessing pedagogical practices with the assistance of ChatGPT. International Journal of Changes in Education, 1(2), 103–111.

- National Science Foundation and the American Association for the Advancement of Science. (2009). Vision and change in undergraduate biology education: A call to action. Available online: https://www.aaas.org/sites/default/files/content_files/VC_report.pdf (accessed on 7 October 2025).

- Pan, M., Guo, K., & Lai, C. (2024). Using artificial intelligence chatbots to support English-as-a-foreign language students’ self-regulated reading. RELC Journal, 1–13.

- Perez-Lopez, R., Laleh, N. G., Mahmood, F., & Kather, J. N. (2024). A guide to artificial intelligence for cancer researchers. Nature Reviews Cancer, 24(6), 427–441.

- Rudolph, J. (2023). War of the chatbots: Bard, Bing Chat, ChatGPT, Ernie and beyond. The new AI gold rush and its impact on higher education. Journal of Applied Learning and Teaching, 6(1), 364–389.

- Sajja, R., Sermet, Y., Cikmaz, M., Cwiertny, D., & Demir, I. (2024). Artificial intelligence-enabled intelligent assistant for personalized and adaptive learning in higher education. Information, 15(10), 596.

- Salloum, S. A. (2024). AI perils in education: Exploring ethical concerns. In Artificial intelligence in education: The power and dangers of ChatGPT in the classroom (Vol. 144, pp. 669–675). Springer Nature.

- Schön, E.-M., Neumann, M., Hofmann-Stölting, C., Baeza-Yates, R., & Rauschenberger, M. (2023). How are AI assistants changing higher education? Frontiers in Computer Science, 5, 1208550.

- Selvam, A. A. A. (2024). Exploring the impact of artificial intelligence on transforming physics, chemistry, and biology education. Journal of Science with Impact, 2.

- Stribling, D., Xia, Y., Amer, M. K., Graim, K. S., Mulligan, C. J., & Renne, R. (2024). The model student: GPT-4 performance on graduate biomedical science exams. Scientific Reports, 14(1), 1278.

- Sun, Y., Sheng, D., Zhou, Z., & Wu, Y. (2024). AI hallucination: Towards a comprehensive classification of distorted information in artificial intelligence-generated content. Humanities and Social Sciences Communications, 11(1), 1–14.

- Thomas, S., Abraham, A., Baldwin, J., Piplani, S., & Petrovsky, N. (2022). Artificial intelligence in vaccine and drug design. In N. J. Clifton (Ed.), Vaccine design: Methods and protocols, volume 1. Vaccines for human diseases (pp. 131–146). Springer US.

- Walters, W. H. (2023). The effectiveness of software designed to detect AI-generated writing: A comparison of 16 AI text detectors. Open Information Science, 7(1), e001568-268.

- Walters, W. H., & Wilder, E. I. (2023). Fabrication and errors in the bibliographic citations generated by ChatGPT. Scientific Reports, 13(1), 14045.

- Watkins, M. (2025). AI-powered reading assistants: A tool for equity in first-year writing. In Rethinking writing education in the age of generative AI (1st ed.). Routledge.

- Xu, W., Meng, J., Raja, S. K. S., Priya, M. P., & Devi, M. K. (2023). Artificial intelligence in constructing personalized and accurate feedback systems for students. International Journal of Modeling, Simulation, and Scientific Computing, 14(01), 2341001.

- Yeadon, W., Peach, A., & Testrow, C. (2024). A comparison of human, GPT-3.5, and GPT-4 performance in a university-level coding course. Scientific Reports, 14, 23285.

- Zhai, C., & Wibowo, S. (2023). A systematic review on artificial intelligence dialogue systems for enhancing English as foreign language students’ interactional competence in the university. Computers and Education: Artificial Intelligence, 4, 100134.

| Assessment | Entity | Average Score (Images Included) | Average Score (Images Excluded) | p-Value (Included vs. Excluded) |
|---|---|---|---|---|
| P-set | Students | 86.6% | 87.9% | not significant |
| P-set | GPT-4 | 64.2% | 84.6% | 0.03 |
| P-set | GPT-3.5 | 63.7% | 90.8% | <0.01 |
| P-set | Bing | 62.1% | 84.4% | <0.01 |
| P-set | Bard | 60.7% | 71.6% | not significant |
| Exam | Students | 85.1% | 85.4% | not significant |
| Exam | GPT-4 | 65.4% | 91.6% | <0.01 |
| Exam | GPT-3.5 | 68.0% | 93.1% | <0.01 |
| Exam | Bing | 56.3% | 77.0% | <0.01 |
| Exam | Bard | 66.8% | 73.3% | not significant |

| Assessment | Entity | Average Score (Images Excluded) | p-Value (vs. Students) |
|---|---|---|---|
| P-set | Students | 87.9% | |
| P-set | GPT-3.5 | 90.8% | not significant |
| P-set | GPT-4 | 84.6% | not significant |
| P-set | Bing | 84.4% | not significant |
| P-set | Bard | 71.6% | <0.01 |
| Exam | Students | 85.4% | |
| Exam | GPT-3.5 | 93.1% | 0.03 |
| Exam | GPT-4 | 91.6% | not significant |
| Exam | Bing | 77.0% | not significant |
| Exam | Bard | 73.3% | 0.01 |

| Bloom Level | Images | Bing | Bard | GPT-3.5 | GPT-4 | Students |
|---|---|---|---|---|---|---|
| Level 1 | Included | 70.2% | 69.0% | 92.0% | 90.8% | 85.0% |
| Level 2 | Included | 83.8% | 82.6% | 96.1% | 94.4% | 86.7% |
| Level 3 | Included | 58.4% | 59.4% | 68.4% | 60.3% | 84.3% |
| Level 4 | Included | 21.6% | 45.4% | 13.2% | 22.6% | 88.5% |
| Level 1 | Excluded | 77.7% | 75.0% | 100.0% | 98.4% | 87.0% |
| Level 2 | Excluded | 86.6% | 82.0% | 99.3% | 97.5% | 86.5% |
| Level 3 | Excluded | 72.9% | 64.6% | 92.9% | 73.2% | 84.2% |
| Level 4 | Excluded | 58.0% | 43.3% | 32.0% | 70.0% | 86.2% |

| Entity | Score (Images Included) | Letter Grade | Score (Images Excluded) | Letter Grade |
|---|---|---|---|---|
| Bing | 60.1% | D− | 76.0% | C |
| Bard | 67.9% | D | 73.0% | C |
| GPT-3.5 | 72.6% | C− | 92.4% | A− |
| GPT-4 | 72.3% | C− | 92.1% | A− |
| Students | 86.4% | B | 86.7% | B |

| Rank | Entity | Average Exam Score (Images Excluded) | Statistical Significance Between Entities |
|---|---|---|---|
| 1 | GPT-3.5 | 93.1% | Higher than 3 entities (Students, Bard, Bing); Same as 1 entity (GPT-4) |
| 2 | GPT-4 | 91.6% | Higher than 2 entities (Bard, Bing); Same as 2 entities (GPT-3.5, Students) |
| 3 | Students | 85.4% | Higher than 1 entity (Bard); Same as 2 entities (GPT-4, Bing); Lower than 1 entity (GPT-3.5) |
| 4 | Bing | 77.0% | Same as 2 entities (Students, Bard); Lower than 2 entities (GPT-3.5, GPT-4) |
| 5 | Bard | 73.3% | Same as 1 entity (Bing); Lower than 3 entities (GPT-3.5, GPT-4, Students) |
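The last column of the ranking table follows mechanically from the pairwise outcomes. The Python sketch below encodes only what the table itself reports (the exam averages and the four pairs listed as "Same as") and rebuilds each entity's "Higher than / Same as / Lower than" lists. It does not rerun any statistical test, and the helper name `relations` and the constants `SCORES` and `NOT_SIGNIFICANT` are hypothetical.

```python
from itertools import combinations

# Exam averages (images excluded), copied from the ranking table.
SCORES = {"GPT-3.5": 93.1, "GPT-4": 91.6, "Students": 85.4, "Bing": 77.0, "Bard": 73.3}

# Pairs the table reports as "Same as"; every other pair is treated as significantly different.
NOT_SIGNIFICANT = {
    frozenset({"GPT-3.5", "GPT-4"}),
    frozenset({"GPT-4", "Students"}),
    frozenset({"Students", "Bing"}),
    frozenset({"Bing", "Bard"}),
}


def relations(scores: dict[str, float], same: set[frozenset[str]]) -> dict[str, dict[str, list[str]]]:
    """For each entity, list whom it scored significantly higher than, the same as, or lower than."""
    out = {name: {"higher": [], "same": [], "lower": []} for name in scores}
    for a, b in combinations(scores, 2):
        if frozenset({a, b}) in same:
            out[a]["same"].append(b)
            out[b]["same"].append(a)
        elif scores[a] > scores[b]:
            out[a]["higher"].append(b)
            out[b]["lower"].append(a)
        else:
            # b scored higher (no ties occur among these averages).
            out[b]["higher"].append(a)
            out[a]["lower"].append(b)
    return out


if __name__ == "__main__":
    table = relations(SCORES, NOT_SIGNIFICANT)
    for rank, name in enumerate(sorted(SCORES, key=SCORES.get, reverse=True), start=1):
        r = table[name]
        print(rank, name, SCORES[name], "higher:", r["higher"], "same:", r["same"], "lower:", r["lower"])
```

Keeping the pairwise outcomes as data, rather than hand-writing each cell, makes it easy to confirm that the column is internally consistent: every "Higher than" entry for one entity appears as a "Lower than" entry for the other.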

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rai, M.E.; Ngaw, M.; Nannas, N.J. Artificial Intelligence Performance in Introductory Biology: Passing Grades but Poor Performance at High Cognitive Complexity. Educ. Sci. 2025, 15, 1400. https://doi.org/10.3390/educsci15101400
