Abstract

This study examines GPT usage in university-level programming education, focusing on patterns and correlations in students’ learning behaviors. A survey of 438 students from four universities was conducted to analyze their adoption of AI, learning dispositions, and behavioral patterns. The research aimed to understand the current state of GPT adoption and the connection between student learning approaches and their use of these technologies. Findings show that while a majority of students use GPT applications, the frequency and depth of this engagement vary significantly. Students who favor self-directed learning tend to leverage this technology more for personalized learning and self-assessment. Conversely, students more accustomed to traditional teaching methods use it more conservatively. The study identified four distinct learner groups through cluster analysis, each with unique interaction styles. Furthermore, a correlation analysis indicated that learning orientations, such as Technology-Driven Learning and intrinsic motivation, are positively associated with more frequent and effective GPT use.

1. Introduction

The integration of artificial intelligence (AI) in education (Zhai et al., 2021) has profoundly transformed traditional teaching and learning methodologies, offering unprecedented opportunities for personalized, efficient, and adaptive learning environments. Recent studies have highlighted the pivotal role of AI in enhancing student engagement, optimizing teaching strategies, and bridging the gap between theoretical knowledge and practical application (Crompton & Burke, 2023; Ilieva et al., 2023). For instance, research has explored how AI tools can align educational outcomes with the evolving demands of the digital economy through personalized feedback, adaptive assessments, and self-directed learning (Crompton & Burke, 2023; Roldán-Álvarez & Mesa, 2024).

In higher education, the integration of AI and digital tools has become a focal point, offering institutions the ability to meet diverse student needs, accommodate various learning styles, and promote critical thinking skills that are crucial for learners in the 21st century (Ardito et al., 2021; Guan et al., 2020; Maqableh & Jaradat, 2021). While research has shown that AI can support personalized feedback and adaptive assessments, few studies have investigated how these benefits are mediated by student characteristics such as learning styles or behavioral tendencies (Roldán-Álvarez & Mesa, 2024). Therefore, the purpose of this study is to fill this gap by analyzing how students’ learning behaviors and profiles relate to their use of AI tools.

To address this gap, this study pursues two primary research objectives:

- To identify and classify the different learner types currently in universities. This objective focuses on categorizing students based on their behavioral patterns, engagement levels, and preferences. The resulting classification provides a framework for understanding how different students approach learning in the context of AI tools like GPT.

- To examine the relationship between these learner types and their GPT usage behaviors. Building upon the first objective, this goal is to explore how the identified learner types interact with GPT tools, specifically investigating how their unique learning dispositions influence the adoption and use of these technologies.

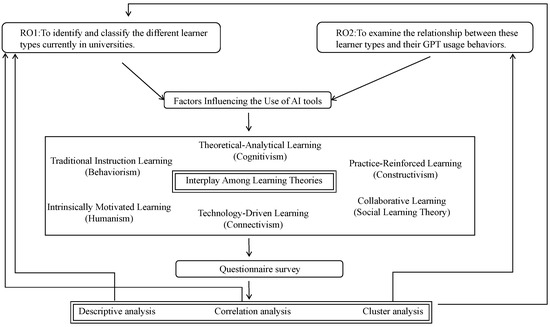

To achieve these objectives, we employed a combination of survey-based data collection and advanced statistical techniques (as shown in Figure 1). Our first objective was met through a detailed questionnaire designed to assess six learning orientations based on established educational theories (behaviorism, cognitivism, constructivism, social learning theory, connectivism, and humanism). The responses were then analyzed using cluster analysis, which successfully identified four distinct learner profiles: Balanced Learners, High-Performing Learners, Low-Engagement Learners, and Practice-Oriented Learners.

Figure 1.

Overall framework based on six theories, combined with a survey regarding respondents’ AI tools usage for programming learning in university.

Our second objective was subsequently realized through a cross-cluster analysis. By examining the relationships between the identified learner types and their engagement with GPT tools, we uncovered distinct patterns of AI usage for each group, particularly in tasks such as code generation, debugging, and optimization. This analysis provided deeper insights into how various learner types interact with AI technologies like GPT, offering a nuanced understanding of AI tool usage in higher education.

These methods enabled us to achieve both research objectives by examining learner diversity and their corresponding AI usage behaviors. Unlike previous studies focusing on the technical aspects of GPT tools (Kikalishvili, 2023; Saif et al., 2024), this research explores how GPT can be tailored to meet the needs of diverse learners, enhancing the personalization and effectiveness of educational strategies in higher education. The findings offer actionable recommendations for administrators and policymakers on integrating GPT tools into teaching practices, supporting the digital transformation of education.

2. Literature Review

2.1. Factors Shaping AI Tool Adoption in Programming Education

The adoption of AI tools in educational environments is influenced by multiple factors, including gender, academic background, technological familiarity, subject knowledge, and social education background (Delcker et al., 2024; Kolade et al., 2024), and understanding these factors is critical for designing effective AI-enhanced learning environments (Alshammari & Alshammari, 2024). It is also worth noting that other psychological characteristics, such as student attitudes, are an important influencing factor (Groothuijsen et al., 2024). In addition, technological skepticism has also been confirmed as an important personal trait that affects the acceptance of new technology, a factor that must be considered (Oprea et al., 2024).

Gender has been identified as a significant variable. While some studies suggest that socially constructed gender roles may influence technology self-efficacy (Huffman et al., 2013), the focus in contemporary educational design is shifting. Rather than emphasizing differences, the imperative is to create inclusive AI-powered tools that effectively support a diverse student body, ensuring equitable engagement and learning outcomes for all.

Recent research shows significant disparities in AI tool adoption and adaptation rates across different disciplines (Parviz, 2024). Students from different academic disciplines show varying degrees of dependence on AI tools, influenced by the nature of the discipline and course requirements. Students in STEM (Science, Technology, Engineering, and Mathematics) fields, especially those majoring in Computer Science and Data Science, are typically exposed to AI tools earlier and can seamlessly integrate these technologies into their learning and research. In contrast, students in the Humanities and Social Sciences often rely on specific learning contexts and course guidance to effectively use AI tools (Fošner, 2024). This difference underscores the importance of aligning AI tool integration with the specific needs of each discipline, especially when considering varying teaching methods and skill requirements across fields.

The academic background and prior learning experiences of students significantly affect their engagement with AI tools. Research has shown that students with a stronger academic foundation are more likely to systematically apply AI in research-related tasks, such as using GPT-4 for experimental code generation (Poldrack et al., 2023). Conversely, students who focus on practical applications, such as those in the engineering fields, tend to use AI for tasks like writing FMEA (Failure Mode and Effects Analysis) reports and optimizing risk assessments through iterative dialogue with AI tools like GPT-4 (OpenAI et al., 2024). Furthermore, interdisciplinary students show unique patterns in their tool usage, often combining AI with other specialized technologies.

Students’ social and educational backgrounds also influence their use of AI. Research indicates that those from higher-education backgrounds tend to be exposed to digital technologies earlier, shaping their positive attitudes toward AI (Hwang et al., 2020). Additionally, students from resource-rich schools benefit from technology training and support, enabling them to use AI tools more effectively (Williamson et al., 2023).

Peer influence and the exchange of ideas within learning communities further motivate students to explore AI tools. Collaboration with peers can enhance AI tool adoption and stimulate interest in AI, as students learn from each other and share experiences (Hodges et al., 2022; Resnick, 2017).

2.2. A Review of Classic Learning Theories in AI Research

Bibliometric data indicates a growing trend in the publication volume of research at the intersection of AI and educational theory over the past five years (Ivanashko et al., 2024; Khalil et al., 2023). In a similar vein, X. Chen et al. (2022) analyzed two decades of AI in education, highlighting the significant contributions of AI to the educational landscape. However, existing studies tend to focus on single theoretical perspectives (L. Chen et al., 2020), often confined to specific theoretical frameworks, resulting in a lack of systematic comparison. This theoretical fragmentation undermines the holistic understanding of learning behaviors, limiting the comprehensive explanation of complex learning phenomena.

In reality, students’ learning behaviors and decisions are often the result of dynamic coupling between multiple theoretical mechanisms. To overcome this limitation, this paper draws upon a wealth of literature from the fields of educational psychology and learning sciences, integrating six classic learning paradigms supported by interdisciplinary theoretical foundations. Building on a systematic review of traditional theoretical systems, such as behaviorism, cognitivism, and constructivism, this paper proposes a multidimensional discussion framework aimed at fostering a more comprehensive understanding of complex learning behaviors.

To provide a clear comparative overview, Table 1 synthesizes the core principles of these six theories, their associated learner characteristics, and their distinct applications in AI-driven educational environments.

Table 1.

Six Learning Theories and Their Application in AI Environments.

2.2.1. Behaviorism

Behaviorism views learning as a conditioned response to external stimuli, emphasizing the reinforcement of behavior through repetition and feedback. Students guided by behaviorist principles tend to perform best in structured environments, particularly when clear instructions and timely feedback are provided (Koedinger et al., 2012). In such environments, students can strengthen their skills through repetitive practice, with automated feedback mechanisms being crucial for their learning (Hattie & Timperley, 2007).

In engineering education, for example, these students often use AI tools to manage repetitive tasks such as programming exercises. In this context, the automated feedback provided by AI helps standardize procedures and enhance skill development. Moreover, studies have compared the effectiveness of different tutoring systems in educational contexts, highlighting the potential of AI-based intelligent tutoring systems to support student learning, particularly when tailored to provide feedback on specific skills or tasks (VanLehn, 2011).

2.2.2. Cognitivism

Cognitivism focuses on the internalization of knowledge and the development of cognitive structures (Piaget, 1977). Cognitivism-oriented students prioritize logical reasoning and the organization of knowledge. When using GPT tools, they often leverage features like knowledge graphs and self-analysis to deepen their understanding of complex concepts (Cherukunnath & Singh, 2022). This approach enables them to systematically internalize and apply theoretical knowledge in practical contexts.

2.2.3. Constructivism

Constructivist theory posits that knowledge is actively constructed through social interaction and contextual practice (Cole et al., 1978). As Lave (1991) further emphasizes, learning is situated within specific contexts, and learners actively engage in “legitimate peripheral participation” in communities of practice. This theoretical framework highlights three core characteristics of learners:

- Project-Based Learning (PBL): Learners are inclined to adopt this method to construct their knowledge system. This problem-oriented teaching model has been shown to significantly promote deep learning (Hmelo-Silver & DeSimone, 2013). PBL fosters an environment where students actively engage with real-world problems, applying their knowledge to complex, hands-on scenarios.

- Experiential Learning Cycle: Learners follow an iterative cycle of concrete experience, reflective observation, abstract conceptualization, and active experimentation to develop their competencies (Kolb, 2014). This cyclical model emphasizes the importance of engaging with material in a personal, hands-on manner, allowing learners to refine their understanding through action and reflection.

- Collaborative Knowledge Construction: Constructivist learning emphasizes the social aspect of knowledge construction. In collaborative learning settings, learners work together to solve problems, fostering the development of higher-order thinking skills and cognitive growth (Lave, 1991).

In the context of engineering education, constructivist learners exhibit distinctive patterns of technology application. They effectively integrate GPT tools to tackle complex engineering problems, demonstrating an evolving approach to technology integration in learning environments. For instance, recent research has explored how AI-based tools such as ChatGPT are challenging traditional blended learning methodologies in engineering education, enhancing engagement and deepening understanding in mathematics (Sánchez-Ruiz et al., 2023). This reflects a growing trend where learners not only benefit from AI tools used for immediate feedback but also utilize them in collaborative environments to solve complex problems, illustrating the intersection of constructivist theory with cutting-edge technological tools in modern education.

2.2.4. Social Learning Theory

Social learning theory emphasizes learning through observation and interaction (Bandura & Walters, 1977; Locke, 1987). In the learning process, students with a social learning orientation deepen their understanding of knowledge by observing others’ problem-solving processes, participating in group discussions (Peungcharoenkun & Waluyo, 2024), and engaging in collective collaboration. AI tools, by facilitating teamwork and communication, provide scaffolding (Chiu et al., 2024) that helps students collaborate more effectively in learning. For instance, GPT can be applied in collaborative writing during group discussions or serve as a bridge for information sharing, promoting knowledge exchange among students (Atman Uslu et al., 2022; Cao et al., 2021; Kasneci et al., 2023).

2.2.5. Connectivism

Connectivism defines learning as a cognitive process of establishing connections within dynamic information networks (Siemens, 2004). The theory includes three core propositions: first, knowledge construction is achieved through distributed cognition within an individual’s Personal Learning Network (PLN), where learners connect diverse nodes to acquire heterogeneous knowledge and develop critical decision-making abilities (Downes, 2022). Second, in the era of information overload, metacognitive abilities are reflected in the dynamic filtering and optimization of knowledge sources rather than simple information storage (Bell, 2011). Third, the rapid pace of technological knowledge iteration demands that learners acquire knowledge navigation skills, which have now surpassed the traditional focus on content mastery (Bell, 2010). Connectivism-oriented students utilize AI tools to connect information across various domains, integrating multiple resources to construct their knowledge systems. By accessing diverse information sources, they continuously update their understanding of cutting-edge knowledge and engage in interdisciplinary learning. The powerful information processing and cross-platform integration features of GPT tools (Kasneci et al., 2023) enable such learners to efficiently integrate knowledge from various fields, addressing complex learning tasks.

2.2.6. Humanism

Humanist theory emphasizes the self-actualization and growth of the individual, advocating that human potential should be fully realized (Maslow, 1943). Within the framework of humanist educational philosophy, learning is not merely the acquisition of knowledge but, more importantly, the promotion of students’ holistic development, especially in emotional, motivational, and personal goal-oriented growth (Deci & Ryan, 2000).

Students guided by humanist principles tend to focus not only on whether the learning process can support their academic needs but also on whether it can cater to their personalized needs, interests, and life goals (Merriam & Bierema, 2013). Consequently, when selecting and using AI tools, these students prioritize whether the tools will support their personal growth and aspirations rather than simply transmitting knowledge or enhancing skills (Luan & Tsai, 2021). These students can leverage AI tools to gain personalized learning experiences, making learning content and methods more aligned with their interests and abilities (Verner et al., 2020).

For example, GPT-based tools can provide personalized feedback and suggestions based on students’ learning progress, interests, and emotional needs, helping them achieve a sense of accomplishment and satisfaction in areas of personal interest.

Moreover, humanism-oriented students value self-reflection and self-assessment. They typically seek support and guidance in their learning process but avoid excessive intervention or constraints. The flexibility and adaptability of GPT tools allow students to adjust learning strategies according to their personal needs, thereby gaining greater control over their learning process. This approach to autonomous learning helps students maintain intrinsic motivation while striving towards their personal goals, ultimately achieving self-actualization.

2.3. Interplay Among Learning Theories

When exploring AI-driven learning analytics frameworks, the six major learning theories—behaviorism, cognitivism, constructivism, social learning theory, connectivism, and humanism—each provide unique perspectives to explain the learning process. These theories are interconnected yet distinct, collectively forming the theoretical foundation for understanding complex learning phenomena. Behaviorism (Skinner, 1938) emphasizes behavioral changes through external stimuli and reinforcement, focusing on the impact of the external environment on learning. This contrasts with cognitivism (Ausubel, 1968), which focuses more on internal cognitive processes such as information processing and memory. Together, they explain the micro-mechanisms of learning, but behaviorism overlooks the learner’s internal psychological processes, while cognitivism emphasizes the learner’s agency. Constructivism (Piaget, 1954) further extends the cognitivist perspective, emphasizing that learning is an active process of knowledge construction through interaction with the environment. It is closely related to social learning theory (Bandura & Walters, 1977), both of which emphasize the social and contextual nature of learning. However, constructivism focuses more on individual knowledge construction, while social learning theory emphasizes observation and imitation. Connectivism (Siemens, 2004), from a technology-driven perspective, emphasizes that learning is the process of building knowledge networks, with knowledge distributed across nodes in the network. It shares similarities with situated cognition but focuses more on the role of technology in learning. Humanism (Maslow, 1943) approaches learning from the perspective of intrinsic motivation and personalized development, emphasizing that learning is a process of self-actualization for the learner. It shares similarities with constructivism but focuses more on the learner’s emotions and motivations. 
The diversity and complementarity of these theories provide an important theoretical basis for classifying students with different learning tendencies.

It is worth highlighting that no single theory can fully capture the complexity of learning, particularly in AI-driven environments, for two reasons. First, the six learning theories explain the learning process from different angles, covering multiple levels from micro (behaviorism, cognitivism, humanism) to macro (constructivism, social learning theory, connectivism). Second, in AI-driven learning environments, learners’ behaviors, cognition, social interactions, and technology use are intertwined, so multidimensional theoretical support is needed to understand the learning process more comprehensively. Although these theories differ in their core perspectives, they are not entirely opposed but rather complementary. For example, behaviorism and cognitivism can explain the micro-mechanisms of learning, while constructivism and social learning theory can explain the social aspects of learning. Through integrative frameworks such as Vygotsky’s activity theory (Engeström, 2018), these theories can be coordinated on a single platform, ensuring conceptual consistency and logical coherence.

The contributions of these theories to the classification of learning tendencies are reflected in multiple aspects. Behaviorism is suitable for students who tend to learn through external feedback and reinforcement. These students may rely more on the immediate feedback and reward mechanisms provided by GPT tools. Cognitivism is suitable for students who tend to learn through logical reasoning and information processing. These students may be more adept at using GPT tools for data analysis and pattern recognition. Constructivism is suitable for students who tend to learn through active exploration and knowledge construction. These students may benefit more from AI-driven exploratory learning environments. Social learning theory is suitable for students who tend to learn through observing and imitating others. These students may rely more on AI-driven collaborative learning tools. Connectivism is suitable for students who tend to acquire and share knowledge through technological networks. These students may be more adept at using AI-driven knowledge network tools and may benefit more from AI-driven simulations and virtual environments. Humanism is suitable for students who tend to learn through intrinsic motivation and personalized development. These students may rely more on AI-driven personalized recommendation and feedback systems.

2.4. Six Core Learner Orientations for the AI Era

This study defines learning tendencies based on six theories, as shown in Figure 1, with the questionnaire carefully designed and its orientations named to better reflect the specific learning characteristics of students. While the original six theories cover a range of learning behaviors, the newly defined learning tendencies, such as “Traditional Instruction” and “Technology-Driven”, more accurately capture students’ preferences in these fields, refining the research focus and expanding its scope. This approach also helps translate the research into actionable teaching strategies.

2.4.1. Traditional Instruction Learning (Behaviorism)

Traditional Instruction learners tend to achieve optimal results in structured teaching processes and standardized training. They rely on clear goal setting, feedback mechanisms, and rewards, emphasizing external stimuli and reinforcement. Research shows that behaviorism emphasizes shaping learning behaviors through external incentives (Skinner, 1938). Many AI applications, particularly adaptive learning systems, have been shown to provide timely feedback and personalized learning paths, aligning closely with behaviorist principles (Baker & Siemens, 2014). For example, AI-based learning platforms adjust the difficulty of learning content based on student performance, promoting knowledge acquisition and retention.

2.4.2. Theoretical–Analytical Learning (Cognitivism)

Theoretical–Analytical learners tend to understand and master complex theories through deep thinking, information processing, and data analysis. Cognitivism emphasizes internal cognitive processes, such as information processing, storage, and retrieval (Ausubel, 1968). Research shows that AI can help students identify patterns and trends in data through analytical tools, bridging theory and practice. For example, AI-driven learning platforms provide case studies and real-world data, helping learners analyze complex problems to deepen their theoretical understanding.

2.4.3. Practice-Reinforced Learning (Constructivism)

Practice-Reinforced learners focus on deepening understanding through hands-on practice, project-based learning, and contextual application, which aligns closely with constructivist perspectives. Research shows that constructivism emphasizes two fundamental processes: (1) active knowledge construction through situated experiences (Piaget, 1954), and (2) cognitive development via authentic application of learned concepts (Lave, 1991). This dual emphasis aligns with Vygotsky’s assertion that meaningful learning occurs at the intersection of social interaction and practical implementation (Cole et al., 1978). Studies have shown that AI-based simulations and virtual practice environments enable learners to experiment in risk-free settings, enhancing practical skills (Grieves & Vickers, 2017). This approach not only improves learning efficiency but also deepens understanding of complex concepts.

2.4.4. Collaborative Learning (Social Learning Theory)

Collaborative learners emphasize group interaction and knowledge sharing, preferring to solve problems collectively, making the learning process more social. Social learning theory posits that learning occurs not only within individuals but also through observing and imitating others (Bandura & Walters, 1977). Studies show that collaborative learning enhances information sharing and innovation within groups (Johnson & Johnson, 2009). Many GPT tools, such as group dynamics analysis tools, have been proven to monitor and optimize group interactions, providing personalized recommendations to enhance effective collaboration among members (Ouyang & Zhang, 2024; Wise & Schwarz, 2017).

2.4.5. Technology-Driven Learning (Connectivism)

Technology-driven learners excel at using technology and adaptive systems to personalize their learning experiences, valuing online resources and intelligent tools to enhance learning outcomes. Connectivism views learning as occurring within vast network systems, where knowledge is formed through connections and interactions (Downes, 2022). Research demonstrates that AI-driven adaptive learning platforms analyze learner behavior and data to dynamically adjust content and recommend pathways, improving learning effectiveness and efficiency (Gligorea et al., 2023). This approach allows learners to select materials based on their interests and progress, promoting personalized learning.

2.4.6. Intrinsically Motivated Learning (Humanism)

Intrinsically motivated learners focus on self-directed learning and personal interests, striving to achieve self-actualization and personal growth through learning. Humanism suggests that individual learning motivation stems from intrinsic needs for self-actualization and growth (Rogers, 1969). Research indicates that intrinsic motivation is a key factor in effective learning, significantly enhancing learner engagement and quality (Ryan & Deci, 2020). AI-based learning analytics tools track learners’ motivational changes and recommend customized content based on their interests, maintaining high levels of motivation. These tools detect emotional changes and provide motivational feedback to enhance the learning drive.

3. Methodology

This study employs a quantitative survey-based approach to analyze students’ GPT usage behaviors in programming education. The investigation is framed by a theoretical framework integrating six learning theories: behaviorism, cognitivism, constructivism, social learning, connectivism, and humanism. This section details the research design, participant selection, data collection procedures, measurement instruments, and analytical methods used.

3.1. Research Design and Participants

3.1.1. Sampling Strategy

This study utilized a purposive sampling strategy. The target population was students enrolled in specific programming courses across four universities in China. Contrary to a voluntary response model, participation was integrated as an optional component of the coursework. This approach was chosen to ensure a high response rate and to capture a comprehensive dataset from the intended population, thereby mitigating the self-selection bias often associated with voluntary surveys (Pater et al., 2021). No additional incentives were provided, which reduces the risk of attracting participants who might provide perfunctory or inattentive responses purely for a reward (Head, 2009).

3.1.2. Institutional and Participant Characteristics

The selection of the participating institutions was based on a stratified purposive sampling strategy, designed to capture a representative cross-section of China’s diverse higher education landscape within a specific geographical region. The selected institutions include:

- A national key comprehensive university (Anhui University, AU) and its subbranch Stony Brook Institute at Anhui University (SB), representing China’s top-tier, research-intensive academic environment with a highly selective student intake.

- A provincial key university with a specialized focus on applied sciences (Anhui Jianzhu University, AJ), representing a strong, application-oriented engineering context where programming is a critical professional tool.

- An undergraduate college with a primary focus on teaching (Jianghuai College, JH), representing an environment centered on pedagogical support and practical skills development for a broader range of students.

This deliberate stratification allows for a nuanced analysis of how student behaviors might differ across distinct academic cultures, admission standards, and institutional resource levels.

Crucially, this institutional diversity is intentionally paired with strict curricular uniformity to create a robust comparative framework. All participants from AU and JH, regardless of their institution, were enrolled in the same standardized programming course. This course was delivered using an identical textbook, a unified online learning platform, and the same lead instructor. All participants from AJ were enrolled in a different programming course taught by a different instructor. This design strengthens the study in two critical ways: (1) it rigorously controls for confounding variables related to curriculum, teaching style, and resources, and (2) it allows for a more valid comparison of how different institutional contexts and student populations influence the adoption of tools like GPT. By holding the educational intervention constant, we can more clearly isolate and understand the behavioral patterns of learners.

The participants were undergraduates in programming-intensive majors (i.e., Computer Science, AI, Robotics Engineering, Digital Media), ensuring the sample’s direct relevance to the study’s focus.

3.2. Data Collection and Screening

Data were collected anonymously via the professional survey platform Wenjuan.com. To ensure the quality and validity of the collected data, a rigorous screening process was implemented, serving as the primary participant filtering standard.

- Initial Collection: An initial dataset of 438 responses was gathered. The response rates across the three institutions were consistently high and showed no significant differences, ensuring a balanced dataset from each academic context.

- Data Screening: We applied a pre-defined exclusion criterion to filter out inattentive or invalid responses. Following established data quality protocols, any survey where the standard deviation (std) of the responses across all Likert-scale items was less than 0.5 was discarded. This objective measure helps identify participants who likely did not respond thoughtfully (e.g., by selecting the same answer for all questions).

- Final Sample: This filtering process led to the removal of 140 responses. A final valid sample of 298 was retained for analysis.
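The screening rule above can be sketched in a few lines of pandas. This is a minimal illustration rather than the authors' script: the function name `screen_responses`, the toy data, and the use of the sample standard deviation (pandas' default, `ddof=1`) are assumptions, since the text states only the 0.5 cutoff.

```python
import pandas as pd

STD_THRESHOLD = 0.5  # exclusion cutoff stated in the screening rule


def screen_responses(likert: pd.DataFrame, threshold: float = STD_THRESHOLD) -> pd.DataFrame:
    """Drop respondents whose Likert answers vary less than `threshold`."""
    # Assumption: sample standard deviation (ddof=1, the pandas default);
    # the source does not say which estimator was used.
    row_std = likert.std(axis=1)
    return likert[row_std >= threshold]


# Hypothetical toy data: three respondents answering six Likert items.
toy = pd.DataFrame(
    [
        [3, 3, 3, 3, 3, 3],  # identical answers, std = 0
        [1, 5, 2, 4, 3, 5],  # varied answers
        [4, 4, 4, 4, 4, 5],  # almost constant, std is about 0.41
    ],
    index=["straight_liner", "varied", "near_constant"],
    columns=[f"Q{i}" for i in range(4, 10)],
)

kept = screen_responses(toy)
print(kept.index.tolist())
```

Only the respondent with varied answers survives in this toy case; both the straight-liner and the near-constant respondent fall below the cutoff.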

3.3. Research Instrument

3.3.1. Instrument Development and Validation

The instrument was developed through a systematic process to ensure its validity. The initial item pool was generated based on an extensive literature review covering the six core learning theories. To establish content validity, the draft questionnaire was reviewed by three experts in education. Their feedback on item clarity, relevance, and coverage was incorporated into subsequent revisions. A pilot test was then conducted with 10 students to refine the instrument further.

3.3.2. Instrument Structure and Reliability

The measurement instrument was a self-developed 48-item questionnaire aimed at assessing programming experience, learning styles, and GPT usage. The final questionnaire consists of multiple-choice questions for demographic data and statements rated on a five-point Likert scale (1 = Strongly Disagree to 5 = Strongly Agree). As detailed in Table 2, items were mapped to six learning orientations: Traditional Instruction (Behaviorism), Practice-Reinforced (Constructivism), Theoretical–Analytical (Cognitivism), Collaborative (Social Learning), Technology-Driven (Connectivism), and Intrinsically Motivated (Humanism). Some items were reverse-coded to mitigate response bias.

Table 2.

Survey Questions on Learning Theories and AI Attitudes. Likert-Scale: a five-point Likert scale was employed in the questionnaire, ranging from 1 (strongly disagree) to 5 (strongly agree).

The internal consistency reliability for the primary scales was assessed using Cronbach’s Alpha. The analysis demonstrated good reliability, with a coefficient of 0.895, indicating that the instrument provides stable and consistent measurements.
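For readers unfamiliar with the statistic, the sketch below shows how reverse-coded items and Cronbach's alpha are typically computed, using the standard formula alpha = k/(k−1) · (1 − Σ item variances / variance of total score). The helper names and the toy matrix are hypothetical; the study's own value of 0.895 was obtained from its survey data, which are not reproduced here.

```python
import numpy as np


def reverse_code(scores: np.ndarray, low: int = 1, high: int = 5) -> np.ndarray:
    """Flip a Likert item so that 1 <-> 5, 2 <-> 4, and 3 stays 3."""
    return (low + high) - scores


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for a (respondents x items) score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1).sum()
    total_variance = items.sum(axis=1).var(ddof=1)
    return (k / (k - 1)) * (1.0 - item_variances / total_variance)


# Hypothetical toy data: five respondents, three items;
# the third item is negatively worded and must be reverse-coded first.
raw = np.array(
    [
        [1, 1, 5],
        [2, 2, 4],
        [3, 3, 3],
        [4, 4, 2],
        [5, 5, 1],
    ],
    dtype=float,
)
coded = raw.copy()
coded[:, 2] = reverse_code(coded[:, 2])

alpha = cronbach_alpha(coded)
print(round(alpha, 3))
```

In this toy matrix the three items agree perfectly after reverse-coding, so alpha reaches its upper bound of 1; real questionnaire data give lower values.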

3.4. Data Analysis

The quantitative data were analyzed using cluster analysis implemented with the tools from the “scikit-learn” library in Python 3.8. This method allowed us to classify students into distinct groups based on their learning orientations and AI usage patterns.

3.5. Limitations and Potential for Bias

While the methodology was designed to be robust, we acknowledge several limitations that may influence the interpretation and generalizability of the findings. (1) Sampling Bias: The sample is a convenience sample of students within specific courses and institutions, not a random sample of all programming students. Therefore, the findings may not be generalizable beyond this specific context. The students’ responses might also be influenced by the specific curriculum, teaching style, or culture of their courses. (2) Respondent Bias: While our data screening filtered out overtly inattentive responses, it cannot completely eliminate social desirability bias or a potential reluctance from students to fully disclose certain learning habits.

3.6. Population Cluster Analysis

Cluster analysis is a widely recognized method in data analysis that categorizes objects into groups based on their similarities, thereby uncovering underlying patterns and distinct characteristics within the data (Duran & Odell, 2013). In educational research, this approach is particularly valuable as it accommodates complex data structures and reveals unique learning behaviors across diverse groups (Saenz et al., 2011). By segmenting the data into multiple subgroups, cluster analysis enables a deeper understanding of the heterogeneity within the dataset, highlighting variations in learning behaviors across different student groups.

The dataset used in the clustering analysis consists of students’ survey responses to Q4 through Q37. These questions span six learning orientations: Traditional Guided Learning, Practical Reinforcement Learning, Theoretical–Analytical Learning, Collaborative Interactive Learning, Technology-Driven Learning, and Intrinsic Motivation Learning. Prior to the application of clustering techniques, the dataset is standardized using the StandardScaler class from the scikit-learn library. This standardization step ensures that each feature contributes equally to the clustering process by transforming the data to have zero mean and unit variance.

K-Means clustering is employed to partition the students into distinct groups based on their learning feature profiles. The number of clusters is determined through a combination of domain knowledge and experimentation, with k = 4 selected for this analysis (James, 2013). The K-Means algorithm works by minimizing the sum of squared distances between data points and their assigned cluster centroids, effectively grouping students with similar learning characteristics together. Following the K-Means clustering process, each student is assigned to a cluster, and the resulting clusters are subsequently interpreted and labeled based on their dominant learning characteristics.
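The standardize-then-cluster pipeline described above might look like the following sketch. It assumes four clusters, matching the four learner groups reported in the results, and substitutes randomly generated 1–5 scores for the real Q4–Q37 responses; the random seed, `n_init`, and all variable names are illustrative choices, not the authors' settings.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

N_STUDENTS = 298  # size of the final valid sample
N_ITEMS = 34      # Q4 through Q37
N_CLUSTERS = 4    # assumption: the four learner groups reported in the results

# Hypothetical stand-in for the survey matrix (random Likert scores, 1..5).
rng = np.random.default_rng(0)
responses = rng.integers(1, 6, size=(N_STUDENTS, N_ITEMS)).astype(float)

# Standardize every item to zero mean and unit variance.
scaler = StandardScaler()
scaled = scaler.fit_transform(responses)

# Partition students; random_state and n_init are illustrative settings.
kmeans = KMeans(n_clusters=N_CLUSTERS, n_init=10, random_state=0)
labels = kmeans.fit_predict(scaled)

# The quantity K-Means minimizes: squared distance to the assigned centroid.
wcss = float(((scaled - kmeans.cluster_centers_[labels]) ** 2).sum())

# Centroids mapped back to the original 1..5 scale for interpretation.
centers = scaler.inverse_transform(kmeans.cluster_centers_)
sizes = np.bincount(labels, minlength=N_CLUSTERS)
print(sizes, round(wcss, 1))
```

Mapping the centroids back to the original scale is what makes cluster means such as those in a feature-mean table directly readable as Likert scores.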

3.7. Correlation Analysis

In this study, a systematic correlation analysis was conducted to explore the relationships between AI-related questions and various learning theories. Correlation analysis provides statistical evidence on the strength and direction of these associations. By recognizing significant correlations, we can better understand which learning theories are most relevant in AI-related contexts, guiding further research and the development of educational frameworks.

The analysis was performed in a series of methodical steps. Initially, each learning theory answer group was consolidated into a single composite score. This was achieved by averaging the values of the individual answers within each learning theory group. The composite scores provided a comprehensive summary of the characteristics of each learning theory group for each participant, enabling a clearer understanding of how these groups interacted with the AI-related questions. The AI-related questions, specifically those from AI-Q1 to AI-Q10, were selected to capture various aspects of participants’ attitudes, experiences, and knowledge regarding AI. These questions served as the dependent variables in the analysis, and their relationships with the feature groups were the focal point of the investigation.

Pearson correlation coefficients were then calculated using pandas.DataFrame.corr for all pairs of variables, including both the feature group scores and AI-related questions. The Pearson correlation coefficient measures the strength and direction of the linear relationship between two variables, with values ranging from −1 (indicating a perfect negative correlation) to +1 (indicating a perfect positive correlation). A coefficient near zero suggests a weak or negligible linear relationship between the variables.

To assess the statistical significance of the observed correlations, p-values were computed. A p-value gives the probability of observing a correlation at least as strong as the measured one if no true linear relationship existed; values below 0.05 are conventionally treated as statistically significant (Sachs, 2012). This step reduces the risk of interpreting correlations that arise from random chance.

Finally, the correlation matrix was annotated with significance stars to highlight the statistical significance of each correlation. A double asterisk (**) indicated a highly significant correlation (p < 0.01), while a single asterisk (*) denoted a moderate level of significance (p < 0.05). This annotation facilitated the identification of the most meaningful relationships in the data.
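The steps above (composite scores, Pearson coefficients, p-values, and star annotation) can be illustrated with a short sketch. It uses `scipy.stats.pearsonr` to obtain each coefficient together with its p-value, which is one common way to get significance levels alongside a pandas correlation matrix; the item names, the synthetic continuous data, and the helper functions are hypothetical.

```python
import numpy as np
import pandas as pd
from scipy.stats import pearsonr


def stars(p: float) -> str:
    """Significance marker: ** for p < 0.01, * for p < 0.05."""
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


def annotated_correlations(composites: pd.DataFrame, ai_items: pd.DataFrame) -> pd.DataFrame:
    """Pearson r (two decimals) plus stars for every composite/AI-item pair."""
    table = pd.DataFrame(index=composites.columns, columns=ai_items.columns, dtype=object)
    for theory in composites.columns:
        for item in ai_items.columns:
            r, p = pearsonr(composites[theory], ai_items[item])
            table.loc[theory, item] = f"{r:.2f}{stars(p)}"
    return table


# Hypothetical synthetic data standing in for the survey answers.
rng = np.random.default_rng(1)
n = 60
base = rng.normal(size=n)
items = pd.DataFrame(
    {
        "TD_1": base + rng.normal(scale=0.5, size=n),
        "TD_2": base + rng.normal(scale=0.5, size=n),
        "IM_1": rng.normal(size=n),
        "IM_2": rng.normal(size=n),
    }
)

# Composite score per learning theory = mean of that theory's items.
composites = pd.DataFrame(
    {
        "Technology-Driven": items[["TD_1", "TD_2"]].mean(axis=1),
        "Intrinsically Motivated": items[["IM_1", "IM_2"]].mean(axis=1),
    }
)
ai_items = pd.DataFrame(
    {
        "AI-Q1": base + rng.normal(scale=1.0, size=n),
        "AI-Q2": rng.normal(size=n),
    }
)

print(annotated_correlations(composites, ai_items))
```

Because many pairs are tested at once, some starred cells can be expected by chance alone; the sketch applies no multiple-comparison correction, and the source does not mention one.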

4. Results

4.1. Questionnaire Reliability and Validity

We used the Statistical Package for the Social Sciences (SPSS) to perform the statistical analysis. The Kaiser–Meyer–Olkin (KMO) value of the questionnaire is 0.815, indicating that the sample is suitable for factor analysis. The Cronbach’s alpha coefficient is 0.895, demonstrating a high level of reliability (Sachs, 2012). Overall, the questionnaire performs well in terms of both reliability and validity, providing a solid foundation for subsequent statistical analysis and ensuring the reliability of the research conclusions.

4.2. Demography of Participants

A survey of 438 students from four universities was conducted, focusing on their use of AI tools, learning dispositions, and behavioral patterns. After filtering invalid responses, 298 of the 438 questionnaires were retained as valid, a 68% validity rate. Key demographic information, such as participants’ major and gender, is presented in Table 3.

Table 3.

Participants’ Distribution of Academic Discipline, and Gender.

The distribution of students across the four participating universities is as follows: At AU, the largest group is from the AI program, with 142 students, accounting for 32.4% of the total sample. The Robotics Engineering program at AU consists of 81 students, representing 18.5% of the total. At AJ University, the Computer Science (CS) program has 93 students, making up 21.2% of the sample. At SB University, the Digital Media (DM) program has 40 students, comprising 9.1%. Finally, at JH University, the Computer Science program includes 82 students, accounting for 18.7% of the sample. Among the 438 respondents, all were third-year students.

Table 3 also presents the distribution of students by gender. Among the 438 respondents, the majority are male, with 312 participants, accounting for 71.2%, while 126 are female, representing 28.8%. This indicates a higher proportion of male students in the sample, which may be attributed to the gender distribution characteristics of the technical and engineering disciplines represented by the respondents.

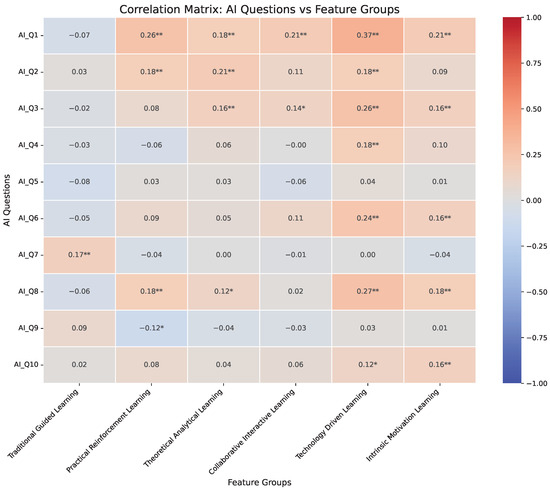

4.3. Correlation Between GPT Usage and Learning Theory

A correlational analysis was conducted to examine the relationships between learning styles and various aspects of GPT tool adoption in programming. Pearson correlation coefficients (r) were computed, with significance levels set at p < 0.05 and p < 0.01. The study assessed familiarity, perceived utility, role in programming, experience with GPT tools, assignment usage, support, skill development, concerns about learning depth, learning enhancement, dependency, and sense of accomplishment. The analysis is shown in Figure 2. A detailed examination of each learner type follows.

Figure 2.

Correlation Matrix. A single asterisk (*) indicates the correlation is statistically significant (p < 0.05). A double asterisk (**) indicates the correlation is highly statistically significant (p < 0.01).

4.3.1. Technology-Driven Learners: Comprehensive Early Adopters and Active Advocates

The data in Figure 2 indicate that Technology-Driven Learners exhibit the broadest and strongest positive correlations with AI tools. These students not only have the highest awareness of AI tools (AI-Q1: r = 0.37, p < 0.01) but also frequently use them in their actual studies, whether for daily coding assistance (AI-Q3: r = 0.26, p < 0.01) or for course assignments and projects (AI-Q4: r = 0.18, p < 0.01). More importantly, their active engagement leads to positive experiences and perceptions. They strongly believe that AI can enhance their personal programming skills (AI-Q6: r = 0.24, p < 0.01) and perceive AI tools as significantly improving their learning experience (AI-Q8: r = 0.27, p < 0.01). This positive outlook also translates into a greater sense of learning achievement (AI-Q10: r = 0.12, p < 0.05).

The learning model of these students inherently leans towards embracing and utilizing emerging technologies to improve efficiency. According to the Technology Acceptance Model (TAM) (de Camargo Fiorini et al., 2018), an individual’s intention to use a technology increases significantly when they perceive its usefulness and ease of use. Evidently, Technology-Driven Learners view AI as an efficient learning instrument and naturally integrate it into their learning ecosystem.

4.3.2. Theoretical–Analytical Learners: Rational and Purposeful Users

Theoretical–Analytical Learners demonstrate a rational and deliberate pattern of adopting AI tools. They possess a moderate level of awareness of AI tools (AI-Q1: r = 0.18, p < 0.01) and are one of the primary groups who perceive AI as “helpful” in programming (AI-Q2: r = 0.21, p < 0.01). This suggests that before using them, they are more inclined to evaluate the tools’ value from a theoretical standpoint. In terms of actual use, they also leverage AI for coding assistance (AI-Q3: r = 0.16, p < 0.01), though the correlation is not as strong as that of their technology-driven peers.

The core objective of these students is to build a rigorous knowledge system and logical framework. They may use AI tools not merely for “efficiency” but as a cognitive tool to validate theories, explore alternative problem-solving approaches, or deepen their understanding of complex concepts. They are goal-oriented, engaging with AI when it can assist their analytical and comprehension processes.

4.3.3. Intrinsically Motivated Learners: Users in Pursuit of Growth and Achievement

The engagement of Intrinsically Motivated Learners with AI is primarily driven by their internal learning motivations. The data show significant positive correlations with believing AI enhances skills (AI-Q6: r = 0.16, p < 0.01), having a positive learning experience (AI-Q8: r = 0.18, p < 0.01), and a stronger sense of learning achievement (AI-Q10: r = 0.16, p < 0.01). The correlation with using AI for assignments and projects is positive but not significant (AI-Q4: r = 0.10, n.s.).

According to Self-Determination Theory (SDT) (Manninen et al., 2022), individuals with strong intrinsic motivation actively seek challenges and derive satisfaction from the process of mastering skills. These students view AI tools as a partner for achieving personal growth and satisfying their curiosity. They use AI not due to external requirements, but because they believe it helps them “learn better and faster,” thereby gaining greater internal satisfaction and a sense of accomplishment.

4.3.4. Practical and Collaborative Learners: Context-Specific Users

The interaction patterns of these two learner types are more context-specific.

Practice-Reinforced Learners have a high awareness of AI tools (AI-Q1: r = 0.26, p < 0.01) and find them helpful (AI-Q2: r = 0.18, p < 0.01). Interestingly, they are the only group to show a significant negative correlation with “dependency on AI” (AI-Q9: r = −0.12, p < 0.05). This indicates that while they use AI, they place greater emphasis on consolidating knowledge through hands-on practice, thereby avoiding dependency.

Collaborative Learners show a slight positive correlation with using AI for coding assistance (AI-Q3: r = 0.14, p < 0.05). This may imply that they use AI as a “third party” or a “virtual partner” in discussions, using it to quickly generate code prototypes or resolve disputes during group collaboration.

This reflects how learning styles shape the manner in which tools are used. Practical learners see AI as an aid in their practice, but the core remains “hands-on” work. Collaborative learners, on the other hand, may integrate AI into their socially interactive learning processes.

4.3.5. Traditional Guided Learners: Potential Skeptics

In contrast to the aforementioned groups, Traditional Guided Learners exhibit a degree of reservation or even a negative attitude towards AI tools. The data show that their support for AI-assisted programming has a slight, non-significant negative correlation (AI-Q5: r = −0.08, n.s.), and they are the only group to express significant concern about whether “AI might hinder deep learning” (AI-Q7: r = −0.17, p < 0.01).

These students are accustomed to learning within the framework of teacher guidance and structured curricula. The open-ended and autonomous nature of AI tools may disrupt their accustomed learning order, making them feel uncomfortable or concerned. They may believe that over-reliance on AI could weaken the development of fundamental skills, reflecting a potential conflict between traditional educational philosophies and emerging technological tools. Nonetheless, additional research is essential to validate the rationale mentioned above.

In summary, students do not interact with AI in a monolithic fashion. Technology-driven and intrinsically motivated learners are the most active adopters and beneficiaries of AI tools, viewing AI as an empowering instrument for increasing efficiency and achieving personal growth. Theoretical–Analytical and Practical Learners, on the other hand, act as more selective and purposeful users, deciding when and how to use AI based on their core learning needs. In contrast, traditional guided learners hold a more cautious or even skeptical attitude, focusing more on the potential negative impacts of AI on traditional learning paths. These findings underscore that when promoting AI-assisted programming education, it is essential to consider the heterogeneity of student learning styles and to provide differentiated guidance strategies.

4.4. Population Clustering

4.4.1. Clustering Description

The cluster centers provide a comprehensive snapshot of the distinct learning tendencies of students, measured across six dimensions: Traditional Guided Learning, practical reinforcement, Theoretical–Analytical Learning, Collaborative Interactive Learning, Technology-Driven Learning, and intrinsic motivation. The significance of cluster analysis in this study is particularly evident, as the data highlight that many students exhibit multiple learning tendencies (e.g., behaviorism, constructivism), making it difficult for traditional analysis methods to fully capture the complexity of their learning behaviors. By grouping students based on their learning tendencies, cluster analysis offers a more nuanced understanding of behavior patterns across different learning modes. This method also facilitates the identification of variances in AI tool usage among learning tendency groups, offering insights into which groups are more likely to utilize specific types of AI tools, such as GPT-based applications. Furthermore, cluster analysis allows for a deeper exploration of the relationship between AI tool usage and learning tendencies, thus contributing valuable insights to address the study’s research questions.

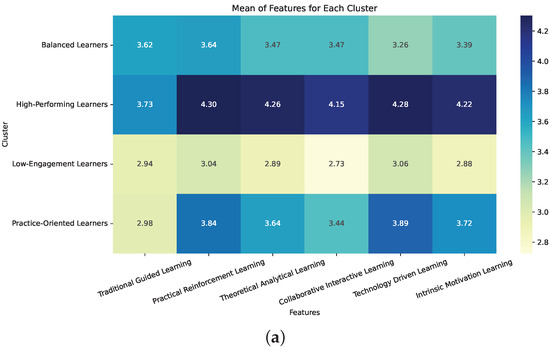

Table 4 presents the feature values for the six learning dimensions across each cluster. We give each cluster a specific name based on its properties, and those identified clusters are as follows:

Table 4.

Feature Means of Each Cluster for Different Learning Approaches.

- Balanced Learners: Students demonstrating a well-rounded mix of learning strategies.

- High-Performing Learners: Students showing robust engagement across various learning domains.

- Low-Engagement Learners: Students exhibiting minimal engagement across most learning domains.

- Practice-Oriented Learners: Students prioritizing practical, hands-on learning approaches.

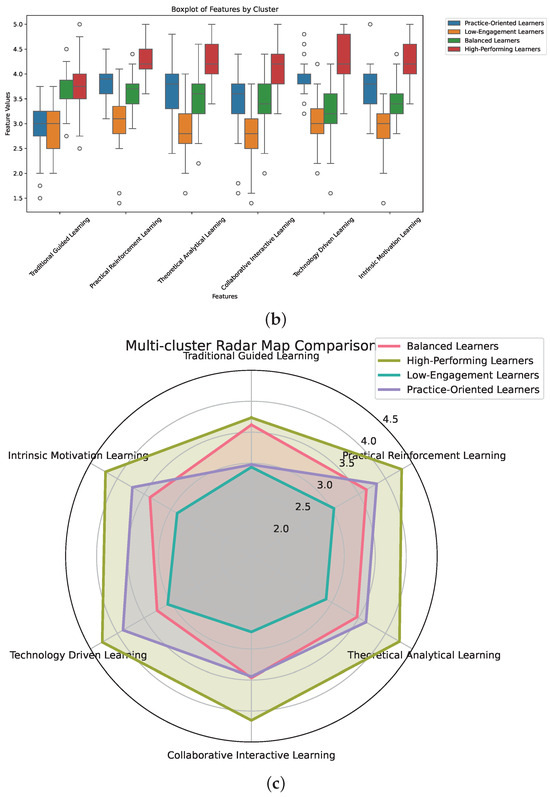

As shown in Table 4 and Figure 3, Balanced Learners exhibited moderate engagement across all learning approaches. Their highest engagement was observed in Practical Reinforcement Learning (mean = 3.64). Additionally, they reported a balanced approach to Technology-Driven Learning (mean = 3.26) and Intrinsic Motivation Learning (mean = 3.39), reflecting their general preference for diverse learning methods. High-Performing Learners displayed a strong preference for Practical Reinforcement Learning (mean = 4.30), Theoretical–Analytical Learning (mean = 4.26), and Technology-Driven Learning (mean = 4.28). This group demonstrated a significant inclination towards structured and technology-enhanced learning environments, suggesting that they excel when provided with advanced learning tools and clear frameworks.

Figure 3.

Visualizations of learner types. (a) Heatmap of Clusters. (b) Boxplot of Clusters. (c) Radar Map of Clusters.

Low-Engagement Learners showed comparatively lower levels of engagement across most learning approaches, particularly in Practical Reinforcement Learning (mean = 3.04) and Theoretical–Analytical Learning (mean = 2.89). Practice-Oriented Learners displayed a similar learning profile to High-Performing Learners, with significant engagement in Technology-Driven Learning (mean = 3.89) and Practical Reinforcement Learning (mean = 3.84). Nevertheless, they showed a slightly stronger preference for practical applications and real-world problem-solving compared to High-Performing Learners.

According to Table 4, Balanced Learners represent the largest group, consisting of 119 individuals, indicating that this learning profile is the most prevalent in the sample. In contrast, Low-Engagement Learners constitute the smallest group, with only 43 individuals. High-Performing Learners and Practice-Oriented Learners have intermediate group sizes, with 49 and 87 individuals, respectively.

The underrepresentation of High-Performing (49 individuals) and Low-Engagement (43 individuals) learners is a natural outcome because these two groups represent the statistical extremes of the student population. Most students do not fit into the absolute top or bottom of the engagement spectrum; instead, they cluster in the middle, which is reflected in the much larger “Balanced” (119) and “Practice-Oriented” (87) groups. The “High-Performing” profile requires excellence across all six measured learning domains, a demanding standard that only a small minority is expected to meet. Conversely, the “Low-Engagement” profile identifies students who are consistently disengaged across most areas, another behavioral extreme that is logically less common than moderate or specialized engagement. Therefore, this distribution signifies that the typical student is a pragmatic and adaptable learner who falls within the central range of behaviors, rather than being an outlier who is either universally proficient or consistently disengaged.

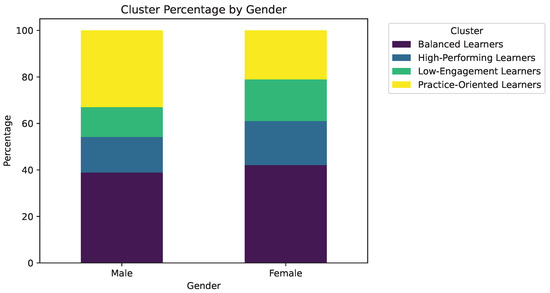

4.4.2. Cluster Distribution by Gender

The gender distribution across the identified learner clusters is presented in Figure 4 and Table 5, with percentages computed within each gender. Balanced Learners accounted for a somewhat larger share of female students (42.11%) than of male students (38.92%). High-Performing Learners exhibited a relatively balanced gender distribution, with a slightly higher proportion among female students (18.95%) than among male students (15.27%). Low-Engagement Learners likewise made up a higher proportion of female students (17.89%) than of male students (12.81%). Practice-Oriented Learners, by contrast, were more common among male students (33.00%) than among female students (21.05%).

Figure 4.

Cluster Distribution by Gender.

Table 5.

Learner type with respect to gender.

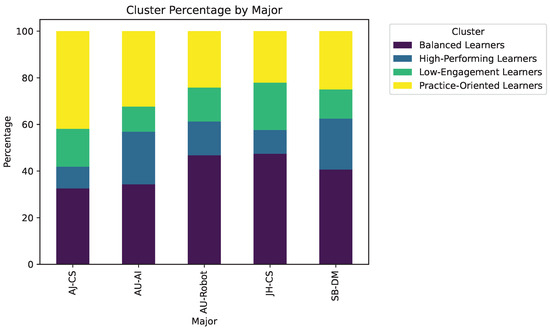
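Within-group percentages of this kind are usually produced with a row-normalized cross-tabulation. The sketch below shows the idea on a hypothetical eight-student sample; the column names and labels are illustrative, and the numbers are not the study's data.

```python
import pandas as pd

# Hypothetical toy sample: one row per student.
sample = pd.DataFrame(
    {
        "gender": ["F", "F", "F", "F", "M", "M", "M", "M"],
        "cluster": [
            "Balanced",
            "Balanced",
            "Practice-Oriented",
            "High-Performing",
            "Balanced",
            "Practice-Oriented",
            "Practice-Oriented",
            "Low-Engagement",
        ],
    }
)

# normalize="index" divides each row by its total, so every gender sums to 100%.
share = pd.crosstab(sample["gender"], sample["cluster"], normalize="index") * 100
print(share.round(2))
```

Reading such a table row by row answers "what share of each gender falls in each cluster", which differs from asking what share of each cluster is male or female (that would use `normalize="columns"`).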

4.4.3. Cluster Distribution by Major

The distribution of learner types across four academic disciplines—AI, Robotics Engineering (RE), Computer Science (CS), and Digital Media (DM)—is illustrated in Figure 5 and Table 6.

Figure 5.

Cluster Distribution by Major.

Table 6.

Learner cluster distribution by major (%).

The data show that a student’s academic major is strongly associated with their learning style, as evidenced by the starkly different profiles across disciplines. The student body is far from uniform; for instance, the Computer Science program at AJ is dominated by Practice-Oriented Learners, who comprise a substantial 41.86% of the cohort. This contrasts sharply with the Robotics program at AU, which is characterized by a plurality of Balanced Learners who make up 46.77% of the students. This initial comparison shows that the educational environment and student composition are unique to each major.

Certain specialized and cutting-edge fields appear to be hubs for the most capable students. The artificial intelligence program at AU, for example, boasts the highest concentration of High-Performing Learners at 22.55%, a figure nearly matched by the Digital Media program at SB, where they account for 21.88% of students. This concentration of top-tier learners, coupled with some of the lowest proportions of Low-Engagement students, suggests that these demanding, interdisciplinary subjects attract individuals who are already well-rounded, highly motivated, and adept across multiple learning dimensions.

The two Computer Science programs in the study present vastly different educational contexts, highlighting that even within the same discipline, institutional differences are critical. The AJ-CS program is clearly defined by a hands-on ethos, with a commanding 41.86% of its students identified as Practice-Oriented, while having the lowest share of High-Performing learners at just 9.30%. In stark contrast, the JH-CS program reveals a more polarized student body. While it has the largest percentage of Balanced Learners of any program at 47.46%, it simultaneously contains the highest proportion of Low-Engagement Learners, at a concerning 20.34%.

The Robotics program at AU stands out for its remarkable concentration of Balanced Learners, who constitute 46.77% of the students in that major. This significant plurality is fitting for a field that inherently integrates mechanical, electrical, and software engineering. The necessity of mastering both abstract theoretical principles and concrete practical applications in robotics likely fosters this versatile and holistic approach to learning, making a balanced profile the most common path to success.

These distinct data-driven profiles have direct pedagogical implications. For the AJ-CS program, where 41.86% of students are Practice-Oriented, a curriculum heavily centered on project-based learning would be most effective. Conversely, educators in the AU-AI and SB-DM programs, where High-Performing Learners exceed 21%, can confidently assign sophisticated, open-ended challenges. The most complex scenario is presented by the JH-CS program; with 20.34% of students being Low-Engagement, a differentiated instructional approach is essential to provide foundational support for this group while simultaneously engaging the large 47.46% cohort of Balanced Learners.

In conclusion, the diversity in learning types across programs highlights the importance of aligning teaching approaches with the specific needs and characteristics of the learners in each program. Tailoring educational strategies to accommodate these differences could enhance learning outcomes and foster a more inclusive academic environment.

4.4.4. GPT Utilization of Each Learner Type

Table 7 presents the comparison of how different learner clusters engage with various programming tasks using GPT, as reported by students in response to the multi-choice question Q0. The question asked students to indicate which tasks they use GPT for, including Code Generation, Code Debugging, Code Optimization, Project Design, and others. The table shows the percentage of students in each cluster who selected each task, reflecting their level of engagement with these tasks.

Table 7.

Tasks in GPT Utilization in Each Cluster.

The data reveal that the Balanced and High-Performing clusters show high engagement across all tasks, particularly in Code Optimization and Code Generation, with the Balanced cluster leading in optimization (73.95%). The Practice-Oriented cluster is most engaged in Code Debugging (71.26%) and Code Optimization (72.41%), but shows lower interest in Project Design (44.83%). The Low-Engagement cluster has moderate participation in Code Generation (69.77%) but significantly lower involvement in Code Debugging (44.19%) and Project Design (37.21%). Overall, the findings highlight that high-performing and balanced students engage more across tasks, while low-engagement students participate less, especially in debugging and design.

While the individual selection counts and percentages show how popular each option is on its own, the combination of responses reveals more about how people are answering multiple questions at once. Therefore, we analyze the combinations of responses across all learners, then count how frequently each unique combination of answers occurs in the data. The top five most common response combinations are identified, and the percentage of respondents who selected each combination is calculated, similar to how the percentages for individual options are determined.
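Both views of the multi-choice question (per-task selection rates and the most frequent answer combinations) can be computed along the following lines. This is a sketch under assumptions: answers are encoded as 0/1 indicator columns, and the six toy respondents, column names, and the `top_combinations` helper are hypothetical.

```python
import pandas as pd

TASKS = ["Code Generation", "Code Debugging", "Code Optimization", "Project Design"]

# Hypothetical toy answers: 1 = task selected, 0 = not selected.
rows = [
    (1, 1, 1, 1, "Balanced"),
    (1, 1, 1, 1, "Balanced"),
    (1, 1, 1, 1, "Practice-Oriented"),
    (1, 0, 0, 0, "Low-Engagement"),
    (1, 0, 0, 0, "Low-Engagement"),
    (0, 1, 1, 0, "Practice-Oriented"),
]
answers = pd.DataFrame(rows, columns=TASKS + ["cluster"])

# Share of each cluster selecting each task (mean of a 0/1 column, in percent).
task_share = answers.groupby("cluster")[TASKS].mean() * 100


def top_combinations(frame: pd.DataFrame, n: int = 5) -> pd.DataFrame:
    """Most frequent answer patterns with their count and percentage."""
    counts = frame[TASKS].value_counts().head(n)  # sorted by frequency, descending
    return pd.DataFrame({"count": counts, "percent": counts / len(frame) * 100})


top = top_combinations(answers)
print(task_share)
print(top)
```

To obtain per-cluster rankings, the same helper can be applied to each cluster's subset of rows, for example `top_combinations(answers[answers["cluster"] == "Balanced"])`.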

Table 8 presents the top five combinations of task selections across four different learner categories. Each row represents a combination of task selections for Code Generation, Code Debugging, Code Optimization, and Project Design, showing the frequency and percentage of learners in each category who selected that particular combination.

Table 8.

Top 5 combinations of task selections across four different learner categories. The √ symbol indicates that the learner selected the task, while the × symbol indicates that the task was not selected.

The most common combination for Practice-Oriented Learners is Code Generation, Code Debugging, Code Optimization, and Project Design (27.59%), suggesting that these learners are most likely to engage in all four tasks. The second most common combination is Code Debugging and Code Optimization, without Project Design (14.94%), indicating that these learners focus more on improving existing code than on planning larger projects. Other notable combinations include Debugging with Optimization, but without Code Generation or Project Design (9.20%), and Debugging with both Code Generation and Optimization (9.20%).

For Low-Engagement Learners, the most frequent combination is Code Generation only (23.26%), showing that these learners tend to use GPT primarily for generating code. The second most frequent combination is Code Generation, Code Debugging, Code Optimization, and Project Design (16.28%), indicating that some Low-Engagement Learners are still willing to use GPT across all tasks. Other combinations include Code Debugging and Code Optimization, without Project Design (9.30%).

The most common combination for Balanced Learners is Code Generation, Code Debugging, Code Optimization, and Project Design (30.25%), suggesting that these learners actively engage in all programming tasks. Other common combinations include Code Debugging and Code Optimization, without Project Design (12.61%), and Code Generation, Code Debugging, and Code Optimization, without Project Design (10.08%).

For High-Performing Learners, the most frequent combination is Code Generation, Code Debugging, Code Optimization, and Project Design (18.37%), showing strong involvement in all tasks, though less than the Balanced group. Other combinations include Code Optimization alone (14.29%) and Code Debugging and Code Optimization without Project Design (14.29%).

These data highlight that Balanced Learners are the most likely to engage across all four tasks, whereas Low-Engagement Learners tend to concentrate on specific tasks, particularly Code Generation. Practice-Oriented Learners show a variety of combinations but engage more in debugging and optimization tasks than in Project Design. High-Performing Learners show a preference for a mix of optimization and debugging tasks.
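The combination shares reported above can be tabulated with a simple frequency count over each learner's set of selected tasks. The following is a minimal sketch, not the analysis code used in this study: the function names `top_combinations` and `describe` are illustrative, and `toy_group` is hypothetical data that does not reproduce the survey responses.

```python
from collections import Counter

# Task order used to encode each response as four selected/not-selected flags.
TASKS = ("Code Generation", "Code Debugging", "Code Optimization", "Project Design")


def top_combinations(responses, k=5):
    """Return the k most common task combinations with their percentage shares.

    `responses` holds one tuple of booleans per learner, in TASKS order.
    """
    counts = Counter(tuple(r) for r in responses)
    total = len(responses)
    return [
        (combo, round(100 * n / total, 2))
        for combo, n in counts.most_common(k)
    ]


def describe(combo):
    """Render a combination as a readable list of the selected tasks."""
    selected = [task for task, flag in zip(TASKS, combo) if flag]
    return ", ".join(selected) if selected else "(none)"


# Hypothetical toy responses for one learner group (NOT the study's data).
toy_group = (
    [(True, True, True, True)] * 4
    + [(False, True, True, False)] * 3
    + [(True, False, False, False)] * 2
    + [(False, False, True, False)] * 1
)

for combo, share in top_combinations(toy_group):
    print(f"{share:6.2f}%  {describe(combo)}")
```

Applying the same function separately to each cluster's responses would yield one ranked list per learner category, which is the form of comparison made in the paragraphs above.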

5. Discussion

5.1. Summary of Results

This study’s findings move beyond simply identifying whether students use AI tools to reveal how distinct learner archetypes engage with them. The cluster analysis empirically identified four learner profiles (Balanced, High-Performing, Low-Engagement, and Practice-Oriented) whose interactions with AI are deeply connected to their underlying learning orientations. This discussion interprets these principal findings and outlines their implications for educational theory, pedagogy, and AI tool development, situating them within the broader context of existing literature on educational technology and learning sciences.

5.2. Beyond “Use vs. Non-Use”: The Case for Learner Profiling in the AI Era

The rapid proliferation of AI in educational settings has ignited a discourse often characterized by a binary focus on adoption versus prohibition. This study contributes to a more nuanced conversation by demonstrating that the critical question is not whether students use AI, but how and why. The empirical identification of distinct learner archetypes argues for moving beyond monolithic assumptions about student behavior.

This approach aligns with a long-standing tradition in educational research that recognizes the fundamental diversity of learners. Foundational models of learning styles, such as the Felder–Silverman Index, have long categorized learners along various dimensions, such as Active versus Reflective, Sensing versus Intuitive, Visual versus Verbal, and Sequential versus Global, to better understand their preferences for processing and organizing information (Van Zwanenberg et al., 2000). These models established a crucial precedent that a one-size-fits-all approach to instruction is inherently limited because it fails to account for the varied cognitive and procedural preferences among students (Romanelli et al., 2009).

While the concept of static learning “styles” has faced criticism, the underlying principle of learner diversity has evolved into the more dynamic and holistic concept of “learner profiles”. In modern online and personalized learning environments, a learner profile is understood not as a fixed label but as a comprehensive and evolving portrait of an individual student. It encompasses not only cognitive attributes but also a wide array of personal and environmental factors, including individual interests and motivations, cultural background, social-emotional needs, and even preferences for physical and digital learning settings. This multi-faceted understanding rejects the notion of an “average student” and instead provides educators with a roadmap to navigate each student’s unique path to success.

This study extends this tradition into the nascent field of student–AI interaction. The identification of the four archetypes—Balanced, High-Performing, Low-Engagement, and Practice-Oriented—provides an empirically grounded typology specifically for this new context. These archetypes should not be viewed as immutable traits but as dynamic “patterns of engagement” that emerge from the complex interplay between a student’s predispositions and the affordances of the AI tool within a specific pedagogical context. Research in online learning has shown that learners’ goals and the flexibility of the environment critically affect what they do and, consequently, what they learn. In a flexible, learner-centered environment where students have the freedom to make decisions about their workflow, clearly distinguishable approaches and profiles are more likely to emerge. Our findings confirm that this principle holds true for AI-integrated learning, revealing that students do not use GPT tools monolithically; their usage patterns are nuanced, predictable, and deeply intertwined with their learning orientations.

5.3. An Empirically Grounded Typology of Student-AI Engagement

The four learner archetypes identified in this study represent distinct modes of interaction with generative AI, each reflecting a different underlying relationship with the technology and the learning process. A detailed examination of these profiles, contextualized with existing literature, illuminates the varied ways in which AI is being integrated into students’ academic lives. The High-Performing and Balanced Learners exemplify the most sophisticated and strategic use of AI. These students leverage AI tools not as a crutch but as a cognitive partner for complex tasks such as Code Optimization, brainstorming Project Designs, and exploring alternative problem-solving approaches. Their behavior aligns with the vision of AI as a tool for augmenting human intellect and scaffolding expert-level thinking. This proactive and agentic engagement is characteristic of learners who are self-directed and intrinsically motivated and possess strong metacognitive skills—profiles that have been consistently associated with success in online and technology-mediated learning environments (Dabbagh, 2007). These learners actively seek to enhance their understanding and capabilities, using the AI to push the boundaries of their own knowledge rather than simply completing assigned tasks. Their approach reflects a high level of what has been termed “agentic engagement,” where students proactively contribute to the flow of instruction and self-regulate their own learning (Li et al., 2025).

In stark contrast, the Low-Engagement Learner tends to use AI primarily for basic, transactional purposes, such as generating boilerplate code or finding direct answers to well-defined problems. This pattern points to a significant risk: without proper pedagogical guidance, these powerful tools may inadvertently encourage superficial learning habits. Such behavior is not necessarily indicative of academic dishonesty or a lack of ability; it can be understood as a form of rational, effort-minimizing behavior. When faced with tasks they perceive as routine or low-value, students may delegate the cognitive effort to an external tool, a phenomenon known as “cognitive offloading” (Gerlich, 2025). The challenge for educators follows directly: if assignments do not demand higher-order thinking, AI provides an unprecedentedly efficient means to bypass the cognitive work that produces deep learning, which may contribute to long-term skill erosion (L. Chen et al., 2020).

Finally, the Practice-Oriented Learner underscores the paramount importance of context in mediating the effectiveness of AI tools. This group demonstrated the most productive and engaged use of AI when it was embedded within tangible, project-based activities that had a clear, practical outcome. Their behavior provides strong empirical support for constructivist and project-based learning theories, which posit that learning is most effective when it is active, situated, and applied to authentic, real-world problems (Li et al., 2025). For these students, AI is not an abstract information source but a practical tool, akin to a compiler, a debugger, or a design assistant, that helps them build, create, and solve concrete problems. This finding confirms that the perceived utility and effectiveness of educational technology are deeply tied to the pedagogical approach in which it is embedded.

A deeper analysis reveals that these archetypes are not merely reflections of innate student psychology, such as traditional learning styles, but are better understood as emergent properties of a complex socio-technical system. They are co-constructed at the intersection of three critical elements: the learner’s individual orientation (their motivations, prior knowledge, and self-regulation skills), the specific technological affordances of the AI tool (its capacity for instant answer generation, its limitations in creative reasoning), and the pedagogical demands of the curriculum (the nature of assignments, the assessment criteria, and the classroom culture). For instance, the “Low-Engagement Learner” archetype does not exist in a vacuum. It emerges when a student with a pragmatic or efficiency-focused orientation encounters an AI tool that excels at providing rapid, low-effort solutions (Gerlich, 2025) within an educational context that may feature assignments that can be completed superficially without significant penalty. Similarly, the “High-Performing Learner” emerges when a motivated student is presented with a complex, open-ended problem that necessitates the use of AI as a creative partner rather than a simple answer machine. This socio-technical perspective shifts the focus of inquiry from merely categorizing students to analyzing and designing the educational ecosystems that shape their interactions with technology. It provides a more actionable explanatory framework, suggesting that educators can influence which archetypal behaviors manifest by carefully designing the learning tasks and the classroom environment.

5.4. Implications for Theory and Practice in Education

The findings of this study (the identification of nuanced learner archetypes, the documentation of AI’s dual potential for cognitive amplification and superficiality, and the exposure of a critical gap in social and humanistic engagement) do not merely describe the current state of AI adoption. Together, they issue a tripartite mandate for a fundamental rethinking of how AI is conceptualized in educational theory, implemented in pedagogy and policy, and designed by technologists. This mandate calls for a decisive shift away from a one-size-fits-all, technology-centric model toward a more dynamic, differentiated, and learner-centric future.

5.4.1. For Educational Theory: Challenging Techno-Determinism with a Socio-Technical Framework

This study contributes to a more complex understanding of technological determinism, i.e., the theory that technology single-handedly drives social and educational outcomes (Héder, 2021). Our results show that learners with different profiles interact with the same AI tool in distinct patterns, which highlights that the technology’s influence is not a one-size-fits-all phenomenon. The impact of AI is deeply intertwined with the learner’s own agency, prior experiences, and the pedagogical setting. This suggests the technology’s role is not predetermined but is instead dynamically shaped through the interaction between the tool, the user, and their environment.

In place of a deterministic view, these findings lend strong support to a learner-centric, socio-technical framework for understanding AI in education. Such a framework recognizes that educational technologies are not neutral artifacts but are embedded within complex systems of human behavior, social arrangements, and institutional values (Babanoğlu et al., 2025). The effectiveness and consequences of an AI tool cannot be understood by analyzing its technical features in isolation. One must consider the entire “ensemble”: the technology, the learner, the teacher, and the broader institutional goals. This perspective aligns with the human-centered approach to AI advocated by global organizations like UNESCO, which emphasizes that the integration of AI must be guided by core principles of inclusion, equity, and the preservation of human agency. By showing how and why different students interact with AI differently, this study underscores the need for theories that can account for this variability and guide the design of more adaptive and responsive educational ecosystems.

5.4.2. For Pedagogy and Policy: The Imperative of Differentiated AI-Powered Instruction

The most immediate and actionable implication of this research is for pedagogy and policy. If students do not use AI monolithically, then instructional strategies for integrating AI cannot be monolithic either. The empirical identification of distinct learner archetypes creates a clear imperative for differentiated instructional strategies that are tailored to the specific needs, opportunities, and risks associated with each profile. A one-size-fits-all policy, whether unrestricted access or total prohibition, is likely to be suboptimal, failing to harness the potential of AI for some students while exacerbating its risks for others.

The growing field of AI-powered differentiated instruction offers a rich set of tools and strategies to meet this challenge. AI can be a powerful tool for teachers seeking to personalize learning, helping to automate the creation of varied materials, provide real-time feedback, and recommend customized learning paths based on student performance. For a “Low-Engagement Learner,” an instructor might use AI tools to generate highly structured, scaffolded exercises that break down complex problems into manageable steps and provide immediate, targeted feedback to guide the student’s thinking process. The pedagogical goal would be to use AI as a guided tutor that encourages metacognition, for example, by requiring students to explain the reasoning behind an AI-generated solution rather than using it as a simple answer key.

Conversely, for a “High-Performing Learner,” the instructional approach would be entirely different. These students would benefit from open-ended, ill-structured, and authentic problem-based learning scenarios where AI is positioned as a creative partner or a Socratic co-pilot. The goal would be to challenge them to use AI for ideation, experimentation, and pushing the boundaries of their knowledge, fostering the skills of critical inquiry and creative synthesis. The “Practice-Oriented Learner” would thrive in environments where AI tools are integrated directly into hands-on, project-based work, serving as just-in-time resources that bridge the gap between abstract theory and practical application.

5.5. Limitations and a Future Research Agenda

While this study provides a novel, empirically grounded typology of student–AI interaction and offers significant implications for theory and practice, it is essential to acknowledge its limitations. These boundaries also serve to illuminate a clear and compelling agenda for future research that can build upon this work to further advance our understanding of the complex relationship between learners and artificial intelligence.

5.5.1. The Boundaries of the Current Study