3. Materials and Methods

For this study, a COIL experience was implemented between the Schools of Architecture at the National University of San Agustín of Arequipa (UNSA) in Peru and the Tecnológico de Monterrey, Chihuahua campus (TEC) in Mexico. The research followed a case study design with a mixed-methods approach, placing greater emphasis on the qualitative component. Pre- and post-questionnaires with Likert-type scales were administered, complemented by open-ended questions that captured students’ perceptions, challenges, and learning outcomes throughout the COIL experience. The quantitative phase served an exploratory and triangulation role, whereas the qualitative analysis focused on gaining a deeper understanding of the participants’ personal and professional transformation processes.

3.1. Participants

A total of 39 Peruvian students (see Figure 1) enrolled in the course Architectural Design Studio 2 (Taller de Diseño Arquitectónico 2) at the National University of San Agustín of Arequipa (Universidad Nacional de San Agustín de Arequipa, UNSA), and 11 students enrolled in the course Design and Construction of an Ephemeral Habitat (Diseño y Construcción de un Hábitat Efímero) at Tecnológico de Monterrey, Chihuahua campus (TEC), participated in the study. It is relevant to note that, for the Peruvian teaching team, this was their first experience implementing the COIL methodology, whereas the professor from the Mexican counterpart contributed prior experience in these types of collaborations. This dynamic positioned the process as a learning opportunity for the Peruvian professors, guided by the practice of the partner institution. For the development of the international collaboration, seven binational groups were formed, each consisting of eight students (six Peruvians and two Mexicans). Although the COIL experience was binational, the loss of data from the Mexican group prevented its inclusion in the analysis; therefore, the focus of this study is limited to the Peruvian group.

3.2. Data Collection Instruments

To measure the impact of the COIL experience on architecture students’ perceptions of transversal competencies, two instruments were administered at two stages, before and after the experience, as detailed in Appendix A. The first was a quantitative questionnaire designed to assess leadership, self-regulation in virtual learning, and emotional intelligence in teamwork, using a 5-point Likert scale. The internal consistency of the scales was evaluated with Cronbach’s α and McDonald’s ω coefficients, ensuring that the items consistently measured the intended competencies.

Each competency was operationalized into theoretically grounded sub-dimensions, which informed item construction. Leadership was assessed through assuming roles, decision-making, influence, and motivation; self-regulation in virtual learning included time management, goal monitoring, concentration and procrastination control, and use of collaborative tools; and emotional intelligence in teamwork encompassed emotional management, adaptability, stress management, conflict resolution, and perceived teamwork impact. A detailed description of the theoretical foundations and the full mapping of items by sub-dimension is provided in Appendix A.

The second instrument consisted of open-ended questions administered before and after the experience (PRE_COIL6, PRE_COIL7, and P_COIL6 to P_COIL9), which captured perceived learning outcomes, challenges, and students’ evaluations of the COIL experience.

3.3. Procedure

The international collaboration took place over five weeks (17 September–22 October 2024). The experience was collaboratively designed and directed by the faculty teams from both universities. This design role is fundamental, as the academic staff establishes the pedagogical scaffolding that frames student learning. The process included intensive planning to jointly define the learning objectives, tasks, timelines, and collaboration tools. This faculty collaboration, similar to a Professional Learning Community (PLC), focused on continuous reflection to improve practice and ensure that the virtual learning environment was coherent and structured for students in both countries (Collett et al., 2024).

Appendix B details the reflections and lessons learned by the teachers from this experience, as well as improvements for future teaching practices.

The theme, coordinated by both courses, was “Design for Vulnerable Populations”, focusing on the creation of a minimal space for migrants in transit (Giorgi et al., 2022b). Students worked in binational groups using collaborative tools, namely WhatsApp Messenger (version 25.22.79; WhatsApp LLC, Menlo Park, CA, USA), Zoom (Zoom Video Communications, San Jose, CA, USA), Google Docs (Google LLC, Mountain View, CA, USA), and Epicollect5 (Imperial College London, London, UK; https://five.epicollect.net), during the first three weeks, and in separate national groups during the last two weeks, as detailed below.

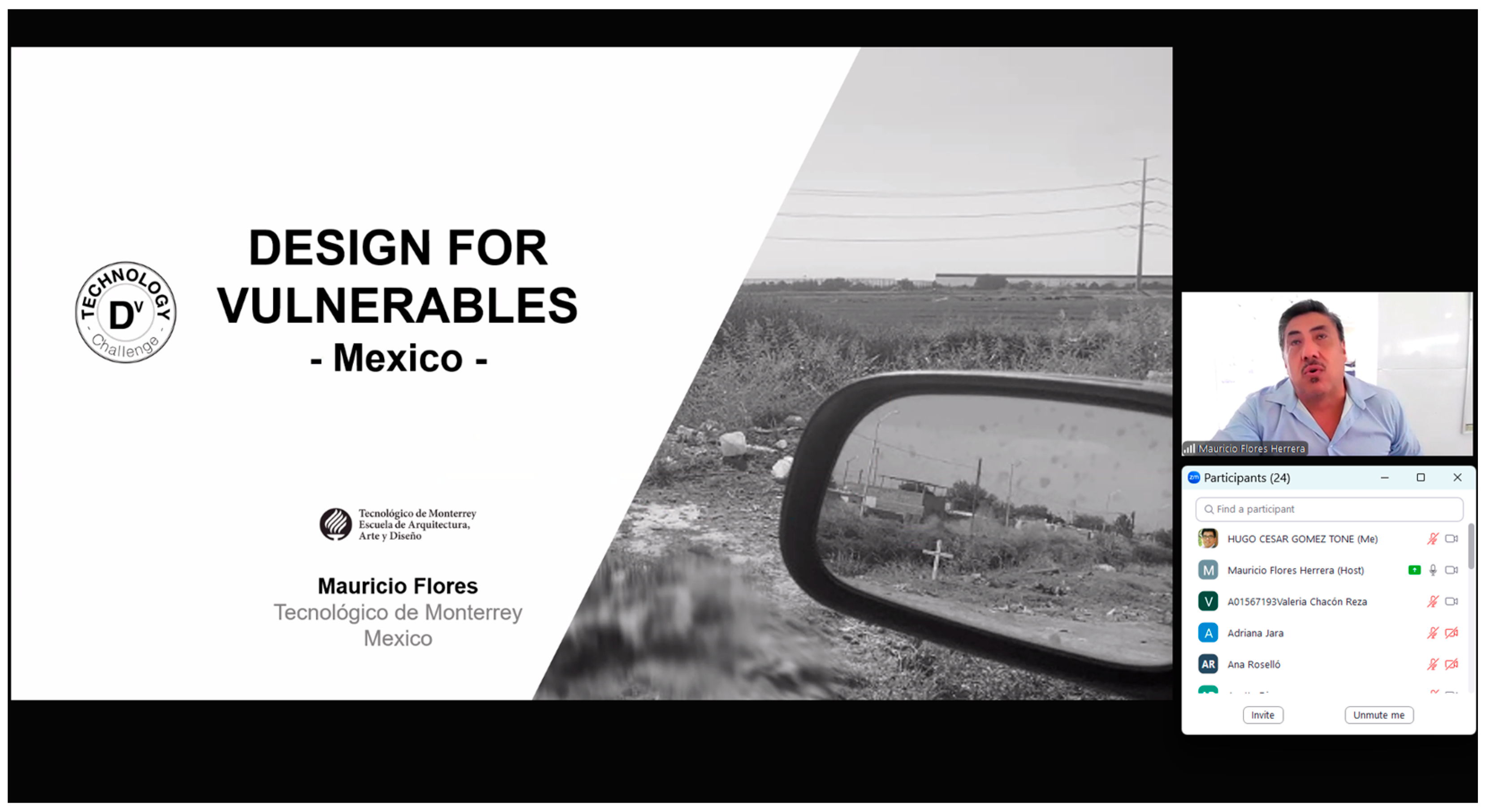

During week 1, students received theoretical instruction focused on the collaborative methodology and the “Design for Vulnerable Populations” methodology (Giorgi et al., 2022a, pp. 449–465), as can be seen in Figure 2. They were tasked with conducting a descriptive study of the “migrant” user in both the Mexican and Peruvian contexts. Field visits were carried out to the Ruta del Migrante in Chihuahua, Mexico, and the Cono Norte in Arequipa, Peru. Students completed these activities synchronously, supported by digital tools such as Epicollect5 and WhatsApp.

During week 2, students collaborated via Zoom (see Figure 3) to prepare a site analysis and present their findings. In week 3, they worked together to characterize the user, identifying needs and activities to establish design requirements. As a result, they developed an architectural program emphasizing the anthropometric study of activities deemed relevant to the user.

In week 4, the seven binational groups split into national teams to carry out the architectural design phase. The Peruvian groups focused on designing spaces for rest, dining, hygiene, connectivity, and first aid, forming temporary shelters for the “migrant in transit,” while the Mexican groups developed foldable, easily transportable resting areas.

In week 5, all groups presented their results in a binational session (Figure 4 and Figure 5), leading to final conclusions on the international collaborative work.

Finally, upon concluding the five weeks of collaboration, a phase of reflection and feedback was implemented. Dialogue sessions were conducted with the Peruvian students to qualitatively deepen the understanding of their perception of the learning outcomes and challenges, which allowed for the contextualization and validation of the questionnaire findings. Concurrently, the faculty from both universities held reflection meetings to evaluate the pedagogical implementation, discuss obstacles and successes, and establish improvements for future iterations of the COIL experience. These reflections have been integrated into Appendix B and have informed both the interpretation of the results and the formulation of the practical implications presented in Section 5.

3.4. Data Analysis

The data analysis was conducted at two complementary levels: quantitative and qualitative.

For the quantitative analysis, statistical procedures were performed using IBM SPSS Statistics (version 31.0.0.0; IBM Corp., Armonk, NY, USA). First, internal consistency coefficients (Cronbach’s α and McDonald’s ω) were calculated to assess the reliability of each scale for both pretest and posttest measurements.
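As an illustration of the first reliability coefficient, the following is a minimal sketch of Cronbach’s α using only the Python standard library. It is not the SPSS procedure used in the study: the function name `cronbach_alpha` and the response data are hypothetical, and McDonald’s ω is omitted because it requires fitting a factor model.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    `items` is a list of columns: one list of scores per item, with all
    columns aligned so that position i always belongs to respondent i.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if n < 2 or any(len(col) != n for col in items):
        raise ValueError("every item needs the same number (>= 2) of responses")

    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(col) for col in items)
    # Sample variance of each respondent's total score.
    totals = [sum(row) for row in zip(*items)]
    total_variance = variance(totals)
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical responses of six students to a four-item, 5-point scale
# (illustrative only, not data from the study).
hypothetical_items = [
    [4, 5, 3, 4, 2, 5],
    [4, 4, 3, 5, 2, 5],
    [3, 5, 2, 4, 3, 4],
    [4, 5, 3, 4, 2, 4],
]
print(f"alpha = {cronbach_alpha(hypothetical_items):.3f}")
```

The formula compares the summed item variances with the variance of the total score: the more the items covary, the larger the total variance relative to the item variances, and the closer α gets to 1.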

Then, a descriptive analysis of frequencies and distributions was performed on the Likert scale items (1 = strongly disagree, 5 = strongly agree) to identify patterns of change in students’ self-perception before and after the COIL experience. Normality was then assessed using the Shapiro–Wilk test. For the leadership scale, pretest scores did not follow a normal distribution (W = 0.906, p = 0.003), whereas posttest scores were consistent with normality (W = 0.971, p = 0.392). For the self-regulation in virtual learning scale, neither the pretest (W = 0.962, p = 0.212) nor the posttest (W = 0.971, p = 0.417) departed significantly from normality at the 0.05 level. Finally, for the emotional intelligence in teamwork scale, pretest scores also violated normality (W = 0.938, p = 0.033), whereas posttest scores were consistent with normality (W = 0.969, p = 0.357).

Given that normality assumptions were not met in some cases, non-parametric Wilcoxon tests for paired samples were applied to identify significant differences between pretest and posttest measurements. Additionally, Spearman correlations were calculated to explore relationships between scores obtained at both evaluation points.
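The paired comparison can be sketched as follows. This is a minimal illustration of the Wilcoxon signed-rank test under the usual large-sample normal approximation, with zero differences dropped and a tie correction applied to the variance. The function names, the negative-Z sign convention, and the example scores are assumptions made for illustration; the study itself used SPSS.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks of `values`, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of positions i..j, shifted to 1-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre, post):
    """Return (z, two_sided_p, n_nonzero) for paired samples."""
    # Rounding guards against float noise when scores are item averages.
    diffs = [round(b - a, 6) for a, b in zip(pre, post)]
    diffs = [d for d in diffs if d != 0]  # pairs with no change are dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    abs_diffs = [abs(d) for d in diffs]
    ranks = average_ranks(abs_diffs)
    w_pos = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_neg = sum(r for r, d in zip(ranks, diffs) if d < 0)

    mean_w = n * (n + 1) / 4
    # Tie correction: each group of t equal |differences| reduces the variance.
    tie_term = sum(t**3 - t for t in Counter(abs_diffs).values()) / 48
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term

    # Using the smaller rank sum makes z non-positive (assumed convention).
    z = (min(w_pos, w_neg) - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p, n


# Hypothetical pre/post scale averages for eight students.
pre_scores = [4.25, 3.75, 4.00, 5.00, 3.50, 4.50, 4.00, 3.25]
post_scores = [3.75, 3.75, 3.50, 4.25, 3.75, 4.00, 4.25, 3.00]
z, p, n = wilcoxon_signed_rank(pre_scores, post_scores)
print(f"Z = {z:.3f}, p = {p:.3f}, non-tied pairs = {n}")
```

With small samples an exact test is often preferred to the normal approximation; the approximation is shown here only because a Z statistic is what the results sections report.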

For the qualitative analysis, open-ended responses from the pre- and post-COIL questionnaires were analyzed through an inductive thematic coding process. To ensure rigor and reliability, the procedure was developed in several phases. First, two researchers independently coded a sub-set of the data to identify emerging categories related to the transversal competencies analyzed. They then met to compare their codes, discuss discrepancies, and organize a consensual coding matrix, which served as a reference for applying the system of categories to the rest of the data. To reinforce reliability, cross-verification between researchers was employed, and any disagreements were resolved through consensus to consolidate the final categories. Finally, a systematic record was maintained to preserve the traceability of textual citations, including both the question code and the participant identifier, thereby ensuring the transparency and validity of the analysis (see Appendix A, Table A4 and Table A5 for the list of open-ended questions and their corresponding codes).

Overall, this combined quantitative and qualitative approach allowed for the triangulation of results, providing a more comprehensive, critical, and contextualized understanding of the development and self-perception of transversal competencies during the COIL experience.

4. Results

4.1. Leadership

The reliability of the leadership scale was assessed for both the pretest and posttest versions using Cronbach’s α and McDonald’s ω coefficients, as shown in Table 1. In the pretest measurement, α = 0.766 and ω = 0.776; in the posttest, α = 0.719 and ω = 0.751, indicating acceptable internal consistency in both cases. No items showed problematic values in the corrected item-total correlations, and therefore, all items were retained for subsequent analysis.

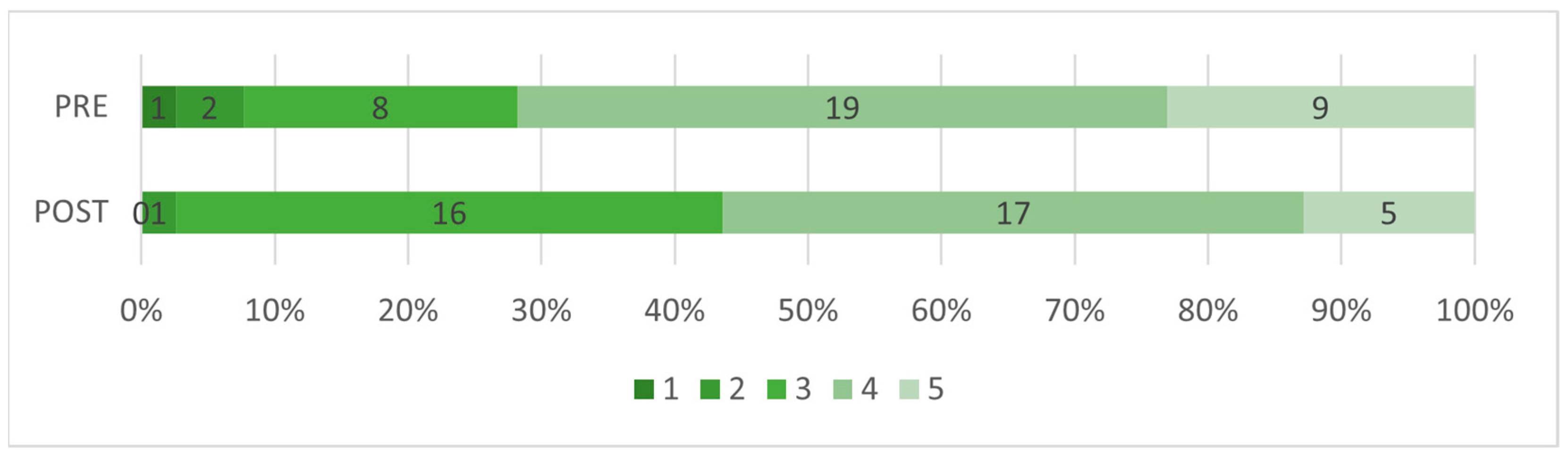

Figure 6 shows the frequency analysis of leadership competency, which revealed a shift toward more moderate responses following the COIL experience. Individual scores were computed from a four-item Likert scale measuring students’ frequency in assuming leadership roles within teamwork, comfort in making key decisions, ability to influence peers, and skill in motivating team members, assessed at two stages: pre- and post-COIL. Categorization of the averages revealed a slight reduction in the highest levels of the scale (value 5 decreased from 9 to 5, and value 4 from 19 to 17), accompanied by an increase in students at level 3 (from 8 to 16). While most participants remained within the medium-high range (values 3–5), the COIL experience appears to have promoted a more reflective and calibrated self-perception of leadership, likely influenced by the authentic challenges encountered during the collaborative process.

The Wilcoxon signed-rank test was conducted to compare scores before and after the COIL experience. As shown in Table 2, the difference approached but did not reach conventional significance (Z = −1.887, p = 0.059), with a moderate effect size (r = 0.33). The data nonetheless suggest a downward trend: posttest scores decreased in 54% of cases, increased in 28%, and remained unchanged in 18%. This shift, along with a higher concentration of responses in the intermediate scale levels, indicates that some students moderated their initial self-assessments, reflecting a more reflective and critical perspective on leadership after the COIL experience.
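The text does not state which sample size enters the effect size. The sketch below checks one reading, r = |Z| / √n with n taken as the number of pairs that actually changed, against the values reported for the three scales. The counts of unchanged pairs are inferred from the reported percentages of the 39 students, so they are an assumption rather than figures given by the authors, and the function name `effect_size_r` is hypothetical.

```python
import math

N_STUDENTS = 39  # Peruvian students included in the analysis


def effect_size_r(z, n_pairs):
    """Effect size r = |Z| / sqrt(n) for a Wilcoxon signed-rank test."""
    return abs(z) / math.sqrt(n_pairs)


# Z statistics and percentages of unchanged scores as reported in Section 4.
reported = {
    "leadership": {"z": -1.887, "unchanged_pct": 18, "r": 0.33},
    "self_regulation": {"z": -0.214, "unchanged_pct": 8, "r": 0.036},
    "emotional_intelligence": {"z": -0.877, "unchanged_pct": 13, "r": 0.15},
}

results = {}
for scale, row in reported.items():
    # Inferred head count of students whose score did not change.
    n_unchanged = round(N_STUDENTS * row["unchanged_pct"] / 100)
    n_changed = N_STUDENTS - n_unchanged
    results[scale] = {
        "n_changed": n_changed,
        "r_changed_pairs": effect_size_r(row["z"], n_changed),
        "r_all_pairs": effect_size_r(row["z"], N_STUDENTS),
    }
    print(
        f"{scale}: reported r = {row['r']}, "
        f"r (changed pairs, n = {n_changed}) = {results[scale]['r_changed_pairs']:.3f}, "
        f"r (all pairs, n = {N_STUDENTS}) = {results[scale]['r_all_pairs']:.3f}"
    )
```

Under these assumptions, the changed-pairs reading rounds to the reported 0.33, 0.036, and 0.15, whereas dividing by the full sample of 39 gives slightly smaller values.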

Table 3 presents the Spearman correlation analysis, which revealed a significant and moderately strong relationship between pre- and post-COIL scores (ρ = 0.536, p < 0.001). Although an overall decrease in leadership self-perceptions was observed, this correlation suggests that students maintained their relative positions within the group. Nonetheless, it is essential to interpret this stability in the context of the qualitative findings.
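The interpretation of ρ as stability of relative positions follows from how the coefficient is built: it is the Pearson correlation of the ranks, so it ignores uniform shifts in level. A minimal standard-library sketch, with hypothetical function names and data, shows a group whose scores all drop while the ordering is preserved.

```python
import math


def average_ranks(values):
    """1-based ranks of `values`, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(ss_x * ss_y)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical case: every student scores lower after the experience,
# but the ordering of students is unchanged.
pre_scores = [4.75, 4.25, 4.00, 3.50, 3.00]
post_scores = [4.00, 3.75, 3.50, 3.25, 2.50]
print(f"rho = {spearman_rho(pre_scores, post_scores):.3f}")
```

In this constructed case ρ is at its maximum even though every score decreased, which is why a positive ρ and a downward trend in the Wilcoxon test are not in tension.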

To gain a deeper understanding of the reasons behind the decrease in leadership self-perceptions, students’ qualitative responses before and after the experience were analyzed. These responses revealed a consistency between their initial concerns and the challenges they encountered. The main operational and interactional challenges inherent to online international collaboration that impacted their leadership were:

Communication Barriers: Responses such as: “I am concerned about the lack of communication and direct interaction” (Participant 20243077, PRE_COIL6) indicate that “lack of communication” or “communication gaps” was an anticipated concern. This directly affects a leader’s ability to provide direction, motivate, and inspire the team. This issue was later confirmed in post-experience responses, for example: “The lack of constant communication […] the limited synchronous activity time” (Participant 20243077, P_COIL7).

Coordination Challenges: “Difficult organization,” “clashing schedules,” and the difficulty in “matching schedules” or “coordinating work and schedules” were mentioned as significant obstacles by several participants. These challenges complicated team interaction and limited collaboration. For example: “Difference in the pace of progress between the two countries and the limited interaction” (Participant 20220185, P_COIL9). Such issues posed a barrier to leadership, as they hindered the establishment of common goals, the organization of joint work, and the management of group processes.

Lack of Interaction: Statements such as “limited interaction,” “not being able to participate more,” and “lack of interest from the other side” suggest that the virtual environment may have constrained students’ ability to influence or assume active leadership roles. Leadership is strengthened through direct interaction, which enables motivating the team, resolving conflicts, and maintaining engagement. In this regard, one student noted: “Lack of good communication, lack of interest from the other side” (Participant 20243063, P_COIL9), highlighting how limited communication and perceived disinterest negatively affected group dynamics and the development of leadership competencies.

Challenges of Virtual Environments: The virtual environment itself, along with technical issues (e.g., microphone malfunctions) and the lack of in-person contact with users or peers, was identified as a significant factor limiting effective teamwork and decision-making. These conditions impacted students’ self-perception of leadership by constraining the exercise of key competencies, such as effective communication, prompt coordination, and maintaining team motivation. One student highlighted these challenges at the beginning of the process, noting “time availability […] distance” (Participant 20230136, PRE_COIL7), and reaffirmed them at the conclusion: “limitations due to distance. Scheduling conflicts” (Participant 20230136, P_COIL9). These findings suggest that the virtual context maintained a persistent influence on the development and expression of leadership skills throughout the experience.

Although the COIL experience did not drastically alter the relative ranking of participants, the inherent challenges of the virtual environment exerted a negative influence throughout the experience. These conditions limited the demonstration of essential competencies such as effective communication, timely coordination, and team motivation, and appear to have fostered a critical and more uniform adjustment toward a realistic—or even reduced—self-perception of leadership for some students.

Students initially rated themselves as highly capable leaders, and they largely maintained their relative positions within the group. However, as the difficulties anticipated in the pretest (communication, coordination, and remote interaction) materialized during the experience, their perception of how effective they truly were as leaders appears to have shifted, reinforcing the idea that contextual conditions directly constrained the enactment of leadership competencies.

4.2. Self-Regulation in Virtual Learning

This competency was assessed using a five-point Likert scale (1 = strongly disagree, 5 = strongly agree), consisting of five items that examined aspects such as time management, monitoring of academic goals, concentration and procrastination control, and the use of collaborative technological tools.

Table 4 shows that the Virtual Learning Self-Regulation scale (5 items) demonstrated high internal consistency in the pretest (α = 0.851; ω = 0.857) and acceptable consistency in the posttest (α = 0.726; ω = 0.747). Corrected item-total correlations ranged from 0.575 to 0.758 in the pretest and from 0.271 to 0.705 in the posttest. In both cases, removing any item did not substantially improve reliability, so all items were retained.

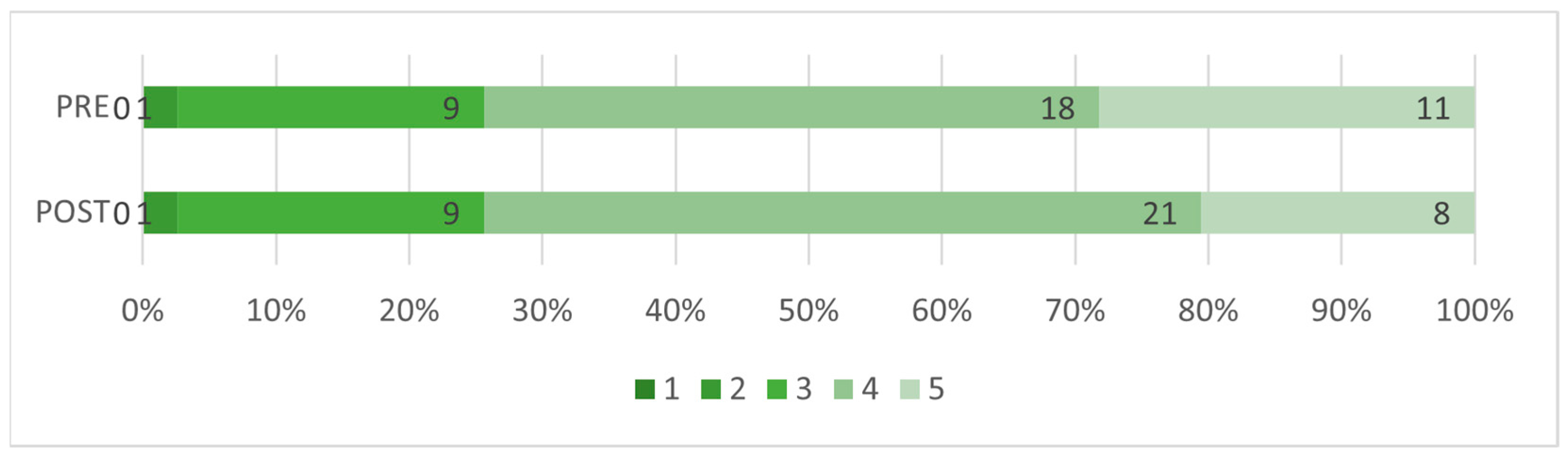

Figure 7 presents the frequency distribution for Self-Regulation in Virtual Learning. The results indicate a shift toward more moderate responses following the COIL experience, particularly among students who initially rated themselves at the highest levels on the scale. In the pretest, 11 students scored an average of 5 (“strongly agree”), 18 scored 4 (“agree”), and 9 scored 3 (“neither agree nor disagree”), reflecting a high self-assessment of their ability to manage learning effectively in virtual environments. In the posttest, however, only 8 students remained at the highest level, 21 were at 4, and the number at level 3 remained unchanged. This shift suggests that, after participating in the COIL experience, several students recalibrated their initial self-perception, adopting a more critical and realistic view of their self-regulation skills.

As with the other scales, the non-parametric Wilcoxon signed-rank test was used to assess changes in this competency.

Table 5 presents the results, showing no significant differences between pre- and posttest scores (Z = −0.214, p = 0.831), with a very small effect size (r = 0.036). Regarding individual score distributions, 41% of students scored lower on the posttest compared to the pretest, 51% showed increases, and 8% remained unchanged, consistent with the frequency analysis presented earlier. This balanced trend, together with the negligible effect size, suggests that the COIL experience did not produce consistent or sustained changes in students’ self-perception of self-regulation, although variability in individual trajectories was observed and will be analyzed further.

A Spearman correlation analysis was also conducted, with results presented in Table 6. A significant positive correlation was observed between pre- and posttest (ρ = 0.340, p = 0.034), indicating that higher pretest scores tended to correspond with higher posttest scores. Although the strength of the association is moderate, the finding suggests a consistent relationship between the two measurements.

Both tests indicate that the averages remained stable and changes in frequencies were minimal, suggesting that the COIL experience did not produce substantial variations in this competency at the group level. However, for the purposes of this study, it is necessary to examine individual changes and the factors that led to adjustments in this competency.

To better understand the decreases in self-regulation in virtual learning that some students reported after the COIL experience, open-ended responses from students with declining scores in this competency were categorized. The qualitative data highlight the factors that influenced self-regulation. The main barriers identified are presented below:

Autonomy and Proactivity: Self-regulation involves taking an active and autonomous role in the learning process. Within the COIL context, this dimension relates to the initiative to coordinate actions, achieve goals, and maintain engagement. One student noted: “There was no initiative to work on one side” (Participant 20240199, P_COIL9). This highlights that, in a virtual environment, the lack of proactivity among group members can negatively impact the motivation and workflow of others.

Organization and Collaborative Work Planning: Effective planning and organization of shared tasks are critical for maintaining self-regulation and were tested within the COIL environment. Participants highlighted challenges such as “Difficulty in organizing the workgroup” (Participant 20190165, P_COIL9) and “Lack of coordination among students” (Participant 20243077, P_COIL9), illustrating how insufficient planning or coordination of collaborative tasks affected project progress and generated frustration within the teams.

Adaptation and Management of Virtual Environment and Tools: Proficiency in collaborative digital platforms is a prerequisite for self-regulation within the COIL framework, as technical difficulties and the quality of virtual interaction directly affect task management. Responses such as “The use of virtual platforms does not allow for clear interaction among all participants” (Participant 20240205, P_COIL9) highlight the barriers posed by inadequate handling or difficulty adapting to virtual tools. These challenges undermined group members’ engagement and, for most participants, resulted in a negative perception of the COIL experience.

Time Management and Schedule Coordination: Self-regulation also requires effective time management and coordination of schedules. The COIL environment, with its time zone differences and local academic demands, posed a significant challenge in this dimension. Students reflected this in responses such as: “Some team members were working, and their availability was limited […] meetings were very short, and as a result, conversations were fleeting.” (Participant 20224114, P_COIL7). This illustrates how incompatible schedules and work commitments impacted planning and hindered consistent participation, negatively affecting other group members.

Although the quantitative results did not show statistically significant changes in self-regulation, the qualitative findings indicate that the COIL experience prompted a critical adjustment in several students’ self-perception of this competency. Many participants initially rated their abilities to organize time, maintain focus, and apply autonomous learning strategies highly. However, facing the real challenges of international collaborative work revealed that these skills could be constrained by contextual conditions. Barriers such as working in a virtual environment, lack of organization, limited initiative, and scheduling difficulties tested and strained this competency. As a result, some participants, particularly those whose scores decreased, adopted a more realistic and contextually grounded perception of their capacity to self-regulate in collaborative virtual learning environments.

4.3. Emotional Intelligence in Teamwork

In this competency, a slight shift toward more moderate responses was also observed following the COIL experience. To measure it, a Likert scale (1 = strongly disagree, 5 = strongly agree) was used, comprising five items related to emotional intelligence in teamwork. These items addressed the management of one’s own emotions, adaptation to others’ emotions, stress management, conflict resolution, and the perception of how teamwork affects academic performance.

The internal consistency of this five-item scale was low in the pretest (α = 0.57; ω = 0.60) and acceptable in the posttest (α = 0.76; ω = 0.77), as shown in Table 7. This increase may reflect the COIL experience, after which students’ responses were more consistent in evaluating their socio-emotional skills. Despite the low initial values, all items were retained to preserve the conceptual integrity of the scale and ensure comparability across measurement points, acknowledging this limitation when interpreting the results.

After categorizing the individual averages, a decrease was observed in the number of students at the highest scale level (5) from 16 to 13, along with slight increases at level 3 (from 4 to 5) and level 2 (from 0 to 1). This suggests that some participants reassessed their perception of the competency of emotional intelligence in teamwork. However, the majority remained at the medium-high levels of the scale (values 4 and 5), showing overall stability between pre- and post-experience results, as illustrated in Figure 8.

Emotional intelligence scores also violated the assumption of normality, so the Wilcoxon signed-rank test was applied. The analysis presented in Table 8 revealed no statistically significant differences between pre- and post-experience scores (Z = −0.877, p = 0.380), with a small effect size (r = 0.15). Regarding individual changes, 46% of students had lower posttest scores than pretest scores, 41% showed increases, and 13% remained the same. These results, showing no clear trend in the quantitative data, underscore the need for qualitative analysis to capture the nuances in students’ self-perception of emotional intelligence and teamwork following the COIL experience.

The Spearman correlation between PRE_IE_AVER and P_IE_AVER was positive but very low (ρ = 0.121) and not significant (p = 0.463), as shown in Table 9. This indicates that there is insufficient evidence to support a relationship between the scores obtained before and after the COIL experience for this variable. The low correlation suggests that individual changes are not consistently reflected in the quantitative results, highlighting the need for qualitative analysis to understand the reasons behind the variability in students’ perceptions of their emotional intelligence in teamwork.

It is particularly relevant to understand why some students who initially perceived themselves as having strong emotional skills for collaborative work reported a decline in self-assessment after the COIL experience. To explore this, the open-ended responses from the pre- and post-tests were analyzed comparatively, focusing on perceptions, barriers, and learning outcomes related to the development of emotional intelligence in teamwork during the COIL.

The qualitative findings indicate that poor communication, influenced by both human and technological factors, was a major challenge affecting students’ perceptions of their emotional intelligence competence. Limitations inherent to the virtual format, technical issues, and scheduling conflicts constrained opportunities for fluid interaction, timely feedback, and emotional support.

Several students highlighted how their initial expectations regarding cultural openness, idea exchange, and collaborative work were diminished in practice. For instance, participant 20243063 (PRE_IE_AVER = 4.4, P_IE_AVER = 3.8) shifted from expressing interest in “listening to experiences and learning about the culture of other places” (PRE_COIL6) to reporting “lack of good communication, lack of interest from the other side” (P_COIL9). Similarly, participant 20242041 (PRE_IE_AVER = 4.2, P_IE_AVER = 3.2) initially expressed a desire “to learn about their culture, lifestyle, how they learn, and how they approach architecture,” but ultimately reported a “communication gap, scheduling conflicts, and uncoordinated work” (P_COIL9).

These obstacles hindered not only cultural exchange but also empathy and group cohesion. Some participants even identified “inequality in participation” (participant 20243055, P_COIL9) as a factor negatively affecting the team’s emotional climate. Overall, the responses indicate that, while several students maintained a high perception of their emotional competencies, for others the COIL experience challenged these skills, leading to a more critical self-assessment of their ability to manage interpersonal relationships and communication in virtual, multicultural contexts.

4.4. Cross-Cutting Patterns and General Trends

The three competencies analyzed (leadership, self-regulation in virtual learning, and emotional intelligence in teamwork) remained within medium-to-high ranges of self-perception, although notable adjustments occurred in some cases. Students who initially rated themselves highly tended to moderate their scores, while those with lower initial ratings showed improvements. This pattern, common across all three competencies, reflects the impact of challenges such as communication barriers, remote coordination, time zone differences, the limitations inherent to virtual environments, and uneven participant engagement. Rather than simply serving as a space for improvement, the COIL experience functioned as a setting where expectations confronted reality, testing competencies in real contexts of international and virtual collaboration. This led to a more balanced, realistic, and context-sensitive perception of students’ own performance, alongside meaningful learning about working in global educational environments.

5. Discussion

The hypothesis proposed that participating in the COIL experience would enhance transversal competencies. However, examining the results across the three competencies (leadership, self-regulation in virtual learning, and emotional intelligence in teamwork) reveals a clear pattern: COIL fostered a more critical and context-aware perception of students’ own skills, serving as a valuable reality check. Overall, self-perceptions remained in the medium-to-high range, but a notable pattern of self-assessment calibration emerged: students with lower initial scores tended to improve, whereas those with higher initial ratings moderated their assessments. This trend suggests that COIL acted as a ‘testing ground’ where idealized expectations confronted the authentic challenges of international collaboration.

As Rodríguez Marconi et al. (2023) note, systemic competencies such as leadership and self-regulation are particularly challenged in virtual and intercultural contexts, where time management, decision-making, and remote coordination demand constant adaptation. In this study, the main barriers included poor communication, time zone differences, disparate work rhythms, and unequal participation. These challenges prompted students to develop problem-solving strategies and resilience, consistent with the observations of Catacutan et al. (2023).

Specifically, for leadership, initial ratings tended to moderate, consistent with literature describing intercultural virtual environments as “testing environments” (Macht et al., 2019; Cheung et al., 2022). Leadership was understood not only as initiative or decision-making but also as a practice demanding adaptation to diverse communication, negotiation, and coordination styles (Snider et al., 2024). Regarding self-regulation, the findings align with Zimmerman (2002) and de la Fuente et al. (2017) in highlighting its dynamic and adaptive nature. While overall averages remained stable, students who initially perceived themselves as less capable of managing time or working effectively in virtual settings showed improvement, consistent with reports by Asojo et al. (2019) and Ali et al. (2023).

For emotional intelligence in teamwork, reductions in initial ratings were associated with communication challenges and differences in participants’ commitment to collaborative work. This prompted a reassessment of students’ perceived capacity to cooperate and adapt, aligning with Goleman (2009) and Hebles et al. (2022), who emphasize that empathy and emotional management are strengthened when tested in real-world interaction and conflict-resolution scenarios.

Although quantitative changes did not always reach statistical significance, qualitative findings underscored the pedagogical value of the COIL experience by exposing students to real-world challenges requiring adaptation, negotiation, and uncertainty management. This process fostered a deeper critical awareness of their own competencies—a key learning outcome in itself. The observed phenomenon is not a failure to improve, but a valuable recalibration of self-perception, where students adjust their self-assessment criteria based on lived experience, shifting from abstract self-concepts to more realistic and contextually grounded evaluations of their skills.

Overall, the COIL experience played a dual role: it promoted the development of competencies in some students while prompting others to reevaluate their self-perception. This mixed effect indicates that learning in virtual and intercultural environments extends beyond skill acquisition to include essential processes of self-reflection and adjustment, preparing future architects for global and dynamic professional contexts. Moreover, the quality of pedagogical and organizational scaffolding, including communication protocols, role definitions, and instructor feedback, proved critical in ensuring that COIL serves as a competency accelerator rather than merely a mirror for performance evaluation.

The study included all enrolled students in Architectural Design Studio 2 (n = 39), ensuring representativeness within this specific academic context, although limiting broader statistical generalization. While the case study approach allows for a deep understanding of the Peruvian students’ experience in this COIL program, we acknowledge that the homogeneity of the sample (a single university and discipline) means that the findings should be interpreted with caution when extending them to other educational, disciplinary, or geographical contexts. During the post-test phase, partial data loss occurred among international participants (Mexican group), preventing binational comparisons. This omission goes beyond a simple lack of comparative data and limits a comprehensive understanding of how bilateral interaction patterns affect competency development. For instance, our qualitative findings revealing Peruvian students’ perceptions of a ‘lack of interest from the other side’ or ‘unequal participation’ cannot be contrasted with the Mexican perspective. This makes it impossible to discern whether these perceived barriers stemmed from different communication styles, logistical challenges unique to the Mexican group, or other variables inherent to their dynamic. Since the main objective was to assess the impact on Peruvian students, and complete data were retained for this group, the findings remain valid for the population of interest. Future studies should replicate this research with larger and more diverse samples to increase the external validity of the results, implement follow-up mechanisms to minimize data loss in multicultural contexts, and prioritize data collection from all collaborating groups to enable a richer dyadic analysis of the intercultural experience.

Additionally, the single-group pre–post design does not isolate the COIL effect from external factors, limiting causal inference. The initially low reliability of one scale constrains the robustness of comparisons, and responses are not fully independent, as they originate from seven teams, suggesting potential uncontrolled group effects. Finally, reliance solely on self-reports, without instructor observations or objective performance data, limits objectivity and may introduce social desirability bias.
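The non-independence concern can be made concrete with the Kish design effect, a standard approximation for clustered samples with roughly equal cluster sizes. The sketch below is illustrative only: the sample size (n = 39) and the number of teams (seven) come from this study, whereas the intraclass correlation (ICC) values are hypothetical placeholders rather than estimates from our data, and the function names are introduced here purely for illustration.

```python
def design_effect(icc: float, avg_cluster_size: float) -> float:
    """Kish design effect for clusters of roughly equal size: 1 + (m - 1) * ICC."""
    if not 0.0 <= icc <= 1.0:
        raise ValueError("icc must lie in [0, 1]")
    if avg_cluster_size < 1:
        raise ValueError("avg_cluster_size must be at least 1")
    return 1.0 + (avg_cluster_size - 1.0) * icc


def effective_sample_size(n: int, n_clusters: int, icc: float) -> float:
    """Approximate number of independent observations in a clustered sample."""
    avg_cluster_size = n / n_clusters
    return n / design_effect(icc, avg_cluster_size)


if __name__ == "__main__":
    # n = 39 students in 7 teams is taken from the study;
    # the ICC values below are hypothetical, chosen only to show the trend.
    for icc in (0.0, 0.05, 0.10, 0.20):
        n_eff = effective_sample_size(n=39, n_clusters=7, icc=icc)
        print(f"ICC = {icc:.2f} -> effective n = {n_eff:.1f}")
```

With an ICC of zero the effective sample size equals the nominal 39, and it shrinks as within-team similarity grows, which is why even modest team-level clustering can weaken the comparisons reported here.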

While this study focuses on the impact on students, it is crucial to acknowledge that the COIL experience also represented an intensive learning process for the faculty team. Faculty members often benefit as much as, if not more than, the students, as continuous planning and reflection on practice are inherent to the model. The identified challenges, such as communication and coordination barriers, served as data for the faculty’s pedagogical reflection, a process analogous to participatory action research where educators investigate and improve their own practice (Collett et al., 2024). This faculty learning is fundamental for refining future implementations and strengthening the pedagogical design.

Future research should employ quasi-experimental designs with comparison groups, include multilevel analyses that control for team membership, and apply validated scales with pre–post invariance testing. Extending the duration of COIL experiences is also recommended, alongside incorporating longitudinal measurements to observe sustained effects. Specifically, to address the temporal validity of the conclusions, future designs should go beyond a single post-test and conduct both ‘short-term tracking’ (e.g., one month after COIL concludes) and ‘long-term tracking’ (e.g., one semester after COIL concludes). This would make it possible to determine whether the impact of COIL on transversal competencies is ‘sustained’ over time. More importantly, given that our primary finding was a ‘calibration effect’ rather than a simple increase in skills, a longitudinal approach would allow researchers to explore if this more realistic and critical self-perception is a stable outcome. Such tracking could investigate whether this newfound awareness translates into targeted, tangible skill development in subsequent academic terms or if students’ self-assessments revert once the intensive intercultural experience has ended. Moreover, integrating objective performance indicators (instructor rubrics, peer co-evaluation, digital participation traces) and using mixed-method approaches with joint displays linking quantitative changes to qualitative categories would strengthen findings.
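One possible way to examine whether the calibration pattern persists is to split students by their baseline score and compare the mean change from baseline at each later measurement wave. The following is a minimal sketch under stated assumptions: the wave labels, the median split, the function name, and all scores are hypothetical and are not data from this study.

```python
from statistics import mean, median


def change_by_baseline(
    scores: dict[str, list[float]], baseline: str = "pre"
) -> dict[str, dict[str, float]]:
    """Mean change from baseline at each later wave, split at the baseline median.

    `scores` maps a wave label to per-student scores, listed in the same
    student order for every wave. Students at or below the baseline median
    form the "lower" group; the rest form the "higher" group.
    """
    base = scores[baseline]
    cut = median(base)
    groups = {
        "lower": [i for i, s in enumerate(base) if s <= cut],
        "higher": [i for i, s in enumerate(base) if s > cut],
    }
    result: dict[str, dict[str, float]] = {}
    for wave, values in scores.items():
        if wave == baseline:
            continue
        if len(values) != len(base):
            raise ValueError(f"wave {wave!r} has a different number of students")
        result[wave] = {
            name: mean([values[i] - base[i] for i in idx])
            for name, idx in groups.items()
            if idx  # skip an empty group (e.g. all baseline scores equal)
        }
    return result


# Hypothetical Likert-type scores for four students across three waves.
example = {
    "pre": [2.0, 3.0, 4.0, 5.0],
    "post": [3.0, 3.5, 3.5, 4.0],
    "followup": [3.0, 3.0, 4.0, 4.0],
}
summary = change_by_baseline(example)
```

In such a summary, changes that hold their size at follow-up would be compatible with a stable recalibration, whereas changes that shrink toward zero would point to reversion. A median split is a deliberately simple choice; a multilevel model with team membership as a grouping factor would be the more rigorous option.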

The findings suggest practical implications for maximizing COIL’s value in early architectural training. Recommended actions include establishing team agreements and clear roles from the outset; providing brief training in digital tools and intercultural management; scheduling mandatory synchronous checkpoints to ensure regular interaction; incorporating peer and instructor evaluations to promote equitable engagement; and delivering targeted feedback on situational leadership, emotional regulation, and conflict resolution. These measures can transform COIL from merely a setting for critical self-reflection into an environment that fosters sustained development of transversal competencies in future architects, while also preparing them for real international experiences.

6. Conclusions

The primary conclusion of this study is that the COIL experience led to a meaningful and beneficial critical adjustment in students’ self-perception. This ‘calibration effect’ was most evident in leadership and self-regulation. Students who began with highly optimistic self-assessments tended to moderate them after facing practical challenges (such as communication gaps and asynchronous work), while those with initially lower self-perceptions demonstrated improvement. This finding suggests that the main pedagogical value of this COIL experience was not only the development of competencies but, more importantly, the fostering of a more realistic, critical, and contextually grounded evaluation of students’ own abilities, which represents an essential step for genuine professional growth.

The main obstacles were the lack of synchronous interaction, communication gaps, and misalignment of schedules and work rhythms. These factors constrained the full exercise of competencies such as leadership and emotional intelligence, highlighting the need to design COIL experiences with more structured collaborative activities and tools that foster group cohesion and ensure balanced representation of participants from each nationality within groups.

Despite these challenges, students acknowledged that the experience enhanced their adaptability, resilience, and intercultural awareness. Working with international peers allowed them to navigate diverse communication and management styles—skills that are essential for their future professional practice in a globalized labor market.

The study suggests that COIL constitutes a valuable pedagogical strategy for strengthening transversal competencies in architecture students, although its effectiveness depends on specific adjustments in design and implementation. Greater emphasis is needed during the preparation phase, including training in digital tools and the establishment of clear group norms. Additionally, designing more structured synchronous interactions is necessary to foster trust and engagement among participants, and providing guided support and reflection sessions, such as tutorials, can help students manage challenges and critically reflect on the learning outcomes of the experience.