Abstract

Collaborative design is recognized as a promising approach to strengthening preservice teachers’ digital competence, yet its potential when design tasks approximate the complexities of classroom practice remains underexplored. This mixed-methods study investigated a Synthesis of Qualitative Evidence (SQD)-aligned collaborative design course in which 23 final-year preservice secondary mathematics teachers, organized in six teams, spent ten weeks designing technology-enhanced lesson materials for authentic design challenges posed by in-service teachers. Using questionnaires and interviews, this study explored preservice teachers’ perceived digital competence development and their perceptions of the SQD-aligned course supports. Regarding competence development, participants indicated increases in self-assessed cognitive (technological knowledge, TPACK) and motivational (technology-integration self-efficacy, perceived ease of use) digital competence dimensions. Qualitative findings linked these perceptions to heightened technological awareness and confidence but noted limited tool mastery due to reliance on familiar technologies and efficiency-driven task division. Concerning course supports, authentic challenges enhanced motivation and context-sensitive reasoning, while layering scaffolds (guidelines, coaching, and microteaching feedback) supported navigating the open-endedness of the design task. Yet calls for earlier feedback and technology-related modeling underscore the need for further scaffolding to adequately support autonomy in technology selection and integration. Findings inform teacher education course design for fostering preservice teachers’ digital competencies.

1. Introduction

The rapid digitalization of education, exemplified by policies such as the 2020 Flemish “Digital Leap”1, is reshaping the educational landscape. To navigate these policy demands and design meaningful learning experiences, teachers require robust digital competencies that empower them to make context-sensitive, pedagogically sound decisions about technology integration. Teacher education plays a pivotal role in developing these competencies in preservice teachers (PSTs), as the quality of technology preparation significantly influences how they implement technology in their future classrooms (Tondeur et al., 2012). One widely adopted approach in teacher education to foster these competencies is engaging PSTs in collaborative design (CD) (Alayyar & Fisser, 2019; Wilson et al., 2020), which Voogt et al. (2015) define as situations in which “teachers create new or adapt existing curricular materials in teams to comply with the intention of the curriculum designers and with the realities of their context” (p. 260). Research has demonstrated that CD can strengthen PSTs’ technological pedagogical content knowledge (TPACK) (Wilson et al., 2020), pedagogical beliefs (Chai et al., 2017), technology-related motivation (Liu et al., 2024), self-efficacy (Lee & Lee, 2014), design capacities (Chai et al., 2017; Liu et al., 2024), and problem-solving skills (Liu et al., 2024). Furthermore, a substantial body of research has investigated how to support PSTs as novice designers in these contexts. The Synthesis of Qualitative Evidence (SQD) model proposed by Tondeur et al. (2012), which comprises six micro-level strategies (instructional design, collaboration, authentic experiences, role models, feedback, and reflection), has been shown to be particularly effective (e.g., Agyei & Voogt, 2015; da Silva Bueno & Niess, 2023; Lachner et al., 2021).
Despite these insights, important questions remain regarding under which design ecologies (e.g., available resources, levels of guidance, and task structures) CD is most effective in fostering digital competencies (Baran & AlZoubi, 2024; Papanikolaou et al., 2017; Wilson et al., 2020).

A common trend observable in the existing CD literature has been to deliberately simplify the design space for PSTs as novice designers, for example, by pre-selecting tools, providing structured technical training, or using decontextualized, fictitious design tasks. While such scaffolding undoubtedly facilitates the design process, it simultaneously obscures the complex realities they will face when making technology-integration decisions in their future practice, including infrastructural limitations, diverse learner needs, institutional policies, an ever-broadening technological landscape, and multifaceted pedagogical classroom challenges (Jordan, 2016; Nguyen & Bower, 2018). Consequently, there remains limited empirical insight into whether and how CD environments that more closely approximate authentic classroom contexts can effectively foster PSTs’ digital competencies, and whether SQD’s benefits hold when design tasks are both ill-structured and authentically bounded. Against this backdrop, we report phase 1 of a design-based research program aimed at exploring whether and how more authentic, ill-structured CD contexts, supported by the SQD strategies, can cultivate PSTs’ digital competencies. Over ten weeks, preservice mathematics teachers collaborated in teams to design technology-enhanced lesson materials in response to authentic design challenges (ADCs) posed by in-service teachers. Drawing on pre- and post-intervention questionnaires and follow-up semi-structured interviews, this study examines (1) how PSTs perceive changes in their digital competencies and (2) which specific features of the intervention they consider most supportive or constraining in their design processes. By doing so, the study aims to provide nuanced insights into how CD can be structured to better prepare future educators for the complex, context-dependent challenges inherent in contemporary technology integration.

1.1. Digital Competencies

Digital competencies are essential for educators to effectively integrate technology into their teaching practice (Backfisch et al., 2023; Scherer & Teo, 2019). In this study, we focus on three foundational components of digital competence: professional knowledge, self-efficacy, and attitudes, each of which has demonstrated established relevance for fostering successful technology integration among PSTs (Farjon et al., 2019; Scherer & Teo, 2019). From a knowledge perspective, the Technological Pedagogical Content Knowledge (TPACK) framework of Mishra and Koehler (2006) is the most widely recognized model to capture the complexity of professional knowledge needed for meaningful technology integration. Extending Shulman’s (1986) concept of pedagogical content knowledge (PCK), TPACK asserts that it is not sufficient for teachers to develop technological knowledge (TK), pedagogical knowledge (PK), and content knowledge (CK) in isolation; rather, they must be able to integrate these domains in specific classroom contexts to design and enact effective technology-enhanced learning (Mishra & Koehler, 2006).

Self-efficacy, rooted in Bandura’s (1977, 2001) social cognitive theory, refers to individuals’ beliefs in their capability to organize and execute actions to achieve specific goals. In the context of technology integration, it reflects teachers’ confidence in their ability to select, master, and use technological tools effectively to meet pedagogical objectives (Ertmer, 2005; Q. Wang & Zhao, 2021). Rather than reflecting actual skills, self-efficacy emphasizes perceived competence, which has been shown to strongly predict teachers’ intentions, persistence, and classroom adoption of technology (Anderson et al., 2012). Recent large-scale evidence further confirms this link: early-career teachers with stronger general self-efficacy and ICT literacy developed higher technology integration self-efficacy during the pandemic, which subsequently drove greater ICT use in their post-pandemic classrooms (Paetsch et al., 2023). Similarly, Afari et al. (2023) found that preservice teachers’ computer self-efficacy, particularly their basic and pedagogical technology skills, was a significant predictor of both traditional and constructivist ICT use, highlighting the importance of strengthening self-efficacy to foster meaningful technology integration. Consequently, fostering high self-efficacy is crucial for encouraging PSTs to confidently engage with digital tools in practice.

Attitudes toward digital technologies play a significant role in shaping teachers’ adoption behaviors (e.g., Scherer & Teo, 2019; Teo, 2008). They can be understood as individuals’ predispositions to respond favorably or unfavorably to an object, person, or event (Aslan & Zhu, 2017). In this study, we focus on two key dimensions: general attitudes toward digital technologies (GATT) and perceived ease of use (EASE). General attitudes reflect overall openness, interest, and willingness to engage with digital technologies, regardless of specific pedagogical contexts (Aslan & Zhu, 2017; Evers et al., 2009). In contrast, perceived ease of use captures teachers’ beliefs about how straightforward and manageable it is to adopt and apply technological tools effectively (Scherer et al., 2018; Teo, 2008). Both positive general attitudes and high perceived ease of use have been associated with greater competence in designing technology-rich learning environments and supporting PSTs’ digital skills (Tondeur et al., 2018). Notably, recent findings indicate that ease of use is particularly critical, as it strongly relates to technological knowledge and remains an area where many PSTs continue to require support, especially when working in unfamiliar educational contexts (Merjovaara et al., 2024).

The development of digital competence is a gradual, multifaceted process that extends far beyond isolated workshops or single training moments. It requires sustained cultivation of professional knowledge and motivational dispositions over time (Falloon, 2020; Ilomäki et al., 2016). While much of this growth takes place through sustained, practice-based engagement with technology, establishing strong foundational competencies early on is crucial. Teacher education programs therefore play a pivotal role in enhancing future teachers’ confidence, intention, and capacity to integrate technology effectively (Howard et al., 2021; Lachner et al., 2021; Scherer & Teo, 2019), thereby laying the groundwork for meaningful and sustained technology integration throughout their careers (Aslan & Zhu, 2017; Gill & Dalgarno, 2017).

1.2. The Synthesis of Qualitative Evidence Model

Several strategies have been proposed to support PSTs’ development of digital competencies (Wilson et al., 2020). To synthesize evidence on successful approaches, Tondeur et al. (2012) conducted a review of qualitative studies and proposed the Synthesis of Qualitative Evidence (SQD) model. This model outlines six micro-level strategies essential for preparing PSTs for effective technology integration: instructional design, collaboration, authentic experiences, role models, feedback, and reflection.

Instructional design involves providing PSTs with structured opportunities to engage in the design of technology-integrated curriculum materials. As PSTs generally have little experience in designing instructional content (Agyei & Voogt, 2015), effective scaffolding is essential to guide their design process (Baran & AlZoubi, 2024; McKenney et al., 2022). Collaboration encompasses sharing technological knowledge and experiences and jointly developing technology-enhanced lesson activities. This strategy has been shown to alleviate feelings of insecurity (Lee & Lee, 2014) while providing a safe environment to exchange ideas and explore diverse perspectives (Howard et al., 2021; Nguyen & Bower, 2018). PSTs should also be offered authentic experiences in which they can apply their knowledge of digital technologies in real or simulated educational settings, allowing them to experience the educational advantages of technology and grow their practical TPACK (Alayyar & Fisser, 2019; Valtonen et al., 2015). Furthermore, teacher educators acting as role models offer valuable observational learning opportunities, allowing PSTs to witness effective, pedagogically sound technology use in practice (Polly et al., 2020), which has been shown to encourage the adoption of similar instructional behaviors and approaches (Ellis et al., 2020). Feedback, in both formative and summative formats, plays a vital role in helping PSTs enhance their digital competencies (Tondeur et al., 2021). Finally, reflection involves critically analyzing one’s own experiences with (designing for) technology integration, including perceived successes, challenges encountered, and personal attitudes toward educational technology, thereby fostering deeper understanding and informing future practice (Howard et al., 2021; Kimmons et al., 2015).

Since the release of the model, multiple scholars have examined relationships between SQD strategies and PSTs’ digital competencies, particularly their development of TPACK. Cross-sectional studies have demonstrated moderate to strong associations between the perceived availability of SQD strategies and self-reported TPACK (Baran et al., 2019; Howard et al., 2021; Tondeur et al., 2018). Intervention studies further show that courses explicitly integrating all SQD strategies tend to yield stronger gains in technology-integration self-efficacy, TPACK, and positive attitudes toward technology use (e.g., Buchner & Hofmann, 2022; Christensen & Trevisan, 2023; da Silva Bueno & Niess, 2023; Hsu & Lin, 2020; Lachner et al., 2021). For example, Hsu and Lin (2020) reported particularly strong effects for reflection and instructional design in a four-week module for preservice language teachers, while Buchner and Hofmann (2022) demonstrated that holistic implementations were more effective than partial approaches. Other studies echo these findings across diverse contexts, such as a semester-long mathematics teaching practice course in Brazil (da Silva Bueno & Niess, 2023), subject-specific TPACK modules in Germany (Lachner et al., 2021), and a technology integration course in the United States (Christensen & Trevisan, 2023). Collectively, these studies suggest a growing consensus that comprehensive, multi-strategy implementations of the SQD model are more effective than isolated applications. This aligns with earlier work by Tondeur (2018), one of the original authors of the SQD model, who identified teacher design teams as a particularly promising context for integrating all SQD strategies in preservice teacher education.

1.3. Engaging Preservice Teachers in Collaborative Design

Across the literature, terms such as “Learning by Design” (Koehler & Mishra, 2005), “Collaborative Design” (Voogt et al., 2015), “Teacher Design Teams” (Handelzalts, 2019), and “lesson planning” (Wilson et al., 2020) describe approaches in which (preservice) teachers collaboratively design educational materials. While each term has its own nuances, they all build on the notion of teaching as a design science (Laurillard, 2012), positioning teachers as reflective practitioners who iteratively improve their practice through inquiry and shared reflection. Such practices are considered promising for fostering teachers’ digital competencies, as they enable engagement in socially situated learning, promote agentic ownership over (re)shaping one’s practice, and support learning through iterative cycles of testing and redesign (Voogt et al., 2015).

Given its potential for in-service contexts, many scholars have also explored CD in preservice teacher education to foster technology integration (e.g., Backfisch et al., 2023; da Silva Bueno & Niess, 2023; Lachner et al., 2021; Ma et al., 2024; Nguyen & Bower, 2018). For instance, Nguyen and Bower (2018) identified that tutor support, technological capabilities, and group collaboration strongly influenced PSTs’ co-development of Moodle-based courses. Similarly, Ma et al. (2024) found that intra- and inter-group online collaboration modes were essential for preservice TESOL (Teaching English to Speakers of Other Languages) teachers to holistically develop TPACK using a learning-by-design approach grounded in corpus-based language pedagogy. Backfisch et al. (2023) found that motivational heterogeneity in PSTs’ design groups fostered richer discussions and higher-quality lesson plans, while knowledge heterogeneity hindered individual TPACK development, illustrating that group composition can simultaneously enhance product quality and impede individual learning. Finally, Agyei and Voogt (2015) supported Ghanaian PSTs in collaboratively learning to integrate spreadsheet software, showing that scaffolding authentic experiences and feedback from try-outs substantially enhanced their integration competencies. Collectively, these studies contribute to a richer understanding of how to prepare teachers for designing technology-enhanced learning activities. Nonetheless, scholarly work in this area remains limited (Papanikolaou et al., 2017; Voogt et al., 2015), highlighting the need for further innovative approaches to deepen insights into supporting PSTs’ design capabilities.

Arguably, the enactment of CD across research contexts varies considerably in the extent to which it approximates authentic design practices. By nature, design entails tackling ill-structured, context-bound problems that demand the exploration of multiple solutions, the reconciliation of competing constraints, and iterative refinement in continuous dialogue with users and settings (Jordan, 2016). Within technology-enhanced learning, this means that teachers must make strategic decisions about how and when to integrate digital tools to serve specific pedagogical goals, while simultaneously navigating school-specific realities such as infrastructural limitations, learner diversity, and institutional policies (Nguyen & Bower, 2018). However, in preservice CD research, this inherent complexity is often deliberately simplified to accommodate PSTs’ status as novice designers. One common approach is to pre-select technological tools and provide focused technical training before engaging PSTs in design activities (e.g., spreadsheet software in Agyei & Voogt, 2015). In addition, design tasks are often situated in fictitious or decontextualized scenarios (e.g., Lachner et al., 2021; Nguyen & Bower, 2018; Ma et al., 2024), allowing PSTs to make unconstrained design choices and bypass the contextual realities that fundamentally shape decision-making in authentic classroom settings, including timetabling, infrastructure, and student characteristics. Further variation concerns the authenticity of the design products: some studies confine tasks to lesson blueprints (e.g., Ma et al., 2024; Nguyen & Bower, 2018), while others have PSTs create and implement learning materials, either in microteaching simulations (e.g., Agyei & Voogt, 2015; Lachner et al., 2021) or authentic classroom contexts (da Silva Bueno & Niess, 2023). 
Although all of these forms of scaffolding are pedagogically justifiable to mitigate cognitive overload, situating CD more firmly within the everyday work of teaching may help PSTs not only better understand (Ruthven, 2009), but also become better prepared to navigate the complex real-world challenges of incorporating technologies into classroom practice (Banas & York, 2014; Lee & Lee, 2014). Nevertheless, it remains underexplored whether and under which supportive ecologies of resources, guidance, and task structures CD settings can effectively foster PSTs’ digital competencies (Baran & AlZoubi, 2024), especially when they more closely mirror the authentic design conditions encountered by in-service teachers (e.g., tool autonomy, contextual realism, material development, and implementation opportunities), which forms the focus of this study.

1.4. Research Questions

This pilot study forms part of a broader design-based research project aimed at preparing PSTs for technology integration in an increasingly digitalized educational context. Addressing gaps in understanding how SQD strategies can be holistically implemented within authentic CD settings that reflect in-service teaching realities, the study examines final-year preservice mathematics teachers’ experiences in a ten-week CD intervention. Designed to approximate real-world design conditions (e.g., tool autonomy, student diversity, infrastructural limitations), the intervention holistically integrated all six SQD strategies and tasked participants with developing technology-enhanced learning materials for authentic design challenges (ADCs) posed by in-service teachers. The research questions guiding this study are as follows:

- RQ1. How do preservice teachers’ perceived digital competencies change during an SQD-aligned, authentic collaborative design course?

- RQ2. How do preservice teachers perceive specific SQD-aligned course elements (i.e., instructional design, collaboration, reflection, feedback, authentic experiences, role models) in supporting their technology-related design processes?

2. Materials and Methods

A complementary mixed-methods design (Creswell & Clark, 2017) was employed to provide a comprehensive understanding of PSTs’ perceived growth in digital competencies and their experiences within a collaborative design intervention intentionally incorporating the six SQD strategies. Quantitative data were collected to assess perceptions of digital competence development and the presence of SQD strategies, while semi-structured interviews further illuminated participants’ perceptions of their competence development and how specific SQD-aligned course elements supported their design processes.

2.1. Collaborative Design Intervention

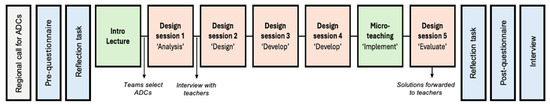

A 10-week CD intervention was embedded in a compulsory ICT in Mathematics Education course in the penultimate semester of a Flemish teacher training program. The 23 participants comprised the full cohort of prospective secondary mathematics teachers entering their final year; while course participation was mandatory, participation in this study’s measurements (questionnaires and interviews) was voluntary and had no bearing on course results. The intervention was co-designed with two mathematics teacher educators and explicitly incorporated the six SQD strategies (see Table 1), drawing on insights from CD literature (Ma et al., 2024; Nguyen & Bower, 2018; Yeh et al., 2021). In total, the intervention comprised seven in-person sessions of 3–4 h each: an introductory lecture, five design sessions, and a microteaching session (see Figure 1).

Table 1. Overview of SQD strategy implementation in the intervention.

Figure 1. Visualization of the CD intervention.

2.1.1. Participants, Teams, Authentic Design Challenges, and Designed Solutions

The participants in this study were 23 final-year preservice secondary mathematics teachers (Mage = 22.4 years, SDage = 3.03), with nine identifying as male and fourteen as female. As they were in the final year of their three-year teacher training program, their digital competencies had already been partially developed through various program components, including a course on general tools (e.g., Padlet, H5P), social media, and interactive whiteboards, as well as a separate mathematics-specific course focused on GeoGebra. Additionally, each participant had completed two two-week internships, through which, given the increasing digitalization of Flemish education, they had gained some initial experience in integrating technology into their teaching. For the intervention, PSTs organized themselves into six design teams of three to four members.

The six design teams were each tasked with designing learning materials for an authentic design challenge (ADC): a real-world instructional challenge submitted by an in-service mathematics teacher through a regional call (see Appendix A for examples). PSTs were granted full agency over their design choices, including the selection of technological tools, instructional methods, format, and scope. This autonomy was guided by two key criteria: technology integration had to add instructional value, and solutions needed to be aligned with the classroom context of the associated mathematics teacher. The ADC approach was deliberately integrated to reflect the complexity and real-world significance of educational practice, drawing on the characteristics of authentic learning environments identified by Herrington et al. (2014), who underscore the value of situated, ill-defined, and complex tasks that require sustained investigation and culminate in polished, practically valuable products. In this way, the ADCs provided a realistic, situated context for developing technology-enhanced learning materials intended for actual classroom implementation. Appendix A provides a detailed overview of each team’s composition, selected ADC, and designed solution.

2.1.2. Procedure of Intervention

The intervention commenced with an introductory lecture designed to prepare PSTs for the CD process. The session first contextualized the ongoing digitalization of Flemish secondary education (e.g., the Digital Leap2) and highlighted the increasing demand for strong digital competencies among teachers across Europe (e.g., DigCompEdu framework). Drawing on a prior self-reflection assignment, PSTs were invited to assess and discuss their own digital competencies in small groups, surfacing initial beliefs and identifying areas for further growth. Next, the pedagogical potential of technology for mathematics education was discussed, facilitated by insights from studies conducted by the authors with in-service Flemish mathematics teachers, detailing their most frequently used digital tools and their underpinning pedagogical rationales (Cabbeke et al., 2022, 2024). Building on these insights, a curated set of tools, categorized according to McCulloch et al.’s (2018) framework, was introduced. From each category (e.g., dynamic mathematical environments, learning management systems, instructional software, assessment tools), one representative tool was demonstrated, providing concrete illustrations of pedagogically grounded technology use, including brief hands-on explorations. Additional tools were presented in an overview to broaden PSTs’ awareness of possible resources. Finally, the pedagogical integration of technology was emphasized through the introduction of the TPACK framework (Mishra & Koehler, 2006) and the 4E framework (Cabbeke et al., 2024). In groups, PSTs critically reflected on their prior technology-rich experiences using these frameworks, discussing pedagogical value and intended learning impact. The session concluded with the formation of design teams and the selection of an ADC to guide their collaborative work.

Following this, PSTs engaged in five structured design sessions guided by the ADDIE model (Gustafson & Branch, 1997). As novice designers, they were supported with detailed guidelines via Microsoft Sway that outlined structured activities (Appendix B), templates, an overview of tools, and prompts to encourage pedagogically sound decision-making (Nguyen & Bower, 2018; McKenney et al., 2022). Such scaffolding aimed to support design decision-making and prevent overlooking essential design steps (Baran & AlZoubi, 2024; Nguyen & Bower, 2018). Teams maintained design journals in Google Docs to consolidate ideas, organize resources, and document reflections. To anchor their work in authentic classroom practice, each team interviewed the in-service teacher associated with their selected ADC, deepening their understanding of the instructional problem, student characteristics, and contextual constraints. Collaboration was facilitated by scheduling face-to-face design sessions. To optimize collaborative dynamics, explicit guidelines in the design journals acted as scaffolds for interaction, reflection, and workload planning, aimed at promoting communication, knowledge exchange, and equitable task distribution (Nguyen & Bower, 2018; Yeh et al., 2021).

Feedback was continuously integrated: the first author served as the main coach, providing just-in-time support on design processes, practical concerns, and technology integration, while mathematics teacher educators were present to offer on-demand guidance on subject-specific teaching matters. Coaching alternated among teams, and to manage this effectively, the six design teams were divided across two parallel sessions during the semester. Furthermore, reflection activities were embedded at each phase, guided by frameworks such as TPACK (Mishra & Koehler, 2006) and the 4E framework (Cabbeke et al., 2024), encouraging PSTs to critically evaluate their design decisions and approaches to technology integration.

To simulate real-world application, a microteaching session was organized between the fourth and fifth design sessions, in which teams presented a prototype of their design to peers and coaches and received feedback to inform final refinements. After incorporating this feedback and completing final adjustments during the fifth and final design session, teams submitted their final designs to the in-service teacher linked to their ADC. Table 1 summarizes how each SQD strategy was operationalized throughout the intervention.

2.2. Data Collection

2.2.1. Quantitative Data Collection

To examine perceived growth in digital competencies (RQ1), five validated scales were administered before (pretest) and after (posttest) the intervention: Technological Knowledge (TK), TPACK Core, Technology Integration Self-Efficacy (TISE), General Attitudes toward Technology (GATT), and Ease of Use (EASE). The TK scale captures teachers’ general technological proficiency (Schmidt et al., 2009). The TPACK Core scale focuses on the intersection of content, pedagogy, and technology, providing a concise measure of teachers’ ability to integrate technology meaningfully into subject teaching (Fisser et al., 2013). The TISE scale (L. Wang et al., 2004) measures PSTs’ self-efficacy beliefs in selecting, implementing, and adapting technology to support instructional goals. Attitudes toward technology were assessed using two scales: the GATT scale (Evers et al., 2009), which includes items related to overall openness and affective responses to digital technologies (including interest, enjoyment, and perceived usefulness), and the EASE scale (Scherer et al., 2018; Tondeur et al., 2020), which captures perceived effortlessness in using digital technologies.

For RQ2, the SQD-6D scale (Tondeur et al., 2016) was used pre- and post-intervention to assess PSTs’ perceptions of six support strategies, represented as six subscales: instructional design (DES), collaboration (COL), authentic experiences (AUT), feedback (FEE), role models (ROL), and reflection (REF).

Participants indicated their agreement with statements reflecting perceived support in their teacher training across the six SQD domains. Knezek et al. (2023) demonstrated that the six subscales have strong reliability and can be used as independent measures.

All measures were rated on a five-point Likert scale (1 = strongly disagree, 5 = strongly agree). Example items, item counts, and reliability estimates (Cronbach’s α) for each (sub)scale are presented in Table 2. Reliability estimates ranged from 0.70 to 0.94, considered “respectable” to “very good” according to DeVellis and Thorpe’s (2021) guidelines, though they should be interpreted cautiously given the relatively small sample size (Taber, 2017). Nevertheless, the reliability and suitability of these scales have been consistently confirmed in large-scale studies with PSTs (e.g., Evers et al., 2009; Schmidt et al., 2009; Tondeur et al., 2016; L. Wang et al., 2004), supporting their robust application in this research context.
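For readers who want to see how a reliability estimate of this kind is obtained, the following is a minimal sketch of the standard Cronbach’s α formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores). It is not the procedure used in this study, which relied on SPSS; the function name `cronbach_alpha` and the response matrix `demo` are hypothetical and serve illustration only.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    Uses sample variances (n - 1 denominator) for both the items and the
    summed scale score.
    """
    k = len(responses[0])  # number of items in the (sub)scale
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items.")
    if any(len(row) != k for row in responses):
        raise ValueError("Every respondent must answer every item.")

    # Transpose so that each tuple holds all respondents' scores on one item.
    items = list(zip(*responses))
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical five-point Likert responses: 5 respondents x 3 items.
demo = [
    [4, 4, 5],
    [3, 3, 4],
    [5, 4, 5],
    [2, 3, 3],
    [4, 5, 4],
]
alpha = cronbach_alpha(demo)
print(f"alpha = {alpha:.2f}")
```

With a sample as small as the one in this study, an estimate computed this way is sensitive to individual respondents, which is consistent with the caution expressed above.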

Table 2. Overview of scales, item counts, example items, and reliability estimates (Cronbach’s α).

2.2.2. Qualitative Data Collection

Semi-structured interviews were conducted following the final design session to examine PSTs’ perceptions of their digital competence development and the support encountered during the CD process. At the start and end of the intervention, PSTs completed reflection tasks on their digital competencies, with the post-task additionally addressing perceived changes. Insights from these reflections informed the first part of the interview protocol, which focused on perceived development. Moreover, to identify remaining development needs, PSTs were asked whether and what additional initiatives (e.g., courses, training) they would need within their teacher training program to further develop their digital competencies. The second part explored perceived supportive or constraining course elements, with targeted prompts if the six SQD strategies did not emerge spontaneously. The semi-structured format enabled in-depth exploration of emergent themes while ensuring consistency across participants (Brinkmann & Kvale, 2018). All 23 participants were interviewed in Dutch (M = 43 min); the interviews were conducted and recorded online via Microsoft Teams and transcribed verbatim. To minimize social desirability bias and foster openness, the dual role of the first author as coach and interviewer was explicitly clarified, with emphasis on the fact that the coach was not involved in formal evaluations or grading decisions. Direct quotations rendered in English were included to illustrate key themes.

2.3. Data Analysis

2.3.1. Quantitative Data Analysis

Quantitative data were analyzed using IBM SPSS Statistics (version 29). For each scale, the distribution of pre–post difference scores was screened for normality using the Shapiro–Wilk test, which confirmed normality (p > 0.05). Paired-samples t tests were conducted to examine differences in PSTs’ perceived digital competencies (RQ1) and perceptions of support strategies (RQ2). Effect sizes (Cohen’s d) were calculated to assess the magnitude of changes. Given the absence of a control group, the analyses cannot be taken as evidence of causal impact but are treated as indicative of potential effects of the course and as quantitative markers interpreted in conjunction with, and further explored through, the qualitative analyses. Interpretation is situated within the semester context, which included no concurrent ICT-related courses or formal internships, thereby narrowing, though not eliminating, alternative explanations.
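For readers who wish to reproduce this type of analysis outside SPSS, the sketch below shows the core arithmetic of a paired-samples comparison: difference scores, the t statistic, its degrees of freedom, and an effect size. It is an illustration under stated assumptions, not the authors’ procedure. In particular, the effect size shown is the d_z variant (mean difference divided by the standard deviation of the differences), which is only one of several paired-design definitions of Cohen’s d and may differ from the variant reported in this study. The function name `paired_summary` and all scores are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def paired_summary(pre, post):
    """Return mean change, t statistic, degrees of freedom, and Cohen's d_z."""
    if len(pre) != len(post):
        raise ValueError("pre and post must hold one score per participant")
    diffs = [b - a for a, b in zip(pre, post)]   # posttest minus pretest
    n = len(diffs)
    m = mean(diffs)
    sd = stdev(diffs)                            # sample SD (n - 1 denominator)
    return {
        "mean_diff": m,
        "t": m / (sd / sqrt(n)),                 # paired-samples t statistic
        "df": n - 1,
        "d_z": m / sd,                           # assumed effect-size variant
    }

# Hypothetical scale means for five participants (illustrative only).
pre = [3.0, 3.5, 2.5, 4.0, 3.0]
post = [3.5, 4.0, 3.5, 4.0, 3.5]
result = paired_summary(pre, post)
```

Note that t equals d_z multiplied by the square root of n, so with small samples a large effect size is needed to reach significance. The p value and the Shapiro–Wilk screening of the difference scores are omitted here; in Python they could be obtained with, for example, `scipy.stats.ttest_rel` and `scipy.stats.shapiro`.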

2.3.2. Qualitative Data Analysis

Qualitative data were analyzed using thematic analysis (Terry et al., 2017) to identify patterns and themes in PSTs’ reflections on digital competence development and supportive or constraining elements of the design process. Analysis of digital competence development (RQ1) was primarily inductive (i.e., shaped by the content of the interview), whereas analysis of supportive course elements (RQ2) adopted a deductive stance, guided by the theory of the SQD model. Coding and theme development were initially conducted by the first author and iteratively discussed and refined with the research team to ensure credibility and minimize potential bias. NVivo software facilitated data organization, coding, and analysis processes (Edwards-Jones, 2014). Qualitative findings were triangulated by comparing emerging themes with quantitative trends, providing a comprehensive understanding of how the intervention contributed to PSTs’ digital competence development and how the SQD strategies functioned as supportive or hindering mechanisms in the CD course.

3. Results

3.1. RQ1: Perceived Changes in Digital Competencies

We conducted paired-samples t tests to examine changes in PSTs’ digital competencies from pretest to posttest (see Table 3). Statistically significant increases were observed in TK, TPACK, TISE, and EASE, with moderate to large effect sizes (Cohen’s d). These increases serve as preliminary, exploratory indicators of possible developments in knowledge, skills and confidence in using and integrating technology over the semester. No significant difference was found in GATT, indicating that general attitudes toward technology remained stable. These quantitative patterns are further examined in the qualitative analysis, which offers a more nuanced account of how PSTs perceived and experienced these developments during the design process.

Table 3.

Means, standard deviations, and results of paired-samples t tests (n = 23) on digital competence measures.

3.1.1. Theme 1: Expanded Technological Awareness and Motivational Gains, Yet Selective Tool Integration Mastery

Most PSTs (20/23; 87.0%) reported a substantial increase in their awareness of technological possibilities for mathematics education. This awareness was attributed to exposure during the introductory session and the freedom to explore tools throughout the design process. Many described realizing, often for the first time, the broad range of technological options available to support mathematics teaching and learning. As Nathan (Team 1) noted, “During that first lesson I realized I didn’t use much ICT in my internships… I realized that you could do so much more (…) there are so many apps that you can use.”

Nearly half of the PSTs (12/23) also indicated that the freedom to explore technologies helped them realize new technologies were less difficult to learn than they had initially feared, boosting their confidence and sense of self-efficacy. Hannah (Team 1) explained, “Before (the course), I was afraid and thought ‘I can’t do much with ICT’. (…) Whether I will use lots of ICT from now on, that I don’t know yet. But now I’m like: ‘I know I can work with ICT, so I don’t need to be afraid of it’. I pretty much overcame that, the fear of using ICT.”

When discussing their attitudes, PSTs referred to insights from their preparatory reflection tasks. All PSTs indicated that they already held generally positive views on the value of technology in mathematics education before the course and felt this stance largely remained. However, about nine PSTs explicitly mentioned that they had become more critical and reflective about when and how technology truly adds value. As Lisa (Team 3) described, “I already thought ICT could be useful, but I became more critical about whether you really need a tool or if it’s better to stick to pen and paper. You should ask: does the tool really offer an advantage, or is it just a distraction from the learning?” Meanwhile, three other PSTs noted that they now realized how much time and effort it takes to develop technology-based materials, which made them somewhat more apprehensive about integrating technology in their future teaching.

Despite broad awareness and generally positive motivational dispositions, only 13 PSTs (56.5%) explicitly reported gaining in-depth mastery of the tools they adopted, both in terms of technological functionalities (TK) and their pedagogical application in math education (TPACK). They attributed this deeper knowledge to active hands-on experimentation, dealing with unexpected challenges, and finding ways to leverage tools’ affordances for pedagogical intentions. As Mark (Team 4) described, “I thought it was good to learn what’s out there and then have the time to explore it further on our own later. By diving deeper into the tools we used, I think we learned much more (…) because you have to figure things out yourself, run into challenges, make adjustments, and find ways to make it work.”

3.1.2. Theme 2: Efficiency-Driven Division of Development Tasks

Building on Theme 1, which described selective tool mastery among some PSTs, further analysis showed that many PSTs considered the way they divided development tasks among team members as a potential factor that might have limited their opportunities for digital competence growth. Across all six teams, PSTs described dividing tool-related development tasks after agreeing on their design direction. In four teams, responsibilities were distributed relatively evenly, with different pairs engaging with different tools and developing parallel expertise, though with minimal crossover between members. In the remaining two teams, one or two technologically proficient members assumed primary responsibility for nearly all tool-related tasks, while others focused on activities such as sourcing open educational resources (e.g., GeoGebra applets), drafting manuals, or updating progress reports. As a result, some PSTs had no hands-on experience with any tools during the development stage. As Rick (Team 5) described, “They liked to put that with me because I already knew the tool, so it was the most time-efficient option. But in the end, I think I was the only one who learned to use new software.”

PSTs generally described their division of tasks as the most practical and efficient way to make progress within time constraints. As Thomas (Team 4) noted, “That’s the thing with group work. It’s always intended for everyone to learn from each other, but in the end, the work gets divided, and the quickest way is chosen.” In addition, some PSTs noted that certain tools did not support real-time collaborative editing across different accounts or devices, which further encouraged individualized rather than shared engagement. These accounts illustrate that, even though PSTs initially explored tools collectively during design ideation, hands-on engagement during the development phase ultimately remained confined to specific tools and individuals. Overall, PSTs perceived this efficiency-driven division of labor as a sensible strategy for making progress on their designs but also recognized it as a missed opportunity for more comprehensive tool mastery among all team members.

3.1.3. Theme 3: Familiarity

Another factor several PSTs (7/23) explicitly noted across different teams as potentially limiting their digital competence development was their strong reliance on familiar tools. These PSTs described how known tools made it easier to envision and design solutions, while adopting new tools was perceived as too time-consuming and uncertain. As Thomas (Team 4) explained, “It’s just much easier with something like, for example, GeoGebra, where you know exactly what you can do with it. I know I can work with vectors, link things together… and from there, your ideas grow. But with something you’re not familiar with, it might be interesting, but… yeah.” Moreover, other teams initially ventured into using novel tools but ultimately reverted to familiar options after encountering practical constraints. For example, Team 3 began with Symbaloo to create personalized learning paths but found the tool’s features too limited to support this concept, leading them to switch to Padlet and ultimately abandon the idea of personalization altogether. As Steven (Team 3) summarized, “Symbaloo just didn’t work the way we envisioned. In the end, we chose Padlet because it was simpler and we knew it would function reliably.” Similarly, Team 6 started with Classmaster.io but faced restrictions, such as a limited number of flashcards they could create, which prompted a return to the more familiar H5P. As Carol (Team 6) explained, “Classmaster.io quickly became impractical. Going back to H5P felt like a safer and more efficient choice.” Analysis confirmed this broader tendency, as only two teams ultimately integrated novel tools (e.g., Team 1: Microsoft Sway, Team 4: Gather) into their final designs, and all teams included at least one familiar tool. 
Together with the unequal division of development tasks to more tech-savvy team members (Theme 2), these patterns underscore the difficulties PSTs experienced in exploring, selecting, and integrating new technologies, suggesting a need for more structured guidance. This conclusion is further reinforced by PSTs’ answers on remaining training needs in their program: 18 out of 23 PSTs indicated a desire for more in-depth, tool-specific initiatives, such as subject-specific tool workshops (16/23), individual tool assignments or assessments (5/23), and program-wide portfolios (3/23).

3.2. RQ2: Perceived Support of SQD-Based Course Elements

We conducted paired-samples t tests to examine changes in PSTs’ perceptions of the SQD strategies (see Table 4). Given that PSTs were not enrolled in other ICT-related courses during the semester in which the CD course was implemented, it can be assumed that observed changes reflect experiences within this course. Significant increases were found for design, collaboration, reflection, and feedback, indicating that these strategies were salient and perceived as integrated. In contrast, no significant changes were observed for authentic experiences and role models. Notably, the increase for role models approached significance, suggesting variability in whether this strategy was perceived among PSTs. While these findings indicate which strategies were most visible to PSTs, they provide limited insight into how these elements supported or constrained their design processes. To explore this further, we draw on themes from the qualitative analysis.

Table 4.

Means, standard deviations, and results of paired-samples t tests for pre- and posttest scores on SQD-6D measures.

3.2.1. Theme 4: Complementary Layers of Guidance

PSTs consistently described how different supports worked together to guide them across the design process. Rather than relying on a single source, they emphasized how the combination of structured templates, just-in-time coaching, and microteaching feedback helped them manage the task’s open-endedness. At the core of this support system were the design guidelines provided via Microsoft Sway (SQD: instructional design), which most PSTs (19/23) described as helpful in structuring their sessions and reducing uncertainty. As Lindsay (Team 6) noted, “Without the Sway, I would have no clue on how to start.” Similarly, Chelsea (Team 1) reflected, “To be honest, I’m super positive because I remember feeling worried that we weren’t going to receive much guidance (…) But those Sways provided clear steps that we could follow should we get stuck or should we have no idea on how to get started.” Teams engaged with these materials in different ways: some used them sequentially as a step-by-step guide, while others treated them more flexibly as a checklist to monitor progress and ensure that essential design steps were addressed.

Complementing these guidelines, PSTs (17/23) valued just-in-time coaching as support they could draw on when unexpected questions or challenges arose. They described it as particularly useful for addressing tool-related issues or design decisions not covered by the guidelines, but also for prompting them to think beyond their initial ideas. As Samuel (Team 4) reflected, “We were set on just using instructional videos, but then we were asked how that would actually help students learn. It made us rethink our plan and come up with something more creative.” Others noted how the presence of a coach helped resolve uncertainties within their teams. Noah (Team 2) recalled, “I remember disagreeing on how the teacher should guide students if we used learning paths, but the guidance helped us figure out what role made sense.” Several PSTs (6/23) also appreciated knowing their progress was being monitored, which reassured them that they would be “steered back on track if needed” (Elena, Team 4). In contrast, support from the teacher educator on subject-specific issues was rarely sought (2/23). As final-year PSTs, most (18/23) felt responsible for resolving content-related challenges themselves. Ruth (Team 2) explained, “We only had one small question about a congruency rule. But mostly, we figured it out ourselves—that’s what we’ve been trained to do.”

PSTs (17/23) also identified the microteaching session as a meaningful form of guidance, offering the opportunity to test prototypes and receive peer and instructor input (SQD: feedback). This moment helped them validate design choices, identify areas for improvement, and refine their materials based on targeted suggestions. However, 13 PSTs suggested that the feedback came rather late in the process, advocating for an additional feedback moment earlier to confirm or revise initial design ideas and gain inspiration from peers from other teams. As Mila (Team 2) explained, “We were advised to add audio-bites (…) but it would have taken too much time to still make those. It would’ve been better to hear that earlier.”

3.2.2. Theme 5: Engagement with Authentic Contexts

All PSTs (23/23) reported that the ADCs enhanced their motivation and effort, with 17 noting they invested greater time and care knowing their work would be used by a real teacher. As Nathan (Team 1) reflected, “If we just had fictive cases, it would just feel like an assignment. Now you really help someone.” This connection to real-world school contexts was further reinforced through interviews with in-service teachers, which 15 PSTs described as valuable for clarifying the problem, understanding classroom conditions and prior attempts, and aligning their designs with the teacher’s needs. Moreover, engaging with such concrete classroom conditions (e.g., student needs, technological limitations) was seen by 13 PSTs as realistic preparation for their future teaching practice. As Ricky (Team 3) reflected: “Without that [authentic context] we might have had even more decisions to make. But now the context was specified, and so were our options […] this is exactly something we’ll need to be able to do if we want to use ICT in the future […] if the school doesn’t have tablets, then the school doesn’t have tablets […] these restrictions push you to think of ‘Okay, within this context, what is most useful?’” Echoing this view, 16 PSTs noted that such contextual characteristics prompted deeper reflection on what and how digital tools could be meaningfully adopted (SQD: reflection). Similarly, 10 PSTs explained that the parameters set by their ADC helped them manage the openness of the design task and guided their design decisions (SQD: instructional design). Two contrasting cases, however, underscored the importance of calibrating how classroom conditions shape PSTs’ design agency: one team struggled with a brief that they described as too vague to guide their design direction, while another team felt limited by a teacher’s preference for game-based learning, which they felt restricted their creativity. 
Finally, despite the authenticity afforded by the ADCs, a few PSTs (3/23) noted that the absence of full classroom implementation beyond microteaching limited their ability to assess the practical impact of their designs.

3.2.3. Theme 6: Scaffolding Technology Integration

PSTs consistently highlighted the value of support structures that facilitated the exploration and integration of digital tools, while also signaling, often indirectly, a need for more scaffolding, suggesting that the autonomy in technology integration was not always easy to navigate. For instance, beyond its practical benefits for dividing tasks (see Theme 2), collaboration was widely valued for idea generation; 16 out of 23 PSTs emphasized how peer exchange enriched their design thinking, leading to more innovative and well-developed solutions (SQD: collaboration). However, three PSTs noted that working in homogeneous teams, composed entirely of peers from the same teacher education program, sometimes constrained idea diversity related to technology-enhanced learning. This need for broader input was echoed in 13 PSTs’ calls for earlier peer feedback opportunities (see Theme 4), which were not only aimed at validating their ideas but also at gaining exposure to other teams’ technology-related knowledge bases (SQD: feedback).

PSTs’ strong appreciation for initial tool familiarization (see Theme 1) further underscores the importance of early support. In this context, the tool demonstrations provided during the introductory session (SQD: role models) were interpreted in two distinct ways. Ten PSTs found the brief demonstrations and hands-on exposure to tools appropriate, aligning with the ill-structured, problem-based nature of the task. In contrast, seven PSTs expressed that they would have benefited from more in-depth modeling, including step-by-step technical walkthroughs and more elaborate pedagogical examples to better understand how tools could be used in practice. As Flora (Team 6) explained: “I find it quite difficult to work with apps or websites that I don’t know very well. In those first lessons, certain apps were covered, but for me, it would have been more useful if there had been someone who demonstrated step-by-step how you use a specific tool.” This divergence in needs may help explain the near-significant effect for modeling (p = 0.07) observed in the quantitative data.

4. Discussion

This mixed-methods study examined whether a CD course grounded in authentic design challenges could foster final-year preservice mathematics teachers’ digital competencies (RQ1) and how SQD-aligned course elements (RQ2) supported or constrained their design for technology-enhanced learning. Regarding RQ1, quantitative results showed moderate to large gains in both cognitive (TK, TPACK) and motivational (EASE, TISE) dimensions, while general attitudes toward technology (GATT) remained stable, likely reflecting already positive baseline dispositions. While causality cannot be inferred given the study design, the observed differences can be regarded as exploratory trends that align with prior research demonstrating CD’s potential to strengthen both technology-related knowledge bases and confidence (e.g., Fu et al., 2022; Lachner et al., 2021; Ma et al., 2024). Moreover, qualitative evidence suggested that these changes largely reflected increased awareness of technology integration in mathematics rather than deep mastery of specific tools (TK) or their pedagogically meaningful application (TPACK), reported only by a subset of PSTs. Two factors appeared to constrain more substantive growth. First, PSTs frequently defaulted to familiar tools when novel options seemed risky, impractical, or time-intensive, consistent with prior evidence on (preservice) teachers’ risk aversion and conservative tool selection under time pressure, uncertainty about technology’s value, and low confidence in new tools (Howard, 2013; Ottenbreit-Leftwich et al., 2018; Rowston et al., 2021). 
Second, during the development phase, efficiency-driven task allocation, where tool-related work was divided in parallel or delegated to more proficient members, signaled a shift from collaboration, defined as mutual engagement in joint problem solving, toward cooperation, characterized by parallel work and division of labor (Dillenbourg, 1999), a shift exacerbated by limited synchronous co-development affordances in digital tools. Overall, while CD effectively enhanced both cognitive and motivational dispositions, these findings underscore the need for additional scaffolding to promote deeper, more equitably distributed expertise, as further explored in the discussion of RQ2.

Regarding RQ2, quantitative results showed significant gains for design, collaboration, reflection, and feedback, indicating these SQD strategies were most salient, while authentic experiences and role models remained less visible. Qualitative findings elaborated on these patterns, yielding three dominant themes on perceived support. First, PSTs emphasized the value of layered guidance as complementary supports for navigating open-ended design tasks: Guidelines structured sessions, reducing uncertainty and preventing premature progression, while coaching responded to unforeseen challenges, offered help beyond the guidelines, prompted PSTs to rethink initial ideas, and supported negotiation within teams. Their strong appreciation for these supports aligns with calls for substantial guidance to offset PSTs’ limited design experience (Baran & AlZoubi, 2024; Jonassen, 2010). These findings also echo those of Wu et al. (2019), who showed, in the context of STEM learning design, that static scaffolding relieves novices of procedural burden by emphasizing key steps and principles, while adaptive scaffolding regulates emergent issues, fosters reflection and negotiation, and promotes flexibility. Wu et al. (2019) recommend combining these scaffold types to balance structure with creative inquiry, an interplay mirrored here. Additionally, PSTs’ calls for earlier peer feedback during ideation to confirm their design ideas and draw inspiration from other teams underscore the importance of scaffold timing. For novices, such feedback can validate early directions, surface overlooked alternatives, and support incremental refinement, with research showing how early and iterative feedback fosters reflection and more coherent design development (L. J. Xu et al., 2025) and how inter-group peer feedback can add new perspectives, improve lesson design, and foster TPACK development (Ma et al., 2024). 
Together, these findings highlight how both the layering and temporal placement of scaffolds are critical in supporting novice designers.

Second, our findings show that embedding PSTs’ design work in authentic classroom contexts, through ADCs and interviews with in-service teachers, fostered motivation, sustained effort, and encouraged reflection on designing technology-enhanced learning that accounted for real classroom conditions. This offers empirical support for calls to integrate authentic contexts into teacher education to make design tasks more engaging (Jia et al., 2018; Nguyen & Bower, 2018) and to better prepare prospective teachers to face practical challenges such as classroom management, technical issues, and contextual constraints (Dockendorff & Zaccarelli, 2024; Nguyen & Bower, 2018). These authentic conditions also helped focus PSTs’ decision-making, a process further reinforced through interviews that offered context-specific insights and deepened their understanding of classroom realities. As such, the interviews in our course align with the function of needs assessments described by M. Xu et al. (2022), supporting PSTs in refining problem definitions and engaging in grounded, needs-driven decision-making. At the same time, our findings highlight the importance of calibrating task openness. While contextual constraints productively narrowed the design space, overly vague briefs created uncertainty, and overly prescriptive ones restricted agency. This reflects Jonassen’s (2010) view that design problems, though inherently ill-structured, vary in structuredness and require careful monitoring for novice designers. Similar issues have been observed in prior work, where collaboration with in-service teachers produced tasks that were either too easy or overly demanding, challenging PSTs’ ability to manage complexity (Jia et al., 2018). 
Finally, authenticity did not emerge as a significant SQD strategy in our quantitative data, indicating that while designing for authentic design contexts was valuable, further experiential learning through classroom implementation beyond simulations such as the microteaching used in this study may be beneficial. This aligns with He and Yan’s (2011) argument that the artificiality of microteaching can limit the development of real-world teaching competence, underscoring the need to complement it with richer school-based experiences.

Third, the findings highlight a need for additional scaffolding in technology integration processes. While collaboration, modeling, and feedback were valued supports, consistent with the SQD model (Tondeur et al., 2012), PSTs’ calls for earlier peer feedback to gain inspiration, requests for more extensive tool modeling, and reliance on familiar tools or expertise-based task divisions collectively suggest that navigating autonomy in technology integration was challenging and warrants stronger scaffolding. One well-established approach to fostering productive collaboration in CD is to equip (preservice) teachers with requisite knowledge prior to design activities (Yeh et al., 2021), often through modeling pre-selected digital tools combined with tool-specific workshops. Such preparation can address knowledge gaps within teams (Vogel et al., 2022) and mitigate potential drawbacks of team heterogeneity (Backfisch et al., 2023). However, it remains an open question whether modeling is most effective when centered on specific tools or whether it would also hold benefits if directed toward technology-integration decision-making processes, such as the exploration, selection, and integration of digital tools. While tool-specific modeling has demonstrated effectiveness (Tondeur et al., 2012), it risks constraining creativity and diminishing the problem-based nature of design, as PSTs may be inclined to replicate demonstrated lesson ideas rather than develop their own (Agyei & Voogt, 2015). By contrast, scaffolding decision-making processes may better support PSTs’ technology integration efforts (Yeh et al., 2021), equipping them with the skills needed to navigate and integrate unfamiliar tools both within CD contexts and, ultimately, when facing the complexities of technology integration in their future classrooms. 
Clarifying how modeling can be most effectively leveraged to build PSTs’ confidence and capacity in technology integration, therefore, represents an important avenue for future research.

This study advances research on the holistic application of the SQD model (e.g., Buchner & Hofmann, 2022; da Silva Bueno & Niess, 2023; Lachner et al., 2021) by demonstrating how its six strategies, when embedded in authentic CD contexts, can interact to support PSTs’ digital competence development. The findings illustrate that these strategies function dynamically rather than in isolation: engaging PSTs in CD (‘instructional design,’ ‘collaboration’) within authentic contexts (‘authentic experiences’) prompted ‘reflection’, while ‘collaboration,’ ‘feedback,’ and ‘modeling’ appeared to jointly support design efforts (‘instructional design’), albeit with indications that additional scaffolding is needed. In doing so, the study contributes empirical evidence to ongoing scholarly work on the dynamic interactions between SQD strategies (e.g., Howard et al., 2021; Tondeur et al., 2021). Furthermore, by adopting a qualitative lens, this study responds to calls for fine-grained analyses of how SQD strategies unfold in practice (Baran et al., 2019; Buchner & Hofmann, 2022). For instance, it highlights how collaboration can facilitate design ideation but also risks devolving into task division, and how feedback, though valued, might be more impactful if introduced earlier in the design process to guide ideation. Such findings underscore that the effectiveness of SQD strategies is contingent on how they are operationalized, sequenced, and situated within course contexts, and they illustrate the value of qualitative inquiry for unpacking how these strategies shape PSTs’ technology integration. Lastly, this study refines understanding of the interplay between authenticity and scaffolding in CD within teacher education. 
It theorizes that while designing CD courses to approximate in-service teachers’ work (e.g., tool autonomy, contextual constraints, pedagogical complexity) can promote engagement and situated reflection, such authenticity also introduces design complexity and ambiguity that necessitates carefully calibrated scaffolding (e.g., regulating task openness, technology-related modeling) to support novices in managing ill-structured design problems.

Practically, this study demonstrates the value of using SQD as a framework for designing technology-focused teacher education courses and underscores the importance of carefully structuring and sequencing its strategies. For design-based courses, this study offers the following recommendations:

- Anchor design tasks in authentic contexts. Situate design work in real classroom contexts through authentic pedagogical challenges to promote motivation, ownership, and context-sensitive reasoning. Task realism and agency should be carefully calibrated. Incorporating classroom prototyping may provide further opportunities for experiential learning.

- Support novice designers through layered scaffolding. Combine static scaffolds (e.g., stepwise design guides), which structure sessions and reduce uncertainty, with dynamic scaffolds (e.g., just-in-time coaching), which address emerging challenges, prompt idea refinement, and support team negotiation.

- Embed feedback loops throughout the design process. Courses could integrate early and iterative feedback to expose teams to diverse ideas and validate their design directions. For example, inter-team reviews have been shown to promote deeper learning and strengthen design skills, outcomes, and TPACK (Ma et al., 2024).

- Scaffold technology-integration processes. When given autonomy in tool selection and integration, PSTs often revert to familiar technologies. To lower entry barriers, scaffolds can constrain autonomy (e.g., limiting tool options or pedagogical formats) or build knowledge prior to design engagement. Familiarization through modeling and exploration (Tondeur et al., 2025), operationalized via targeted workshops, demonstrations, or case-based sessions, may enrich homogeneous, limited knowledge bases and promote more balanced team member participation (Backfisch et al., 2023; Yeh et al., 2021).

- Monitor equitable collaboration opportunities. In this study, technology-related development work was unevenly allocated. Efficiency-driven division of labor may be mitigated through brief check-ins to monitor task distribution and by setting explicit collaboration expectations, particularly in heterogeneous teams (Aalto & Mustonen, 2022; Nguyen & Bower, 2018). When digital platforms restrict simultaneous use, identical task division cannot be guaranteed; teams should instead ensure equitable engagement in artefact development, either in parallel (e.g., different tools, sandbox copies, stand-alone sub-artefacts) or consecutively (e.g., rotating edit rights).

- Foster both pragmatic and ambitious forms of technology integration. Collaborative design may risk reinforcing the perception that technology integration is time-consuming and labor-intensive. To counter this, courses could foreground efficient, low-threshold tools (e.g., polling apps, interactive videos, pre-built GeoGebra applets) alongside more complex instructional designs (e.g., digital escape rooms, sequenced learning paths). Attending to efficiency may be essential for fostering both positive attitudes and future engagement with technology integration, as efficiency logics often underpin teachers’ most valued technology-integration practices (Cabbeke et al., 2024; Hughes et al., 2020).

This study is not without limitations. Its small sample, single-institution context, and focus on secondary mathematics limit generalizability, while the absence of a control group precludes strong causal claims. Moreover, the small sample size reduces statistical power, warranting cautious interpretation of the quantitative results (de Winter, 2013). The results should therefore be understood as exploratory indicators, complemented and contextualized by the qualitative analyses. Furthermore, outcomes were primarily assessed through self-report and short-term measures, constraining insight into long-term retention or classroom transfer. Additionally, the dual role of the first author as both coach and interviewer, despite mitigations, may have introduced subtle bias. The small sample also restricted the examination of team-level factors, as teams engaged with different authentic design challenges and exhibited varied collaborative patterns that may have shaped learning processes and outcomes. This study further relied on PSTs’ perceptions of support, offering insight into how SQD strategies were experienced but not necessarily into their pedagogical efficacy. While PSTs’ preferences identified perceived gaps in support, such perceptions may undervalue the role of productive struggle, such as navigating cognitively demanding, open-ended design decision-making, which may be integral to developing technology-integration expertise. 
Nonetheless, these voices highlight important avenues for further research, including examining the influence of team-level factors (e.g., collaboration patterns, team composition, topic complexity), as well as conducting experimental studies on scaffold optimization (e.g., timing of peer feedback, comparisons of tool-specific versus process-oriented approaches to technology-integration modeling) and investigations into the optimal calibration of authenticity (e.g., varying the specificity of design briefs or integrating design–enactment cycles). Such work would deepen understanding of how SQD strategies can be most effectively orchestrated within authenticity-approximating CD contexts, ultimately advancing the knowledge base on how to prepare PSTs for the complexities of technology-rich teaching.

5. Conclusions

This study indicates that engaging preservice teachers in an SQD-aligned collaborative design course designed to mirror the design realities of in-service teachers, including tool autonomy, classroom constraints, and instructional challenges, may enhance both the cognitive (TK, TPACK) and motivational (TISE, EASE) dimensions of digital competence. However, these gains might primarily reflect increased awareness and confidence rather than deep tool mastery, which was limited by PSTs’ reliance on familiar technologies and efficiency-driven task division. The findings also showcase SQD as a dynamic support ecology in which the sequencing and temporal enactment of its micro-level strategies can both enable and constrain learning. Authentic design challenges appeared to foster motivation, effort, and context-sensitive reflection and decision-making, while layered scaffolding through structured guidelines, adaptive coaching, and microteaching supported engagement with open-ended design tasks. PSTs’ calls for earlier feedback and clearer technology-related modeling, together with their limited adoption of novel tools, highlight the need to better understand how preservice teachers can be supported to manage autonomy in technology-integration processes. Collectively, these insights highlight that the effectiveness of SQD hinges not merely on the presence of its strategies but on their careful and context-sensitive implementation, which is essential to fully realizing their potential in preparing preservice teachers for the complexities of technology integration.

Author Contributions

Conceptualization, B.C. and B.A.; methodology, B.C., B.A. and T.S.; software, B.C.; data curation, B.C.; formal analysis, B.C.; investigation, B.C.; visualization, B.C.; writing—original draft, B.C.; writing—review and editing, B.C., B.A., T.R., and T.S.; supervision, T.S. and T.R.; project administration, B.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This research was conducted in accordance with the Declaration of Helsinki and the ethical rules presented in the General Ethical Protocol of the Faculty of Psychology and Educational Sciences of Ghent University.

Informed Consent Statement

Informed consent was obtained from all participants.

Data Availability Statement

Requests for anonymized data may be made to the authors.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| PSTs | Preservice teachers |
| CD | Collaborative design |
| ADC | Authentic Design Challenge |
| TPACK | Technological Pedagogical Content Knowledge |
| TK | Technological Knowledge |
| SQD | Synthesis of Qualitative Evidence |
| TISE | Technology-integration Self-efficacy |
| EASE | Perceived ease of technology use |
| GATT | General Attitudes Toward Technology |
| ICT | Information and Communication Technologies |

Appendix A

Table A1.

Overview of design teams: team size, authentic design challenges, and designed solutions.

| Team | Size | Authentic Design Challenge | Designed Solution |

|---|---|---|---|

| 1 | 3 | Students with limited ICT and Dutch skills struggle to apply data analysis concepts (e.g., visualization, central tendency). | Self-directed exercises on data collection (Google Forms) and analysis (Google Sheets) with Microsoft Sway tutorials and YouTube videos enhance understanding and reflection. |

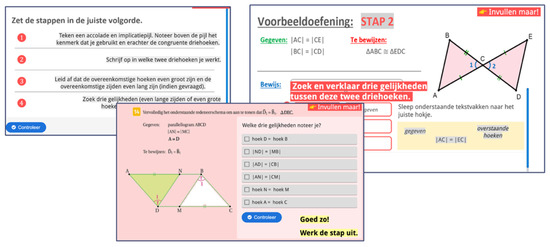

| 2 | 4 | Students with low math achievement and limited Dutch proficiency struggle to understand and prove triangle congruency. | A two-part lesson series using GeoGebra and PowerPoint for visual instruction, followed by H5P learning paths with step-by-step guidance on congruency proofs (Figure A1). |

| 3 | 4 | Students with varying fraction operation skills need differentiated support. | A Padlet learning path with BookWidgets exercises and videos allows advanced students independent practice, freeing the teacher to support students needing extra help. |

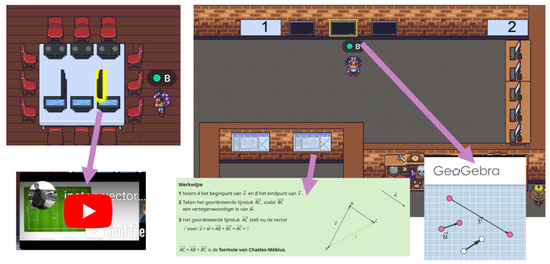

| 4 | 4 | Students with low math proficiency and motivation struggle with independent vector addition learning. | A Gather virtual escape room uses videos, GeoGebra exercises with feedback, and summary posters. Completing exercises unlocks codes to progress through rooms (Figure A2). |

| 5 | 4 | Students find core arithmetic and estimation repetitive and irrelevant; an engaging, self-paced ICT solution is needed. | A “Game of the Goose”-inspired online board game on Tabletop, paired with offline estimation and arithmetic games. H5P-based exercises to activate prior knowledge. |

| 6 | 4 | Students display varied mastery in integer operations; fast learners are bored, slower learners need more practice. | Physical Snakes and Ladders-inspired board game for practicing integer operations. Students use H5P to solve differentiated exercise sets and use an interactive video to learn the game rules. |

Figure A1.

Snapshots of Team 2’s interactive learning path on H5P on proving triangle congruency.

Figure A2.

Snapshot of Team 4’s escape room on Gather on vector addition.

Appendix B

Table A2.

Overview of the collaborative design course.

| Process | ADDIE | Structured Activities |
|---|---|---|
| Introduction | / | |
| Session 1 | Analysis | |
| Interview | Analysis | |
| Session 2 | Analysis | |
| | Evaluation | |
| | Design | |
| | Analysis | |
| Session 3 | Evaluation | |
| | Develop | |
| Session 4 | Develop | |
| | Implement | |
| Microteaching | Implement | |
| | Evaluation | |
| Session 5 | Evaluation | |
| | Design | |
| | Develop | |

Notes

| 1 | The Flemish “Digisprong” (Digital Leap) initiative (Flemish Government, 2020), launched in 2020, allocated approximately €385 million (nearly twelve times the usual annual ICT budget) to bolster digital equity in Flemish education. It aimed to provide one digital device per pupil from the fifth year of primary school through secondary education, significantly support school ICT infrastructure (e.g., connectivity, security, software), and enhance teachers’ digital competencies and resources. |

| 2 | See note 1. |

References

- Aalto, E., & Mustonen, S. (2022). Designing knowledge construction in pre-service teachers’ collaborative planning talk. Linguistics and Education, 69, 101022.

- Afari, E., Eksail, F. A. A., Khine, M. S., & Alaam, S. A. (2023). Computer self-efficacy and ICT integration in education: Structural relationship and mediating effects. Education and Information Technologies, 28, 12021–12037.

- Agyei, D. D., & Voogt, J. (2015). Preservice teachers’ TPACK competencies for spreadsheet integration: Insights from a mathematics-specific instructional technology course. Technology, Pedagogy and Education, 24(5), 605–625.

- Alayyar, G., & Fisser, P. (2019). Human and blended support to assist learning about ICT integration in (preservice) teacher design teams. In J. Pieters, J. Voogt, & N. Pareja Roblin (Eds.), Collaborative curriculum design for sustainable innovation and teacher learning. Springer.

- Anderson, S. E., Groulx, J. G., & Maninger, R. M. (2012). Relationships among preservice teachers’ technology-related abilities, beliefs, and intentions to use technology in their future classrooms. Journal of Educational Computing Research, 45(3), 321–338.

- Aslan, A., & Zhu, C. (2017). Investigating variables predicting Turkish preservice teachers’ integration of ICT into teaching practices. British Journal of Educational Technology, 48(2), 552–570.

- Backfisch, I., Franke, U., Ohla, K., Scholtz, N., & Lachner, A. (2023). Collaborative design practices in preservice teacher education for technological pedagogical content knowledge (TPACK): Group composition matters. Unterrichtswissenschaft, 51(4), 579–604.

- Banas, J. R., & York, C. S. (2014). Authentic learning exercises as a means to influence preservice teachers’ technology integration self-efficacy and intentions to integrate technology. Australasian Journal of Educational Technology, 30(6), 728–746.

- Bandura, A. (1977). Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review, 84(2), 191–215.

- Bandura, A. (2001). Social cognitive theory: An agentic perspective. Annual Review of Psychology, 52(1), 1–26.

- Baran, E., & AlZoubi, D. (2024). Design thinking in teacher education: Morphing preservice teachers’ mindsets and conceptualizations. Journal of Research on Technology in Education, 56(5), 496–514.

- Baran, E., Canbazoglu Bilici, S., Albayrak Sari, A., & Tondeur, J. (2019). Investigating the impact of teacher education strategies on preservice teachers’ TPACK. British Journal of Educational Technology, 50(1), 357–370.

- Brinkmann, S., & Kvale, S. (2018). Doing interviews. SAGE Publications Ltd.

- Buchner, J., & Hofmann, M. (2022). The more the better? Comparing two SQD-based learning designs in a teacher training on augmented and virtual reality. International Journal of Educational Technology in Higher Education, 19, 24.