1. Introduction

The speed with which Artificial Intelligence (AI) has been introduced into educational institutions, particularly higher education institutions, has been unprecedented compared to any other technology. Such rapid adoption has generated significant challenges from the outset. Concerns have arisen in training institutions and among teachers, especially regarding academic integrity and ethical issues, as well as biases introduced by AI (Sullivan et al., 2023). From the beginning, scholars have highlighted two recurrent measures to address these challenges: (a) updating evaluation methods and institutional policies in universities and (b) providing targeted training for teachers and students in the use of AI (Lo, 2023).

This concern is reflected in the speed with which higher education institutions have developed good practice guides for AI integration. A broad review of these proposals is available in the recent publication by González (2024). In the Spanish-speaking context, examples include initiatives by the University of Guadalajara (2023) and the University of Burgos (Abella, 2024). These contextual examples suggest that cultural and linguistic factors may shape how AI is perceived and adopted by educators, highlighting potential differences from the predominantly English-language literature.

The accelerated proliferation of AI-related publications exemplifies this global trend, with 98% appearing in English. Six countries emerge as leading contributors with more than one hundred publications each: the United States (25%), China (13%), the United Kingdom (8%), Spain (5%), and both Canada and India (4%) (Mena-Guacas et al., 2024). The predominance of English underscores the structural opportunities and constraints faced by Spanish-speaking educators, particularly in relation to linguistic accessibility, digital literacy, and the availability of institutional support, all of which may significantly influence the adoption of AI within teaching practice.

The increase in publications has led to a corresponding rise in meta-analyses, which highlight several key points: AI can enhance student learning and act as a student assistant, but poor educational practices remain a concern; teachers require training to use AI effectively; interdisciplinary approaches are recommended; and limitations such as bias, misinformation, and privacy issues must be considered (Kim, 2023; Bond et al., 2024; Casanova & Martínez, 2024; Forteza-Martínez & Alonso-López, 2024; García-Peñalvo et al., 2024; López Regalado et al., 2024; Vélez-Rivera et al., 2024).

The educational use of AI thus presents both opportunities and challenges. SWOT studies have been conducted to systematically identify these aspects, consulting experts about the strengths, weaknesses, opportunities, and threats associated with this technology (Farrokhnia et al., 2023; Jiménez et al., 2023). While these studies provide valuable insights, most of the literature has focused on general higher education contexts. In contrast, distance education introduces specific factors (such as reduced face-to-face interaction, differences in technology access, and varied institutional support) that may influence how teachers adopt AI. These contextual nuances suggest that existing models of AI readiness and digital competence may require adaptation to accurately reflect the realities of Spanish-speaking distance education environments.

Table 1 provides a summary of responses from the SWOT analyses.

This study contributes theoretically by explicitly examining how these contextual factors (language, culture, and distance education settings) interact with teachers’ digital competencies in AI. Unlike previous research, such as Wang et al. (2023), which focuses primarily on general higher education populations, this study identifies the unique dynamics of Spanish-speaking distance education, highlighting how cognitive, ethical, and institutional dimensions shape AI adoption. By adapting the structural model to this context, the study provides a more nuanced understanding of AI readiness, offering a framework that can inform targeted teacher training and policy decisions in culturally and linguistically specific educational settings.

2. Teacher Use of AI

Examining AI readiness within the context of distance education is particularly salient, given that this modality depends extensively on digital tools and platforms to facilitate teaching and learning, often with limited direct support from institutions or peers. Educators in distance education therefore encounter distinct challenges when adopting AI, including managing technological constraints, maintaining student engagement remotely, and effectively integrating AI in the absence of face-to-face guidance. Comparative evidence from traditional, in-person settings suggests that while certain trends, such as the beneficial impact of cognitive training on teachers’ capacity and vision, remain consistent, other factors, including perceived risks and the uptake of innovative practices, may differ due to the unique constraints and affordances inherent to distance education (Celik et al., 2022).

Such transformations have accompanied every technology introduced into educational institutions, especially disruptive ones such as textbooks and the Internet. Knowing teachers’ opinions and beliefs about AI, the possibilities they attribute to it, and the fears it arouses is key to understanding how AI is being, and will be, incorporated into training institutions.

However, before addressing this aspect, it is worth noting that AI offers various possibilities of use for educational institutions, which the European Commission (2022, p. 14) has grouped into four broad categories:

Teaching students: Using AI to teach students (student-oriented).

Supporting students: Using AI to support student learning (student-oriented).

Teacher support: AI is used to support teachers (teacher-oriented).

System support: Using AI to support diagnosis or planning at the system level (system-oriented).

More specifically, with regard to ChatGPT, Jeon and Lee (2023), in their meta-analysis of its uses, identified four application roles: interlocutor, content provider, teaching assistant, and evaluator. They also identified three roles that teachers play with it: orchestrating different resources with quality pedagogical decisions, turning students into active researchers, and increasing ethical awareness of AI.

As regards teachers, one of the first aspects to point out is that their attitudes towards, and acceptance of, the educational use of AI are mostly positive (Adekunde et al., 2022; Cabero-Almenara et al., 2024a; Perezchica-Vega et al., 2024). However, it must also be recognized that they express considerable concern about excessive dependence on it and about the ethical, social, and pedagogical implications that it may have (Sullivan et al., 2023; Yuk & Lee, 2023; Perezchica-Vega et al., 2024).

These expectations and concerns lead Webb (2024) to point out that teachers can adopt three positions when faced with AI: avoid it, try to leave it behind, or adapt to it. The last option is the most coherent one, as it involves understanding that AI is unavoidable and will soon be a necessary tool for students. It is all the more necessary because, on the one hand, students are beginning to use AI more widely than their teachers (Chao-Rebolledo & Rivera-Navarro, 2024) and, on the other, the uses to which they put it could be considered far from academic, which underscores the need for teachers to be well trained so that they can empower students to use AI ethically and well (Munar Garau et al., 2024).

As González (2024, p. 100) points out: “…it is far from reality, ignoring the fact that this technology is increasingly present and will form part of the necessary skills that students must acquire in order to successfully face the professional activities they will have to carry out in the future, in addition to the fact that doing so means depriving them of tools that, used appropriately, can contribute positively to their training and academic performance.”

At the same time, different studies (Cabero-Almenara et al., 2024b) have shown that attitudes towards its use are more favourable among teachers with a constructivist conception of teaching than among those with a transmissive conception. This finding is related to the recent call to seek new ways of using AI, which has shifted the conception towards learning “with” technology instead of the traditional vision of learning “from” technology (Fuertes Alpiste, 2024). This is what Morozov (2024) has called using AI to improve the person and not just to augment them.

On the other hand, when attempts have been made to relate these attitudes to teachers’ age and gender, research has indicated that male teachers hold more favourable attitudes towards its use than female teachers and that younger teachers tend to see it as more positive and valuable for training than older ones (Dúo et al., 2023; Villegas-José & Delgado-García, 2024).

Although teachers’ generally favourable attitudes are a good starting point for incorporating AI into teaching, there is also a limitation that teachers themselves recognize: the limited training they have for incorporating it into teaching, management, and research (Hernando et al., 2022; Tongfei, 2023; Temitayo et al., 2024).

This training is said to be associated with media, digital, and computer literacy, which allows teachers to understand what AI is, how it is used, and how the fundamental rights of people and students are promoted, so that they are clear about the risks and opportunities that this technology has when applied to training (Hernando et al., 2022). Such is the importance of training and capacity building that some authors propose it as a future line of research in the field of AI (Chiu, 2023), and others recommend the development of MOOC courses to facilitate self-training of teachers (Ruiz-Rojas et al., 2023).

In any case, it must be acknowledged that this aspect of training is a complex task. On the one hand, we have a tool that is relatively young for teachers and students; on the other, the skills that teachers must have concerning this technology have not yet been sufficiently defined since the potential of AI in education has not yet been sufficiently exploited, mainly to provide active and in-depth learning; and finally, because the volume of apps that are constantly appearing and that allow different unforeseen actions to be carried out in a short period poses a difficulty.

Finally, this training is all the more necessary because a teacher who is adequately trained to incorporate AI into teaching will also be better placed to train students in its use (Ayuso-del Puerto & Gutiérrez-Esteban, 2022). Moreover, it should not be forgotten that, according to different competency frameworks for teacher training, one of the tasks teachers must perform is empowering students to use a variety of ICTs (Munar Garau et al., 2024).

4. Results

The mean scores and standard deviations obtained for each of the dimensions that made up the instrument are presented in Table 3.

The analysis of the means and standard deviations of the different variables shows a positive trend in teachers’ perceptions of their preparation and use of AI technologies and their job satisfaction. Cognition, with a mean of 3.92 and a standard deviation of 0.715, indicates that teachers understand the role of AI in education. The ability to integrate AI into their teaching has a slightly lower mean, 3.73, with a deviation of 0.764, suggesting that, although they feel capable, there is greater dispersion in this ability. At the same time, it is noteworthy that teachers perceive that AI can have great value for creating educational innovation (4.11). The highest score refers to teachers’ job satisfaction when using AI (4.21). This score is also the most stable across teachers, as it has the lowest standard deviation of all the dimensions (0.662). More generally, the low standard deviations indicate that the scores given by the different teachers are similar.

The vision (VI) on the opportunities and challenges of AI is high, with a mean of 3.90 and a deviation of 0.769, reflecting an optimistic attitude. Regarding ethics (ET), teachers show a solid awareness of the ethical aspects of using AI (mean = 3.86), although with a slightly higher deviation (0.819), suggesting different levels of understanding or agreement on this aspect.

Perceived threats from AI (PT) have a lower mean of 3.38 with a relatively high standard deviation (0.944), reflecting that some teachers perceive potential risks or challenges associated with AI, although opinions vary considerably. On the other hand, AI-enhanced innovation (INN) is among the highest-scoring dimensions, with a mean of 4.11 and a low dispersion (0.718), indicating that teachers view AI as a positive means of introducing innovative approaches in teaching.

Finally, job satisfaction (JS) is very high, with a mean of 4.21 and a deviation of 0.662. This indicates that teachers generally feel satisfied and proud of their work, possibly influenced by incorporating AI technologies that improve their work experience.

The means and standard deviations obtained for each of the items are presented in Table 4.

The descriptive analysis of the items shows that teachers perceive a high level of clarity in their role in the AI era, with a mean of 4.27 (CO1) and a standard deviation of 0.810. They also report a good ability to balance the relationship between AI technologies and teaching (3.94, CO2) and to understand how AI technologies work in education (3.74, CO3). Scores for the skills needed to integrate AI into teaching are more moderate but still positive, most notably the ability to optimize teaching, with a mean of 3.99 (AB5) and a standard deviation of 0.867. Scores related to ethics are also high, with a mean of 4.20 for both understanding digital ethics (ET1) and teachers’ ethical obligations (ET2), although the item on personal information security (3.33, ET3) shows more variability (1.106). Regarding perceived threats, teachers do not strongly perceive AI as weakening their role (3.09, PT1), but they express some concern about reduced face-to-face communication (3.36, PT2). Scores on innovation are high, with teachers noting that AI allows teaching to be organized innovatively (4.19, INN3). Finally, regarding job satisfaction, teachers report high satisfaction with their job (4.28, JS3), pride in their work (4.49, JS4), and enjoyment of their work (4.43, JS5).

Ordered from highest to lowest, the five items that received the highest rating from teachers were as follows:

4.49 (JS4) I feel proud of my work.

4.28 (JS3) I am satisfied with my job.

4.27 (CO1) I clearly understand the new role of teachers in the era of AI.

4.20 (ET1) I understand the digital ethics teachers must possess in the era of AI.

4.20 (ET2) I understand the ethical obligations and responsibilities teachers must assume when using AI technologies.

These items denote teachers’ favourable attitude towards AI and, especially, their satisfaction with their work. They come mainly from the “Cognition,” “Ethics,” and “Job satisfaction” dimensions.

As regards the five lowest-rated items, the results were as follows:

3.09 (PT1) I feel that AI technologies could weaken the importance of teachers in education.

3.16 (PT5) In my opinion, the excessive use of AI technologies can reduce the need for human teachers in the classroom, making it difficult for teachers to convey correct values to students.

3.33 (ET3) I use AI technologies to keep personal information safe.

3.36 (PT2) The use of AI technologies has reduced the frequency of face-to-face communication with colleagues and students.

3.58 (PT3) Students’ over-reliance on learning guidance provided by AI technologies can undermine the relationship between teachers and students.

As can be seen, most of these items belong to the “Perceived threats from AI” dimension, which indicates that teachers do not feel that their jobs or their social prestige are threatened.

Structural analysis models are becoming increasingly relevant in social research due to their ability to analyze manifest and latent variables. These models facilitate the effective integration of both types of variables (Alaminos et al., 2015).

Two main methodological approaches predominate in structural equation modelling (SEM): those based on covariances and the partial least squares (PLS) approach. The PLS approach was selected in this study because it does not require the assumption of multivariate normality in the data. The PLS analysis was implemented using SmartPLS 4 software, following the standard phases established for this type of study (Lévy, 2006; Sampeiro, 2019).

Table 5 presents the loadings, or simple correlations, between the indicators and their respective constructs. For an indicator to be considered part of a construct, its loading must be close to 0.6 (Carmines & Zeller, 1979).

All values present loadings close to 0.7, so no item had to be eliminated during the analysis.

The next step is to assess the composite reliability, an indicator of the internal consistency of the set of indicators associated with the latent variables (Lévy, 2006). This analysis allows us to determine whether the indicators consistently measure the same construct and whether the latent variable is adequately represented. A minimum value of 0.7 is taken to indicate adequate reliability. The corresponding results are detailed in Table 6.
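As a rough illustration of the composite reliability check described above, the following sketch computes the coefficient from standardized outer loadings using the usual formula: the squared sum of the loadings divided by that same quantity plus the summed error variances. The function name and the example loadings are hypothetical and are not taken from this study, whose values were obtained with SmartPLS 4.

```python
def composite_reliability(loadings):
    """Composite reliability from standardized outer loadings.

    Each indicator's error variance is taken as 1 - loading**2.
    """
    total = sum(loadings)
    error_variance = sum(1 - value ** 2 for value in loadings)
    return total ** 2 / (total ** 2 + error_variance)


# Hypothetical loadings for a three-item construct (not the study's data).
example_loadings = [0.70, 0.80, 0.75]
rho_c = composite_reliability(example_loadings)

# The 0.7 cut-off is the minimum value mentioned in the text.
meets_threshold = rho_c >= 0.7
```

With these illustrative loadings the coefficient is roughly 0.79, which would pass the 0.7 criterion.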

Convergent validity is assessed at the same time to determine whether a set of indicators reflects a single underlying construct. To do so, the Average Variance Extracted (AVE) is used. An AVE value greater than 0.5 is desirable, as it indicates that more than 50% of the construct’s variance can be explained by its indicators. The results obtained are presented in Table 7.
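The AVE criterion can be sketched in the same way. Assuming standardized loadings, AVE is the mean of the squared loadings of a construct's indicators. The function name and the example values below are hypothetical and serve only to show the arithmetic.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(value ** 2 for value in loadings) / len(loadings)


# Hypothetical loadings for a three-item construct (not the study's data).
ave = average_variance_extracted([0.70, 0.80, 0.75])

# Convergent validity criterion from the text: AVE greater than 0.5.
has_convergent_validity = ave > 0.5
```

Here the AVE is about 0.56, meaning the indicators would explain slightly more than half of the construct's variance.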

Two main methods are used to assess discriminant validity, which seeks to confirm whether each construct significantly differs from the others: the Fornell–Larcker criterion (Table 8) and cross-factor loadings (Table 9).

The Fornell–Larcker criterion states that a construct’s Average Variance Extracted (AVE) must exceed the variance shared with any other construct in the model. In addition, correlations between constructs must be lower, in absolute value, than the square root of the AVE. This is verified by examining the values on the matrix’s main diagonal, which represent the square root of the AVE. These values must be higher than the elements outside the diagonal, which correspond to the correlations between constructs.
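The comparison just described can be expressed as a small check: for every pair of constructs, the absolute correlation should be below the square root of each construct's AVE. The sketch below is only illustrative; the construct labels A, B, and C, the AVE values, and the correlations are all hypothetical, and the function name is an assumption.

```python
import math


def fornell_larcker_violations(ave, correlations):
    """Return the construct pairs that fail the Fornell-Larcker criterion.

    ave: mapping of construct name to its AVE.
    correlations: mapping of (construct_a, construct_b) to their correlation.
    """
    violations = []
    for (first, second), r in correlations.items():
        # The correlation must stay below the smaller of the two sqrt(AVE) values.
        limit = min(math.sqrt(ave[first]), math.sqrt(ave[second]))
        if abs(r) >= limit:
            violations.append((first, second))
    return violations


# Hypothetical values for three constructs (not the study's data).
example_ave = {"A": 0.62, "B": 0.58, "C": 0.66}
example_correlations = {("A", "B"): 0.72, ("A", "C"): 0.61, ("B", "C"): 0.79}

violations = fornell_larcker_violations(example_ave, example_correlations)
```

In this made-up example only the pair B and C would be flagged, because their correlation (0.79) exceeds the square root of B's AVE (about 0.76).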

Next, cross-loading analysis is carried out, allowing us to verify whether the items assigned to a construct actually measure that construct. To do this, each item’s loading is expected to be greater on its corresponding construct than on any other, guaranteeing the indicators’ specificity in measuring each construct.
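A minimal sketch of the cross-loading check follows: each item is flagged if its loading on any other construct equals or exceeds the loading on its assigned construct. The item and construct names, the loadings, and the function name are hypothetical placeholders rather than values from Table 9.

```python
def misassigned_items(cross_loadings, assignment):
    """Return items that do not load highest on their assigned construct.

    cross_loadings: mapping of item to {construct: loading}.
    assignment: mapping of item to the construct it is meant to measure.
    """
    flagged = []
    for item, row in cross_loadings.items():
        own = abs(row[assignment[item]])
        others = [abs(v) for name, v in row.items() if name != assignment[item]]
        if any(other >= own for other in others):
            flagged.append(item)
    return flagged


# Hypothetical cross-loadings for two constructs, A and B (not the study's data).
example_cross_loadings = {
    "A1": {"A": 0.81, "B": 0.45},
    "A2": {"A": 0.77, "B": 0.52},
    "B1": {"A": 0.66, "B": 0.61},
}
example_assignment = {"A1": "A", "A2": "A", "B1": "B"}

flagged = misassigned_items(example_cross_loadings, example_assignment)
```

In this illustration item B1 would be flagged, since it loads more strongly on construct A than on its own construct B.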

The analyses confirm that the questionnaire items present acceptable reliability and high consistency with the dimensions to which they belong in the model.

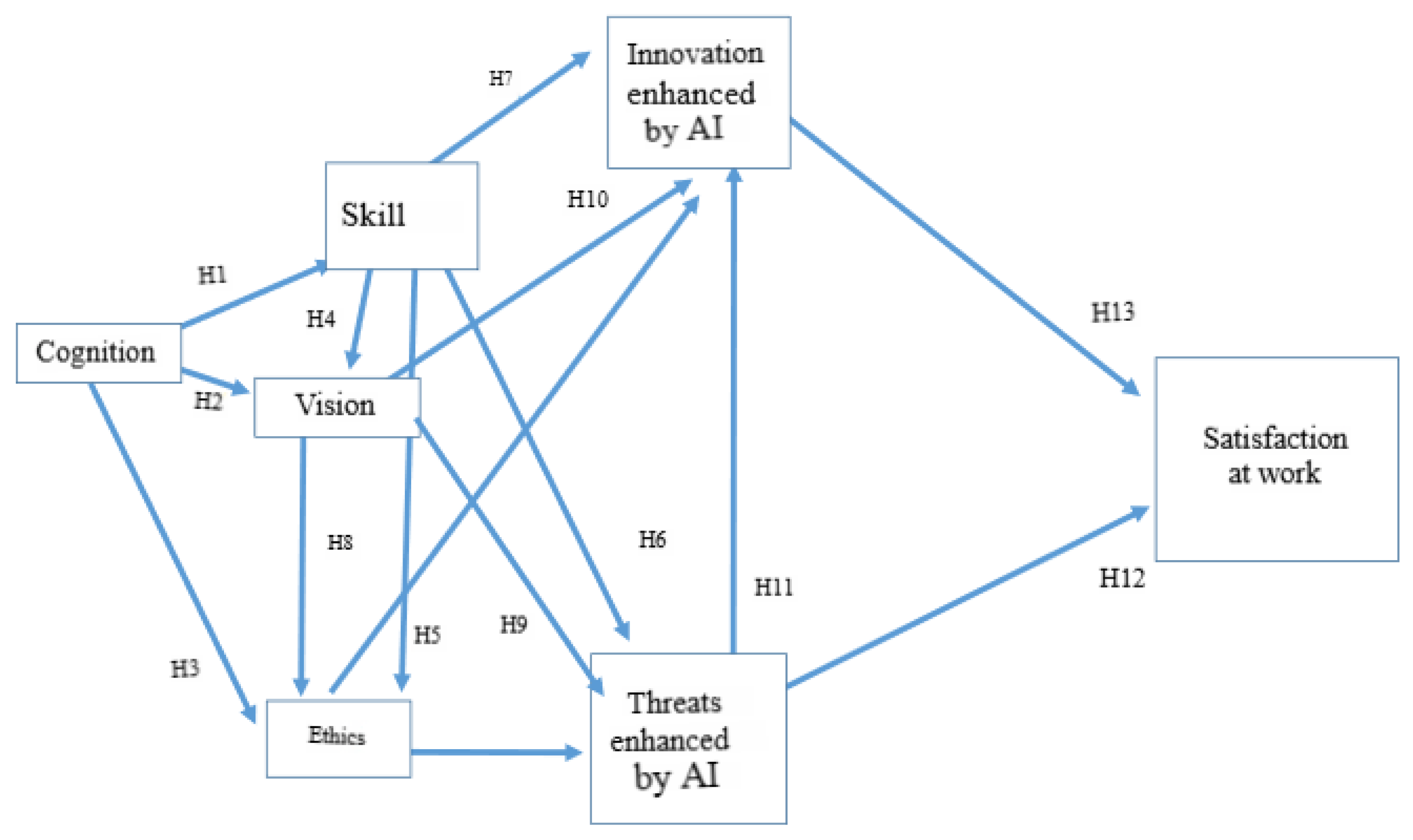

The structural model is then analyzed by obtaining the standardized regression coefficients (path coefficients), the Student’s t values, and the R² coefficients of determination. These indicators allow the percentage of variance of the constructs explained by the predictor variables to be assessed, providing key information for assessing the viability and fit of the proposed model (see Figure 2).

The model reveals significant relationships between its variables. Cognition (CO) is a strong predictor of capability (CA), with a high path coefficient (0.724), indicating that cognitive development significantly increases the perception of capability. Capability is also negatively influenced by perceived threats from AI (PT) (−0.381) and positively by vision (VI) (0.366), and 52.4% of its variance is explained. Capability, in turn, is the primary determinant of vision (0.680), which shows a high explained variance (61.8%), further strengthened by cognition (0.480), highlighting the role of cognitive skills in organizational alignment. Ethics (ET), with an R² of 56.8%, is strongly associated with capability (0.680), while its relationship with AI-enhanced innovation (INN) (0.018) and perceived threats (PT) (0.153) is limited. On the other hand, AI-enhanced innovation, for which only 26.8% of the variance is explained, is moderately influenced by ethics (0.328) and slightly by vision (0.172), indicating that factors external to the model have greater relevance for this construct. Perceived threats from AI (PT), with an R² of 17.1%, are positively linked to ethics (0.153) and very weakly to innovation (0.038). Finally, job satisfaction (JS) shows the lowest explained variance (11.9%), with no significant direct relationships in the model, suggesting that it depends on external variables not considered herein. Overall, the model highlights the importance of cognition, capability, and vision as central variables, while ethics and innovation play more secondary roles. It also leaves room for incorporating other factors to better understand job satisfaction and innovation.

The results show that the relationships between the main dimensions of the model are significant. Finally, the SRMR (Standardized Root Mean Square Residual) indicator was used to evaluate the structural model’s goodness of fit. This indicator gave a value of 0.062, less than 0.08, indicating a good fit.
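To make the SRMR criterion more concrete, the sketch below computes it as the square root of the mean squared difference between the observed and model-implied correlations, taken over the lower triangle including the diagonal. This is the common textbook definition and may differ in detail from the SmartPLS 4 computation; the matrices and the function name are hypothetical.

```python
import math


def srmr(observed, implied):
    """SRMR between an observed and a model-implied correlation matrix."""
    size = len(observed)
    squared_residuals = [
        (observed[i][j] - implied[i][j]) ** 2
        for i in range(size)
        for j in range(i + 1)  # lower triangle, diagonal included
    ]
    return math.sqrt(sum(squared_residuals) / len(squared_residuals))


# Hypothetical 3x3 correlation matrices (not the study's data).
observed_matrix = [
    [1.00, 0.50, 0.40],
    [0.50, 1.00, 0.30],
    [0.40, 0.30, 1.00],
]
implied_matrix = [
    [1.00, 0.45, 0.46],
    [0.45, 1.00, 0.33],
    [0.46, 0.33, 1.00],
]

fit_value = srmr(observed_matrix, implied_matrix)

# Cut-off used in the text: values below 0.08 indicate a good fit.
good_fit = fit_value < 0.08
```

For these illustrative matrices the result is about 0.034, which would fall under the 0.08 cut-off just as the study's reported 0.062 does.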

5. Discussion and Conclusions

The results obtained in this study provide a solid basis for understanding the relationships between the dimensions of Teaching Digital Competence (TDC) applied to Artificial Intelligence (AI), highlighting both the reliability of the instrument used and the robustness of the proposed structural model. Firstly, the validated questionnaire’s high overall and dimension-wise reliability confirms its ability to measure AI-related digital competencies in higher education consistently. These values not only exceed the recommended standards but also coincide with previous research, such as that of Wang et al. (2023), which consolidates the relevance of the instrument. Other studies using structural equation models in educational contexts (Bagozzi & Yi, 1988; Alaminos et al., 2015; Lévy, 2006) also reinforce the methodological robustness of this approach.

The proposed structural model has proven robust and well-founded, as it does not present negative relationships between the dimensions. This reinforces its usefulness for diagnosing and designing specific training strategies. Furthermore, the adequate correspondence between the items and the dimensions supports its methodological rigour, consolidating its applicability in similar contexts. As noted by Celik et al. (2022), the promises and challenges of AI for teachers require solid measurement tools, and this study contributes with empirical evidence in that direction.

A notable finding is the importance of the cognition dimension, which is a key pillar in developing other competencies. This result indicates that any AI training program for teachers should prioritize the conceptual strengthening of cognitive capabilities, which is essential to understanding the possibilities of AI and applying them effectively in teaching and research. The significant influence of this dimension on capacity, vision, and ethics underlines its role in aligning individual competencies with organizational and educational values. This aligns with Fuertes Alpiste (2024), who frames generative AI as a tool for cognition, and with Abella (2024), who emphasizes that teacher training in the AI era must begin by reinforcing teachers’ conceptual knowledge before practice.

The results also reflect a predominantly positive attitude towards AI on the part of teachers, evidenced by the high levels of innovation and interest in this technology. On the other hand, the “Perceived threats” dimension recorded low scores, indicating that AI is not perceived as a significant risk but rather as an opportunity that educational institutions should take advantage of to promote its integration into teaching practice. This perception is consistent with findings from Cabero-Almenara et al. (2024a, 2024b), who report that teachers’ acceptance of AI is strongly mediated by their pedagogical beliefs and trust in technology, rather than by fear of risks.

However, although AI-driven innovation showed a moderate explained variance, its relationship with ethics and vision highlights that these factors are determinants for incorporating AI into teachers’ professional practice. This result connects with the need for a more rigorous ethical reflection in the use of AI in higher education, as pointed out by Bond et al. (2024) and the European Commission (2022).

Job satisfaction’s low explained variance could be due to factors external to the model, such as working conditions, institutional recognition, or access to resources. This finding suggests that it is necessary to explore how digital skills and AI can be integrated into broader teacher well-being strategies to maximize their positive impact. As García-Peñalvo et al. (2024) argue, the new educational reality in the era of generative AI requires not only technological adaptation but also systemic policies that ensure equitable access and support for teachers.

The study has several limitations that should be considered. First, the use of a self-report questionnaire may introduce biases related to the interpretation of questions or the honesty of responses (Hernández-Sampieri & Mendoza, 2018). Second, the non-random nature of the sample limits the generalization of the results. Participation may also have been skewed, since certain groups may have been more likely to respond, particularly those with greater interest or confidence in AI. Despite this, the high participation of teachers in distance education at this university partially mitigates this limitation.

Additionally, the questionnaire was administered before the AI training to capture baseline attitudes, providing a clearer understanding of initial perceptions and competencies, which is important for interpreting subsequent changes or effects. It is also worth noting that the explained variance for some key constructs was lower than expected, suggesting the possibility of missing variables or measurement limitations that future research could address.

Several lines of analysis are proposed for future research. First, it would be important to replicate the study in other universities to assess the consistency of results across different educational and cultural contexts. Second, comparing teachers’ digital skills in face-to-face versus distance education modalities and analyzing potential differences would provide further insight.

Another relevant line is the incorporation of sociodemographic variables, such as gender, age, professional experience, and field of expertise, to identify their influence on the model’s dimensions and enrich the analysis. As AI becomes more widely integrated into education, longitudinal studies are recommended to observe how Teaching Digital Competence evolves in relation to AI.