Abstract

This study aims to understand university professors’ perspectives on the learning assessment process, including the importance they give it during teaching and learning, how they conceptualize it, and what they consider in their practice. The research used a grounded theory approach to recognize evaluation as a dialogical and intersubjective space. The methodology consisted of an open survey and a semi-structured interview with faculty professors from a university in northern Mexico. The findings highlighted the importance of educational institutions and showed that faculty members prioritize the quality of evaluation based on relevance, alignment with learning objectives, continuity throughout the process, and feedback. These aspects align with recent approaches that consider evaluation as a process that promotes learning, as evidenced by the high saturation rate in the theoretical sampling. Furthermore, the study revealed that the institution’s educational model, curricular design, and evaluation policies significantly influence the faculty members’ perspective. As a result, educational institutions must consider these factors when formulating an evaluation model, which makes the research directly applicable to the work of educational policymakers and university professors.

1. Introduction

The global higher education landscape is influenced by globalization, technological advances (particularly in artificial intelligence), and economic, social, and political factors [1]. In Mexico, private institutions have contributed to increased higher education enrollment, but coverage, growth, offerings, and quality vary among these institutions [2]. However, the proliferation of Higher Education Institutions (HEIs) does not automatically ensure growth and development. Legal recognition legitimizes their operations but does not guarantee the delivery of quality education that addresses the challenges emphasized by the United Nations Educational, Scientific and Cultural Organization (UNESCO).

The educational quality of HEIs should involve prioritizing their capacity to accomplish external evaluation and accreditation systems [3]. HEIs should transform their institution’s vision and culture towards providing an education contributing to social, local, and global development. In Mexico, the education sector has been slow to adapt to change, characterized by inertia and a reluctance to embrace new approaches, especially in the learning assessment process [4,5]. Today, assessment must be participatory, empowering, and emancipating for those being assessed, with a comprehensive, socio-critical, and interpretative approach based on constructivism [6,7,8]. The question remains whether schools, which emerged over 200 years ago with structures and practices that are largely anachronistic now, can adapt to alternative assessment approaches that place responsibility and decision-making about learning in the hands of the student.

Evaluation is a strategy that supports learning of a formative nature, provides feedback, and determines improvement as an element of the teaching–learning–assessment trinomial [9,10]. The Joint Committee on Standards for Educational Evaluation [11] defines four quality standards: (1) propriety standards, which make evaluation legal and ethical; (2) utility standards, which ensure that practical information is provided; (3) feasibility, which refers to time, resources, and participation; and, finally, (4) accuracy, which ensures that evaluation is technically adequate.

The forms of learning, the time spent on it, and the results achieved in acquired competencies are directly conditioned by the evaluation methods, the faculty member’s evaluation capacity, and, generally, by the evaluation system followed [12]. Hess and co-authors [13] suggest that faculty members use different strategies to ensure effective assessments, including continuous evaluation of student progress, familiarity with evaluation models and tools, and constant adaptation and innovation of evaluation practices.

This study aims to understand university professors’ perspectives on the learning assessment process, including the importance placed on evaluation in the training process, their conceptualization, and the considerations they impose on their practices.

1.1. Literature Review

Evaluation is a significant global concern for educational, research, and training policies. Although there has been some progress, there is still a long way to go before evaluation becomes the expected practice in the classroom. In European countries, assessment is a standard faculty competency that helps ensure quality in educational institutions. The European University Association [14] has identified challenges such as inclusive and fair assessment, supporting student agency, and faculty member professional development. Measures recommended to address these include aligning formative and summative assessments, promoting student co-responsibility, and implementing comprehensive approaches to assessment planning. However, experts note that innovative approaches are still needed to fully implement competencies and make equitable assessment a reality [15].

The ministries of education of western and northern Canada [16] have advocated new assessment methods that contribute to learning in Canada. However, implementing modern assessment practices remains challenging due to limited learning opportunities and barriers such as time and resources. Different United States (US) educational organizations have emphasized the importance of formative assessment, but its implementation faces socio-cultural and political resistance to change [17].

In Mexico, reports have pointed to areas for improvement in learning assessment. The Organisation for Economic Co-operation and Development (OECD) [18] has outlined some challenges, including exam-oriented teaching, lack of consistency between classes and grades, and no classroom assessment support. In 2019, the OECD recommended policy changes to foster innovation and quality in higher education [19]. Reviews of studies conducted by educational bodies and researchers on the reality of assessment show that even though assessment has become more integrated into the learning process, loose ends and grey areas still need to be studied to improve assessment practice.

In this regard, a gap has been found [20] between advances in the field of assessment, especially the approaches, principles, and methodologies that are clearly explained in the literature, and faculty members’ assessment practices, which continue to follow a traditional approach. This was the conclusion of a study on a group of law professors from a Mexican HEI. Similar results were shown by Jaffar et al. [21], who studied the perception of 468 students from 10 universities in Pakistan about assessment tasks and their influence on the learning approach they apply. They concluded that students developed superficial learning strategies due to a negative perception of the assessment task, focusing on obtaining a grade rather than achieving learning.

In Spain, researchers compared the perceptions of students who underwent formative assessment with those of students in the same subject whose assessment was traditional, based on a grade drawn predominantly from a final exam [22]. The results were both encouraging and worrying. They were encouraging because, with formative assessment, the perception of learning (including involvement in tasks) was more favorable than with traditional assessment. They were worrying because it took time for the students to understand the system, which they considered complex. Furthermore, the student is an actor in the scene, and their appreciation of the evaluation methods can exert an imperceptible tension.

As the studies in these countries make clear, one of the loose ends is faculty competence to carry out the evaluation process and their reflections and considerations about the concept and practice. Other researchers speak of an acculturation of evaluation among faculty. The key lies in the faculty member’s knowledge and beliefs, willingness to incorporate new evaluation methods, and conditions for doing so [23,24].

1.2. Theoretical Framework

In the approach used in this research, the concept of quality is important, so it is necessary to understand this term. Quality is a word that abounds in business texts; it is usually associated with customer satisfaction, cost reduction, error prevention, and continuous improvement [25]. No single concept is referred to when talking about the quality of evaluation. For this research, we did not define the concept in advance, since the aim is to recognize the attributes that denote quality from the perspective and practice of the faculty member. However, it is recognized that the quality of assessment in the classroom is not the same as that required for large-scale evaluation, in which validity and reliability indicators relate to the test and the test item.

Table 1 details the concept that some scholars have proposed, expanding the quality indicators of large-scale assessment to classroom assessment.

Table 1.

Authors and concepts from studies of quality in evaluation.

Their definitions include evaluation that drives learning, is practical and efficient, and is not conducted excessively. Unlike large-scale evaluation, validity is associated with the evaluator’s interpretation and reliability in having enough information to make such an interpretation.

These concepts allow for an understanding of the meaning of quality in evaluation. It is a broad conceptual framework of quality that transforms what is inherited from psychometrics and adjusts it to the characteristics of classroom assessment.

1.3. Research Questions

Improving educational assessment practices is a global need that requires changing perspectives and teaching practices. While evaluation has opportunities for improvement, it still carries the legacy of psychometrics. Considering the context of this study, this research will look to answer the research questions in Table 2.

Table 2.

Research questions and context of the study.

2. Materials and Methods

This study uses a qualitative comprehensive–interpretative approach, using grounded theory methodology [31,32,33,34], an inductive analytical process, and symbolic interactionism [35,36] as an interpretation framework.

2.1. Sampling

In accordance with the method used, theoretical sampling was determined as the research and coding process progressed [37]. Based on the definitions given by various authors, an initial sample was defined in this study, anticipating that it would not necessarily be the one established at the end of the research since, as will be seen in the next section, each approach to the field generated the need to expand the theoretical sample.

2.2. Open Surveys and Semi-Structured Interviews

Theoretical sampling requires open instruments that collect social, personal, and emotional information about the research question, i.e., faculty members’ conceptions and beliefs about assessment and the strategies with which they carry it out. The application of the instruments constantly varies in direction and structure since, as Glaser [38] has established, there are no protocols in theoretical sampling.

2.2.1. Open Survey—1st Approach

The first batch of information was obtained from a brief survey of three open-ended questions (Appendix S1) for exploratory purposes. The questions were general; they had no preconceptions about the subject, although they were certainly informed by the researcher’s empirical experience. Although Corbin and Strauss [31] indicate that the researcher’s influence should be eliminated, Charmaz and Belgrave [39] argue that eliminating any personal or theoretical preconception is unnecessary, stating that the data reflect the researcher’s and participants’ mutual construction.

The survey was sent via a Google form to 60 professors at a private university, selected according to their willingness to participate; responses were obtained from 30 professors. Table 3 shows the distribution of their disciplinary areas.

Table 3.

Disciplinary area and teaching experience of the professors who responded to the survey.

Seven of the thirty professors are part-time, and the rest are full-time. The first approach involved obtaining extensive indirect answers from the faculty member to recognize the field’s evolution. These data helped to establish lines of contact for a second direct and comprehensive approach.

2.2.2. Semi-Structured Interview—2nd Approach

The second approach to the study was a semi-structured interview; the guide can be found in Appendix S2. Twenty faculty members were interviewed (four from each discipline: business, engineering, humanities, social, and design). Participants were chosen by convenience so as to form heterogeneous groups according to disciplinary area.

Other characteristics such as gender, semester, or academic degree were not considered when selecting participants. An appointment was scheduled with the person who accepted the invitation. It was the first contact with some of the faculty members, and others were the same ones who answered the survey. Conversations in a qualitative study do not begin and end in a single moment; sometimes it is required, as in these cases, to reopen the dialogue because the analysis and theoretical sampling demand it.

As in any semi-structured interview, the questions were presented to the interviewed faculty member according to the course of the conversation [40]. The first question was always the trigger, and the faculty member’s answers then prompted other questions considered in the guide. The interviews lasted 60 min on average and were carried out with a video call tool, which made it easy to record each interview and replay it, listening to the interviewee and observing their body language, in order to transcribe and analyze it.

2.3. Methodological Strategy

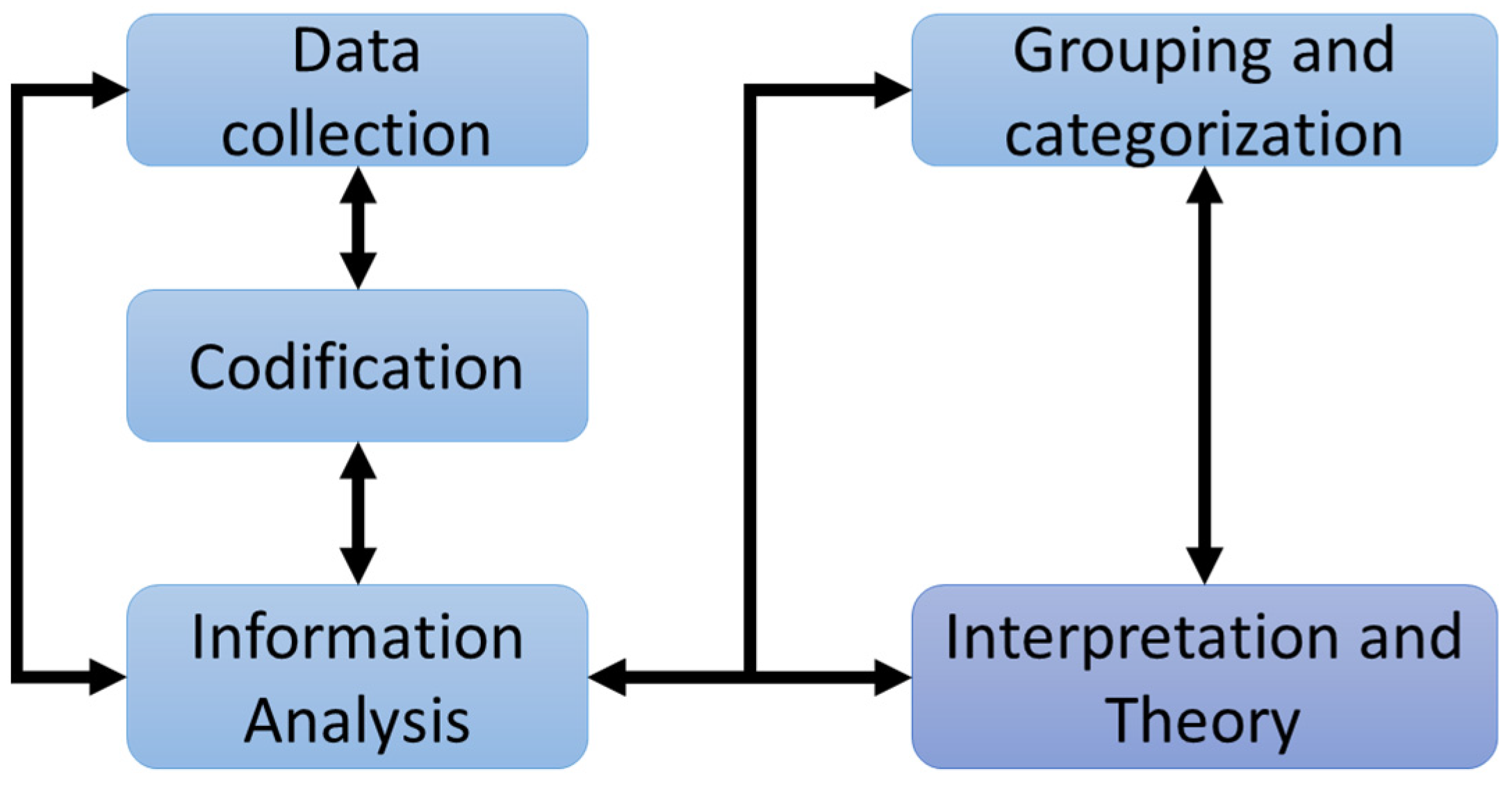

The research protocol was one in which the questions that raised the problem prevailed until the end. The phases in Figure 1, typical of grounded theory, marked an iterative process in which reality was entered and investigated.

Figure 1.

Phases of methodological work. Grounded theory represents the major phases of a qualitative approach.

The phases in the method did not happen linearly. According to grounded theory, analysis and data collection are carried out simultaneously and in a mutually driven manner. For this reason, it is possible to identify moments of approach to reality in which information was obtained and then moments of distance to review, compare, question, analyze, and consider whether it was necessary to return to the context of the classroom and the faculty.

Technological tools supported the analysis, categorization, and interpretation process. For the information from the first approach, the Excel program was used, which allowed a simple and general way to identify what the interviewee talked about. In Appendix S3, the relevant words are classified to establish the next approach in the research.

With the information from the interviews, in the second approach, Atlas.ti was used as the main computational support to develop grounded theory [41] as it provides the advantage of exploring the data and coding them, as well as grouping, categorizing, and identifying saturation. Above all, it responds to the circular nature and constant comparisons of the qualitative analysis that characterizes and promotes grounded theory to understand the reality studied and provide answers to research questions. Although Excel and Atlas.ti (version 22.0) were used, it must be said that interpretation and theorization were always the researchers’ tasks.

2.4. Process of Coding and Analysis

Open coding was carried out, in which the particular was passed to the general in a conceptual micro-analysis, with the scrutiny of each word, phrase, and idea that the faculty member expressed, as well as the analysis of silences, gestures, and even their context, from which the narrative is constructed [31].

Once the first coding was carried out, although not necessarily sequentially, codes were related in axial coding, defined by groupings from which categories were determined, and saturation was identified. The categories were the key to highlighting the results of this research. Moreover, as Glaser and Strauss [42] recommend, they can be readily made operational for future research (which is already noted in the notebook of research to be conducted).

Schemas for organizing information and explanatory diagrams built with Atlas.ti helped to find different relationships, connections, and interactions and to continue understanding the teaching perspective on evaluation as a phenomenon of study in a context and within certain conditions.

The selective phase is at the end of the coding process and refers to integrating and refining the categories. Several codes were central as their presence was recurrent, while others were related consistently; with them, networks and diagrams were made that favored the critical and reflective analysis of what the field showed. Throughout the coding process, notes or direct comments were made on the codes, considering the reference citations from which they emerged.

The data analysis was a long, iterative, recursive process in which the constant comparison of the information obtained in each approach to the field allowed interpretations to be made and recognized that more information could still be obtained to saturate through theoretical sampling. Thus, faculty members were added to the sample.

Throughout this process, the analytical tools suggested by grounded theory and offered by Atlas.ti were used, such as diagrams, networks, notes, groupings, comparisons, and code relationships, which helped to overcome blockages and recognize biases. Asking questions and making comparisons during the analysis were the basic operations that allowed us to investigate, deepen, and direct the theoretical sampling for a better understanding of reality and to find the dimensions of the object of study—the objectification faculty members make of the evaluation in the classroom.

Drawing on quantitative content analysis, the coefficient of co-occurrence (C-coefficient) of the codes was calculated. The coefficient ranges from 0 (the codes never co-occur) to 1 (the codes always co-occur). The C-coefficient for each pair of codes was obtained with Equation (1).

$$C = \frac{N_{12}}{(N_1 + N_2) - N_{12}} \qquad (1)$$

where $N_{12}$ is the frequency of co-occurrences between two codes, and $N_1$ and $N_2$ are the frequencies of occurrence of each code.
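As an illustration of Equation (1), the following minimal Python sketch computes the coefficient from the three frequencies. The function name `c_coefficient` and the example frequencies are hypothetical and are not taken from the study's data or from Atlas.ti.

```python
def c_coefficient(n1: int, n2: int, n12: int) -> float:
    """Co-occurrence coefficient from Equation (1): C = n12 / ((n1 + n2) - n12).

    n1, n2: frequency of occurrence of each code.
    n12: frequency with which the two codes co-occur.
    """
    if n12 < 0 or n12 > min(n1, n2):
        raise ValueError("n12 must lie between 0 and min(n1, n2)")
    denominator = (n1 + n2) - n12
    if denominator == 0:
        # Neither code occurs at all; treat as no co-occurrence (assumption).
        return 0.0
    return n12 / denominator


# Hypothetical frequencies, chosen only to illustrate the arithmetic.
example = c_coefficient(n1=40, n2=30, n12=10)
print(round(example, 2))  # 10 / (70 - 10) = 0.17
```

The coefficient reaches 1 only when every occurrence of one code is also an occurrence of the other, and it is 0 when the codes never appear together.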

Faculty members’ identities were kept confidential to protect their privacy and well-being. When referring to them, we employ the noun “participant,” followed by the number of the participant, for example, Participant 1, Participant 2, or P1, P2.

3. Results and Discussion

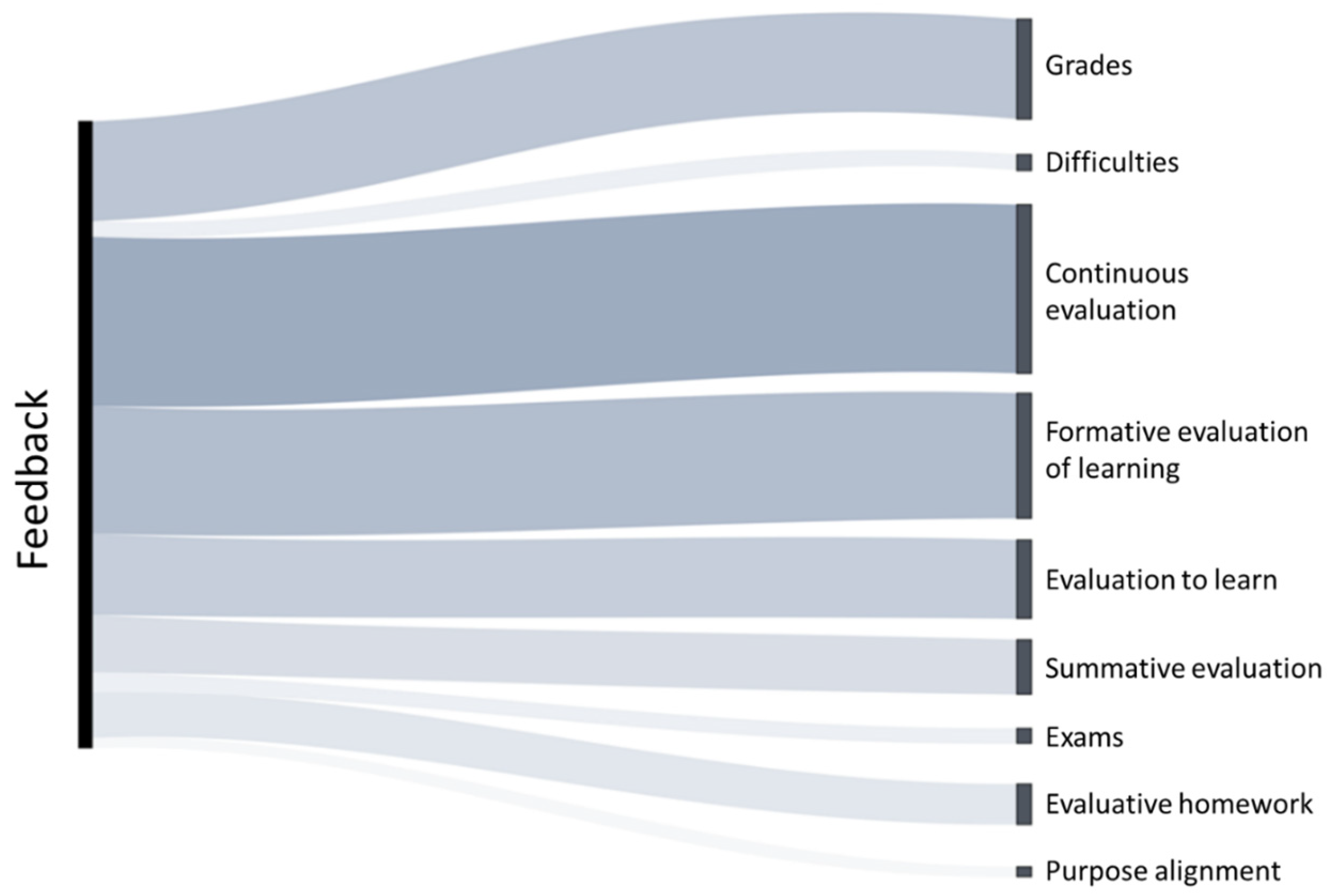

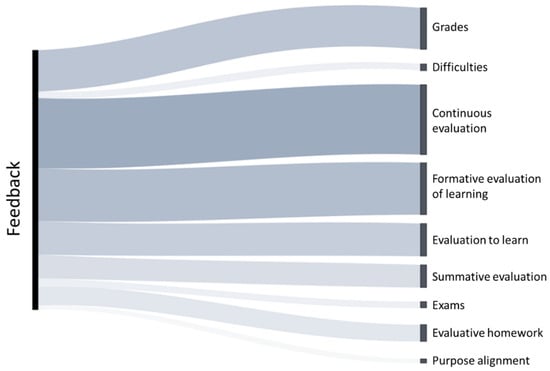

The initial coding in the theoretical sample yielded 86 codes (Appendix S4). Feedback was the main code; it was linked to other codes to a greater extent than any other. Some codes can be associated with approaches that conceptualize assessment as learning or for learning, and others with traditional aspects, such as grading, summative assessment, and exams. Table 4 shows the co-occurrences among the ten main codes; the highest totals are for continuous assessment (59) and feedback (86), that is, the number of times each of these codes co-occurred with the others.

Table 4.

Co-occurrences of the main codes.

Similarly, Table 4 shows that the greatest co-occurrences between two codes are for feedback with continuous assessment (23 times) and feedback with formative assessment that serves learning (18 times). The coefficient of co-occurrence between the feedback and continuous assessment codes is 0.23, while between feedback and formative assessment it is 0.21. These values are far from a coefficient of 1.00, which represents the greatest strength, although they are the highest within the present analysis.
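For readers who want to see how counts of this kind can be tallied, the sketch below counts code frequencies and pairwise co-occurrences from a list of coded quotations. It assumes, for simplicity, that two codes co-occur when they are attached to the same quotation; Atlas.ti applies its own rules for overlapping quotations, so this is an approximation. The quotations and the function name `tally_codes` are hypothetical.

```python
from collections import Counter
from itertools import combinations


def tally_codes(quotations):
    """Return (code_freq, pair_freq) for an iterable of code sets.

    code_freq counts the quotations in which each code appears.
    pair_freq counts the quotations in which both codes of a pair appear;
    pairs are stored as alphabetically sorted tuples.
    """
    code_freq = Counter()
    pair_freq = Counter()
    for codes in quotations:
        unique = sorted(set(codes))
        code_freq.update(unique)
        pair_freq.update(combinations(unique, 2))
    return code_freq, pair_freq


# Hypothetical coded quotations, not data from the study.
quotations = [
    {"feedback", "continuous assessment"},
    {"feedback", "formative assessment"},
    {"feedback", "continuous assessment", "exam"},
    {"exam"},
]
code_freq, pair_freq = tally_codes(quotations)
print(code_freq["feedback"])                             # 3
print(pair_freq[("continuous assessment", "feedback")])  # 2
```

The resulting frequencies are the inputs that a coefficient of co-occurrence requires: the two individual code frequencies and the joint frequency of the pair.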

Another way to examine the co-occurrence of codes is with a Sankey diagram. Figure 2 shows the breadth of the line linking feedback and continuous assessment, which co-occur in greater numbers than other codes.

Figure 2.

Co-occurrences of the feedback code. The Sankey diagram, obtained from Atlas.ti, shows the feedback code’s co-occurrence with other main codes.

Feedback co-occurs with the exam code only to a very limited extent: faculty members associate giving feedback with the learning process, not with a summative moment. The low co-occurrence with difficulties could indicate that giving feedback is not complicated for them; however, it will be shown later why they associate difficulties with feedback. This way of visualizing co-occurrences allows more than one code to be linked.

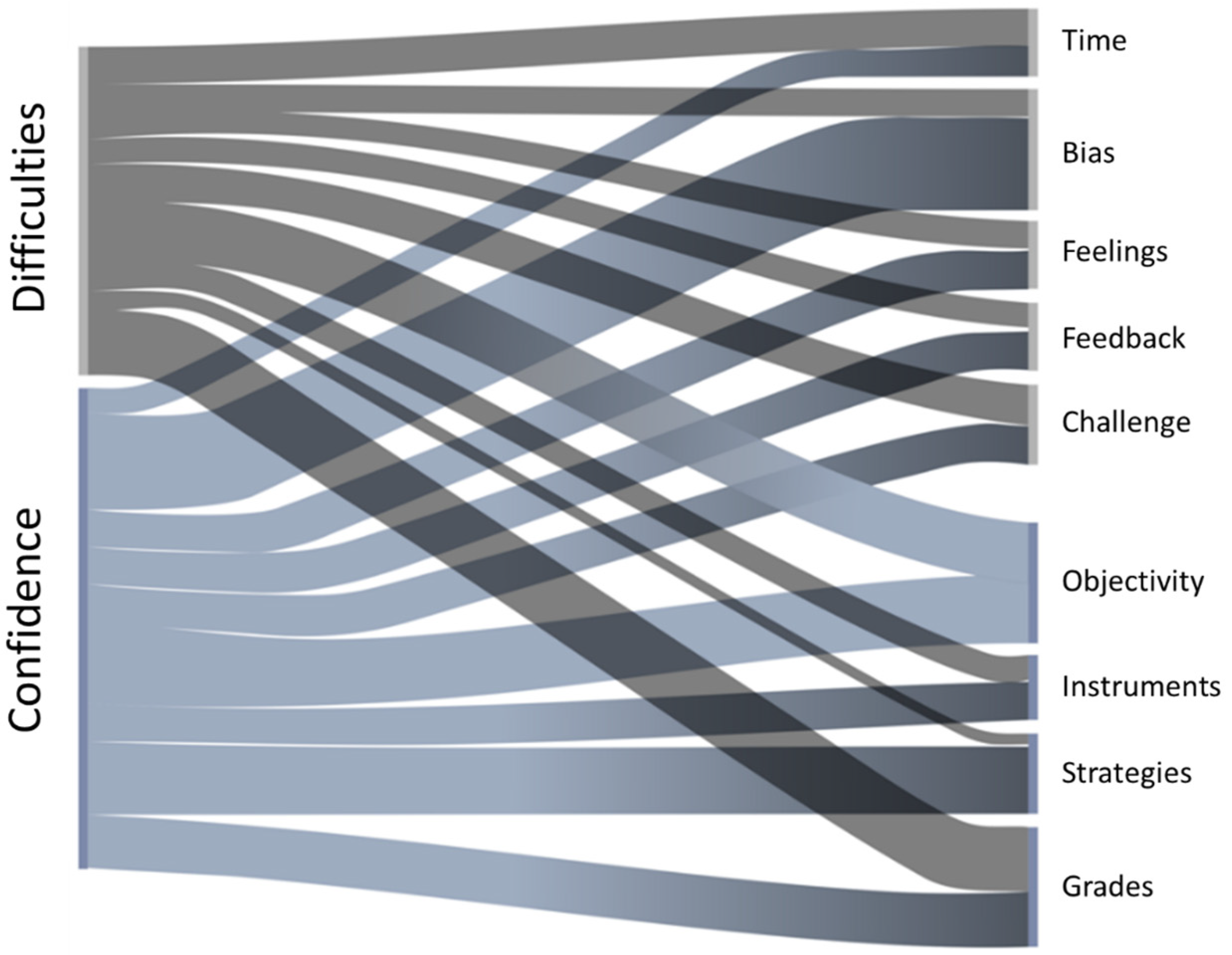

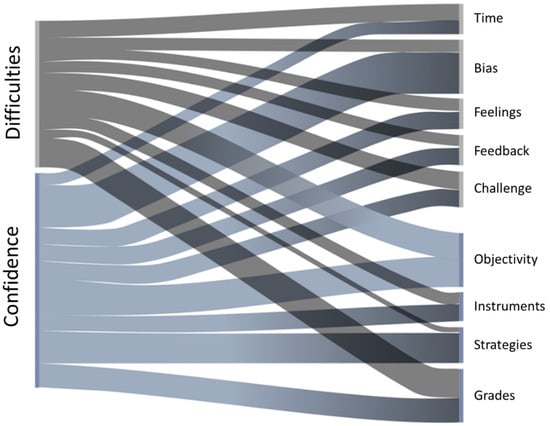

Figure 3 shows which codes appear together with confidence, which means the faculty member’s security about the evaluation, and the codes that occur simultaneously with difficulties, a code that appears in quotes that mention evaluation as a difficult, stressful, or even conflict-generating process.

Figure 3.

Co-occurrences of the codes confidence and difficulties. The Sankey diagram, obtained from Atlas.ti, shows the interactions that the codes difficulties and confidence have with others.

This shows that the same actions that are difficult also give the instructor confidence to judge the student’s learning or provide feedback. For example, time is a condition that can determine confidence when evaluating, to the extent that it allows the faculty member to know more or less about the student; when time is not enough, it represents a difficulty.

It is observed that faculty members perceive confidence in the evaluation when they use instruments and various evaluation strategies, manage to minimize subjectivity and provide feedback. However, the same empirical situations are related to difficulties.

Codes were grouped in the process of systematizing the information; of the 86 that emerged from the open or initial coding [43], 14 groups of codes were defined (Table 5), with which the faculty members’ perspectives were mainly analyzed and theorized.

Table 5.

Code groups are defined as categories.

The group with the greatest empirical extension as a category is the Formative Feedback group, in which codes are gathered to show the evaluation process as an aid to learning. The next group is the Confidence group, which is named because it encompasses codes that reflect actions associated with the faculty’s security and certainty about the result.

3.1. Evaluation from the Perspective of the Faculty

The faculty members’ concepts of assessment were analyzed. The approach implicit in its definition and the opportunities and difficulties encountered in their practice were reviewed. Next, we present the different topics that were identified in this analysis.

In the interviews, faculty members repeatedly mentioned that assessment is learning and strengthens the student’s self-analysis and self-management skills. Their points of view coincide with those of other authors [44,45,46], who, in their definitions, agree that it implies a value judgment and that it is applied to improve what is evaluated. Table 6 concentrates on the words of some faculty members who define the evaluation process and interpret these from their implicit approach.

Table 6.

Sample of faculty members’ expressions about the assessment concept as a strategy that supports learning and verifies the achievement of its goals.

Learning evaluation and the learning process are both verification exercises. Evaluation provides feedback on the process, and the faculty member’s assessment approach is modern and not limited to measurements or summative exams.

Faculty members make a clear distinction between formative assessment (“I evaluate on an ongoing basis, without punitive purposes… so that they see that this helps them know what they can improve… I try to get them to ask to be evaluated”) and summative evaluation (“even those that have many points, I try to make them see that the number is a stigma, I encourage them to analyze their grade and see in it what their achievements are and their opportunities to improve”) (P7). Professor P8 says that the student should know that they will be evaluated “both to receive feedback only and to obtain a grade”. Other faculty members, such as Professor P9, assign a few points to the progress of a project but ask students to heed the suggestions she makes for the full delivery, which she evaluates in a final and global way.

Faculty members assign formative assessments the ability to influence teaching: “it determines if I have to redirect what I have planned” (P3); “… -it allows me to know if I should underpin the knowledge that students need… if you have time (he clarifies it with an inflection of voice)” (P4). This shows they recognize that formative assessment represents information they can use to improve teaching. This aligns with the authors’ arguments [46], who state that faculty members can transform their teaching using continuous and formative assessment information.

3.2. How and for What Purpose Do Faculty Members Evaluate?

Evaluating through day-to-day situations gives authenticity to the evaluation and is ideal for competencies or skills; in that sense, it is better than a written test or knowledge exam [47]. Due to the realism it has, the cognitive challenge, and the evaluative judgment that it entails [48], the student sees that authentic evaluation adds value to their future professional work. Villarroel and Bruna [49] conducted an extensive literature search. They reported that authentic assessment impacts the quality and depth of learning, develops higher-order skills, generates confidence and autonomy, and motivates and increases the capacity for self-regulation and reflection.

Hernán et al. [50] reviewed the current literature. They found that authentic assessment is defined by activities similar to real life and a context identical to what the student would encounter in the profession. The faculty members interviewed mentioned that students value the challenges they experience according to the learning they gain from them and their link with the professional reality of environments that represent future job possibilities.

The results show that faculty members use situational techniques and diversify their strategies, which is an advantage according to recent assessment approaches, particularly when they aim to develop higher cognitive competencies or processes. Despite this, they focus mostly on products: “I have an evaluation plan; it almost always has activities related to the topics we are looking at. I try to ensure that at the end of each topic, we have some deliverables, which I grade and that adds to their grade. Ultimately, a project or broader work always recapitulates or applies everything they learned in the course” (P10). This structure of the evaluation plan is the one that faculty members have; it is not in all cases, but it is perceived that the observation of performance as assessable and qualifiable evidence is left out. In addition, faculty members repeatedly alluded to the high number of students in a group as an impediment to the observation of performance and the review of individual actions within the teamwork, considering only the review of the product or deliverable prepared as a team.

Evaluation in an authentic (and formative) approach requires the faculty member to observe performances, actions, and attitudes. Those who said they did so find it comfortable when the activity does not carry a grade; it is formative: “I am more of an observer of performance during class with activities that have no value” (P11); “I like to observe how they behave, how they work on their project, what each one contributes, what they do and what they do not do as well” (P12).

Observing on-site performance in authentic evaluation is paramount and key to feedback. However, it loses relevance when only the physical and tangible deliverables derived from the process are considered. This encourages the student to pay full attention to the deliverable, especially if it carries a high point value, and reinforces the student’s focus on the numerical grade, prioritizing it over real, deep learning.

Each student lives their training process, and their learning is different. This implies that the evaluation is individual, even when they work as a team. In these cases, exercises designed to observe the student’s actions and assess not only what they know but also the application of that knowledge and the transfer to real or simulated situations are necessary. However, when these exercises are done “at home,” and only the product generated is reviewed, the faculty loses the opportunity to evaluate the student individually.

What is common in teamwork is that the same grade is assigned to all members because, in reality, what is being evaluated is the deliverable and not the learning of each student. “You are going to investigate, you are going to share, … they are going to work as a team, and that team activity is the one that will be graded” (P13).

Several faculty members consider it difficult to evaluate learning individually when teamwork is requested. Although they would like to do so, the belief is “I cannot control if they do it in a team if it is done by someone else … I cannot control that part, can I? … Then each one hands it over, that is how the activity goes, but well… how do you know if he did it with someone else?” (P14). They do not have procedures that allow the assessment of individual performance and the student’s contributions to the process and product of the group [51,52]. The deliverables or product provide evidence of what the student achieves individually or in teams regularly for a summative assessment with a high percentage of the grade.

Faculty members use various authentic and formative assessment strategies but are limited by traditional expectations and lack of methodologies for individual assessment in teamwork. They have evolved their assessment practices towards continuous assessment with frequent feedback and contextual relevance for students. However, there are shortcomings in systematic follow-ups for formative assessment and collaborative work evaluation.

3.3. What Do Faculty Members Evaluate?

In this section, faculty members consider the criteria formally established in their program to define evaluation strategies and tasks. A valid assessment reflects a clear purpose and correspondence with learning purposes and teaching strategies. For De la Orden [53], this coherence is a virtue of evaluation; therefore, it will be a powerful factor in educational quality and the effectiveness of the axiological system that sustains it.

Although the professors focus the evaluation on specific content or on the activities that they ask of the students on a day-to-day basis, they do not lose sight of the fact that they come from the formal objectives of the program: “If, as I said, I evaluate by the topics that we are seeing, they have to do with the objectives and in the model of our institution with the competencies that they have to develop” (P10). When this coherence does not exist, not only the virtue but also the teleological attribute of the evaluation is fragmented, and its validity is questioned; the assessment is reduced to an object of control, in which the faculty member achieves a false tranquility if the student does what they ask. Moreover, what matters to the student is accreditation. Moreno Olivos [54] points out that the process leans towards a positivist perspective when evaluating tasks that lack relevance to the purposes.

Designing the evaluation based on the aims or objectives ensures that the evidence or evaluation tasks are not judged with external criteria of a different nature, such as compliance, form, or punctuality. The validity of the assessment supports the interpretive judgment of what it assesses [55]: “If the objective of the module is for them to design a didactic sequence to apply elements of an instructional design, then my logic would tell me that I have to do a project, … that will be evidence, I review the progress of the project to know what they are learning” (P15).

With this alignment and collection of evidence, Professor P8 recognizes in the strategy and the instrument “that the evaluation reflects that (the objectives) from the design of the instrument, (he keeps thinking, he wants to give examples) not to ask them how much it is, that is, that they make calculations, but that the instrument itself is by the vision of the course, it is about solving problems, analyzing information in context, and so on, not mechanizing a procedure”.

For Kane and Wools [56], a systematic and effective approach to achieving validity involves three actions: consistency in the interpretation and use of results, evidence that supports interpretation and intended uses, and what could be called a meta-evaluation, i.e., an evaluation of how well the evidence supports interpretation. These same authors propose that the functional perspective is the main one in classroom evaluation, which focuses on the usefulness of the evidence and the information collected.

Up to this point, evaluation has been analyzed from its teleological perspective, since validity is established through alignment with the ends. However, the quality of evaluation can also be understood from other perspectives (methodological, axiological, and psychological) [57], each representing a different approach in which what is expected to be achieved is based on the method, the values, or the student’s representation of what they have learned.

Faculty members recognize that each discipline calls for different methods of evaluation, and their experiences point to this: “a course where the student has investigated or worked based on problems, then his evaluation, I would expect it to be also based on problems, based on similar situations and not just an exam” (P3); “… they have to do readings and part of the evaluation is the questions they ask about those texts, they ask them in class and I take it as participation” (P16).

Although faculty members take care that the strategies and instruments they implement evaluate what is expected from the teleological perspective, they recognize substantial difficulties: “the truth is that it is difficult to know everything that the student has learned” (P17). This faculty member refers particularly to the fact that the student usually learns more than the formal curriculum establishes. The informal and the hidden curriculum also influence their learning.

Whether the validity of the evaluation is related to the alignment of the aims or purposes, the methodology, or, in general, the didactic management, for faculty members, it represents a challenge, as they point out: “yes, and because I believe that in any educational model evaluation is what causes the most, the most conflict” (P3); “evaluation is a very difficult subject, I think it is the most difficult part of the teaching job. Preparing, and teaching is a pleasure… evaluation is the serious and difficult part” (P6); “Evaluation is complex, we need to learn how to do it more objectively… (look for another word) integral and be sure that what we observe is really what the student has learned” (P2).

Faculty members prioritize formative purposes for evaluation, which adds quality and validity from a teleological perspective. However, they face challenges as traditional methods cannot measure the complex learning construct. Qualitative and personalistic approaches are necessary. Education proposes models, but faculty members remain skeptical when grading student achievements.

3.4. What Do Faculty Members Evaluate for?

The reality studied supports positions holding that the assessment for learning approach has become more visible in teaching practice, consistent with an educational process focused on learning [58,59]. However, these positions do not specify how to develop it in a timely manner so that it impacts learning.

This research shows that the educational institution’s design and curricular strategy weigh heavily, as they trigger messages that the faculty incorporates as part of their dialogue at the individual and collegiate levels, creating institutionalized thinking. The environment in which the research was carried out is immersed in a process of change that promotes a high level of didactic training according to the educational model.

Sometimes, changes that come from the “top-down” are not well received [60], but, as Fullan [61] mentions, strategies in both directions are necessary. This author argues that initiatives that emerge from “above” and are accompanied by timely capacity building that transforms faculty practice guarantee change, even radical change.

Within the environment where the research was carried out, feedback is indeed a key element. It evolved first through an institutional “mandate,” which was met with the indisputable commitment that faculty members assume in their role as trainers, and it then began to become part of the reality of the evaluation process, grounded in the belief that it promotes student learning.

Feedback is an action that links assessment with learning. In the research, the code with the greatest rootedness and density shows that faculty members recognize assessment as a process that contributes to learning. Even though assessment persists as a form of measurement, this code highlights that, in this context, faculty members are moving towards formative assessment.

For faculty members, the important thing is to provide feedback on a day-to-day basis. As Professor P14, from the business area, mentions, “…. well… At times, I took time to go with them to the laboratories, so in the laboratory, what I did is I walked among them, the doubts I had were resolved, (silence)… that is, in that interaction, I knew and realized what they were doing”.

Professor P11, from the humanities area, commented that it is not easy to provide effective feedback and, therefore, “… it is important to observe them, to give them continuous feedback (upward inflection in the voice), on partial tasks, to help them improve”. As Professor P4, from the engineering area, said, “I evaluate everything given to me. If the student gives me something, I will give it feedback! (raises the tone of voice). I cannot keep something that they give me and not tell them if it was right or wrong, whatever they do, you must give them feedback, that is why I say that… (pause) … It takes a long time, but it is the greatest value I see in this process”.

This type of practice responds to an evaluation approach whose fundamental purpose is to achieve learning through its results, observing behaviors and commenting on them in an environment of confidence. Santos Guerra [62] recommends turning evaluation from a threat into an aid. It was also observed that feedback is not a mechanical practice carried out merely because the educational model instructs it; faculty members’ conceptualization highlights its importance and usefulness. Professor P4 mentions: “I see the evaluation process as this moment in which you give feedback to the student on their areas of opportunity, not to make a criticism that indicates the student is wrong, but rather, to tell them that you are standing at this point and you need to take these actions to get to this other place, which is the goal that was set from the beginning, at the beginning of the course, workshop or whatever”. Similarly, Professor P16, from the area of social sciences, mentions: “… assessment encourages learning, I do believe it (he seems to say it to ensure it). I often use evaluation to encourage them, so I like this feedback”.

However, faculty members are aware of the conditions of classroom work, the limitations that the number of students per group may represent, and even the students’ lack of interest. One professor expressed this in an interview: “I find it difficult to conduct qualitative evaluations of each student, (pause) we have large groups, and that does not help. In addition, as professors, we are always involved in projects, research, and administrative activities; even if you want to, you must try to be practical” (P17). He mentions that, with several evaluation strategies in use, it is not always possible to give students all the feedback. Professor P2 shares a similar thought: “Feedback is important, but not everyone can receive it in depth; it is not possible because it is not always of interest to the student. Some seek it; others do not; perhaps they do not see the value (accompanied by a gesture in which they raise their shoulders as if indicating indifference), or perhaps they cannot listen and not feel offended or criticized”. She adds that because of her number of groups and students, “I cannot always give immediate or timely feedback”.

The data spark debate about what the theory, the model, the design, and the conditions of reality dictate. A possible interpretation is that faculty members are still on the road. In this context, they have undertaken a change that is not a finished model but a journey with uncertainties, apparent setbacks, and inertia, but it reflects a difference from what many faculty members interviewed said.

This research did not aim to analyze the issue of change management in education. However, each approach in the theoretical sampling revealed that contexts, understood as intersubjective constructs shaped in the institution–faculty member–student interaction [63], could not be overlooked, and each of these contexts shapes the faculty’s narrative. Institutional change and dialogue are (de)constructed by each faculty member; from that perspective, they discuss and implement their students’ evaluation and feedback process. For this reason, each faculty member’s narrative shows how they assimilate, interpret, and bring feedback to life as an inherent element of assessment that is relevant to learning. It also shows their recognition of the conditions and circumstances that, for some, are limiting and, for others, allow them to succeed. Everyone is on a journey.

3.5. Implications of the Findings

Using a grounded theory approach, we have examined how a few academics in Mexico perceive the purpose and significance of evaluation. It is essential to connect these findings with broader studies on how compulsory and higher education teachers view the purpose, function, and nature of assessment processes.

Our work examines the ethical aspects of assessment methods used in education. It emphasizes the need for assessment practices to align with educational institutions’ goals and society’s expectations. Transparency, academic integrity, and adherence to educational institutions’ values and standards are crucial for establishing a fair and effective assessment process that benefits everyone involved.

Our work also emphasizes the importance of student feedback in the assessment process, as it can offer valuable insights that may differ from institutional beliefs and policies, enriching the overall educational experience. We also consider the impact of timing and the duration of assessments on professors’ perceptions, especially in a modern academic environment that includes many additional responsibilities beyond teaching duties.

Other researchers have also studied the perceptions of teachers in different contexts. For example, in exploring teachers’ beliefs about assessment, Barnes and colleagues [64] explain that beliefs include personal truths, experiences of reality, emotions, and memories. They also suggest that beliefs are part of a dynamic mental structure consisting of rules, concepts, ideas, and preferences; in essence, knowledge. While some teachers overlook assessment because they prioritize measurability, cultural differences shape views on assessment. The authors stress that ethical considerations around power and assessment warrant further research.

Fulmer et al. [65] also undertook a study to identify the factors that shape teachers’ adoption of new instructional practices in the classroom. They categorized these factors into three levels of influence: the individual, the school, and society. The study found a significant research gap in understanding how school and societal factors influence teachers’ practices, emphasizing the need for further investigation. It also highlighted the importance of considering contextual factors in assessments and teachers’ extracurricular experiences in shaping teaching values.

Xu and Brown [66] analyzed how teachers perceive and use assessments, considering factors like the school environment. Their study introduced the Teacher Assessment Literacy in Practice (TALiP) framework, which underscores the need for teachers to reflect on their assessment practices and adapt to improve. The research highlighted the impact of teachers’ self-perception as assessors and the influence of school environments on assessment practices. It addressed teachers’ challenges in implementing preferred assessment methods due to institutional regulations and the school environment.

In a study by Bonner et al. [67], researchers used self-determination theory to show how teachers’ beliefs interact with external mandates. The study found that teachers’ beliefs and self-perceptions affect their alignment with standards-based systems. It suggested that professional development should focus on providing resources to help teachers develop assessments aligned with external standards. Schools do not always establish norms coherent with the external system, leading to difficulties in implementing alternative assessments desired by teachers. In this regard, teachers expressed resentment and fear due to feeling compelled to comply with the limited structure of the system, impacting their professional autonomy and beliefs.

Pastore and Andrade [68] studied teacher education about assessment knowledge and skills. They introduced a model with three parts: understanding concepts, putting knowledge into practice, and considering social and emotional aspects. The model emphasizes the importance of practical, conceptual, and socio-emotional understanding of assessment and received positive feedback from 35 experts. The study aims to start conversations and future research on assessment knowledge and skills to shape teacher education and professional development programs.

In their study, Estaji et al. [69] examined the skills and knowledge teachers need for assessing students in digital environments. They focused on Teacher Assessment Literacy in Digital Environments (TALiDE). They discussed the use of digital assessments in education, challenges during COVID-19, academic integrity, and the skills needed for digital assessments. The study emphasized the importance of clear criteria and rubrics for scoring digital assessments to ensure fairness and to address concerns about academic honesty in digital evaluations.

These studies analyze how educators’ beliefs about assessment influence their teaching methods. They explore the factors that significantly affect teachers’ assessment practices, such as the school environment, extracurricular activities, and external policies. They also introduce theoretical frameworks, which help educators to understand and apply assessment methods in traditional and digital classroom settings. The literature emphasizes the practical challenges teachers face when implementing preferred assessment strategies within existing institutional and regulatory frameworks. Additionally, it highlights the role of professional development programs in assisting teachers to align their assessment practices with external standards while emphasizing the tension between educators’ autonomy and standardized educational systems.

Considering what has been studied before, it is essential to merge the ethical considerations of assessment practices with the theoretical frameworks presented in this study. By linking these ethical principles with theoretical constructs such as TALiP and TALiDE, a more comprehensive understanding of how these frameworks can incorporate ethical considerations into practical assessment methods can be achieved. Additionally, the impact of professors’ additional responsibilities, as highlighted, should be examined concerning the implementation of these theoretical frameworks. This should also include recommendations for aligning professional development with ethical practices and considering student perspectives, ultimately bridging the gap between theory and practice in assessment.

4. Conclusions

Faculty members’ views on evaluation differ and are shaped by their expertise and personal and professional experiences. However, their approach is also influenced by the educational context, the curriculum design, teaching methods, and the institution’s evaluation policies. This demonstrates that evaluation extends beyond mere content retention measurements or end-of-subject exams.

Educators assess students’ performance to determine if they have met the expected standards. They understand the importance of aligning the assessment with learning objectives to ensure a fair and accurate evaluation. They strive to uphold objectivity and avoid disadvantaging students with poorly designed assessments. Therefore, they emphasize not only correctly answered tests but also activities that demonstrate critical thinking and the application of developed skills to real-world problems.

This study emphasized instructors’ ongoing assessments and their focus on feedback. They understand that their comments to students direct them toward improvement and impact their motivation, performance, and self-direction.

Based on the analysis and discussion of the results, it can be concluded that faculty members recognize the enrichment of assessment methodologies. They no longer solely rely on traditional, summative, retention-based, punitive approaches; instead, they embrace new evaluation methods, acknowledge the principles of their theoretical frameworks, adopt an evaluation approach to facilitate learning rather than learning to be evaluated and infuse creativity into their evaluation practices.

It is important to continue developing and enhancing instructors’ assessment skills. Different authors suggest the knowledge, actions, and qualities educators should possess regarding evaluation. It is recommended that programs be created that provide them with conceptual clarity, inspire them to develop effective evaluation methods that consider the specificities of their educational environment, and urge them to engage in reflective and constructive discussions about learning, teaching, and assessment.

4.1. Limitations and Future Work

The primary constraint of this study is the limited number of participating professors. Although 60 professors were invited, only half took part in the research. Additionally, the study’s focus on a specific university in Mexico may restrict its applicability to other contexts.

Drawing from the insights gained through the theoretical sample, several research avenues are recommended:

- The moments and ways in which formative and summative assessments coexist. Educators ask themselves the following questions: When is the ideal time to conduct summative evaluation? How far should the formative process of the course advance before a summative evaluation of learning is undertaken? When accepting that one evaluates to learn and does not learn to be assessed, should summative evaluation be conducted when the student recognizes that they have already achieved learning?

- The impact of numerical grading on assessment. The faculty members’ concerns are reflected in these questions: What are the characteristics of valid procedures for awarding a grade with the information provided by the evaluation? What is the role of numerical grading in an assessment approach to learning? How can we ensure the grade does not condition the student’s decisions about what to do?

- The quality of the evaluation process in large groups. This can be a line of research that responds to faculty members’ strong concerns about how to implement formative assessment in large groups. What is the ratio, group size, and feedback time? What are the appropriate strategies for evaluation in large groups?

4.2. Contribution of the Research to the Educational Field

The research makes significant contributions in two key areas. Firstly, it shows how the results can benefit educational decision-making and actions. Secondly, it delves into investigating the phenomenon itself.

The value of this study lies in recognizing that substantial improvements in teaching assessment practices can be achieved through structural changes supported by administrative, academic, and technological processes. Furthermore, systematic research on the evaluation process reveals potential gaps in conceptual models, which often evolve faster than they are implemented in education. This research is pertinent for designing interventions to enhance educator assessment competence and improve the overall assessment quality.

Further exploration is possible in applying grounded theory to educational research, particularly in the context of classroom assessment. Grounded theory methodology challenges established paradigms, fosters reflection, and encourages researchers’ creativity and self-awareness. This approach enhances the transparency and credibility of findings, thereby adding reliability to the study. Emphasizing a qualitative and interpretative approach, it acknowledges evaluation as a dialogic and intersubjective space. This perspective enriches the educational environment for the benefit of faculty members and students, going beyond the mere quantification of learning.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/educsci14090984/s1, Appendix S1: Open-ended survey with three open-ended questions. Appendix S2: Semi-structured interview. Appendix S3: Classification of keywords obtained from the first enclosure to the field. Appendix S4: Codes emerged during systematizing information from teacher interviews (In Spanish).

Author Contributions

Conceptualization, C.H.A.-H. and A.S.G.; Methodology, C.H.A.-H.; Software, C.H.A.-H.; Formal analysis, C.H.A.-H.; Investigation, C.H.A.-H.; Resources, C.H.A.-H.; Data curation, P.V.-V.; Writing—original draft, C.H.A.-H.; Writing—review & editing, A.S.G. and P.V.-V.; Visualization, P.V.-V.; Supervision, A.S.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The authors will make the raw data supporting this article’s conclusions available upon request.

Acknowledgments

The authors would like to acknowledge the financial support of Writing Lab, Institute for the Future of Education (IFE), Tecnologico de Monterrey, Mexico.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Policy Brief: Sustainable Development and Global Citizenship in Latin America and the Caribbean Education Systems. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000375141_eng (accessed on 20 December 2022).

- El Rompecabezas de la Educación Superior Privada. Algunas Piezas Locales para Comprender las Nuevas Dinámicas del Sector Privado en México. Available online: https://bit.ly/4ceLRXI (accessed on 5 February 2023).

- Paz, A.A.H.; Cancino, V.E.C.; Gonzalez, G.T.; Cordero, O.L. Evaluación y acreditación para el aseguramiento de la calidad de la educación superior en México. Rev. Venez. Gerenc. 2023, 28, 693–712.

- Del Águila Obra, A.R. Revoluciones tecnológicas, paradigmas organizativos y el futuro del trabajo. In Proceedings of the Congreso Interuniversitario Sobre El Futuro Del Trabajo, Madrid, España, 7 July 2020.

- Alvarado Nando, M. La Pandemia de la COVID-19 como Oportunidad para Repensar la Educación Superior en México; University of Guadalajara: Guadalajara, Mexico, 2020; pp. 15–42.

- Ibarra-Saiz, M.S.; Gómez, G.R. Learning to Assess to Learn in Higher Education. Rev. Iberoam. Eval. Educ. 2020, 13, 5–8.

- Rodríguez Gómez, H.M.; Salinas Salazar, M.L. Assessment for Learning in Higher Education: Challenges in the Training of the Teaching Staff in Literacy. Rev. Iberoam. Eval. Educ. 2020, 13, 111–137.

- López-Pastor, V.; Sicilia-Camacho, A. Formative and shared assessment in Higher Education. Assess. Eval. High. Edu. 2018, 42, 77–97.

- Hazi, H.M. Reconsidering the dual purposes of teacher evaluation. Teach. Teach. 2022, 28, 811–825.

- Cabra Torres, F. Quality in Student Assessment: An Analysis from Professional Standards. MAGIS 2008, 1, 95–112.

- Joint Committee on Standards for Educational Evaluation. Available online: https://evaluationstandards.org/ (accessed on 5 February 2023).

- Córdoba Gómez, F.J. La evaluación de los estudiantes: Una discusión abierta. Rev. Iberoam. Educ. 2006, 39, 4.

- Hess, L.M.; Foradori, D.M.; Singhal, G.; Hicks, P.J.; Turner, T.L. “PLEASE Complete Your Evaluations!” Strategies to Engage Faculty in Competency-Based Assessments. Acad. Pediatr. 2020, 21, 196–200.

- European University Association. Available online: https://eua.eu/ (accessed on 3 August 2024).

- Siarova, H.; Sternadel, D.; Mašidlauskaitė, R. Assessment Practices for 21st Century Learning: Review of Evidence; NESET II Report; Publications Office of the European Union: Luxembourg, 2017.

- Western and Northern Canadian Protocol for Collaboration in Education. Rethinking Classroom Assessment with Purpose in Mind. Available online: www.wncp.ca (accessed on 3 August 2024).

- Box, C.; Vernikova, E. Formative Assessment in United States Classrooms; Palgrave Macmillan: Cham, Switzerland, 2019.

- Santiago, P.; McGregor, I.; Nusche, D.; Ravela, P.; Toledo, D. OECD Reviews of Assessment in Education; INEE and OECD Centre: Mexico City, Mexico, 2012.

- OECD. Higher Education in Mexico: Results and Relevance for the Labour Market; OECD Publishing: Paris, France, 2019.

- Moreno Olivos, T. La evaluación del aprendizaje en la universidad: Tensiones, contradicciones y desafíos. Rev. Mex. Investig. Educ. 2009, 14, 563–591.

- Jaffar, A.; Anwar, S.; Shah, S.M.H. Learning Approaches Related to Perception of Assessment in Students at Higher Education Level. SJESR 2020, 3, 135–140.

- Alcalá, D.H.; Pueyo, Á.P.; García, V.A. Student perspective about traditional process and formative evaluation. Group contrasts in the same subjects. REICE. Rev. Iberoam. Calid. Efic. Cambio Educ. 2015, 13, 35–48.

- Moreno Olivos, T. The evaluation culture and the improvement of the school. Perfiles Educ. 2011, 33, 116–130.

- Moss, C.M.; Brookhart, S.M. Advancing Formative Assessment in Every Classroom: A Guide for Instructional Leaders; ASCD: Alexandria, VA, USA, 2019.

- Maldonado, J. Total Quality Fundamentals; MDC: Tegucigalpa, Honduras, 2018.

- Stiggins, R. High Quality Classroom Assessment: What Does It Really Mean? Educ. Meas. Issues Pract. 2015, 11, 35–39.

- McMillan, J. Establishing High Quality Classroom Assessments; Metropolitan Educational Research Consortium: Richmond, VA, USA, 1999.

- Gielen, S.; Dochy, F.; Dierick, S. Evaluating the Consequential Validity of New Modes of Assessment: The Influence of Assessment on Learning, Including Pre-, Post-, and True Assessment Effects. In Optimising New Modes of Assessment: In Search of Qualities and Standards; Springer: New York, NY, USA, 2003; pp. 37–54.

- Brookhart, S.M. Developing Measurement Theory for Classroom Assessment Purposes and Uses. Educ. Meas. Issues Pract. 2003, 22, 5–12.

- Jobs for the Future. Ten Principles for Building a High-Quality System of Assessments. Available online: http://deeperlearning4all.org/10-principles-building-high-quality-system-assessments/ (accessed on 3 August 2024).

- Corbin, J.; Strauss, A. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory; Sage: Thousand Oaks, CA, USA, 1996.

- Flick, U. Introduction to Qualitative Research, 3rd ed.; Morata: Madrid, Spain, 2012.

- Hernández, G.E.G.; Caudillo, J.M. Procedimientos metodológicos básicos y habilidades del investigador en el contexto de la teoría fundamentada. Iztapalapa Rev. Cienc. Soc. Hum. 2010, 31, 17–39.

- Charmaz, K. Constructing Grounded Theory: A Practical Guide through Qualitative Analysis; Sage: London, UK, 2006.

- Blumer, H. Symbolic Interactionism; Time: Barcelona, Spain, 1982.

- Piñeros Suárez, J.C. El interaccionismo simbólico oportunidades de investigación en el aula de clase. RIPIE 2021, 1, 211–228.

- Cuñat Giménez, R. Aplicación de la teoría fundamentada (grounded theory) al estudio del proceso de creación de empresas. In Decisiones Basadas en el Conocimiento y en el Papel Social de la Empresa: XX Congreso Anual de AEDEM; Asociación Española de Dirección y Economía de la Empresa (AEDEM): Palma de Mallorca, Spain, 2007; Volume 2.

- Glaser, B. Naturalist Inquiry and Grounded Theory. Forum Qual. Soc. Res. 2004, 5, 7.

- Charmaz, K.; Belgrave, L. Qualitative Interviewing and Grounded Theory Analysis. In The SAGE Handbook of Interview Research: The Complexity of the Craft, 2nd ed.; SAGE: Thousand Oaks, CA, USA, 2012; pp. 347–365.

- Kvale, S. Interviews in Qualitative Research; Morata: Madrid, Spain, 2008.

- San Martín Cantero, D. Grounded Theory and Atlas.ti: Methodological Resources for Educational Research. REDIE 2014, 16, 104–122.

- Glaser, B.; Strauss, A. The Discovery of Grounded Theory: Strategies for Qualitative Research. In Chapter III. Theoretical Sampling; Aldine Publishing Company: New York, NY, USA, 2017; pp. 45–78.

- Charmaz, K. Constructing Grounded Theory, 2nd ed.; Sage: London, UK, 2014.

- Stufflebeam, D.; Shinkfield, A. Evaluation Theory, Models, and Applications; Jossey-Bass: San Francisco, CA, USA, 2007.

- Stake, R. Comprehensive Assessment and Standards-Based Assessment; Editorial Graó: Barcelona, Spain, 2006.

- Popham, J. Transformative Assessment. The Transformative Power of Formative Assessment; Narcea: Madrid, Spain, 2013.

- Bailey, A.; Durán, R. Language in Practice: A Mediator of Valid Interpretations of Information Generated by Classroom Assessment among Linguistically and Culturally Diverse Students. In Classroom Assessment and Educational Measurement; Brookhart, S.M., McMillan, J.H., Eds.; Taylor & Francis: Abingdon, UK, 2020; pp. 47–62.

- Villarroel, V.; Bloxham, S.; Bruna, D.; Bruna, C.; Herrera-Seda, C. Authentic Assessment: Creating a Blueprint for Course Design. Assess. Eval. High. Educ. 2018, 43, 840–854.

- Villarroel, V.; Bruna, D. ¿Evaluamos lo que realmente importa? El desafío de la evaluación auténtica en educación superior. Calid. Educ. 2019, 50, 492–509.

- Hernán, E.J.B.; Pastor, V.M.L.; Brunicardi, D.P. Authentic Assessment and Learning-Oriented Assessment in Higher Education: A Review of International Databases. Rev. Iberoam. Evaluación Educ. 2020, 13, 67–83.

- Brookhart, S. Summative and Formative Feedback. In The Cambridge Handbook of Instructional Feedback; Lipnevich, A., Smith, J., Eds.; Cambridge University Press: Cambridge, UK, 2018; pp. 52–78.

- Winchester-Seeto, T. Assessment of Collaborative Work—Collaboration versus Assessment. Invited Paper Presented at the Annual Uniserve Science Symposium, The University of Sydney. April 2002. Available online: https://www.cmu.edu/teaching/assessment/assesslearning/groupWorkGradingMethods.html (accessed on 3 August 2024).

- De la Orden, A. Quality and Evaluation: Analysis of a Model. ESE 2009, 16, 17–35. [Google Scholar]

- Moreno Olivos, T. The good, the bad and the ugly: The many faces of evaluation. RIES 2010, 1, 84–97. [Google Scholar]

- National Council on Measurement in Education. Supporting Decisions with Assessment Digital Module 22; Gotch, C., Ed.; Washington State University: Pullman, WA, USA, 2021. [Google Scholar]

- Kane, M.; Wools, S. Perspectives on the Validity of Classroom Assessment. In Classroom Assessment and Educational Measurement; Brookhart, S.M., McMillan, J.H., Eds.; Taylor & Francis: Abingdon, UK, 2020; pp. 11–26. [Google Scholar]

- Bermúdez Sarguera, R.; Rodríguez Rebustillo, M. Knowledge and Skill: Evaluating the Immeasurable? Rev. Univ. Soc. 2021, 13, 363–374. [Google Scholar]

- Sánchez Mendiola, M.; Martínez González, A. Evaluación de y para el Aprendizaje: Herramientas y Estrategias; Imagia Communication: Mexico City, Mexico, 2020. [Google Scholar]

- Moreno Olivos, T. Evaluación del Aprendizaje y para el Aprendizaje: Reinventar la Evaluación en el Aula; UAM: Mexico City, Mexico, 2016. [Google Scholar]

- Díaz-Barriga Arceo, F. Curricular reforms and systematic change: An absent but needed articulation to the innovation. Rev. Iberoam. Educ. Super. 2012, 3, 23–40. [Google Scholar]

- Fullan, M. Change Forces: Probing the Depths of Educational Reform; Routledge: Abingdon, UK, 2012. [Google Scholar]

- Santos Guerra, M.A. La Evaluación como Aprendizaje. Cuando la Flecha Impacta en la Diana; Narcea: Buenos Aires, Argentina, 2014. [Google Scholar]

- Van Dijk, T.A. Discourse and Context; Editorial Gedisa: Barcelona, Spain, 2013; p. 64. [Google Scholar]

- Barnes, N.; Fives, H.; Dacey, C.M. Teachers’ Beliefs about Assessment. In International Handbook of Research on Teachers’ Beliefs; Fives, H., Gill, M.G., Eds.; Educational Psychology Handbook; Routledge: New York, NY, USA, 2015; ISBN 978-0-415-53922-7. [Google Scholar]

- Fulmer, G.W.; Lee, I.C.H.; Tan, K.H.K. Multi-Level Model of Contextual Factors and Teachers’ Assessment Practices: An Integrative Review of Research. Assess. Educ. Princ. Policy Pract. 2015, 22, 475–494. [Google Scholar] [CrossRef]

- Xu, Y.; Brown, G.T.L. Teacher Assessment Literacy in Practice: A Reconceptualization. Teach. Teach. Educ. 2016, 58, 149–162. [Google Scholar] [CrossRef]

- Bonner, S.M.; Torres Rivera, C.; Chen, P.P. Standards and Assessment: Coherence from the Teacher’s Perspective. Educ. Assess. Eval. Acc. 2018, 30, 71–92. [Google Scholar] [CrossRef]

- Pastore, S.; Andrade, H.L. Teacher Assessment Literacy: A Three-Dimensional Model. Teach. Teach. Educ. 2019, 84, 128–138. [Google Scholar] [CrossRef]

- Estaji, M.; Banitalebi, Z.; Brown, G.T.L. The Key Competencies and Components of Teacher Assessment Literacy in Digital Environments: A Scoping Review. Teach. Teach. Educ. 2024, 141, 104497. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).