Abstract

The transformative integration of artificial intelligence (AI) into educational settings, exemplified by ChatGPT, presents a myriad of ethical considerations that extend beyond conventional risk assessments. This study employs a pioneering framework encapsulating risk, reward, and resilience (RRR) dynamics to explore the ethical landscape of ChatGPT utilization in education. Drawing on an extensive literature review and a robust conceptual framework, the research identifies and categorizes ethical concerns associated with ChatGPT, offering decision-makers a structured approach to navigate this intricate terrain. Through the Analytic Hierarchy Process (AHP), the study prioritizes ethical themes based on global weights. The findings underscore the paramount importance of resilience elements such as solidifying ethical values, higher-level reasoning skills, and transforming educative systems. Privacy and confidentiality emerge as critical risk concerns, along with safety and security concerns. This work also highlights reward elements, including increasing productivity, personalized learning, and streamlining workflows. This study not only addresses immediate practical implications but also establishes a theoretical foundation for future AI ethics research in education.

Keywords:

ChatGPT; analytical hierarchy process; education; ethics conundrum; risk; reward; resilience; decision-making

1. Introduction

The concept of AI-driven education, as proposed by George and Wooden [1], envisions a revolutionary shift where artificial intelligence (AI) plays a central role in transforming and enriching the learning experience. This evolution in education and research has been a captivating journey [2] marked by rapid growth, substantial investments, and swift adoption. However, educators may harbor concerns about effectively leveraging the pedagogical advantages of AI and its potential positive impact on the teaching and learning processes [3]. The imminent era of AI and augmented human intelligence poses challenges for educational environments, where the demands on human capacities risk outpacing the potential response of the existing educational system [4]. Innovative technologies, representing cutting-edge advancements, have the capability to transform various aspects of society and the economy [2]. In the face of powerful new technologies, changing job landscapes, and the looming threat of increased inequality, the current configuration of the education sector is poised for a destabilizing shift. Successfully addressing the challenges ahead will necessitate a reconfigured learning sector [4] and effective policy strategies.

In light of the transformation brought about by AI technologies, the methods of learning, pedagogy, and research have undergone a significant metamorphosis [2]. For instance, the integration of AI into educational settings has ushered in unprecedented opportunities, transforming the learning landscape [1,5]. Among these AI applications, ChatGPT, a state-of-the-art language generation model, has emerged as a versatile tool in educational contexts. The academic community is increasingly leveraging ChatGPT for diverse purposes, ranging from automated grading to personalized learning experiences [6,7,8,9]. However, its proliferation brings forth a myriad of ethical challenges [10,11,12,13,14], demanding an integrated approach to promote responsible usage [15,16]. Accordingly, as institutions embrace these advancements, questions of ethical responsibility, transparency, and the potential societal impact of ChatGPT deployment become paramount [15].

This study recognizes the need for a holistic understanding of the ethical landscape, acknowledging that ethical considerations extend beyond risk assessment to encompass potential rewards and the resilience needed to navigate evolving challenges. As a result, the study undertakes an exploration of the ethical themes surrounding ChatGPT utilization in education [17], employing innovative risk, reward, and resilience (RRR) terminologies to guide the data extraction process. In this context, the study identifies and categorizes ethical concerns associated with ChatGPT within educational contexts, offering a structured lens for evaluating risk, reward, and resilience dynamics, by using an Analytic Hierarchy Process (AHP). This research approach positions this study at the forefront of AI ethics research. By examining the interplay between risk, reward, and resilience, the research not only offers immediate practical implications but also establishes a theoretical foundation for future studies in the rapidly evolving field of AI in education.

This study aims to investigate the ethical conundrums of ChatGPT by employing the RRR integrative framework and the AHP method. It proposes a framework to address these ethical dilemmas and supports decision-making regarding its use in educational settings. The paper is organized as follows: Section 2 reviews existing literature and identifies the research gap addressed by this study; Section 3 discusses the theoretical framework underpinning the research; and Section 4 details the research methodology, discussing the application of AHP. The study’s results, along with insights and analyses derived from the methodology, are presented in Section 5. Section 6 discusses the contributions and implications of the study, covering theoretical aspects, practical applications, limitations, and future research directions. Finally, Section 7 concludes the paper.

2. Review of Related Studies

To comprehensively understand the literature on ChatGPT, this study conducted a quick search on Scopus on 2 August 2024 using the keywords ‘ChatGPT AND utilization OR usage AND education’. The search yielded 315 documents. After excluding non-English documents, 307 remained, comprising various types of publications such as articles, conference papers, reviews, and book chapters. We further refined the selection by focusing only on journal articles, resulting in 181 documents. We assume that other publication types (conference papers, conference reviews, notes, letters, editorials, and book chapters) often repeat ideas or present work in progress that has already appeared, or will eventually appear, in journal articles [18,19,20,21]. Review articles were also excluded as they are not original research papers. The dataset was then downloaded, and we scanned the titles and abstracts to identify articles relevant to this study’s scope: those that investigated ChatGPT utilization and usage in educational contexts. Among the 181 articles, several themes emerged, such as technology adoption, acceptance, usage and capabilities, performance, review and conceptual studies, and unrelated topics. However, a detailed analysis of these articles is beyond the scope of this paper. Therefore, this study discusses the articles representing the relevant literature based on taxonomy, theoretical models, methodologies, and research gaps.

2.1. Taxonomy of ChatGPT Utilization in Education

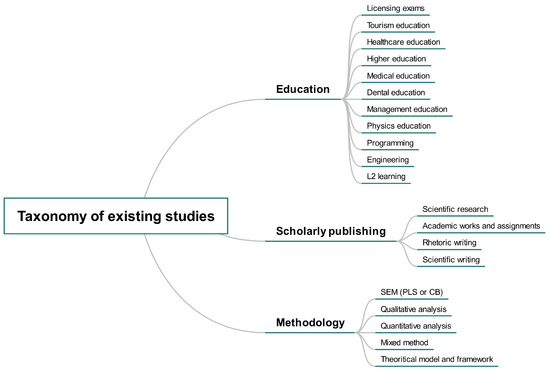

The comprehensive review of literature spans diverse fields, shedding light on the varied applications and implications of ChatGPT. Specifically, significant contributions have been made in the educational sector [6,8,9,22,23]; one study [14] provided insights into scholarly publishing, and another [7] narrowed its scope to health care education through a literature review based on PRISMA guidelines. Similarly, Qasem [24] explored scientific research and academic works, showcasing the broader academic application. Many contributions have focused on scientific research [12,25]. Moreover, a study by Yan [26] explored L2 learning; Ray [27] delved into customer service, healthcare, and education; and Taecharungroj [28] analyzed ChatGPT tweets. In addition, Gruenebaum et al. [29] focused on medicine, specifically obstetrics and gynecology. Kooli [23] provided insights that span education and research, offering a multifaceted understanding of ChatGPT’s impact on these interconnected realms. Furthermore, Cox and Tzoc [30] delved into more general applications, potentially encapsulating a broader perspective on ChatGPT utilization. Karaali [31] focused on quantitative literacy, providing valuable insights into the intersection of ChatGPT and numerical comprehension. Jungwirth and Haluza [32] ventured into scientific writing, providing valuable insights from public health perspectives. Pavlik [13] contributed to journalism and media, examining the impact of ChatGPT in shaping narratives and content creation. Finally, Geerling et al. [33] focused on economics and assessment, unraveling the potential implications of ChatGPT in these domains. These studies underline the diverse applications of ChatGPT across educational environments, emphasizing its transformative potential in reshaping various educational tasks and prompting the need for effective considerations in policy, research, and practical applications. Figure 1 captures the taxonomy of specific areas and contributions made in the literature, as well as methodologies employed thus far.

Figure 1.

Taxonomy of research areas in the literature.

2.2. Theoretical Models of Prior Research

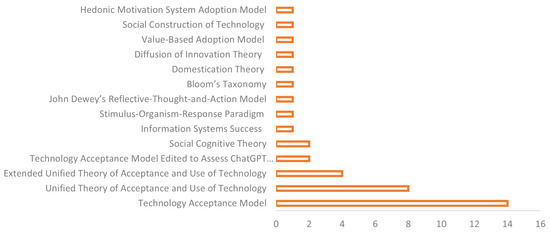

The current literature on the adoption and utilization of ChatGPT in educational settings employs a variety of theoretical models to understand and predict behavior. Among these, the unified theory of acceptance and use of technology (UTAUT) and its extension, UTAUT2, as well as the technology acceptance model (TAM) are prominently featured. Figure 2 shows the frequency of the theoretical models used to investigate ChatGPT utilization in education.

Figure 2.

Theoretical models from the existing literature.

Firstly, several studies employ the UTAUT or UTAUT2 model to investigate the behavioral intentions of students and faculty members toward ChatGPT [34,35,36,37]. These studies span various countries and educational settings. For instance, Elkefi et al. [38] utilized a mixed-method triangulation design based on UTAUT, gathering data from engineering students in developing countries through semi-structured surveys. Bouteraa et al. [39] also adopted UTAUT in conjunction with social cognitive theory (SCT), focusing on the role of students’ integrity in adoption behavior. Similarly, Bhat et al. [40] examined educators’ acceptance and utilization using an extended UTAUT model. Strzelecki et al. [36] focused on Polish academics, incorporating personal innovativeness into UTAUT2. In addition, Arthur et al. [41] and Grassini et al. [42] employed the UTAUT2 model to examine the predictors of ChatGPT adoption among higher education students. Salifu et al. [43] conducted a similar investigation among economics students in Ghana, and Elshaer et al. [44] integrated gender and study disciplines as moderators, finding significant moderating effects on the relationship between performance expectancy and ChatGPT usage.

Secondly, TAM is another widely used framework in the literature. Gustilo et al. [45] used TAM to understand factors influencing the acceptance of ChatGPT, while Kajiwara and Kawabata [46] examined the impact of teaching ethical use among students aged 12 to 24. Cambra-Fierro et al. [47] assessed university faculty members using TAM, and Tiwari et al. [48] investigated students’ attitudes toward ChatGPT for educational purposes. Abdalla [49] used a modified version of TAM to investigate college students, with personalization acting as a moderator. Abdaljaleel et al. [50] and Sallam et al. [51] employed a TAM-based survey instrument called TAME-ChatGPT (Technology Acceptance Model Edited to Assess ChatGPT Adoption) to examine ChatGPT integration in education, with participants from various countries, including Egypt, Iraq, Jordan, Kuwait, and Lebanon. Beyond these examples, the extensive use of TAM to investigate ChatGPT is evident across the current literature [52,53,54,55,56,57,58,59,60].

In addition to UTAUT and TAM, other theoretical frameworks have been employed. Specifically, Duong et al. [61] integrated the information systems success (ISS) model with the stimulus–organism–response (SOR) paradigm to explore factors affecting students’ trust, satisfaction, and continuance usage intention of ChatGPT. Mandai et al. [62] approached ChatGPT’s impact on higher education through John Dewey’s reflective-thought-and-action model and revised Bloom’s taxonomy. Jochim and Lenz-Kesekamp [63] used domestication theory to explore the adaptation process of text-generative AI among students and teachers. Abdalla et al. [64] applied the diffusion of innovation theory (DIT) to investigate ChatGPT adoption by business and management students. Mahmud et al. [65] evaluated factors within the extended value-based adoption model (VAM) to understand university students’ attitudes toward ChatGPT. Other theoretical models and approaches include social construction of technology (SCOT) theory [66], interpretative phenomenological analysis (IPA) [67], and the hedonic motivation system adoption model (HMSAM) [68].

Overall, the diversity of theoretical models highlights the multifaceted nature of research on ChatGPT adoption in education, with each model providing unique insights into the factors influencing acceptance and usage. This theoretical pluralism enriches the understanding of ChatGPT’s integration into educational contexts and underscores the importance of a comprehensive approach to studying technology adoption.

2.3. Methodologies of Prior Research

The methodologies employed in the research on ChatGPT adoption and utilization in education span a variety of quantitative and qualitative approaches, often leveraging established theoretical frameworks and advanced statistical techniques to examine different influencing factors. Several studies have utilized structural equation modeling (SEM) to explore the factors influencing ChatGPT adoption [40,49,54]. For example, Cambra-Fierro et al. [47] assessed the impact of a series of factors on ChatGPT adoption among university faculty members using covariance-based structural equation modeling (CB-SEM). Abdalla et al. [64] investigated business and management students using partial least squares structural equation modeling (PLS-SEM). Additional studies that use CB-SEM or PLS-SEM are evident across the literature, and the research spans higher education teachers, undergraduate and postgraduate students, as well as post-primary education teachers and students in various countries, including Vietnam, Norway, Egypt, Poland, Bangladesh, Oman, South Africa, the Czech Republic, China, Malaysia, and Saudi Arabia [34,35,36,37,41,42,44,50,55,56,57,60,68,69,70].

Additionally, hybrid methodologies have been adopted to capture both linear and nonlinear relationships in the data. Mahmud et al. [65] and Salifu et al. [43] integrated PLS-SEM with artificial neural networks (ANN) and deep neural networks (DNN) to enhance the precision of their analyses. This hybrid approach reveals a growing trend towards utilizing complex models to better understand the multifaceted nature of ChatGPT adoption. Moreover, qualitative methods [71,72,73] also play a significant role in understanding the subjective experiences and perceptions related to ChatGPT. Specifically, studies by Jangjarat et al. [72] and Komba [73] employed content and thematic analysis, using software such as NVivo to analyze qualitative data from interviews and chat content. In addition, Espartinez [74] utilized Q-Methodology, Sun et al. [75] employed a quasi-experimental design, and others applied statistical methods such as descriptive analysis and inferential statistics like Chi-square or regression analyses [76,77] to capture the perspectives of students and academics as well as the impact on programming mode behaviors regarding ChatGPT.

Furthermore, Bukar et al. [78] utilized AHP to propose a decision-making framework for ChatGPT utilization in education through 10 expert panels. Mixed-method designs have also been used to combine the strengths of quantitative and qualitative approaches. Adams et al. [79] employed a sequential explanatory mixed-method design to explore university students’ readiness and perceived usefulness of ChatGPT for academic purposes, using SPSS and ATLAS.ti for data analysis. These diverse methodologies highlight the comprehensive efforts undertaken by researchers to dissect the multifactorial influences on ChatGPT adoption and utilization in educational settings, providing rich insights and guiding future research directions.

2.4. Research Gap and Motivation

Despite the extensive research in the current literature, which applies various theories from the technology adoption literature together with SEM-based and qualitative methodologies to explore the factors influencing ChatGPT adoption in educational contexts, there is a notable gap in the application of the RRR framework and AHP to rank and prioritize these factors. Firstly, while the RRR framework provides a robust foundation for understanding the multifaceted dimensions of generative AI (Gen-AI) [17], it lacks a structured approach to quantitatively assess and prioritize these components. This limitation hampers the ability of policymakers and stakeholders to make well-informed decisions regarding the adoption and utilization of Gen-AI. Secondly, the AHP method has seen little application in the context of ChatGPT utilization for policy and decision-making [78], even though it is a powerful decision-making tool that can systematically rank and prioritize the components of the RRR framework. Hence, this study aims to close this gap by employing AHP to evaluate the relative importance of risk, reward, and resilience themes, facilitating an evidence-based approach to Gen-AI utilization decisions.

3. Theoretical Background and Framework

The diverse studies identified from the literature have collectively reported a spectrum of findings regarding ChatGPT. The insights from these studies encompass a wide range of themes, including ethical concerns, perceived benefits, and strategies for individuals and society to navigate the implications of ChatGPT. The information was extracted and strategically classified into the three interconnected categories of risk, reward, and resilience through a systematic literature review (SLR) as outlined in Bukar et al. [17]. This classification was motivated by the integrated policy-making framework known as RRR, as proposed by Roberts [80].

This framework provided a structural foundation for understanding and categorizing the identified issues, supporting a structured exploration of ChatGPT’s landscape. Following this, the study conducted a frequency analysis to quantify the prevalence of the identified concerns. Subsequently, ethical and risk-related themes, specifically those falling under the risk category, were selected for a detailed examination, in which these themes were used to construct a decision-support framework that leveraged the AHP to discern their relative importance and guide decisions on whether to restrict or legislate ChatGPT utilization [78]. The present study extends this analysis by encompassing all elements of the RRR framework: risk, reward, and resilience. This comprehensive approach allows for a holistic consideration of themes gleaned from previous studies. By incorporating all the associated themes of RRR, the study ensures that ethical concerns, risks, and potential rewards associated with ChatGPT are thoroughly examined and prioritized. This methodological progression underscores the depth and rigor of the study, providing valuable insights for policymakers and stakeholders grappling with the ethical implications of ChatGPT in the educational environment. The following sections discuss these issues in turn.

3.1. Risk

Risk arises where threat, exposure, and vulnerability converge [80]. This convergence depends on the severity of the threat and its interaction with the exposure and vulnerability. In the context of ChatGPT utilization in education, risk emerges at the intersection of potential threats, such as misuse or ethical breaches; exposure, which includes the extent to which ChatGPT is integrated into educational practices; and vulnerability, which refers to the susceptibility of students and educators to these threats. The level of risk is influenced by the severity of the potential threats and how they interact with the degree of exposure and vulnerability within the educational environment. Accordingly, the SLR and RRR conceptual study [17] played a crucial role in identifying and synthesizing a comprehensive list of ethical and risk-related concerns associated with the implementation of ChatGPT. Building upon the insights gleaned from previous studies [17,78], the current study refined and narrowed down the list [78]. As a result, seven concerns were selected based on their frequency count, as presented in Table 1. For a detailed account of the extraction process, refer to Bukar et al. [17,78]. The selected concerns are considered relevant based on their frequency count and have been earmarked as risk themes for this investigation. This approach aims to investigate the most significant risks and to assess and rank them alongside reward and resilience issues.

Table 1.

Risk ethical themes based on frequency count.

3.2. Reward

The reward is determined by factors like opportunity, access, and capability [80]. In the context of ChatGPT utilization in education, opportunity refers to the potential benefits that ChatGPT can offer, like enhanced learning experiences and personalized education. Access involves the conditions and channels through which educators and students can effectively leverage ChatGPT, such as technological infrastructure and availability of resources. Capability encompasses the internal attributes of the educational system and its participants, including their digital literacy and adaptability, which determine the extent of the benefits they can achieve from utilizing ChatGPT. Together, these elements define the potential rewards that can be realized through the strategic and informed integration of ChatGPT into educational practices.

Accordingly, themes associated with the rewards of ChatGPT usage were extracted [17] and synthesized. This process involved not only identifying these themes but also computing their frequency count to discern patterns and assess their significance within the dataset. Further, a detailed examination of the reward-related information was performed, focusing on identifying recurring themes such as common topics, ideas, patterns, and approaches. The identification of primary themes was grounded in the observation of connections among sub-themes sharing a logical context. For instance, themes like providing feedback, prompt writing, collaboration and friendship, and increased student engagement were collectively categorized under the umbrella term “question answering”. This systematic approach was consistently applied to the remaining themes.

Building upon this analysis, seven reward-related themes were singled out based on their frequency count and thematic relevance, as outlined in Table 2. These carefully selected themes are regarded as more significant and have been designated as criteria for further investigation utilizing the AHP. This strategic selection aims to concentrate on the most significant rewards stemming from the utilization of ChatGPT in educational settings. Nevertheless, some themes were not analyzed in this study due to their low frequency and limited thematic relevance, including decision support, expertise, and judgment [23,81], multilingual communication and translation [14,81], cost-saving [7], passed exams [82], pitches [83], support societal megatrends [84], and transformation [11].

Table 2.

Reward themes of ChatGPT based on frequency count.

3.3. Resilience

The core of resilience is found in the “ability of entities and systems to absorb, adapt to, and transform in response to ongoing changes” [80,85]. In the context of ChatGPT utilization in education, resilience is rooted in the capacity of educational systems and stakeholders (students, educators, and institutions) to absorb, adapt to, and transform in response to the ongoing changes introduced by Gen-AI. As ChatGPT and similar AI tools continue to evolve and impact educational practices, the emphasis on resilience thinking becomes essential. This enables educational systems to not only withstand risk-related concerns but also to adapt and transform these challenges into opportunities for innovation and growth, like developing plagiarism detection tools, encouraging higher-level reasoning skills, etc. Drawing from the literature, resilience-related themes connected to addressing the ethical challenges posed by ChatGPT were identified [17] and synthesized. Accordingly, the frequency of these themes was computed to unravel underlying patterns and significance within them.

In addition, this study further synthesized the gathered information, aiming to adopt a primary theme that would become the focal point for further investigation. For example, themes such as improved human–AI interaction and the balance between AI-assisted innovation and human expertise are recurrent in the resilience discourse. Recognizing their shared logical context, these were amalgamated under the overarching theme of “co-creation between humans and AI”, as presented in Table 3. Extending this process to encompass various other keywords and conceptual clusters, this study curated a selection of seven resilience-related themes. This strategic curation was guided by both the frequency of occurrence and thematic relevance. The chosen themes stand as pivotal factors within the resilience category, laying the foundation for their exploration in the subsequent AHP analysis. Other resilience-related themes that were not analyzed in this study include addressing the digital divide and potential mitigation strategies [27], auditing the trail of queries [86], sustainability, raising awareness, scientific discourse [23], and experimental learning framework [33].

Table 3.

Resilience themes of ChatGPT based on frequency count.

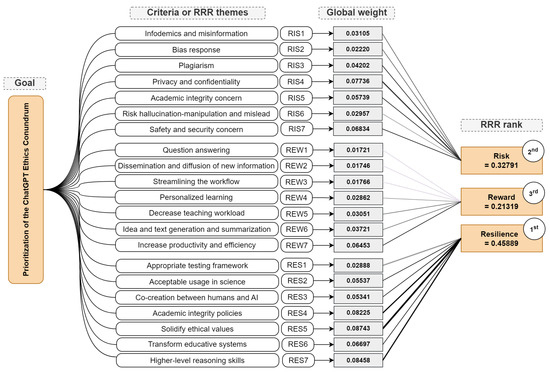

3.4. Summary and Conceptual Framework Based on AHP

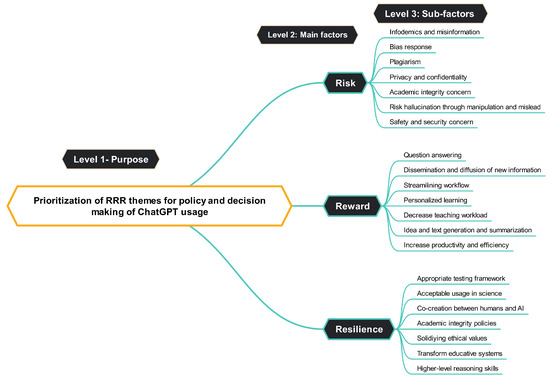

Because this study employs the AHP, chosen for its popularity [87,88,89,90,91,92,93], to prioritize themes crucial to policymaking and decision-making in ChatGPT utilization, a conceptual framework was devised. This framework unfolds across three hierarchical levels: the overarching objective, the criteria (primary themes or factors), and the sub-criteria (sub-themes or sub-factors). The primary focus of this study is to ascertain priorities among the elements shaping the policies and decisions surrounding ChatGPT application. Positioned at the peak of this hierarchical structure is the central research objective, representing the goal of the study. At level 2, the framework delineates the main factors or overarching categories, namely, risk, reward, and resilience, drawn from the well-established integrated policymaking model known as RRR [17,80]. These categories stand as pivotal themes influencing decisions regarding ChatGPT usage in educational settings. Further, level 3 of the hierarchy details the sub-factors, which have the potential to influence decisions concerning ChatGPT’s utilization in the educational sector. To treat the RRR categories evenly, seven (7) elements were considered from each category. The detailed structure of this conceptual framework is presented in Figure 3, which illustrates the hierarchical relationships. The research methodology is described in the next section, which explains the systematic steps undertaken to assess the relative importance of ChatGPT’s ethical themes within the educational context.

Figure 3.

Conceptual framework based on hierarchical structure of the AHP.

4. Research Design and Methodology

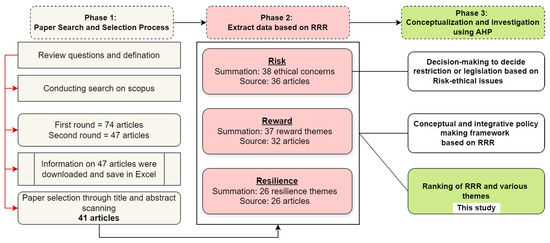

This study is divided into three parts, each making a distinct and significant contribution to the ethics conundrum of ChatGPT and similar large language models (LLMs), as depicted in Figure 4. Firstly, a systematic review was used to identify articles concerning the ethical issues of ChatGPT. The outcomes of the review helped identify the issues surrounding ChatGPT and informed a proposed policymaking framework based on the RRR integrated framework for policy making [17,80]. The proposed framework discussed the complex nature of creating and developing policy to guide the implementation and utilization of LLMs. One key limitation of the framework, which is conceptual and theoretical in nature, is the lack of an objective assessment of the elements proposed in RRR. Specifically, there is a lack of investigation and examples of (i) how the elements would guide initial policy creation and (ii) how to weigh the components of RRR objectively. The former was investigated and reported in Bukar et al. [78], and the latter is covered in this study. In particular, this study utilized the AHP, following the guidelines provided by Gupta et al. [87], to compare and prioritize the various elements of RRR.

Figure 4.

Research design and process [17,78].

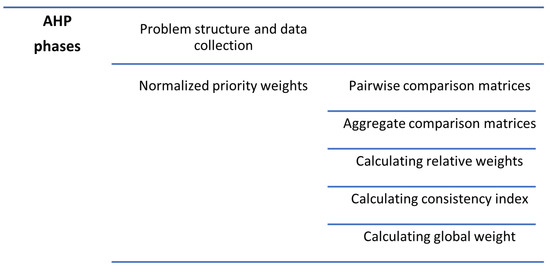

The AHP is a widely applied technique in the literature on multiple-criteria decision-making (MCDM), especially suited for scenarios involving a multitude of criteria or factors, addressing complex challenges within MCDM [87,93,94]. The core methodology of AHP involves breaking down an MCDM problem into a hierarchical structure with a minimum of three levels comprising objectives, criteria, and decision alternatives [94]. In this hierarchical model, AHP systematically constructs an evaluation framework, gauges the relative priorities of the criteria, conducts comparisons among available decision alternatives for each criterion, and ultimately establishes a ranking of these alternatives [95]. The ranking of factors using the AHP involves expert pairwise comparisons, where judgments express the degree to which one element dominates another concerning a specific attribute, as outlined by Saaty [94]. The AHP unfolds through various phases, as illustrated in Figure 5, to analyze the RRR themes regarding ChatGPT utilization in education.

Figure 5.

Phases of AHP approach.

Firstly, the AHP approach begins with the construction of a cohesive hierarchy for the research problem. The structure of the AHP problem specific to this study is illustrated in Figure 3. It is crucial to emphasize that the AHP model employed in this study deliberately excludes alternative options, as the primary objective is to prioritize the identified RRR themes.

Secondly, an AHP questionnaire was carefully crafted utilizing the pairwise comparison method proposed by Saaty [94]. This comprehensive questionnaire encompassed all the primary themes and sub-themes relevant to the study. The questionnaire is based on the comparison concept, where each pair of factors is compared on a scale of 1–9. It is organized into several sections. The introduction includes the invitation letter and privacy notice/PDPA clause. Detailed instructions on how to complete the questionnaire follow. The main body comprises comparison tables for the main classifications (risk, reward, and resilience), as well as for risk-related themes, reward-related themes, and resilience-related themes. The final section collects the respondents’ demographic information.
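To illustrate the size of such a questionnaire, the following minimal sketch counts the pairwise judgments implied by the hierarchy described in this paper (three main categories with seven sub-themes each). The sub-theme labels are hypothetical placeholders rather than the study's actual theme names, and the count assumes that every unordered pair within a comparison table is judged exactly once.

```python
from itertools import combinations

# Hierarchy as described in the text: three categories, seven sub-themes each.
# The sub-theme labels below are placeholders (assumption), not the study's labels.
hierarchy = {
    category: [f"{category}_theme_{k}" for k in range(1, 8)]
    for category in ("risk", "reward", "resilience")
}


def pairwise_items(factors):
    """Return every unordered pair of factors (one questionnaire row per pair)."""
    return list(combinations(factors, 2))


# One comparison table for the main categories, one per category for sub-themes.
main_pairs = pairwise_items(list(hierarchy))
sub_pairs = {c: pairwise_items(themes) for c, themes in hierarchy.items()}

# n factors require n * (n - 1) / 2 judgments.
total_judgments = len(main_pairs) + sum(len(p) for p in sub_pairs.values())
print(len(main_pairs), {c: len(p) for c, p in sub_pairs.items()}, total_judgments)
```

Under these assumptions, each respondent would provide 3 judgments for the main categories and 21 for each group of seven sub-themes, or 66 judgments in total.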

Accordingly, the questionnaire was distributed among academics within the university environment and a total of 12 responses were garnered. This sample is considered adequate for AHP investigation for several reasons: AHP yields reliable results with small samples [96,97] and typically uses sample sizes ranging from 2 to 100 experts [98]. There is no strict minimum sample size [99], and involving more experts may introduce repetitive information, diminishing the value of additional responses [100]. Smaller samples make AHP efficient, especially when analyzing equally important alternatives [96,97]. As AHP is not a statistical method, it does not require a statistically significant sample size [101,102], and the emphasis is on decisions made rather than the respondents, negating the need for a representative sample [102]. Finally, AHP is often applied to knowledgeable individuals, further justifying smaller sample sizes [103]. Accordingly, the participating academics provided valuable data through pair-wise comparisons, grounded in the themes of risk, reward, and resilience. The relative scores assigned to these pairwise comparisons adhered to the well-established nine-point scale introduced by Saaty [94], as delineated in Table 4.

Table 4.

The proposed AHP scale based on Saaty.

Thirdly, the study transformed the raw pairwise comparison data into priority weights. This step converts the judgments provided by the respondents into a quantifiable format, laying the groundwork for subsequent analyses. The methodology employed to ensure that the weights accurately reflect the relative importance of the RRR components is elaborated in the following sections. These steps systematically outline the determination of normalized weights and the ranking of the RRR elements, aligning with the methodological approach of Gupta et al. [87].

4.1. Building Pairwise Comparison Matrices

The process of pairwise comparison plays a crucial role in assessing the relative significance of factors, a methodology introduced by Saaty [94,104] and further expounded upon by Forman and Peniwati [105]. In this comparison phase, judgments are expressed as integers representing the preference for one factor over another. If the judgment signifies that the $i$th factor is more important than the $j$th factor, the integer is placed in the $i$th row and $j$th column of the comparison matrix, and its reciprocal is recorded in the $j$th row and $i$th column. Where the factors being compared are deemed equally important, a value of one is assigned to both locations in the matrix. Consequently, each comparison matrix, denoted as $A$, takes the form of a square matrix of order $n$, where $n$ is the number of factors compared, and it includes reciprocated elements, as depicted in Equation (1). This systematic process sets the stage for the subsequent computation of normalized priority weights.

$$A = [a_{ij}]_{n \times n} = \begin{bmatrix} 1 & a_{12} & \cdots & a_{1n} \\ 1/a_{12} & 1 & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ 1/a_{1n} & 1/a_{2n} & \cdots & 1 \end{bmatrix} \tag{1}$$
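The reciprocal structure described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `build_comparison_matrix` and the three example judgments are hypothetical and are not study data.

```python
from fractions import Fraction


def build_comparison_matrix(n, judgments):
    """Build an n x n reciprocal pairwise comparison matrix.

    `judgments` maps an index pair (i, j), i != j, to the judgment a_ij:
    an integer from 1 to 9 when factor i dominates factor j, or the
    reciprocal of such an integer when factor j dominates factor i.
    """
    # Start from all ones: the diagonal stays 1, and unjudged pairs
    # default to "equally important".
    A = [[Fraction(1) for _ in range(n)] for _ in range(n)]
    for (i, j), value in judgments.items():
        value = Fraction(value)
        A[i][j] = value          # judgment in row i, column j
        A[j][i] = 1 / value      # reciprocal in row j, column i
    return A


# Hypothetical judgments for three factors (illustrative only).
example_judgments = {(0, 1): 3, (0, 2): 5, (1, 2): 2}
A = build_comparison_matrix(3, example_judgments)
```

Exact fractions are used here only so that the reciprocal property can be checked without rounding; floating-point values would work equally well for the later steps.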

4.2. Construction of Aggregate Comparison Matrices

To synthesize the judgments for each element within the comparison matrix, we employ the geometric mean method, which aggregates the responses obtained from all academics engaged in the pairwise comparisons of the various themes and sub-themes of RRR. This approach facilitates the attainment of a consensus assessment [104,105]. In the resulting aggregated comparison matrix, denoted as $A$, each element $a_{ij}$ is the geometric mean of the judgments provided by $N$ decision-makers. The calculation is outlined in Equation (2), where $N$ represents the number of academics participating in the assessments, and $a_{ij}^{(k)}$ denotes the individual judgment furnished by the $k$th participant for the corresponding factor or sub-factor pair under comparison. This aggregation process yields a collective perspective on the relative importance of these elements within the realm of ChatGPT utilization decisions.

$$a_{ij} = \left( \prod_{k=1}^{N} a_{ij}^{(k)} \right)^{1/N} \tag{2}$$
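As a rough sketch of this aggregation step, the element-wise geometric mean can be computed as follows. The function name `aggregate_geometric_mean` and the two respondent matrices are assumptions made for illustration; they do not come from the study.

```python
import math


def aggregate_geometric_mean(matrices):
    """Element-wise geometric mean of same-sized comparison matrices.

    `matrices` holds one n x n matrix (list of lists) per respondent.
    """
    count = len(matrices)        # number of respondents
    size = len(matrices[0])      # matrix order n
    return [
        [
            math.prod(m[i][j] for m in matrices) ** (1.0 / count)
            for j in range(size)
        ]
        for i in range(size)
    ]


# Two hypothetical respondents comparing two factors (illustrative only).
respondent_1 = [[1, 4], [1 / 4, 1]]
respondent_2 = [[1, 9], [1 / 9, 1]]
aggregated = aggregate_geometric_mean([respondent_1, respondent_2])
```

A commonly cited reason for preferring the geometric mean over the arithmetic mean here is that it keeps the aggregated matrix reciprocal: the aggregated entry for (j, i) remains the inverse of the entry for (i, j).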

4.3. Computation of RRR Theme Relative Weights

To ascertain the precedence of each category (primary factor) and sub-factor using the AHP methodology, a normalized matrix denoted as N is formulated. N is derived from the corresponding comparison matrix A by dividing each element by the sum of its column, as outlined in Equation (3). Here, N represents the normalized matrix specific to the category or sub-factor, with $n_{ij}$ denoting the element at the $i$th row and $j$th column of the normalized matrix N, $a_{ij}$ representing the corresponding element in the comparison matrix A, and $n$ representing the total count of factors or sub-factors under consideration. This normalization standardizes the data within the matrix so that every column sums to one, rendering them amenable to the subsequent calculation of priority weights.

$$n_{ij} = \frac{a_{ij}}{\sum_{k=1}^{n} a_{kj}} \tag{3}$$

Moreover, to derive the priority weights for each factor, the study computed the average of the elements within each row of the normalized matrix N. This computation yields a priority vector denoted as $W$, a column matrix of order $n \times 1$, as depicted in Equation (4). In this context, each element $w_i$ of $W$ corresponds to the priority weight of a specific factor, and $n$ denotes the total count of factors under consideration. The priority vector W serves as a concise quantitative depiction of the relative significance of each factor within the study. This allows the study to rank and prioritize these themes based on the collective evaluations provided by the academics engaged in the pairwise comparisons.

$$W = \begin{bmatrix} w_1 \\ w_2 \\ \vdots \\ w_n \end{bmatrix}, \qquad w_i = \frac{1}{n} \sum_{j=1}^{n} n_{ij} \tag{4}$$
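The normalization and row-averaging steps can be illustrated with a short Python sketch. The function names and the example matrix are hypothetical. The matrix is deliberately constructed to be perfectly consistent (its entries follow weights in the ratio 4:2:1), so the recovered weights can be checked by hand.

```python
def normalize_columns(A):
    """Divide every element by its column sum, so each column sums to 1."""
    n = len(A)
    column_sums = [sum(A[i][j] for i in range(n)) for j in range(n)]
    return [[A[i][j] / column_sums[j] for j in range(n)] for i in range(n)]


def priority_vector(A):
    """Average each row of the normalized matrix to obtain the weights."""
    normalized = normalize_columns(A)
    n = len(A)
    return [sum(row) / n for row in normalized]


# Hypothetical, perfectly consistent matrix built from weights 4 : 2 : 1.
A = [
    [1, 2, 4],
    [1 / 2, 1, 2],
    [1 / 4, 1 / 2, 1],
]
W = priority_vector(A)  # approximately [4/7, 2/7, 1/7]
```

For a perfectly consistent matrix, every column of the normalized matrix is identical, so the row averages reproduce the underlying weights exactly. With real judgments the columns differ, and the row average acts as an approximation of the principal eigenvector.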

4.4. Validation of the Comparison Matrix through Consistency Test

Because human judgments vary, the AHP approach requires evaluating the consistency of the comparison matrices to validate the derived priority vectors. The consistency ratio (CR) acts as the metric for assessing pairwise comparisons. If the CR is equal to or less than 0.10 ($CR \le 0.10$), it signifies an acceptable level of consistency within the comparison matrix A. In such cases, the ranking results can be considered reliable and accepted, aligning with the guidelines from Saaty [94]. However, if the CR surpasses the threshold ($CR > 0.10$), the ranking results are unacceptable due to excessive inconsistency. In such situations, it is recommended that the decision-maker revisits the evaluation process, as advised by prior research [87,93]. Ensuring consistency in the comparison matrices is crucial for obtaining robust and dependable priority vectors. Thus, matrix A is deemed consistent if it fulfills the condition outlined in Equation (5), where A represents the comparison matrix, W is the priority vector, and $\lambda_{\max}$ is the principal eigenvalue of A.

$$AW = \lambda_{\max} W \tag{5}$$

Additionally, the mathematical expression in Equation (5) is an eigenvalue problem. In this scenario, the principal eigenvalue, referred to as $\lambda_{\max}$, must be equal to or greater than $n$, as specified by Saaty [94]. A crucial criterion for consistency is that the closer $\lambda_{\max}$ is to $n$, the more consistent the matrix A, with perfect consistency reached when $\lambda_{\max} = n$. Therefore, to evaluate the consistency of a comparison matrix A, the typical steps involve the following:

- Step 1: Calculate the principal eigenvalue $\lambda_{\max}$ by using Equation (6):

$$\lambda_{\max} = \frac{1}{n} \sum_{i=1}^{n} \frac{(AW)_i}{w_i} \tag{6}$$

- Step 2: Calculate the CI, where CI is the consistency index given by Equation (7):

$$CI = \frac{\lambda_{\max} - n}{n - 1} \tag{7}$$

- Step 3: Determine the random index (RI), where RI represents the predefined value determined by the matrix order (n). It is obtained from a reference table that gives distinct values of RI for different numbers of criteria (n), as outlined in Table 5.

Table 5. Saaty (1980) predefined value of the random index (RI).

- Step 4: Calculate the CR by using Equation (8):

$$CR = \frac{CI}{RI} \tag{8}$$

- Step 5: Verify the acceptance of the CR. If the CR is equal to or less than 0.10, it signifies that the level of inconsistency within the comparison matrix A is acceptable, and the reliability of the ranking results can be affirmed. Among the 12 responses, only 10 passed the consistency test; therefore, only those are reported in this study.
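The five steps above can be sketched in Python as follows. This is an illustrative outline under stated assumptions rather than the study's actual procedure (the study reports using MS Excel). The `RANDOM_INDEX` values are the commonly cited Saaty figures and should be checked against the paper's Table 5. The function names and both example matrices are hypothetical.

```python
# Commonly cited Saaty random index (RI) values by matrix order n.
# Assumption: the paper's Table 5 is not reproduced here, so verify
# these figures against it before relying on them.
RANDOM_INDEX = {1: 0.00, 2: 0.00, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def priority_vector(A):
    """Row averages of the column-normalized comparison matrix."""
    n = len(A)
    col_sums = [sum(A[i][j] for i in range(n)) for j in range(n)]
    return [sum(A[i][j] / col_sums[j] for j in range(n)) / n
            for i in range(n)]


def consistency(A):
    """Return (lambda_max, CI, CR) for a reciprocal comparison matrix."""
    n = len(A)
    if n < 3:
        # A 1x1 or 2x2 reciprocal matrix is always consistent.
        return float(n), 0.0, 0.0
    W = priority_vector(A)
    AW = [sum(A[i][j] * W[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(AW[i] / W[i] for i in range(n)) / n  # Step 1
    ci = (lambda_max - n) / (n - 1)                       # Step 2
    ri = RANDOM_INDEX[n]                                  # Step 3
    cr = ci / ri                                          # Step 4
    return lambda_max, ci, cr


def is_acceptable(A, threshold=0.10):
    """Step 5: accept the matrix when CR is at or below the threshold."""
    return consistency(A)[2] <= threshold


# Hypothetical matrices (illustrative only).
consistent = [[1, 2, 4], [1 / 2, 1, 2], [1 / 4, 1 / 2, 1]]
cyclic = [[1, 9, 1 / 9], [1 / 9, 1, 9], [9, 1 / 9, 1]]  # A > B > C > A
```

The `cyclic` matrix encodes a circular preference (the first factor strongly beats the second, the second strongly beats the third, and the third strongly beats the first), which is the kind of response the consistency test is meant to screen out.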

4.5. Computation of Global Weights of the RRR Themes

Equation (4) is utilized to compute the local weights for both the primary category and sub-themes associated with ChatGPT utilization decisions. These local weights offer insights into the relative importance of themes and sub-themes within their specific categories. For the primary themes (categories), their global weights coincide with their local weights. In essence, the local weights directly signify the global weights for the main categories. However, concerning sub-themes, the determination of global weights follows a distinct process. Equation (9) is employed to calculate the global weights for sub-themes. This implies that the significance of sub-themes within their parent category is evaluated in connection with the overall priorities of all themes and sub-themes considered in the study. This methodology facilitates a comprehensive assessment of the importance of sub-themes in the broader context of decision-making regarding ChatGPT utilization.

$$GW_{s} = LW_{s} \times GW_{c} \tag{9}$$

Accordingly, $GW_{s}$ stands for the global weight of the sub-theme, $LW_{s}$ represents the local weight of the sub-theme, and $GW_{c}$ signifies the global weight of the corresponding main factor (category).
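A minimal sketch of this global-weight calculation follows. All names and numbers are hypothetical placeholders chosen so that the arithmetic is easy to follow; they are not the study's weights.

```python
def global_weights(category_weights, local_weights):
    """Multiply each sub-theme's local weight by its category's weight.

    `category_weights` maps category -> weight (summing to 1).
    `local_weights` maps category -> {sub-theme -> local weight}, where
    the local weights within each category sum to 1.
    """
    return {
        theme: local * category_weights[category]
        for category, themes in local_weights.items()
        for theme, local in themes.items()
    }


def rank(weights):
    """Return (theme, weight) pairs sorted from highest to lowest weight."""
    return sorted(weights.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weights (illustrative only, not the study's values).
categories = {"risk": 0.3, "reward": 0.2, "resilience": 0.5}
locals_by_category = {
    "risk": {"RIS_a": 0.6, "RIS_b": 0.4},
    "reward": {"REW_a": 0.7, "REW_b": 0.3},
    "resilience": {"RES_a": 0.5, "RES_b": 0.5},
}
gw = global_weights(categories, locals_by_category)
ranking = rank(gw)
```

Because each set of local weights sums to one and the category weights sum to one, the global weights across all sub-themes also sum to one, which makes sub-themes from different categories directly comparable in a single ranking.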

5. Results and Discussion

The AHP results and discussion were prepared following the presentation and guidelines in Gupta et al. [87]. The data collected for this study were stored in MS Excel. Expert responses gathered through the pairwise comparisons for the various themes and sub-themes were aggregated using the geometric mean approach outlined in Equation (2). The findings comprise comparison matrices, weights, and consistency ratios for all categories within the hierarchical model, derived following the methodology described in the preceding section. Not all of the 12 responses produced CR values at or below the predefined threshold of 0.10. The 10 responses that did so show an acceptable level of consistency in their comparison matrices and are the ones reported in this study. This consistency supports the reliability of the calculated weights and strengthens the trustworthiness of the prioritization results for the ChatGPT RRR element ranking. The following sections discuss the results obtained in this study.

5.1. RRR Normalized Matrix and Weight

The results presented in Table 6 reveal a discernible pattern. Among the three primary categories, “Resilience” carries the largest weight (see Table 6), which marks it as the most influential factor in the ethical conundrum surrounding ChatGPT utilization. “Risk” occupies an intermediate position, denoting its significant but balanced importance. “Reward” ranks third among the primary categories, while remaining a critical consideration. These weights not only provide a hierarchical perspective but also offer valuable insights into the relative priorities of these categories within the framework of RRR for ChatGPT utilization in education. Accordingly, the calculated values for $\lambda_{\max}$, CI, and CR indicated that the consistency of the RRR matrices is acceptable.

Table 6.

Normalized matrix and weight of primary RRR themes.

5.2. Normalized Matrix and Weight of Risk Themes

The analysis of the “Risk” category examines its sub-themes, unraveling the ethical complexities associated with ChatGPT. Table 7 presents a detailed breakdown of the weight analysis for the risk-related themes. The result reveals that privacy and confidentiality concerns take precedence as the most pivotal dimension among the risk-related concerns. Following closely are safety and security concerns, emphasizing the importance of data security and confidentiality. Next, in descending order of weight (see Table 7), are academic integrity concern, plagiarism, infodemics and misinformation, risk hallucination through manipulation and misleading, and biased responses. The respondents’ heightened concerns regarding privacy and confidentiality underscore the critical nature of safeguarding data and confidential information, alongside the pressing need for safety and security measures.

Table 7.

Normalized matrix and weight of risk-related themes: Infodemics and misinformation (RIS1), biased responses (RIS2), plagiarism (RIS3), privacy and confidentiality (RIS4), academic integrity concern (RIS5), risk hallucination through manipulation and misleading (RIS6), and safety and security concerns (RIS7).

Additionally, the calculated values of $\lambda_{\max}$, CI, and CR affirm the acceptable consistency of the comparison matrices employed in the analysis, supporting the reliability of the ranking results. These rankings provide a comprehensive understanding of the themes shaping risk-related concerns in ChatGPT utilization. Stakeholders and policymakers can leverage this information to craft tailored policy strategies and guidelines, considering the relative importance of each concern. This strategic approach should aim to foster the responsible and ethical utilization of ChatGPT and, by extension, generative AI.

5.3. Normalized Matrix and Weight of Reward Themes

The analysis within the “Reward” category examines the themes that shape the benefits arising from the integration of ChatGPT, as presented in Table 8. Each dimension, as judged by the respondents, contributes uniquely to the overall landscape of rewards in ChatGPT utilization. Notably, increased productivity and efficiency emerge as the preeminent reward, commanding the highest weight of 0.303. This underscores the respondents’ acknowledgment of the transformative impact that ChatGPT can have on enhancing productivity and streamlining various tasks. Furthermore, idea and text generation and summarization follows closely, emphasizing the strength of ChatGPT in creative ideation and content summarization. The remaining reward themes, in descending order of weight (see Table 8), are decreased teaching workload, personalized learning, streamlining workflow, dissemination and diffusion of new information, and question answering.

Table 8.

Normalized matrix and weight of reward-related themes: Question answering (REW1), dissemination and diffusion of new information (REW2), streamlining the workflow (REW3), personalized learning (REW4), decrease teaching workload (REW5), idea and text generation and summarization (REW6), and increased productivity and efficiency (REW7).

Additionally, the calculated values of $\lambda_{\max}$, CI, and CR affirm the acceptable consistency of the comparison matrices used in the analysis, reinforcing the reliability of the ranking results. The insights obtained from this investigation into the varied themes of rewards associated with ChatGPT utilization offer stakeholders a clearer understanding of the potential benefits. From empowering educators to streamlining organizational workflows, the findings underscore the transformative potential of ChatGPT across diverse domains. Stakeholders can leverage this understanding to formulate targeted policies and strategies that maximize the positive impact of ChatGPT while addressing specific challenges and concerns associated with its application.

5.4. Normalized Matrix and Weight of Resilience Themes

The exploration within the “Resilience” category identifies the themes that underpin the resilience of ChatGPT adoption, as outlined in Table 9. These themes, as judged by the respondents, collectively shape the landscape of resilience in the utilization of ChatGPT. At the forefront is the dimension of solidifying ethical values, underscoring the importance of ethical considerations in fortifying the resilience of ChatGPT adoption. This dimension reflects the commitment to upholding ethical standards and ensuring responsible usage. In addition, higher-level reasoning skills follow closely, emphasizing the role of ChatGPT in fostering advanced cognitive abilities and critical thinking. The remaining resilience themes, in descending order of weight (see Table 9), are academic integrity policies, transforming educative systems, acceptable usage in science, co-creation between humans and AI, and appropriate testing framework.

Table 9.

Normalized matrix and weight of resilience-related themes: Appropriate testing framework (RES1), acceptable usage in science (RES2), co-creation between humans and AI (RES3), academic integrity policies (RES4), solidifying ethical values (RES5), transform educative systems (RES6), and higher-level reasoning skills (RES7).

Furthermore, the computed values of $\lambda_{\max}$, CI, and CR provide evidence of the consistency within the comparison matrices employed in the analysis. These metrics support both the robustness of the analytical process and the reliability of the obtained ranking results. The weights obtained from this analysis offer insights into the multifaceted nature of the resilience dimension. Hence, educational stakeholders can leverage these weightings to tailor policies and strategies that bolster the resilience of ChatGPT adoption, fostering an environment where ethical considerations, cognitive development, academic integrity, transformative potential, and collaborative frameworks converge for responsible and impactful utilization.

5.5. Global Weights

Table 10 presents the global weights and rankings of the RRR dimensions concerning the ethical conundrum of ChatGPT utilization in education. Each element is assigned a global weight, reflecting its importance within the study’s context. The rankings of the RRR themes are discussed based on the study’s findings. Notably, solidifying ethical values (global weight = 0.08743) from the resilience category claims the top position in the ranking order, emphasizing the significant influence that ethical values hold in decision-making for ChatGPT utilization. The result indicates the importance of informed decisions by educational stakeholders in promoting ethical values within the educational system. Following closely is higher-level reasoning skills (global weight = 0.08458) from the resilience category, emphasizing the importance of improving human reasoning capabilities. This ranking guides stakeholders in prioritizing strategies to enhance higher-order cognitive and reasoning skills for effective ChatGPT utilization in education. Similarly, academic integrity policies (global weight = 0.08225) from the resilience category secure the third position, highlighting the importance of establishing policies for academic integrity.

Table 10.

Ranking of RRR themes for ChatGPT ethics conundrums.

Furthermore, in the risk category, privacy and confidentiality (global weight = 0.07736) claim the fourth rank, stressing the need to address privacy and confidentiality issues impacting users’ data. Safety and security concerns (global weight = 0.06834) from the risk category secure the fifth position, emphasizing the significance of addressing security-related issues in the ethical conundrum of ChatGPT utilization. Transformative educational systems (global weight = 0.06697) from the resilience category take the sixth spot, indicating the fundamental role of transformative educational systems in ChatGPT utilization. Increasing productivity and efficiency (global weight = 0.06453) is the only factor from the reward category in the top ten, ranking seventh, suggesting that ChatGPT enhances work efficiency. Academic integrity concern (global weight = 0.05739) from the risk category occupies the eighth position, highlighting concerns related to integrity emerging with the advent of ChatGPT. Acceptable usage in science (global weight = 0.05537) from the resilience category ranks ninth, indicating the possibility of implementing acceptable usage policies for ChatGPT. Finally, the co-creation between humans and AI (global weight = 0.05341) from the resilience category completes the top ten themes, showcasing respondents’ support for the co-existence of AI tools and humans. It is noteworthy that the resilience category dominates the top ten RRR elements, accounting for six themes, while the risk category contributes three themes, and the reward category features only one.
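The category tally reported above can be reproduced from the ten global weights quoted in the text. The sketch below transcribes those values and counts themes per category. It also back-computes the reward category weight implied by Equation (9), using the local weight of 0.303 reported for increased productivity and efficiency. That implied figure is an inference from rounded values, not a number reported in the text, so it should be treated as approximate.

```python
from collections import Counter

# Top-ten themes with category and global weight, transcribed from the text.
top_ten = [
    ("Solidifying ethical values", "resilience", 0.08743),
    ("Higher-level reasoning skills", "resilience", 0.08458),
    ("Academic integrity policies", "resilience", 0.08225),
    ("Privacy and confidentiality", "risk", 0.07736),
    ("Safety and security concerns", "risk", 0.06834),
    ("Transforming educational systems", "resilience", 0.06697),
    ("Increased productivity and efficiency", "reward", 0.06453),
    ("Academic integrity concern", "risk", 0.05739),
    ("Acceptable usage in science", "resilience", 0.05537),
    ("Co-creation between humans and AI", "resilience", 0.05341),
]

# Count how many top-ten themes fall into each category.
category_counts = Counter(category for _, category, _ in top_ten)

# Confirm the list is ordered from highest to lowest global weight.
weights = [weight for _, _, weight in top_ten]
is_descending = weights == sorted(weights, reverse=True)

# Equation (9) rearranged: category weight = global weight / local weight.
# Inferred from rounded inputs, so only approximate.
implied_reward_weight = 0.06453 / 0.303
```

Running this gives six resilience themes, three risk themes, and one reward theme, matching the distribution described in the paragraph above.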

5.6. Discussion

The transformative integration of Gen-AI into educational settings presents a myriad of ethical considerations that extend beyond conventional risk assessments. Unlike technology adoption theories such as UTAUT [34,35,36,37,38,39,40,41,42,43,44] and TAM [45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60], this study employs an RRR framework (risk, reward, and resilience) to comprehensively explore the ethical landscape of ChatGPT utilization in education. Additionally, the study uses the AHP methodology, in contrast to PLS-SEM and CB-SEM [40,47,48,49,54,64] or qualitative text analysis [66,106,107,108,109,110,111], to prioritize ethical themes based on their global weights. The prioritization framework, as illustrated in Figure 6, highlights the paramount importance of resilience elements such as solidifying ethical values, enhancing higher-level reasoning skills, and transforming educational systems. Privacy and confidentiality emerge as critical risk concerns, along with safety and security issues. The research also highlights reward elements including increased productivity, personalized learning, and streamlined workflows.

Figure 6.

Framework of RRR ranking for decision-making.

These findings align with the work of Gammoh [112], who conducted a thematic analysis and classified risks associated with ChatGPT integration, such as plagiarism, compromised originality, overdependency on technology, diminished critical thinking skills, and reduced overall assignment quality. Similarly, Murtiningsih et al. [113] emphasized that excessive dependence on ChatGPT risks diminishing the quality of human resources in education. In addition, Gammoh [112] further suggested risk mitigation strategies, including using plagiarism detection tools, implementing measures to improve student assignments, raising awareness about the benefits and risks, and establishing clear guidelines. These recommendations reinforce the findings of this study, particularly supporting resilience themes that balance risk control with the efficient utilization of benefits (rewards), emphasizing solidifying ethical values. Moreover, Murtiningsih et al. [113] advocated for the development of strategies by educators to harness technological advancements effectively while fostering critical thinking skills in students, which supports the findings of this study that identify higher-level reasoning skills as key resilience elements.

Additionally, the emphasis on instructional guidance for engagement with Gen-AI tools [114] suggests that the literature favors the use of ChatGPT as a means to enhance resilience, that is, the ability to balance risks and rewards. This point is further supported by Moorhouse [115], who reported that experienced teachers generally recognize the potential of Gen-AI to support their professional work, and by Espartinez [74], who identified ethical tech guardians and balanced pedagogy integrators as key factors. Furthermore, Ogugua et al. [116] identified several recommendations, such as the integration of Gen-AI into the curriculum, defining specific goals for using Gen-AI tools in classes, establishing clear guidelines and boundaries, and emphasizing the importance of critical thinking and independent problem-solving skills. These studies collectively support several resilience themes highlighted in this study [74,114,116], including co-creation between humans and AI, academic integrity policies, solidifying ethical values, acceptable usage, transforming educational systems, and fostering higher-level reasoning skills.

The rankings provide valuable insights into the themes that should be prioritized when evaluating the ethical conundrum associated with ChatGPT utilization in education. The dominance of resilience elements underscores their critical role in adapting to and absorbing concerns related to ChatGPT utilization, influencing people’s ability to use ChatGPT ethically and responsibly. However, the inclusion of elements from the risk and reward categories suggests a balanced approach that considers not only how individuals adapt to ChatGPT but also how risks are mitigated and rewards optimized. The findings highlight the complexity of ChatGPT utilization in an academic environment, emphasizing the need for a holistic approach that considers various facets of how individuals interact with the technology. This understanding can inform more effective and targeted efforts aimed at building ethical and responsible usage of ChatGPT and similar generative AI tools.

6. Contribution and Implications

In this section, the distinctive and substantial contributions of the study concerning the ethical challenges posed by ChatGPT in educational settings are discussed. Accordingly, this study uniquely explores the risk, reward, and resilience within the landscape of ChatGPT implementation. By employing the AHP, our study systematically evaluates and prioritizes the significance of sub-themes under RRR, providing insights into their pivotal roles in shaping decisions surrounding ChatGPT utilization. Consequently, the following sections expound on the novel contributions and implications that emerge from this study while also acknowledging its limitations and suggesting avenues for future research.

6.1. Theoretical Contributions

This study contributes to and expands the theoretical landscape of ChatGPT utilization and other related Gen-AI tools. This approach is distinct from the theoretical models (UTAUT, TAM, etc.) employed by previous studies. Accordingly, this study addresses the need for evaluating and integrating the dynamics of risk, reward, and resilience within the ethical considerations of AI utilization. By exploring the interplay of these themes, this study enhances our understanding of how the RRR framework can be applied to decision-making in the context of ChatGPT utilization objectively, enriching the theoretical foundations laid by existing research [17,80]. While the initial RRR framework offers a structured model for navigating complex problems, this study goes a step further by providing a framework capable of systematically weighing different risks and rewards, considering the aspect of resilience through the AHP. This contribution stands as a valuable theoretical foundation for future research in this domain.

Furthermore, the framework’s examination of three interconnected categories (risk, reward, and resilience) allows for a systematic assessment of their relative priorities, fostering comparisons and resulting in the establishment of a category ranking. The study’s methodology involves expert pairwise comparisons, allowing judgments to quantify the dominance of one element over another concerning specific attributes. The existing RRR framework, by comparison, does not prescribe specific conclusions for policymakers but guides them in approaching complex problems, which facilitates the inclusion of diverse perspectives in the decision-making process. This study builds on that guidance by offering a structured tool that weighs themes or factors systematically. Notably, the prioritization of RRR elements establishes a hierarchy that informs future research, policymaking, and implementation strategies of Gen-AI utilization in education.

6.2. Practical Implications

This study provides valuable practical implications for a range of stakeholders engaged in formulating policies within the educational sector. These practical implications extend throughout the educational landscape, offering actionable insights for entities such as organizations, government agencies, policymakers, and researchers. Therefore, by acknowledging the central role of Gen-AI and navigating through the complexities of risk, reward, and resilience, educational stakeholders can make well-informed decisions, develop policies with care, and devise strategies that strengthen individuals’ ability to effectively manage the ethical challenges posed by ChatGPT. To illustrate, the findings furnish actionable insights for educational leaders and decision-makers by pinpointing elements with the most significant influence related to ChatGPT utilization. This guidance aids in strategically shaping policies to enhance the ethical and responsible use of AI tools. Additionally, grasping the relative importance of themes can assist educational institutions in crafting more effective policies for promoting responsible ChatGPT usage, involving targeted investments in training and promotion initiatives.

Moreover, the study’s prioritization of RRR elements, particularly the dominance of resilience themes in the top ten, provides valuable insights for practical decision-making in the utilization of ChatGPT in educational environments. This prioritization framework aids decision-makers by offering a hierarchy of themes that need attention. Policymakers, educators, and institutions can use these insights to develop targeted strategies, policies, and guidelines for responsible ChatGPT utilization in educational settings. Hence, employing AHP contributes a practical methodology for systematically evaluating and prioritizing these themes, enhancing the systematic and structured analysis of Gen-AI tool utilization. Overall, the practical implication is that stakeholders should adopt a balanced approach, considering resilience, risks, and rewards when integrating ChatGPT into educational practices to ensure ethical, responsible, and effective utilization.

6.3. Limitations and Future Work

The study is not exempt from limitations, and a transparent discussion of these constraints is imperative for guiding future researchers’ endeavors. These limitations and aspects of the methodology present opportunities for future exploration. Firstly, even though the elements of RRR were derived from the general literature, the findings of the study may lack context generality, as they are not analyzed from specific educational institutions and participant demographics were not taken into account. The focus on a particular institution, country, or demographic group might necessitate further investigation. Hence, expanding this research to encompass a broader range of cultural and regional contexts could unveil variations in the significance of ethical concerns associated with ChatGPT utilization in educational settings, thereby enhancing the generalizability of the findings. Secondly, the methodology employed in this study relies on expert judgments, which inherently possess subjectivity. Conducting a comparative analysis of the effectiveness of AHP against other decision-making methodologies in the realm of ChatGPT ethics could illuminate the strengths and weaknesses of different approaches. Furthermore, the study focused on seven themes from each category (risk, reward, and resilience), potentially overlooking other pertinent themes that could influence decision-making in ChatGPT utilization. Future research may explore additional themes that contribute to the ethical considerations surrounding ChatGPT implementation in education.

Additionally, this study has paved the way for a comprehensive exploration, underscoring the imperative need to thoroughly investigate the adoption of ChatGPT and similar technologies from three critical perspectives. It is recommended that forthcoming studies examine the primary themes, namely, risk, rewards, and resilience, as individual outcome variables or themes within the adoption of LLMs. This approach to analysis will offer a more granular understanding of the multifaceted landscape surrounding LLM adoption. By dissecting these themes independently, researchers can gain deeper insights into the intricate dynamics that shape the utilization of ChatGPT. This, in turn, will facilitate the formulation of well-informed policies, ensuring the responsible and beneficial deployment of ChatGPT for societal advancement. For instance, elucidating the specific risks associated with ChatGPT adoption, exploring the resilience mechanisms embedded in its deployment, or assessing the tangible rewards can collectively inform another research direction that addresses the complex interplay of themes or factors in the utilization of ChatGPT.

Moreover, considering the mixed views among academics regarding ChatGPT, this study’s findings may not fully capture how ethical concerns change over time. Developing dynamic models that account for the evolving nature of ethical considerations associated with ChatGPT utilization would provide a more realistic representation of the landscape. Therefore, while this study makes a substantial contribution to understanding the themes or factors influencing decision-making related to ChatGPT, it also underscores avenues for further research to address these limitations and propel the field forward. Finally, the elements extracted for risk, rewards, and resilience could be updated as new literature emerges. Thus, future work should repeat this analysis to capture additional factors from the current literature. This will help consolidate the findings of this study and add more insight into ChatGPT utilization in educational settings.

6.4. Next Steps

Building on the insights gleaned from this study, our future research will delve deeper into the themes influencing ChatGPT utilization within educational contexts. Our next step involves employing SEM to investigate the intricate relationships among the identified RRR elements. SEM offers a robust analytical framework that allows for the assessment of both observed and latent variables [117,118,119], providing a more comprehensive perspective on the complex dynamics involved. To gauge the effectiveness of our model, we plan to collect empirical data directly from users or students engaged with ChatGPT in educational settings. This user-centric approach will enable us to measure the real-world impact of RRR elements surrounding ChatGPT utilization. Developing a tailored instrument for data collection will be crucial, allowing us to probe into user experiences and perceptions related to ethical considerations and the risk, reward, and resilience themes.

The future research methodology should encompass various statistical techniques, with SEM taking center stage in validating the relationships between the structural elements. By employing SEM, we aim to ascertain the interplay between observed variables and latent constructs, providing a more comprehensive understanding of the factors influencing ChatGPT usage. Additionally, we will explore the application of regression models and artificial neural networks, similar to previous research [120,121,122,123], to further validate and complement the SEM results. These analytical tools will offer a multi-faceted approach to scrutinizing the complex relationships among factors within the educational environment concerning ChatGPT.

7. Conclusions

This study delves into the complex landscape of ChatGPT utilization within educational environments, focusing on the ethical conundrum associated with its adoption. Employing SLR and frequency analysis, we selected seven themes for each of the RRR components. Our study not only contributes a decision-making prioritization framework for educational stakeholders but also offers an understanding of the ethical considerations, providing valuable insights for policymakers and institutions navigating the integration of ChatGPT. Furthermore, our exploration into the risk, reward, and resilience themes, guided by AHP, yielded critical insights. The results show that solidifying ethical values, higher-level reasoning skills, and academic integrity policies emerged as the top-ranking themes, emphasizing their paramount importance in decision-making for ChatGPT utilization. These findings inform a holistic understanding of the themes influencing ethical considerations.

In addition, the study’s practical implications extend to diverse stakeholders involved in educational policymaking. By acknowledging the intertwined dynamics of risk, reward, and resilience, institutions can make informed decisions, formulate cautious policies, and develop strategies to enhance ethical decision-making surrounding ChatGPT. Our research provides actionable insights for educational leaders and policymakers, guiding the creation of policies that promote responsible ChatGPT utilization. While this study contributes substantially to the theoretical foundations and practical considerations in the ethical implementation of ChatGPT in education, it is not without limitations. Future research endeavors should address these limitations, fostering a continuous dialogue and exploration of the multifaceted landscape surrounding ChatGPT utilization. In this ever-evolving domain, the study offers a valuable framework for decision-makers, researchers, and institutions navigating the ethical complexities of integrating ChatGPT into educational settings.

Author Contributions

Conceptualization, U.A.B. and M.S.S.; investigation, U.A.B. and R.S.; resources, M.S.S. and S.F.A.R.; writing—original draft preparation, U.A.B. and R.S.; writing—review and editing, U.A.B. and S.Y.; methodology, U.A.B. and R.S.; visualization, U.A.B. and S.Y.; supervision, M.S.S. and S.F.A.R.; project administration, M.S.S. and S.Y.; funding acquisition, M.S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Telekom Malaysia Research and Development under Project No. MMUI/220169 at Multimedia University Malaysia.

Institutional Review Board Statement

This work involved human subjects in its research. Approval of all ethical and experimental procedures and protocols was granted by the MMU Research Ethics Committee under Application No. EA0202023, and performed in line with the Personal Data Protection Act 2010 (PDPA Act 2010) and other relevant research ethics.

Informed Consent Statement

Informed consent was obtained from all participants involved in the study.

Data Availability Statement

The data supporting this study are available on Mendeley Data and can be accessed at https://data.mendeley.com/preview/jfkbrx8m2x. The file covers all relevant information, computation, and findings that underlie the results presented in this study, allowing for further analysis and replication of the research.

Acknowledgments

This work was made possible through support rendered by Telekom Malaysia Research and Development at Multimedia University Malaysia. Gratitude is also extended to all individuals and entities whose contributions were instrumental in the successful execution of this research endeavor.

Conflicts of Interest

The authors declare no conflict of interest.

References

- George, B.; Wooden, O. Managing the strategic transformation of higher education through artificial intelligence. Adm. Sci. 2023, 13, 196.

- Kumar, D. How Emerging Technologies are Transforming Education and Research: Trends, Opportunities, and Challenges. In Infinite Horizons: Exploring the Unknown; CIRS Publication: Patna, India, 2023; p. 89.

- Tan, S. Harnessing Artificial Intelligence for innovation in education. In Learning Intelligence: Innovative and Digital Transformative Learning Strategies: Cultural and Social Engineering Perspectives; Springer Nature: Singapore, 2023; pp. 335–363.

- Natriello, G.; Chae, H. The Paradox of Learning in the Intelligence Age: Creating a New Learning Ecosystem to Meet the Challenge. In Bridging Human Intelligence and Artificial Intelligence; Springer International Publishing: Cham, Switzerland, 2022; pp. 287–300.

- Michel-Villarreal, R.; Vilalta-Perdomo, E.; Salinas-Navarro, D.; Thierry-Aguilera, R.; Gerardou, F. Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Educ. Sci. 2023, 13, 856.

- Farrokhnia, M.; Banihashem, S.; Noroozi, O.; Wals, A. A SWOT analysis of ChatGPT: Implications for educational practice and research. Innov. Educ. Teach. Int. 2023, 61, 460–474.

- Sallam, M. ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns. Healthcare 2023, 11, 887.

- Cotton, D.; Cotton, P.; Shipway, J. Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innov. Educ. Teach. Int. 2023, 61, 228–239.

- Su, J.; Yang, W. Unlocking the power of ChatGPT: A framework for applying generative AI in education. ECNU Rev. Educ. 2023, 6, 355–366.

- Liebrenz, M.; Schleifer, R.; Buadze, A.; Bhugra, D.; Smith, A. Generating scholarly content with ChatGPT: Ethical challenges for medical publishing. Lancet Digit. Health 2023, 5, 105–106.

- Tlili, A.; Shehata, B.; Adarkwah, M.; Bozkurt, A.; Hickey, D.; Huang, R.; Agyemang, B. What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 2023, 10, 15.

- Lee, H. The rise of ChatGPT: Exploring its potential in medical education. Anat. Sci. Educ. 2023, 17, 926–931.

- Pavlik, J. Collaborating with ChatGPT: Considering the Implications of Generative Artificial. J. Mass Commun. Educ. 2023, 78, 84–93.

- Lund, B.; Wang, T.; Mannuru, N.; Nie, B.; Shimray, S.; Wang, Z. ChatGPT and a new academic reality: Artificial Intelligence-written research papers and the ethics of the large language models in scholarly publishing. J. Assoc. Inf. Sci. Technol. 2023, 74, 570–581.

- Dwivedi, Y.; Kshetri, N.; Hughes, L.; Slade, E.; Jeyaraj, A.; Kar, A.; Wright, R. “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642.

- Lim, W.; Gunasekara, A.; Pallant, J.; Pallant, J.; Pechenkina, E. Generative AI and the future of education: Ragnarök or reformation? A paradoxical perspective from management educators. Int. J. Manag. Educ. 2023, 21, 100790.

- Bukar, U.A.; Sayeed, M.S.; Razak, S.F.A.; Yogarayan, S.; Amodu, O.A. An integrative decision-making framework to guide policies on regulating ChatGPT usage. PeerJ Comput. Sci. 2024, 10, e1845.

- Bukar, U.A.; Jabar, M.A.; Sidi, F.; Nor, R.N.H.B.; Abdullah, S.; Othman, M. Crisis informatics in the context of social media crisis communication: Theoretical models, taxonomy, and open issues. IEEE Access 2020, 8, 185842–185869.

- Zhang, L.; Glänzel, W. Proceeding papers in journals versus the “regular” journal publications. J. Inf. 2012, 6, 88–96.

- Zhang, Y.; Jia, X. Republication of conference papers in journals? Learn. Publ. 2013, 26, 189–196.

- Montesi, M.; Owen, J.M. From conference to journal publication: How conference papers in software engineering are extended for publication in journals. J. Am. Soc. Inf. Sci. Technol. 2008, 59, 816–829.

- Perkins, M. Academic Integrity considerations of AI Large Language Models in the post-pandemic era: ChatGPT and beyond. J. Univ. Teach. Learn. Pract. 2023, 20, 07.

- Kooli, C. Chatbots in education and research: A critical examination of ethical implications and solutions. Sustainability 2023, 15, 5614.

- Qasem, F. ChatGPT in scientific and academic research: Future fears and reassurances. Libr. Tech News 2023, 40, 30–32.

- Ariyaratne, S.; Iyengar, K.; Nischal, N.; Chitti Babu, N.; Botchu, R. A comparison of ChatGPT-generated articles with human-written articles. Skelet. Radiol. 2023, 52, 1755–1758.

- Yan, D. Impact of ChatGPT on learners in a L2 writing practicum: An exploratory investigation. Educ. Inf. Technol. 2023, 28, 13943–13967.

- Ray, P. ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet Things-Cyber-Phys. Syst. 2023, 3, 121–154.

- Taecharungroj, V. “What Can ChatGPT Do?” Analyzing Early Reactions to the Innovative AI Chatbot on Twitter. Big Data Cogn. Comput. 2023, 7, 35.

- Grünebaum, A.; Chervenak, J.; Pollet, S.; Katz, A.; Chervenak, F. The exciting potential for ChatGPT in obstetrics and gynecology. Am. J. Obstet. Gynecol. 2023, 228, 696–705.

- Cox, C.; Tzoc, E. ChatGPT: Implications for academic libraries. Coll. Res. Libr. News 2023, 84, 99.

- Karaali, G. Artificial Intelligence, Basic Skills, and Quantitative Literacy. Numeracy 2023, 16, 9.

- Jungwirth, D.; Haluza, D. Artificial intelligence and public health: An exploratory study. Int. J. Environ. Res. Public Health 2023, 20, 4541.

- Geerling, W.; Mateer, G.; Wooten, J.; Damodaran, N. ChatGPT has aced the test of understanding in college economics: Now what? Am. Econ. 2023, 68, 233–245.