Analysing Conversation Pathways with a Chatbot Tutor to Enhance Self-Regulation in Higher Education

Abstract

1. Introduction

1.1. Chatbots in Education

1.2. Chatbots for Self-Regulated Learning

- R.Q.1. How many messages make up the conversations and what is their frequency?

- R.Q.2. How are users’ interactions with the tool distributed over time?

- R.Q.3. At what point in the conversational flow do users leave the interaction with the tool?

- R.Q.4. What is the duration (in time) of the conversations?

- R.Q.5. How do user-selected options define the flow of the conversation?

- R.Q.6. What are the most common replica sequences?

- R.Q.7. How do users interact with the infographic resources for SRL embedded in the tool?

- R.Q.8. How do users respond to the chatbot’s open questions?
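R.Q.1, R.Q.3 and R.Q.4 reduce to simple per-conversation aggregates over a message log: how many messages a conversation contains, how long it lasts, and which chatbot message the user reached last before leaving. The following is a minimal sketch, not the authors' analysis pipeline. It assumes a hypothetical log format (the `Message` record with `user_id`, `timestamp` and `message_code` fields) and treats all of one user's messages as a single conversation. The function name `conversation_metrics` is also illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Message:
    """Hypothetical log record: one row per chatbot message reached by a user."""
    user_id: str
    timestamp: datetime
    message_code: int  # code of the chatbot message (assumed numbering)


def conversation_metrics(log: list[Message]) -> dict[str, dict]:
    """Group messages by user and compute per-conversation aggregates."""
    by_user = defaultdict(list)
    for msg in log:
        by_user[msg.user_id].append(msg)

    metrics = {}
    for user, msgs in by_user.items():
        msgs.sort(key=lambda m: m.timestamp)  # put each conversation in chronological order
        metrics[user] = {
            # R.Q.1: number of messages in the conversation
            "n_messages": len(msgs),
            # R.Q.4: elapsed time between first and last message, in seconds
            "duration_s": (msgs[-1].timestamp - msgs[0].timestamp).total_seconds(),
            # R.Q.3: last chatbot message reached, i.e. where the user left
            "last_code": msgs[-1].message_code,
        }
    return metrics
```

Grouping by user rather than by an explicit session identifier is a simplification; a real log would likely carry its own session or conversation key.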

2. Materials and Methods

2.1. Research Background

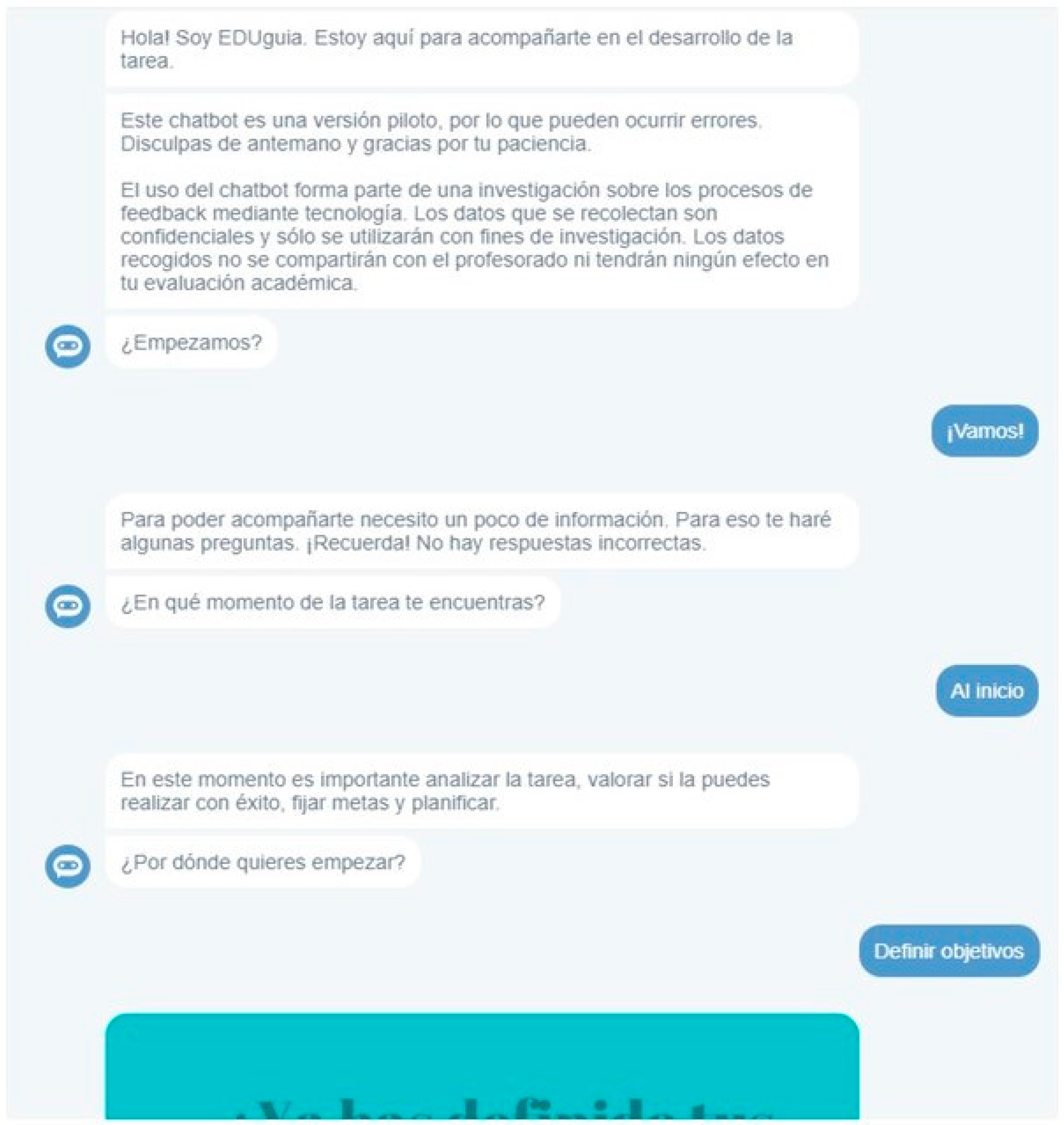

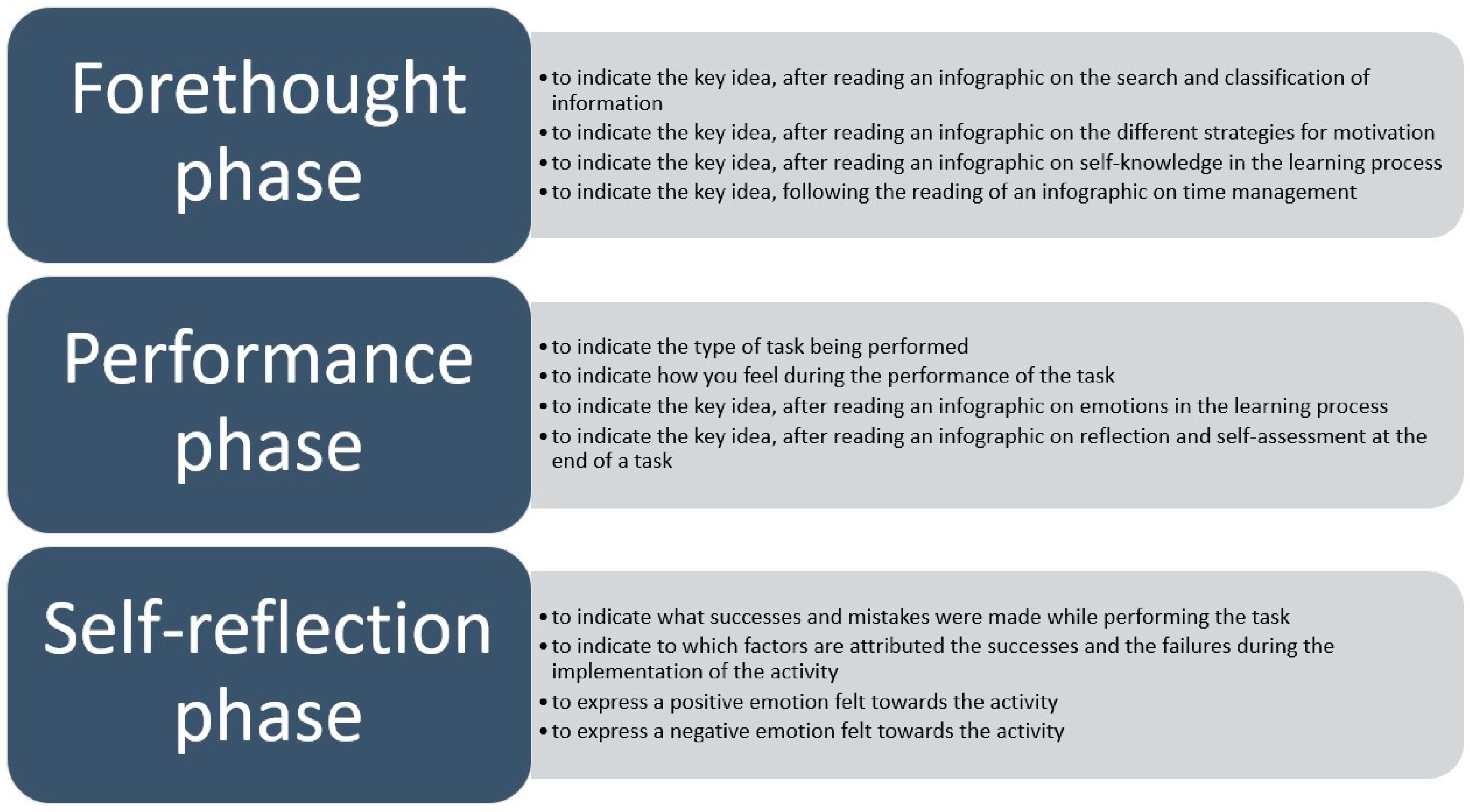

2.2. EDUguia Chatbot

2.3. Data Collection and Analysis

3. Results

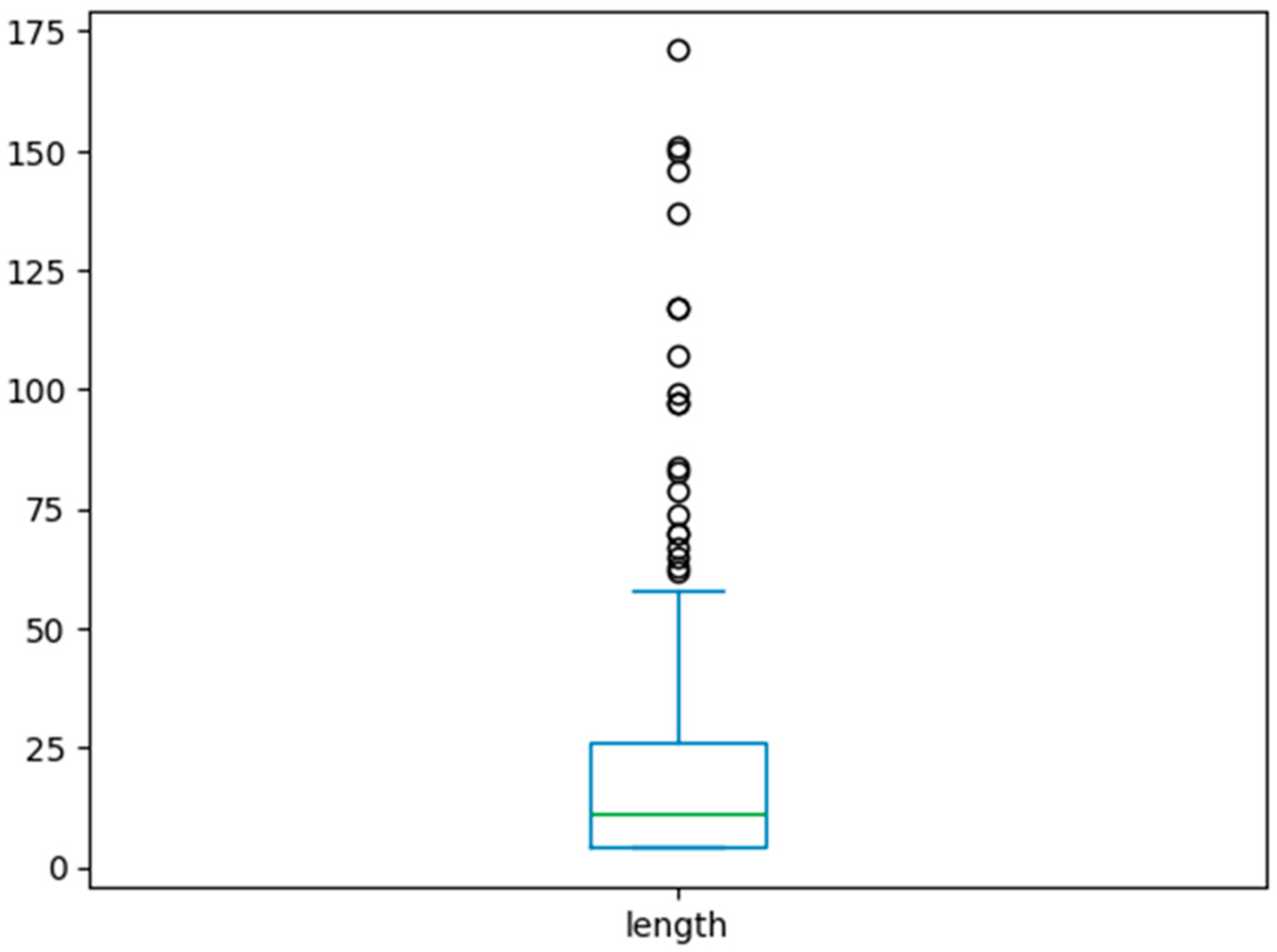

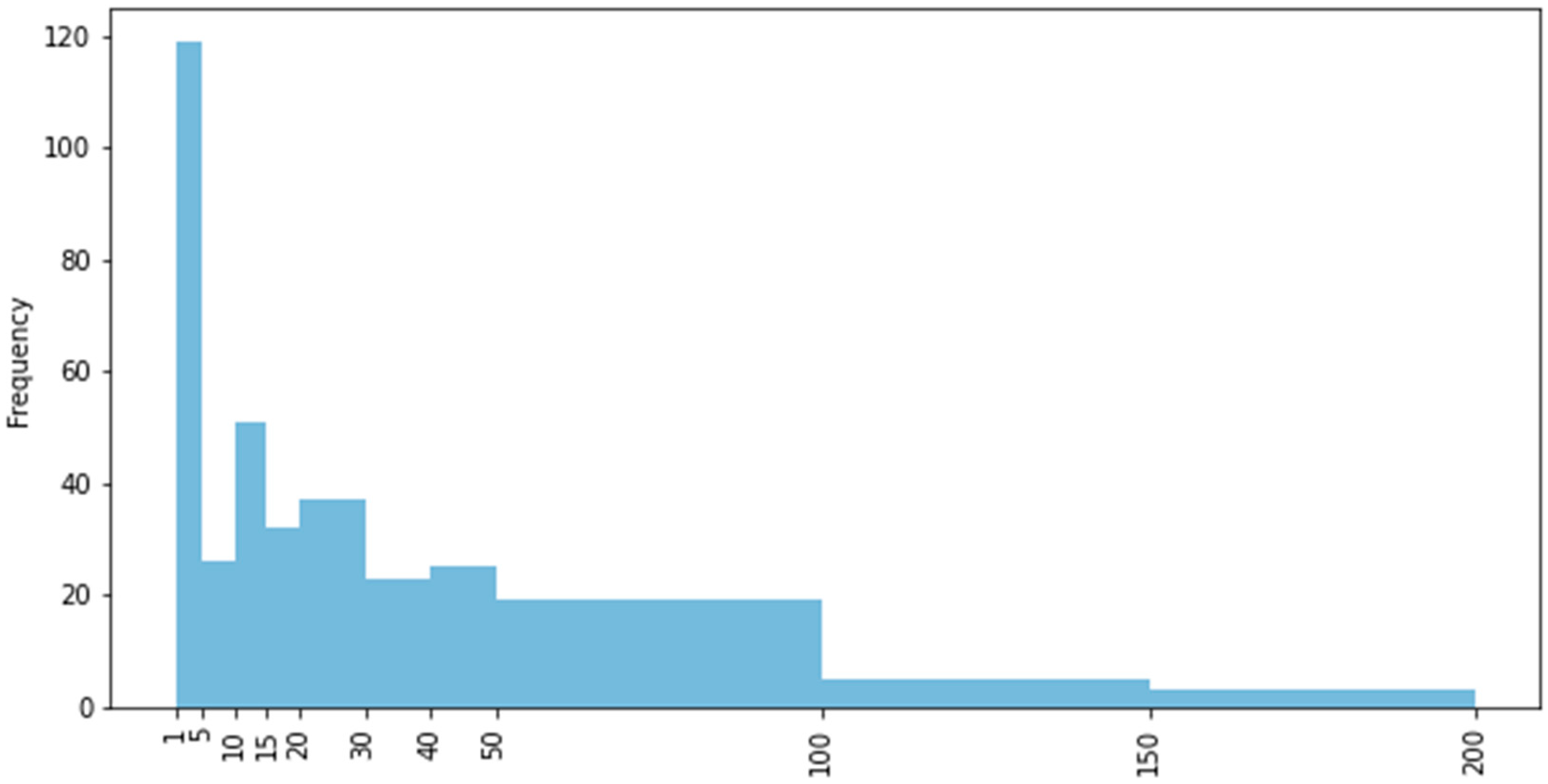

3.1. R.Q.1. How Many Messages Make Up the Conversations and What Is Their Frequency?

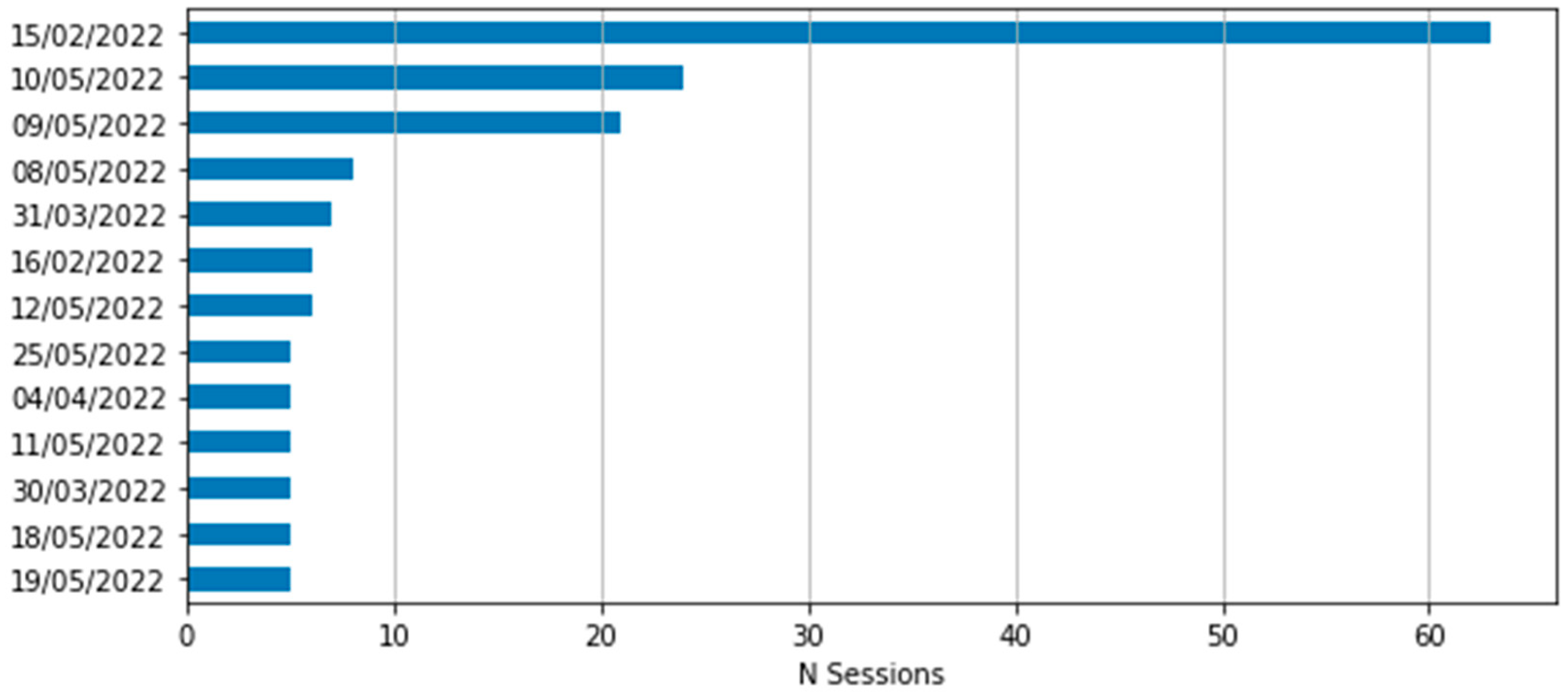

3.2. R.Q.2. How Are Users’ Interactions with the Tool Distributed over Time?

3.3. R.Q.3. At What Point in the Conversational Flow Do Users Leave the Interaction with the Tool?

3.4. R.Q.4. What Is the Duration (in Time) of the Conversations?

3.5. R.Q.5. How Do User-Selected Options Define the Flow of the Conversation?

3.6. R.Q.6. What Are the Most Common Replica Sequences?

3.7. R.Q.7. How Do Users Interact with the Infographic Resources for SRL Embedded in the Tool?

3.8. R.Q.8. How Do Users Respond to the Chatbot’s Open Questions?

3.9. Limitations and Implications

4. Discussion

5. Conclusions

5.1. Findings and Their Impact on Design

5.2. EDUguia Chatbot and Self-Regulated Learning

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| SRL Phase | Moment of Task Performance | Skills or Strategies to Be Developed |
|---|---|---|
| Forethought phase | At the beginning | Define my objectives |
| | | Manage my resources |
| | | Motivate myself |
| Performance phase | In the middle | Monitor my progress |
| | | Manage my emotions |
| | | Maintain my interest |
| Self-reflection phase | Almost at the end | Reflect on the mistakes I made and successes I achieved while performing the task |
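The table above amounts to a lookup from the moment of task performance a student reports to an SRL phase and the skills that can be worked on in that phase. A minimal sketch of that lookup as a data structure follows; the entries are copied from the table, while the names `SRL_PHASES` and `skills_for` are hypothetical and do not describe how the chatbot itself is implemented.

```python
# Lookup built from the SRL phase table: reported moment -> (phase, skills).
SRL_PHASES: dict[str, tuple[str, list[str]]] = {
    "At the beginning": (
        "Forethought phase",
        ["Define my objectives", "Manage my resources", "Motivate myself"],
    ),
    "In the middle": (
        "Performance phase",
        ["Monitor my progress", "Manage my emotions", "Maintain my interest"],
    ),
    "Almost at the end": (
        "Self-reflection phase",
        ["Reflect on the mistakes I made and successes I achieved "
         "while performing the task"],
    ),
}


def skills_for(moment: str) -> tuple[str, list[str]]:
    """Return (phase, skills) for a reported moment; raises KeyError if unknown."""
    return SRL_PHASES[moment]
```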

| Code | Chatbot Message | Option 1 | Option 2 | Option 3 | Option 4 | Option 5 | Option 6 | Option 7 | Option 8 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Shall we start? | 1. Let’s go! | 2. Not now | | | | | | |
| 2 | At what stage of the task are you? | 1. At the beginning | 2. In the middle | 3. Almost at the end | | | | | |
| 3 | Now that you’re doing the task, how can I help you? | 1. Managing Emotions | 2. Maintain Interest | 3. Track Progress | | | | | |
| 4 | What you’re doing, does it get you closer to achieving your learning goals? | 1. I guess! | 2. I don’t think so | 3. I don’t know | | | | | |
| 5 | Do you find it difficult to do your task? | 1. A lot | 2. A little bit | 3. Not at all | | | | | |
| 6 | What kind of task are you doing? | | | | | | | | |
| 7 | Each task is different, but some problems are recurring. Which of the following is difficult for you? | 1. Search information | 2. Select methods | 3. Analyse-synthesise | 4. Work as a team | 5. Manage projects | 6. Communicate | 7. Social impact | 8. Other(s) |

| Session Type | Description |
|---|---|
| One-day session | User–chatbot interactions occurred within the same day. |
| “Type A”; 2 sessions | The user initiated the conversation on one date and returned on another date to finish the user session. |
| “Type B”; 3 sessions | The user initiated the conversation on one date, then returned on another date, and finished the user session in the third interaction. |
| “Type C”; 4 sessions | The user initiated the conversation on one date, then returned on two different dates, and finished the user session in the fourth interaction. |
| “Type D”; 5 sessions | The user initiated the conversation on one date, then returned on three different dates, and finished the user session in the fifth interaction. |
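The session types above are defined by how many different dates a user's conversation spans. Assuming that each "session" corresponds to one distinct calendar date on which the user interacted with the chatbot, a conversation can be classified by counting distinct dates. The sketch below illustrates that rule; `SESSION_TYPES` and `session_type` are hypothetical names, not part of the EDUguia tooling.

```python
from datetime import date

# Session-type labels from the table, keyed by the number of distinct dates.
SESSION_TYPES = {1: "One-day", 2: "A", 3: "B", 4: "C", 5: "D"}


def session_type(message_dates: list[date]) -> str:
    """Classify a conversation by the number of distinct dates it spans."""
    n_days = len(set(message_dates))
    if n_days not in SESSION_TYPES:
        # Conversations spanning 0 or more than 5 dates have no defined type.
        raise ValueError(f"no session type defined for {n_days} distinct dates")
    return SESSION_TYPES[n_days]
```

Several messages on the same date count once, so a user who chats twice on 1 March and once on 4 March is classified as Type A.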

| Statistic | Value |
|---|---|
| N (sessions), valid | 340 |
| N (sessions), lost | 0 |
| Mean | 20.55 |
| Median | 11.00 |
| S.D. | 25.785 |
| Minimum | 4 |
| 25th percentile | 4 |
| 50th percentile | 11 |
| 75th percentile | 26.250 |
| Maximum | 171 |
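Descriptive statistics of this kind can be reproduced from a list of per-session counts with Python's standard `statistics` module. This is a sketch under stated assumptions: the source does not say which software or quantile definition produced the table, so `method="inclusive"` (linear interpolation between order statistics) and the sample standard deviation are assumptions, and other quantile definitions can give slightly different quartiles. The function name `describe` is hypothetical.

```python
import statistics


def describe(counts: list[int]) -> dict[str, float]:
    """Summary statistics for a list of per-session counts."""
    # Quartiles by linear interpolation (assumed method; software differs).
    q1, q2, q3 = statistics.quantiles(counts, n=4, method="inclusive")
    return {
        "n": len(counts),
        "mean": statistics.mean(counts),
        "median": statistics.median(counts),
        "sd": statistics.stdev(counts),  # sample standard deviation (n - 1)
        "min": min(counts),
        "p25": q1,
        "p50": q2,
        "p75": q3,
        "max": max(counts),
    }
```

With interpolation, quartiles need not be whole numbers even when the counts are: for the toy input `[4, 4, 11, 20, 30, 40]` this method gives quartiles of 5.75, 15.5 and 27.5, which is how a value such as 26.250 can arise.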

| Session Type | Number of Users (Cases) |
|---|---|
| A | 46 |
| B | 7 |
| C | 2 |
| D | 1 |
| Sub-total | 56 |
| One-day | 284 |
| Total | 340 |
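As a consistency check on the counts above, the multi-day session types sum to the sub-total, and together with the one-day sessions they account for all 340 cases. The percentages are simple derived shares of the total:

$$
46 + 7 + 2 + 1 = 56, \qquad 56 + 284 = 340, \qquad \frac{56}{340} \approx 16.5\,\%, \qquad \frac{284}{340} \approx 83.5\,\%
$$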

| SRL Phase | Moment of Task Performance | Times | Skills or Strategies to Be Developed | Times |
|---|---|---|---|---|
| Forethought phase | At the beginning | 93 | Define my objectives | 77 |
| | | | Manage my resources | 31 |
| | | | Motivate myself | 19 |
| Performance phase | In the middle | 79 | Monitor my progress | 54 |
| | | | Manage my emotions | 13 |
| | | | Maintain my interest | 14 |
| Self-reflection phase | Almost at the end | 33 | Reflect on the mistakes I made and successes I achieved while performing the task | 22 |
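Counts such as those in the table above are frequency tallies of the options users chose at each chatbot message. A minimal sketch of such a tally follows, assuming a hypothetical log of `(message_code, option_label)` pairs. The toy input only illustrates the mechanics and does not reproduce the reported figures; `tally_by_message` is an illustrative name.

```python
from collections import Counter, defaultdict


def tally_by_message(
    selections: list[tuple[int, str]],
) -> dict[int, list[tuple[str, int]]]:
    """Count how often each option was chosen, grouped by chatbot message code."""
    counts = defaultdict(Counter)
    for code, option in selections:
        counts[code][option] += 1
    # Most frequent option first for each message.
    return {code: c.most_common() for code, c in counts.items()}
```

For example, `tally_by_message([(2, "At the beginning"), (2, "In the middle"), (2, "At the beginning")])` reports "At the beginning" twice and "In the middle" once for message 2.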

| Level of Sequence Interaction | Most Common Sequences of Chatbot Replicas |
|---|---|
| 1 | |
| 2 | |
| 3 | |
| 4 | |
| 5 | |
| 6 | |
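One common way to find frequent reply sequences is to count contiguous runs (n-grams) of chatbot message codes across all conversations. This sketch illustrates that technique under the assumption that each conversation is available as an ordered list of message codes. It is not necessarily how the sequences in the table were derived, and whether the table's "level" corresponds to sequence length is an assumption. The function name `common_sequences` is hypothetical.

```python
from collections import Counter


def common_sequences(
    conversations: list[list[int]], length: int, top: int = 5
) -> list[tuple[tuple[int, ...], int]]:
    """Return the `top` most frequent contiguous runs of `length` message codes."""
    if length < 1:
        raise ValueError("length must be at least 1")
    counter = Counter()
    for codes in conversations:
        # Slide a window of the given length over each conversation.
        for i in range(len(codes) - length + 1):
            counter[tuple(codes[i:i + length])] += 1
    return counter.most_common(top)
```

For the toy input `[[1, 2, 3, 4], [1, 2, 3], [1, 2, 5]]` with `length=2`, the most frequent pair is `(1, 2)`, occurring three times.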

| Self-Regulated Learning Phase | Infographic Title | Times Viewed |
|---|---|---|
| Forethought phase | Have you already defined your objectives? | 77 |
| | Have you thought about what to do first to achieve your learning goals? | 54 |
| | What do you want to achieve at the end of the whole process? | 49 |
| | Have you estimated how much time you need to complete the task? | 27 |
| | How to register doubts and take notes? | 23 |
| | Have you ever considered what obstacles the task may pose? | 21 |
| | How can you organise your time? | 20 |
| | Motivate yourself | 15 |
| | Do you know yourself well? | 13 |
| | Motivate yourself II | 13 |
| | Processing, organisation and use of information | 13 |
| | Values and interests | 13 |
| | How to organise task monitoring? | 13 |
| Execution phase | Have you considered how to develop your learning capacity? | 22 |
| Self-reflection phase | How did you do it? | 22 |
| | Remember: Mistakes are also learning | 22 |
| | How you felt doing this task | 17 |
| | It is a good time to reflect on the help received | 16 |
| | Record those activities or content you want to review | 15 |
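Summing the views per phase shows how strongly infographic use concentrates in the forethought phase. This worked sum assumes that each row without a phase label belongs to the phase listed above it, and it counts views rather than distinct users:

$$
\begin{aligned}
\text{Forethought} &= 77 + 54 + 49 + 27 + 23 + 21 + 20 + 15 + 5 \times 13 = 351 \\
\text{Execution} &= 22 \\
\text{Self-reflection} &= 22 + 22 + 17 + 16 + 15 = 92 \\
\text{Total} &= 351 + 22 + 92 = 465
\end{aligned}
$$

Forethought infographics therefore account for about $351/465 \approx 75.5\,\%$ of all recorded views.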

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Martins, L.; Fernández-Ferrer, M.; Puertas, E. Analysing Conversation Pathways with a Chatbot Tutor to Enhance Self-Regulation in Higher Education. Educ. Sci. 2024, 14, 590. https://doi.org/10.3390/educsci14060590

Martins L, Fernández-Ferrer M, Puertas E. Analysing Conversation Pathways with a Chatbot Tutor to Enhance Self-Regulation in Higher Education. Education Sciences. 2024; 14(6):590. https://doi.org/10.3390/educsci14060590

Chicago/Turabian Style: Martins, Ludmila, Maite Fernández-Ferrer, and Eloi Puertas. 2024. "Analysing Conversation Pathways with a Chatbot Tutor to Enhance Self-Regulation in Higher Education" Education Sciences 14, no. 6: 590. https://doi.org/10.3390/educsci14060590

APA Style: Martins, L., Fernández-Ferrer, M., & Puertas, E. (2024). Analysing Conversation Pathways with a Chatbot Tutor to Enhance Self-Regulation in Higher Education. Education Sciences, 14(6), 590. https://doi.org/10.3390/educsci14060590