Attitudes towards Research Methods in Education: Development of the ATRMQ Scale

Abstract

1. Introduction and Objectives

- Studies on the attitudes and perceptions of university students towards the subjects they take help understand the development of the teaching and learning process, facilitating curricular adaptation.

- Attitudes towards statistics content in social and legal sciences degrees have been studied extensively, leading to the development of specific scales for this purpose.

- The attitude of students towards RM subjects as a holistic educational unit has not been studied in the Spanish higher education system.

2. Methodology

2.1. Design and Participants

2.2. Instrument

- Sociodemographic questions: age, gender, family members with a similar degree, and employment status. Academic questions of interest: degree program, year of study, whether the degree was the first choice of access, overall satisfaction with the degree, and general satisfaction with RM.

- Two items on positioning regarding RM. These two items were taken from Estrada (2002) and used as external comparison criteria for the convergence and divergence of measures.

- An adapted version of the ATSQ [34]. The original version of this questionnaire (named CAHE in its original version in Spanish) consists of 16 items grouped into three dimensions (see Appendix A). For this research, an adaptation was made, which could be considered naïve, as it simply involved changing the word “statistics” to “research methods”.

- Teacher with 23 years of experience in RM and Diagnostics in Education.

- Specialist in Early Intervention and Diagnostics in Education.

- Teacher with 20 years of experience in Didactics and School Organisation, specialising in inclusive education and with one six-year period of research.

- Teacher with 30 years of experience in RM and Diagnostics in Education.

- Specialist in Educational Diagnosis, with one six-year period of research.

2.3. Data Analysis

- The normalised robust chi-square (χ²/d.f.), that is, the ratio of the absolute chi-square index to its degrees of freedom, where values between 3 and 5 are considered acceptable for global fit.

- The RMSEA (Root Mean Square Error of Approximation) parsimony statistic to assess the residual matrix, which is considered acceptable with values below 0.08.

- The SRMR (Standardised Root Mean Square Residual) index, with a cut-off point of 0.08 or less recommended [46].

- Standardised indices, the CFI (Comparative Fit Index) and TLI (Tucker–Lewis Index), where values between 0.90 and 0.95 are considered acceptable and values above 0.95 are considered good [47].

The BIC (Bayesian Information Criterion) and the AIC (Akaike Information Criterion) were used for model comparison, selecting the model with the smaller values [48]. In the next phase, the reliability of the scale and subscales was calculated using Cronbach’s alpha coefficient and McDonald’s omega.
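
The cut-offs listed above can be expressed as a small checker. The following is a minimal sketch, not the authors' analysis code: the class and function names are hypothetical, the example values are those reported for model [B] in the model comparison table of this article, and treating χ²/d.f. ratios below 3 as at least acceptable is an assumption, since the text only names the 3 to 5 band.

```python
from dataclasses import dataclass


@dataclass
class FitIndices:
    chi2_df: float  # normalised chi-square (chi-square / degrees of freedom)
    cfi: float
    tli: float
    srmr: float
    rmsea: float


def assess_fit(fit: FitIndices) -> dict[str, str]:
    """Label each index using the cut-offs stated in the Data Analysis section."""

    def incremental(value: float) -> str:
        # CFI / TLI: above 0.95 good, 0.90 to 0.95 acceptable.
        if value > 0.95:
            return "good"
        if value >= 0.90:
            return "acceptable"
        return "poor"

    return {
        # Assumption: ratios below 3 count as at least acceptable.
        "chi2_df": "acceptable" if fit.chi2_df <= 5 else "poor",
        "cfi": incremental(fit.cfi),
        "tli": incremental(fit.tli),
        "srmr": "acceptable" if fit.srmr <= 0.08 else "poor",
        "rmsea": "acceptable" if fit.rmsea < 0.08 else "poor",
    }


# Values reported for model [B] (ATRMQ without item 11).
model_b = FitIndices(chi2_df=1.793, cfi=0.944, tli=0.933, srmr=0.058, rmsea=0.066)
report = assess_fit(model_b)
print(report)
```

Under these assumptions every index for model [B] falls in the acceptable band, and none reaches the stricter "good" threshold for CFI or TLI.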

3. Results

3.1. Exploratory Factor Analysis (EFA)

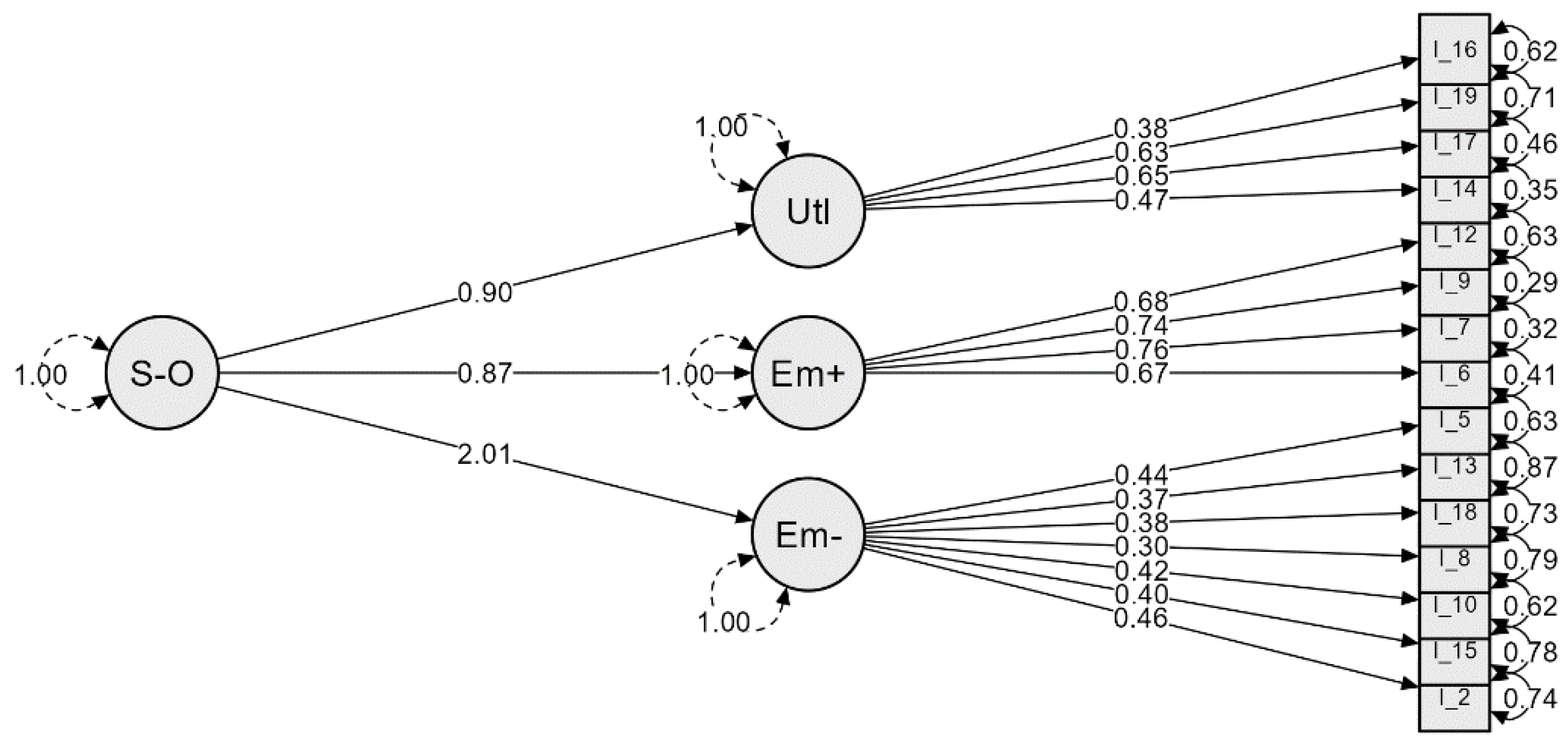

3.2. Confirmatory Factor Analysis (CFA)

3.3. Reliability of the Measure

3.4. Correlation with Criterion Items

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Factor | Items Included | Explained Variance | Internal Consistency (Cronbach’s Alpha) |
|---|---|---|---|
| Emotion− | 20, 15, 10, 8, 18, 13, 5, 11 | 33.485% | 0.921 |
| Emotion+ | 6, 7, 9, 12 | 15.938% | 0.774 |
| Utility | 14, 17, 19, 16 | 13.352% | 0.745 |
| Attitude (global) | | 62.775% | 0.902 |

References

- Ordoñez, X.G.; Romero, S.J.; Ruiz De Miguel, C. Cuestionario de Actitudes Hacia La Estadística (CAHE): Evidencias de Validez y Fiabilidad de Las Puntuaciones En Una Muestra de Alumnos de Educación. Bord. Rev. Pedag. 2016, 68, 121.

- Jefatura del Estado. Ley Orgánica 2/2006, de 3 de Mayo, de Educación; Boletín Oficial del Estado: Madrid, Spain, 2006; Volume BOE-A-2006-7899, pp. 17158–17207. Available online: https://www.boe.es/eli/es/lo/2006/05/03/2/con (accessed on 23 February 2024).

- Jefatura del Estado. Ley Orgánica 3/2020, de 29 de Diciembre, Por La Que Se Modifica La Ley Orgánica 2/2006, de 3 de Mayo, de Educación; Boletín Oficial del Estado: Madrid, Spain, 2020; Volume BOE-A-2020-17264, pp. 122868–122953. Available online: https://www.boe.es/eli/es/lo/2020/12/29/3 (accessed on 23 February 2024).

- Johnson, R.B.; Christensen, L.B. Educational Research: Quantitative, Qualitative, and Mixed Approaches, 7th ed.; SAGE: Los Angeles, CA, USA, 2020; ISBN 978-1-5443-3783-8.

- McKenney, S.; Schunn, C.D. How Can Educational Research Support Practice at Scale? Attending to Educational Designer Needs. Br. Educ. Res. J. 2018, 44, 1084–1100.

- Everton, T.; Galton, M.J.; Pell, T. Educational Research and the Teacher. Res. Pap. Educ. 2002, 17, 373–401.

- Cain, T. Teachers’ Engagement with Published Research: Addressing the Knowledge Problem. Curr. J. 2015, 26, 488–509.

- Winch, C.; Oancea, A.; Orchard, J. The Contribution of Educational Research to Teachers’ Professional Learning: Philosophical Understandings. Oxf. Rev. Educ. 2015, 41, 202–216.

- Cohen, L.; Manion, L.; Morrison, K. Research Methods in Education, 8th ed.; Routledge: London, UK; New York, NY, USA, 2017; ISBN 978-1-315-45653-9.

- Fraenkel, J.; Wallen, N.; Hyun, H. How to Design and Evaluate Research in Education; McGraw-Hill: New York, NY, USA, 2011; Volume 60, ISBN 978-0-07-809785-0.

- Patton, M.Q. Qualitative Research & Evaluation Methods: Integrating Theory and Practice, 4th ed.; SAGE Publications, Inc.: Thousand Oaks, CA, USA, 2015; ISBN 978-1-4129-7212-3.

- Guba, E.G.; Lincoln, Y.S. Paradigmatic Controversies, Contradictions, and Emerging Confluences. In The Sage Handbook of Qualitative Research, 3rd ed.; Sage Publications Ltd.: Thousand Oaks, CA, USA, 2005; pp. 191–215. ISBN 978-0-7619-2757-0.

- Tabachnick, B.G.; Fidell, L.S.; Ullman, J.B. Using Multivariate Statistics, 7th ed.; Pearson: New York, NY, USA, 2019; ISBN 978-0-13-479054-1.

- Erickson, F. Métodos Cualitativos de Investigación Sobre La Enseñanza. In La Investigación de la Enseñanza II. Métodos Cualitativos de Observación; Wittrock, M.C., Ed.; Coll. Paidós Educador; Paidós MEC: Barcelona, Spain, 1989; pp. 203–247. ISBN 84-7509-518-6.

- Eccles, J.S.; Wigfield, A. Motivational Beliefs, Values, and Goals. Annu. Rev. Psychol. 2002, 53, 109–132.

- Fives, H.; Gill, M.G. International Handbook of Research on Teachers’ Beliefs, 1st ed.; Routledge: New York, NY, USA, 2014; ISBN 978-1-136-26583-9.

- Wishkoski, R.; Meter, D.J.; Tulane, S.; King, M.Q.; Butler, K.; Woodland, L.A. Student Attitudes toward Research in an Undergraduate Social Science Research Methods Course. High. Educ. Pedagog. 2022, 7, 20–36.

- Byman, R.; Maaranen, K.; Kansanen, P. Consuming, Producing, and Justifying: Finnish Student Teachers’ Views of Research Methods. Int. J. Res. Method Educ. 2021, 44, 319–334.

- Hidalgo, J.P.; Aldana, G.M.; León, P.; Ucedo, V.H. Escala de Actitudes Hacia La Investigación (EACIN-R): Propiedades Psicométricas En Universitarios Peruanos. Propósitos Y Represent. 2023, 11.

- Howard, A.; Michael, P.G. Psychometric Properties and Factor Structure of the Attitudes Toward Research Scale in a Graduate Student Sample. Psychol. Learn. Teach. 2019, 18, 259–274.

- Papanastasiou, E.C.; Schumacker, R. Attitudes Toward Research Scale--30 Item 2014. APA PsycTests.

- Böttcher, F.; Thiel, F. Evaluating Research-Oriented Teaching: A New Instrument to Assess University Students’ Research Competences. High. Educ. 2018, 75, 91–110.

- Böttcher-Oschmann, F.; Groß Ophoff, J.; Thiel, F. Preparing Teacher Training Students for Evidence-Based Practice Promoting Students’ Research Competencies in Research-Learning Projects. Front. Educ. 2021, 6, 642107.

- Vilà Baños, R.; Rubio Hurtado, M.J. Actitudes Hacia La Estadística En El Alumnado Del Grado de Pedagogía de La Universidad de Barcelona. REDU Rev. Docencia Univ. 2016, 14, 131.

- Ruiz De Miguel, C. Actitudes Hacia La Estadística de Los Alumnos Del Grado En Pedagogía, Educación Social, y Maestro de Educación Infantil y Maestro de Educación Primaria de La UCM. Educ. XX1 2015, 18, 351–374.

- Rosenberg, M.J.; Hovland, C.I. Cognitive, Affective and Behavioral Components of Attitudes. In Attitude Organization and Change: An Analysis of Consistency among Attitude Components; Yale studies in attitude and communication; Yale University Press: New Haven, CT, USA, 1960; Volume 3.

- Auzmendi, E. Las Actitudes Hacia la Matemática-Estadística en Las Enseñanzas Medias y Universitaria: Características y Medición; Recursos e Instrumentos Psico-Pedagógicos; Mensajero: Bilbao, Spain, 1992; ISBN 978-84-271-1768-6.

- Gil Flores, J. Actitudes hacia la estadística. Incidencia de las variables sexo y formación previa. Rev. Esp. Pedagog. 1999, 57, 567–589.

- Rodríguez-Santero, J.; Gil-Flores, J. Actitudes Hacia La Estadística En Estudiantes de Ciencias de La Educación. Propiedades Psicométricas de La Versión Española Del Survey of Attitudes Toward Statistics (SATS-36). RELIEVE-Rev. Electrónica Investig. Eval. Educ. 2019, 25.

- Roberts, D.M.; Bilderback, E.W. Reliability and Validity of a Statistics Attitude Survey. Educ. Psychol. Meas. 1980, 40, 235–238.

- Wise, S.L. The Development and Validation of a Scale Measuring Attitudes toward Statistics. Educ. Psychol. Meas. 1985, 45, 401–405.

- Schau, C.; Stevens, J.; Dauphinee, T.L.; Vecchio, A.D. The Development and Validation of the Survey of Attitudes toward Statistics. Educ. Psychol. Meas. 1995, 55, 868–875.

- Darias-Morales, E.J. Escala de Actitudes Hacia La Estadística. Psicothema 2000, 12, 175–178.

- Méndez, D.; Macía, F. Análisis factorial confirmatorio de la escala de actitudes hacia la estadística. Cuad. Neuropsicol. 2007, 1, 337–345.

- Matas, A. Diseño Del Formato de Escalas Tipo Likert: Un Estado de La Cuestión. Rev. Electrónica Investig. Educ. 2018, 20, 38–47.

- Hill, A.; Pelis, M.; Puncochar, V. Reasoning by Analogy in Inductive Logic. In The Logica Yearbook 2011; Pelis, M., Puncochar, V., Eds.; College Publications: Oxfordshire, UK, 2012; pp. 63–76.

- Fisher, A.A. Inductive Reasoning in the Context of Discovery: Analogy as an Experimental Stratagem in the History and Philosophy of Science. Stud. Hist. Philos. Sci. Part A 2018, 69, 23–33.

- Curado, M.A.S.; Teles, J.; Marôco, J. Analysis of Variables That Are Not Directly Observable: Influence on Decision-Making during the Research Process. Rev. Esc. Enferm. USP 2014, 48, 146–152.

- Dima, A.L. Scale Validation in Applied Health Research: Tutorial for a 6-Step R-Based Psychometrics Protocol. Health Psychol. Behav. Med. 2018, 6, 136–161.

- Pallant, J. SPSS Survival Manual: A Step by Step Guide to Data Analysis Using SPSS, 4th ed.; Open University Press: Maidenhead, UK, 2010; ISBN 978-0-335-24239-9.

- Carretero-Dios, H.; Pérez, C. Normas Para El Desarrollo y Revisión de Estudios Instrumentales: Consideraciones Sobre La Selección de Tests En La Investigación Psicológica. Int. J. Clin. Health Psychol. 2007, 7, 863–882.

- Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis, 7th ed.; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2010; ISBN 978-0-13-813263-7.

- Stevens, J. Applied Multivariate Statistics for the Social Sciences, 3rd ed.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1996; ISBN 978-0-8058-1670-9.

- Lorenzo-Seva, U.; Ferrando, P.J. FACTOR: A Computer Program to Fit the Exploratory Factor Analysis Model. Behav. Res. Methods 2006, 38, 88–91.

- Brown, T.A. Confirmatory Factor Analysis for Applied Research; The Guilford Press: New York, NY, USA, 2006; ISBN 978-1-59385-274-0.

- Cho, G.; Hwang, H.; Sarstedt, M.; Ringle, C.M. Cutoff Criteria for Overall Model Fit Indexes in Generalized Structured Component Analysis. J. Mark. Anal. 2020, 8, 189–202.

- Hu, L.; Bentler, P.M. Cutoff Criteria for Fit Indexes in Covariance Structure Analysis: Conventional Criteria versus New Alternatives. Struct. Equ. Model. Multidiscip. J. 1999, 6, 1–55.

- Akaike, H. Information Theory and the Maximum Likelihood Principle. In Information Theory: Proceedings of the 2nd International Symposium; Petrov, B.N., Csáki, F., Eds.; Akadémiai Kiadó: Budapest, Hungary, 1973.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2018.

- The Jamovi Project. Jamovi (version 2.3). 2022. Available online: https://www.jamovi.org (accessed on 20 March 2024).

- Jain, V. 3D Model of Attitude. Int. J. Adv. Res. Manag. Soc. Sci. 2014, 3, 1–12.

- George, D.; Mallery, P. SPSS for Windows Step-by-Step: A Simple Guide and Reference, 14.0 Update, 7th ed.; Pearson Education: Boston, MA, USA, 2003; ISBN 0-205-51585-1.

- Barbero García, M.I.; Holgado Tello, F.P.; Vila Abad, E. Psicometría; Sanz y Torres SL: Madrid, Spain, 2015; ISBN 978-84-15550-89-1.

- Sarabia Liaño, A. Inés Gómez Chacón (2000). Matemática Emocional. Los Afectos En El Aprendizaje Matemático. Madrid: Narcea, 276 pp. Estud. Sobre Educ. 2018, 3, 158.

- Renaud, R.D. Attitudes and Dispositions. In International Guide to Student Achievement; Hattie, J., Anderman, E.M., Eds.; Routledge: New York, NY, USA, 2013; pp. 57–59. ISBN 978-0-415-87898-2.

- Angie, A.D.; Connelly, S.; Waples, E.P.; Kligyte, V. The Influence of Discrete Emotions on Judgement and Decision-Making: A Meta-Analytic Review. Cogn. Emot. 2011, 25, 1393–1422.

- Bandyopadhyay, D.; Pammi, V.S.C.; Srinivasan, N. Role of Affect in Decision Making. In Progress in Brain Research; Elsevier: Amsterdam, The Netherlands, 2013; Volume 202, pp. 37–53. ISBN 978-0-444-62604-2.

- Lerner, J.S.; Li, Y.; Valdesolo, P.; Kassam, K.S. Emotion and Decision Making. Annu. Rev. Psychol. 2015, 66, 799–823.

| Item | Emotion− | Emotion+ | Utility | Uniqueness |
|---|---|---|---|---|
| 20. I’m afraid of research methods. | 0.74079 | | | |
| 15. Research methods are complicated subjects. | 0.73455 | | | 0.548 |
| 10. Working with research methods makes me feel very nervous. | 0.70037 | | | 0.403 |
| 8. When I face a research methods problem, I feel incapable of thinking clearly. | 0.67867 | | | 0.455 |
| 18. I feel frustrated when doing research methods tests. | 0.66143 | | | 0.476 |
| 13. I feel insecure when working on research methods problems. | 0.64978 | | | 0.546 |
| 5. I’m not very good at research methods subjects. | 0.47494 | | | 0.497 |
| 11. I don’t get upset when I have to work on research methods problems. | | | | 0.793 |
| 6. Using research methods is fun for me. | | 0.8558 | | |
| 7. I enjoy discussing research methods with others. | | 0.8314 | | |
| 9. Research methods are enjoyable and stimulating for me. | | 0.8072 | | |
| 12. I would like to have an occupation that requires the use of research methods. | | 0.5514 | | 0.585 |
| 14. Statistics are useless. | | | 0.6492 | 0.509 |
| 17. Research methods are not useful for the common professional. | | | 0.5659 | 0.687 |
| 19. I will not use research methods in my profession. | | | 0.5218 | 0.485 |
| 16. Research methods are a requirement in my professional training. | | | 0.4715 | 0.615 |
| Explained variance | 22.6% | 17.3% | 10.8% | 50.7% (total) |

| Model | χ²/d.f. | CFI | TLI | SRMR | RMSEA | AIC | BIC |
|---|---|---|---|---|---|---|---|
| [A] ATRMQ with item 11 (original ATSQ). | 1.801 | 0.939 | 0.927 | 0.060 | 0.067 | 7237 | 7399 |
| [B] ATRMQ without item 11. | 1.793 | 0.944 | 0.933 | 0.058 | 0.066 | 6734 | 6887 |
| [C] ATRMQ without item 11, second order. | 1.794 | 0.944 | 0.933 | 0.058 | 0.067 | 6734 | 6887 |
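
The selection rule described in the Data Analysis section (prefer the model with the smaller AIC and BIC) can be applied to the values in the table above. This is only an illustrative sketch: the dictionary layout and the `best_models` helper are hypothetical, and ties are returned together rather than broken.

```python
# AIC and BIC values as reported in the model comparison table.
models = {
    "A": {"aic": 7237, "bic": 7399},
    "B": {"aic": 6734, "bic": 6887},
    "C": {"aic": 6734, "bic": 6887},
}


def best_models(candidates: dict, criterion: str) -> list:
    """Return the names of all models sharing the lowest value of a criterion."""
    lowest = min(values[criterion] for values in candidates.values())
    return sorted(
        name for name, values in candidates.items() if values[criterion] == lowest
    )


best_by_aic = best_models(models, "aic")
best_by_bic = best_models(models, "bic")
print(best_by_aic, best_by_bic)
```

With the reported values, models [B] and [C] tie on both criteria, so the information criteria alone do not separate the first-order and second-order versions.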

| Coefficient | Emotion− | Emotion+ | Utility | Attitude |
|---|---|---|---|---|
| Cronbach’s alpha | 0.886 | 0.862 | 0.752 | 0.897 |
| McDonald’s omega | 0.877 | 0.864 | 0.754 | 0.898 |
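
For readers unfamiliar with the two coefficients in the table above, the sketch below shows how they are commonly computed. It is not the authors' code (the reference list points to R and jamovi as the software used): the function names and the small response matrix are hypothetical, and the omega formula assumes a single factor with standardised loadings and uncorrelated errors.

```python
import numpy as np


def cronbach_alpha(scores) -> float:
    """Cronbach's alpha for a respondents x items score matrix."""
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]
    item_variances = scores.var(axis=0, ddof=1)      # variance of each item
    total_variance = scores.sum(axis=1).var(ddof=1)  # variance of the sum score
    return k / (k - 1) * (1 - item_variances.sum() / total_variance)


def mcdonald_omega(loadings, uniquenesses) -> float:
    """Omega total for one factor: (sum of loadings)^2 over total variance."""
    common = np.sum(loadings) ** 2
    return float(common / (common + np.sum(uniquenesses)))


# Hypothetical 5-point Likert responses (5 respondents, 3 items).
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 1],
    [3, 3, 3],
]
print(round(cronbach_alpha(responses), 3))

# Hypothetical standardised loadings and their uniquenesses (1 - loading^2).
print(round(mcdonald_omega([0.7, 0.7, 0.7], [0.51, 0.51, 0.51]), 3))
```

Negatively worded items would need to be reverse-scored before either coefficient is computed on a full scale.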

| Items | Criterion | Emotion− | Emotion+ | Utility | Attitude |
|---|---|---|---|---|---|
| I1. Research methods are useless. | Utility | 0.383 | 0.375 | 0.625 | 0.507 |
| I2. Research methods help make more informed decisions. | Decision-making | 0.531 | 0.461 | 0.513 | 0.584 |
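
Coefficients like those in the table above relate a single criterion item to a subscale score. The sketch below assumes Pearson correlations and mean-based subscale scores; the text retained here does not state which coefficient or scoring rule was used, and the function names and data are hypothetical.

```python
import numpy as np


def subscale_score(responses) -> np.ndarray:
    """Mean of the item responses per respondent (assumed scoring rule)."""
    return np.asarray(responses, dtype=float).mean(axis=1)


def criterion_correlation(criterion, responses) -> float:
    """Pearson correlation between a criterion item and a subscale score."""
    criterion = np.asarray(criterion, dtype=float)
    return float(np.corrcoef(criterion, subscale_score(responses))[0, 1])


# Hypothetical data: one criterion item and a four-item subscale, 6 respondents.
criterion_item = [5, 4, 2, 1, 3, 4]
utility_items = [
    [5, 4, 5, 4],
    [4, 4, 3, 4],
    [2, 3, 2, 2],
    [1, 2, 1, 2],
    [3, 3, 4, 3],
    [4, 5, 4, 4],
]
print(round(criterion_correlation(criterion_item, utility_items), 3))
```

For ordinal Likert data a rank-based coefficient such as Spearman's rho is a common alternative.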

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Matas-Terrón, A.; Aranda, L.; Franco-Caballero, P.D.; Mena-Rodríguez, E. Attitudes towards Research Methods in Education: Development of the ATRMQ Scale. Educ. Sci. 2024, 14, 374. https://doi.org/10.3390/educsci14040374
