Abstract

In the context of L2 academic reading, teachers tend to use a variety of question formats to assess students’ reading comprehension. Studies have revealed that not only question formats but also L2 language proficiency might affect how students use metacognitive strategies. Moreover, studies have determined that students’ L2 reading comprehension is positively influenced by their metacognitive knowledge, though whether this positive influence is reflected in students’ test scores is still under debate. This study therefore used path analysis to investigate the relationships among metacognitive knowledge, L2 reading proficiency, L2 reading test scores, and question formats. A total of 108 ESL students took English reading tests in multiple-choice and short-answer question formats and completed a reading strategy survey measuring their metacognitive knowledge of three types of strategies: global, problem-solving, and supporting. Path analyses indicated that (1) in both question formats, metacognitive knowledge contributed to L2 reading test scores and (2) students’ L2 reading proficiency mediated the impact of metacognitive knowledge on their test performance, although this mediation was significant only for the multiple-choice test. Moreover, path analyses revealed that question formats might play an important role in altering the impact of metacognitive knowledge on L2 reading test scores. Implications for instruction and L2 reading assessment are discussed.

1. Introduction

In recent years, teachers have increasingly adopted a variety of question formats to assess second language (L2) learners’ reading proficiency. Among L2 reading question formats, multiple-choice questions (MCQs) and short-answer questions (SAQs) are the most frequently adopted [1,2]; however, MCQs have received more attention than SAQs because of their practical advantages in testing (e.g., ease of scoring and wide content coverage). MCQs require students to select the correct answer from a set of options that includes distractors, based on their comprehension of the text. SAQs, by contrast, require students to write short sentences based on their understanding of the text. Researchers have invested considerable effort in examining question formats and have found that students often use different strategies depending on the format of the questions they encounter (e.g., [3,4,5,6,7,8]).

While studying learners’ approaches to L2 reading texts, researchers have established the importance of metacognitive knowledge, encompassing both awareness and regulation of strategies, in facilitating L2 reading comprehension [4,9,10,11,12,13]. However, the contribution of metacognitive strategies to L2 reading test scores remains a subject of debate among researchers. Some studies have found that employing metacognitive strategies can enhance students’ awareness of their reading processes, enabling them to monitor comprehension and engage more deeply with the reasoning aspects of reading, thereby improving their performance in tests (e.g., [10,11,14,15,16,17,18,19,20,21]). Conversely, others indicated that the use of metacognitive strategies does not necessarily guarantee improved reading test performance, as various factors, such as students’ existing linguistic knowledge, need to be considered (e.g., [22,23,24,25,26,27,28,29,30,31]).

Research has highlighted the role of question format in shaping students’ reading processes (e.g., [4,5,32,33,34]). However, few studies have investigated whether different question formats elicit different levels of metacognitive knowledge, which could in turn affect L2 learners’ test performance. Meanwhile, researchers have found that learners’ L2 language proficiency may contribute significantly to reading test scores while also mediating the contribution of metacognitive strategies (e.g., [35,36,37,38]). Therefore, this study investigates the relationship between metacognitive knowledge, L2 language proficiency, and reading test scores across the MCQ and SAQ formats.

2. Literature Review

2.1. Metacognitive Knowledge

In coining the term metacognition, Flavell (1976) [39] defined it as “…the active monitoring and consequent regulation and orchestration of these processes [information processing activities] in relation to the cognitive objects or data on which they bear, usually in service of some concrete goal or objective.” (p. 232). Hacker (1998) [40] further defined metacognition as the ability to be consciously aware of, monitor, and regulate learning processes [or cognition].

In Flavell’s (1979) [2] model of metacognition, metacognitive knowledge encompasses a person’s understanding of the factors influencing their cognitive processes. It includes beliefs about the strategies necessary to achieve cognitive goals, such as comprehending reading content or solving problems. These strategies, known as metacognitive strategies, arise from learners’ awareness and concerns when tackling tasks [41]. In reading, metacognitive strategies may involve identifying context clues, monitoring comprehension, rereading for clarity, adjusting the reading pace, and considering connections to prior knowledge.

According to Brown (1987) [42], learners’ knowledge of their own cognition consists of several components that influence the implementation of metacognitive strategies. Declarative, procedural, and conditional knowledge are the primary components contributing to the effectiveness of metacognitive strategy use. Declarative knowledge involves factual information, procedural knowledge pertains to understanding how to perform tasks, and conditional knowledge encompasses knowing when and why to apply declarative and procedural knowledge. These components strengthen metacognitive knowledge and enable learners to employ strategies appropriately, particularly in challenging situations such as comprehending difficult texts [43].

Flavell’s concept of metacognition has extended its influence beyond psychology into diverse academic disciplines. In the context of reading, Grabe (2009) [44] defines metacognitive knowledge as “the knowledge and control that we have over our cognitive processes” (p. 222). Additionally, Schreiber (2005) [45] characterizes metacognitive knowledge as involving the awareness, monitoring, and regulation of strategies. In essence, metacognitive knowledge in L2 reading entails the deliberate use of strategies to monitor and regulate reading comprehension, thus enhancing the readers’ level of engagement with the texts [12,46].

2.2. Metacognitive Knowledge and L2 Reading

The importance of metacognitive knowledge in L2 reading comprehension has been well established [4,9,10,11,12,13]. However, the debate surrounding whether metacognitive knowledge can enhance students’ L2 reading test performance has persisted for decades.

In a recent study conducted by Zhang (2018) [20], structural equation modeling (SEM) was utilized to investigate the impact of reading strategies on students’ L2 reading performance. The findings indicated that the use of metacognitive strategies could effectively improve students’ L2 test scores. Similarly, Seedanont and Pookcharoen (2019) [19], Mohseni et al. (2020) [17], and Khellab et al. (2022) [16] investigated the instruction of metacognitive strategies in English as a foreign language (EFL) classes and reported similar findings. Seedanont and Pookcharoen (2019) [19] and Khellab et al. (2022) [16] examined students’ English reading test scores before and after weeks of instruction on metacognitive strategy use, while Mohseni et al. (2020) [17] compared the reading performance of three groups: one receiving training on metacognitive strategy use, another receiving training on reading awareness, and a control group. All three studies concluded that instruction in metacognitive strategies significantly increased students’ reading test scores. Furthermore, Mohseni et al. (2020) [17] discovered that training in reading awareness could also yield similar benefits in improving students’ test performance.

While some studies suggest that metacognitive knowledge can enhance students’ L2 reading scores, others present contrasting views, suggesting that the use of metacognitive strategies may have no direct impact, or at least a negligible one, on L2 reading test scores. A study by Shang (2018) [29] examining the utilization of metacognitive strategies by EFL students during the reading of academic texts found that students did indeed employ a variety of metacognitive strategies to enhance their reading comprehension. However, despite the frequent use of these strategies, the results of reading comprehension tests did not necessarily reflect their benefits. Shang observed that while several of the 27 metacognitive strategies studied might have individually contributed positively to test performance, collectively, they did not significantly impact test outcomes.

Likewise, Ghaith and El-Sanyoura (2019) [23] and Arabmofrad et al. (2021) [22] found that the effects of metacognitive strategies on L2 reading test performance were minimal. Using correlation analysis, both studies found that, overall, metacognitive strategies were not significantly positively correlated with test performance. Ghaith and El-Sanyoura (2019) [23] did observe a slight positive correlation for strategies related to identifying answers to questions, but the coefficients remained low, at 0.30 or below.

In a similar vein, Yan and Kim (2023) [31] noted that despite students demonstrating the use of metacognitive strategies during L2 reading, their test performance did not exhibit significant improvement. In their study, EFL students underwent an initial English comprehension test, followed by three months of instruction in metacognitive strategies focusing on idea mapping, connecting with background knowledge, and inference-making, before taking a post-instruction comprehension test. Additionally, students participated in interviews to describe how the strategy instruction affected their reading processes. The findings showed that while the instruction did enhance students’ reading awareness, the anticipated improvement in test performance was not observed.

While earlier studies did not explicitly focus on the impact of question formats, those that used a variety of formats to measure reading test performance suggested that learners may adapt their strategies to the format of the reading questions. Guterman (2002) [47] proposed that challenging reading questions, such as SAQs, stimulate learners’ thinking and prompt them to employ more strategies, particularly metacognitive ones, to improve reading comprehension and test scores. However, studies such as Phakiti (2003) [18] and Tang and Moore (1992) [30] report conflicting results. Phakiti observed that the use of metacognitive strategies could enhance L2 reading test scores, whereas Tang and Moore found no significant effect. Notably, neither study found differences in learners’ metacognitive strategy use when they were tasked with different question formats. As a result, the role of question formats in the relationship between metacognitive strategy use and test performance remains uncertain.

Although prior studies were unable to verify the relationship between metacognitive knowledge, L2 reading test scores, and question formats, researchers have discovered that learners’ L2 reading skills were a stronger predictor of L2 reading test scores than metacognitive strategy use (e.g., [35,38,48,49]). Schoonen et al. (1998) [37] and Kim (2016) [36] further indicated that learners’ L2 proficiency played an important role not only in L2 reading performance but also in the predictive strength of metacognitive strategy use.

Researchers have emphasized the significance of metacognitive knowledge in enhancing L2 reading comprehension. Despite this recognition, gaps in the literature remain. For example, although the relationship between metacognitive knowledge and reading comprehension has been confirmed, studies exploring the impact of metacognitive knowledge on reading test scores, particularly with regard to question formats, are sparse. Researchers have also indicated that learners’ L2 proficiency is a stronger predictor of L2 reading test scores than their use of metacognitive strategies [12,35,48,49]. Studies further suggest that L2 proficiency might help determine whether learners’ metacognitive strategy use benefits their reading test scores [36,37]; in other words, L2 proficiency may mediate the effects of metacognitive strategies on L2 reading test scores. To address these gaps, the present study investigated the roles that metacognitive knowledge and L2 language proficiency play in L2 reading assessments across question formats (i.e., MCQs and SAQs). With these objectives in mind, the following research questions are addressed:

- What is the relationship between metacognitive knowledge, L2 reading proficiency, L2 reading test performance, and question formats?

- How do question formats influence the impact of metacognitive strategies on L2 test scores?

3. Methods

3.1. Participants

This study was conducted at a Midwestern college in the U.S. Participants included 108 students, predominantly from intermediate and high-intermediate reading and writing classes in the ESL program. Of these students, 49 were male and 59 were female. Most spoke either Arabic (41%) or French (43%); the remaining 16% spoke Chinese, Spanish, Portuguese, Swahili, Russian, Thai, or Vietnamese. The students had an average of 3.5 years of English instruction. Before participating in this study, the students were required to take an English placement exam, which at this college was the Accuplacer test developed by the College Board. The Accuplacer test is a standardized assessment commonly adopted by colleges and universities in the United States to evaluate the English reading and writing skills of incoming international students. It gives schools insight into students’ English proficiency for college-level coursework and helps determine the appropriate level of English courses for placement. In the Accuplacer reading test, students read passages or paragraphs and then answer multiple-choice questions that evaluate their comprehension skills, including understanding vocabulary meaning, identifying main ideas and supporting details, making inferences, and grasping sentence relationships. According to the placement exam results, students’ reading scores ranged from 20 to 80 (possible range: 20 to 120), with a mean of 53.86 and a standard deviation of 19.77.

3.2. Instruments

Reading tests. Four English reading passages with a total of 20 comprehension questions were used in the present study. The passages and questions were adopted from Pearson Education’s reading test booklets: Get Ready to Read: A Skills-Based Reader [50] and Ready to Read More: A Skills-Based Reader [51]. The passages addressed business, history, and science topics and averaged about 300 words each. According to Microsoft Word’s readability statistics, the Flesch Reading Ease scores of the four passages were 40.5, 49.7, 60.5, and 65.3, indicating text difficulty ranging from intermediate (standard) to high-intermediate (difficult to read). Furthermore, according to The Lexile Analyzer®, the Lexile measures of the four passages ranged from 610L to 1200L, corresponding to CEFR levels A2 to B2 [52].
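The Flesch Reading Ease scores above come from a fixed formula over word, sentence, and syllable counts. The minimal Python sketch below shows that formula together with Flesch's conventional difficulty bands; the function and constant names are hypothetical, and syllable counting is deliberately left to the caller because tools (including Microsoft Word) may count syllables differently, so scores computed this way are not guaranteed to match Word's output exactly.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease from raw text counts (higher = easier to read)."""
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    words_per_sentence = words / sentences
    syllables_per_word = syllables / words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Flesch's conventional bands, checked from the easiest band downward.
FLESCH_BANDS = [
    (90.0, "very easy"),
    (80.0, "easy"),
    (70.0, "fairly easy"),
    (60.0, "standard"),
    (50.0, "fairly difficult"),
    (30.0, "difficult"),
]


def flesch_band(score: float) -> str:
    """Return the difficulty label for a Flesch Reading Ease score."""
    for lower_bound, label in FLESCH_BANDS:
        if score >= lower_bound:
            return label
    return "very difficult"  # scores below 30


if __name__ == "__main__":
    # Scores reported for the four passages in this study.
    for score in (40.5, 49.7, 60.5, 65.3):
        print(score, flesch_band(score))
```

Under these bands, 40.5 and 49.7 fall in the "difficult" range and 60.5 and 65.3 in the "standard" range, which is consistent with the description of the passages above.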

Of the 20 comprehension questions, eight were factual, seven addressed vocabulary, and five were inferential. Each question was written in both MCQ and SAQ formats. The reading texts and the comprehension questions in both formats were piloted and carefully reviewed by experienced English teachers and testing experts.

Metacognitive Awareness of Reading Strategies Inventory (MARSI-R). This study adopted Mokhtari et al.’s (2018) [53] MARSI-R, specifically designed to measure the level of metacognitive strategy utilization and the extent of metacognitive awareness among ESL students. When completing the questionnaire, students were asked to specify the frequency of metacognitive strategy use via a 5-point Likert scale: 1 (never or almost never used), 2 (rarely used), 3 (sometimes used), 4 (often used), and 5 (always used).

The MARSI-R includes 15 strategy statements divided into three categories: global strategies, problem-solving strategies, and supporting strategies. Global strategies are used intentionally to set the stage for reading and include establishing a reading purpose or connecting background knowledge with the text. Problem-solving strategies are employed when learners have difficulty comprehending the text or identifying the correct answer to a reading question; they may involve adjusting reading speed to the difficulty of the text or trying to get back on track after losing concentration. Finally, supporting strategies involve responding to the content (for instance, by underlining or circling important information in the text). Cronbach’s alpha is 0.93 for the overall scale and 0.92 (global strategies), 0.79 (problem-solving strategies), and 0.87 (supporting strategies) for the subscales.
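Cronbach's alpha summarizes internal consistency as α = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The following minimal Python sketch, with a hypothetical function name and assuming a complete respondents-by-items matrix of Likert ratings (no missing responses), shows how coefficients such as those reported for the MARSI-R and its subscales could be computed; it is an illustration of the standard formula, not the authors' analysis script.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items matrix of ratings.

    responses[i][j] is respondent i's rating on item j (e.g., 1-5 Likert).
    Assumes no missing values, at least two items, and at least two respondents.
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's total (summed) score.
    total_variance = variance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Hypothetical ratings for 5 respondents on 3 items (not study data).
    ratings = [
        [4, 5, 4],
        [2, 2, 3],
        [3, 3, 3],
        [5, 4, 5],
        [1, 2, 1],
    ]
    print(round(cronbach_alpha(ratings), 3))
```

A subscale alpha is obtained the same way by passing only the columns for that subscale's items.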

3.3. Data Collection Procedure

Because the reading passages in the MCQ and SAQ tests were content-equivalent, a counterbalanced design was used to minimize the potential threat of carryover effects. At the beginning of data collection, the 108 students were divided into two groups of approximately equivalent reading proficiency based on their performance on the college placement exam. One group then took the MCQ reading test while the other took the SAQ reading test. Approximately one month later, the group that had taken the MCQ test took the SAQ test, and vice versa. Each time the students completed a reading test, they also completed the MARSI-R to assess their metacognitive knowledge, including their strategy use and degree of awareness. Before the second reading test, the order of the reading passages and comprehension questions was randomly shuffled. In addition, the students did not have access to the answer sheet after completing the first reading test.
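The article does not state exactly how the two proficiency-matched groups were formed. One common approach, shown here only as a hypothetical sketch, is to rank students by placement score and assign them in an alternating A-B-B-A pattern, so that neither group systematically receives the higher-scoring member of each adjacent pair; group A then takes the MCQ test first and group B the SAQ test first. The function name and the data layout (a dictionary from student ID to placement score) are assumptions for illustration.

```python
def assign_counterbalanced_groups(placement_scores):
    """Split students into two proficiency-matched groups with opposite test orders.

    placement_scores: dict mapping a student ID to a placement reading score.
    Students are ranked from highest to lowest score and dealt out in an
    A-B-B-A pattern, which keeps the two groups' mean scores close.
    """
    ranked = sorted(placement_scores, key=placement_scores.get, reverse=True)
    group_a, group_b = [], []
    for rank, student in enumerate(ranked):
        # Ranks 0, 3, 4, 7, 8, ... go to A; ranks 1, 2, 5, 6, ... go to B.
        if rank % 4 in (0, 3):
            group_a.append(student)
        else:
            group_b.append(student)
    return {
        "A": {"order": ("MCQ", "SAQ"), "students": group_a},
        "B": {"order": ("SAQ", "MCQ"), "students": group_b},
    }
```

With 108 students this pattern yields two groups of 54, which is consistent with the df = 106 reported for the carryover t-tests, although the actual group sizes are not stated in the article.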

Because SAQs require students to construct short written responses, we developed a holistic scoring rubric (see Table 1). Two experienced ESL teachers served as raters and scored students’ SAQ responses using the rubric. Interrater reliability between the two raters was r = 0.92 (p < 0.001). When the two raters’ scores diverged, a third rater, also an experienced ESL teacher, joined the scoring process.

Table 1.

Scoring rubric for SAQ responses.
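The interrater reliability reported for the SAQ scoring is a Pearson correlation between the two raters' scores. The hypothetical Python sketch below computes it from two equal-length lists of rubric scores and flags responses on which the raters disagree; treating any score difference as a discrepancy is an assumption, since the article does not define the threshold that brought in the third rater.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


def discrepant_items(rater1, rater2):
    """Indices of responses the two raters scored differently.

    Hypothetical rule: any difference counts as a discrepancy and the
    response would be passed to a third rater.
    """
    return [i for i, (a, b) in enumerate(zip(rater1, rater2)) if a != b]
```

Because Pearson's r reflects consistency rather than exact agreement, two raters whose scores differ by a constant amount would still obtain r = 1; this is one reason agreement indices such as Cohen's kappa or the intraclass correlation are sometimes reported alongside it.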

To test for carryover effects, independent-samples t-tests were used to examine whether performance differed between students who took the MCQ test first and those who took it second; the same procedure was applied to the SAQ test. No statistically significant difference was found between those who took the MCQ test first and those who took it second, t(106) = 0.52, p = 0.60; the same was true for the SAQ test, t(106) = −0.63, p = 0.53. The correlation between MCQ and SAQ test scores offers another check on the counterbalanced design. If the two tests are highly correlated, students may have applied what they learned from the first test to the second; conversely, if the two tests are poorly correlated, they may not be assessing the same construct [54]. In this study, the correlation between MCQ and SAQ test scores was moderate (r = 0.58, p < 0.001). Together with the nonsignificant independent-samples t-test results, this moderate correlation suggests that the two reading tests assessed the same construct and that carryover effects were minimized.
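The carryover check relies on pooled-variance (Student's) independent-samples t-tests, with df = n1 + n2 − 2 = 106 for 108 students. The sketch below computes the t statistic and degrees of freedom using only the Python standard library; the function name is hypothetical. It stops short of the p-value, which requires the t distribution; in practice, a library routine such as SciPy's `scipy.stats.ttest_ind` (which assumes equal variances by default) returns both.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_test(group1, group2):
    """Student's independent-samples t statistic (equal variances assumed).

    Returns (t, df). The p-value would additionally require the t distribution.
    """
    n1, n2 = len(group1), len(group2)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    df = n1 + n2 - 2
    # Pooled variance: each group's sample variance weighted by its own df.
    pooled_var = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / df
    standard_error = sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean(group1) - mean(group2)) / standard_error
    return t, df
```

Here group1 would hold the MCQ scores of students who took the MCQ test first and group2 those of students who took it second; the SAQ comparison is run the same way.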

3.4. Data Analysis

To investigate the relationships among metacognitive knowledge (i.e., global, problem-solving, and supporting strategies), L2 reading proficiency (i.e., Accuplacer reading scores), and L2 reading test performance (i.e., MCQ and SAQ test scores), we used descriptive statistics, correlation analysis, and path analysis. The statistical assumptions were checked. First, histograms and normal probability-probability (P-P) plots indicated that the residuals were normally distributed. Second, scatterplots showed no obvious pattern, indicating that the residuals were homoscedastic. Third, Cook’s distance did not identify any influential outliers. Fourth, multicollinearity was checked using variance inflation factors (VIFs). For the MCQ test, the VIF values were 2.0 for global strategies, 2.4 for problem-solving strategies, 1.9 for supporting strategies, and 1.2 for Accuplacer reading scores. For the SAQ test, the VIF values were 2.7 for global strategies, 2.7 for problem-solving strategies, 2.8 for supporting strategies, and 1.2 for Accuplacer reading scores. All VIF values were well below the conventional threshold of 10, so multicollinearity was not a concern.
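For a predictor j, VIF_j = 1/(1 − R²_j), where R²_j comes from regressing predictor j on the remaining predictors; equivalently, the VIFs are the diagonal elements of the inverse of the predictors' correlation matrix. The Python sketch below takes that second route with a small Gauss-Jordan inversion so that it needs no third-party packages; the function names are hypothetical. Because the MARSI-R was completed after each test, the strategy scores entering the MCQ and SAQ analyses differ, which would explain why separate VIFs are reported for the two tests.

```python
def invert(matrix):
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    # Augment the matrix with the identity: [A | I].
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        # Choose the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular (perfect collinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    # The right half now holds the inverse: [I | A^-1].
    return [row[n:] for row in aug]


def vif_from_correlations(corr):
    """VIF for each predictor = diagonal of the inverse correlation matrix."""
    inverse = invert(corr)
    return [inverse[i][i] for i in range(len(corr))]
```

For example, two predictors correlated at r = 0.5 each have VIF = 1/(1 − 0.25) ≈ 1.33, and uncorrelated predictors all have VIF = 1.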

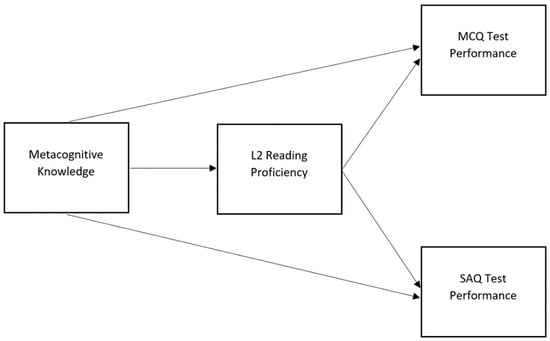

In path analysis, a series of multiple regressions are conducted simultaneously to evaluate hypothesized causal models by estimating interrelations among observed variables. Moreover, path analysis estimates the direct effects of a predictor variable on an outcome variable and also the indirect effects that are generated by an intervening variable influencing the relationship between predictor and outcome variables [55]. To address the research questions, a hypothesized path model (Figure 1) was established to investigate (1) the relationship between metacognitive knowledge and L2 reading test performance, (2) the role that L2 reading proficiency plays in this relationship, and (3) whether metacognitive strategies differentially influence the test performance in different question formats. In this hypothesized path model, metacognitive knowledge (i.e., strategies) serves as the predictor variable, while the outcome variables are MCQ and SAQ test performance. The intervening variable in the model is L2 reading proficiency.

Figure 1.

The hypothesized path model.

We conducted path analysis using Mplus version 8.10 with robust maximum likelihood estimation (MLR) to assess the hypothesized path model. The results of path analysis obtained from Mplus were cross-checked with those yielded by R.
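To illustrate how a path model separates direct, indirect, and total effects, the Python sketch below works through the simplest standardized case: one predictor X (e.g., one strategy type), one mediator M (reading proficiency), and one outcome Y (e.g., MCQ score), estimated by ordinary least squares from the three pairwise correlations. This is a simplification and not the analysis reported here, which used Mplus with MLR and included all strategy types and both outcomes; the sketch also omits standard errors, which for indirect effects are typically obtained via the delta method or bootstrapping. The function and key names are hypothetical, and the correlations in the usage line are invented for illustration.

```python
def standardized_mediation(r_xm, r_xy, r_my):
    """Direct, indirect, and total standardized effects for X -> M -> Y.

    Model: M = a*X + e1 and Y = c_prime*X + b*M + e2, with all variables
    standardized and paths estimated by OLS from the pairwise correlations.
    """
    if abs(r_xm) >= 1:
        raise ValueError("X and M must not be perfectly correlated")
    a = r_xm  # with a single predictor, the standardized slope equals r
    denom = 1 - r_xm ** 2
    c_prime = (r_xy - r_my * r_xm) / denom  # direct effect of X on Y
    b = (r_my - r_xy * r_xm) / denom        # effect of M on Y, controlling for X
    indirect = a * b                        # effect of X on Y transmitted through M
    return {"a": a, "b": b, "direct": c_prime,
            "indirect": indirect, "total": c_prime + indirect}


if __name__ == "__main__":
    # Hypothetical correlations, not values from this study.
    print(standardized_mediation(r_xm=0.30, r_xy=0.20, r_my=0.50))
```

In this single-predictor, just-identified case the total effect (direct plus indirect) always equals the zero-order correlation r_XY, which offers a quick check on the arithmetic.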

4. Results

4.1. Descriptive Statistics

Table 2 presents the descriptive statistics for students’ MCQ and SAQ test scores, the degree of metacognitive strategy use in three categories, and the Accuplacer reading scores. In terms of L2 reading test scores, students performed slightly better on the MCQ test than on the SAQ test. Moreover, the descriptive statistics indicate that the degree of metacognitive strategy use was similar in both MCQ and SAQ reading tests. Generally, global strategies were the most frequently used, followed by problem-solving strategies and supporting strategies.

Table 2.

Descriptive statistics.

4.2. Correlations

As Table 3 and Table 4 show, Accuplacer reading scores had the highest correlations with MCQ (r = 0.36) and SAQ (r = 0.52) test scores. There was also a low negative correlation between MCQ test scores and supporting strategies. These correlations suggest not only that students’ L2 reading proficiency is substantially associated with MCQ and SAQ test scores but also that students’ metacognitive strategy use may not contribute positively to their reading performance as expected.

Table 3.

Correlations between MCQ test scores, degrees of strategy use, and Accuplacer reading scores.

Table 4.

Correlations between SAQ test scores, degrees of strategy use, and Accuplacer reading scores.

4.3. Path Analysis

All the observed variables (i.e., the three types of metacognitive strategies, L2 reading proficiency, and MCQ and SAQ test performance) were entered together in the path analysis. However, drawing paths for this many observed variables in a single diagram can be confusing. Given that metacognitive knowledge, measured by global, problem-solving, and supporting strategies, serves as the predictor variable, the hypothesized path model (Figure 1) was used as a template to depict a separate path model for each type of metacognitive strategy.

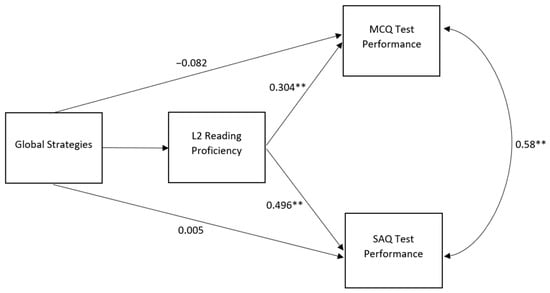

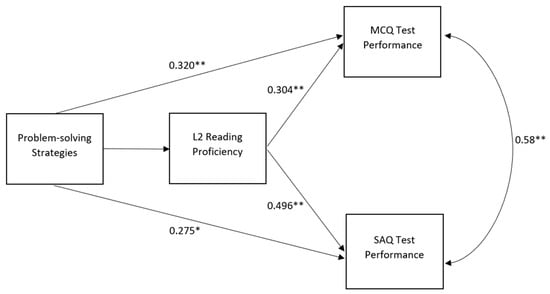

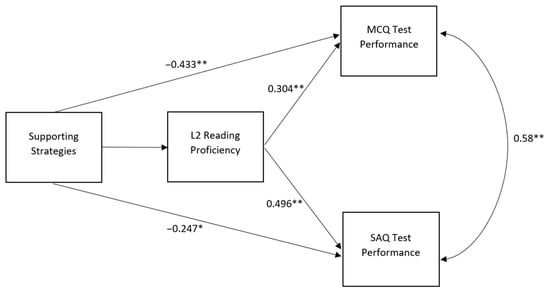

We report estimates of all the direct and indirect paths posited between the use of metacognitive strategies and MCQ and SAQ scores, with reading proficiency, as measured by Accuplacer reading scores, as the mediator. For this reason, we relied on the just-identified model, which by definition fits the data perfectly. Figure 2, Figure 3, and Figure 4 present the standardized parameter estimates for each type of strategy based on the proposed path model.

Figure 2.

The path model for global strategies. Note. ** p < 0.01.

Figure 3.

The path model for problem-solving strategies. Note. * p < 0.05; ** p < 0.01.

Figure 4.

The path model for supporting strategies. Note. * p < 0.05; ** p < 0.01.

Table 5 presents the comparison results of direct effects for global, problem-solving, and supporting strategies. According to the results, the standardized estimates (i.e., standardized path coefficients) revealed that several variables had a direct effect on outcome variables (i.e., MCQ and SAQ test performance). Following the interpretation guidelines for path analysis [56], it was observed that global strategies showed no significant effect on either MCQ or SAQ test performance.

Table 5.

Standardized estimates and standard errors of the paths in models for global, problem-solving, and supporting strategies.

Problem-solving strategies demonstrated a moderate positive effect on MCQ test performance (β = 0.32, p < 0.01) and a small, nearly moderate positive effect on SAQ test performance (β = 0.28, p < 0.05). Conversely, supporting strategies exhibited a moderate negative effect on MCQ test performance (β = −0.43, p < 0.01) and a small negative effect on SAQ test performance (β = −0.25, p < 0.05).

Additionally, L2 reading proficiency exhibited a moderate positive effect on MCQ test performance (β = 0.30, p < 0.01) and a nearly large positive effect on SAQ test performance (β = 0.49, p < 0.01) in all three strategy models.

In addition to direct effects, we also examined indirect and total effects. Table 6 and Table 7 indicate that global and problem-solving strategies had significant indirect effects on MCQ test performance via L2 reading proficiency. Regarding total effects, none of the three types of metacognitive strategies had a significant total effect on either MCQ or SAQ test performance.

Table 6.

Standardized indirect and total effects on MCQ test performance.

Table 7.

Standardized indirect and total effects on SAQ test performance.

In summary, correlation analysis revealed a significant positive correlation between L2 reading proficiency and both MCQ and SAQ test scores, and path analysis confirmed the unique and significant contribution of L2 reading proficiency to both. Correlation analysis also identified a negative correlation between supporting strategies and MCQ test scores. Path analysis further showed that both problem-solving and supporting strategies contributed to the score variance of the MCQ and SAQ tests. Finally, path analysis indicated that the effects of global and problem-solving strategies on MCQ test performance were mediated by L2 reading proficiency.

5. Discussion

Several findings emerged from the data analysis. First, L2 reading proficiency, problem-solving strategies, and supporting strategies significantly predicted L2 reading test performance. Second, the type of metacognitive strategy may determine whether metacognitive knowledge has indirect effects on reading test performance via L2 reading proficiency. Third, question formats might influence the effects of metacognitive knowledge on L2 reading test performance. This section discusses and interprets each of these major findings.

5.1. The Relationship between Metacognitive Knowledge, L2 Reading Proficiency, L2 Reading Test Performance, and Question Formats

Consistent with previous studies, path analysis revealed that students’ L2 reading proficiency was a significant predictor of their L2 reading test performance [35,37,38,48,49]. L2 learners’ performance on language tests is, unsurprisingly, strongly shaped by their L2 language proficiency [57]. Notably, L2 reading proficiency had a stronger positive effect on SAQ test scores than on MCQ test scores, as indicated by the larger β (0.49 vs. 0.30). Because SAQs do not come with prescribed options, they are usually more challenging than MCQs [58], as the descriptive statistics of this study also show. Thus, when students work on a more challenging reading test, their L2 reading proficiency might play a more important role.

Descriptive statistics showed that, for both MCQ and SAQ tests, global strategies were the most frequently used metacognitive strategies, followed by problem-solving and supporting strategies. Although global strategies were used more often than the other types, correlation and path analyses indicated no significant relationship between global strategy use and students’ test performance. Problem-solving and supporting strategies, by contrast, both contributed significantly to L2 test performance in the MCQ and SAQ formats: problem-solving strategies contributed positively, whereas supporting strategies contributed negatively.

In contrast to supporting strategies, problem-solving strategy use made a beneficial contribution to MCQ test scores according to path analysis, and a similar result was found for the SAQ test. These results suggest that problem-solving strategies were effective in promoting reading test scores regardless of question format. These findings support previous research indicating that students’ metacognitive knowledge positively affects their reading test scores [10,11,14,15,16,17,18,19,20,21]. However, these positive effects were largely attributable to problem-solving strategies [11,23,29] and were not restricted by question format. Conversely, supporting strategies were significantly and negatively correlated with MCQ test scores, and path analysis further showed that supporting strategy use contributed negatively to both MCQ and SAQ test performance.

A potential explanation for why problem-solving strategies benefited students’ test performance while global and supporting strategies did not may lie in their respective purposes. Global and supporting strategies are primarily employed for tasks such as setting reading goals and taking notes to help remember key points. In contrast, problem-solving strategies are predominantly used for regulating reading comprehension and identifying answers to questions. This difference in purpose suggests that problem-solving strategies may have a more direct impact on test performance, as they are geared toward addressing comprehension challenges and locating correct answers. Global and supporting strategies, while useful for organizing information and setting goals, may not directly improve comprehension or help students answer test questions. Therefore, their potential to enhance students’ reading test performance may be limited compared with that of problem-solving strategies.

In terms of indirect effects, path analysis revealed that the influence of global and problem-solving strategies on MCQ test performance was mediated by L2 reading proficiency. This finding aligns with Schoonen et al. (1998) [37] and Kim (2016) [36], affirming the pivotal role of students’ L2 reading proficiency in mediating the predictive strength of metacognitive strategies on L2 reading test performance. It suggests that proficient readers are likely to employ metacognitive strategies more frequently and effectively than less proficient readers to enhance their test performance. However, this mediating effect was significant only for the MCQ reading test.
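The mediation logic discussed above can be illustrated with a small path-analysis sketch. The Python code below is a minimal illustration, not the authors’ analysis or software: it assumes a simplified single-mediator model (one strategy score X, L2 reading proficiency M, and test score Y), uses simulated data rather than the study’s data, and estimates standardized paths with ordinary least squares. In such a model, the indirect effect is the product a × b of the X → M and M → Y paths, and the total effect equals the direct effect plus the indirect effect. Significance testing (e.g., bootstrapped confidence intervals for a × b) is omitted, and the helper name `mediation_paths` is hypothetical.

```python
import random
import statistics


def zscores(values):
    """Standardize a list (mean 0, population SD 1) so paths are on the beta scale."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]


def cross(u, v):
    """Sum of cross-products of two centered variables."""
    return sum(p * q for p, q in zip(u, v))


def mediation_paths(x, m, y):
    """Hypothetical helper: OLS paths for the single-mediator model X -> M -> Y.

    Returns (a, b, direct, indirect, total) as standardized coefficients.
    """
    x, m, y = zscores(x), zscores(m), zscores(y)
    sxx, smm, sxm = cross(x, x), cross(m, m), cross(x, m)
    sxy, smy = cross(x, y), cross(m, y)

    a = sxm / sxx                           # X -> M (regress M on X)
    det = sxx * smm - sxm ** 2              # determinant of the X/M cross-product matrix
    direct = (smm * sxy - sxm * smy) / det  # X -> Y controlling for M (c')
    b = (sxx * smy - sxm * sxy) / det       # M -> Y controlling for X
    total = sxy / sxx                       # regress Y on X alone (c)
    return a, b, direct, a * b, total


# Simulated data only: n = 108 mirrors the study's sample size, but the values do not.
rng = random.Random(2024)
n = 108
strategy = [rng.gauss(0, 1) for _ in range(n)]
proficiency = [0.5 * s + rng.gauss(0, 1) for s in strategy]
score = [0.2 * s + 0.6 * p + rng.gauss(0, 1) for s, p in zip(strategy, proficiency)]

a, b, direct, indirect, total = mediation_paths(strategy, proficiency, score)
print(f"a={a:.2f}  b={b:.2f}  direct={direct:.2f}  indirect={indirect:.2f}  total={total:.2f}")
```

Because all three regressions are fitted to the same standardized data, the total effect equals the direct effect plus the indirect effect up to floating-point rounding. The specific values depend entirely on the simulated coefficients and say nothing about the study’s results.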

Learners often employ various reading strategies to facilitate their understanding of a text and increase their chances of answering questions correctly. To apply these strategies effectively, however, learners must attain a level of reading proficiency that matches the difficulty of the test [38]. Because the SAQ format poses greater challenges than the MCQ format, students’ L2 reading proficiency may have been insufficient to carry the effects of metacognitive strategies through to SAQ performance, which could explain why the mediating effect did not reach significance in that format. Moreover, even when L2 reading proficiency meets the test requirements, not every metacognitive strategy is likely to have a significant positive impact on test performance; such impacts may be limited to strategies, such as problem-solving strategies, that directly address the questions while deepening reading comprehension.

5.2. The Impact of Metacognitive Knowledge on L2 Reading Test Performance across Two Different Question Formats

Path analysis showed that the influence of metacognitive knowledge may vary depending on the question format. The contributions of global, problem-solving, and supporting strategies to test performance in the MCQ and SAQ formats are illustrated in Figure 2, Figure 3 and Figure 4. As Figure 3 and Figure 4 demonstrate, problem-solving and supporting strategies differed in their impact on reading test performance across question formats. Although the contributions of problem-solving strategies to MCQ and SAQ test scores (positive β values) did not appear to differ noticeably, the difference between the contributions of supporting strategies to the test scores (negative β values) was evident. These findings contradict those of Phakiti (2003) [18] and Tang and Moore (1992) [30] but substantiate the work of Barnett (1988) [10] and Chern (1993) [11], which indicates that question format may alter the effects of metacognitive strategies on L2 reading test performance. However, a format-dependent effect does not guarantee that metacognitive strategies contribute positively to test performance.

As noted by Schoonen et al. (1998) [37], the difficulty of a reading test determines how metacognitive strategies are used and how they contribute to students’ test scores. Researchers have also observed that different question formats impose different demands, such as those on language proficiency and cognitive processing, requiring students to employ diverse strategies to meet these demands or compensate for deficiencies [8]. Further research is warranted to understand more fully how question formats, and test difficulty in particular, shape the interplay between the use of different types of metacognitive strategies and reading test performance.

6. Implications

The results of this study have several implications for the instruction and assessment of academic English reading. Although the findings indicate that problem-solving strategies are the type of metacognitive strategy most likely to improve students’ test scores, the effects of reading strategies appear to complement each other, as shown by the correlations among global, problem-solving, and supporting strategies. Without global and supporting strategies, the effectiveness of problem-solving strategies may be limited. For example, by establishing a reading goal at the beginning of a test (a global strategy) and taking notes on key information while reading a passage (a supporting strategy), students may be better able to adjust their reading speed and sustain their concentration (problem-solving strategies). Therefore, teachers should emphasize the combined use of strategies to enhance reading processes and comprehension, rather than focusing narrowly on strategies aimed at raising test scores.

When assessing students’ L2 reading ability, teachers should also consider students’ L2 reading proficiency, as proficiency may determine how effectively metacognitive strategies can be used. The findings of this study indicate that students with higher L2 reading proficiency may employ more metacognitive strategies, potentially leading to improved scores on L2 reading tests. Consequently, teachers may need to focus more on students with lower L2 reading proficiency and teach them how to use metacognitive strategies more effectively. Teachers should also consider the question format, as our findings suggest that certain types of metacognitive strategies might be more effective with particular question formats. Understanding how different types of metacognitive strategies interact with question formats is therefore crucial. Providing students with ample opportunities to apply these strategies across various formats may help ensure that question formats do not prevent students from demonstrating their L2 reading proficiency.

7. Limitations

This study has a number of limitations. Firstly, the sample size was relatively small, so studies with larger samples are needed to produce more compelling findings. Secondly, while the international student population in the U.S. largely consists of Asian students, the participants in this study were predominantly Arabic and French speakers; this may have influenced the findings and should be considered a limitation of the study. Thirdly, this study examined only how overall L2 reading proficiency contributes to test scores; future studies should therefore examine more specific L2 reading components, such as grammar, vocabulary, or topic-related background knowledge [59,60,61]. A final limitation concerns possible carryover effects. Although a counterbalanced design was used, participants may have applied what they learned from the first reading test to the second, and such carryover effects may have affected their metacognitive strategy use.

8. Conclusions

Through an investigation into the influence of metacognitive strategies and L2 reading proficiency across different question formats, this study makes significant contributions to the literature on reading assessments and strategy instruction. The findings indicate that when students employ metacognitive strategies during reading tests, the effects of these strategies on students’ test performance vary, and this variation may depend on the question format. Furthermore, our findings reveal that students’ L2 reading proficiency may mediate the relationship between metacognitive strategies and test performance, suggesting that students with higher L2 reading proficiency may utilize metacognitive strategies more effectively during test completion. These insights not only offer guidance for practitioners in teaching reading strategies but also encourage further investigation into the interplay between metacognitive strategy use, L2 reading proficiency, and question formats.

Author Contributions

Conceptualization, R.J.T.L.; methodology, R.J.T.L. and K.L.; software, R.J.T.L. and K.L.; validation, R.J.T.L. and K.L.; formal analysis, R.J.T.L. and K.L.; investigation, R.J.T.L. and K.L.; resources, R.J.T.L.; data curation, R.J.T.L.; writing—original draft preparation, R.J.T.L. and K.L.; writing—review and editing, R.J.T.L. and K.L.; visualization, R.J.T.L.; supervision, R.J.T.L. and K.L.; project administration, R.J.T.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Boards of Kirkwood Community College.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Due to ethical restrictions, access to the data is unavailable.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Alderson, J.C. Assessing Reading; Cambridge University Press: Cambridge, UK, 2000.

2. Flavell, J.H. Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry. Am. Psychol. 1979, 34, 906–911.

3. Cohen, A.D.; Upton, T.A. ‘I want to go back to the text’: Response strategies on the reading subtest of the new TOEFL®. Lang. Test. 2007, 24, 209–250.

4. Liao, R.J.T. Exploring task-completion processes in L2 reading assessments: Multiple-choice vs. short-answer questions. Read. Foreign Lang. 2021, 33, 168–190.

5. Liu, H. Does questioning strategy facilitate second language (L2) reading comprehension? The effects of comprehension measures and insights from reader perception. J. Res. Read. 2021, 44, 339–359.

6. Plakans, L.; Liao, J.T.; Wang, F. “I should summarize this whole paragraph”: Shared processes of reading and writing in iterative integrated assessment tasks. Assess. Writ. 2019, 40, 14–26.

7. Rupp, A.A.; Ferne, T.; Choi, H. How assessing reading comprehension with multiple-choice questions shapes the construct: A cognitive processing perspective. Lang. Test. 2006, 23, 441–474.

8. Weigle, S.C.; Yang, W.; Montee, M. Exploring reading processes in an academic reading test using short-answer questions. Lang. Assess. Q. 2013, 10, 28–48.

9. Ahmadi, M.R.; Ismail, H.N.; Abdullah, M.K.K. The importance of metacognitive reading strategy awareness in reading comprehension. Engl. Lang. Teach. 2013, 6, 235–244.

10. Barnett, M.A. Reading through context: How real and perceived strategy use affects L2 comprehension. Mod. Lang. J. 1988, 72, 150–162.

11. Chern, C.L. Chinese students’ word-solving strategies in reading in English. In Second Language Reading and Vocabulary Learning; Huckin, T., Haynes, M., Coady, J., Eds.; Ablex Publishing: New York, NY, USA, 1993; pp. 67–85.

12. van Gelderen, A.; Schoonen, R.; de Glopper, K.; Hulstijn, J.; Simis, A.; Snellings, P.; Stevenson, M. Linguistic knowledge, processing speed and metacognitive knowledge in first and second language reading comprehension: A componential analysis. J. Educ. Psychol. 2004, 96, 19–30.

13. Wang, J.; Spencer, K.; Xing, M. Metacognitive beliefs and strategies in learning Chinese as a foreign language. System 2009, 37, 46–56.

14. Dabarera, C.; Renandya, W.A.; Zhang, L.J. The impact of metacognitive scaffolding and monitoring on reading comprehension. System 2014, 42, 462–473.

15. Karimi, M.N. L2 multiple-documents comprehension: Exploring the contributions of L1 reading ability and strategic processing. System 2015, 52, 14–25.

16. Khellab, F.; Demirel, Ö.; Mohammadzadeh, B. Effect of teaching metacognitive reading strategies on reading comprehension of engineering students. SAGE Open 2022, 12.

17. Mohseni, F.; Seifoori, Z.; Ahangari, S. The impact of metacognitive strategy training and critical thinking awareness-raising on reading comprehension. Cogent Educ. 2020, 7, 1–22.

18. Phakiti, A. A closer look at the relationship of cognitive and metacognitive strategy use to EFL reading achievement test performance. Lang. Test. 2003, 20, 26–56.

19. Seedanont, C.; Pookcharoen, S. Fostering metacognitive reading strategies in Thai EFL classrooms: A focus on proficiency. Engl. Lang. Teach. 2019, 12, 75–86.

20. Zhang, L. Metacognitive and Cognitive Strategy Use in Reading Comprehension; Springer: Dordrecht, The Netherlands, 2018.

21. Zhang, L.; Goh, C.C.M.; Kunnan, A.J. Analysis of test takers’ metacognitive and cognitive strategy use and EFL reading test performance: A multi-sample SEM approach. Lang. Assess. Q. 2014, 11, 76–102.

22. Arabmofrad, A.; Badi, M.; Pitehnoee, M.R. The relationship among elementary English as a foreign language learners’ hemispheric dominance, metacognitive reading strategies preferences, and reading comprehension. Read. Writ. Q. 2021, 37, 413–424.

23. Ghaith, G.; El-Sanyoura, H. Reading comprehension: The mediating role of metacognitive strategies. Read. Foreign Lang. 2019, 31, 19–43.

24. Guo, Y.; Roehrig, A.D. Roles of general versus second language (L2) knowledge in L2 reading comprehension. Read. Foreign Lang. 2011, 23, 42–64.

25. Mehrdad, A.G.; Ahghar, M.R.; Ahghar, M. The effect of teaching cognitive and metacognitive strategies on EFL students’ reading comprehension across proficiency levels. Procedia Soc. Behav. Sci. 2012, 46, 3757–3763.

26. Meniado, J.C. Metacognitive reading strategies, motivation, and reading comprehension performance of Saudi EFL students. Engl. Lang. Teach. 2016, 9, 117.

27. Pei, L. Does metacognitive strategy instruction indeed improve Chinese EFL learners’ reading comprehension performance and metacognitive awareness? J. Lang. Teach. Res. 2014, 5, 1147–1152.

28. Purpura, J.E. An analysis of the relationships between test takers’ cognitive and metacognitive strategy use and second language test performance. Lang. Learn. 1997, 47, 289–325.

29. Shang, H.-F. EFL medical students’ metacognitive strategy use for hypertext reading comprehension. J. Comput. High. Educ. 2018, 30, 259–278.

30. Tang, H.N.; Moore, D.W. Effects of cognitive and metacognitive pre-reading activities on the reading comprehension of ESL learners. Educ. Psychol. 1992, 12, 315–331.

31. Yan, X.; Kim, J. The effects of schema strategy training using digital mind mapping on reading comprehension: A case study of Chinese university students in EFL context. Cogent Educ. 2023, 10, 2163139.

32. Ferrer, A.; Vidal-Abarca, E.; Serrano, M.; Gilabert, R. Impact of text availability and question format on reading comprehension processes. Contemp. Educ. Psychol. 2017, 51, 404–415.

33. Gordon, C.M.; Hanauer, D. The interaction between task and meaning construction in EFL reading comprehension tests. TESOL Q. 1995, 29, 299.

34. Primor, L.; Yeari, M.; Katzir, T. Choosing the right question: The effect of different question types on multiple text integration. Read. Writ. 2021, 34, 1539–1567.

35. Ardasheva, Y.; Tretter, T.R. Contributions of individual differences and contextual variables to reading achievement of English language learners: An empirical investigation using hierarchical linear modeling. TESOL Q. 2013, 47, 323–351.

36. Kim, H. The relationships between Korean university students’ reading attitude, reading strategy use, and reading proficiency. Read. Psychol. 2016, 37, 1162–1195.

37. Schoonen, R.; Hulstijn, J.; Bossers, B. Metacognitive and language-specific knowledge in native and foreign language reading comprehension: An empirical study among Dutch students in grades 6, 8 and 10. Lang. Learn. 1998, 48, 71–106.

38. van Gelderen, A.; Schoonen, R.; Stoel, R.D.; de Glopper, K.; Hulstijn, J. Development of adolescent reading comprehension in language 1 and language 2: A longitudinal analysis of constituent components. J. Educ. Psychol. 2007, 99, 477–491.

39. Flavell, J.H. Metacognitive aspects of problem solving. In The Nature of Intelligence; Resnick, L., Ed.; Lawrence Erlbaum: Mahwah, NJ, USA, 1976; pp. 231–236.

40. Hacker, D.J. Definitions and empirical foundations. In Metacognition in Educational Theory and Practice; Hacker, D.J., Dunlosky, J., Graesser, A.C., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 1998; pp. 15–38.

41. Bachman, L.; Palmer, A. Language Assessment in Practice: Developing Language Assessments and Justifying Their Use in the Real World; Oxford University Press: Oxford, UK, 2022.

42. Brown, A.L. Metacognition, executive control, self-regulation, and other more mysterious mechanisms. In Metacognition, Motivation, and Understanding; Weinert, F.E., Kluwe, R.H., Eds.; Psychology Press: Hillsdale, NJ, USA, 1987; pp. 65–116.

43. van Velzen, J. (Ed.) Metacognitive knowledge in theory. In Metacognitive Learning: Advancing Learning by Developing General Knowledge of the Learning Process; Springer: Berlin/Heidelberg, Germany, 2016; pp. 13–25.

44. Grabe, W. Reading in a Second Language: Moving from Theory to Practice; Cambridge University Press: Cambridge, UK, 2009.

45. Schreiber, F.J. Metacognition and self-regulation in literacy. In Metacognition in Literacy Learning: Theory, Assessment, Instruction, and Professional Development; Israel, S.E., Block, C.C., Bauserman, K.L., Kinnucan-Welsch, K., Eds.; Routledge: London, UK, 2005; pp. 215–239.

46. Anderson, N.J. Metacognition: Awareness of language learning. In Psychology for Language Learning: Insights from Research, Theory and Practice; Mercer, S., Ryan, S., Williams, M., Eds.; Palgrave Macmillan: London, UK, 2012; pp. 169–187.

47. Guterman, E. Toward dynamic assessment of reading: Applying metacognitive awareness guidance to reading assessment tasks. J. Res. Read. 2002, 25, 283–298.

48. Sarbazi, M.; Khany, R.; Shoja, L. The predictive power of vocabulary, syntax and metacognitive strategies for L2 reading comprehension. S. Afr. Linguist. Appl. Lang. Stud. 2021, 39, 244–258.

49. Zhang, L. Chinese college test takers’ individual differences and reading test performance. Percept. Mot. Ski. 2016, 122, 725–741.

50. Blanchard, K. Get Ready to Read: Test Booklet, 1st ed.; Pearson Education: London, UK, 2004.

51. Blanchard, K. Ready to Read Now: Test Booklet, 1st ed.; Pearson Education: London, UK, 2005.

52. Smith, M.; Turner, J. The Common European Framework for Reference for Languages (CEFR) and The Lexile Framework for Reading; MetaMetrics: Durham, NC, USA, 2016; Available online: https://metametricsinc.com/wp-content/uploads/2018/01/CEFR_1.pdf (accessed on 15 February 2023).

53. Mokhtari, K.; Dimitrov, D.M.; Reichard, C.A. Revising the metacognitive awareness of reading strategies inventory (MARSI) and testing for factorial invariance. Stud. Second Lang. Learn. Teach. 2018, 8, 219–246.

54. Downing, S.M. Selected-response item formats in test development. In Handbook of Test Development; Downing, S.M., Haladyna, T.M., Eds.; Lawrence Erlbaum: Mahwah, NJ, USA, 2006; pp. 287–301.

55. Hamilton, M. Path analysis. In The Sage Encyclopedia of Communication Research Methods; Allen, M., Ed.; SAGE Publications: Thousand Oaks, CA, USA, 2017; pp. 1194–1197.

56. Keith, T.Z. Multiple Regression and Beyond; Pearson Education: London, UK, 2006.

57. Kunnan, A.J. Approaches to validation in language assessment. In Validation in Language Assessment; Kunnan, A.J., Ed.; Lawrence Erlbaum: Mahwah, NJ, USA, 1998; pp. 1–16.

58. Elley, W.B.; Mangubhai, F. Multiple-choice and open-ended items in reading tests: Same or different? Stud. Educ. Eval. 1992, 18, 191–199.

59. Droop, M.; Verhoeven, L. Language proficiency and reading ability in first- and second-language learners. Read. Res. Q. 2003, 38, 78–103.

60. Shiotsu, T.; Weir, C.J. The relative significance of syntactic knowledge and vocabulary breadth in the prediction of reading comprehension test performance. Lang. Test. 2007, 24, 99–128.

61. Yamashita, J.; Shiotsu, T. Comprehension and knowledge components that predict L2 reading: A latent-trait approach. Appl. Linguist. 2017, 38, 43–67.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).