Impact of Learning Analytics Guidance on Student Self-Regulated Learning Skills, Performance, and Satisfaction: A Mixed Methods Study

Abstract

1. Introduction

2. Background

2.1. Learning Analytics

2.2. Strong vs. Minimal Teacher Guidance

2.3. Learning Analytics and Student Outcomes

2.3.1. SRL Skills

2.3.2. How to Increase Performance through Strong versus Minimal Guidance

2.3.3. LA-Based Feedback Satisfaction

2.4. Research Questions

- Do LA-based minimal guidance and LA-based strong guidance have the same impact on student performance and SRL skills?

- What are the students’ learning perceptions and satisfaction under LA-based guidance?

3. Method

3.1. Instructional Design

3.2. Type of Guidance

- The MG group followed a low-prompting approach that informed students of their grades and statistics (maximum, minimum, and average grade, and exercise duration);

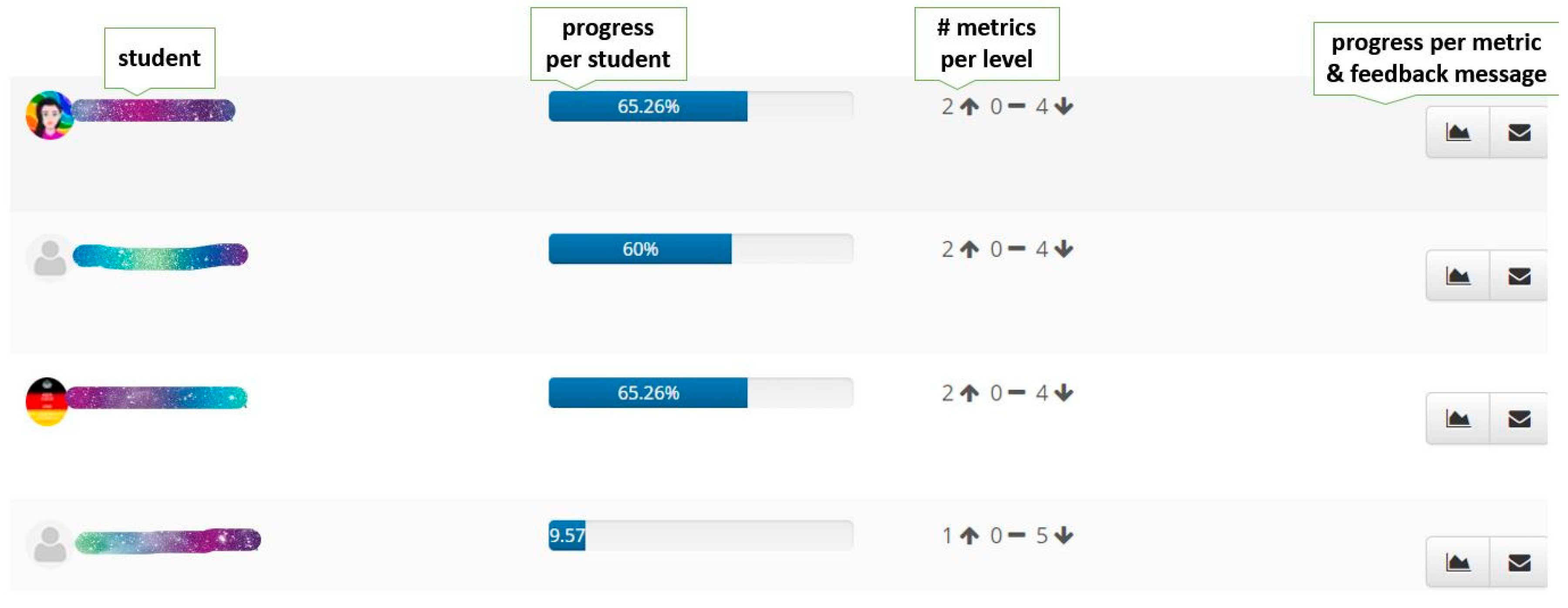

- The SG group followed a highly prompting, individualized approach that also informed students of their grades and statistics. In addition, instructors implemented a learner-centered intervention protocol of their own design, consisting of (a) a traffic-signal indicator (Red, Yellow, Green; RYG) message showing how each student was doing in terms of performance and engagement and (b) an online interview (MI) with the instructor for self-evaluation.
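
The two guidance types can be illustrated with a minimal Python sketch. It is not the instructors' actual protocol: the 0-10 grade scale, the 0-1 engagement score, the cutoff values, the use of an average for exercise duration, and the rule that the weaker indicator decides the color are all assumptions made for illustration, and the names `StudentRecord`, `mg_summary`, and `ryg_signal` are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class StudentRecord:
    student_id: str
    grade: float       # assumed 0-10 grading scale (not stated in the source)
    engagement: float  # assumed engagement score in [0, 1] (hypothetical)


def mg_summary(grades, durations_min):
    """Statistics of the kind reported to the MG group.

    Averaging the exercise duration is an assumption; the source only
    lists "exercise duration" among the reported statistics.
    """
    return {
        "max_grade": max(grades),
        "min_grade": min(grades),
        "avg_grade": mean(grades),
        "avg_duration_min": mean(durations_min),
    }


def ryg_signal(record, grade_cutoffs=(5.0, 7.0), engagement_cutoffs=(0.4, 0.7)):
    """Hypothetical RYG rule: the weaker of the two indicators sets the color."""

    def level(value, cutoffs):
        low, high = cutoffs
        if value < low:
            return 0  # red zone
        if value < high:
            return 1  # yellow zone
        return 2      # green zone

    worst = min(
        level(record.grade, grade_cutoffs),
        level(record.engagement, engagement_cutoffs),
    )
    return ("Red", "Yellow", "Green")[worst]
```

Under these assumed cutoffs, a student with a high grade but low engagement would still receive a Red message, which is one possible way to let the signal reflect both performance and engagement.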

3.3. Participants and the Context

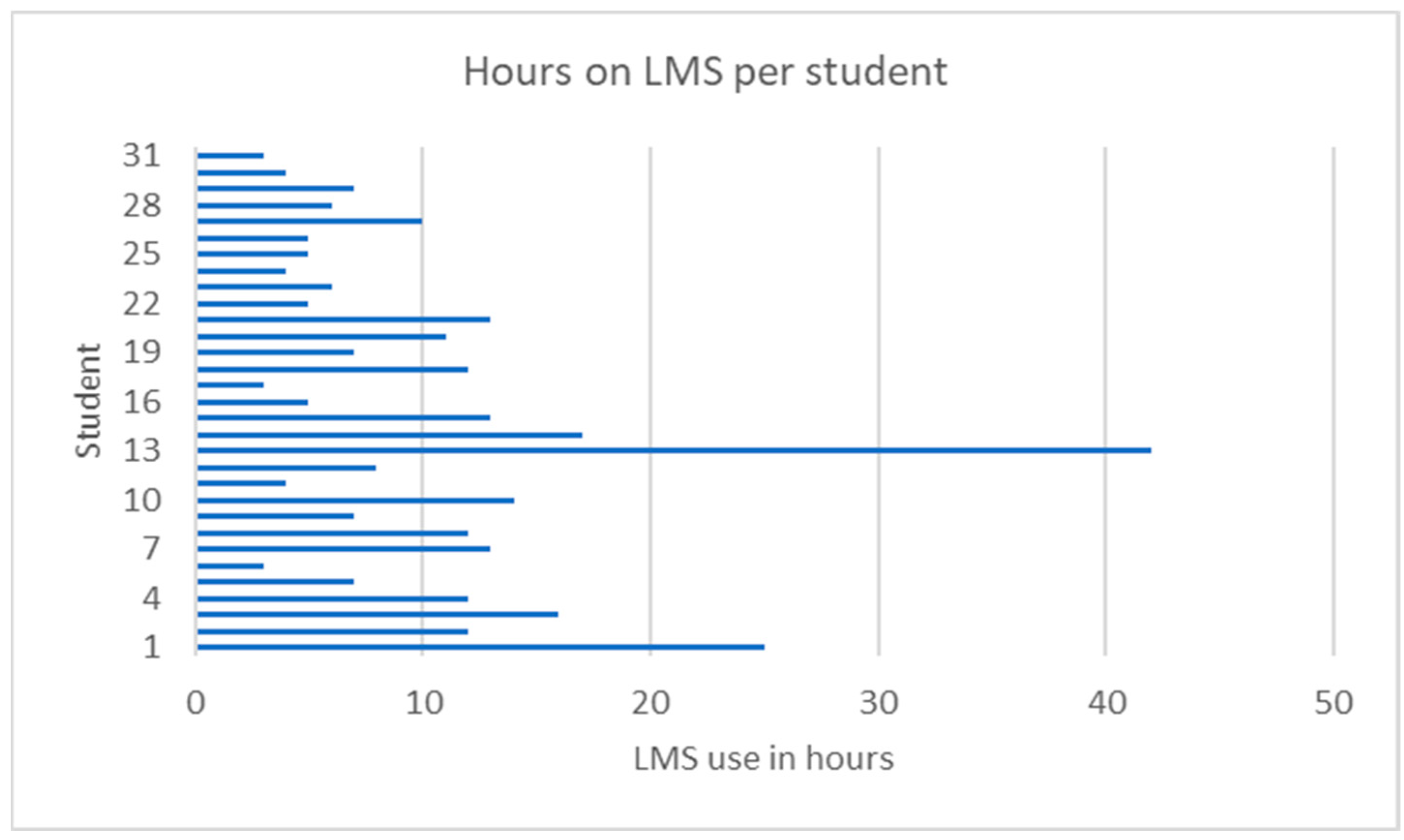

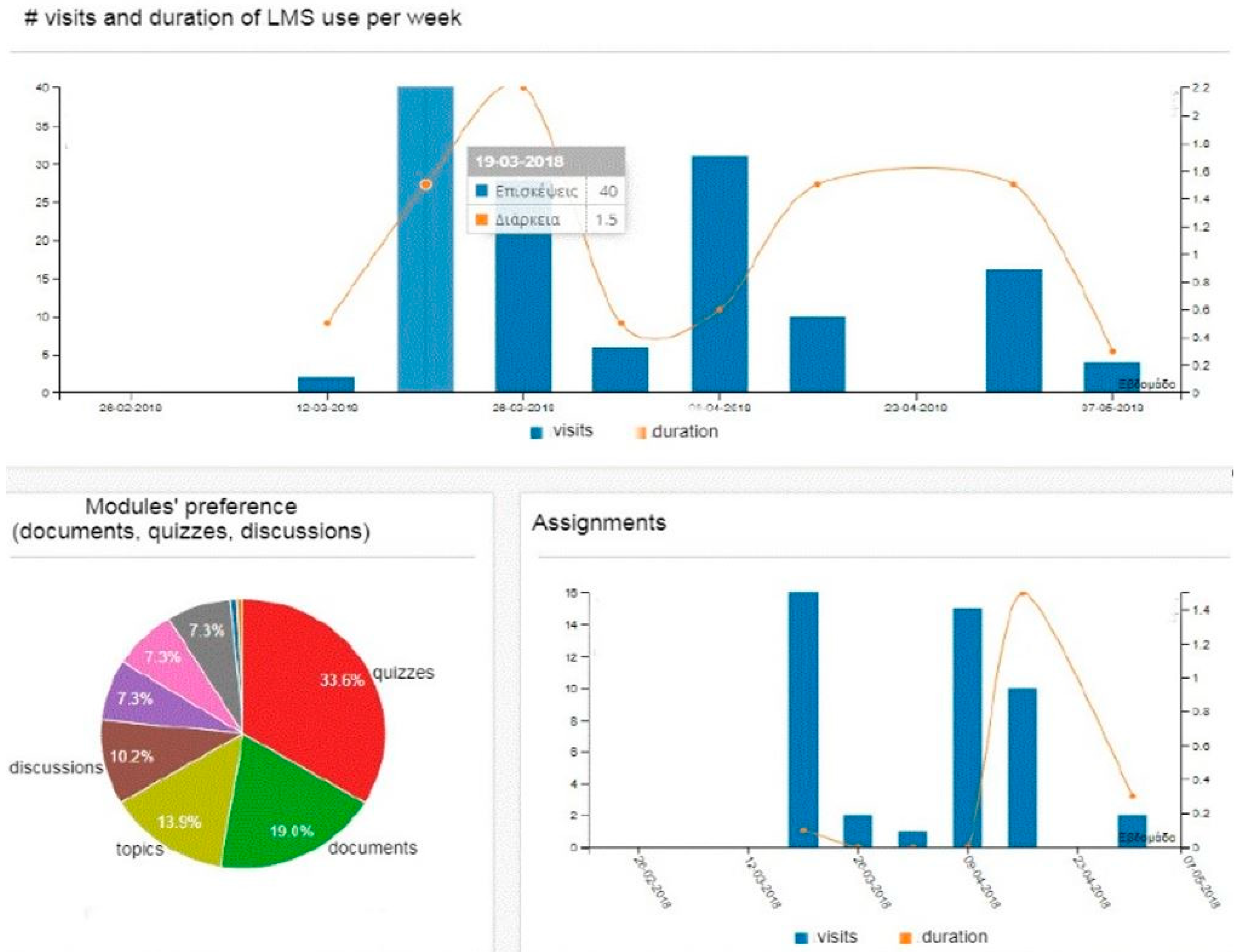

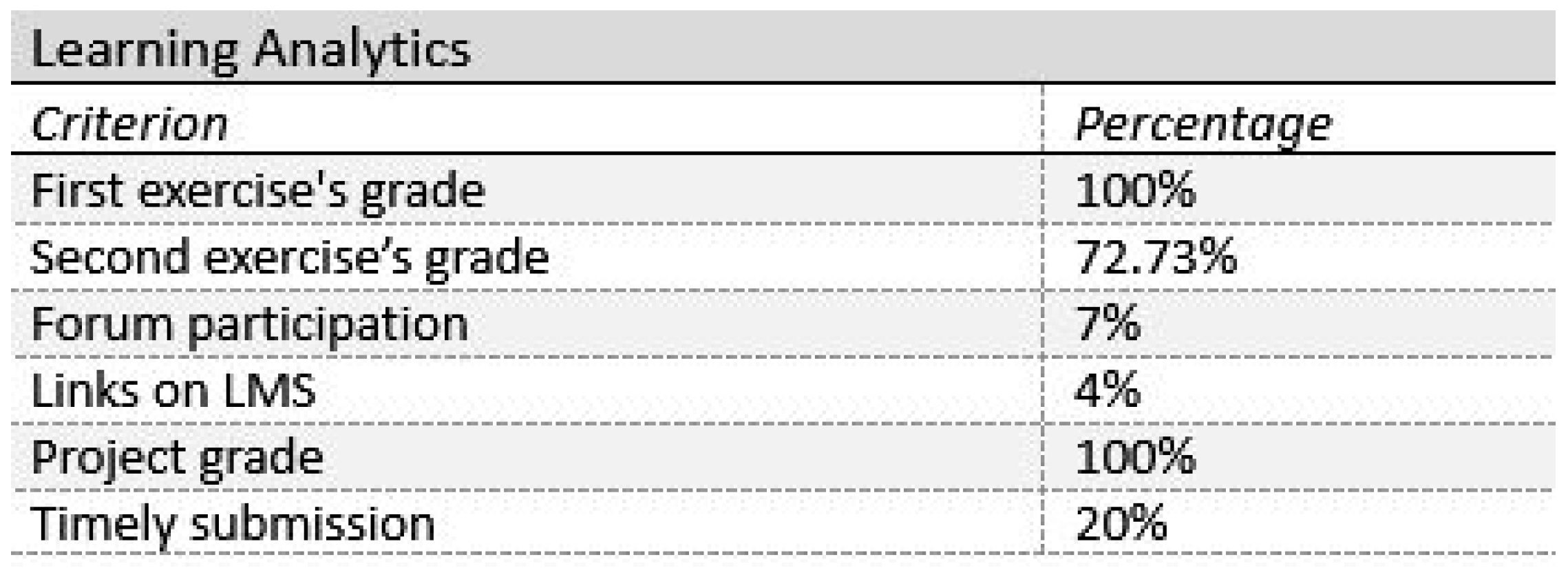

3.3.1. Learner Model

3.3.2. Learning Design

3.4. Research Design

3.4.1. Measures and Research Instruments

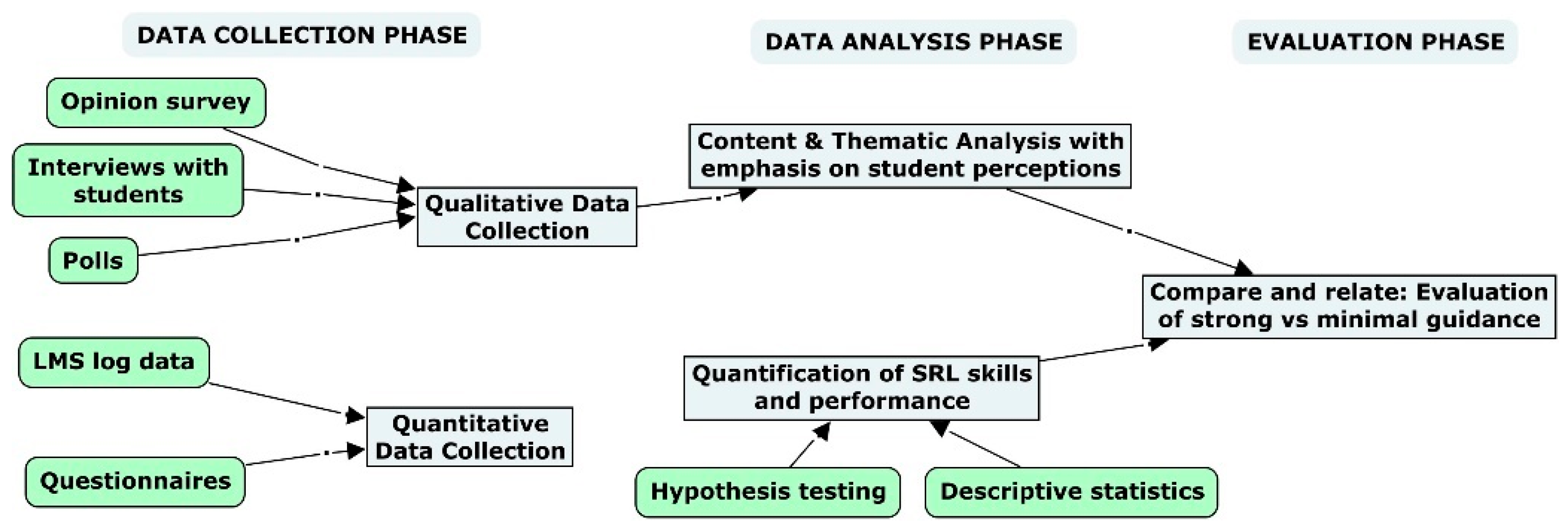

3.4.2. Data Collection and Analysis

4. Results

4.1. Research Question 1

4.2. Research Question 2

5. Discussion and Conclusions

5.1. Research Question 1

5.2. Research Question 2

5.3. Study Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

1. I think about what I need to learn before I begin a task in this online course.

2. I ask myself questions about what I will study before I begin to learn for this online course.

3. I set short-term (daily or weekly) goals as well as long-term goals (monthly or for the whole online course).

4. I have set goals to help me manage my study time for this online course.

5. I set specific goals before beginning a task in this online course.

6. I think of alternative ways to solve a problem and choose the best one in this online course.

7. At the start of a task, I think about the study strategies I will use.

8. When I study for this online course, I try to use strategies that have worked in the past.

9. I have a specific purpose for each strategy used in this online course.

10. I am aware of the strategies I use when I study for this online course.

11. I change strategies when I do not make progress while learning for this online course.

12. I periodically review to help me understand the important relationships in this online course.

13. I find myself pausing regularly to check my comprehension of this online course.

14. I ask myself questions about how well I am doing while learning something in this online course.

15. I think about what I have learned after I finish working on this online course.

16. I ask myself how well I accomplished my goals once I’m finished working on this online course.

17. After studying for this online course, I reflect on what I have learned.

18. I find myself analyzing the usefulness of the strategies after I studied for this online course.

19. I ask myself if there are other ways to do things after I finish learning for this online course.

20. After learning for this online course, I think about the study strategies I used.

21. I make good use of my study time for this online course.

22. I find it hard to adhere to a study schedule for this online course.

23. I ensure that I keep up with the weekly readings and assignments for this online course.

24. I often find that I do not spend very much time on this online course because of other activities.

25. I allocate my study time to this online course.

26. I choose the location where I will study for this online course to avoid too much distraction.

27. I find a comfortable place to study for this online course.

28. I know where I can study most efficiently for this online course.

29. I have a regular place to study in this online course.

30. When I feel bored studying for this online course, I force myself to pay attention.

31. When my mind begins to wander during a learning session for this online course, I make a special effort to keep concentrating.

32. When I begin to lose interest in this online course, I push myself even further.

33. I work hard to do well in this online course even if I don’t like what I have to do.

34. Even when the materials in this online course are dull and uninteresting, I manage to keep working until I finish.

35. Even when I feel lazy or bored while studying for this online course, I finish what I plan to do.

36. When work is difficult in this online course, I continue to work.

37. When I do not completely understand something, I ask other course members in this online course for ideas.

38. I share my problems with my classmates in this online course so that we know what we are struggling with and how to solve our problems.

39. I am persistent in getting help from the instructor of this online course.

40. When I am not sure about some material in this online course, I check with other people.

41. I communicate with my classmates to determine how I am doing in this online course.

42. When I have trouble learning, I ask for help.
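
A questionnaire of this kind is usually scored by averaging the items of each subscale. The Python sketch below shows one way to do this under assumptions that the text does not confirm: the item-to-subscale mapping is inferred from item order and the seven SRL skill names reported in the results tables, a 7-point response scale is assumed, and the negatively worded items 22 and 24 are assumed to be reverse-scored. The names `SUBSCALES`, `REVERSED_ITEMS`, and `score_srl` are hypothetical.

```python
from statistics import mean

# Assumed mapping of item numbers to subscales (inferred from item order;
# not stated in the source).
SUBSCALES = {
    "Metacognitive activities before learning": range(1, 8),
    "Metacognitive activities during learning": range(8, 15),
    "Metacognitive activities after learning": range(15, 21),
    "Time management": range(21, 26),
    "Environmental structuring": range(26, 30),
    "Persistence": range(30, 37),
    "Help seeking": range(37, 43),
}

# Negatively worded items; reverse-scoring them is an assumption.
REVERSED_ITEMS = {22, 24}

# Assumed 7-point response scale.
SCALE_MIN, SCALE_MAX = 1, 7


def score_srl(responses):
    """Return the mean score per subscale for one student.

    `responses` maps item number (1-42) to the raw answer.
    """
    scores = {}
    for name, items in SUBSCALES.items():
        values = []
        for item in items:
            raw = responses[item]
            if not SCALE_MIN <= raw <= SCALE_MAX:
                raise ValueError(f"item {item}: answer {raw} is outside the scale")
            # Reverse-score negatively worded items: 1 <-> 7, 2 <-> 6, ...
            if item in REVERSED_ITEMS:
                raw = SCALE_MIN + SCALE_MAX - raw
            values.append(raw)
        scores[name] = mean(values)
    return scores
```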

Appendix B

- LA was simple to understand.

- LA helped increase participation.

- I prefer LA use in the learning process over the traditional one.

- LA helped me perform better.

- I would like LA to be applied to other courses.

- LA was an enjoyable learning experience.

- LA had pedagogical value.

- LA was confusing/non-functional.

- There was an understandable explanation using LA.

- LA made me feel I had better control over the learning process.

- LA has boosted my confidence.

- There was anonymization when using LA.

- LA helped me be aware of the course.

- There was a sufficient interpretation of LA.

- A discussion was provided to explain the LA results.

- The LA service helped me make decisions during the course through encouragement and suggestions.

- LA maximized my motivation to engage with the course.

- LA resulted in putting more effort into the course.

- The guidance for using LA was adequate.

- I ignored the use of LA throughout the course.

- Comparing the performance of my fellow students helps me (e.g., increases competitiveness).

- Using LA helps me seek help from fellow students and teachers.

- What feelings does using LA evoke (e.g., dissatisfaction, encouragement, anxiety, confidence)?

- How did LA help or hinder the learning process?

- Feel free to comment on your experience with LA.


| Code | Questions |
|---|---|
| (Q1) | What is your LA-based learning experience as a whole? Were the analytics valuable? |
| (Q2) | Are you satisfied with your study behavior? Under which criteria did you decide to change? |
| (Q3) | What changes do you need to make to improve your performance? |
| (Q4) | Specify which interventions helped improve your performance. Explain how they influenced your behavior. |
| (Q5) | How much would you rate yourself thus far, and why? |
| (Q6) | How similar are your test scores to those of others? |
| (Q7) | What positive or negative emotions do analytics evoke in you? Have you considered dropping out of the course? |
| (Q8) | Do you have any comments to add that we have not already discussed? |

| Group | N | M | SD | t (91) | p |
|---|---|---|---|---|---|
| Experimental | 47 | 7.22 | 2.71 | 2.75 | 0.007 |
| Control | 46 | 5.28 | 3.95 | | |
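
As a rough consistency check, the test statistic can be recomputed from the summary values in the table. The sketch below assumes a pooled-variance (Student) independent-samples t-test, which matches the reported 91 degrees of freedom (47 + 46 − 2). The function name `pooled_t` is hypothetical, and the Cohen's d it returns is an added illustration that the table does not report.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t-test from summary statistics (pooled variance)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m1 - m2) / se
    cohens_d = (m1 - m2) / math.sqrt(pooled_var)  # standardized mean difference
    return t, df, cohens_d


# Experimental vs. control, values taken from the table above.
t, df, d = pooled_t(7.22, 2.71, 47, 5.28, 3.95, 46)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

The recomputed value is about 2.77, close to the reported 2.75. The small gap is what one would expect when the inputs are means and standard deviations rounded to two decimals.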

| SRL Skills | SG (N = 38), M (SD) | MG (N = 31), M (SD) | p | t (67) |
|---|---|---|---|---|
| Metacognitive activities before learning | 3.47 (1.33) | 3.19 (1.22) | 0.370 | 0.90 |
| Metacognitive activities during learning | 3.50 (1.20) | 3.29 (1.27) | 0.485 | 0.70 |
| Metacognitive activities after learning | 2.90 (1.29) | 3.06 (1.15) | 0.602 | −0.52 |
| Time management | 3.21 (1.11) | 3.22 (1.23) | 0.957 | −0.54 |
| Environmental structuring | 5.42 (1.64) | 5.09 (1.61) | 0.415 | 0.82 |
| Persistence | 3.10 (1.35) | 2.83 (1.09) | 0.379 | 0.88 |
| Help seeking | 3.34 (1.75) | 3.16 (1.45) | 0.647 | 0.46 |

| SRL Skills | SG (N = 38), M (SD) | MG (N = 31), M (SD) | ANCOVA |
|---|---|---|---|
| Metacognitive activities before learning | 5.21 (1.50) | 4.20 (1.17) | F(1, 66) = 8.375, p = 0.005 *, ηp² = 0.113 |
| Metacognitive activities during learning | 4.21 (1.31) | 4.09 (1.16) | F(1, 66) = 0.001, p = 0.975, ηp² = 0.000 |
| Metacognitive activities after learning | 4.93 (1.38) | 3.70 (1.20) | F(1, 66) = 27.398, p < 0.001 *, ηp² = 0.293 |
| Time management | 5.34 (1.12) | 4.12 (1.28) | F(1, 66) = 22.502, p < 0.001 *, ηp² = 0.254 |
| Environmental structuring | 5.35 (1.51) | 4.75 (1.54) | F(1, 66) = 2.521, p = 0.117, ηp² = 0.037 |
| Persistence | 5.21 (1.52) | 3.70 (1.21) | F(1, 66) = 22.181, p < 0.001 *, ηp² = 0.252 |
| Help seeking | 5.17 (1.43) | 3.80 (1.54) | F(1, 66) = 25.266, p < 0.001 *, ηp² = 0.277 |
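
The partial eta squared values in the ANCOVA column can be recovered from the F statistics and their degrees of freedom using the standard identity ηp² = (F · df_effect) / (F · df_effect + df_error). The short Python sketch below applies it to the reported values; the function name `partial_eta_squared` and the dictionary layout are illustrative choices, not part of the study.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, reported partial eta squared) per SRL skill, copied from the table above.
reported = {
    "Metacognitive activities before learning": (8.375, 0.113),
    "Metacognitive activities during learning": (0.001, 0.000),
    "Metacognitive activities after learning": (27.398, 0.293),
    "Time management": (22.502, 0.254),
    "Environmental structuring": (2.521, 0.037),
    "Persistence": (22.181, 0.252),
    "Help seeking": (25.266, 0.277),
}

for skill, (f_value, eta_reported) in reported.items():
    eta = partial_eta_squared(f_value, df_effect=1, df_error=66)
    print(f"{skill}: computed {eta:.3f}, reported {eta_reported:.3f}")
```

Every recomputed value agrees with the reported one to three decimals, so the F statistics and effect sizes in the table are internally consistent.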

| Survey Statements | M | SD |
|---|---|---|
| **LA quality** | | |
| LA was simple to understand | 5.0 | 1.8 |
| LA helped increase participation | 4.9 | 1.6 |
| The guidance for using LA was adequate | 5.0 | 1.6 |
| There was a sufficient interpretation of the LA | 5.0 | 1.2 |
| **Effectiveness of LA on SRL skills** | | |
| I prefer LA use in the learning process over the traditional one | 4.7 | 1.6 |
| I would like LA to be applied to other courses | 4.9 | 1.8 |
| LA resulted in putting more effort into the course | 4.4 | 1.7 |
| LA made me feel I had better control over the learning process | 4.6 | 1.9 |
| **Student satisfaction** | | |
| LA was an enjoyable learning experience | 4.9 | 1.5 |
| LA had pedagogical value | 4.5 | 1.5 |
| LA has boosted my confidence | 4.4 | 1.5 |
| LA maximized my motivation to engage in the course | 4.8 | 1.5 |
| **Motivation to use** | | |
| There was an understandable explanation using the LA | 5.0 | 1.2 |
| A discussion was conducted to explain the LA results | 5.0 | 1.6 |
| LA helped me be aware of the course | 5.2 | 1.3 |

| Theme | Sample Evidence Quotes | Freq. (n = 36) |
|---|---|---|
| Behavior change | The RYG alert awakened me, and I decided to start doing exercises (ST3) | 52% |
| Guidance | My grades were below the class average; therefore, this comparison changed my study habits (ST17) | 45% |
| Help seeking | LA services encouraged me to ask for support (ST32) | 39% |
| Motivation | LA motivated me to keep trying (ST5) | 34% |
| Involvement | LA should be tailored to my needs (ST15) | 17% |
| Time management | LA gave me study orientation, e.g., time management (ST11) | 17% |
| Persistence | LA resulted in putting more effort (ST29) | 16% |
| Stress | LA intrigued and stressed me creatively (ST26) | 14% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tzimas, D.E.; Demetriadis, S.N. Impact of Learning Analytics Guidance on Student Self-Regulated Learning Skills, Performance, and Satisfaction: A Mixed Methods Study. Educ. Sci. 2024, 14, 92. https://doi.org/10.3390/educsci14010092
