Abstract

(1) Background: Integrated standardized patient examinations (ISPEs) allow students to demonstrate competence in applying curricular content and communication skills. Digital recordings of these experiences provide an objective, permanent record that students can review to improve their performance. Although recordings have been used as a tool in physical therapy education, no studies have described the impact of reviewing recordings of ISPEs. This qualitative pilot study aimed to investigate student perceptions and learning after students reviewed their recordings of ISPEs. (2) Methods: Second-year Doctor of Physical Therapy students (n = 23) participated in the study by completing an anonymous online survey after reviewing their recordings from three ISPEs. Thematic analysis was used to identify codes and central themes in the survey data. (3) Results: Of the respondents, 95.6% found the video review process beneficial. Five themes emerged: (i) digital recordings provide an objective performance assessment, (ii) approaches to self-review vary, (iii) review provides an opportunity for growth, (iv) a holistic review is possible, and (v) students need structure and guidance in the process. (4) Conclusions: Study findings suggest that reviewing recordings of ISPEs facilitates the development of clinical skills in physical therapy students. Implementing an explicit framework for reviewing the recordings may enhance the process and further promote reflection-on-action.

1. Introduction

Integrated learning, which connects theoretical concepts from the basic sciences to the application of patient care, has been shown to result in a positive student experience [1]. Training compassionate, thoughtful, and reflective clinicians who integrate foundational knowledge to deliver quality patient care is essential to enhance the overall patient experience and improve health outcomes.

Integrated practical examinations (IPEs) present patient-based scenarios in which students must draw on their knowledge of the basic sciences and apply appropriate communication, evaluation, assessment, and treatment principles. These examinations provide a measure of the student’s competency in clinical practice [2]. Integrated standardized patient examinations (ISPEs) extend this curricular approach by using standardized patients: individuals specially trained to portray a patient with the characteristics, symptoms, and attributes of a particular illness or diagnosis. This allows ISPEs to be tailored to the level of clinical preparedness expected at the student physical therapist’s stage of learning [3]. Both IPEs and ISPEs provide opportunities for formative and summative feedback in the assessment of clinical competency.

Students also need the opportunity to assess their own performance and thereby identify areas for growth and improvement. Nearly forty years ago, Schön described the seminal model of reflective practice, which distinguishes reflection-in-action from reflection-on-action [4]. In reflection-in-action, an individual considers the elements of a situation in real time, thinking and adjusting as it unfolds. In reflection-on-action, the individual looks back on the situation and considers their performance retrospectively. Both forms of reflection increase knowledge and facilitate professional growth [4]. Larsen et al. described the importance of daily student reflection in the clinical setting in 2016 [5]. To engage in reflection-on-action, students must be able to recall or review the experience and must have time to develop an ongoing plan for growth, which is necessary for integrating knowledge and ideas [6]. Reflection has been shown to be important in the education and clinical development of physical therapy students [7]. Using digital technologies to reflect on encounters with standardized patients during training could enhance the reflective process by providing an exact, objective record and ample time to synthesize lessons from the experience.

Advances in simulation technology have given students a mechanism for reflecting on their demonstrated abilities. Simulation experiences in healthcare include the use of standardized patients, mannequins, task trainers, software simulation, and virtual reality. Video and other forms of digital recording are also categorized as simulation-based experiences. Simulation can help student clinicians think critically about their own performance and opportunities for growth. In a cross-sectional study of healthcare professionals, Aitken et al. found that participation in simulation-based training (SBT) could help foster a reflective mindset [8]. Reflection can engage student learners while facilitating deeper learning experiences [9].

In medical education, video feedback has been shown to be an effective simulation tool for improving medical students’ communication and clinical skills [10,11]. In a systematic review, Hammoud et al. recommended that self-assessment using video recordings be combined with faculty-to-student feedback to achieve optimal benefit [12]. In a randomized controlled trial of second-year medical students, Ozcakar et al. found that reviewing videotaped patient interviews enhanced feedback compared with verbal feedback alone [13]. Previous research has demonstrated the benefits of student review of patient encounters in medicine and nursing [10,11,14,15,16,17,18]. However, these studies do not address the reflective process or how students review recordings of patient encounters in integrated practical examinations. It is unclear whether videos of standardized patient encounters benefit learners in physical therapy education.

In 1985, Palmer, Henry, and Rohe found no difference in the accuracy of physical therapy students’ evaluations after the students viewed a videotape replay of their own performance of manual muscle testing and goniometry on a simulated patient [19]. In a study of 51 physical therapy students who reviewed videos of their practical examinations, no between-group differences were found in examination scores, professionalism, or self-scoring accuracy [20]. However, physical therapy students’ self-recordings of a simulated patient history and examination were found to be helpful in pre-clinical training [21]. Further, in a quasi-experimental pilot study, Ebert et al. found that physical therapy students reported an improved sense of ability to evaluate verbal and nonverbal communication, provide feedback, and refine psychomotor skills after reviewing a video of themselves performing a musculoskeletal examination and intervention skills on a peer [22]. It remains unclear whether video review facilitates the integration of curricular content, especially in ISPEs. To date, no research has explored how physical therapy students use digital recordings of their integrated practical examinations with standardized patients to develop as clinicians.

Advances in technology have shifted video capture from analog tape to digital recordings stored in cloud-based systems. Digital recordings of deidentified clinical encounters give clinicians an opportunity to view their performance and reflect on their individual practice. Such review could, in turn, help clinicians improve their patient encounters and outcomes.

While the research cited above addresses the use of video to facilitate learning outcomes, the process by which physical therapy students review digital recordings of their own encounters with a standardized patient, and how they use these recordings to reflect on clinical practice, has not been explored. The purpose of this pilot study was to explore how physical therapy students review their own digital recordings from integrated standardized patient examinations to augment learning. To answer this exploratory question, an educational case study approach combined with Kirkpatrick’s four-level evaluation model served as the theoretical framework for this pilot study. An educational case study can be an effective approach to exploring a phenomenon in an educational context [23]. Kirkpatrick’s evaluation model is pragmatic and appropriate for evaluating a teaching tool such as digital recordings [24]. Data were collected on participants’ reactions to the digital recordings and review (Level 1), self-assessment of learning (Level 2), and self-assessment of behavioral change (Level 3). A qualitative research approach was used because it allows in-depth exploration of the student learners’ experience in context [25]. Concepts generated from this pilot study are intended to provide scaffolding for future research in this area.

2. Materials and Methods

2.1. Study Design

This descriptive cross-sectional study used survey methodology to collect qualitative data via narrative responses to open-ended questions (see Table 1). Survey responses were collected, recorded, and managed online using REDCap® 13.7.11 electronic data capture tools hosted by the academic institution [26,27]. REDCap® (Research Electronic Data Capture) is a secure, web-based software platform designed to support data capture for research studies, providing (1) an intuitive interface for validated data capture; (2) audit trails for tracking data manipulation and export procedures; (3) automated export procedures for seamless data downloads to common statistical packages; and (4) procedures for data integration and interoperability with external sources. Thematic analysis was used to code the survey responses and to develop and interpret themes.

Table 1.

Survey questions asked after students reviewed their digital recordings of the ISPE.

2.2. Study Program Characteristics

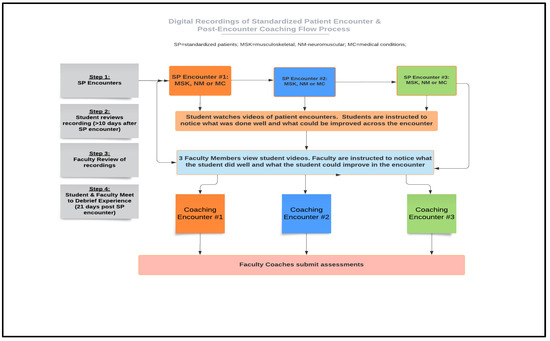

The physical therapy education program involved in this study is part of a health science university in a large metropolitan area. The entry-level program consists of seven academic semesters over two and a half years. At two points in the curriculum (the ends of semesters three and five), students complete standardized patient encounters that are digitally recorded as part of the summative assessment process (see Figure 1). The ISPE assesses students’ synthesis of content knowledge across the curriculum to determine their readiness for clinical experiences. In this instance, all students passed the ISPE and proceeded to their clinical internships in the following semesters. Each student was recorded performing an examination and interventions in a 40-min encounter with each of three standardized patients, reflecting the curriculum’s three tracks (musculoskeletal, medical conditions, and neuromuscular). After each encounter, the standardized patients (SPs) debriefed students on their communication skills. One week after the encounters were recorded, students and faculty coaches were given access to the digital recordings for review as part of the assessment process. Figure 2 shows a still image from a mock standardized patient encounter. Approximately three weeks after the encounters, each student had an individual (1:1) coaching session in which a faculty coach discussed the student’s performance during the encounters.

Figure 1.

Process diagram of an integrated standardized patient examination (ISPE) and post-exam recording review.

Figure 2.

Screen capture of a digital recording of a mock standardized patient encounter.

2.3. Subjects

The participants were second-year graduate students in an entry-level physical therapy education program who completed in-person ISPEs as part of their summative assessment. The ISPEs were recorded and stored in a cloud-based system, Simulation iQ™ (Education Management Solutions, LLC, a Collegis Company: Wayne, Pennsylvania, USA), through the university’s simulation center. Students reviewed their videos one week after their standardized patient encounters. Students had previously completed three ISPEs at the end of semester three of their first professional year (8 months earlier) and therefore had experience accessing and reviewing their digital recordings.

2.4. Data Collection

All second-year students in the entry-level physical therapy education program who were assessed in the required ISPEs were invited to participate. Because the primary and secondary investigators were core faculty members in the program, they were known to the participants. The primary investigator emailed eligible participants an overview of the study along with REDCap® links to the informed consent form and the anonymous survey (Table 1). The primary and secondary authors derived the survey questions from the Kirkpatrick framework for program evaluation [24], principles of simulation debriefing [28], and their observations of students’ simulation experiences over six years. Participants answered 10 open-ended survey questions using text fields for narrative responses. Survey responses were anonymous. The survey closed seven days after distribution. Collected data were downloaded to an Excel spreadsheet for analysis.

2.5. Data Analysis

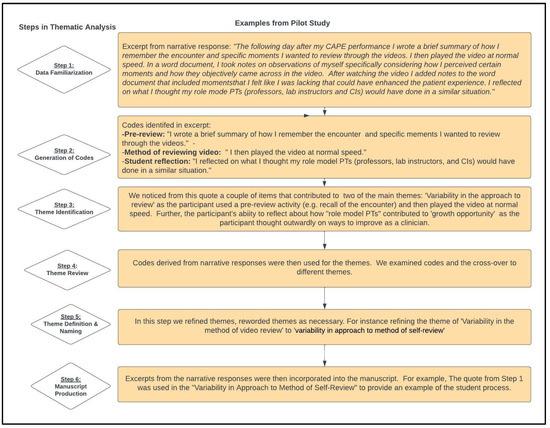

The thematic analysis approach described by Braun and Clarke served as the framework for exploring the data [29]. Kiger and Varpio’s further description of the thematic analysis steps guided the review, identification, development, and refinement of themes [30]. An outline of the six steps, with examples from this study, is provided in Figure 3. The primary and secondary investigators independently generated initial codes and then met to refine them. Next, each investigator independently searched for and developed themes. Themes were developed by reviewing, comparing, and analyzing the codes.

Figure 3.

Steps in the thematic analysis with examples from the pilot study.

2.6. Trustworthiness

To ensure trustworthiness, the authors followed the constructs outlined in the Standards for Reporting Qualitative Research [31]. Credibility was supported by the authors practicing individual and team reflexivity, through discussion, throughout the process. Confirmability was supported by declaring the study’s shortcomings and limitations and by providing details of the methodology.

3. Results

Of the 67 eligible students, 41 initiated the survey and 23 completed it (34.3% of those eligible). The remaining 18 students who initiated the survey did not respond to requests to complete it. Of the completers, 22 of 23 (95.6%) affirmed that the video review process was beneficial in their physical therapy education.
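The participation figures reported above can be reconciled with simple arithmetic. This worked breakdown uses only the counts stated in this section (67 eligible, 41 initiated, 23 completed, 22 affirming benefit); the number of students who never opened the survey and the completion rate among initiators are derived by calculation rather than reported directly.

$$
\begin{aligned}
\text{did not initiate} &= 67 - 41 = 26\\
\text{initiated but did not complete} &= 41 - 23 = 18\\
\text{completion rate (of eligible)} &= \tfrac{23}{67} \approx 0.343\\
\text{completion rate (of initiators)} &= \tfrac{23}{41} \approx 0.561\\
\text{affirmed benefit (of completers)} &= \tfrac{22}{23} \approx 0.9565
\end{aligned}
$$

The 34.3% figure is therefore calculated against all eligible students, not against those who opened the survey.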

Five qualitative themes emerged from the data that described how digital recordings were used in physical therapy education: (i) viewing digital recordings provided an objective picture of performance; (ii) participants varied in their approach to and method of self-review; (iii) the review was seen as a growth opportunity; (iv) the review provided an opportunity for holistic review of the patient encounter; and (v) there was a need for structure and guidance in the process.

3.1. Viewing Digital Recordings Provided an Objective Picture of Performance

Participants commented that the objectivity of their recordings facilitated their learning. One participant stated that the review was helpful and “Shows objectively what was done and isn’t left up for interpretation” (S3). Another participant found that the review provided reassurance about aspects of the encounter that were performed well: “It was most helpful for me to objectively see and give myself credit for moments that were successful in my encounter” (S2). Despite the stress surrounding the assessment, it was evident that post-encounter review of the digital recordings helped participants assess their encounters objectively. S21 described how the review of the digital recording “…helped me by providing me a concrete recollection of the session versus my own self-reflection of how the session went. With the stress that comes with being assessed and knowing there is an instructor right behind a wall, my memory of the session is incoherent at best”.

3.2. Variability in Approach and Method of Self-Review

A review of the narrative responses revealed no uniform approach among participants to reviewing their digital recordings. Most students reported taking notes and using a faculty-developed assessment rubric to guide their review of the recorded encounters. A few students paused the video or varied the playback speed while viewing. Approaches ranged from complex (writing preliminary reflective notes before actively reviewing the video) to simple (playing the video at various speeds). One participant described their method: “The following day after my performance I wrote a brief summary of how I remember the encounter and specific moments I wanted to review through the videos. I then played the video at normal speed. In a word document, I took notes on observations of myself specifically considering how I perceived certain moments and how they objectively came across in the video” (S2). S20 noted a similar approach: “I reviewed by jotting down my initial thoughts of the encounter without seeing video. Then I assessed each portion of my encounter by patient communication, physical therapy skills/intervention and interview”.

Another participant described a more stepwise approach: “I reviewed each section (interview, exam, intervention) and tried to make sure I hit the main points on each section. I used the assessment form we were given to understand what the main points were” (S6). Another strategy was watching the video at a faster speed. S11 wrote, “I watched it [the video] on 2× speed because I hate watching myself and wanted to get it done as fast as possible”.

Some participants described multitasking (e.g., taking notes and/or completing an assessment form) while reviewing the recordings. One participant responded that they “Used standardized assessment tools to review video, write down everything done and supplement information with rationale and findings” (S3). Participant S8 summarized their method as “Watched the video and take notes”. Lastly, S9 “watched the videos on my laptop one at a time. I took some notes while watching and then compiled the notes at the end for a better summary of the session”.

3.3. Growth Opportunity

Question 7 of the survey asked, “Did review of your patient encounters help or hinder your development as a clinician?” Nearly all participants (22/23) agreed that reviewing the recordings was helpful in their clinical development. The one respondent (S18) who was unsure whether it helped wrote, “I don’t know. It’s frustrating that being in a nerve-wracking environment such as CAPE [Center for Advancing Professional Education] clouds my mind a bit because I’m trying so hard to reach all aspects of the rubric, develop a good relationship with the patient and get all aspects of the ICF in only 40 min. It makes me more systematic in order to check the boxes versus just going where the interview and exam naturally take me”. The participant later stated, “However, I know there isn’t really another way to assess us to make sure we’re ready to go out into clinic”.

In response to whether the review of patient encounters was helpful (Q7), one participant noted, “There is no doubt in my mind how much this has helped me develop. Being able to externally see yourself helps to evaluate yourself in ways that you cannot do internally” (S5). Another response from a participant indicated, “Help, made me understand what I should work on in the future” (S8). S9 went further by responding, “This has helped my development as a clinician. It allows me to critically think about the clinician I want to be and allows me to pick out areas of improvement”. The opportunity for growth was further reflected in the comment, “Usually when I walk out of a patient encounter, I remember some of the mistakes I made, but with the video I can see all of them. And watching myself mess up ingrains it in my brain, so I won’t do that again” (S11). Additionally, S7 offered, “I think it [video review] helps even though it is hard. It’s impossible to reflect on everything you did in that scenario so being able to rewatch it to reflect is necessary to make an accurate assessment”.

3.4. An Opportunity for Holistic Review

Another theme that emerged from the narrative responses was that of holistic review. Students valued reviewing their entire standardized patient encounter, which allowed them to examine both the individual components of their performance and the encounter as a whole. For example, “I think the whole thing was helpful because I could see the whole thing and see how I did or did not connect interventions to exam and interview items” (S4). Communication skills were commonly mentioned. One participant commented, “Watching how I interact with patients and reviewing how they respond to my communication style. Learning how to adapt my language for patient understanding” (S12). Participant S2 noted, “This process was very effective in developing my skills of reflection and communication. While it [video review] was challenging and awkward to rewatch videos of something I experienced, it was rewarding to observe myself as a clinician and engage in a process for professional development”. Lastly, another student commented, “It [video review] was helpful for sure. Because it allowed me to watch how I perform an encounter from start to finish and to reflect on my performance” (S10).

3.5. Need for Structure and Guidance in the Process

All participants identified aspects of the review that were helpful and aspects that were challenging. Although participants were provided an encounter checklist (see Appendix A for a summary of items) and an assessment form before the standardized patient encounter, a theme that emerged from the narrative responses was the need for structure and guidance in reviewing the video. Suggestions differed, but participants noted a need for structure beyond the checklists and instructions provided before the encounters. For example, in response to Question 9 about enhancing the process, a participant noted, “Explicit instructions about different ways of approaching the video review could be helpful” (S7). Participant S6 added, “I think maybe a layout of how you should review your videos would be helpful”. Another participant noted the need for further direction to avoid perseverating on mistakes: “I think it might help to have a bit more of a guided outline to follow while reviewing the video. Areas of suggestion to focus on. Sometimes I think it is easy to get in your head about mistakes” (S20).

4. Discussion

This study supports the benefit of video review as a method that enables learners to connect theoretical knowledge with clinical application [32]. It also supports the utility of integrated practical examinations for assessing clinical competence and preparedness [3,33]. Previous studies that examined guided student self-evaluation using video reported mixed results, and none of them recorded students during an integrated standardized patient examination (ISPE) [20,21,22,34]. Furthermore, to our knowledge, no studies have explicitly used the Kirkpatrick framework to assess levels of learning for students reviewing digital recordings of an ISPE.

The current study provides data supporting self-reflection using digital recordings of ISPEs. Participants reported strong satisfaction with this learning modality (Kirkpatrick Level 1), with 95.6% of students finding the review process helpful. This is consistent with a similar study in which 75% of occupational therapy students found the video of their comprehensive practical exams helpful in guiding self-reflection [35]. Together, the results of both studies suggest that students consider reviewing digital recordings of their standardized patient encounters strongly beneficial to their clinical development.

Based on the qualitative themes that emerged, most participants reported gaining specific knowledge and skills (Kirkpatrick Level 2) while reviewing their digital recordings of the ISPE. The opportunity to see, from a holistic perspective, what they did well and what they could improve was evident. Participants consistently reported improved recollection of the patient encounter, review of how they communicated with the patient, development of more systematic approaches to the components of the examination, identification of areas for improvement, and learning from their mistakes (Kirkpatrick Level 2). Long-term results and patient outcomes (Kirkpatrick Level 4) were beyond the scope of this study. Nevertheless, these findings suggest that reviewing digital recordings encourages self-reflection and stimulates connections between theoretical knowledge and clinical skills, and that the review can be tailored to meet higher-order levels of learning.

Narrative responses from some participants expressed the need for structured guidance when reviewing their recordings; the open format of the review may have contributed to variability in the process. In previous studies, students were typically instructed to review the video and complete an evaluation form [34], rubric [19], behavioral criteria checklist [21], and/or questionnaire [22]. As part of the pre-briefing process in this study, students were provided with standardized checklists and assessment forms that served as a framework for grading. Participants also completed a required self-assessment and had a clinical checklist available for guidance. Even so, their approaches varied: some participants used the checklists and forms to guide their review, while others relied on strategies such as double-speed playback or pausing. Furthermore, some students wrote notes immediately after the ISPE to guide their video review. Previous research has recommended explicit instructions and rubrics to maximize student learning when video recordings of practical examinations are used [20]. A standardized viewing checklist might maximize the benefits of viewing the recordings. To our knowledge, no valid and reliable structure yet exists for reviewing digital recordings of standardized patient encounters. However, there is also evidence that a less structured process enables students to self-regulate their learning [36]. A checklist could therefore be offered to students as an option; further research should examine whether it should be optional or required. In addition, providing students with explicit instructions and an example of how an expert clinician might review a digital recording may promote the application of learning and behavior change in clinical practice (Kirkpatrick Level 3).

Students who appeared to reflect deeply after the standardized patient encounter seemed to develop their own framework and questions while viewing their digital recordings. This is consistent with a metacognitive approach, which increases self-control and mastery of knowledge and skills [37]. The depth of knowledge students gain from the review may therefore be proportional to the depth of their reflection after the encounter. Established metacognitive techniques such as note-taking, active recall, and spaced learning could further improve learning. Future studies of these techniques could guide the design of the encounter and follow-up learning activities.

Participants noted that it was initially difficult to watch themselves on video. To reduce this self-focus and deepen reflection, students could be given graded exposure to recordings over the course of the curriculum. Previous research has reported that it may be helpful for student physical therapists to use video-assisted self- and peer-assessment earlier in their training [22]. More frequent, lower-stakes use of digital recordings in a vertically integrated curriculum may diminish the initial shock, decrease focus on idiosyncrasies, and maximize focus on the integration of curricular content and standardized patient interaction. Another way to facilitate clinical growth is to have a peer review the recording alongside the student and provide additional feedback. Peer review of recordings has been found helpful in developing clinical skills [21].

The findings from this study support the use of digital recordings to augment learning from ISPEs. The recordings can give student learners an objective picture of the overall encounter from which to identify what they performed well and where they can improve. Some student comments revealed a tendency to review the video through a “negative” lens, focusing on what was not performed rather than on what was done well. A balanced viewing approach that reinforces the positive elements of the encounter while also identifying areas for growth could help maximize the value of this educational tool. Additionally, archiving digital recordings of students’ ISPEs in a longitudinal integrated curriculum may allow student development to be tracked over time.

Limitations

A main limitation of this study is that the data were collected from a small cohort at a single site, which limits the transferability of the findings. Also, while collecting data with an online survey tool is convenient, flexible, and anonymous, semi-structured focus group interviews could have yielded richer narrative responses. However, the primary and secondary authors elected not to conduct focus groups in order to minimize potential bias toward students in future classes. Focus groups conducted by someone other than the instructors could have provided further participant insights.

5. Conclusions

Physical therapy students perceive the review of digital recordings following standardized patient encounters in an integrated practical assessment as beneficial for integrating content knowledge and applying skills in a simulated patient environment. This review appears to achieve learning goals at Kirkpatrick Levels 1, 2, and 3. It allows for reflection-on-action after a simulated clinical encounter, which can facilitate improvement in future clinical encounters. Further research is needed to develop best practices for designing the learning experience, including guidelines for student review of recordings, reflection, and self-assessment. Future research should use a mixed-methods approach to further study how students use digital recordings to enhance learning from integrated standardized patient examinations. Specifically, future research in this domain should include (1) the development of a valid and reliable tool for viewing digital recordings to improve clinical practice; (2) a comparison of students who receive early, digitally assisted self-assessment of ISPEs with those without early exposure to digital review; and (3) an examination of how reviewing recordings of ISPEs changes clinical practice patterns and outcomes (Kirkpatrick Level 4).

Author Contributions

Conceptualization, A.E.K. and A.N.-C.; methodology, A.E.K., A.N.-C., S.K.-E. and A.T.; formal analysis, A.E.K. and A.N.-C.; investigation, A.E.K., A.N.-C., S.K.-E. and A.T.; data curation, A.E.K.; writing—original draft preparation, A.E.K.; writing—review and editing, A.E.K., A.N.-C., S.K.-E. and A.T.; supervision, A.N.-C., S.K.-E. and A.T.; project administration, A.E.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no direct external funding. Access to and use of REDCap were provided through grant support NIH/NCATS Colorado CTSA Grant Number UL1 TR002535.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and was considered exempt and approved by the Colorado Multiple Institutional Review Board (COMIRB) of the University of Colorado Anschutz Medical Campus (21-4882) on 24 November 2021.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy restrictions.

Acknowledgments

The authors would like to thank Subha Ramani, M.B.B.S., M.P.H., and Talia Thompson for their consultation and guidance in qualitative methodology, and Anshul Kumar for his conceptual review.

Conflicts of Interest

The authors declare no conflict of interest.

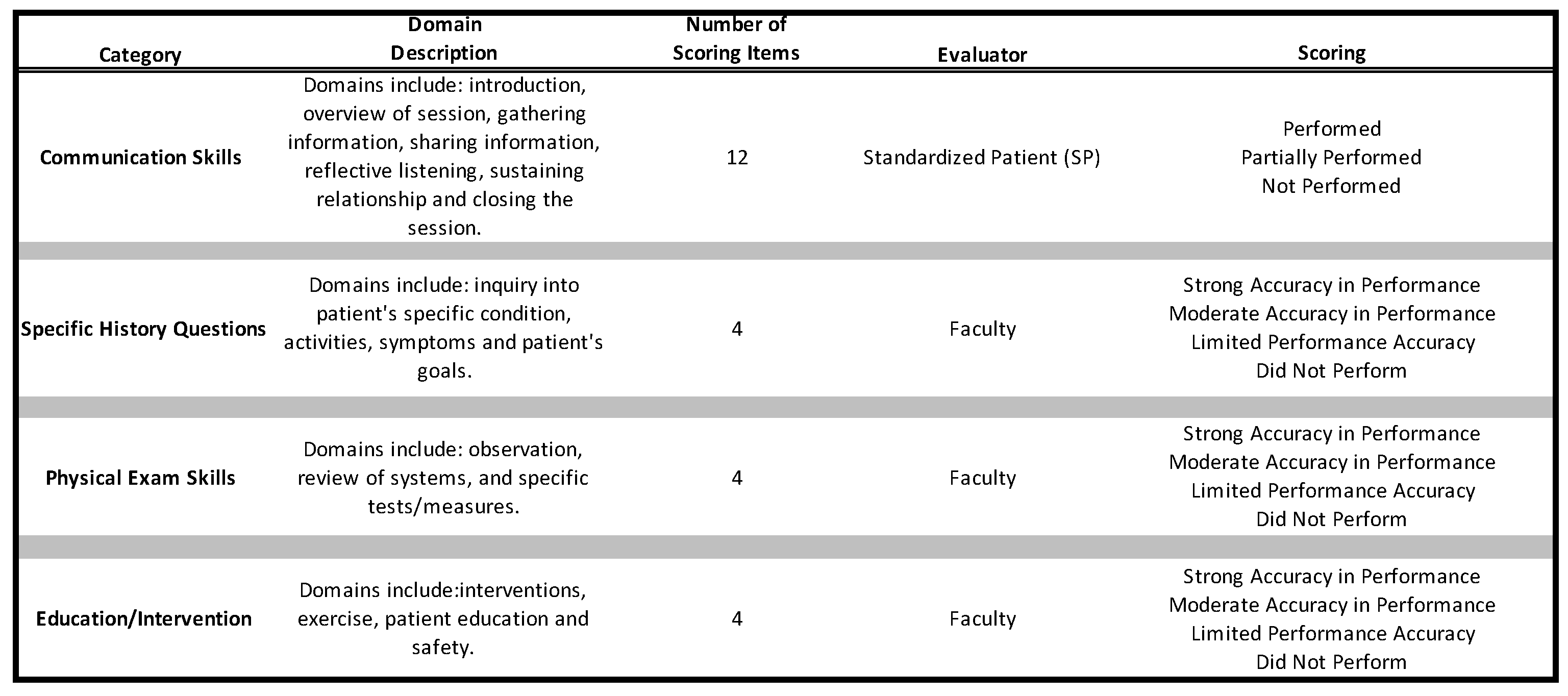

Appendix A

Summary of the Performance Assessment Rubric Used for Integrated Standardized Patient Examinations

References

- Drake, S.M.; Burns, R.C. Integrated Curriculum; Association for Supervision and Curriculum Development: Alexandria, VA, USA, 2000. [Google Scholar]

- Shafi, R.; Irshad, K.; Iqbal, M. Competency-based integrated practical examinations: Bringing relevance to basic science laboratory examinations. Med. Teach. 2010, 32, e443–e447. [Google Scholar] [CrossRef] [PubMed]

- Panzarella, K.J.; Manyon, A.T. Using the integrated standardized patient examination to assess clinical competence in physical therapist students. J. Phys. Ther. Educ. 2008, 22, 24–32. [Google Scholar] [CrossRef]

- Schön, D.A. Educating the Reflective Practitioner: Toward a New Design for Teaching and Learning in the Professions; Jossey-Bass: San Francisco, CA, USA, 1987. [Google Scholar]

- Larsen, D.P.; London, D.A.; Emke, A.R. Using reflection to influence practice: Student perceptions of daily reflection in clinical education. Perspect. Med. Educ. 2016, 5, 285–291. [Google Scholar] [CrossRef] [PubMed]

- Brauer, D.G.; Ferguson, K.J. The integrated curriculum in medical education: AMEE Guide No. 96. Med. Teach. 2015, 37, 312–322. [Google Scholar] [CrossRef]

- Ziebart, C.; MacDermid, J.C. Reflective Practice in Physical Therapy: A Scoping Review. Phys. Ther. 2019, 99, 1056–1068. [Google Scholar] [CrossRef]

- Aitken, J.A.; Torres, E.M.; Kaplan, S.; DiazGranados, D.; Su, L.; Parker, S. Influence of Simulation-based Training on Reflective Practice. BMJ Simul. Technol. Enhanc. Learn. 2021, 7, 638–640. [Google Scholar] [CrossRef]

- Mann, K.V. Reflection’s role in learning: Increasing engagement and deepening participation. Perspect. Med. Educ. 2016, 5, 259–261. [Google Scholar] [CrossRef]

- Roter, D.L.; Larson, S.; Shinitzky, H.; Chernoff, R.; Serwint, J.R.; Adamo, G.; Wissow, L. Use of an innovative video feedback technique to enhance communication skills training. Med. Educ. 2004, 38, 145–157. [Google Scholar] [CrossRef]

- Parish, S.J.; Weber, C.M.; Steiner-Grossman, P.; Milan, F.B.; Burton, W.B.; Marantz, P.R. Teaching clinical skills through videotape review: A randomized trial of group versus individual reviews. Teach. Learn. Med. 2006, 18, 92–98. [Google Scholar] [CrossRef]

- Hammoud, M.M.; Morgan, H.K.; Edwards, M.E.; Lyon, J.A.; White, C. Is video review of patient encounters an effective tool for medical student learning? A review of the literature. Adv. Med. Educ. Pract. 2012, 3, 19–30. [Google Scholar] [CrossRef]

- Ozcakar, N.; Mevsim, V.; Guldal, D.; Gunvar, T.; Yildirim, E.; Sisli, Z.; Semin, I. Is the use of videotape recording superior to verbal feedback alone in the teaching of clinical skills? BMC Public Health 2009, 9, 474. [Google Scholar] [CrossRef]

- Engel, I.M.; Resnick, P.J.; Levine, S.B. The use of programmed patients and videotape in teaching medical students to take a sexual history. J. Med. Educ. 1976, 51, 425–427. [Google Scholar] [CrossRef]

- Cassata, D.M.; Conroe, R.M.; Clements, P.W. A program for enhancing medical interviewing using video-tape feedback in the family practice residency. J. Fam. Pract. 1977, 4, 673–677. [Google Scholar]

- Hansen, M.; Oosthuizen, G.; Windsor, J.; Doherty, I.; Greig, S.; McHardy, K.; McCann, L. Enhancement of medical interns’ levels of clinical skills competence and self-confidence levels via video iPods: Pilot randomized controlled trial. J. Med. Internet Res. 2011, 13, e29. [Google Scholar] [CrossRef] [PubMed]

- Cardoso, A.F.; Moreli, L.; Braga, F.T.; Vasques, C.I.; Santos, C.B.; Carvalho, E.C. Effect of a video on developing skills in undergraduate nursing students for the management of totally implantable central venous access ports. Nurse Educ. Today 2012, 32, 709–713. [Google Scholar] [CrossRef]

- Ibrahim, A.M.; Varban, O.A.; Dimick, J.B. Novel Uses of Video to Accelerate the Surgical Learning Curve. J. Laparoendosc. Adv. Surg. Tech. A 2016, 26, 240–242. [Google Scholar] [CrossRef]

- Palmer, K.K.; Chinn, K.M.; Robinson, L.E. Using Achievement Goal Theory in Motor Skill Instruction: A Systematic Review. Sports Med. 2017, 47, 2569–2583. [Google Scholar] [CrossRef]

- Kachingwe, A.F.; Phillips, B.; Beling, J. Videotaping Practical Examinations in Physical Therapist Education: Does It Foster Student Performance, Self-Assessment, Professionalism, and Improve Instructor Grading? J. Phys. Ther. Educ. 2015, 29, 25–33. [Google Scholar] [CrossRef]

- Seif, G.A.; Brown, D. Video-recorded simulated patient interactions: Can they help develop clinical and communication skills in today’s learning environment? J. Allied Health 2013, 42, e37–e44. [Google Scholar] [PubMed]

- Ebert, J.G.; Anderson, J.R.; Taylor, L.F. Enhancing Reflective Practice of Student Physical Therapists Through Video-Assisted Self and Peer-Assessment: A Pilot Study. Int. J. Teach. Learn. Higher Educ. 2020, 32, 31–38. [Google Scholar]

- Bunton, S.A.; Sandberg, S.F. Case Study Research in Health Professions Education. Acad. Med. 2016, 91, e3. [Google Scholar] [CrossRef] [PubMed]

- Kirkpatrick, D.L.; Kirkpatrick, J.D. Evaluating Training Programs: The Four Levels, 3rd ed.; Berrett-Koehler: San Francisco, CA, USA, 2006. [Google Scholar]

- Creswell, J.W.; Poth, C.N. Qualitative Inquiry & Research Design: Choosing Among Five Approaches, 4th ed.; Sage Publications: Los Angeles, CA, USA, 2018. [Google Scholar]

- Harris, P.A.; Taylor, R.; Thielke, R.; Payne, J.; Gonzalez, N.; Conde, J.G. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J. Biomed. Inform. 2009, 42, 377–381. [Google Scholar] [CrossRef] [PubMed]

- Harris, P.A.; Taylor, R.; Minor, B.L.; Elliott, V.; Fernandez, M.; O’Neal, L.; McLeod, L.; Delacqua, G.; Delacqua, F.; Kirby, J.; et al. The REDCap consortium: Building an international community of software platform partners. J. Biomed. Inform. 2019, 95, 103208. [Google Scholar] [CrossRef]

- Sawyer, T.; Eppich, W.; Brett-Fleegler, M.; Grant, V.; Cheng, A. More Than One Way to Debrief: A Critical Review of Healthcare Simulation Debriefing Methods. Simul. Healthc. 2016, 11, 209–217. [Google Scholar] [CrossRef] [PubMed]

- Braun, V.; Clarke, V. Using Thematic Analysis in Psychology. Qual. Res. Psychol. 2006, 3, 77–101. [Google Scholar] [CrossRef]

- Kiger, M.E.; Varpio, L. Thematic analysis of qualitative data: AMEE Guide No. 131. Med. Teach. 2020, 42, 846–854. [Google Scholar] [CrossRef]

- O’Brien, B.C.; Harris, I.B.; Beckman, T.J.; Reed, D.A.; Cook, D.A. Standards for reporting qualitative research: A synthesis of recommendations. Acad. Med. 2014, 89, 1245–1251. [Google Scholar] [CrossRef]

- Huber, M.; Hutchings, P. Integrative Learning: Mapping the Terrain; Association of American Colleges and Universities: Washington, DC, USA, 2004. [Google Scholar]

- Dannemiller, L.; Basha, E.; Kriekels, W.; Nordon-Craft, A. Student perception of preparedness for clinical management of adults with lifelong disability using a standardized patient assessment. J. Phys. Ther. Educ. 2017, 31, 76–82. [Google Scholar] [CrossRef]

- Palmer, P.B.; Henry, J.N.; Rohe, D.A. Effect of Videotape Replay on the Quality and Accuracy of Student Self-Evaluation. Phys. Ther. 1985, 65, 497–501. [Google Scholar] [CrossRef]

- Giles, A.K.; Carson, N.E.; Breland, H.L.; Coker-Bolt, P.; Bowman, P.J. Use of simulated patients and reflective video analysis to assess occupational therapy students' preparedness for fieldwork. Am. J. Occup. Ther. 2014, 68 (Suppl. S2), S57–S66. [Google Scholar] [CrossRef]

- Brydges, R.; Manzone, J.; Shanks, D.; Hatala, R.; Hamstra, S.J.; Zendejas, B.; Cook, D.A. Self-regulated learning in simulation-based training: A systematic review and meta-analysis. Med. Educ. 2015, 49, 368–378. [Google Scholar] [CrossRef] [PubMed]

- Stanton, J.D.; Sebesta, A.J.; Dunlosky, J. Fostering metacognition to support student learning and performance. CBE Life Sci. Educ. 2021, 20, fe3. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).