Abstract

Using video technology to support individual and collaborative reflection in pre-service teacher education is an increasingly common practice. This paper explores the types of teaching practice challenges identified by pre-service teachers and the feedback provided by school mentors and university tutors when the VEO app was used to supervise a teaching practicum. Student teachers selected a short clip of an intervention from their practicum with which they were dissatisfied and uploaded it to the app. Each student analyzed their clip and received online feedback from their school mentor and university tutor. The objectives were to analyze the challenges in the chosen video clips, identify which mentoring feedback episodes occurred, characterize them according to their feedback strategies and analyze differences between school mentors’ and university tutors’ feedback. We conducted a descriptive and exploratory study with a sample of 12 pre-service teachers, their school mentors and their university tutors. Pre-service teachers identified communication and the learning climate as frequent challenges. University tutors used more emotional feedback strategies and a greater range of task assistance feedback than school mentors. Three types of feedback episodes were identified (collaborative, complementary and school mentor-centered episodes). Implications for teacher learning and mentoring programs are discussed.

1. Introduction

Digital environments that use video for feedback are increasingly used to promote teachers’ professional learning. Innovative resources such as VEO (Video Enhanced Observation) were often used to implement meaningful distance learning and reflective experiences during COVID-19, and especially to promote learners’ second language acquisition [1,2,3]. In this paper, we used VEO in a different way: as a complement to face-to-face oral feedback, to promote student teachers’ reflection on the challenges that they faced during their practicum at school. We analyzed the types of written feedback that school mentors and university tutors gave through the app and the differences between them. The ultimate purpose was to create useful guidelines on using written feedback effectively to promote professional development through video technology.

1.1. Using Video Technology to Support Individual and Collaborative Reflection in Pre-Service Teacher Education

Reflective practice using technological tools such as video-recorded lesson observations is essential to develop teachers’ beliefs, classroom performance and pedagogical knowledge. In [4], the authors discussed the impact of video technology in pre-service and in-service teacher preparation and concluded that the use of this digital resource not only fosters reflection and pedagogical knowledge but also helps teachers focus on their students’ learning experience. Prilop et al. [5] demonstrated that digital video-based environments elicit stronger effects than traditional face-to-face settings.

Students positively perceived video-based feedback, seeing it as more detailed, clearer and richer, and noting that it improved higher-order thinking skills and prepared them for future work. Video-based feedback also positively influenced their cognitive and social perceptions. When students negatively perceived video-based feedback, they cited accessibility problems, the linear nature of feedback and the evocation of negative emotions as adverse effects. Most studies have focused on oral feedback given while watching videos. One of the contributions of the VEO app is the possibility of providing feedback in written format. Our study specifically focuses on analyzing the types of written feedback provided when using this tool.

One challenge is how to promote a truly collaborative written reflection. Our understanding of collaborative reflection draws from the approaches of [6,7], wherein mentors, pre-service teachers and university teachers cooperate by sharing expertise, discussing subject content and reflecting on teaching practices. Through this collaboration, teacher trainees and mentors learn from one another, enhancing their ability to identify and explain their teaching practices. The willingness of mentor teachers to openly discuss their challenges is essential in conversing with pre-service teachers.

1.2. The Influence of Effective Feedback in Constructing Teachers’ Professional Knowledge

The importance of useful feedback for advancing student learning is well established (e.g., [8]). Feedback provides individuals with information about their current performance to help them improve and reach the desired standards [9]. Studies on expertise have shown that feedback is essential to improve performance [10,11,12,13].

A growing body of research (e.g., [10,11,12,13,14,15]) has confirmed the substantial effects of feedback sessions on teacher knowledge, practices, beliefs and, consequently, student achievement. However, in different domains, in [16], the authors established that receiving feedback does not necessarily lead to improved performance; that is, fostering expertise requires high-quality feedback [17].

Such feedback occasions are increasingly incorporated into pre-service teacher education [18,19,20]. Feedback sessions occur after observing a teacher’s lesson or specific skills training. They can involve either an expert who possesses more advanced knowledge than the teacher or peers who share a similar level of teaching expertise. In [5,21], the authors demonstrated that expert feedback contained more high-quality suggestions than peer feedback.

Extant studies measuring feedback quality [22,23,24] have largely been based on a set of criteria originally suggested by [25]. First, feedback comments must be appropriate for the specific context; that is, the evaluator must be able to evaluate performance based on defined criteria (Feed Up). Second, the evaluator must be able to explain their judgments and highlight specific examples [23]. Third, feedback must contain constructive suggestions, which are part of the tutoring component of feedback. These suggestions provide learners with information beyond the evaluative aspects, including task constraints, concepts, mistakes, how to proceed or teaching strategies [9]. Explaining one’s judgments can be viewed as Feed Back [8], whereas suggestions can be compared with Feed Forward. Fourth, feedback messages should contain “thought-provoking questions” [23] (p. 307) that aim to enhance individuals’ active engagement [26]. Fifth, Gielen and De Wever [24] determined that feedback messages should contain both positive and negative comments, since both can enhance future performance [16,27]. Finally, high-quality feedback should be written in the first person, with a clear structure and wording [22].

According to [28,29], adequate mentoring involves a combination of offering emotional support and task assistance. This emotional support encompasses elements such as mentor teacher accessibility, sympathetic and positive support, spending time together and offering empathy. In [30], the authors emphasized the paramount importance of emotional support from the supervising teacher, as it greatly influences the positive practicum experiences for prospective teachers. However, when they perceive a lack of this support, it adversely affects their self-confidence and attitude toward the practicum [31].

The importance of the practicum in shaping the professional identity of teachers has been widely studied [32,33]. Identity is dialogically constructed between the student teacher, the mentor and the university tutor in different learning scenarios during the practicum, at either school or the university. This is one of the reasons to explore the feedback given in this learning scenario. Some studies have explored the differences between mentors’ and university teachers’ oral feedback [34]. In our study, we aim to explore possible differences in written feedback by employing VEO.

This study has three objectives. The first is to learn about the types of challenges that pre-service teachers identify when they analyze their classroom intervention using VEO; the second is to identify which written feedback episodes occurred and to characterize each episode according to their feedback strategies; and the third is to analyze differences between school mentors’ and university tutors’ feedback.

2. Materials and Methods

2.1. Context and Participants

We developed an educational innovation project during the 2021/22 academic year by designing an inquiry-based practicum in our Faculty of Education. A Spanish pre-service teacher training degree program lasts four academic years. Out of a total of 240 ECTS (European Credit Transfer System credits), a maximum of 60 ECTS correspond to the practicum, following the Spanish Ministry of Education [35] requirements. The practicum consists of three courses, with the first practicum (6 ECTS) taking place in the second year and focusing on classroom observation and the design of a short activity. In the third year, the second practicum (14 ECTS) occurs, during which pre-service teachers are responsible for planning and delivering a lesson. In the fourth and final year, the last practicum (18 ECTS) takes place, where students reflect on their professional identity as teachers and independently implement a long-term classroom activity. This study was conducted during the first and second practicum. In these practicums, pre-service teachers spend 120 h and 240 h, respectively, in schools under the supervision of a school mentor. Additionally, they participate for 22 h in a university seminar led by a university tutor.

The participants consisted of 12 pre-service teachers (10 females and 2 males), their respective school mentors and their 5 university tutors. Seven students were from the first teaching practicum and five were from the second teaching practicum.

The inquiry-based practicum was organized into six phases that are summarized as follows: (a) analyzing the classroom context; (b) pinpointing an area of improvement; (c) learning about the area of improvement; (d) designing an evidence-informed practice; (e) implementing the practice; and (f) evaluating it. This study was carried out during the evaluation phase in which the students followed a reflection guideline to analyze the recording of their teaching practice at school using VEO.

2.2. Data-Collection Instrument

The VEO app was the instrument for collecting mentor and university tutor feedback on the pre-service teachers; we used it in a manner similar to previous research [36]. VEO is a system designed to improve learning in, from and by practice. By employing a system of “tags”, it enables the coding of analyzed practice and facilitates the inclusion of comments from various users. This feature makes both the video and the analysis performed on it accessible to specific users. The tags, apart from their value as data, aid in the analysis of the videos as students and educators identify key moments for review. Additionally, the program generates statistics on tag usage and allows the qualitative analysis of different user comments. In this way, VEO combines qualitative and quantitative data generated by all participants within the app, aiming to enhance the understanding of interaction, processes and practices for improvement.

In our study, the VEO application was used during the last phase of the practicum, wherein students evaluated their classroom practice in collaboration with their school mentor and university tutor.

We adopted a three-phase approach to implement the VEO app.

Phase 1. Training of participants involved.

To create awareness of and familiarity with the program, pre-service teachers, school mentors and university tutors underwent training. This involved the development of a tutorial as a comprehensive guide and a virtual meeting for each group. During this training, access to the program was provided to all 12 students, who were assigned the responsibility of managing the platform.

Phase 2. Analysis of the videos by the students

Each student was instructed to record as many of their classroom practice sessions as possible and choose a pedagogically significant negative moment from the recordings (5–10 min). This selected moment, referred to as a “clip”, was uploaded to the VEO platform and analyzed using a tagging system. This system, based on the classification by [37], consisted of six categories: classroom management, learning climate, communication skills, predisposition and involvement of students in learning, attention to diversity and teaching and learning strategies. After labeling the different moments in the video, students were required to justify each labeled moment. Finally, they were asked to exclusively share the fully labeled and justified video with their school mentor and university tutor.

Phase 3. Mentor and tutor analysis

Following a sequential process, the school mentor received the video via the VEO app and provided feedback on each moment labeled and justified by their student. Subsequently, the university tutor conducted a similar analysis, considering both the student’s and the mentor’s analysis. With no word limit, an asynchronous written feedback dialogue was established, allowing for extensive comments. Later, the student, their school mentor and their university tutor met to discuss and comment on this written feedback.

2.3. Data Analysis Procedure

The written data obtained for each pre-service teacher in the VEO app were divided into different episodes according to each sequence analyzed by the student. Thus, each episode started with choosing a clip, and after that, the pre-service teacher usually commented on this selection (why that moment was good or bad), and then the mentor and university tutor gave their feedback.

We established a total of 129 feedback episodes. These were qualitatively analyzed by three independent researchers over three phases. After each phase, the analysis results were thoroughly discussed until a complete consensus was reached regarding the final system of categories. In the first phase, each episode was categorized according to the type of challenge that the pre-service teachers identified. To do so, we used the categories previously proposed in [37]: classroom management, learning climate, communication skills, pupils’ predisposition towards and involvement in learning, attention to diversity and teaching and learning strategies. In the second phase, each episode was classified as complete or incomplete depending on whether all three actors were involved (pre-service teacher, mentor and university tutor). Each complete episode was then characterized according to the type of participation and feedback strategies that the mentor and university tutor provided. Finally, we analyzed the differences between mentor and university tutor feedback strategies in each episode. We followed the proposal in [28] for this analysis. Table 1 shows the different categories used.

Table 1.

Feedback strategies of school mentors and university tutors.
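The completeness criterion described above can be illustrated with a minimal sketch. It assumes that each episode is stored as the student's comment plus optional mentor and tutor comments; the `Episode` class and the `completeness` function are hypothetical names introduced for illustration and are not part of the VEO app or of our coding procedure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Episode:
    """One feedback episode (hypothetical structure, for illustration only)."""
    student_comment: str
    mentor_feedback: Optional[str] = None
    tutor_feedback: Optional[str] = None


def completeness(episode: Episode) -> str:
    """Label an episode by which supervisors responded to the student."""
    has_mentor = bool(episode.mentor_feedback)
    has_tutor = bool(episode.tutor_feedback)
    if has_mentor and has_tutor:
        return "complete"
    if has_tutor:
        return "incomplete: tutor only"
    if has_mentor:
        return "incomplete: mentor only"
    return "incomplete: no feedback"


example = Episode(
    student_comment="I don't give clear instructions at the beginning.",
    tutor_feedback="It is important to do a guided practice first.",
)
print(completeness(example))
```

Under this assumption, an episode counts as complete only when all three actors have contributed, which mirrors the rule stated in the text.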

3. Results

The following section shows the main findings of this study, including the type of challenges that the pre-service teachers identified when they analyzed their classroom intervention, the mentoring feedback episodes and the characterization of each episode according to their feedback strategies and the differences between school mentors’ and university tutors’ feedback.

3.1. Type of Challenges That Pre-Service Teachers Identified when They Analyzed Their Classroom Intervention

The episodes were analyzed as complete or incomplete according to the challenge to which they referred. Of the 129 episodes identified, 50 were classified as complete, while 79 were considered incomplete. Among the incomplete episodes, 36 (45.6%) lacked feedback from both university tutors and school mentors. In 34 (43.0%) episodes, the students received feedback solely from the university tutors, while in 9 (11.4%) episodes, feedback was provided solely by the school mentors.
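The shares in this breakdown can be recomputed directly from the episode counts. The short sketch below uses only the counts reported in the text; the variable and function names are our own, and one-decimal rounding is an assumption, so a figure may differ by 0.1 from a value obtained with another rounding convention.

```python
# Episode counts as reported in the text.
counts = {
    "complete": 50,
    "no feedback": 36,
    "tutor only": 34,
    "mentor only": 9,
}

total = sum(counts.values())
incomplete = total - counts["complete"]


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, to one decimal place."""
    return round(100 * part / whole, 1)


print(f"Total episodes: {total}, incomplete: {incomplete}")
for label in ("no feedback", "tutor only", "mentor only"):
    print(f"{label}: {pct(counts[label], incomplete)}% of incomplete episodes")
print(f"complete: {pct(counts['complete'], total)}% of all episodes")
```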

Table 2 shows the types of challenges represented in the complete and incomplete episodes. Across both, communication with students in the classroom was the most frequent challenge (51.2%); it includes clearly communicating objectives and providing clear instructions. The second most frequent challenge was creating a good learning climate or atmosphere in the classroom (19.4%), followed by classroom management strategies (18.6%), which focus on developing a well-structured session or managing student behavior. Less frequent challenges related to promoting pupils’ predisposition towards and involvement in learning (7.8%). Finally, insufficient teaching and learning strategies and attention to diversity (1.5% each) were also cited.

Table 2.

Challenges in the complete and incomplete episodes.

3.2. Identifying and Characterizing Mentoring Episodes

Considering the complete feedback episodes, i.e., those in which both mentors and university tutors participated, three types of episodes were identified according to the type of feedback provided, as described below.

3.2.1. Collaborative Episode to Facilitate Comprehension and/or Seek Solutions

Of all the complete episodes (n = 50), 48% (n = 24) were categorized as “collaborative”. In this type of episode, after the pre-service teacher explained the moment chosen in the clip, the mentor and the tutor participated by agreeing with the student’s evaluative statement. After showing agreement, they gave information (GI) or advice/instructions (GA).

To illustrate this episode, here is an example of how the two added information (GI) after the university tutor agreed with what the school mentor told the pre-service teacher:

Pre-service teacher 04: I didn’t use technologies to facilitate student participation and learning. I think it would have been very enriching to add the support of tablets for students to search for information online.

School mentor 04: The time available to do the activity made it difficult and limited and conditioned the use of different media.

University tutor 01: I totally agree. The instructions were clear, and that was all the time you had.

In this other example, we can see how the school mentor and university tutor showed agreement (I agree and Indeed) and then gave guidelines on how to deal with the problem detected:

Pre-service teacher 08: In this case, the fact that a specific person is reading and changing creates a moment of disconnection, and the children who do not read stop listening.

School mentor 08: I agree. This is a problem that I think was because you didn’t have the projector, since with only the story it is very normal for the other children at this age to disconnect.

University tutor 02: Indeed, I also think that if all the students could see the story, it would be easier for them to understand it and make hypotheses. On the one hand, it seems to me that the letter is read a bit fast, and it would be better to do it more slowly and to “dramatize” it a bit. On the other hand, when you ask what PD means, there is confusion, so maybe it would be a good idea to write the letters on the board.

3.2.2. Complementary Feedback

A second type of episode identified was when the university tutor participated using the same feedback strategy as the mentor, who previously participated, in an attempt to complement what the mentor emphasized. This type of episode appeared on ten occasions (20%).

These episodes were equally divided among five types of feedback: attentive behavior (AB), expressing positive opinions (PO), showing agreement (SA), giving advice (GA) and giving information (GI).

In this example, we can see how the mentor and tutor advised the student how they could improve their teaching practice:

Pre-service teacher 13: I don’t give clear instructions at the beginning, and once they start, I realize that most students don’t know what to do. I try to get their attention, but it’s hard for me.

School mentor 13: You have to be very clear about the task you are asking students to do and how you explain it so that the students understand it. It would be a great idea to look at how other classroom teachers do it.

University tutor 04: It is important to do a guided practice before moving on to the phase where students work autonomously.

In the next example, the school mentor showed agreement, and the university tutor did exactly the same:

Pre-service teacher 12: I present the material too quickly and briefly, focusing too much on the rules rather than on what is happening at that moment.

School mentor 12: I agree PS-T12!

University tutor 03: I completely agree!

3.2.3. School Mentor-Centered Episode to Facilitate Comprehension and/or Seek Solutions

The third type of feedback episode (8%, n = 4) had the following structure: the school mentor gave advice/instructions (GA) or gave information (GI) to help the pre-service teacher understand the assessment better, after which the university tutor only showed agreement (SA). Thus, the university tutor played a more passive role in providing information to help the pre-service teacher understand the situation or to propose new strategies.

This is an example of this type of episode:

Pre-service teacher 01: I did not give the students enough time to finish the triptych and do the assessment properly.

School mentor 01: It would be great if the questions were more open-ended and not only aimed at how to use this element.

University tutor 01: I agree with SM01.

A common characteristic of all three types of episodes was that no disagreement between the school mentor and the university tutor was ever expressed.

Although these three episodes were the most frequent, there were 12 that we classified as “Other episodes” (24%) because they follow very diverse patterns that do not fit in with the three most frequent ones. Eight of these were initiated by a mentor’s emotional feedback and four by task feedback with a variety of different specific strategies.
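To make the distinction between the episode types concrete, the following sketch encodes one possible reading of the three patterns as rules over the strategy codes (SA, GA, GI and so on) used in each turn. The rules are our simplification for illustration, not the coding scheme applied by the researchers, and the function name `episode_type` is hypothetical. The sketch also checks that the reported frequencies add up to the 50 complete episodes.

```python
TASK_HELP = {"GA", "GI"}  # giving advice/instructions, giving information


def episode_type(mentor: set, tutor: set) -> str:
    """Classify a complete episode from the strategy codes in each turn.

    Simplified, assumed rules:
    - school mentor-centered: mentor gives GA/GI, tutor only shows agreement
    - complementary: tutor repeats the mentor's single strategy
    - collaborative: agreement is shown and both turns give GA or GI
    """
    if tutor == {"SA"} and mentor and mentor <= TASK_HELP:
        return "school mentor-centered"
    if mentor == tutor and len(mentor) == 1:
        return "complementary"
    if "SA" in (mentor | tutor) and mentor & TASK_HELP and tutor & TASK_HELP:
        return "collaborative"
    return "other"


# Strategy codes read off the quoted examples (our interpretation).
print(episode_type({"SA", "GI"}, {"SA", "GA"}))  # PS-T08 example
print(episode_type({"GA"}, {"GA"}))              # PS-T13 example
print(episode_type({"GA"}, {"SA"}))              # PS-T01 example

# Frequencies reported in this section.
reported = {
    "collaborative": 24,
    "complementary": 10,
    "school mentor-centered": 4,
    "other": 12,
}
shares = {name: round(100 * n / 50) for name, n in reported.items()}
print(sum(reported.values()), shares)
```

Real episodes are less tidy than these rules suggest, which is one reason a residual “Other episodes” category was needed.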

3.3. Differences between School Mentors’ and University Tutors’ Feedback

First, we present the feedback strategies according to whether they were aimed at emotional support or task support. We analyzed a total of 194 feedback strategies, with each turn in the VEO app considered one feedback strategy. Of these, 93 were emotional feedback strategies and 101 were task support strategies.

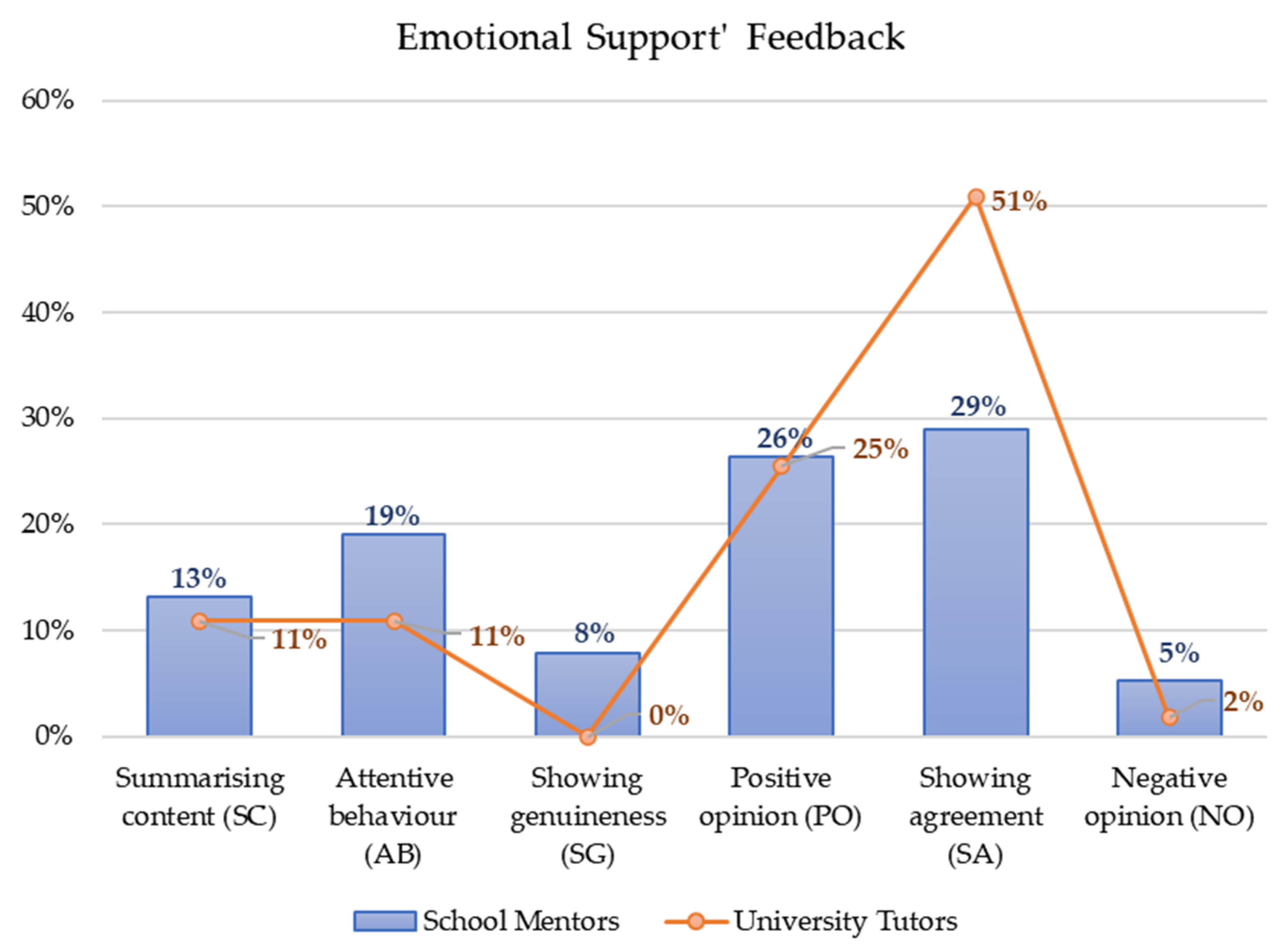

University tutors used more emotional strategies (55, 59%) than school mentors (38, 41%). In both cases, the most frequent were showing agreement (SA) and giving a positive opinion (PO). The other strategies were used differently by the two groups. Specifically, the university tutors did not show genuineness and expressed almost no negative opinions about students’ comments.

The strategies used to give emotional support are shown in Figure 1.

Figure 1.

Emotional support from school mentors’ and university tutors’ feedback.
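The totals and shares reported for emotional support can be checked with a few lines of arithmetic. The sketch below uses only the counts given in the text; the variable names are ours, and rounding to whole percentages is an assumption that matches the figures reported.

```python
# Counts reported in Section 3.3.
emotional_by_role = {"university tutors": 55, "school mentors": 38}
task_total = 101

emotional_total = sum(emotional_by_role.values())
all_strategies = emotional_total + task_total

# Share of emotional strategies contributed by each role, in whole percent.
emotional_share = {
    role: round(100 * n / emotional_total)
    for role, n in emotional_by_role.items()
}

for role, share in emotional_share.items():
    print(f"{role}: {emotional_by_role[role]} of {emotional_total} ({share}%)")
print(f"All strategies analyzed: {all_strategies}")
```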

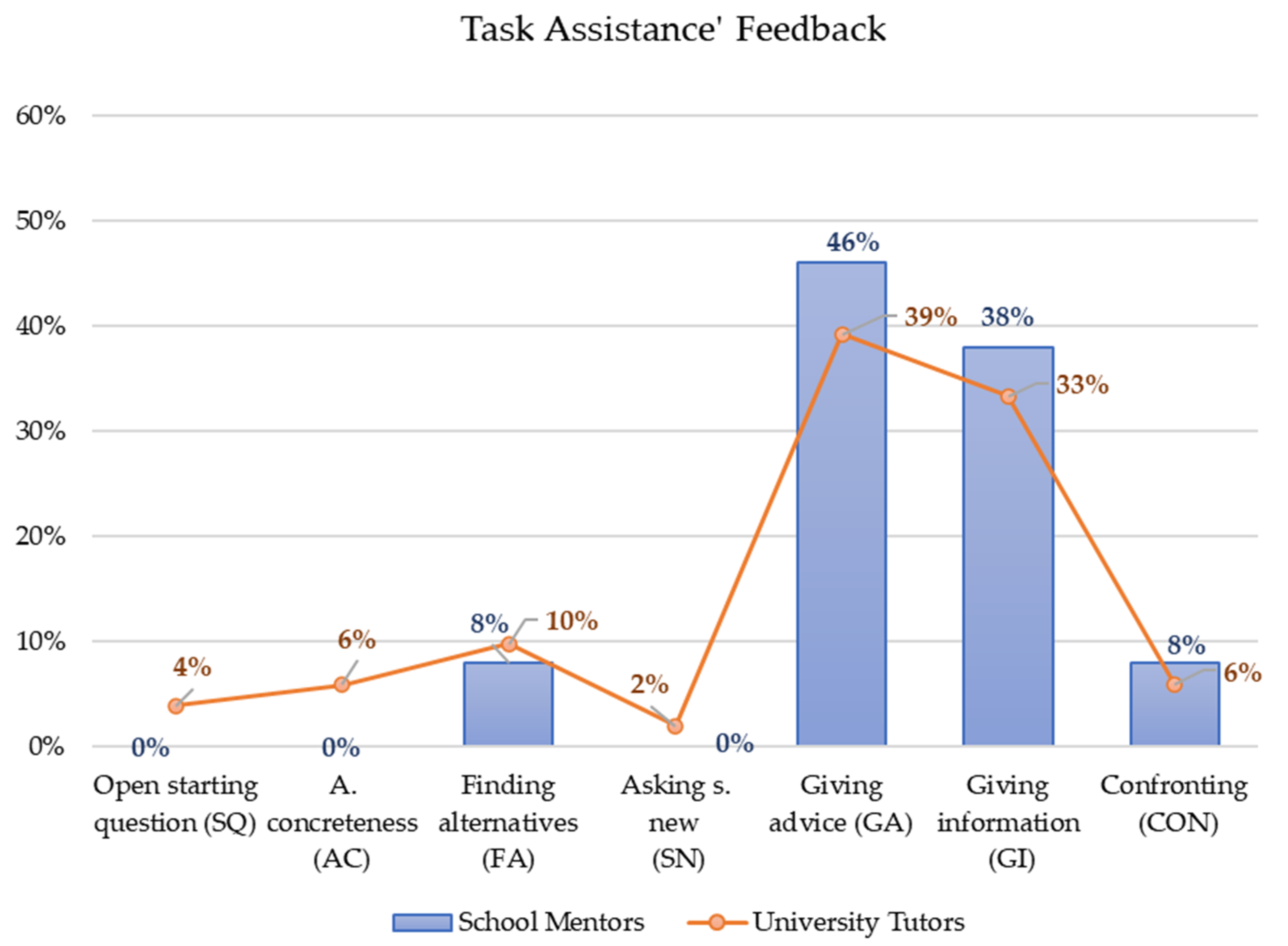

Feedback strategies related to task support are distributed very similarly. Figure 2 shows the mentors’ and university tutors’ feedback strategies to support classroom practice. In this case, there was greater variability in the strategies used. The first relevant fact is that the school mentors only used four of the seven feedback strategies considered, while the tutors used all of them. Mentors did not ask open-ended questions or ask for more specifics to obtain new information. These important feedback strategies were seldom used by tutors.

Figure 2.

Task assistance from school mentors’ and university tutors’ feedback.

The predominant feedback from mentors was centered on giving advice/instruction (GA, 46%) and giving information (GI, 38%), probably because they knew the classroom context and the students well. These two feedback strategies were also frequently used by university tutors. On fewer occasions, school mentors used confrontation (CON, 8%) and finding alternatives (FA, 8%).

4. Discussion

The literature shows that effective mentoring can provide emotional support and task assistance and that different feedback strategies help pre-service teachers’ professional development [28]. Our study demonstrates that emotional and task support is possible using video-based feedback with the VEO app. Still, mentors and tutors were not always involved in analyzing the practice sequences chosen by the students. Of the total feedback episodes collected (n = 129), only 38.8% were complete episodes in which both the mentor and the tutor gave feedback. In the incomplete feedback episodes, the lack of participation by the mentor was more frequent than that of the tutor, even though our instructions indicated that the mentor should comment first. This could be because the mentor was present throughout the pre-service teacher’s classroom intervention and probably gave feedback to the student during class or in person once the educational intervention had been completed. In future research, it would be interesting to explore other possible reasons for this disparity.

In the complete episodes, we observed differences between school mentors and university tutors in the type and frequency of feedback strategies. University tutors used more emotional feedback strategies and a greater range of task assistance feedback. However, both often gave a positive opinion of the student’s performance in the video or showed agreement with their evaluative comment. Other frequent strategies to support the task were giving advice and information. These strategies were intended to help the student understand the situation being analyzed and provide the future teachers with solutions [38]. Surprisingly, the mentors never asked questions, and the tutors only asked a few. This is an issue to be explored in future studies. We believe that, in some cases, asking the student questions in written format is a good strategy to help them think rather than giving a “solution”. In our case, we did not ask the students to intervene again after they received the experts’ comments, and this may explain why so few questions were asked. In the future, it would be interesting to ask the student to respond to the comments in order to close the feedback loop. However, asking questions was also rare in previous studies on mentoring in oral conversations [38].

Using the VEO program to provide feedback has allowed us to identify specific episodes in the feedback provided by school mentors and university tutors. We established an order of participation (student-mentor-tutor), which probably influenced the results. However, we believe using VEO requires establishing clear “participation conditions” regarding when and how the participants are expected to participate.

We identified three types of episodes that may be useful in mentoring training programs to help supervisors become aware of the possible implications of each one. The most frequent situation (“collaborative episode”) implies that the participants’ interventions are not isolated or independent but that they intervene considering the previous intervention in VEO. This is a good way to promote learning, since future teachers perceive the agreement and receive specific information about the sequence analyzed. However, as indicated above, in our study, the collaborative episode focused on providing prospective teachers with information or solutions rather than raising issues or offering alternatives.

The role of the university tutor determines the other two episodes. We called it “complementary feedback” when a university tutor used the same feedback strategy as the school mentor to complement or reinforce their ideas without providing new data or information. In the “school mentor-centered” episodes, university tutors only showed agreement with the school mentor’s feedback.

Finally, VEO makes it possible to identify situations in which prospective teachers feel less competent according to the results of their practice [39]. Thus, this analysis allows future teachers to recognize which competencies they could improve to develop effective teaching strategies. Using VEO, pre-service teachers could analyze very specific challenges in detail. Our study shows that most of the teachers’ challenges were related to developing good communication in the classroom to facilitate the pupils’ understanding of the lesson or the tasks proposed. The second challenge identified was creating a good learning environment to facilitate pupils’ participation in the classroom. These results were consistent with previous studies [40]. In our study, surprisingly, very few incidents were explicitly linked to student behavior or attention to classroom diversity [41].

To conclude, incorporating classroom videos and feedback on platforms such as VEO could offer many advantages, but some conditions should be considered. Sequences can be repeatedly watched, making it possible to revisit and examine specific situations with different foci [42]. Videos of classroom practice act as situated stimuli for eliciting knowledge about teaching and learning [43,44]. Furthermore, analysis of classroom videos has been shown to lead to high activation, immersion, resonance and motivation [45]. However, classroom videos also have potential constraints. Although classroom videos can be considered rich representations of teaching interactions, they offer less contextual information than live observations [42]. This is why we consider it important for school mentors to participate before university tutors in the VEO app. Mentors often provide information that facilitates an understanding of the context. In [46], the authors emphasized that video sequences require contextualization to convey the classroom culture, atmosphere and environment. Furthermore, videos can lead to “attentional biases”, such as only noticing limited aspects of classroom reality, or “cognitive overload”, that is, being overwhelmed by the density of information [47] (p. 787). In our experience, the pre-service teachers analyzed their practice with a reflection guideline that allowed them to pay attention to specific issues. The dimensions of this guideline facilitated the analysis through the VEO. Thus, we recommend providing these guidelines for practice analysis, at least the first few times, so that the student learns to reflect on their practice.

Although we have discussed several advantages and constraints involved in using the VEO app to support the development of collaborative reflection in a program of initial teacher education, we would like to finish by pointing out some relevant aspects to be considered in future studies. The VEO app is an excellent tool to promote collaborative reflection among student teachers, school mentors and university tutors about concrete clips involved in the training of pre-service teachers. Using guidelines facilitates joint review based on the same dimensions of observation. VEO makes it possible to identify situations in which prospective teachers feel less competent according to the results of their practice, enabling pre-service teachers to analyze particular challenges in detail. The use of the program helps student teachers receive specific feedback about their classroom interventions. Our study demonstrates that emotional and task support is possible using video-based feedback with the VEO app. However, as with oral feedback, it is recommended that mentors and tutors think about the type of written feedback they can give before using VEO. In this sense, promoting questions that encourage student reflection would be advisable. Likewise, it would be useful for students to close the cycle of interventions by responding to the comments received, an issue that was not considered in this study. Finally, it seems essential to establish some conditions for the intervention, for example, the expected order or type of intervention.

Author Contributions

Conceptualization, E.L.; methodology, E.L. and P.M.; validation, P.M., S.A. and M.G.-R.; formal analysis, P.M. and S.A.; research, all the authors; resources, all the authors; data curation, E.L. and P.M.; writing—original draft preparation, E.L.; writing—review and editing, E.L. and P.M.; supervision, all the authors; project administration, E.L.; funding acquisition, E.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Agency for Management of University and Research Grants, Government of Catalonia, grant number 2020 ARMIF 00027.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Batlle, J.; Miller, P. Video Enhanced Observation and teacher development: Teachers’ beliefs as technology users. In EDULEARN17, Proceedings of the 9th International Conference on Education and New Learning Technologies, Barcelona, Spain, 3–7 July 2017; IATED: Valencia, Spain, 2017; pp. 2352–2361.

2. Batlle, J.; González, M.V. Observar para aprender: Valoración del uso de la herramienta Video Enhanced Observation (VEO) en la formación del profesorado de español como lengua extranjera. In Redes de Investigación e Innovación en Docencia Universitaria; Satorre, R., Ed.; Institut de Ciències de l’Educació de la Universitat d’Alacant: Alicante, Spain, 2021; pp. 175–184.

3. Seedhouse, P. (Ed.) Video Enhanced Observation for Language Teaching: Reflection and Professional Development; Bloomsbury Publishing: London, UK, 2021.

4. Marsh, B.; Mitchell, N. The role of video in teacher professional development. Teach. Dev. 2014, 18, 403–417.

5. Prilop, C.N.; Weber, K.E.; Kleinknecht, M. Effects of digital video-based feedback environments on pre-service teachers’ feedback competence. Comput. Hum. Behav. 2020, 102, 120–131.

6. Liu, S.; Tsai, H.; Huang, Y. Collaborative Professional Development of Mentor Teachers and Pre-Service Teachers in Relation to Technology Integration. J. Educ. Technol. Soc. 2015, 3, 161–172.

7. Overton, D. Findings and implications of the relationship of pre-service educators, their university tutor and in-service teachers regarding professional development in science in the primary school system. Prof. Dev. Educ. 2017, 44, 595–606.

8. Hattie, J.; Timperley, H. The power of feedback. Rev. Educ. Res. 2007, 77, 81–112.

9. Narciss, S. Designing and evaluating tutoring feedback strategies for digital learning. Digit. Educ. Rev. 2013, 23, 7–26.

10. Allen, J.P.; Hafen, C.A.; Gregory, A.C.; Mikami, A.Y.; Pianta, R. Enhancing secondary school instruction and student achievement: Replication and extension of the My Teaching Partner-Secondary intervention. J. Res. Educ. Eff. 2015, 8, 475–489.

11. Weber, K.E.; Gold, B.; Prilop, C.N.; Kleinknecht, M. Promoting pre-service teachers’ professional vision of classroom management during practical school training: Effects of a structured online-and video-based self-reflection and feedback intervention. Teach. Teach. Educ. 2018, 76, 39–49.

12. Matsumura, L.C.; Garnier, H.E.; Spybrook, J. Literacy coaching to improve student reading achievement: A multi-level mediation model. Learn. Instr. 2013, 25, 35–48.

13. Sailors, M.; Price, L. Support for the Improvement of Practices through Intensive Coaching (SIPIC): A model of coaching for improving reading instruction and reading achievement. Teach. Teach. Educ. 2015, 45, 115–127.

14. Tschannen-Moran, M.; McMaster, P. Sources of self-efficacy: Four professional development formats and their relationship to self-efficacy and implementation of a new teaching strategy. Elem. Sch. J. 2009, 110, 228–245.

15. Vogt, F.; Rogalla, M. Developing adaptive teaching competency through coaching. Teach. Teach. Educ. 2009, 25, 1051–1060.

16. Kluger, A.N.; DeNisi, A. The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol. Bull. 1996, 119, 254–284.

17. Ericsson, K.A. Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Acad. Med. 2004, 79, 70–81.

18. Kleinknecht, M.; Gröschner, A. Fostering preservice teachers’ noticing with structured video feedback: Results of an online-and video-based intervention study. Teach. Teach. Educ. 2016, 59, 45–56.

19. Joyce, B.R.; Showers, B. Student Achievement through Staff Development; Association for Supervision and Curriculum Development: Alexandria, VA, USA, 2002.

20. Kraft, M.A.; Blazar, D.; Hogan, D. The effect of teacher coaching on instruction and achievement: A meta-analysis of the causal evidence. Rev. Educ. Res. 2018, 88, 547–588.

21. Lu, H.L. Research on peer coaching in preservice teacher education—A review of literature. Teach. Teach. Educ. 2010, 26, 748–753.

22. Prins, F.J.; Sluijsmans, D.M.; Kirschner, P.A. Feedback for general practitioners in training: Quality, styles, and preferences. Adv. Health Sci. Educ. 2006, 11, 289–303.

23. Gielen, S.; Peeters, E.; Dochy, F.; Onghena, P.; Struyven, K. Improving the effectiveness of peer feedback for learning. Learn. Instr. 2010, 20, 304–315.

24. Gielen, M.; De Wever, B. Structuring peer assessment: Comparing the impact of the degree of structure on peer feedback content. Comput. Hum. Behav. 2015, 52, 315–325.

25. Sluijsmans, D.M.; Brand-Gruwel, S.; van Merriënboer, J.J. Peer assessment training in teacher education: Effects on performance and perceptions. Assess. Eval. High. Educ. 2002, 27, 443–454.

26. Nicol, D.J.; Macfarlane-Dick, D. Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Stud. High. Educ. 2006, 31, 199–218.

27. Bandura, A.; Cervone, D. Differential engagement of self-reactive influences in cognitive motivation. Organ. Behav. Hum. Decis. Process. 1986, 38, 92–113.

28. Hennissen, P.; Crasborn, F.; Brouwer, N.; Korthagen, F.; Bergen, T. Clarifying pre-service teacher perceptions of mentor teachers’ developing use of mentoring skills. Teach. Teach. Educ. 2011, 27, 1049–1058.

29. Lindgren, U. Experiences of beginning teachers in a school-based mentoring program in Sweden. Educ. Stud. 2005, 31, 251–263.

30. Beck, C.; Kosnik, C. Components of a Good Practicum Placement: Pre-Service Teacher Perceptions. Teach. Educ. Q. 2002, 29, 81–98.

31. Moody, J. Key elements in a positive practicum: Insights from Australian Post-Primary Preservice teacher. Ir. Educ. Stud. 2009, 28, 155.

32. Allen, J.M.; Wright, S.E. Integrating theory and practice in the pre-service teacher education practicum. Teach. Teach. 2014, 20, 136–151.

33. Körkkö, M.; Kyrö-Ämmälä, O.; Turunen, T. Professional development through reflection in teacher education. Teach. Teach. Educ. 2016, 55, 198–206.

34. Mauri, T.; Onrubia, J.; Colomina, R.; Clarà, M. Sharing initial teacher education between school and university: Participants’ perceptions of their roles and learning. Teach. Teach. 2019, 25, 469–485.

35. Ministerio de Educación, Cultura y Deporte. Real Decreto 96/2014, de 14 de Febrero, por el que se Modifican los Reales Decretos 1027/2011, de 15 de Julio, por el que se Establece el Marco Español de Cualificaciones para la Educación Superior (MECES), y 1393/2007, de 29 de Octubre, por el que se Establece la Ordenación de las Enseñanzas Universitarias Oficiales. BOE 2014, 55, 20151–20154.

36. Körkkö, M.; Kyrö-Ämmälä, O.; Turunen, T. VEO as Part of Reflective Practice in the Primary Teacher Education Programme in Finland. In Video Enhanced Observation for Language Teaching: Reflection and Professional Development; Seedhouse, P., Ed.; Bloomsbury Publishing: London, UK, 2021; pp. 83–96.

37. Van de Grift, W. Quality of teaching in four European countries: A review of the literature and application of an assessment instrument. Educ. Res. 2007, 49, 127–152.

38. Toom, A.; Husu, J. Classroom interaction challenges as triggers for improving early career teachers’ pedagogical understanding and competencies through mentoring dialogues. In Teacher Induction and Mentoring: Supporting Beginning Teachers; Mena, J., Clarke, A., Eds.; Palgrave Macmillan: Cham, Switzerland, 2021; pp. 221–241.

39. Batlle, J.; Cuesta, A.; González, M.V. El microteaching como secuencia didáctica: Inicios de los procesos reflexivos en la formación de los profesores de ELE. E-AESLA 2020, 3, 62–73.

40. Kilgour, P.; Northcote, M.; Herman, W. Pre-service teachers’ reflections on critical incidents in their professional teaching experiences. In Research and Development in Higher Education: Learning for Life and Work in a Complex World; Thomas, T., Levin, E., Dawson, P., Fraser, K., Hadgraft, R., Eds.; Higher Education Research and Development Society of Australasia, Inc.: Melbourne, Australia, 2015; pp. 383–393.

41. Saariaho, E.; Toom, A.; Soini, T.; Pietarinen, J.; Pyhältö, K. Student teachers’ and pupils’ co-regulated learning behaviours in authentic classroom situations in teaching practicums. Teach. Teach. Educ. 2019, 85, 92–104.

42. Sherin, M.G. The development of teachers’ professional vision in video clubs. In Video Research in the Learning Sciences; Goldman, R., Pea, R., Barron, B., Denny, S.J., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2007; pp. 383–395.

43. Kersting, N. Using video clips of mathematics classroom instruction as item prompts to measure teachers’ knowledge of teaching mathematics. Educ. Psychol. Meas. 2008, 68, 845–861.

44. Seidel, T.; Stürmer, K. Modeling and measuring the structure of professional vision in preservice teachers. Am. Educ. Res. J. 2014, 51, 739–771.

45. Kleinknecht, M.; Schneider, J. What do teachers think and feel when analyzing videos of themselves and other teachers teaching? Teach. Teach. Educ. 2013, 33, 13–23.

46. Körkkö, M.; Morales, S.; Kyrö-Ämmälä, O. Using a video app as a tool for reflective practice. Educ. Res. 2019, 61, 22–37.

47. Derry, S.J.; Sherin, M.G.; Sherin, B.L. Multimedia learning with video. In The Cambridge Handbook of Multimedia Learning; Mayer, R.E., Ed.; Cambridge University Press: Cambridge, UK, 2014; pp. 785–812.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).