Abstract

This study investigates the impact of generative AI systems like ChatGPT on semi-structured decision-making, specifically in evaluating undergraduate dissertations. We propose using Davis’ technology acceptance model (TAM) and Schulz von Thun’s four-sides communication model to understand human–AI interaction and the adaptations necessary for acceptance in dissertation grading. Using an inductive research design, we conducted ten interviews with respondents having varying levels of AI and management expertise, employing four scenarios of escalating consequence, one of which mirrors dissertation grading in higher education. In all scenarios, the AI functioned as a sender, based on the four-sides model. Findings reveal that the technology acceptance model can be adapted to human–AI interaction but requires modifications, particularly regarding the AI’s transparency. Testing the four-sides model showed support for three sides, with the appeal side receiving negative feedback for AI acceptance as a sender. Respondents struggled to accept the idea of an AI suggesting a grading decision through an appeal. Consequently, transparency about the AI’s role emerged as vital. When AI supports instructors transparently, acceptance levels are higher. These results encourage further research on AI as a receiver and on the impartiality of AI decision-making without instructor influence. This study emphasizes communication modes in learning ecosystems, especially in semi-structured decision-making situations with AI as a sender, while highlighting the potential to enhance the acceptance of AI-based decision-making.

1. Introduction

Artificial intelligence (AI) is no longer science fiction. It is so close to our daily life that legislation and standards, such as an ISO standard, already exist [1]. Despite these developments, AI has barely entered the consciousness of ordinary users of IT. In an academic context, the importance of AI is well recognized, and there are notable efforts to integrate AI into teaching and the development of teaching, for example, in curricular development [2], or even to use it to pass an exam [3]. However, AI could also assist in decision-making and grading. We investigated whether AI might be accepted as a decision-maker in semi-structured situations in higher education, such as marking a thesis, and how communication between AI and humans can be analyzed.

2. Related Work

2.1. Prerequisites for Machine–Human Communication

Under what conditions do humans accept an AI as a communication partner on a level playing field? To consider this question, we must clarify whether communication between humans differs from communication between a human and an AI. We want to point out some differences:

- Capabilities: AI systems are typically designed to perform specific tasks and are not capable of the same level of understanding and general intelligence as a human being. This means that an AI may be able to perform certain tasks accurately but may not be able to understand or respond to complex or abstract concepts in the same way that a human can [4].

- Responses: AI systems are typically programmed to respond to specific inputs in a predetermined way. This means that the responses of an AI may be more limited and predictable than those of a human, who is capable of a wide range of responses based on their own experiences and understanding of the world [5].

- Empathy: AI systems do not have the ability to feel empathy or understand the emotions of others in the same way that a human can. This means that an AI may not be able to respond to emotional cues or provide emotional support in the same way that a human can [6].

- Learning: While AI systems can be trained to perform certain tasks more accurately over time, they do not have the ability to learn and adapt in the same way that a human can. This means that an AI may not be able to adapt to new situations or learn from its own experiences in the same way that a human can [7].

- Trust: Humans are very critical of any kind of failure an artificial system commits. In the case of a violation, the level of trust in information delivered by an AI is clearly lower than it would be if the information had been delivered by a human [8].

These differences illustrate that in the case of communication between humans and AIs, interpersonal behavior patterns cannot simply be assumed to evaluate the quality of communication. This is the first research question we address: Are existing interpersonal communication models transferable to AI–human interaction (RQ1)?

2.2. The Four-Sides Model in Communication

To analyze interpersonal interaction, we apply the four-sides model, also referred to as the four-ears model or the communication square [9,10,11]. The four-sides model proposes that every communication has four different dimensions: factual, appeal, self-revealing, and relationship. The model suggests that these four dimensions are always present in communication, and that people can use them to understand the different aspects of a communication and the intentions of the speaker.
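As a minimal sketch, a single utterance can be represented as a record with one field per side. The class name `FourSidesMessage`, the helper `sides`, and the example wording are illustrative assumptions and are not taken from the study or from the original model description.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class FourSidesMessage:
    """One utterance, split into the four sides named in the model."""
    factual: str          # what is stated as fact
    self_revealing: str   # what the sender discloses about itself
    relationship: str     # how the sender positions itself toward the receiver
    appeal: str           # what the sender wants the receiver to do


def sides(message: FourSidesMessage) -> dict:
    """Return the four sides as a dict, in the order they are declared."""
    return {f.name: getattr(message, f.name) for f in fields(message)}


# Hypothetical example of an AI sender commenting on a thesis.
example = FourSidesMessage(
    factual="The thesis meets the stated assessment criteria.",
    self_revealing="This assessment is derived from my training data.",
    relationship="I address you as the author of the work.",
    appeal="Please accept the proposed grade.",
)

for name, text in sides(example).items():
    print(f"{name}: {text}")
```

Keeping the sides as separate fields makes it possible to ask, for each side individually, whether a receiver accepts it when the sender is an AI.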

Other tools for understanding the meaning of communication include the model developed by Richards [12], which follows a similar line. The four-sides model and Richards’ concept of four kinds of meaning are both frameworks for understanding and analyzing communication. However, they have different purposes and focus on different aspects of communication. The Schulz von Thun four-sides approach models interpersonal communication. Richards’ concept of four kinds of meaning, on the other hand, is a framework for understanding the different types of meaning that can be conveyed through language. Richards identified four types of meaning: denotative, connotative, emotional, and thematic, whereas the Schulz von Thun four-sides model is focused on understanding the different dimensions of communication. The main criticism of the four-sides model largely corresponds to general criticism of communication models [13].

We apply the four-sides model in our research focus and benefit from a tool that allows us to analyze the different levels of communication but does not center on a linguistic approach.

The four-sides model gives us a framework for analyzing communication between the AI and humans. The student or faculty member communicating with the AI, on the other hand, is aware of the source of the communication. A framework is needed to capture the various technology acceptance factors that influence the outcome of the communication situation. This leads to our second research question: What must be done to ensure that humans accept AI decisions in semi-structured decision situations (RQ2)?

2.3. Technology Acceptance Model

The technology acceptance model (TAM) has been used successfully to analyze and understand the requirements for reaching technology acceptance [14]. Although TAM was introduced as early as 1989, the number of publications in which this model has been used as a basis for analyzing technology acceptance continues to increase [15]. TAM has been criticized [16] and modified several times. Venkatesh developed the widely used UTAUT model (unified theory of acceptance and use of technology) [17]. Although more recent modeling approaches are available, we use TAM because, first, TAM is applied more often than UTAUT in analyzing AI adoption [15], and second, TAM has very positive support, particularly in the education sector [18]. In addition, TAM has been shown to integrate successfully with a variety of different theoretical approaches [18]. The combination of technology acceptance analysis with our chosen Schulz von Thun model of communication in the education sector argues for the use of TAM.

2.4. AI in Higher Education

Based on these models, our research aims to identify the technology acceptance of thesis marking done by AI. Zhang et al. found that assessment for an academic scholarship benefits from a rule-based cloud computing application system [19]. This structured decision-making does not use an AI, but it shows relevant technology acceptance among the affected students [20]. More than 70 scholars were interviewed to obtain information about the adaptability of AI in automatic short answer grading. The results showed that understanding how the AI conducts the grading is of great value and importance for building trust. Trust-building was stronger when the AI proactively explained its decision itself. In this case, the AI supported the grading, and the final grade was given by a responsible lecturer. There is also research concerning the roles that AI may fill in the future. Kaur et al. state that AI will be of value for grading in an academic context [21]. If AI is used in qualitative marking, then communication and cooperation requirements significantly exceed system performance compared to the simple case mentioned above. Current research [22,23] shows that there may well be useful starting points for using AI as a co-decision-maker in academic education. But does this extend to the evaluation of scientific work, for example, bachelor theses, and if so, under what conditions? Hence, our research question 3: Is the grading process in higher education, explicitly the grading of a bachelor/master thesis, an acceptable field of application for AI (RQ3)?

3. Research Strategy

To address our research inquiries comprehensively, we employed interviews and conducted qualitative data analysis as the chosen research approach. Given the holistic nature of exploring AI acceptance and understanding the factors influencing AI acceptance in semi-structured decision-making contexts [24], qualitative research was deemed most suitable.

To avoid potential result falsification arising from excessively rigid theoretical specifications and standardized research instruments, we adopted the semi-structured interview method for data collection [25]. Following the recommendation by Saunders et al., a sample size of 5 to 25 interview partners was considered appropriate for achieving satisfactory outcomes in semi-structured qualitative interviews [24].

Our study involved conducting semi-structured interviews with 10 individuals possessing backgrounds or expert knowledge in AI. The interview participants exhibited varying levels of AI expertise and held diverse management positions (for further details, see Table 1). Each interview session was scheduled for 60 min and was conducted either in person or via video chat. The interviewees were grouped based on their levels of experience in AI, ranging from novices to experts, using the competence model developed by Dreyfus [26]. This categorization was implemented to enhance the representativeness and applicability of the scenarios explored, as well as to facilitate the interpretation of our findings.

Table 1. Details of interview partners.

The interview participants were presented with four distinct scenarios, each characterized by varying levels of personal consequence for the individual facing the AI-generated decision.

- Scenario 1: Partner choice: An AI in the form of a dating app independently selects the life partner for the person concerned.

- Scenario 2: Thesis evaluation: The thesis (bachelor/master) is marked by an AI.

- Scenario 3: Salary increase: Intelligent software decides whether the person concerned receives a salary increase.

- Scenario 4: Sentence setting: An AI decides in court on the sentence for the person concerned.

Notably, scenario 2 served as the central focus of our investigation, while the remaining three scenarios functioned as controls in our research design. In scenario 2, our primary aim was to comprehend the implications of generative AI technology when employed for evaluating academic dissertations. To achieve this objective, we operationalized the scenario as submitting a thesis to a system akin to ChatGPT and soliciting a comprehensive evaluation, including a suggested final grade. Our specific focus during the analysis was on the emergence of unexpected or adverse outcomes.

Essentially the same questions were asked for each scenario, covering first impression, trust, acceptance and control, and justice and fairness. The question of which factors (in communication) increase the acceptance of AI as a decision-maker is of great relevance.

Data analysis was performed following grounded theory, a research strategy for generating and analyzing qualitative data [27]. The data from the interviews were analyzed, compared, and interpreted to identify underlying structures and to open up options for new theories.

The interviews were recorded and analyzed using MAXQDA 2022. The analysis started with line-by-line coding, followed by axial coding to identify causal connections. To check their relevance, the results were verified using the coding paradigm from Strauss and Corbin [28]. MAXQDA helped to bring the different concepts together and to avoid redundancies.

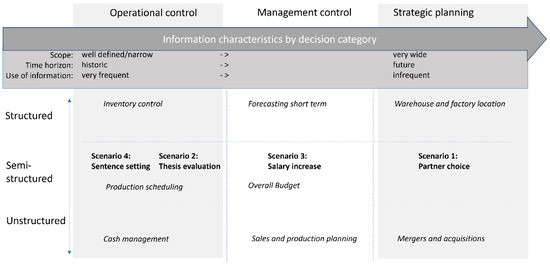

To differentiate the scenarios, we use the framework from [29] (cf. Figure 1). As early as 1971, this framework analyzed information systems by category and focused explicitly on semi-structured decisions in decision-making through information systems.

Figure 1. Scenarios used for analysis shown in the framework in [29].

With the set of four scenarios, this research covers the core areas of both academic and corporate decision-making.

To answer the first research question, we had to check whether the four-sides communication model can be used in an AI-to-human communication context. The complete four-sides model comprises both the four-mouths and the four-ears concept, covering the sender and the receiver situation. This research focuses on the AI as sender only.

4. Findings and Discussion

4.1. Applicability

Reflecting upon the four dimensions of communication in light of our interview findings, it becomes apparent that the factual, self-revealing, relationship, and appeal dimensions are variably embraced by our interviewees.

The factual dimension remained unchallenged within the discourse. The general inclination toward embracing an AI system’s factual decision-making process was prevalent. As one interviewee remarked, “I would actually trust that it was decided by the AI based on the facts and not based on what a judge sees in me. Judges also often decide based on external factors.” (Interviewee B-3, Male, Position 95).

The dimension of self-revealing is upheld, as both humans and machines engage in communication at this level. In all scenarios, individuals consciously or unconsciously convey facets of their personality—encompassing emotions, values, and needs. As one interviewee articulated, “If I actually start a data collection somewhere with an intelligence, with a data collection form, I might be more honest in the answers, too, than if I have a homo sapiens sitting across from me and actually have to worry about how the person perceives the answers.” (Interviewee B-3, Male, Position 44). Similarly, the level of self-revelation within artificial intelligence is contingent upon the foundational dataset used for decision-making. The data on which AI operates are derived from human sources, thereby transmitting values and sentiments to the machine.

Furthermore, the communicative dimension encompassing relationships is relevant in AI-human interaction. The interviewees consistently underscored the significance of being addressed at this relational tier, not only in human-to-human communication but also in their expectations from AI. Nonetheless, it became evident that AI’s ability to engage humans at this level is inherently constrained, possibly manifesting through lexical choices or, when necessary, tonal nuances. As articulated by one participant, “Yeah, I think that would be fine for me to have that evaluated by the artificial intelligence, but I think I would still want to have a conversation with a supervisor. I would want to have.” (Interviewee E-3, Position 79).

As per scenario 1, all interview participants indicated a willingness to accept results presented by an AI. In the words of one interviewee, “No, so there I am an engineer again. That’s where I’m pragmatic. As long as it’s the same result, I don’t care who presents it.” (Interviewee J-3, Position 35).

Finding 1.

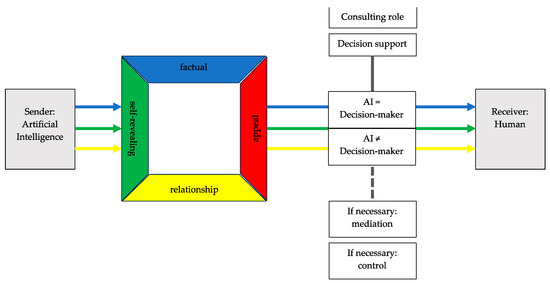

The four-sides model can be applied to AI–human interaction, but it needs an alteration that shows the AI’s influence on decision-making.

To present the necessary adjustments to the four-sides model in a comprehensible way, the original graphic by Schulz von Thun was expanded accordingly (Figure 2).

Figure 2. Four-sides model adapted to AI–human interaction.

In scenario 2, most interviewees, specifically 6 out of 10, expected human involvement in a controlling capacity. One interviewee emphatically dismissed the notion of AI assuming the role of decision-maker for a thesis. Conversely, a subgroup of three interviewees displayed immediate openness to embracing AI-generated decisions. A prevailing sentiment among the interviewees was that the specific function the AI takes on within the decision-making context, together with its corresponding communicative attributes, has a substantial impact on the level of acceptance.

AI cannot shape this factor, because it is predetermined by the framework conditions of the decision situation. Since the influence goes back to the human decision-makers, these aspects were placed between the utterance itself and the human, i.e., on the side of the receiver. First, a distinction was made between whether the AI is or is not a decision-maker.

The graph shows with a solid line that the AI is not used as a decision-maker, but has a consulting or a decision-support function, i.e., it is the basis for the decision. However, if the AI is accepted as a decision-maker, then there are several possibilities, which are illustrated by the dashed line (as this box is optionally applied). In some cases, the AI is accepted directly as the decision-maker and transmitter of the message without further action. In this scenario, the box connected by the dashed line is “omitted”. In other scenarios, however, a human is required to convey the message, or a human controller must be present to take a controlling look at the decision made by the AI.
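The distinction described above can be sketched as a small function that returns the path a message takes from the AI to the human receiver. The names `AIRole`, `HumanStep`, and `delivery_path`, as well as the step labels, are hypothetical and chosen only for illustration. The sketch assumes that in the decision-support case a human always makes the decision, and that in the decision-maker case the human step is optional.

```python
from enum import Enum
from typing import List, Optional


class AIRole(Enum):
    DECISION_SUPPORT = "decision support"   # solid line in the figure
    DECISION_MAKER = "decision-maker"       # dashed line in the figure


class HumanStep(Enum):
    MESSENGER = "human conveys the message"
    CONTROLLER = "human reviews the decision"


def delivery_path(role: AIRole, human_step: Optional[HumanStep] = None) -> List[str]:
    """Return the chain from the AI sender to the human receiver."""
    if role is AIRole.DECISION_SUPPORT:
        # The AI output is only the basis; a human makes the decision.
        return ["AI", "human decides", "receiver"]
    if human_step is None:
        # The AI is accepted directly; the optional box is omitted.
        return ["AI", "receiver"]
    # The AI decides, but a human conveys or checks the decision.
    return ["AI", human_step.value, "receiver"]


print(delivery_path(AIRole.DECISION_SUPPORT))
print(delivery_path(AIRole.DECISION_MAKER))
print(delivery_path(AIRole.DECISION_MAKER, HumanStep.CONTROLLER))
```

Making the human step an optional argument mirrors the dashed, optionally applied box: leaving it out corresponds to direct acceptance of the AI as decision-maker and transmitter.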

Finding 2.

According to our interview results, the appeal side in the four-sides model is difficult to accept and is therefore not applied.

Factual communication is unquestionably present in AI-to-human interaction. The self-revealing dimension is equally discernible, as the values ingrained by programmers and trainers influence the AI’s behavior, while the supplied data significantly shape the AI’s resulting decisions. The relationship dimension, however, plays a less pronounced role, given the prevalent expectation that the recipient of information predominantly seeks an informative rather than a relational exchange. Our interviewees expressed modest expectations concerning the present feasibility of AI attaining this relational objective. By contrast, our interviewees did not endorse the affective dimension. A subset of respondents suggested that the AI’s display of emotional acumen need not be overly robust for successful AI-to-human appeals. Further, we found that the AI’s role within the decision-making process remains pivotal to how the human recipient receives its decisions.

“Yes, even up to the preparation of the decision, but at the end, someone has to say, I take this. Even if the AI says there’s someone not getting money, then there should be someone there to say, ‘Okay, I can understand why the AI is doing this, and I stand behind it and represent that as a boss. And not hide behind the AI and say, I would have given you more money, but I’m sorry, the AI decided otherwise.’ Very bad!” (Interviewee F-5, M, pos. 89)

4.2. Extensions

Which factors influence the acceptance of decisions made by an AI, and are they included in existing technology acceptance models such as TAM? This was the second research question we wanted to cover with our interviews.

Finding 3.

TAMs and their technology acceptance factors are valid for AI–human interaction, but the AI’s role in the decision-making process is of extremely high relevance, setting the frame for the overall acceptance of the AI’s decisions.

The results showed that many existing acceptance factors could be identified, and it was possible to subsume them under the headings from Davis et al.’s TAM. The following table shows those factors and the relevant headings. Traceability and transparency were mentioned with the highest frequency and fit well within the acceptance factor “perceived usefulness”. Data quality and confidence in the capabilities of AI followed, separated from these by a wide margin.

Decision-making by an AI that affects a human being is very specific and has so far not been experienced on a wider scale. Therefore, we found acceptance factors that did not fit into the existing scheme, such as the personal estimation of the decision outcome.

4.3. Evaluations

The results so far support the clarification of research question 3 on the applicability of AI for grading a bachelor thesis by providing clarity on the adaptations needed to achieve appropriate technology acceptance and the applicability of communication models.

The acceptance level in the educational scenario (scenario 2) was average. The interviewees mainly accepted the role of an AI in grading academic work.

“There, I would actually be happy if that were the case, because that’s actually rule-based and comprehensible and consistent, let’s call it that. [...] from the purely scientific perspective and also from the perspective of equality, I think such a procedure makes sense.” (Interviewee I-5, M, pos. 52)

However, five of the interview partners expressed a strong wish for a human control instance.

“I am almost certain that a computer judges more objectively than a human being because it makes rule-based judges. I would perhaps wish that someone who has nothing to do with the work, but in especially good or especially bad cases or also in general simply about the result what the AI delivers with the reasoning again very briefly over it looks, whether that makes sense, so as yes as a last check.” (Interviewee J-3, pos. 39)

Overall, there was great acceptance for the decision-making through an AI in scenario 2. But one interviewee was explicitly against the use of an AI for grading a thesis. He states: “I think there should be teachers teaching and evaluating people. That shouldn’t be done by a computer.” (Interviewee G-4, M, pos. 97).

The interview partners showed an average acceptance level for scenarios 2 and 3. Scenario 4 was heavily disputed. Four of the interviewees declared that they would not accept an AI in court, but three declared they could accept it. Obviously, this scenario was affecting the interview partners the most. The overall acceptance levels can be found in Table 2.

Table 2. Identified factors of technology acceptance.

Finding 4.

Grading by AI achieves average acceptance and, in interaction with a human examiner, even high acceptance values.

The results of this research must be seen in the light of its limitations. The group of interviewees was small because we needed to question AI experts, and experience with AI in society as a whole was limited at the time of the interviews. The experts’ knowledge might introduce a bias that would not be present among members of the general public [30].

5. Further Research

The limitations necessitate further avenues of research. The accessibility of AI tools to the public is steadily expanding, with ChatGPT achieving a historical record as the fastest-growing consumer application [31]. Consequently, conducting a quantitative study to explore the acceptance of AI-generated decisions would prove highly insightful.

The broad applicability of the four-sides model was unsurprising, given the limited time available for the development of distinct communicational behaviors when interacting with an AI. However, our emphasis hitherto has been on the sender’s perspective. It remains pertinent to investigate whether an AI, placed in the role of a recipient, could discern varying levels of communication.

Most participants in our interviews expressed a notable level of comfort with AI’s role as a decision supporter. Nonetheless, their primary focus remained on humans as the ultimate arbiters in decision-making processes.

During our extensive interview sessions, we encountered an individual who displayed a highly positive outlook regarding AI’s potential as a decision-maker. This optimism stemmed from the belief that an AI, in comparison to a human decision-maker, would be less susceptible to the influence of discriminatory tendencies. This aspect presents an intriguing avenue for future research: exploring whether AI, by its nature, possesses greater objectivity in decision-making processes compared to humans.

6. Conclusions

This study reveals that the four-sides model proves suitable for analyzing communication between an AI sender and a human receiver. However, we have identified transparency as a pivotal factor in ensuring a high level of acceptance in the context of AI’s role in decision-making processes. To analyze achieved acceptance, the well-established technology acceptance models (TAMs) can be utilized, albeit with the incorporation of additional factors necessitated by the need for heightened transparency.

Despite the limitations imposed by a relatively small group of interviewees, our findings indicate that we possess adequate groundwork to delve into the analysis of AI–human communication. Moreover, we have garnered valuable insights on its potential application within the domain of higher education, particularly concerning the evaluation of academic theses. Notably, the notion that AI could mitigate the individual biases of an examiner in the grading process of bachelor’s or master’s theses opens intriguing and valuable avenues for the application of artificial intelligence in this realm. The description of modifications made to the existing models warrants further investigation by the academic community.

Author Contributions

Conceptualization, C.G. and T.C.P.; methodology, C.G. and T.C.P.; software, n/a.; validation, C.G., F.H. and T.C.P.; formal analysis, O.B.; investigation, F.H. and O.B.; resources, C.G. and T.C.P.; data curation, C.G. and O.B.; writing—original draft preparation, F.H.; writing—review and editing, C.G., F.H. and T.C.P.; visualization, C.G., F.H. and O.B.; supervision, C.G. and T.C.P.; project administration, C.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Munich University of Applied Sciences’ Statutes for Safeguarding Good Scientific Practice and Handling Suspected Cases of Scientific Misconduct.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Our interview participants were given the assurance that the transcriptions of their interviews would solely serve the purpose of the research project conducted at Munich University of Applied Sciences.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Samoili, S.; López Cobo, M.; Delipetrev, B.; Martínez-Plumed, F.; Gómez, E.; Prato, G.d. AI Watch: Defining Artificial Intelligence 2.0: Towards an Operational Definition and Taxonomy for the AI Landscape; Publications Office of the European Union: Luxembourg, 2021; ISBN 978-92-76-42648-6.

- Southworth, J.; Migliaccio, K.; Glover, J.; Glover, J.; Reed, D.; McCarty, C.; Brendemuhl, J.; Thomas, A. Developing a model for AI Across the curriculum: Transforming the higher education landscape via innovation in AI literacy. Comput. Educ. Artif. Intell. 2023, 4, 100127.

- Terwiesch, C. Would Chat GPT Get a Wharton MBA?: A Prediction Based on Its Performance in the Operations Management Course. Available online: https://mackinstitute.wharton.upenn.edu/wp-content/uploads/2023/01/Christian-Terwiesch-Chat-GTP-1.24.pdf (accessed on 10 July 2023).

- Korteling, J.E.H.; van de Boer-Visschedijk, G.C.; Blankendaal, R.A.M.; Boonekamp, R.C.; Eikelboom, A.R. Human- versus Artificial Intelligence. Front. Artif. Intell. 2021, 4, 622364.

- Hill, J.; Randolph Ford, W.; Farreras, I.G. Real conversations with artificial intelligence: A comparison between human–human online conversations and human–chatbot conversations. Comput. Hum. Behav. 2015, 49, 245–250.

- Alam, L.; Mueller, S. Cognitive Empathy as a Means for Characterizing Human-Human and Human-Machine Cooperative Work. In Proceedings of the International Conference on Naturalistic Decision Making, Orlando, FL, USA, 25–27 October 2022; Naturalistic Decision Making Association: Orlando, FL, USA, 2022; pp. 1–7.

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; 14th Printing 2022; Cambridge University Press: Cambridge, UK, 2022; ISBN 978-1-107-05713-5.

- Alarcon, G.M.; Capiola, A.; Hamdan, I.A.; Lee, M.A.; Jessup, S.A. Differential biases in human-human versus human-robot interactions. Appl. Ergon. 2023, 106, 103858.

- Schulz von Thun, F. Miteinander Reden: Allgemeine Psychologie der Kommunikation; Rowohlt: Hamburg, Germany, 1990; ISBN 3499174898.

- Ebert, H. Kommunikationsmodelle: Grundlagen. In Praxishandbuch Berufliche Schlüsselkompetenzen: 50 Handlungskompetenzen für Ausbildung, Studium und Beruf; Becker, J.H., Ebert, H., Pastoors, S., Eds.; Springer: Berlin, Germany, 2018; pp. 19–24. ISBN 3662549247.

- Bause, H.; Henn, P. Kommunikationstheorien auf dem Prüfstand. Publizistik 2018, 63, 383–405.

- Richards, I.A. Practical Criticism: A Study of Literary Judgment; Kegan Paul, Trench, Trubner & Co., Ltd.: London, UK, 1930.

- Czernilofsky-Basalka, B. Kommunikationsmodelle: Was leisten sie? Fragmentarische Überlegungen zu einem weiten Feld. Quo Vadis Rom.—Z. Für Aktuelle Rom. 2014, 43, 24–32.

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319.

- García de Blanes Sebastián, M.; Sarmiento Guede, J.R.; Antonovica, A. Tam versus utaut models: A contrasting study of scholarly production and its bibliometric analysis. TECHNO REVIEW Int. Technol. Sci. Soc. Rev./Rev. Int. De Tecnol. Cienc. Y Soc. 2022, 12, 1–27.

- Ajibade, P. Technology Acceptance Model Limitations and Criticisms: Exploring the Practical Applications and Use in Technology-Related Studies, Mixed-Method, and Qualitative Researches; University of Nebraska-Lincoln: Lincoln, NE, USA, 2018.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425.

- Granić, A. Technology Acceptance and Adoption in Education. In Handbook of Open, Distance and Digital Education; Zawacki-Richter, O., Jung, I., Eds.; Springer Nature: Singapore, 2023; pp. 183–197. ISBN 978-981-19-2079-0.

- Zhang, Y.; Liang, R.; Qi, Y.; Fu, X.; Zheng, Y. Assessing Graduate Academic Scholarship Applications with a Rule-Based Cloud System. In Artificial Intelligence in Education Technologies: New Development and Innovative Practices; Cheng, E.C.K., Wang, T., Schlippe, T., Beligiannis, G.N., Eds.; Springer Nature: Singapore, 2023; pp. 102–110. ISBN 978-981-19-8039-8.

- Schlippe, T.; Stierstorfer, Q.; Koppel, M.T.; Libbrecht, P. Artificial Intelligence in Education Technologies: New Development and Innovative Practices; Cheng, E.C.K., Wang, T., Schlippe, T., Beligiannis, G.N., Eds.; Springer Nature: Singapore, 2023; ISBN 978-981-19-8039-8.

- Kaur, S.; Tandon, N.; Matharou, G. Contemporary Trends in Education Transformation Using Artificial Intelligence. In Transforming Management Using Artificial Intelligence Techniques, 1st ed.; Garg, V., Agrawal, R., Eds.; CRC Press: Boca Raton, FL, USA, 2020; pp. 89–104. ISBN 9781003032410.

- Zhai, X.; Chu, X.; Chai, C.S.; Jong, M.S.Y.; Istenic, A.; Spector, M.; Liu, J.-B.; Yuan, J.; Li, Y.; Cai, N. A Review of Artificial Intelligence (AI) in Education from 2010 to 2020. Complexity 2021, 2021, 8812542.

- Razia, B.; Awwad, B.; Taqi, N. The relationship between artificial intelligence (AI) and its aspects in higher education. Dev. Learn. Organ. Int. J. 2023, 37, 21–23.

- Saunders, M.; Lewis, P.; Thornhill, A. Research Methods for Business Students, 7th ed.; Pearson Education Limited: Essex, UK, 2016; ISBN 978-1292016627.

- Adeoye-Olatunde, O.A.; Olenik, N.L. Research and scholarly methods: Semi-structured interviews. J. Am. Coll. Clin. Pharm. 2021, 4, 1358–1367.

- Dreyfus, S.E. The Five-Stage Model of Adult Skill Acquisition. Bull. Sci. Technol. Soc. 2004, 24, 177–181.

- Glaser, B.G.; Strauss, A.L. The Discovery of Grounded Theory: Strategies for Qualitative Research; Routledge: London, UK, 2017; ISBN 0-202-30260-1.

- Corbin, J.M.; Strauss, A. Grounded theory research: Procedures, canons, and evaluative criteria. Qual. Sociol. 1990, 13, 3–21.

- Gorry, A.G.; Morton, M.S. A Framework for Information Systems; Working Paper Sloan School of Management; MIT: Cambridge, MA, USA, 1971.

- Vollaard, B.; van Ours, J.C. Bias in expert product reviews. J. Econ. Behav. Organ. 2022, 202, 105–118.

- Hu, K. ChatGPT Sets Record for Fastest-Growing User Base—Analyst Note. Available online: https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/ (accessed on 28 April 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).