Abstract

This study describes the analysis of the validity and reliability of an instrument that determines the self-perception of natural science teachers who use the STEAM approach regarding the planning, development, and evaluation of their pedagogical activities. Its design drew on empirical studies identified through a bibliographic review, theoretical criteria on self-perception and the STEAM approach, and the characteristics of the population. To assess the quality of the instrument, content validity parameters were analyzed by experts, and construct validity and reliability were assessed with the SPSS statistical package. Ten experts holding doctorates in education served as judges, and 143 teachers (pre-service and in-service) participated in the pilot test. As the main finding, the instrument applied to this sample shows a high reliability coefficient (Cronbach’s alpha = 0.920) and construct validity supported by a factor analysis that yielded three factors (KMO = 0.903). It is therefore concluded that the instrument has structure and coherence in both its internal consistency and its meaning grid, which facilitates progress in understanding the self-perception of using the STEAM approach in didactic practices in the natural sciences.

1. Introduction

In the educational field, the approach involving science, technology, engineering, arts, and mathematics (STEAM) is identified as a recently developed interdisciplinary phenomenon [1]. It has evolved and takes different names according to the flexibility of the environment in which it is developed [2], and it has generated positive expectations regarding its results in learning and in solving daily problems from a more holistic vision [3,4]. From this perspective, world organizations have set their sights on the STEAM approach, seeking to promote its effective integration as a strategic method to achieve successful education [5,6]. Meanwhile, the scientific community has focused on studying the interdisciplinary STEAM educational phenomenon in greater depth in order to advance both conceptual and practical results [7,8,9,10,11]. One of the guidelines proposed to achieve this advance is to provide teachers with educational opportunities that close the gaps between a changing world and new educational challenges, responding to the need for comprehensive training aimed at motivating their learners, especially high school students, to pursue careers in the STEAM areas [12,13,14]. In this context, some studies report that meeting these challenges requires appropriate teacher training in this approach, a curricular alignment that integrates other disciplines into their subjects, and the consolidation of learning communities that strengthen sustainable educational networks [15,16]. On the other hand, there is a solid consensus that the STEAM approach has a relatively low level of standardization and that its theorization criteria are highly diverse, so some studies differ on its vision in terms of inter-, trans-, and multidisciplinarity, or even on the addition and positioning of letters in its acronym [5,17,18,19], which is also a challenge to overcome. In part, all of this likely creates barriers for teachers, especially in the natural sciences, that limit the implementation of STEAM in their classrooms [13,20,21].

The STEAM approach and teacher education in natural science teaching is a field that demands considerable attention [9,22,23,24]. Natural science teachers might feel more familiar with the STEAM approach because its structure involves the area of knowledge in which they can be considered experts. However, several studies suggest that further training and ongoing professional development on this holistic perspective are needed [25,26,27,28] in order to expand knowledge of the integration process and of the basic strategies and skills needed for its implementation in the classroom [29]. Thus, interest in teacher training in the natural sciences from an interdisciplinary viewpoint, the discussion on how different areas of knowledge should be integrated and made evident in the curricula, the most appropriate way to implement a STEAM proposal in the classroom, and the evaluation of the learning results of disciplinary integration are all still evolving [30,31,32,33,34,35].

In this context, it is important to strengthen the pedagogical practices of natural science teachers by incorporating STEAM education within the classroom [36,37,38]. Likewise, the integral curricular composition of STEAM is not limited to the use of technological instruments, which would dismiss other significant dimensions of science didactics [2,27,39]. Furthermore, the STEAM approach promotes innovative skills in the classroom, as proposed by this holistic vision, and it should not become a task that is the responsibility of science teachers alone. Finally, it is necessary to understand that these strategies might transform lesson planning, development, and evaluation in science classrooms [40]. Taken together, these points indicate what is expected from natural science teachers in terms of their experience and professional development, and they help identify specific needs for the implementation of STEAM in their pedagogical practices [20,41]. To identify these shortcomings, it is necessary to measure, in some way, the levels of self-perception of STEAM’s effectiveness in transforming pedagogical practices in science education [42,43].

For this reason, assessing teachers’ self-perception is considered a fundamental condition to favor pedagogical strategies and curriculum design [44]. Thus, defining the perception of STEAM use in terms of its application in the classroom to obtain broader scopes towards its correct implementation may require further attention [11].

To be precise, self-perception is defined from social cognitive theory as a cognitive process in which teachers analyze their own attitudes and observe the cause–effect relationship of their behaviors [44,45,46]. On the other hand, the importance of natural science teachers’ self-perception of the effectiveness of STEAM in the classroom lies in the influence that this has on the individual’s thinking, actions, and emotions [20,28,41,47,48]. The higher the self-perception of efficacy, the higher the academic achievement, which is consistent with the research of Herro and Quigley [49], who state that empirical evidence of the efficacy of the STEAM approach in the classroom remains scarce.

In this respect, it is essential to highlight that studies on self-perception and beliefs in STEAM have reported positive transformations after teacher professional development aimed at its promotion [20,48,50,51,52,53]. These range from classroom management in terms of organizational innovation, the recognition of the value of STEAM education as a teaching criterion, and the motivation to learn new physical and technological tools for problem-solving in context, to the stimulus to acquire new teaching methodologies in line with the dynamics of today’s world and the strengthening of participation in networks and learning communities. However, are teachers’ perceptions of using the STEAM approach influencing the design of their classes? It is interesting to establish where these changes in self-perceptions of the use of STEAM become evident in the pedagogical practices observed in planning, development, and evaluation. In this way, it is possible to glimpse the effect that STEAM education has on science teaching [54]. Additionally, different studies have reported that teachers’ self-perception regarding science teaching is favorable, considering that continuous teacher training in scientific and didactic content is an opportunity to improve classroom practices. Thus, it is relevant to identify the key concepts that the teacher must master to achieve a conceptual change, the development of innovative strategies to address them, and the evaluation of the degree to which they are understood in an interdisciplinary manner [28,55,56,57].

Although some research has advanced the definition of STEAM and its application through empirical studies that could provide recommendations for its use in the classroom [58,59,60,61], there is scarce evidence of a diagnostic instrument that assesses natural science teachers’ self-perception of their use of the STEAM approach in terms of the planning, development, and evaluation of one of their classes.

Thus, this study aims to validate an instrument to observe the levels of self-perception of natural science teachers regarding the STEAM approach in their classroom practices. Its design was based on the work of Espinosa-Ríos [62], who developed a similar instrument based on the theoretical framework proposed by the German government to observe classes in its institutions abroad (https://www.auslandsschulwesen.de/Webs/ZfA/DE/Home/home_node.html, accessed on 10 February 2023).

2. Materials and Methods

2.1. Instrument

The instrument was initially composed of 30 items in three categories: preparation, development, and evaluation of a natural science class in which the STEAM approach is examined. These categories guided the elaboration of the items (see Table 1), which are answered on a frequency scale (1 = Never, 2 = Rarely, 3 = Occasionally, 4 = Frequently, 5 = Very often) [63,64,65,66]. The instrument also anonymously collects data from the participants regarding their level of experience, age, educational level, and the place where they teach.

Table 1.

Categories and items of the instrument submitted to the expert judges.

2.2. Validation Participants

A group of expert judges was formed to validate the instrument’s content. The selection criteria were (1) holding a doctorate in education with training in natural sciences or affinity with the STEAM approach, (2) having participated in teacher training processes in natural sciences, with a line of research in education or teacher training, and (3) having proven experience in academic research on education.

2.3. Procedure

This study was conducted within the descriptive analysis framework with a mixed approach [63,67]. It was divided into three phases: content validity, construct validity, and reliability.

2.4. Content Validity

For content validity, the expert judges were provided with a rubric that evaluated each item’s sufficiency, clarity, relevance, and coherence and left room for observations. For each question, they indicated their degree of agreement on a four-point Likert scale, where 4 was the highest level of agreement and 1 the lowest. An item was considered valid, with acceptable discordance among the judges, when its mean overall rating was greater than 3 (reported as mean ± standard deviation, SD) [68]. Additionally, content validity across the ten expert judges was quantified using Aiken’s V coefficient (V). Following the study of George-Reyes and Valerio-Ureña [73], an item was approved when V ≥ 0.8 [69,70,71] and its confidence interval (CI) value was greater than 0.50 [72].
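The item-level decision rule described above can be sketched in Python as follows. This is a minimal illustration rather than the authors' procedure: the function names and the example ratings are hypothetical, and the use of a Wilson-type score interval for V, with its lower limit compared against 0.50, is an assumption, since the text does not state how the confidence interval was computed.

```python
import math
from statistics import mean, stdev

def aiken_v(ratings, low=1, high=4):
    """Aiken's V for one item: sum of (rating - low) divided by n * (high - low)."""
    n = len(ratings)
    return sum(r - low for r in ratings) / (n * (high - low))

def aiken_v_lower_limit(ratings, low=1, high=4, z=1.96):
    """Lower limit of a score-type confidence interval for V (assumed method)."""
    n, k = len(ratings), high - low
    v = aiken_v(ratings, low, high)
    nk = n * k
    root = math.sqrt(4 * nk * v * (1 - v) + z ** 2)
    return (2 * nk * v + z ** 2 - z * root) / (2 * (nk + z ** 2))

def item_is_approved(ratings, min_mean=3.0, min_v=0.8, min_ci=0.50):
    """Apply the three thresholds named in the text to a single item."""
    return (mean(ratings) > min_mean
            and aiken_v(ratings) >= min_v
            and aiken_v_lower_limit(ratings) > min_ci)

# Hypothetical ratings from ten judges on the 1-4 agreement scale.
example = [4, 4, 4, 3, 3, 4, 4, 3, 4, 4]
print("mean:", round(mean(example), 2), "SD:", round(stdev(example), 2))
print("V:", round(aiken_v(example), 3))
print("lower limit:", round(aiken_v_lower_limit(example), 3))
print("approved:", item_is_approved(example))
```

Keeping the three thresholds as parameters makes it easy to see which criterion an item sitting on the tolerance border fails.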

2.5. Pilot Participants

After validating the instrument’s content, a pilot test was conducted with pre-service and in-service natural science teachers. The non-probabilistic sample comprised 143 volunteer teachers who have taught in the area [74]. The average age of the participants was 36.7 ± 11.840 years. Women made up 51% of the participants, and the two most representative age ranges were between 21 and 35 years (36.6%) and between 35 and 50 years (42.7%). With regard to years of experience, the average was 11.447 ± 10.079. In this case, 13.3% reported less than a year; 25.9% between one and six years; 21% between 7 and 12 years; 25.2% between 13 and 24 years; and the remaining 14% reported more than 25 years of experience. Overall, 75.3% of the participants had an undergraduate degree in science teaching, whereas 8.5% belonged to the engineering field, and the others held degrees in the science field. It is important to mention that 47.6% of the participants hold a master’s degree and 4.9% have obtained a Ph.D.

2.6. Construct Validity

For construct validity, the data provided by the pilot test were analyzed using SPSS 25 software. First, the Kaiser-Meyer-Olkin (KMO) measure and Bartlett’s test were computed. KMO values higher than 0.7 show an adequate correspondence of the items with their categories and indicate that the instrument is a good candidate for factor analysis as a statistical strategy for scale validation [75]. Bartlett’s test, in turn, verifies that the variables are correlated when its significance value is less than 0.05. Based on these results, an exploratory factor analysis was carried out: factor discrimination of the scale was determined by principal component analysis, and varimax orthogonal rotation was used to analyze factor loadings. The validity of the scale was also examined by testing the item–total correlation of the scale with Pearson’s r.
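To make the two adequacy checks concrete, the following sketch computes the overall KMO measure and Bartlett's chi-square statistic from a correlation matrix using only the Python standard library. It is an illustration under stated assumptions, not the SPSS implementation used in the study: the helper names and the small correlation matrix are hypothetical, and the p-value is left out because it requires a chi-square distribution function.

```python
import math

def invert_and_det(matrix):
    """Gauss-Jordan inversion with partial pivoting; returns (inverse, determinant)."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular")
        if pivot != col:
            aug[col], aug[pivot] = aug[pivot], aug[col]
            det = -det
        pivot_value = aug[col][col]
        det *= pivot_value
        aug[col] = [v / pivot_value for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug], det

def kmo(corr):
    """Overall KMO: squared correlations relative to squared partial correlations."""
    inv, _ = invert_and_det(corr)
    p = len(corr)
    r2 = q2 = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                r2 += corr[i][j] ** 2
                q2 += partial ** 2
    return r2 / (r2 + q2)

def bartlett_sphericity(corr, n_obs):
    """Bartlett's test of sphericity: returns (chi-square statistic, degrees of freedom)."""
    _, det = invert_and_det(corr)
    p = len(corr)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(det)
    return chi2, p * (p - 1) // 2

# Hypothetical 3 x 3 correlation matrix; 143 matches the pilot sample size.
corr = [[1.0, 0.5, 0.5],
        [0.5, 1.0, 0.5],
        [0.5, 0.5, 1.0]]
print("KMO:", round(kmo(corr), 3))
print("Bartlett chi2, df:", bartlett_sphericity(corr, n_obs=143))
```

The chi-square statistic would then be compared with the critical value for the returned degrees of freedom to obtain the significance that the text compares against 0.05.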

2.7. Reliability

The instrument was analyzed through the reliability index given by Cronbach’s alpha coefficient, which summarizes the internal consistency of the items. For an adequate level of reliability, this coefficient should be higher than 0.7. Internal consistency coefficients were calculated to measure the reliability of the scale: Cronbach’s alpha reliability coefficient, the split-half reliability correlation, the Spearman–Brown formula, and the Guttman split-half reliability formula were used to determine the level of internal consistency [76].
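The coefficients named above can be sketched with the Python standard library as follows. The response matrix is hypothetical, and the odd/even split of the items is an assumption, since the text does not state how the halves were formed in SPSS.

```python
import math
from statistics import mean, variance

def cronbach_alpha(rows):
    """rows: one list of item scores per respondent."""
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x)
    sy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(sx * sy)

def split_half(rows):
    """Return (half correlation, Spearman-Brown, Guttman) for an odd/even split."""
    half_a = [sum(row[0::2]) for row in rows]  # items in positions 1, 3, 5, ...
    half_b = [sum(row[1::2]) for row in rows]  # items in positions 2, 4, 6, ...
    total = [a + b for a, b in zip(half_a, half_b)]
    r = pearson_r(half_a, half_b)
    spearman_brown = 2 * r / (1 + r)
    guttman = 2 * (1 - (variance(half_a) + variance(half_b)) / variance(total))
    return r, spearman_brown, guttman

# Hypothetical answers of five respondents to four items on the 1-5 scale.
responses = [
    [4, 5, 4, 5],
    [3, 3, 4, 3],
    [2, 2, 1, 2],
    [5, 4, 5, 5],
    [1, 2, 2, 1],
]
print("alpha:", round(cronbach_alpha(responses), 3))
print("split-half (r, Spearman-Brown, Guttman):",
      tuple(round(v, 3) for v in split_half(responses)))
```

Values above the 0.7 threshold mentioned in the text would be read as adequate reliability.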

3. Results and Discussion

3.1. Content Validity

Table 2 shows the results of the content validation of the instrument submitted to expert judgment. In the validation process, items 3, 10, and 21 were discarded because they did not meet the retention criteria. On the other hand, items 4, 5, 7, 8, 9, 11, 12, 14, 16, 17, 18, 19, 28, and 30 were restructured because they were on the tolerance border of 3 for the mean (X), 0.8 for Aiken’s V (VAiken), and 0.5 for the confidence interval (ICI). Similar studies in different fields of knowledge agree with the treatment of the data and the way the results presented here were obtained [70,73,77,78].

Table 2.

Results of content validation by expert judges.

The items were reformulated with full attention to the data provided by the experts when verifying the sufficiency, clarity, coherence, relevance, and overall evaluation of each item. Table 3 shows some of the most representative comments from their judgments (identified with the Jz# enumeration). Items 4, 7, and 9 are examples of restructuring in aspects of form, such as the mixture of typologies, wording, and the appropriate use of adjectives, and of recommendations such as the expansion of information and the observation of parity between items.

Table 3.

Restructuring of items after content validation by expert judges.

Thus, with this analysis provided by the judges in a qualitative and quantitative form, nine items were eliminated [79,80].

In general, from the evaluation of the instrument, it is essential to highlight that 80% of the judges considered the sufficiency, clarity, and coherence of the instrument to be between adequate and high. This means that the items are easy to understand, their syntax is appropriate, there is logical congruence between the items and the chosen categories, and, with the suggested changes, they are sufficient to obtain a measurement. On the other hand, 63.4% consider the instrument pertinent and closely related to the established purpose. These results highlight the relevance of having closer diagnostic evidence of self-awareness in conducting a natural science class with an interdisciplinary approach, which is the subject of this study. Similarly, other researchers have been concerned with delving into the STEAM phenomenon in the field of science [28,49].

Finally, as a result of the content validity of the instrument and the theoretical strengthening of the self-perception of the effectiveness of the use of the STEAM approach in the natural sciences classroom [28,49,62], 23 items organized in three categories and eight descriptors are consolidated (see Table 4). The categories were selected a priori and tested for construct validity in the modified instrument, as seen in Appendix A.

Table 4.

Instrument categories and descriptors.

3.2. Construct Validity

After the data were prepared, the KMO measure and Bartlett’s test were computed. The results are shown in Table 5. The KMO measure shows a good value, which confirms that the items are related to the selected descriptors and that they can be subjected to a factor analysis [81].

Table 5.

Kaiser-Meyer-Olkin (KMO) and Bartlett’s test processed in SPSS 25.

Bartlett’s test shows an appropriate significance value for the instrument. This result suggests that the variables analyzed are sufficiently correlated in the sample. Because the significance is less than 0.05, the instrument is a candidate for dimensional analysis [82].

In the first analysis, when the natural factor distribution was examined, five factors had eigenvalues above 1. However, a considerable part of the items gathered under three factors, whose eigenvalues were considerably larger. Thus, the factor analysis proceeded with a three-factor solution.

Exploratory factor analysis was carried out based on the obtained scores. Scale discrimination was determined from the factor loadings obtained by principal component analysis, using the varimax orthogonal rotation technique. The factor analysis completed in this study determined whether the items were grouped into the suggested factors [83,84,85,86]. To screen items whose loadings were divided among the proposed factors, the following criteria were considered in the principal component analysis: (a) items with factor loadings of less than 0.300 were excluded, and (b) the difference between an item’s loadings on different factors had to be at least 0.100 [83].
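One reading of these screening criteria is that an item is set aside when its highest loading is below 0.300 or when its two highest loadings differ by less than 0.100. The sketch below implements that reading; the interpretation, the function name, and the loadings are all assumptions for illustration and are not taken from the study's data.

```python
def screen_items(loadings, min_loading=0.300, min_gap=0.100):
    """Return {item: reason} for items that fail either screening criterion."""
    flagged = {}
    for item, values in loadings.items():
        ranked = sorted((abs(v) for v in values), reverse=True)
        if ranked[0] < min_loading:
            flagged[item] = "highest loading below threshold"
        elif len(ranked) > 1 and ranked[0] - ranked[1] < min_gap:
            flagged[item] = "cross-loading gap too small"
    return flagged

# Hypothetical rotated loadings on three factors.
loadings = {
    "item_a": [0.72, 0.15, 0.08],  # loads clearly on the first factor
    "item_b": [0.41, 0.38, 0.10],  # split between the first two factors
    "item_c": [0.22, 0.18, 0.25],  # weak on every factor
}
for item, reason in screen_items(loadings).items():
    print(item, "->", reason)
```

Absolute values are used so that a strong negative loading is treated like a strong positive one.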

Factor loadings are the basic criterion for evaluating the results of a factor analysis [87]. A high factor loading indicates that the variable can be assigned to the proposed factor [83].

Before rotation, the factor loadings of the 23 items of the scale were between 0.181 and 0.600; after varimax orthogonal rotation, they were between 0.425 and 0.775. The behavioral science literature suggests that explaining at least 40% of the total variance is sufficient [88,89]. In this study, the items and factors included in the scale explain 49.541% of the total variance, which exceeds this threshold.

Consequently, from these processes, Table 6 presents the respective results of the loadings of the 23 items that resulted from the scale according to the factors and their quantities related to their eigenvalues and variance.

Table 6.

Factor analysis results of the scale as per factors.

Table 6 displays the contribution of each factor to the total variance. The first seven items, which correspond to the preparation of the class, contribute 21%, while the next nine items, corresponding to the development of the class, contribute 15.2%. For the evaluation factor, a value of 13.3% was obtained. Thus, this factor analysis confirms the existence of an underlying construct in the instrument that groups most of the items in these factors.
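As a quick consistency check on these percentages, the sketch below adds the three contributions and converts each into an approximate sum of squared loadings. It assumes a principal component analysis of 23 standardized items, where the total variance equals the number of items; the function name is hypothetical, and the implied values are approximations derived from the rounded percentages, not figures reported in Table 6.

```python
N_ITEMS = 23
REPORTED_TOTAL_PCT = 49.541  # total variance explained, as reported in the text

# Rounded percentage contributions reported for each factor.
factor_pct = {"preparation": 21.0, "development": 15.2, "evaluation": 13.3}

def implied_sums_of_squares(percentages, n_items):
    """Approximate sum of squared loadings per factor: share of variance * items."""
    return {name: pct / 100 * n_items for name, pct in percentages.items()}

total_pct = sum(factor_pct.values())
print("sum of factor contributions:", round(total_pct, 1))
print("difference from reported total:", round(REPORTED_TOTAL_PCT - total_pct, 3))
for name, value in implied_sums_of_squares(factor_pct, N_ITEMS).items():
    print(name, round(value, 2))
```

The small gap between the summed contributions and the reported total is what one would expect from rounding each factor's share to one decimal place.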

Next, the correlation between the score of each item and the score of its factor was calculated, and the extent to which each item serves the general purpose of the scale was tested. The item–factor correlation values of each item are presented in Table 7.
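The item–factor correlation can be sketched as a Pearson correlation between each item's scores and the summed score of the factor it belongs to. The item names and responses below are hypothetical, and the factor score is assumed to include the item itself (an uncorrected correlation), which the text does not specify.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x)
    sy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(sx * sy)

def item_factor_correlations(responses, factor_items):
    """Correlate each item in a factor with that factor's summed score."""
    factor_scores = [sum(resp[item] for item in factor_items) for resp in responses]
    return {item: pearson_r([resp[item] for resp in responses], factor_scores)
            for item in factor_items}

# Hypothetical answers of five respondents to three items of one factor.
responses = [
    {"p1": 4, "p2": 5, "p3": 4},
    {"p1": 3, "p2": 3, "p3": 4},
    {"p1": 2, "p2": 2, "p3": 1},
    {"p1": 5, "p2": 4, "p3": 5},
    {"p1": 1, "p2": 2, "p3": 2},
]
for item, r in item_factor_correlations(responses, ["p1", "p2", "p3"]).items():
    print(item, round(r, 3))
```

A corrected variant would subtract the item's own score from the factor score before correlating, which typically lowers the coefficients slightly.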

Table 7.

Item–factor scores correlation analysis.

This confirms that the data set is grouped under common factors delimited by the initial eigenvalues. In particular, the eigenvalues of the factors (categories) of the instrument exceed unity, which supports the a priori determination made in the content validity, since the items statistically agree with and load on the model with three categories.

3.3. Reliability through Cronbach’s Alpha

The reliability analysis of each factor and of the global scale, calculated using Cronbach’s alpha coefficient, is shown in Table 8.

Table 8.

Reliability analysis according to the factors.

According to Table 8, these results might indicate that both the scale as a whole and its factors yield consistent measurements.

The item-total statistics were evaluated to determine whether excluding any item would increase Cronbach’s alpha. However, the change would be insignificant, and removing an item would risk losing some information. This result indicates that the items in the instrument, based on the results obtained in the sample, have a high correlation and favorable internal consistency [90].

4. Conclusions

Considering the obtained results, the five-point Likert-type scale can be grouped into 23 items and three factors. The evaluation by expert judges using Aiken’s V and an exploratory factor analysis were carried out in order to verify the structure of the instrument. In this context, the scale has structural validity, as evidenced by the factor analysis, the factor loadings, and the share of the total variance explained by the factors. Thus, based on the results of the exploratory factor analysis, the correlation between the score achieved for each item and the score achieved for the factor to which the item belongs was adequate [88].

The correlation between each item of the scale and the score of the factor to which it corresponds ranges between 0.590 and 0.770 for the preparation, 0.488 and 0.799 for the development, and 0.461 and 0.764 for the evaluation of the class with the STEAM approach. In this sense, it can be maintained that each factor, together with its items, fulfills a significant function in measuring the quality of the scale in general, where each item discriminates at the expected level.

The internal consistency coefficient was calculated using Cronbach’s alpha, and the value obtained was 0.920. In turn, the reliability coefficients of the factors ranged between 0.782 and 0.813. Therefore, the scale can perform reliable measurements according to these values. As a result, this instrument is a valid and reliable scale that can be used to determine the self-perception of natural science teachers regarding the use of the STEAM approach.

The validation process of the instrument has yielded data that allow for the conclusion that the “Self-perception of natural science teachers about pedagogical practices with STEAM approach” survey has a high metric quality for evaluating the self-perception that natural science teachers have regarding the use of the STEAM approach when planning, developing, and evaluating one of their classes.

The items correlate appropriately with their categories according to the content and construct validity indicators. Moreover, from the statistical point of view, it is demonstrated that the items are grouped into common factors that correspond to the three categories considered in the content validity, which gives the instrument a multidimensional quality.

Its high reliability indicates that it is a consistent instrument, since its significance, coherence, syntax, and content are distinctive, as evidenced by the high correlation between its variables. This reliability result confirms that the items have a high relationship with the descriptors. It allows the interpretation of the data to be valid and supports an understanding of the teacher’s self-perception regarding science classes, generating reflection processes and thus strengthening their performance. The instrument could be adapted in a similar way in international environments, where self-perception influences the thinking patterns, emotionality, and actions teachers consider for their classroom practices.

It is hoped that this instrument can inform future research on natural science teachers’ reflection on the use of the STEAM approach in their pedagogical practices and on whether these practices impact the design of their classes in terms of planning, development, and evaluation.

Author Contributions

A.B.-B. and E.C.-T.; Methodology, A.B.-B. and E.C.-T.; Validation, A.B.-B. and E.C.-T.; Formal analysis, A.B.-B. and E.C.-T.; Investigation, A.B.-B. and E.C.-T.; Writing—original draft, E.C.-T.; Writing—review & editing, A.B.-B. and E.C.-T.; Supervision, A.B.-B.; Project administration, A.B.-B. and E.C.-T.; Funding acquisition, A.B.-B. and E.C.-T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Comité Ético Científico de la Facultad de Educación de la Universidad Antonio Nariño de Bogotá, Colombia.

Informed Consent Statement

Written informed consent was obtained from the participants to publish this paper. Statements were approved by the Institutional Review Board (or Ethics Committee) of Antonio Nariño University of Bogotá, Colombia (protocol code 02, 18 April 2023).

Data Availability Statement

Due to privacy and confidentiality issues, the data are not available.

Conflicts of Interest

The authors declare no conflict of interest.

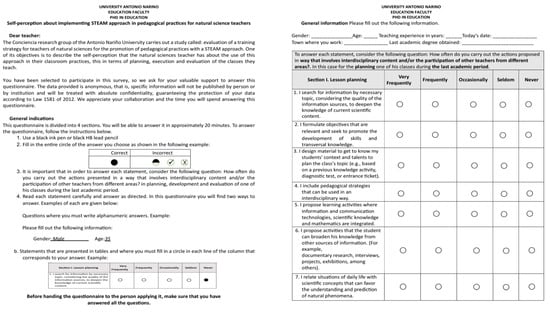

Appendix A

Figure A1.

Final instrument.

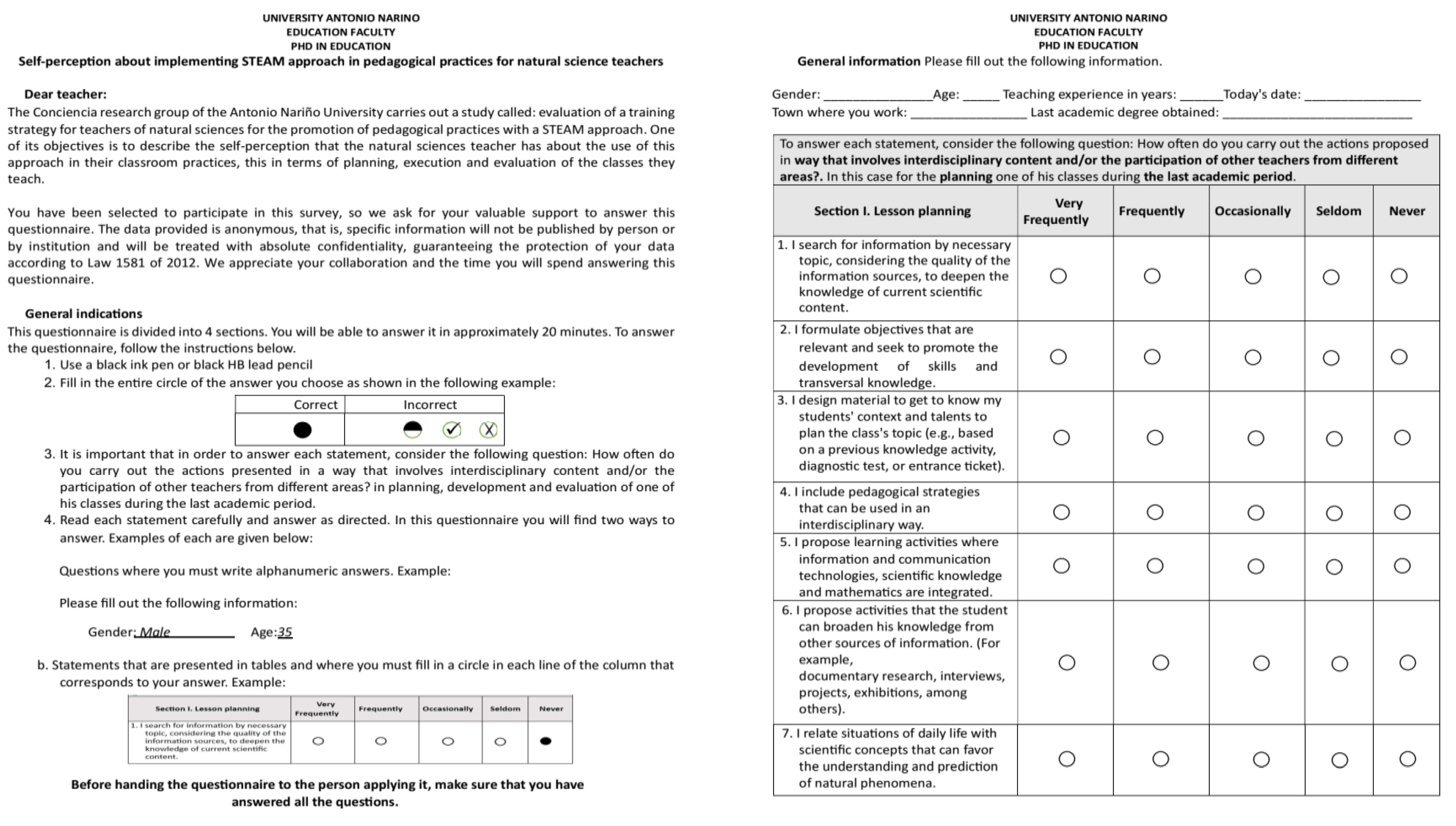


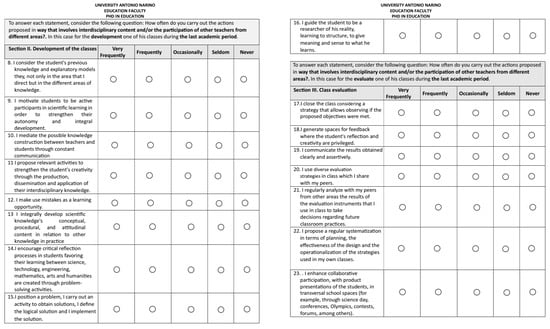

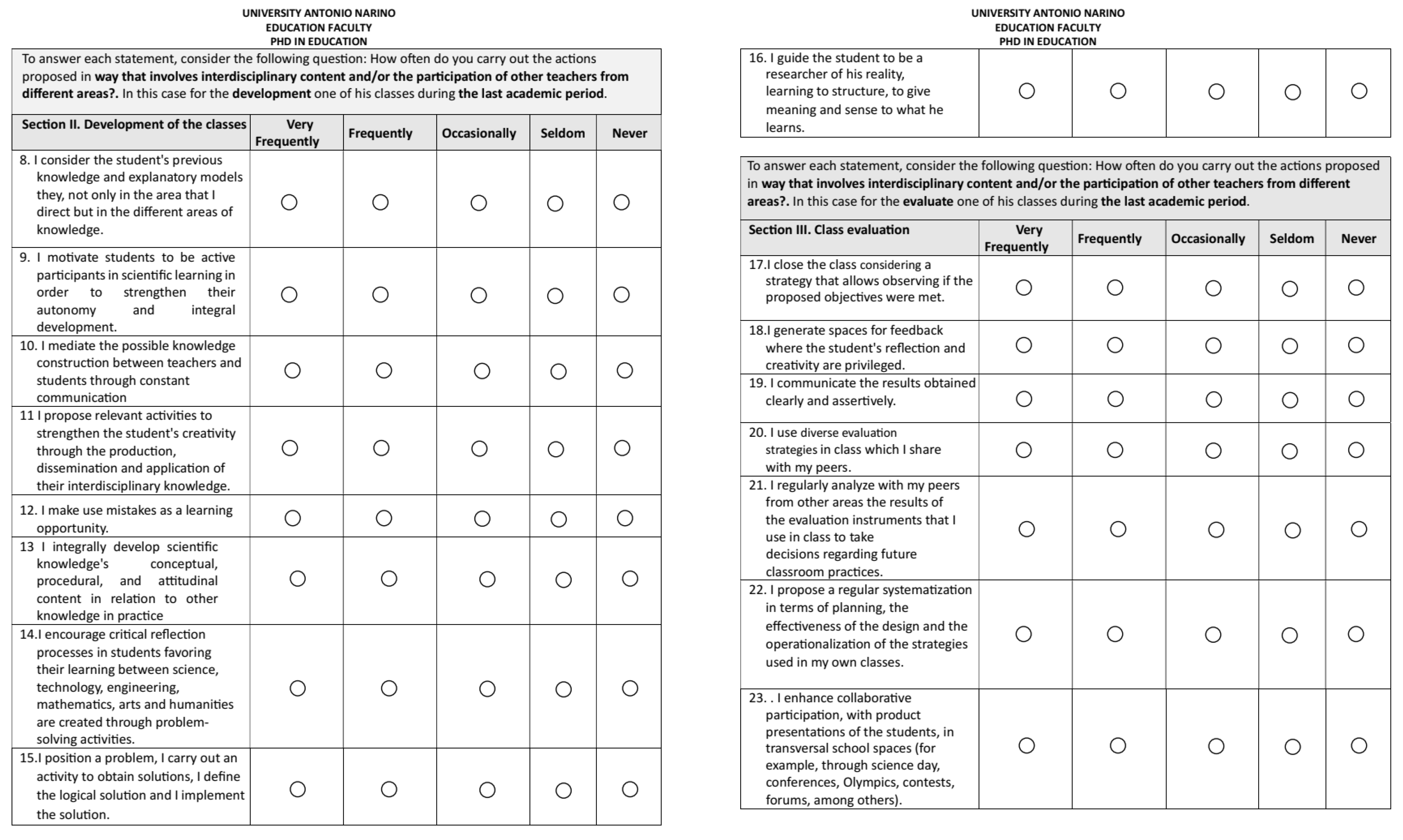

Figure A2.

Final instrument.


References

- Yakman, G.; Lee, H. Exploring the Exemplary STEAM Education in the U.S. as a Practical Educational Framework for Korea. J. Korean Assoc. Sci. Educ. 2012, 32, 1072–1086. [Google Scholar] [CrossRef]

- Toma, R.; García-Carmona, A. Of STEM we like everything but STEM. A critical analysis of a buzzing educational trend. Enseñanza Las Cienc. 2021, 39, 65–80. [Google Scholar] [CrossRef]

- Greca, I.; Ortiz-Revilla, J.; Arriassecq, I. Diseño y evaluación de una secuencia de enseñanza-aprendizaje STEAM para Educación Primaria. Rev. Eureka Sobre Ensen. Divulg. Cienc. 2021, 18, 1–20. [Google Scholar] [CrossRef]

- Vega, F.; Morales, S.; Ticona, R.; Gonzales-Macavilca, M.; Iraola-Real, I. Results between STEM and non-STEM Teaching for Integral Learning in Primary School Children in Lima (Peru). In Proceedings of the 2019 IEEE Sciences and Humanities International Research Conference (SHIRCON), Lima, Peru, 13–15 November 2019. [Google Scholar] [CrossRef]

- Harris, A.; de Bruin, L. Secondary school creativity, teacher practice and STEAM education: An international study. J. Educ. Chang. 2017, 19, 153–179. [Google Scholar] [CrossRef]

- Liao, X.; Luo, H.; Xiao, Y.; Ma, L.; Li, J.; Zhu, M. Learning Patterns in STEAM Education: A Comparison of Three Learner Profiles. Educ. Sci. 2022, 12, 614. [Google Scholar] [CrossRef]

- Anisimova, T.I.; Sabirova, F.M.; Shatunova, O.V. Formation of design and research competencies in future teachers in the framework of STEAM education. Int. J. Emerg. Technol. Learn. 2020, 15, 204–217. [Google Scholar] [CrossRef]

- Lage-Gómez, C.; Ros, G. Transdisciplinary integration and its implementation in primary education through two STEAM projects (La integración transdisciplinar y su aplicación en Educación Primaria a través de dos proyectos STEAM). J. Study Educ. Dev. 2021, 44, 801–837. [Google Scholar] [CrossRef]

- Ortiz-Revilla, J.; Sanz-Camarero, R.; Greca, I. Una mirada crítica a los modelos teóricos sobre educación STEAM integrada. Rev. Iberoam. Educ. 2021, 87, 13–33. [Google Scholar] [CrossRef]

- Perignat, E.; Katz-Buonincontro, J. STEAM in practice and research: An integrative literature review. Think. Ski. Creat. 2019, 31, 31–43. [Google Scholar] [CrossRef]

- Radloff, J.; Guzey, S. Investigating Preservice STEM Teacher Conceptions of STEM Education. J. Sci. Educ. Technol. 2016, 25, 759–774. [Google Scholar] [CrossRef]

- Chu, W.W.; Ong, E.T.; Ayop, S.K.; Azmi, M.S.M.; Abdullah, A.S.; Karim, N.S.A.; Tho, S.W. The innovative use of smartphone for sound STEM practical kit: A pilot implementation for secondary classroom. Res. Sci. Technol. Educ. 2021, 39, 1–23. [Google Scholar] [CrossRef]

- Dare, E.A.; Ring-Whalen, E.A.; Roehrig, G.H. Creating a continuum of STEM models: Exploring how K-12 science teachers conceptualize STEM education. Int. J. Sci. Educ. 2019, 41, 1701–1720. [Google Scholar] [CrossRef]

- Schwortz, A.C.; Burrows, A.C. Authentic science experiences with STEM datasets: Post-secondary results and potential gender influences. Res. Sci. Technol. Educ. 2021, 39, 347–367. [Google Scholar] [CrossRef]

- Hong, O. STEAM Education in Korea: Current Policies and Future Directions Science and Technology Trends Policy Trajectories and Initiatives in STEM Education STEAM Education in Korea: Current Policies and Future Directions. Sci. Technol. Trends 2017, 8, 92–102. [Google Scholar]

- Shernoff, D.J.; Sinha, S.; Bressler, D.M.; Ginsburg, L. Assessing teacher education and professional development needs for the implementation of integrated approaches to STEM education. Int. J. STEM Educ. 2017, 4, 13. [Google Scholar] [CrossRef]

- Quigley, C.F.; Herro, D.; Shekell, C.; Cian, H.; Jacques, L. Connected Learning in STEAM Classrooms: Opportunities for Engaging Youth in Science and Math Classrooms. Int. J. Sci. Math. Educ. 2020, 18, 1441–1463. [Google Scholar] [CrossRef]

- White, D.; Delaney, S. Full STEAM ahead, but who has the map for integration?—A PRISMA systematic review on the incorporation of interdisciplinary learning into schools. LUMAT 2021, 9, 9–32. [Google Scholar] [CrossRef]

- Sun, Y.; Ni, C.C.; Kang, Y.Y. Comparison of Four Universities on Both Sides of the Taiwan Strait Regarding the Cognitive Differences in the Transition from STEM to STEAM in Design Education. Educ. Sci. 2023, 13, 241. [Google Scholar] [CrossRef]

- Smith, K.L.; Rayfield, J.; McKim, B.R. Effective Practices in STEM Integration: Describing Teacher Perceptions and Instructional Method Use. J. Agric. Educ. 2015, 56, 182–201. [Google Scholar] [CrossRef]

- Montés, N.; Zapatera, A.; Ruiz, F.; Zuccato, L.; Rainero, S.; Zanetti, A.; Gallon, K.; Pacheco, G.; Mancuso, A.; Kofteros, A.; et al. A Novel Methodology to Develop STEAM Projects According to National Curricula. Educ. Sci. 2023, 13, 169. [Google Scholar] [CrossRef]

- Kelley, T.R.; Knowles, J.G. A conceptual framework for integrated STEM education. Int. J. STEM Educ. 2016, 3, 1. [Google Scholar] [CrossRef]

- Siew, N.M.; Amir, N.; Chong, C.L. The perceptions of pre-service and in-service teachers regarding a project-based STEM approach to teaching science. Springerplus 2015, 4, 8. [Google Scholar] [CrossRef]

- Vale, I.; Barbosa, A.; Peixoto, A.; Fernandes, F. Solving Problems through Engineering Design: An Exploratory Study with Pre-Service Teachers. Educ. Sci. 2022, 12, 889. [Google Scholar] [CrossRef]

- Jamil, F.M.; Linder, S.M.; Stegelin, D.A. Early Childhood Teacher Beliefs About STEAM Education After a Professional Development Conference. Early Child. Educ. J. 2018, 46, 409–417. [Google Scholar] [CrossRef]

- Kwak, Y. Secondary school science teacher education and quality control in Korea based on the teacher qualifications and the teacher employment test in Korea. Asia-Pacific Sci. Educ. 2019, 5, 14. [Google Scholar] [CrossRef]

- Martín-Páez, T.; Aguilera, D.; Perales-Palacios, F.J.; Vílchez-González, J.M. What are we talking about when we talk about STEM education? A review of literature. Sci. Educ. 2019, 103, 799–822. [Google Scholar] [CrossRef]

- Romero-Ariza, M.; Quesada, A.; Abril, A.-M.; Cobo, C. Changing teachers’ self-efficacy, beliefs and practices through STEAM teacher professional development (Cambios en la autoeficacia, creencias y prácticas docentes en la formación STEAM de profesorado). J. Study Educ. Dev. 2021, 44, 942–969.

- Alghamdi, A.A. Exploring Early Childhood Teachers’ Beliefs About STEAM Education in Saudi Arabia. Early Child. Educ. J. 2022, 51, 247–256.

- Boice, K.L.; Jackson, J.R.; Alemdar, M.; Rao, E.; Grossman, S. Supporting Teachers on Their STEAM Journey: A Collaborative STEAM Teacher Training Program. Educ. Sci. 2021, 11, 105.

- Chen, B.; Chen, L. Examining the sources of high school chemistry teachers’ practical knowledge of teaching with practical work: From the teachers’ perspective. Chem. Educ. Res. Pract. 2021, 22, 476–485.

- DeCoito, I.; Myszkal, P. Connecting Science Instruction and Teachers’ Self-Efficacy and Beliefs in STEM Education. J. Sci. Teacher Educ. 2018, 29, 485–503.

- Lu, S.Y.; Lo, C.C.; Syu, J.Y. Project-based learning oriented STEAM: The case of micro–bit paper-cutting lamp. Int. J. Technol. Des. Educ. 2021, 32, 2553–2575.

- Perales, F.J.; Aróstegui, J.L. The STEAM approach: Implementation and educational, social and economic consequences. Arts Educ. Policy Rev. 2021, 1–9.

- Zharylgassova, P.; Assilbayeva, F.; Saidakhmetova, L.; Arenova, A. Psychological and pedagogical foundations of practice-oriented learning of future STEAM teachers. Think. Ski. Creat. 2021, 41, 100886, (Retracted).

- Valovičová, L.; Ondruška, J.; Zelenický, L.; Chytrý, V.; Medová, J. Enhancing computational thinking through interdisciplinary steam activities using tablets. Mathematics 2020, 8, 2128.

- Marques, D.; Neto, T.B.; Guerra, C.; Viseu, F.; Aires, A.P.; Mota, M.; Ravara, A. A STEAM Experience in the Mathematics Classroom: The Role of a Science Cartoon. Educ. Sci. 2023, 13, 392.

- Kalaitzidou, M.; Pachidis, T.P. Recent Robots in STEAM Education. Educ. Sci. 2023, 13, 272.

- Domènech-Casal, J.; Lope, S.; Mora, L. Qué proyectos STEM diseña y qué dificultades expresa el profesorado de secundaria sobre Aprendizaje Basado en Proyectos. Rev. Eureka Sobre Ensen. Divulg. Cienc. 2019, 16, 2203.

- Margot, K.C.; Kettler, T. Teachers’ perception of STEM integration and education: A systematic literature review. Int. J. STEM Educ. 2019, 6, 2.

- Abd-El-Khalick, F. Teaching with and About Nature of Science, and Science Teacher Knowledge Domains. Sci. Educ. 2013, 22, 2087–2107.

- Borda, E.; Schumacher, E.; Hanley, D.; Geary, E.; Warren, S.; Ipsen, C.; Stredicke, L. Initial implementation of active learning strategies in large, lecture STEM courses: Lessons learned from a multi-institutional, interdisciplinary STEM faculty development program. Int. J. STEM Educ. 2020, 7, 4.

- Mäkelä, T.; Tuhkala, A.; Mäki-Kuutti, M.; Rautopuro, J. Enablers and Constraints of STEM Programme Implementation: An External Change Agent Perspective from a National STEM Programme in Finland. Int. J. Sci. Math. Educ. 2022, 21, 969–991.

- Wong, J.T.; Bui, N.N.; Fields, D.T.; Hughes, B.S. A Learning Experience Design Approach to Online Professional Development for Teaching Science through the Arts: Evaluation of Teacher Content Knowledge, Self-Efficacy and STEAM Perceptions. J. Sci. Teacher Educ. 2022, 1–31.

- Agarwal, S.; Kaushik, J.S. Student’s Perception of Online Learning during COVID Pandemic. Indian J. Pediatr. 2020, 87, 554.

- Cash, K.M. Teacher self-perceptions and student academic engagement in elementary school mathematics. Electron. Theses Diss. 2016, 1–93.

- Bandura, A. Self-efficacy mechanism in human agency. Am. Psychol. 1982, 37, 122–147.

- Wang, H.; Moore, T.J.; Roehrig, G.H.; Park, M.S. STEM Integration: Teacher Perceptions and Practice. J. Pre-Coll. Eng. Educ. Res. 2011, 1, 2.

- Herro, D.; Quigley, C. Exploring teachers’ perceptions of STEAM teaching through professional development: Implications for teacher educators. Prof. Dev. Educ. 2017, 43, 416–438.

- Al Salami, M.K.; Makela, C.J.; de Miranda, M.A. Assessing changes in teachers’ attitudes toward interdisciplinary STEM teaching. Int. J. Technol. Des. Educ. 2017, 27, 63–88.

- EL-Deghaidy, H.; Mansour, N.; Alzaghibi, M.; Alhammad, K. Context of STEM integration in schools: Views from in-service science teachers. Eurasia J. Math. Sci. Technol. Educ. 2017, 13, 2459–2484.

- Park, H.J.; Byun, S.Y.; Sim, J.; Han, H.; Baek, Y.S. Teachers’ perceptions and practices of STEAM education in South Korea. Eurasia J. Math. Sci. Technol. Educ. 2016, 12, 1739–1753.

- Park, M.H.; Dimitrov, D.M.; Patterson, L.G.; Park, D.Y. Early childhood teachers’ beliefs about readiness for teaching science, technology, engineering, and mathematics. J. Early Child. Res. 2017, 15, 275–291.

- Bossolasco, M.; Chiecher, A.; Santos, D.D. Profiles of access and appropriation of ICT in freshmen students. Comparative study in two Argentine public universities. Píxel-BIT Rev. Medios Educ. 2022, 57, 151–172.

- Baek, E.O.; Sung, Y.H. Pre-service teachers’ perception of technology competencies based on the new ISTE technology standards. J. Digit. Learn. Teach. Educ. 2020, 37, 48–64.

- Haatainen, O.; Turkka, J.; Aksela, M. Science Teachers’ Perceptions and Self-Efficacy Beliefs Related to Integrated Science Education. Educ. Sci. 2021, 11, 272.

- Queiruga-Dios, M.Á.; López-Iñesta, E.; Diez-Ojeda, M.; Sáiz-Manzanares, M.C.; Vázquez-Dorrío, J.B. Implementation of a STEAM project in compulsory secondary education that creates connections with the environment (Implementación de un proyecto STEAM en Educación Secundaria generando conexiones con el entorno). J. Study Educ. Dev. 2021, 44, 871–908.

- Diego-Mantecon, J.M.; Prodromou, T.; Lavicza, Z.; Blanco, T.F.; Ortiz-Laso, Z. An attempt to evaluate STEAM project-based instruction from a school mathematics perspective. ZDM Math. Educ. 2021, 53, 1137–1148.

- Lee, Y.; Paik, S.-H.; Kim, S.-W. A Study on Teachers Practices of STEAM Education in Korea. Int. J. Pure Appl. Math. 2018, 118, 2339–2365.

- Ozkan, G.; Topsakal, U.U. Investigating the effectiveness of STEAM education on students’ conceptual understanding of force and energy topics. Res. Sci. Technol. Educ. 2021, 39, 441–460.

- Park, W.; Cho, H. The interaction of history and STEM learning goals in teacher-developed curriculum materials: Opportunities and challenges for STEAM education. Asia Pac. Educ. Rev. 2022, 23, 457–474.

- Espinosa-Ríos, E.A. La formación docente en los procesos de mediación didáctica. Praxis 2016, 12, 90.

- Creswell, J.W. Educational Research: Planning, Conducting and Evaluating Quantitative and Qualitative Research, 4th ed.; University of Nebraska–Lincoln: Boston, MA, USA, 2012.

- Sampieri, R.H.; Collado, C.F.; Lucio, P.B. Metodología de la Investigación, 6th ed.; Carlos Fernandez collado, Pilar Batista Lucio: Ciudad de México, Mexico, 2014.

- Simms, L.J.; Kerry, Z.; Williams, T.F.; Bernstein, L. Does the Number of Response Options Matter? Psychometric Perspectives Using Personality Questionnaire Data. Am. Psychol. Assoc. 2019, 31, 557–566.

- Mundfrom, D.J.; Shaw, D.G.; Ke, T.L. Minimum Sample Size Recommendations for Conducting Factor Analyses. Int. J. Test. 2005, 5, 159–168.

- Sampieri, P.; Fernández, R.; Baptista, C. Selección de la muestra. Metodol. Investig. 2010, 75. Available online: www.elosopanda.com (accessed on 5 June 2023).

- Calonge-Pascual, S.; Fuentes-Jiménez, F.; Mallén, J.A.C.; González-Gross, M. Design and validity of a choice-modeling questionnaire to analyze the feasibility of implementing physical activity on prescription at primary health-care settings. Int. J. Environ. Res. Public Health 2020, 17, 6627.

- Escurra, L.M. Cuantificación de la validez de contenido por criterio de jueces. Rev. Psicol. 1969, 6, 103–111.

- Mamani-Vilca, E.M.; Pelayo-Luis, I.P.; Guevara, A.T.; Sosa, J.V.C.; Carranza-Esteban, R.F.; Huancahuire-Vega, S. Validation of a questionnaire that measures perceptions of the role of community nursing professionals in Peru. Aten. Primaria 2022, 54, 102194.

- Merino, C.; Livia, J. Intervalos de confianza asimétricos para el índice la validez de contenido: Un programa Visual Basic para la V de Aiken. An. Psicol. 2009, 25, 169–171.

- Wilcox, R.R.; Serang, S. Hypothesis Testing, p Values, Confidence Intervals, Measures of Effect Size, and Bayesian Methods in Light of Modern Robust Techniques. Educ. Psychol. Meas. 2017, 77, 673–689.

- George-Reyes, C.E.; Valerio-Ureña, G. Validación de un instrumento para medir las competencias digitales docentes en entornos no presenciales emergentes. Edutec. Rev. Electron. Tecnol. Educ. 2022, 80, 181–197.

- Sakaluk, J.K.; Short, S.D. A Methodological Review of Exploratory Factor Analysis in Sexuality Research: Used Practices, Best Practices, and Data Analysis Resources. J. Sex Res. 2017, 54, 1–9.

- Barinas, G.; Cañada, F.; Costillo, E.; Amórtegui, E. Validación de un instrumento de creencias sobre las ciencias naturales escolares en educación primaria. Prax. Saber 2022, 13, e14147.

- Taber, K.S. The Use of Cronbach’s Alpha When Developing and Reporting Research Instruments in Science Education. Res. Sci. Educ. 2018, 48, 1273–1296.

- Aidé, L.; Alarcón, G.; Arturo, J.; Trápaga, B.; Navarro, R.E. Validez de contenido por juicio de expertos: Propuesta de una herramienta virtual (Content validity by experts judgment: Proposal for a virtual tool). Apertura 2017, 9, 42–53.

- Collet, C.; Nascimento, J.V.; Folle, A.; Ibáñez, S.J. Desing and validation of an instrument for analysis of sportive formation in volleyball. Cuad. Psicol. Deport. 2018, 19, 178–191.

- Juárez-Hernández, L.G.; Tobón, S. Analysis of the elements implicit in the validation of the content of a research instrument. Espacios 2018, 39, 1–7.

- Wiersma, L. Measurement in physical education and exercise science conceptualization and development of the sources of enjoyment in youth sport questionnaire. Meas. Phys. Educ. Exerc. Sci. 2001, 5, 153–177.

- Gonzalez-Fernández, M.O.; González-Flores, Y.A.; Muñoz-López, C. Panorama de la robótica educativa a favor del aprendizaje STEAM. Rev. Eureka Sobre Ensen. Divulg. Cienc. 2021, 18, 230101–230123.

- Hancock, G.J.; Mueller, R.S.P.; Stapleton, L.M. The Reviewer’s Guide to Quantitative Methods in the Social Sciences, 2nd ed.; Routledge: New York, NY, USA, 2019.

- Osborne, J.W. What is rotating in exploratory factor analysis? Pract. Assess. Res. Eval. 2015, 20, 1–7.

- Williams, B.; Onsman, A.; Brown, T. Exploratory factor analysis: A five-step guide for novices. J. Emerg. Prim. Health Care 2010, 8, 1–13.

- Howard, M.C. A systematic literature review of exploratory factor analyses in management. J. Bus. Res. 2023, 164, 113969.

- Gul, D.R.; Ahmad, D.I.; Tahir, D.T.; Ishfaq, D.U. Development and factor analysis of an instrument to measure service-learning management. Heliyon 2022, 8, e09205.

- Zhang, G.; Hattori, M.; Trichtinger, L.A.; Wang, X. Target rotation with both factor loadings and factor correlations. Psychol. Methods 2019, 24, 390–402.

- Korkmaz, Ö.; Çakır, R.; Erdoğmuş, F.U. A validity and reliability study of the Basic STEM Skill Levels Perception Scale. Int. J. Psychol. Educ. Stud. 2020, 7, 111–121.

- Korkmaz, Ö.; Bai, X. Adapting computational thinking scale (CTS) for Chinese high school students and their thinking scale skills level. Particip. Educ. Res. 2019, 6, 10–26.

- Elosua, P.; Egaña, M. Psicometría Aplicada: Guía Para el Análisis de Datos y Escalas con Jamovi; UPV/EHU: Bilbo, Spain, 2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).