Ensuring Academic Integrity and Trust in Online Learning Environments: A Longitudinal Study of an AI-Centered Proctoring System in Tertiary Educational Institutions

Abstract

1. Introduction

2. Method and Context of the Study

2.1. Research Methodology

2.2. Background of Participating HEIs

3. Insights on Stakeholders’ Views and Experiences in Applying Online Examinations

3.1. Research Questions

3.2. Sampling and Procedure

3.3. Analysis of Results

3.3.1. Perceived Credibility of Online Examinations (RQ1)

3.3.2. Identification of Impersonation Threat Scenarios (RQ2)

3.3.3. Identification of Communication, Collaboration and Resource Access Threat Scenarios (RQ3)

4. Rating of Threat Scenarios

4.1. Sampling and Procedure

4.2. Rating of Impersonation Threats

4.3. Rating of Communication, Collaboration and Resource Access Threats

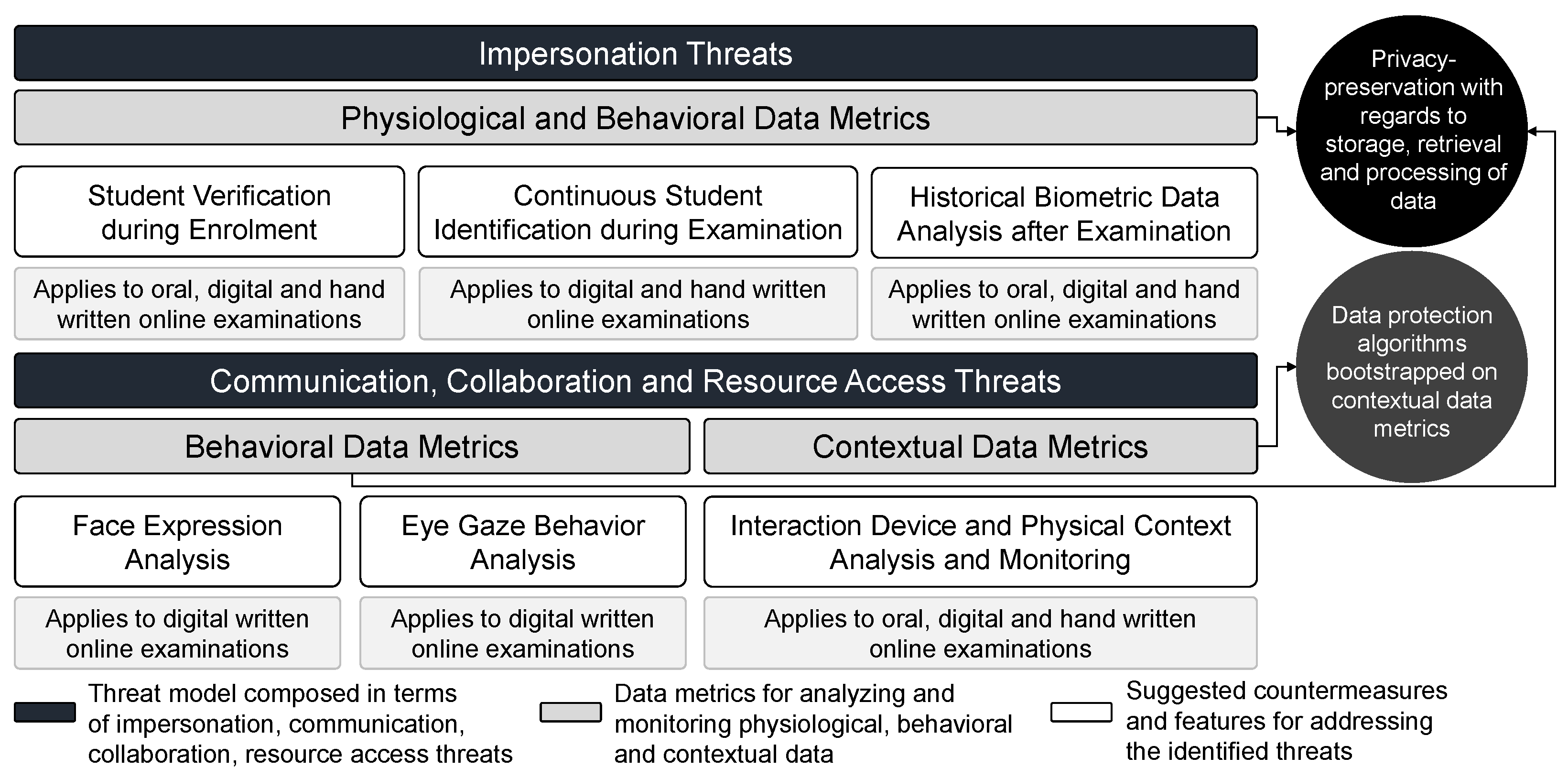

5. Threat Model, Data Metrics and Countermeasures

5.1. Addressing Impersonation Threats through Physiological Data Analysis

5.2. Addressing Communication, Collaboration and Resource Access Threats through Behavioral and Contextual Data Analysis

6. Feasibility Study of an Intelligent and Continuous Online Student Identity Management System: Implementation and User Evaluation

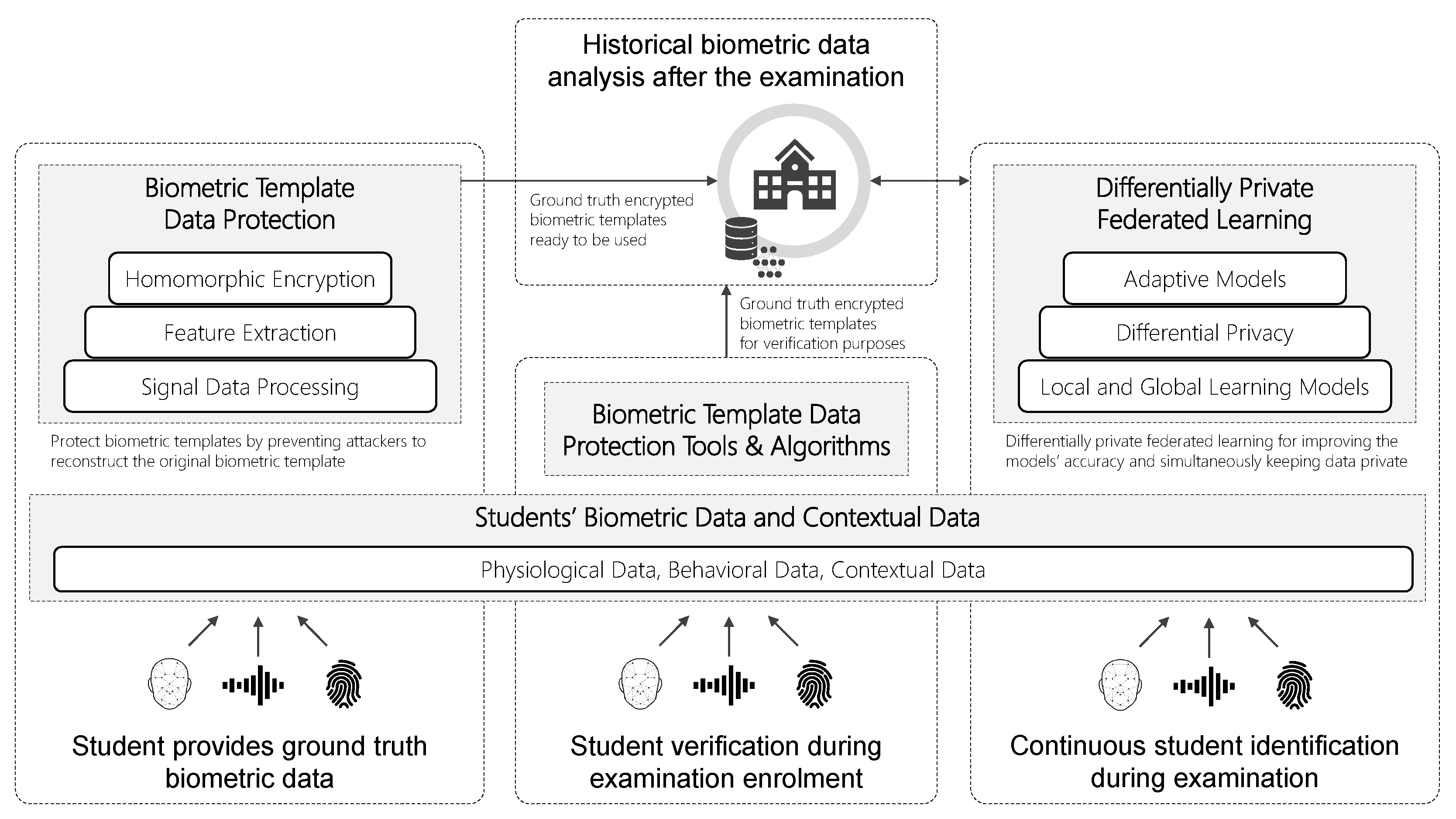

6.1. Conceptual and Architectural Design of the TRUSTID Framework

6.2. User Evaluation of TRUSTID

6.3. Analysis of Results

7. Privacy Preservation Issues and Challenges in Storing, Retrieving and Processing Biometric Data of Students

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Stakeholder Semi-Structured Interview Discussion Themes and Questions

Appendix A.1. Prerequisites—Guidelines to the Interviewer

Appendix A.2. Discussion Themes and Questions

Appendix A.2.1. Initial Profiling and Acquaintance (Approximately 5–10 min)

- Could you please tell us about your background and position in your organization? [open-ended]

- Which Learning Management System (LMS) does your University currently use? [open-ended]

- Which authentication types does your University currently deploy? [open-ended]

- What is the current authentication policy? [open-ended]

Appendix A.2.2. Experience and Trust with Regard to Critical Online Academic Activities (Approximately 30–40 min)

- Please describe the best and worst experiences you had with regard to critical online academic activities (examinations, laboratory work) during the COVID-19 period. [open-ended]

- Do you believe that your organization’s procedure for critical online academic activities (examinations, laboratory work) was trustworthy during the COVID-19 period? Justify your answer. [Likert scale (1–5), from Not Trustworthy at All to Extremely Trustworthy; open-ended]

- How much do you trust that the grade a student receives actually reflects the student’s performance? Justify your answer. [Likert scale (1–5), from Not Trustworthy at All to Extremely Trustworthy; open-ended]

- Share your experiences, from the COVID-19 semesters, of threats related to student identification and verification during critical academic activities. [open-ended]

- Based on your experience with online examinations during the COVID-19 period, identify important threat scenarios. [open-ended]

- Describe specific cases in which you encountered impersonation by students. [open-ended]
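Two of the questions above pair a 1–5 Likert rating with an open-ended justification. The sketch below shows one conventional way such ratings might be summarized; it is not the study's analysis procedure. It assumes each answer is stored as an integer from 1 to 5 and, because Likert data are ordinal, reports the median and the full frequency distribution instead of a mean. The function name `summarize_likert` and the example ratings are hypothetical.

```python
from collections import Counter
from statistics import median

# 1-5 scale as used in the questionnaire ("Not Trustworthy at All" = 1,
# "Extremely Trustworthy" = 5).
SCALE = range(1, 6)


def summarize_likert(responses):
    """Summarize one Likert item given as a list of integer ratings.

    Likert ratings are ordinal, so the summary reports the median and the
    full frequency distribution rather than a mean.
    """
    if not responses:
        raise ValueError("no responses")
    for r in responses:
        if r not in SCALE:
            raise ValueError(f"rating out of range: {r!r}")
    counts = Counter(responses)
    return {
        "n": len(responses),
        "median": median(responses),
        # Include every scale point so unused ratings appear as 0.
        "distribution": {point: counts[point] for point in SCALE},
    }


# Hypothetical ratings for one question (illustrative only, not study data).
summary = summarize_likert([2, 3, 3, 4, 2, 1, 3])
print(summary)
```

Reporting every scale point, including empty ones, keeps distributions from different stakeholder groups directly comparable.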

Appendix B. Summary of Threats, Countermeasures and Features

| Identified Threats | Threat Scenario Descriptions, Relevant Countermeasures and Features |
|---|---|
| Student violating identification proofs | A student replaces the photograph on the identity card with an imposter’s photograph or changes the details on the identity card. |
| | Countermeasure #1: Student identification and verification; Feature #1: Face- or voice-based identification, and comparison of the student’s face characteristics with the picture on the student’s identity card |
| Student switching seats after identification | A student is correctly identified and verified and then switches seats with an imposter during the examination session. |
| | Countermeasure #2: Continuous student identification; Countermeasure #3: Data analytics for historically based impersonation; Feature #2: Continuous scanning of the student’s face characteristics using the web camera and/or recording of the student’s voice signals with the microphone; Feature #3: Detect authentic vs. pre-recorded input video streams; Feature #6: Perform offline data analytics to detect historically based impersonation cases; Feature #7: Comparison of the student’s handwriting style with previously submitted handwriting |
| Non-legitimate person provides answers either digitally or handwritten | Another, non-legitimate person is concurrently logged in to the LMS and provides answers, either digitally or handwritten, or uploads general examination material. |
| | Countermeasure #2: Continuous student identification; Feature #4: Monitoring the student’s login sessions; Feature #5: Monitoring the student’s interaction device |
| Computer-mediated communication through voice or text-written chat | A student remotely communicates with another person through voice or text chat, using either the computing device used for the examination or another computing device. Alternatively, another person co-listens to an examination question within an oral examination and then provides answers through text or voice communication, again using either the examination device or another computing device. |
| | Countermeasure #4: Monitor the student’s digital context; Countermeasure #5: Monitor the student’s behavior within the physical context; Feature #8: Monitoring and blocking communication and/or collaboration applications; Feature #9: Monitoring and blocking access to specific websites; Feature #10: Keyboard keystroke and computer mouse click analysis; Feature #12: Monitor voice signals, contextual sound and ambient sound; Feature #13: Face behavior and expression analysis of the student; Feature #14: Eye gaze fixations and behavior analysis of the student |
| Computer-mediated collaboration through screen sharing and control applications | A student remotely communicates with another person through special applications (e.g., screen sharing, remote desktop connection), using either the computing device used for the examination or another computing device. |
| | Countermeasure #4: Monitor the student’s digital context; Countermeasure #5: Monitor the student’s behavior within the physical context; Feature #8: Monitoring and blocking communication and/or collaboration applications; Feature #13: Face behavior and expression analysis of the student; Feature #14: Eye gaze fixations and behavior analysis of the student |
| Student access to forbidden online resources | A student seeks help from online resources and search engines that the examination policy forbids, using either the computing device used for the examination or another computing device. |
| | Countermeasure #4: Monitor the student’s digital context; Feature #9: Monitoring and blocking access to specific websites |
| Non-legitimate person providing answers on the student’s computing device through the student’s main input device or a secondary input device | A student sits in front of the camera while a non-legitimate person sits next to the student and types the answers through the student’s main input device or a secondary input device (keyboard, computer mouse, etc.), so that they appear on the student’s computer screen. |
| | Countermeasure #4: Monitor the student’s digital context; Countermeasure #5: Monitor the student’s behavior within the physical context; Feature #10: Keyboard keystroke and computer mouse click analysis; Feature #11: Check the drivers at the operating system level of the student’s computing device; Feature #13: Face behavior and expression analysis of the student; Feature #14: Eye gaze fixations and behavior analysis of the student |
| Student communicating/collaborating with another person within the same physical context | A student communicates/collaborates (i.e., talks) with another person who is in the same physical context but outside the camera’s field of view. |
| | Countermeasure #5: Monitor the student’s behavior within the physical context; Feature #12: Monitor voice signals, contextual sound and ambient sound |
| Non-legitimate person providing answers on a whiteboard/computing device/hard-copy messages | A non-legitimate person provides answers through a computing device and displays them to the student on a whiteboard, another computing device or hard-copy messages. |
| | Countermeasure #5: Monitor the student’s behavior within the physical context; Feature #13: Face behavior and expression analysis of the student; Feature #14: Eye gaze fixations and behavior analysis of the student |
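The threat-to-feature mapping in the table above can be held as a simple lookup structure, which makes it easy to ask which threats a given deployment leaves unaddressed. The sketch below transcribes the feature numbers from the table; the short threat keys, the function names and the coverage rule (a threat counts as addressed once at least one of its listed features is active) are assumptions for illustration, not the TRUSTID implementation.

```python
# Feature numbers per identified threat, transcribed from the Appendix B table.
# The short threat keys are hypothetical labels for the table rows.
THREAT_FEATURES = {
    "violating identification proofs": {1},
    "switching seats after identification": {2, 3, 6, 7},
    "non-legitimate person answers digitally or handwritten": {4, 5},
    "communication via voice or text chat": {8, 9, 10, 12, 13, 14},
    "collaboration via screen sharing or remote control": {8, 13, 14},
    "access to forbidden online resources": {9},
    "non-legitimate person typing on student's input device": {10, 11, 13, 14},
    "communication within the same physical context": {12},
    "answers shown on whiteboard, device or hard copy": {13, 14},
}


def uncovered_threats(deployed_features):
    """Return (sorted) threats for which none of the listed features is deployed.

    Assumption: a threat counts as addressed as soon as at least one of its
    features is active; a real system may weigh features differently.
    """
    deployed = set(deployed_features)
    return sorted(t for t, feats in THREAT_FEATURES.items() if not feats & deployed)


def features_for(threats):
    """Union of the features listed for the given threats."""
    return set().union(*(THREAT_FEATURES[t] for t in threats))


# Example: a deployment with only identity checks and website/app monitoring.
print(uncovered_threats({1, 2, 8, 9}))
```

In this example, the four in-situ and concurrent-login threats remain uncovered, which mirrors the table's point that digital-context monitoring alone does not address the physical context.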


| Online Examination Type | Modalities | Contextual Characteristics |
|---|---|---|
| Oral Online Examination | The instructor asks questions in real time or shares questions (picture, diagram, etc.) through a conferencing system. Each student then answers orally. | Each answer is subject to a time constraint. Examination classrooms usually hold a limited number of students (e.g., up to five). |
| Digital Written Online Examination | The instructor shares the examination questions through the LMS. Students log in to the LMS and either view the questions (e.g., multiple-choice questions) and answer each one directly in the LMS, or download a document with the questions and then upload their answers to the LMS. Students typically use a computer keyboard and mouse, producing keystroke and mouse-movement input streams. | Time constraints typically apply to the whole examination session; in some cases, they also apply to each answer. There is no limit on the number of students attending an examination classroom. Instructors use conferencing systems to monitor between 30 and 70 students simultaneously within a virtual classroom, and communicate directly (audiovisually) with a specific student through the conferencing system when necessary. |
| Handwritten Online Examination | The instructor shares the examination questions through the LMS (usually as a PDF). Each student either views the questions in the LMS or downloads the PDF to his or her computer, writes the answers on hard-copy sheets and, finally, uploads the sheets to the LMS. | Time constraints typically apply to the whole examination or to each question. Instructors use conferencing systems to monitor between 30 and 70 students simultaneously within a virtual classroom, and communicate directly (audiovisually) with a specific student through the conferencing system when necessary. |
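The digital written examination produces keystroke and mouse-movement input streams, which Feature #10 (keyboard keystroke and computer mouse click analysis) relies on. The sketch below extracts two widely used keystroke-dynamics features: dwell time (how long a key is held) and flight time (from releasing one key to pressing the next). It assumes keystrokes arrive as press/release timestamps in milliseconds, ordered by press time; the `KeyEvent` type, function names and the naive distance comparator are hypothetical, and the features actually used by the system are not specified here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class KeyEvent:
    key: str
    press_ms: float    # timestamp when the key went down
    release_ms: float  # timestamp when the key came up


def keystroke_features(events):
    """Compute mean dwell and flight times from keystrokes ordered by press time.

    dwell  = release - press of the same key
    flight = press of the next key - release of the current key
             (negative when keys overlap, as in fast typing)
    """
    if len(events) < 2:
        raise ValueError("need at least two keystrokes")
    dwell = [e.release_ms - e.press_ms for e in events]
    flight = [nxt.press_ms - cur.release_ms for cur, nxt in zip(events, events[1:])]
    return {"mean_dwell_ms": mean(dwell), "mean_flight_ms": mean(flight)}


def profile_distance(sample, reference):
    """Mean absolute difference between two feature dicts (a naive comparator)."""
    return mean(abs(sample[k] - reference[k]) for k in reference)


# Hypothetical keystrokes typed during an examination session.
typed = [KeyEvent("e", 0, 100), KeyEvent("x", 150, 230), KeyEvent("a", 300, 390)]
features = keystroke_features(typed)
print(features)
```

Comparing such features against a student's earlier sessions is one way a sudden change of typist could be flagged; a production system would use richer per-key-pair statistics and a trained classifier rather than a single mean distance.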

| Stakeholder Group | Higher Education Institution 1 | Higher Education Institution 2 | Higher Education Institution 3 |
|---|---|---|---|
| Students | 2 | 3 | 3 |
| Instructors | 3 | 4 | 3 |
| System Administrators | 2 | 2 | 2 |
| Decision Makers | 2 | 1 | 1 |
| Data Protection Experts | 1 | 1 | 1 |
| Total | 10 | 11 | 10 |
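A quick arithmetic check of the stakeholder table above confirms the Total row and gives the overall number of interviewees across the three institutions. The dictionary simply transcribes the table; the variable names are illustrative.

```python
# Interview participants per stakeholder group, transcribed from the table
# (tuple order: Higher Education Institution 1, 2, 3).
PARTICIPANTS = {
    "Students": (2, 3, 3),
    "Instructors": (3, 4, 3),
    "System Administrators": (2, 2, 2),
    "Decision Makers": (2, 1, 1),
    "Data Protection Experts": (1, 1, 1),
}

# Column totals per institution; these should match the "Total" row.
per_institution = tuple(sum(col) for col in zip(*PARTICIPANTS.values()))
# Row totals per stakeholder group across the three institutions.
per_group = {group: sum(counts) for group, counts in PARTICIPANTS.items()}
overall = sum(per_institution)

print(per_institution)  # (10, 11, 10)
print(overall)          # 31
```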

| Impersonation Threats | Threat Scenario Descriptions |
|---|---|
| Student violating identification proofs | A student replaces the photograph on the identity card with the imposter’s photograph or changes the student details on the identity card |
| Student switching seats after identification | A student is correctly identified and verified and then switches seats with an imposter during the examination session |
| Non-legitimate person provides answers either digitally or handwritten | Another, non-legitimate person is concurrently logged in to the LMS and provides answers, either digitally or handwritten, or uploads examination material in general |
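The seat-switching scenario is why a one-off identity check at the start of a session is insufficient, and it is what Countermeasure #2 (continuous student identification) in Appendix B targets. The sketch below shows a simple continuous-verification rule: each webcam frame's face embedding is compared with the student's enrolled template using cosine similarity, and an alert is raised only after several consecutive mismatches, so that brief glitches such as a head turn do not trigger it. It assumes a face-embedding model (not shown) that yields a fixed-length vector per frame; the threshold of 0.6 and the run length of 3 are placeholder values, not parameters from the study.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("zero-norm embedding")
    return dot / norm


def first_mismatch_frame(template, frame_embeddings, threshold=0.6, consecutive=3):
    """Return the index of the frame that completes a run of `consecutive`
    frames whose similarity to the enrolled template is below `threshold`,
    or None if no such run occurs. A single matching frame resets the run.
    """
    run = 0
    for i, embedding in enumerate(frame_embeddings):
        if cosine_similarity(template, embedding) < threshold:
            run += 1
            if run >= consecutive:
                return i
        else:
            run = 0
    return None


# Toy 3-dimensional "embeddings" (real face embeddings have hundreds of dimensions).
enrolled = [1.0, 0.0, 0.0]
stream = [[1, 0, 0], [0.9, 0.1, 0], [0, 1, 0], [0, 0, 1], [1, 0, 0], [0, 1, 0], [0, 1, 0], [0, 1, 0]]
print(first_mismatch_frame(enrolled, stream))
```

In the toy stream, the two mismatches at frames 2 and 3 are followed by a match and are tolerated, while the sustained mismatch from frame 5 onward is reported at frame 7.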

| Computer-Mediated Communication, Collaboration and Resource Access Threats | Threat Scenario Descriptions |
|---|---|
| Computer-mediated communication through voice or text-written chat | A student remotely communicates with another person through voice or text chat, using either the computing device used for the examination or another computing device. Alternatively, another person co-listens to an examination question within an oral examination and then provides answers through text or voice communication, again using either the examination device or another computing device |
| Computer-mediated collaboration through screen sharing and control applications | A student remotely communicates with another person through special applications (e.g., screen sharing, remote desktop connection), using either the computing device used for the examination or another computing device |
| Student access to forbidden online resources | A student seeks help from online resources and search engines that the examination policy forbids, using either the computing device used for the examination or another computing device |

| In-Situ Communication, Collaboration and Resource Access Threats | Threat Scenario Descriptions |
|---|---|
| Non-legitimate person provides answers on the student's computing device through the main, or a secondary, input device | A student sits in front of the camera while a non-legitimate person sits next to the student and types the answers, which are displayed on the student's computer screen, through the student's main input device or through a secondary input device (keyboard, computer mouse, etc.) |
| Student communicating/collaborating with another person within the same physical context | A student communicates/collaborates (i.e., talks) with another person who is within the same physical context but outside the camera's field of view |
| Non-legitimate person provides answers through a white board/computing device/hard copy messages | A non-legitimate person provides answers through a computer and presents them to the student using a whiteboard, a computing device, or hard copy messages |

| Impersonation Threats | Oral: Likelihood | Oral: Severity | Digital Written: Likelihood | Digital Written: Severity | Hand Written: Likelihood | Hand Written: Severity |
|---|---|---|---|---|---|---|
| Student violating identification proofs | High (7); Medium (0); Low (0) | Major (7); Medium (0); Minor (0) | High (2); Medium (5); Low (0) | Major (6); Medium (1); Minor (0) | High (1); Medium (5); Low (1) | Major (6); Medium (1); Minor (0) |
| Student switching seats after identification | High (0); Medium (1); Low (6) | Major (6); Medium (1); Minor (0) | High (1); Medium (3); Low (3) | Major (6); Medium (1); Minor (0) | High (1); Medium (3); Low (3) | Major (4); Medium (2); Minor (1) |
| Non-legitimate person provides answers either digitally or hand written | N/A | N/A | High (6); Medium (1); Low (0) | Major (7); Medium (0); Minor (0) | High (6); Medium (1); Low (0) | Major (6); Medium (1); Minor (0) |
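In every rating cell, the vote counts sum to seven. As an illustration only, the following Python sketch parses a cell and reports the most-voted category together with the share of raters who chose it. The helper names `parse_votes` and `modal_rating` are hypothetical. Reporting ties as joint categories (e.g., `Medium/Low`) is our own convention, not one used in the study.

```python
import re


def parse_votes(cell: str) -> dict[str, int]:
    """Parse a cell such as 'High (2); Medium (5); Low (0)' into {'High': 2, ...}."""
    return {label: int(count) for label, count in re.findall(r"(\w+) \((\d+)\)", cell)}


def modal_rating(cell: str) -> tuple[str, float]:
    """Return the most-voted category (ties joined with '/') and its share of all votes."""
    votes = parse_votes(cell)
    total = sum(votes.values())
    top = max(votes.values())
    labels = [label for label, count in votes.items() if count == top]
    return "/".join(labels), top / total


# Digital written column of the impersonation rating table: (likelihood, severity).
digital_written = {
    "Student violating identification proofs": (
        "High (2); Medium (5); Low (0)", "Major (6); Medium (1); Minor (0)"),
    "Student switching seats after identification": (
        "High (1); Medium (3); Low (3)", "Major (6); Medium (1); Minor (0)"),
    "Non-legitimate person provides answers either digitally or hand written": (
        "High (6); Medium (1); Low (0)", "Major (7); Medium (0); Minor (0)"),
}

for threat, (likelihood, severity) in digital_written.items():
    l_label, l_share = modal_rating(likelihood)
    s_label, s_share = modal_rating(severity)
    print(f"{threat}: likelihood {l_label} ({l_share:.0%}), severity {s_label} ({s_share:.0%})")
```

For the seat-switching scenario, the sketch reports a `Medium/Low` likelihood tie, which a single modal label would hide.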

| Computer-Mediated Threats | Oral: Likelihood | Oral: Severity | Digital Written: Likelihood | Digital Written: Severity | Hand Written: Likelihood | Hand Written: Severity |
|---|---|---|---|---|---|---|
| Computer-mediated communication through voice or text-written chat | High (7); Medium (0); Low (0) | Major (6); Medium (1); Minor (0) | High (6); Medium (1); Low (0) | Major (5); Medium (2); Minor (0) | High (6); Medium (1); Low (0) | Major (6); Medium (1); Minor (0) |
| Computer-mediated collaboration through screen sharing and control applications | High (0); Medium (3); Low (4) | Major (3); Medium (2); Minor (2) | High (5); Medium (1); Low (1) | Major (5); Medium (1); Minor (1) | High (1); Medium (2); Low (4) | Major (3); Medium (2); Minor (2) |
| Student access to forbidden online resources | High (3); Medium (3); Low (1) | Major (7); Medium (0); Minor (0) | High (5); Medium (1); Low (1) | Major (6); Medium (1); Minor (0) | High (3); Medium (2); Low (2) | Major (5); Medium (0); Minor (2) |
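The vote distributions can also be condensed into a single ordinal score per cell. The sketch below assumes equally spaced weights (High/Major = 3, Medium = 2, Low/Minor = 1). These weights are our own illustrative choice; the study reports only the vote counts. Applied to the likelihood ratings for screen sharing and control applications, the score shows how strongly this threat depends on the examination type.

```python
# Illustrative ordinal weights (an assumption; the study reports vote counts only).
WEIGHTS = {"High": 3, "Major": 3, "Medium": 2, "Low": 1, "Minor": 1}


def mean_score(votes: dict[str, int]) -> float:
    """Weighted mean of the category votes, on a 1 (Low/Minor) to 3 (High/Major) scale."""
    total = sum(votes.values())
    return sum(WEIGHTS[label] * count for label, count in votes.items()) / total


# Likelihood votes for "Computer-mediated collaboration through screen sharing
# and control applications", per examination type.
screen_sharing_likelihood = {
    "Oral": {"High": 0, "Medium": 3, "Low": 4},
    "Digital Written": {"High": 5, "Medium": 1, "Low": 1},
    "Hand Written": {"High": 1, "Medium": 2, "Low": 4},
}

for exam_type, votes in screen_sharing_likelihood.items():
    print(f"{exam_type}: {mean_score(votes):.2f}")
```

With these weights, the digital written examination scores about 2.57, against roughly 1.43 for oral and 1.57 for hand written examinations.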

| In-Situ Threats | Oral: Likelihood | Oral: Severity | Digital Written: Likelihood | Digital Written: Severity | Hand Written: Likelihood | Hand Written: Severity |
|---|---|---|---|---|---|---|
| Non-legitimate person providing answers on the student's computing device through the main or secondary input device | High (3); Medium (3); Low (1) | Major (3); Medium (2); Minor (2) | High (6); Medium (1); Low (0) | Major (6); Medium (1); Minor (0) | High (3); Medium (2); Low (2) | Major (3); Medium (3); Minor (1) |
| Student communicating/collaborating with another person within the same physical context | High (1); Medium (1); Low (5) | Major (3); Medium (2); Minor (2) | High (6); Medium (1); Low (0) | Major (6); Medium (1); Minor (0) | High (3); Medium (2); Low (2) | Major (3); Medium (2); Minor (2) |
| Non-legitimate person providing answers on a white board/computing device/hard copy messages | High (7); Medium (0); Low (0) | Major (6); Medium (1); Minor (0) | High (6); Medium (1); Low (0) | Major (6); Medium (1); Minor (0) | High (5); Medium (2); Low (0) | Major (5); Medium (2); Minor (0) |
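A common way to prioritize rated threats is a likelihood × severity risk matrix. The following sketch is not part of the study's method. For oral examinations, it maps the modal likelihood and severity of each in-situ threat to levels from 1 to 3, breaks ties toward the higher (more conservative) level, and multiplies the two levels into a score from 1 to 9. The band thresholds (6 or more is high, 3 to 4 is medium, 2 or less is low) and all helper names are hypothetical.

```python
# Hypothetical 3-point levels shared by likelihood and severity categories.
LEVEL = {"Low": 1, "Minor": 1, "Medium": 2, "High": 3, "Major": 3}


def modal_level(votes: dict[str, int]) -> int:
    """Level of the most-voted category; ties resolve to the higher level."""
    top = max(votes.values())
    return max(LEVEL[label] for label, count in votes.items() if count == top)


def risk_band(likelihood: dict[str, int], severity: dict[str, int]) -> tuple[int, str]:
    """Multiply the modal levels into a 1-9 score and map it to an illustrative band."""
    score = modal_level(likelihood) * modal_level(severity)
    if score >= 6:
        band = "high"
    elif score >= 3:
        band = "medium"
    else:
        band = "low"
    return score, band


# Oral column of the in-situ rating table: (likelihood votes, severity votes).
oral_in_situ = {
    "Answers typed via main/secondary input device": (
        {"High": 3, "Medium": 3, "Low": 1}, {"Major": 3, "Medium": 2, "Minor": 2}),
    "Communication within the same physical context": (
        {"High": 1, "Medium": 1, "Low": 5}, {"Major": 3, "Medium": 2, "Minor": 2}),
    "Answers shown on white board/device/hard copy": (
        {"High": 7, "Medium": 0, "Low": 0}, {"Major": 6, "Medium": 1, "Minor": 0}),
}

for threat, (likelihood, severity) in oral_in_situ.items():
    score, band = risk_band(likelihood, severity)
    print(f"{threat}: score {score}, {band} risk")
```

Under these assumptions, the same-physical-context scenario falls into the medium band. Its modal severity is Major, but most raters judged it unlikely in oral examinations.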

| Mock Examination Type | # of Participants | # of Face Images | Audio Samples Length (in minutes) |
|---|---|---|---|
| Online Written | 65 | 1804 | 75.68 |
| Online Oral | 68 | 1530 | 123.47 |
| Totals | 133 | 3334 | 199.15 |

| Mock Examination Type | # of Participants | # of Face Images | Audio Samples Length (in minutes) |
|---|---|---|---|
| Online Written | 24 | 391 | 31.04 |
| Online Oral | 32 | 582 | 52.73 |
| Totals | 56 | 973 | 83.77 |
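The two mock-examination tables can be cross-checked and normalized per participant. The sketch below verifies that each reported total equals the sum of its rows. It then computes the average number of face images and minutes of audio per participant. The `MockExamSet` type and the helper names are hypothetical. The labels `first_table` and `second_table` simply follow the order in which the two tables appear.

```python
import math
from dataclasses import dataclass


@dataclass
class MockExamSet:
    exam_type: str
    participants: int
    face_images: int
    audio_minutes: float


def check_totals(rows: list[MockExamSet], participants: int, images: int, minutes: float) -> bool:
    """True if the reported totals equal the column sums (audio within rounding)."""
    return (
        sum(r.participants for r in rows) == participants
        and sum(r.face_images for r in rows) == images
        and math.isclose(sum(r.audio_minutes for r in rows), minutes, abs_tol=0.005)
    )


def per_participant(rows: list[MockExamSet]) -> dict[str, tuple[float, float]]:
    """Average face images and audio minutes per participant, per examination type."""
    return {r.exam_type: (r.face_images / r.participants, r.audio_minutes / r.participants)
            for r in rows}


first_table = [MockExamSet("Online Written", 65, 1804, 75.68),
               MockExamSet("Online Oral", 68, 1530, 123.47)]
second_table = [MockExamSet("Online Written", 24, 391, 31.04),
                MockExamSet("Online Oral", 32, 582, 52.73)]

print("first table totals consistent:", check_totals(first_table, 133, 3334, 199.15))
print("second table totals consistent:", check_totals(second_table, 56, 973, 83.77))

for name, rows in (("first", first_table), ("second", second_table)):
    for exam_type, (images, minutes) in per_participant(rows).items():
        print(f"{name} table, {exam_type}: {images:.1f} images, {minutes:.2f} min per participant")
```

In the first table, written examinations yield about 27.8 face images and 1.16 minutes of audio per participant. Oral examinations yield about 22.5 images and 1.82 minutes.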

| Identification Case | Face Recognition (Success Rate) | Voice Recognition (Success Rate) |
|---|---|---|
| Student identification in order to attend the examination | 100% | 100% |
| Continuous student identification prior to performing an impersonation attack | 94.80% | 91.36% |
| Continuous student identification while performing an impersonation attack | 76.57% | 78.53% |
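How a success is counted depends on the recognition pipeline. Purely as an illustration, the sketch below makes three assumptions. Each captured face image or audio segment is reduced to an embedding vector. A sample is accepted when its cosine similarity to the student's enrolled template reaches a threshold. A decision counts as a success when it agrees with the ground truth, meaning the genuine student is accepted and the impostor is rejected. The embeddings, the `threshold` of 0.8 and the helper names are all hypothetical; they are not TRUSTID's actual models or parameters.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def success_rate(template: list[float],
                 samples: list[tuple[list[float], bool]],
                 threshold: float = 0.8) -> float:
    """Fraction of samples whose accept/reject decision matches the ground truth.

    Each sample is (embedding, is_genuine): genuine samples should be accepted,
    impostor samples rejected.
    """
    correct = sum(
        (cosine_similarity(template, embedding) >= threshold) == is_genuine
        for embedding, is_genuine in samples
    )
    return correct / len(samples)


# Toy 3-dimensional embeddings (hypothetical; real face/voice embeddings are much larger).
enrolled = [1.0, 0.0, 0.0]
captured = [
    ([0.9, 0.1, 0.0], True),    # genuine, similarity ~0.99 -> accepted (correct)
    ([0.1, 0.9, 0.0], False),   # impostor, similarity ~0.11 -> rejected (correct)
    ([0.7, 0.7, 0.0], True),    # genuine, similarity ~0.71 -> rejected (false rejection)
    ([0.85, 0.5, 0.1], False),  # impostor, similarity ~0.86 -> accepted (false acceptance)
]
print(f"success rate: {success_rate(enrolled, captured):.0%}")
```

The toy data gives 50%, with one false rejection and one false acceptance. Raising `threshold` to 0.9 rejects the look-alike impostor and lifts the toy rate to 75%. In general, a higher threshold trades false acceptances for false rejections.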

| Question | Disagree | Moderate | Agree |
|---|---|---|---|
| Overall, how simple and clean is the TRUSTID software's user interface? | 3 | 10 | 89 |
| Overall, how intuitive to navigate is the TRUSTID software's user interface? | 2 | 11 | 89 |
| Overall, what's your opinion on the way features and information in the TRUSTID software are laid out? | 5 | 10 | 87 |
| Overall, how secure do you find the face identification process? | 9 | 22 | 71 |
| Overall, how secure do you find the voice identification process? | 12 | 23 | 67 |
| Overall, do you like the idea to be identified with face-based biometric identification during an online examination? | 21 | 20 | 61 |
| Overall, do you like the idea to be identified with voice-based biometric identification during an online examination? | 26 | 24 | 52 |
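Each row of the questionnaire table sums to 102 responses. The short sketch below converts the counts into percentages and a net agreement score, defined as the percentage agreeing minus the percentage disagreeing. The net score is a common summary for three-point scales but is our addition, not a measure reported in the study. The question texts are abbreviated.

```python
# (disagree, moderate, agree) counts from the questionnaire table; labels abbreviated.
responses = {
    "UI simple and clean": (3, 10, 89),
    "UI intuitive to navigate": (2, 11, 89),
    "Layout of features and information": (5, 10, 87),
    "Security of face identification": (9, 22, 71),
    "Security of voice identification": (12, 23, 67),
    "Like face-based identification in exams": (21, 20, 61),
    "Like voice-based identification in exams": (26, 24, 52),
}


def summarize(counts: tuple[int, int, int]) -> tuple[int, float, float]:
    """Return (respondents, % agree, net agreement in percentage points)."""
    disagree, moderate, agree = counts
    n = disagree + moderate + agree
    return n, 100 * agree / n, 100 * (agree - disagree) / n


for question, counts in responses.items():
    n, agree_pct, net = summarize(counts)
    print(f"{question}: n={n}, agree {agree_pct:.1f}%, net {net:+.1f} pts")
```

The net score makes interface-related items easier to compare with acceptance of biometric identification. For example, the simplicity of the interface nets +84.3 points, whereas voice-based identification nets +25.5 points.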

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fidas, C.A.; Belk, M.; Constantinides, A.; Portugal, D.; Martins, P.; Pietron, A.M.; Pitsillides, A.; Avouris, N. Ensuring Academic Integrity and Trust in Online Learning Environments: A Longitudinal Study of an AI-Centered Proctoring System in Tertiary Educational Institutions. Educ. Sci. 2023, 13, 566. https://doi.org/10.3390/educsci13060566

Fidas CA, Belk M, Constantinides A, Portugal D, Martins P, Pietron AM, Pitsillides A, Avouris N. Ensuring Academic Integrity and Trust in Online Learning Environments: A Longitudinal Study of an AI-Centered Proctoring System in Tertiary Educational Institutions. Education Sciences. 2023; 13(6):566. https://doi.org/10.3390/educsci13060566

Chicago/Turabian Style: Fidas, Christos A., Marios Belk, Argyris Constantinides, David Portugal, Pedro Martins, Anna Maria Pietron, Andreas Pitsillides, and Nikolaos Avouris. 2023. "Ensuring Academic Integrity and Trust in Online Learning Environments: A Longitudinal Study of an AI-Centered Proctoring System in Tertiary Educational Institutions" Education Sciences 13, no. 6: 566. https://doi.org/10.3390/educsci13060566

APA Style: Fidas, C. A., Belk, M., Constantinides, A., Portugal, D., Martins, P., Pietron, A. M., Pitsillides, A., & Avouris, N. (2023). Ensuring Academic Integrity and Trust in Online Learning Environments: A Longitudinal Study of an AI-Centered Proctoring System in Tertiary Educational Institutions. Education Sciences, 13(6), 566. https://doi.org/10.3390/educsci13060566