Abstract

Digital assessment has become relevant as part of the digital learning process, as technology provides not only teaching and learning but also assessment, including productive feedback. Although educational technology and the related research are developing rapidly, there is a lack of research-based clarification of the aspects of digital assessment that is not shaped by temporary pandemic solutions. The purpose of this thematic review is to summarize key features in studies published over a specified period of time (2018–2021); consequently, it does not offer completely new knowledge, but captures essential knowledge of the last few years before the pandemic to avoid losing a significant aspect of digital assessment due to temporary pandemic solutions. The review results in a description of digital assessment that includes its conditions, opportunities and challenges, as well as other characteristics. The findings confirm the importance of digital assessment in the modern educational process and will increase the understanding of digital assessment among those involved in education (administrators, educators and researchers), inviting them to consider possible pedagogical principles. Furthermore, these findings are now comparable to and should be supplemented with post-pandemic insights and knowledge.

1. Introduction

The need for this publication is justified by the entry of technology into educational processes, including assessment. In recent years, education has rapidly and permanently incorporated technology [1,2,3,4]. Consequently, various technological solutions are also involved in assessment [5,6]. As teaching and learning change and develop in a digital direction, assessment is also becoming more digital, opening up new opportunities and also creating new issues that need to be addressed, such as validity, reliability, transparency and plagiarism [7]. Digital assessment and e-assessment are concepts that were chosen from a fairly wide range of different terms (for example, online assessment, computer-aided assessment and so on) used in research to denote an assessment process that uses different types of technology. They are perceived as synonyms in this paper. The definition from Appiah and van Tonder [8] (p. 1454), “e-assessment involves the use of any technological device to create, deliver, store and/or report students’ assessment marks and feedback”, is accepted for the purposes of this review. Therefore, different degrees of use of digital technologies are possible in order to achieve the pedagogical goal; for example, a complete learning management system or only a few devices, applications and sites.

E-assessment can be provided through specific applications or by learning management systems, and is becoming a daily necessity in the education process, especially in higher education [9,10,11]. Dynamic assessment, a form of alternative assessment, is recognized as an effective method for enhancing students’ learning using technology [4]. Technology allows assessment to be smooth and ubiquitous, as it becomes an integral part of any learning activity [12]. Nevertheless, it should be remembered that technology is not a value in itself, and is only important in the process of learning and assessment if it is used to achieve pedagogical goals [13,14]. It is important to note that there are three main aspects when choosing a technological application in educational contexts, i.e., context, student needs and pedagogical considerations [15]. These aspects are also highlighted in the data analysis of this study.

Learner participation and engagement are increasing in the technology-enriched learning process [16,17,18]. The involvement of students in assessment is important for a productive and efficient learning process [18,19], not only as the recipients of assessment, but also as assessors of their own work and that of their peers (peer assessment). Technology has been shown to provide significant support for this and to offer a variety of solutions. One of the classic examples is the e-portfolio, which allows for the accumulation of evidence of the learner’s knowledge and skills [20]. This can be the basis for a student’s reflection and self-assessment, as well as a good opportunity for discussion and peer assessment. The use of technology can ensure that assessment is participatory and collaborative [21]. Students can benefit cognitively and affectively from online peer assessment [22,23]. In addition, on-site peer assessment, where comments are seen by other learners, can lead to productive discussions on the learning outcome to be achieved [17], and online peer assessment can support flipped learning activities [24]. This suggests that technology contributes to higher learning outcomes through better learner engagement.

Learner engagement in assessment raises the question of feedback in digital formative assessment. It is important for the educator to find the optimal way to provide feedback so that it achieves its goal of guiding further learning [25]; for example, using digital textbooks [26]. Research in schools shows that immediate detailed feedback best supports learning [27]. Digital feedback has a number of benefits, such as timeliness, convenience, the ability to streamline the workload of the educator and immediate interaction with a possibly large number of learners [15]. Six conceptual metaphors about feedback in the online learning environment have been defined: feedback is a treatment; a costly commodity; coaching; a command; a learner tool; a dialogue. It is important for the educator to be aware of which aspects of feedback are intended to be implemented; for example, these could be addictive or empowering feedback, powerful treatment or a dialogic process. Learners follow or use the feedback information [28]. Publishing video, audio and other formats on the Internet allows for the reception of feedback from a wide audience, including industry professionals [17]. Of course, it is important to consider whether such feedback is always objective and pedagogically valuable. One definition of digital formative assessment involves applying formative assessment in online or blended learning situations where “the teacher and learners are separated by time and/or space and where a substantial proportion of learning/teaching activities are conducted through web-based ICT” [29] (p. 2337). Another definition outlines a broader use of formative digital assessment, indicating that it evaluates academic achievement during the learning process and depends on feedback [30]. Digital technologies can help to enrich feedback and to provide adequate formative assessment, one of the most important components of assessment.

Technologies are also used for objective summative assessment [31,32]. Research highlights concerns about digital summative assessment, in particular about confidence in the authorship of the submitted work. The exact design of multiple-choice questions (MCQ) is widely discussed in the literature regarding how to measure learners’ learning outcomes adequately [33,34] in terms of both the knowledge and skills to be measured and the assurance that these are being demonstrated by a particular student. Another important aspect of online exams is to assure academic integrity by preventing cheating and unauthorized assistance, which can be addressed in part through well-developed authentication and various technological solutions [31,35,36]. P. Dawson defined e-cheating as “cheating that uses or is enabled by digital technology” [36] (p. 4); this now includes the possibility of generating text using artificial intelligence. Authorship issues are also discussed in the studies analyzed in depth further on.

As noted at the outset, there are different uses of the terms and a lack of a full analysis and characterization of digital assessment. An inspection of the systematic reviews related to e-assessment supported the assumption that the characteristics of digital assessment have not been established at the moment [6,8,31,37,38]. More specific research concerning e-assessment in all educational settings is needed as, in the next few years, digital assessment can become an integral component of the educational process in all levels of education. It is therefore essential to investigate the characteristics and potential of digital assessment and, as gaps in this information have been identified, the present paper aims to analyze the literature on digital assessment over a specific time period, retrospectively, with the potential to compare these results with those of future research.

The research question was developed by using the question-formulating format SPICE (Setting; Perspective; Interest, Phenomenon of; Comparison (if any); Evaluation) [39,40] and was finally formulated into the question: what aspects include the characteristics of digital assessment in the pre-pandemic period?

2. Method

The procedure used is as described in the PRISMA statement [41,42]. A study protocol was developed for full-fledged research management. It recorded the research question, eligibility (inclusion and exclusion) criteria, search strategy and a plan for data acquisition and analysis. It was decided to use only publications about empirical studies on different aspects of e-assessment, and not book chapters, in order to make the data more comparable. Another basic inclusion criterion was the language of the publication; only publications in English were included. The boundaries for the publication period to be reviewed were set between 2018 and 2021 as a way of presenting a snapshot of the ongoing research and facilitating the article selection process in a field that has a large body of work. An additional exclusion criterion was publications related to the COVID-19 pandemic, which, of course, marks a rapid development in the use of technology [43,44], but these describe largely temporary solutions, as well as educators working digitally without proper training [45,46,47]. As this literature study was carried out as part of a larger project researching the current situation in digital education, it was important to study literature that does not reflect the impact of this transient and temporary situation, that is, studies whose authors used digital assessment even before the pandemic. In summary, the inclusion criteria were: focus on e-assessment/digital assessment; empirical study; and the time period between 2018 and 2021. The exclusion criteria were: languages other than English; book chapters; and specific references to COVID-19.
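The eligibility criteria summarized above can be read as a simple filter over candidate records. The following is a minimal sketch of that reading, not the procedure actually used in the review: the `Record` fields and the `is_eligible` function are hypothetical names introduced only for illustration, and in practice each judgment (for example, whether a study focuses on e-assessment) was made by a human reader.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical description of one candidate publication."""
    year: int
    language: str            # e.g. "English"
    kind: str                # e.g. "empirical study" or "book chapter"
    about_e_assessment: bool
    mentions_covid: bool


def is_eligible(record: Record) -> bool:
    """Apply the inclusion and exclusion criteria stated in the text."""
    included = (
        record.about_e_assessment
        and record.kind == "empirical study"
        and 2018 <= record.year <= 2021
    )
    excluded = (
        record.language != "English"
        or record.kind == "book chapter"
        or record.mentions_covid
    )
    return included and not excluded


candidates = [
    Record(2019, "English", "empirical study", True, False),
    Record(2020, "English", "empirical study", True, True),   # COVID-19 related
    Record(2017, "English", "empirical study", True, False),  # outside the period
    Record(2021, "English", "book chapter", True, False),     # wrong publication type
]
eligible = [r for r in candidates if is_eligible(r)]
```

Only the first of the four illustrative records passes the filter; the others each fail exactly one criterion.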

Three sources were used for the research. After consideration of different databases, the main source was the Web of Science as it is one of the most important resources of scientific literature. The searches were supplemented by publications selected from Google Scholar, as well as publications known from authors’ recent research.

The electronic search strategy for the Web of Science database was developed as follows: assessment AND learning (Author Keywords) AND technology* (Author Keywords) OR e-assessment (Author Keywords) NOT Covid* (All Fields). After a trial search, it was concluded that digital* gives an overwhelming number of results. In addition, the trial of the search strategy showed that searches using Author Keywords provided more accurate results than searches using Abstract or Title. The Google Scholar advanced search function has a different search query dialog box. In the line ‘with all words’, the words ‘assessment, learning’ were included; in the line ‘with the exact phrase’, ‘e-assessment’ was added; in the line ‘without words’, ‘Covid’ was included; and the search was restricted to the titles of the publications. Iterative pilot searches helped to define the keywords that offer results most relevant to the research focus.
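The Boolean logic of the Web of Science query can be sketched as a predicate over a record's author keywords and its full text. This is an illustration only: the text does not state how the operators were grouped, so the grouping used here, ((assessment AND learning AND technology*) OR e-assessment) NOT Covid*, is an assumption, and the function `matches_search` and its simple tokenization are hypothetical rather than a reproduction of the database's own matching rules.

```python
import re


def _tokens(text: str) -> list[str]:
    """Lower-case word tokens; hyphenated words such as 'e-assessment' stay whole."""
    return re.findall(r"[a-z0-9][a-z0-9\-]*", text.lower())


def matches_search(author_keywords: list[str], all_fields_text: str) -> bool:
    """Assumed grouping: ((assessment AND learning AND technology*) OR e-assessment) NOT covid*."""
    keyword_tokens = [tok for kw in author_keywords for tok in _tokens(kw)]

    has_assessment = "assessment" in keyword_tokens
    has_learning = "learning" in keyword_tokens
    # The trailing * is a wildcard: any token that starts with 'technology'.
    has_technology = any(tok.startswith("technology") for tok in keyword_tokens)
    has_e_assessment = "e-assessment" in keyword_tokens

    # NOT Covid* is applied to all fields, not only to the author keywords.
    mentions_covid = any(tok.startswith("covid") for tok in _tokens(all_fields_text))

    topical = (has_assessment and has_learning and has_technology) or has_e_assessment
    return topical and not mentions_covid


hit = matches_search(["E-assessment", "higher education"], "Students' views on online tests")
miss = matches_search(["E-assessment"], "Emergency remote exams during COVID-19")
```

Under a different grouping of the operators the set of hits would change, which is why the assumed parenthesization is stated explicitly in the docstring.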

Due to the relatively small number of publications analyzed, the data were collected and processed in Google Sheets. A qualitative evidence synthesis approach was used for data analysis [40].

In this study, the risk of bias was mitigated by strict compliance with the inclusion/exclusion criteria and by well-defined categories for thematic coding. The keywords chosen did not result in the exclusion of publications with a negative attitude toward digital assessment.

3. Results of Research Strategy

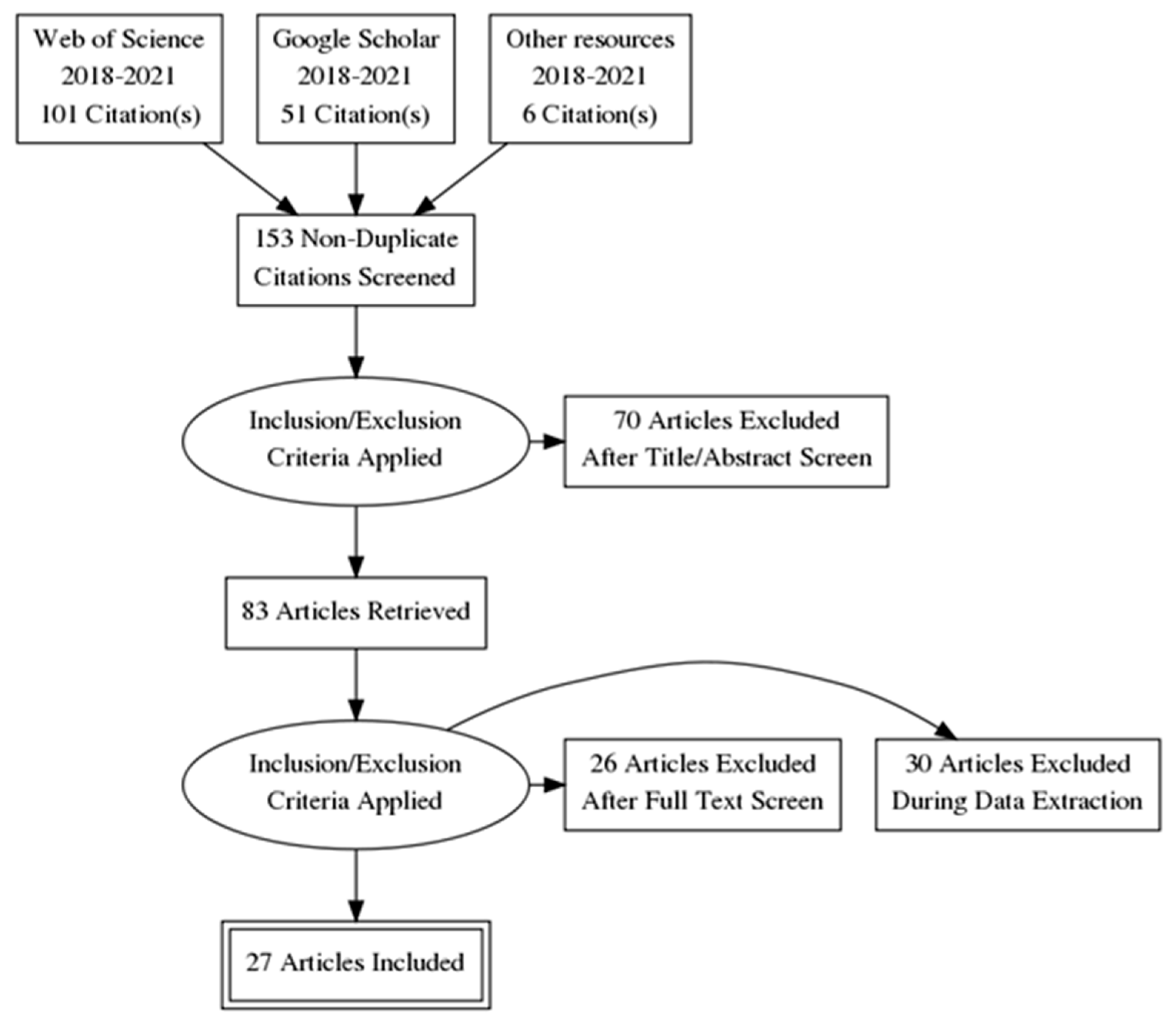

A total of 152 sources were identified using the search strategy described above, and 6 sources were added from recently read publications (see Figure 1). Consequently, a total of 158 publications were originally obtained. The next step, according to the PRISMA guidelines, was to eliminate duplicates, after which 153 publications remained. Subsequently, the publications were screened against the inclusion/exclusion criteria by carefully examining their titles and abstracts. This reduced the number of publications to 83. Afterwards, the full texts were read and the inclusion and exclusion criteria were reapplied; a total of 26 publications were excluded as ineligible. During data extraction, a further 30 publications were discarded because digital assessment was not characterized in any way. As a result, 27 publications were included for complete analysis.

Figure 1. PRISMA flow diagram of study selection.
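The counts reported for the selection process can be checked with a few lines of arithmetic. The sketch below uses only the numbers given in the text; the function name `prisma_flow` and the dictionary keys are illustrative labels, not terms from the PRISMA statement.

```python
def prisma_flow() -> dict[str, int]:
    """Recompute the study-selection counts from the figures reported in the text."""
    identified = 152 + 6               # database search plus recently read sources
    after_duplicates = 153             # reported number remaining after de-duplication
    after_screening = 83               # reported number after title/abstract screening
    after_full_text = after_screening - 26   # 26 excluded on full-text reading
    included = after_full_text - 30          # 30 discarded during data extraction
    return {
        "identified": identified,
        "duplicates_removed": identified - after_duplicates,
        "excluded_on_title_abstract": after_duplicates - after_screening,
        "after_full_text": after_full_text,
        "included": included,
    }


flow = prisma_flow()
for stage, count in flow.items():
    print(f"{stage}: {count}")
```

The derived values (5 duplicates removed, 70 records excluded at title and abstract screening, 57 remaining after full-text reading) are not stated in the text but follow directly from the reported totals, and the final count agrees with the 27 publications included.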

Table 1 summarizes the general characteristics of the publications: the main information, the data collection methods, the technologies involved in the research, the research units and, finally, the research theme. These characteristics were selected as it was concluded that they most accurately describe the selected publications [48].

Table 1. General characteristics of publications.

In the initial stage of data processing, text fragments were selected that included something descriptive about digital assessment.

Then, data were reduced to phrases, coded and divided into three main categories: conditions and suggestions, opportunities, and challenges. Data that included information on potential directions for further research and other important reflections were selected and analyzed separately. The next step was to code the data characterizing digital assessment. Coding followed a deductive approach in which pre-defined categories were used. The codes were then grouped into thematic groups (see Table 2). In the table, they are arranged alphabetically, with no intention of determining significance or any other ranking.

Table 2. Data codes and thematic groups.

Initially, there were four categories, but after coding and division into thematic groups, it was decided to add the category ‘suggestions’ to the category ‘conditions’. The decision was taken because the suggestions basically set the conditions for digital assessment. Another reason was that there were relatively few suggestions, and thus it was not possible to set up thematic groups.

4. Findings and Discussion

The findings are first discussed under the three categories, followed by data that remained outside of the categories. The latter are nevertheless important in the context of this study, as they relate to future perspectives and some important remarks. At the end of each section, the possible application or contribution of the findings is highlighted.

4.1. Conditions and Suggestions

The data relevant to the conditions and suggestions describe what is needed for the e-assessment process, as well as recommendations for when to use it. It should be noted that the application of digital assessment is appropriate for student-centered education and self-directed learning, as e-assessment is recommended for self-assessment and to effectively guide student learning [49,50]. These are interrelated concepts that focus on student participation and engagement in learning [18].

In turn, e-assessment components dictate what this assessment should look like. Table 2 lists seventeen such features. Three of them are related to feedback, which is understandable because, in the literature on e-assessment, feedback is specifically emphasized as a benefit [15]. Barana and Marchisio name five automatic assessment system features, two of which are related to feedback: availability, algorithm-based questions and answers, immediate feedback, interactive feedback and contextualization [51]. An important aspect of e-assessment is operating conditions. The most important indicated are training and resources [52] and learnability, availability and constant practice and use [51,53]. Resistance to change (which can also be included in challenges) is mentioned as a negative personal obstacle that may affect the use of e-assessment [52]. It is therefore necessary to determine how to eliminate this disruptive factor in order to implement e-assessment. This is why the avoidance of resistance to change is in the ‘operating conditions’ line.

Of course, the operating conditions include the commitment of involved stakeholders, as well as the participation of students; for example, in the preparation of questions [54,55]. Undoubtedly, the educator has an important role to play in providing the conditions for e-assessment. The educator is the one who provides quality questions and support for achievement [56,57], as well as explaining the assessment process [58]. Important conditions provided by the educator are detecting plagiarism and ensuring fairness [56,59]. Recalling the importance of feedback, the educator should provide useful feedback [58]. This review of the literature indicates the qualities necessary for an educator, i.e., to be flexible and open [60]. First of all, educators provide the process itself, administrative transactions and examinations [56], which include ensuring authorship and authentication; collecting results; and maintaining safe user data [54,59,61]. Regarding the security conditions, it is worth mentioning secure web browser availability and error recognition [53,61]. From the point of view of the user of e-assessment technologies, clear navigation and user satisfaction are essential. In addition, hybrid technologies are recommended [55,61]. The above-mentioned set of conditions and suggestions can help to provide a productive application of digital assessment in different levels of an education system. These findings are an important contribution to the stakeholders’ understanding of the process and for planning the further development of educational processes.

4.2. Opportunities

For a full-fledged explanation of the characteristics of digital assessment, it is necessary to evaluate the opportunities that it provides. General characteristics are indicated first, followed by specific benefits and, in closing, the specific benefits for educators. First, e-assessment is described as an innovative and powerful tool in education [59,62]. This can be explained by society’s focus on technological development and digital transformation [1,63], as this is where most innovation is taking place, including virtual education [4,64]. Of course, in order to be innovative and powerful, technologies must provide an efficient, flexible and convenient assessment process [49,50,56,62,65]. Finally, it should be emphasized that technology in assessment can increase accessibility for different students, which makes it a beneficial alternative to traditional assessment [54,56].

The specific benefits described highlight in more detail the advantageous characteristics of digital assessment. It is important to note that e-assessment is convenient and useful as a training strategy before taking part in summative assessment, thus improving learner performance [49,53,54,62,65,66,67,68]. The next set of benefits includes opportunities for different assessment formats: individualization; student self-assessment and evaluation; and collaborative learning from peer assessment [49,53,54,55,66,67]. The positive impact of e-assessment on learning and meta-cognitive processes is also indicated, including the development of critical thinking, the capacity for analysis and the encouragement of high-order thinking and skills that enrich learning. As a result, there are several benefits for learning [54,56,58,66,69]. Feedback is also one of the benefits of e-assessment, as it has the effect of increasing and detailing knowledge, and technology makes it immediate [49,52,54,62,68]. An important aspect is also the possibility of increasing objectivity, consistency and fairness, as well as academic integrity [53,56,62,66,70], because technologies can provide tools for standardization, automatization, authentication and authorization. Other advantages of e-assessment, related to the above, are temporal and spatial flexibility, access for students with disabilities and more engagement [55,66,71], which are very important in the learning process.

Benefits that apply specifically to educators must be considered separately. Some of the benefits are formulated as opportunities for growth, such as the development of technological competencies, and personal development and growth [56,72]. The next group of benefits refers to the e-assessment process itself, as it produces faster actions, increases efficiency and improves the management and streamlining of the assessment process [3,56,67]. The creation of a digital assessment also benefits participants because technologies facilitate the design, submission and correction of tests, as well as the functionality of the tests [49,56,66,68,71,73]. The processing and application, storage and sharing of assessment data are important benefits for educators [49,56,67,68], as well as for institutions, which benefit from cost reductions [3,54]. Educators may be better motivated for digital assessment when they know what opportunities are available; this list of opportunities can therefore be used as a motivational tool in educational institutions.

4.3. Challenges

To describe the characteristics of digital assessment fully, its challenges must also be outlined. In this category, thematic groups were formed according to the stakeholders involved (educators, students and institutions). In addition, a group of challenges related to both educators and students was revealed. It is important to start with the challenges that are related to the activities of an educator. The most important factor is ensuring effective digital pedagogy in the sense that the pedagogical objectives of assessment are met [62,72,74]. This challenge is compounded by limitations related to different types of study results, the first being the implementation of high-stakes examinations [54]. When preparing assignments and examinations, the pedagogical aim of educators is to avoid a strong reliance on memorization and instead to test conceptual understanding. Assessing complex, open-ended work is a serious challenge [52,53,54,65,66,70,75]. The next important aspect is the fairness and reliability of the assessment, which is threatened by unwanted activities such as impersonation, contract cheating and plagiarism; the possibility of the ‘hidden’ usage of additional materials; and privacy and data security concerns [54,56,59,60,71,73]. Different technological limitations are also a challenge for educators. It is worth mentioning the limitations of automated marking and the limited ability to recognize correct input, as well as students’ resistance to using technology [51,54,58,59,68]. Overcoming these challenges, of course, requires additional work and time.

Understanding the challenges that educational institutions face is important when providing digital assessment. It is the institutions that must ensure satisfactory e-assessments for high-stakes examinations [54]. This requires technological preparedness, as does any digital assessment. It involves costs for the provision and maintenance of ICT infrastructure (software, hardware, Wi-Fi network), mainly to create an institutional platform [3,54,61,65]. However, it is reasonable to suggest that, in the long term, the digitization of the educational process, including assessment, still results in cost savings [54]. In addition to the technological challenges, institutions also have a human resources challenge because training in innovative approaches integrating ICT is needed, but this is not always simple due to the diversity of tasks required from educators [52,62]. Educational institutions may need to review the workload distribution of educators.

The test completion time was mentioned as a challenge for students [57]. This can be complicated by challenges that apply to both the student and the educator: a lack of computer skills and technical support; a lack of comfort concerning the assessment process; inadequate training; the need for connectivity and support; and limited technology experience [52,61,65,71,72,73,74]. It is important to be aware of these challenges in order to plan resources for possible solutions. For some challenges, the solution may be found by the individual educator, whereas, for others, it could be the task of the institution, namely workload planning and digital skills training for educators, as well as obtaining software resources.

4.4. Future Studies and Significant Notes

The in-depth analysis of the selected studies also focused on indications for possible future studies, and significant notes were made. Recommendations for future research can be divided into two groups: those related to the general theme and those targeted at specific nuances. One recommendation is to compare digital assessment with traditional paper-based tools regarding different aspects: improvement in the student learning outcome; academic integrity [71]; and students’ opinions on the development of skills and competencies [3]. It should be noted that several studies recommend focusing the research on finding out the opinion of learners: how students view their progress with formative digital assessment; students’ expectations on the nature and quality of digital feedback information [68]; learners’ satisfaction with digital formative assessment and diversifying the scope and research instruments [55]. The literature indicates that studies are encouraging research on formative digital assessment. The information above is accompanied by a recommendation to study the time required to spend on developing and responding to digital formative assessments [68] and the issue of digital technology support for deep learning in the context of formative assessment [52]. The need for a study on the acceptance of digital assessment in relation to the cultural differences dimension is also highlighted [53], as well as an investigation into the issues of cheating and plagiarism, and supplementing quantitative data with qualitative data [60]. The group of specific recommendations includes directions for studies on portfolio creation as a type of digital formative assessment [72], specific Moodle options for digital assessment [65] and various formative assessment exercises [68]. A rather extensive field of work for researchers is introduced by these recommendations.

The notes documented in the data address four important aspects of digital assessment. One of them is the significance of authentication and authorship-checking technologies [54,71], which is also reflected in the characteristics of the conditions for digital assessment. The usefulness of Moodle’s various technological capabilities is positively highlighted [49]. Attention is being paid to grading in group work [51], i.e., the topic of fairness in any assessment rather than only in digital assessment. It should be mentioned in conclusion that there is value in using an e-self-assessment tool in learning [66], where the technology makes this process easy and accessible. These findings emphasize the importance of learning management systems in digital assessment. These systems must include both the possibility of various types of assignments and ensure academic honesty. The second important idea is related to the assessment literacy of educators, which is an important necessary competence in the educational process.

5. Conclusions and Limitations

The purpose of any literature review is to summarize and analyze the research into a concept or current issue in the scientific literature. This paper investigated the digital assessment literature of recent years. Conditions and suggestions, opportunities, and challenges were the three main categories used to characterize digital assessment. The evidence from this study leads to the conclusion that digital assessment can be an effective and full-fledged component of the learning process. It should be emphasized that this paper is an analytical summary of previously studied concepts and does not claim astonishing findings. Nevertheless, this study is important for researchers in the development of further research and for both practitioners and teacher educators. In answer to this paper’s research question, the most important characteristics of digital assessment include the following aspects:

- The development of digital skills (for the educator and learner);

- The meaningful selection of appropriate technologies with clear assessment criteria;

- The guidance of student learning and formative training before summative assessment (applying self-assessment);

- The assessment of knowledge and skills at different levels;

- Useful and timely feedback for educators and learners;

- Availability and individualization;

- Ensuring academic integrity.

It is expected that further research will confirm these findings. Future work should focus on enhancing the quality of digital assessment as it is important to define pedagogical principles for the implementation of digital assessment.

This work clearly has some limitations. First, a period of only four years was chosen to review the current literature on what are rapidly changing technologies. It is possible that research before 2018 revealed important characteristics of digital assessment. The second limitation relates to the exclusion of COVID-19 topics, which may also have provided valuable information. As explained above, this literature review was designed to avoid the conditions created by temporary situations. An evaluation of the benefits of the practices developed in remote learning should constitute a separate and extensive systematic review. We can now be confident that the pandemic is over; therefore, this study is a good basis for comparing and evaluating what is worth keeping from the temporary situation. Another step for future research is to investigate the impact of artificial intelligence on assessment, which forces a rethinking of the type of assignment so that the assessment is authentic.

Funding

This research was funded by European Regional Development Fund grant number 1.1.1.2/VIAA/3/19/561.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The author declares no conflict of interest. The funders had no role in the design of the study; in the collection, analyses or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Daniela, L. (Ed.) Why smart pedagogy? In Didactics of Smart Pedagogy: Smart Pedagogy for Technology Enhanced Learning; Springer: Cham, Switzerland, 2019; pp. 7–12.

2. Amante, L.; Oliveira, I.R.; Gomes, M.J. E-Assessment in Portuguese higher education: Framework and perceptions of teachers and students. In Handbook of Research on E-Assessment in Higher Education; Azevedo, A., Azevedo, J., Eds.; IGI Global: Hershey, PA, USA, 2019; pp. 312–333.

3. Babo, R.; Babo, L.; Suhonen, J.; Tukiainen, M. E-assessment with multiple-choice questions: A 5 year study of students’ opinions and experience. J. Inf. Technol. Educ. Innov. Pract. 2020, 19, 1–29.

4. Chen, C.-H.; Koong, C.-S.; Liao, C. Influences of integrating dynamic assessment into a speech recognition learning design to support students’ English speaking skills, learning anxiety and cognitive load. Educ. Technol. Soc. 2022, 25, 1–14.

5. Alruwais, N.; Wills, G.; Wald, M. Advantages and challenges of using e-assessment. Int. J. Inf. Educ. Technol. 2018, 8, 34–37.

6. Timmis, S.; Broadfoot, P.; Sutherland, R.; Oldfield, A. Rethinking assessment in a digital age: Opportunities, challenges and risks. Br. Educ. Res. J. 2016, 42, 454–476.

7. Raaheim, A.; Mathiassen, K.; Moen, V.; Lona, I.; Gynnild, V.; Bunæs, B.R.; Hasle, E.T. Digital assessment—How does it challenge local practices and national law? A Norwegian case study. Eur. J. High. Educ. 2019, 9, 219–231.

8. Appiah, M.; van Tonder, F. E-Assessment in higher education: A review. Int. J. Bus. Manag. Econ. Res. 2018, 9, 1454–1460.

9. Al-Fraihat, D.; Joy, M.; Masa’deh, R.; Sinclair, D. Evaluating E-learning systems success: An empirical study. Comput. Hum. Behav. 2020, 102, 67–86.

10. Lin, C.-H.; Wu, W.-H.; Lee, T.-N. Using an online learning platform to show students’ achievements and attention in the video lecture and online practice learning environments. Educ. Technol. Soc. 2022, 25, 155–165.

11. Lavidas, K.; Komis, V.; Achriani, A. Explaining faculty members’ behavioral intention to use learning management systems. J. Comput. Educ. 2022, 9, 707–725.

12. Huang, R.; Spector, J.M.; Yang, J. Educational Technology: A Primer for the 21st Century; Springer: Singapore, 2019.

13. Milakovich, M.E.; Wise, J.M. Digital Learning: The Challenges of Borderless Education; Edward Elgar Publishing: Cheltenham, UK, 2019.

14. Marek, M.W.; Wu, P.N. Digital learning curriculum design: Outcomes and affordances. In Pedagogies of Digital Learning in Higher Education; Daniela, L., Ed.; Routledge: Abingdon-on-Thames, UK, 2020; pp. 163–182.

15. Carless, D. Feedback for student learning in higher education. In International Encyclopedia of Education, 4th ed.; Tierney, R., Rizvi, F., Ercikan, K., Smith, G., Eds.; Elsevier: Amsterdam, The Netherlands, 2022; pp. 623–629.

16. Kazmi, B.A.; Riaz, U. Technology-enhanced learning activities and student participation. In Learning and Teaching in Higher Education: Perspectives from a Business School; Daniels, K., Elliott, C., Finley, S., Chapmen, C., Eds.; Edward Elgar Publishing: Cheltenham, UK, 2020; pp. 177–183.

17. Prensky, M. Teaching Digital Natives: Partnering for Real Learning; SAGE: Richmond, BC, Canada, 2010.

18. Schofield, K. Theorising about learning and knowing. In Learning and Teaching in Higher Education: Perspectives from a Business School; Daniels, K., Elliott, C., Finley, S., Chapmen, C., Eds.; Edward Elgar Publishing: Cheltenham, UK, 2020; pp. 2–12.

19. Alemdag, E.; Yildirim, Z. Design and development of an online formative peer assessment environment with instructional scaffolds. Educ. Technol. Res. Dev. 2022, 70, 1359–1389.

20. Guardia, L.; Maina, M.; Mancini, F.; Naaman, H. EPICA—Articulating skills for the workplace. In The Envisioning Report for Empowering Universities, 4th ed.; Ubach, G., Ed.; European Association of Distance Teaching Universities: Maastricht, The Netherlands, 2020; pp. 26–29.

21. Podsiad, M.; Havard, B. Faculty acceptance of the peer assessment collaboration evaluation tool: A quantitative study. Educ. Technol. Res. Dev. 2020, 68, 1381–1407.

22. Ibarra-Sáiz, M.S.; Rodríguez-Gómez, G.; Boud, D.; Rotsaert, T.; Brown, S.; Salinas-Salazar, M.L.; Rodríguez-Gómez, H.M. The future of assessment in higher education. RELIEVE 2020, 26, art. M1.

23. Loureiro, P.; Gomes, M.J. Online Peer Assessment for Learning: Findings from Higher Education Students. Educ. Sci. 2023, 13, 253.

24. Lin, C.-J. An online peer assessment approach to supporting mind-mapping flipped learning activities for college English writing courses. J. Comput. Educ. 2019, 6, 385–415.

25. Robertson, S.N.; Humphrey, S.M.; Steele, J.P. Using technology tools for formative assessments. J. Educ. Online 2019, 16, 1–10.

26. Kempe, A.L.; Grönlund, Å. Collaborative digital textbooks—A comparison of five different designs shaping teaching and learning. Educ. Inf. Technol. 2019, 24, 2909–2941.

27. Chin, H.; Chew, C.M.; Lim, H.L. Incorporating feedback in online cognitive diagnostic assessment for enhancing grade five students’ achievement in ‘time’. J. Comput. Educ. 2021, 8, 183–212.

28. Jensen, L.X.; Bearman, M.; Boud, D. Understanding feedback in online learning—A critical review and metaphor analysis. Comput. Educ. 2021, 173, 104271.

29. Gikandi, J.W.; Morrow, D.; Davis, N.E. Online formative assessment in higher education: A review of the literature. Comput. Educ. 2011, 57, 2333–2351.

30. Alshaikh, A.A. The degree of utilizing e-assessment techniques at Prince Sattam Bin Abdulaziz University: Faculty perspectives. J. Educ. Soc. Res. 2020, 10, 238–249.

31. Butler-Henderson, K.; Crawford, J. A systematic review of online examinations: A pedagogical innovation for scalable authentication and integrity. Comput. Educ. 2020, 159, 104024.

32. Gallatly, R.; Carciofo, R. Using an online discussion forum in a summative coursework assignment. J. Educ. Online 2020, 17, 1–12.

33. Finley, S. Writing effective multiple choice questions. In Learning and Teaching in Higher Education: Perspectives from a Business School; Daniels, K., Elliott, C., Finley, S., Chapmen, C., Eds.; Edward Elgar Publishing: Cheltenham, UK, 2020; pp. 295–303.

34. Ibbet, N.L.; Wheldon, B.J. The incidence of clueing in multiple choice testbank questions in accounting: Some evidence from Australia. E-J. Bus. Educ. Scholarsh. Teach. 2016, 10, 20–35.

35. Eaton, S.E. Academic integrity during COVID-19: Reflections from the University of Calgary. ISEA 2020, 48, 80–85.

36. Dawson, P. Defending Assessment Security in a Digital World: Preventing E-Cheating and Supporting Academic Integrity in Higher Education; Routledge: London, UK, 2021.

37. Stödberg, U. A research review of e-assessment. Assess. Eval. High. Educ. 2012, 37, 591–604.

38. Ridgway, J.; McCusker, S.; Pead, D. Literature Review of E-Assessment; Project Report; Futurelab: Bristol, UK, 2004.

39. Booth, A.; Noyes, J.; Flemming, K.; Gerhardus, A.; Wahlster, P.; Van Der Wilt, G.J.; Mozygemba, K.; Refolo, P.; Sacchini, D.; Tummers, M.; et al. Guidance on Choosing Qualitative Evidence Synthesis Methods for use in Health Technology Assessments of Complex Interventions. 2016. Available online: http://www.integrate-hta.eu/downloads/ (accessed on 17 January 2022).

40. Flemming, K.; Noyes, J. Qualitative evidence synthesis: Where are we at? Int. J. Qual. Methods 2021, 20, 1–13.

41. Zawacki-Richter, O.; Kerres, M.; Bedenlier, S.; Bond, M.; Buntins, K. (Eds.) Systematic Reviews in Educational Research: Methodology, Perspectives and Application; Springer: Wiesbaden, Germany, 2020.

42. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. Available online: https://www.bmj.com/content/372/bmj.n71 (accessed on 2 February 2022).

43. Rof, A.; Bikfalvi, A.; Marques, P. Pandemic-accelerated digital transformation of a born digital higher education institution: Towards a customized multimode learning strategy. Educ. Technol. Soc. 2022, 25, 124–141.

44. Hong, J.-C.; Liu, X.; Cao, W.; Tai, K.-H.; Zhao, L. Effects of self-efficacy and online learning Mind States on learning ineffectiveness during the COVID-19 Lockdown. Educ. Technol. Soc. 2022, 25, 142–154.

45. Bozkurt, A.; Sharma, R.C. Emergency remote teaching in a time of global crisis due to CoronaVirus pandemic. Asian J. Distance Educ. 2020, 15, 1–6.

46. Chiou, P.Z. Learning cytology in times of pandemic: An educational institutional experience with remote teaching. J. Am. Soc. Cytopathol. 2020, 9, 579–585.

47. Hodges, C.; Moore, S.; Lockee, B.; Trust, T.; Bond, A. The Difference Between Emergency Remote Teaching and Online Learning. Available online: https://er.educause.edu/articles/2020/3/the-difference-between-emergency-remote-teaching-and-online-learning#fn17 (accessed on 14 January 2022).

48. Newman, M.; Gough, D. Systematic reviews in educational research: Methodology, perspectives and application. In Systematic Reviews in Educational Research: Methodology, Perspectives and Application; Zawacki-Richter, O., Kerres, M., Bedenlier, S., Bond, M., Buntins, K., Eds.; Springer: Wiesbaden, Germany, 2019; pp. 3–22.

49. Ghouali, K.; Cecilia, R.R. Towards a Moodle-based assessment of Algerian EFL students’ writing performance. Porta Linguarum 2021, 36, 231–248.

50. Poth, C. The contributions of mixed insights to advancing technology-enhanced formative assessments within higher education learning environments: An illustrative example. Int. J. Educ. Technol. High. Educ. 2018, 15, 9.

51. Barana, A.; Marchisio, M. An interactive learning environment to empower engagement in Mathematics. Interact. Des. Archit. J. 2020, 45, 302–321.

52. Lajane, H.; Gouifrane, R.; Qaisar, R.; Noudmi, F.Z.; Lotfi, S.; Chemsi, G.; Radid, M. Formative e-assessment for Moroccan Polyvalent nurses training: Effects and challenges. Int. J. Emerg. Technol. Learn. 2020, 15, 236–251.

53. Babo, R.; Rocha, J.; Fitas, R.; Suhonen, J.; Tukiainen, M. Self and peer e-assessment: A study on software usability. Int. J. Inf. Commun. Technol. Educ. 2021, 17, 68–85.

54. Guerrero-Roldán, A.E.; Rodriguez-Gonzalez, M.E.; Karadeniz, A.; Kocdar, R.; Aleksieva, L.; Peytcheva-Forsyth, R. Students’ experiences on using an authentication and authorship checking system in e-assessment. Hacet. Univ. J. Educ. 2019, 35, 6–24.

55. Bahati, B.; Fors, U.; Hansen, P.; Nouri, J.; Mukama, E. Measuring learner satisfaction with formative e-assessment strategies. Int. J. Emerg. Technol. Learn. 2019, 14, 61–79.

56. Mimirinis, M. Qualitative differences in academics’ conceptions of e-assessment. Assess. Eval. High. Educ. 2018, 44, 233–248.

57. Mahmood, H.K.; Hussain, F.; Mahmood, M.; Kumail, R.; Abbas, J. Impact of e-assessment at middle school students’ learning—An empirical study at USA middle school students. Int. J. Sci. Eng. Res. 2020, 11, 1722–1736.

58. Deeley, S.J. Using technology to facilitate effective assessment for learning and feedback in higher education. Assess. Eval. High. Educ. 2018, 43, 439–448.

59. Swart, O.; Shuttleworth, C.C. The new face of alternative assessment in accounting sciences—Technology as an anthropomorphic stakeholder. S. Afr. J. High. Educ. 2021, 35, 200–219.

60. Kocdar, S.; Karadeniz, A.; Peytcheva-Forsyth, R.; Stoeva, V. Cheating and plagiarism in e-assessment: Students’ perspectives. Open Prax. 2018, 10, 221–235.

61. Moccozet, L.; Benkacem, O.; Berisha, E.; Trindade, R.T.; Bürgi, P.-Y. A versatile and flexible framework for summative e-assessment in higher education. Int. J. Contin. Eng. Educ. Life-Long Learn. 2019, 29, 1–18.

62. Ghilay, Y. Computer assisted assessment (CAA) in higher education: Multi-text and quantitative courses. J. Online High. Educ. 2019, 3, 13–34.

63. Garcez, A.; Silva, R.; Franco, M. Digital transformation shaping structural pillars for academic entrepreneurship: A framework proposal and research agenda. Educ. Inf. Technol. 2022, 27, 1159–1182.

64. Bamoallem, B.; Altarteer, S. Remote emergency learning during COVID-19 and its impact on university students perception of blended learning in KSA. Educ. Inf. Technol. 2022, 27, 157–179.

65. Al-Azawei, A.; Baiee, W.R.; Mohammed, M.A. Learners’ experience towards e-assessment tools: A comparative study on virtual reality and Moodle quiz. Int. J. Emerg. Technol. Learn. 2019, 14, 34–50.

66. Martinez, V.; Mon, M.A.; Alvarez, M.; Fueyo, E.; Dobarro, A. E-Self-Assessment as a strategy to improve the learning process at university. Educ. Res. Int. 2020, 2020, 3454783.

67. Wu, W.; Berestova, A.; Lobuteva, A.; Stroiteleva, N. An intelligent computer system for assessing student performance. Int. J. Emerg. Technol. Learn. 2021, 16, 31–45.

68. McCallum, S.; Milner, M.M. The effectiveness of formative assessment: Student views and staff reflections. Assess. Eval. High. Educ. 2020, 46, 1–16.

69. Wong, S.F.; Mahmud, M.M.; Wong, S.S. Effectiveness of formative e-assessment procedure: Learning calculus in blended learning environment. In Proceedings of the 2020 8th International Conference on Communications and Broadband Networking, Auckland, New Zealand, 15–18 April 2020.

70. Weir, I.; Gwynllyw, R.; Henderson, K. A case study in the e-assessment of statistics for non-specialists. J. Univ. Teach. Learn. Pract. 2021, 18, 3–20.

71. Cramp, J.; Medlin, J.F.; Lake, P.; Sharp, C. Lessons learned from implementing remotely invigilated online exams. J. Univ. Teach. Learn. Pract. 2019, 16, 1–20.

72. Makokotlela, M.V. An E-Portfolio as an assessment strategy in an open distance learning context. Int. J. Inf. Commun. Technol. Educ. 2020, 16, 122–134.

73. Peytcheva-Forsyth, R.; Aleksieva, L.; Yovkova, B. The impact of prior experience of e-learning and e-assessment on students’ and teachers’ approaches to the use of a student authentication and authorship checking system. In Proceedings of the 10th International Conference on Education and New Learning Technologies, Palma, Spain, 2–4 July 2018; pp. 2311–2321.

74. Danniels, E.; Pyle, A.; DeLuca, C. The role of technology in supporting classroom assessment in play-based kindergarten. Teach. Teach. Educ. 2020, 88, 102966.

75. Babo, R.; Suhonen, J. E-assessment with multiple choice questions: A qualitative study of teachers’ opinions and experience regarding the new assessment strategy. Int. J. Learn. Technol. 2018, 13, 220–248.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).