1. Introduction

Technology has taken up a major role in every aspect of society, and education is no exception, as universities evolve to better suit the needs and requirements of an increasingly technological society [1] and to master the constant introduction of “digital technologies for communication, collaborative knowledge construction, reading, and multimedia learning in education systems” [2] (p. 3100). The 21st century digital transformation has influenced education in an unprecedented way. Information is no longer restricted to printed books or encyclopedias in the traditional sense; it is now spread across the network of connected digital technologies and may be consumed anytime [3], and “students can learn from anywhere without losing the facets of a seated classroom” [4] (p. 41). Besides increased and diversified access to information, opportunities to contribute to the production and dissemination of knowledge, once exclusive to educational environments, have also become available to every individual, who is now both a producer and a consumer of information, which gives the individual an unprecedented role in society [3]. Education has shifted from a one-way dissemination of knowledge to a mutual-learning community in which knowledge is created collaboratively [5].

Higher education institutions (HEIs) are currently preparing students for jobs that do not yet exist [1], which implies equipping learners with a set of attributes seen as quality indicators and necessary traits for future success. Being able to perform meaningful, authentic tasks and to apply acquired knowledge and skills in real-world contexts are essential 21st century skills that help students contribute effectively to the global workforce [6,7,8,9,10]. Saykili [3] classifies 21st century skills into three main categories: learning and innovation skills (critical thinking and problem solving, communication and collaboration, creativity, and innovation skills); information, media, and technology skills (information literacy, media literacy, and information and communication literacy); and life and career skills (flexibility and adaptability, initiative and self-direction, social and cross-cultural skills, productivity and accountability, leadership, and responsibility skills). Continuous development and lifelong learning in a fast-changing digital era will turn today’s learners into tomorrow’s attractive, hireable, and employable workforce [4,11].

1.1. Assessment in Higher Education

Assessment influences the way students define their priorities and engage in the learning process. This relationship has long concerned educational researchers, and the terminology used to describe assessment has evolved to reflect its purposes [12].

The way assessment is operationalized influences students’ motivation and learning, which is why assessment is seen as “a tool for learning” [13].

Earl [14] presents three distinct yet intertwined purposes of assessment: Assessment of Learning (AoL), Assessment for Learning (AfL), and Assessment as Learning (AaL). AoL is “assessment used to confirm what students know, to demonstrate whether or not the students have met the standards and/or show how they are placed in relation to others” [15] (p. 7). Over the years, assessment in schools has largely had this summative function, with students’ results measured through tests and examinations and their progress subject to socially implemented standards [16]. More recently, educational policies have focused on using assessment more effectively to enhance student learning [15], highlighting the formative purposes of AfL and AaL. AfL focuses on learning and is “part of everyday practice by students, teachers and peers that seeks, reflects upon and responds to information from dialogue, demonstration and observation in ways that enhance ongoing learning” [12] (p. 2). AaL uses “assessment as a process of developing and supporting metacognition for students…. focuses on the role of the student as the critical connector between assessment and learning” [15] (p. 7). Both AaL and AfL support student-centered learning and aim to strengthen student autonomy, decision making, and participation, particularly through feedback on ongoing progress [17].

In their study, Pereira et al. [18] report the emergence of research on alternative or learner-oriented assessment methods in HEIs over the period 2006–2013, with portfolio assessment and self- and peer-assessment practices as the main foci of the studies analyzed. Research on assessment and evaluation in higher education over that period underlines, for example, the advantages of formative assessment, such as enhanced student learning and motivation; the benefits of e-learning assessment, such as opportunities for reflection and innovation; the adequacy of alternative assessment methods other than conventional written tests, as they enable effective learning and professional development; and the effectiveness of peer assessment from the student’s perspective, as it allows interaction between students and produces formative feedback.

1.2. Alternative Digital Assessment

Enhanced technological development and the demand for 21st century skills have led to the use of alternative and sustainable assessment methods and new learning scenarios [8] capable of providing a more effective learning environment. Portfolios, checklists, rubrics, surveys, student-centered assessments, and reflections are some examples of non-standardized forms of assessment that seek to demonstrate student achievement more comprehensively [19] and make learning more meaningful through assessment. Assessment regimes are complex, and aligning instruction, assessment, and effective learning [20] has become quite challenging for instructors at various levels of education [21], as competing priorities must be carefully balanced. As such, a new assessment culture [22] is emerging in which assessment goes beyond its summative role of certification and ranking and takes on a formative perspective, in a change of roles that empowers students to actively build their knowledge and develop their skills [23,24] and challenges instructors to design deep and meaningful learning experiences free of the constraints of time and place [9]. This culture also highlights the importance of features such as authenticity, flexibility, adaptability, social interaction, feedback, self-regulation, and scaffolding when designing an assessment for learning strategy [25].

Recent studies [26,27] have found that, although assessment practices have changed to include the assessment of skills and the enhancement of learning in online environments, alternative digital assessment strategies such as online portfolios, podcasts, storytelling, or self- and peer assessment still depend on individual teachers’ initiative rather than on institutionally planned or implemented assessment processes [28]. According to Amante et al. [25], the “assessment culture”, which diverges from the “test culture”, respects the three elements of the learning process (student, teacher, and knowledge) in a balanced and dynamic way. Online multiple-choice quizzes, for example, incorporate the technological dimension but still favor the psychometric paradigm of assessment rather than the development of the student, as in a learner-centered formative approach. The authors hold that the concept of an “alternative digital assessment strategy” refers to all technology-enabled tasks in which design, performance, and feedback are technologically mediated. The literature [20,28,29] points out that assessment is the most powerful tool for influencing the way students spend their time, orient their effort, and perform their tasks. Therefore, in a competency-based learning environment, assessment requirements must mirror a competency-based curriculum, and assessment strategies need to adapt to online contexts and new learning resources while assessing knowledge, abilities, and attitudes [25,30].

1.3. The PrACT Model: An Alternative Digital Assessment Framework

Within an alternative evaluation culture, and based on the concerns for quality and validity of assessment practices, the authors Pereira, Oliveira, and Tinoca [

30] propose a conceptual framework that led to the PrACT model [

16,

17,

21,

22]. This framework can be used “as a reference in the definition of an alternative digital strategy for online, hybrid (blended learning) or face-to-face contexts with strong use of technologies” and “constitutes a framework for the quality of a given assessment strategy” [

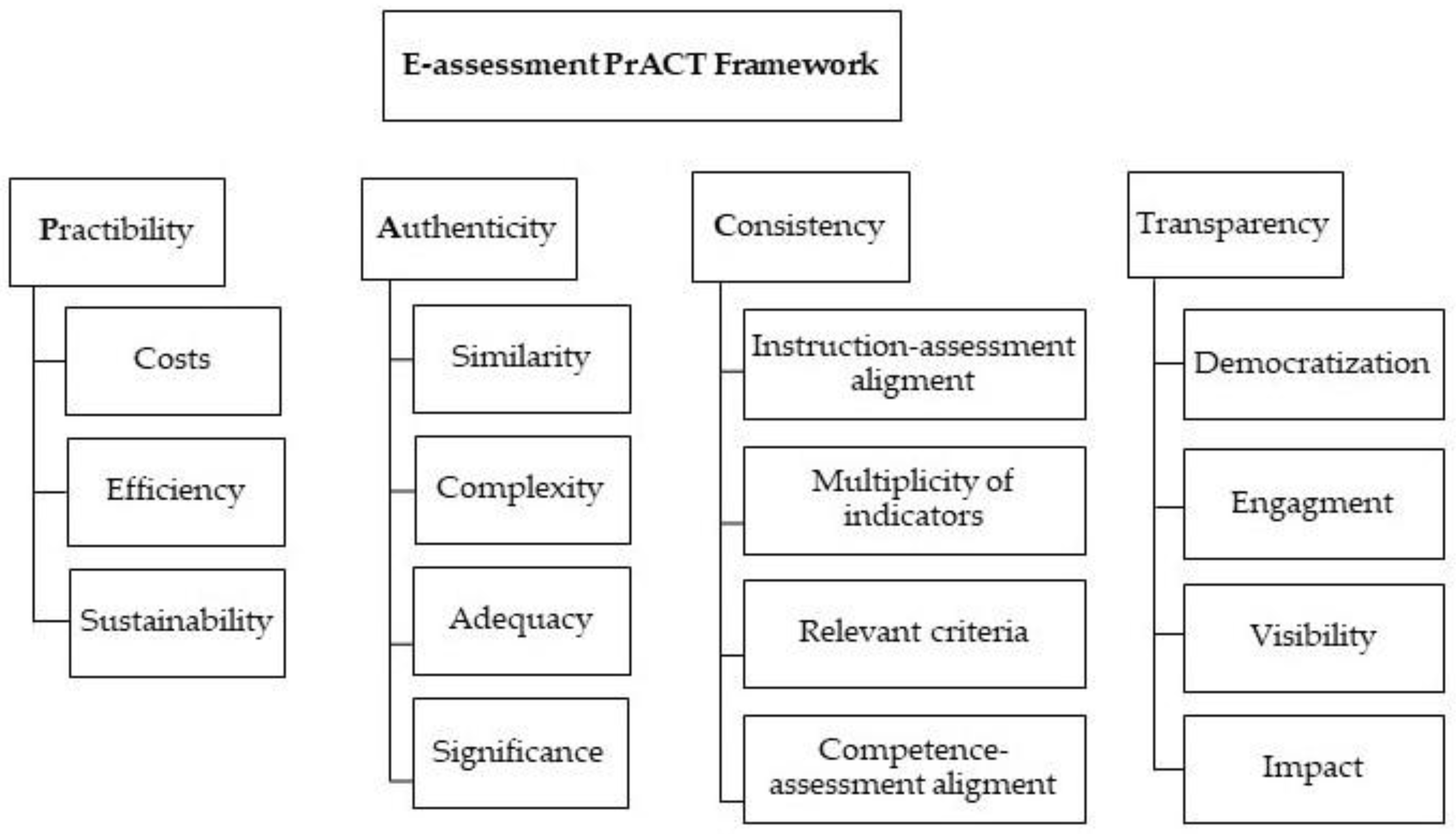

24] (p. 318). The PrACT model is intended to impact the quality standards for e-assessment tasks in an assessment for learning context and emphasizes four main dimensions—Practicability, Authenticity, Consistency, and Transparency—as shown in

Figure 1.

The Practicability dimension relates to the feasibility and sustainability of the assessment strategy and considers the resources, time, and training costs for assessors and organizations. Learners must also recognize the assessment tasks as feasible, relevant, and useful for the learning process. This dimension considers the following criteria: costs (training time and resources for assessors and assessees), efficiency (the relation between costs and effects, based on the expected results), and sustainability (the feasibility of implementing the assessment strategy) [24,25,30,31,32].

The Authenticity dimension relates to lifelong learning and the need for assessment tasks to be complex and significant so that they resemble the skills required in professional life. This dimension includes a set of criteria intended to measure the level of authenticity of the assessment task: similarity (the connection to the real-world context), complexity (the nature of the e-assessment tasks), adequacy (the suitability of the assessment conditions), and significance (the meaningful value of the tasks for learners, instructors, and employers) [24,25,30,31,32].

The Consistency dimension is linked to the psychometric properties of validity and reliability, which imply a variety of assessment indicators. To assess the degree of consistency of the assessment strategy, the following reference criteria are proposed: instruction-assessment alignment (the work done during the learning process is coherent with the assessment tasks), multiplicity of indicators (there is a variety of e-assessment methods, contexts, moments, and assessors), relevant criteria (the assessment criteria are applicable to assessing competencies and skills), and competency-assessment alignment (there is a balance between the competencies developed and the assessment design used) [24,25,30,31,32].

The Transparency dimension refers to learner engagement, meaning that the e-assessment strategy must be visible and intelligible to all participants. The following criteria are contemplated in this dimension: democratization (the participation of students in defining the assessment criteria), engagement (the participation of students in defining the learning goals and performance criteria), visibility (the possibility for learners to share their learning process and products with others), and impact (the effects of the e-assessment strategies on the learning process and on the design of the educational program) [24,25,30,31,32].

The PrACT e-assessment framework clearly adopts an assessment for learning perspective, placing learners as active agents in the learning process who share responsibility for assessing their own learning and that of others, in a collaborative constructivist process that draws on a wide range of sources in a variety of situations and circumstances.

Research on alternative digital assessment [25,27] indicates that online peer assessment (OPA) makes an important contribution to the development of lifelong learning skills and sustainable assessment practices. According to Amendola and Miceli [33] (p. 72), “peer assessment (also called peer review) is a collaborative learning technique based on a critical analysis by learners of a task or artefact previously undertaken by peers”, in which “students reciprocally express a critical judgment about the way their peers performed a task assigned by the teacher and, in some cases, they give a grade to it” in a constructive revision of their work. Wang et al. define peer assessment as an effective formative assessment tool, since “peers share ideas with each other and evaluate each other’s work by giving grades or ratings based on assessment criteria or by giving feedback to peers in written or oral comments” [34] (p. 714). According to Liu et al. [35] (p. 280), “peer feedback is primarily about rich detailed comments but without formal grades, whilst peer assessment denotes grading (irrespective of whether comments are also included)”. Despite these differences, the authors consider that “whether grades are awarded or not, the emphasis is on standards and how peer interaction can lead to enhanced understandings and improved learning” [35] (p. 280). The usefulness of feedback for subsequent assessment (i.e., feed-forward) also plays a critical role in the definition of feedback, as research indicates that students often need help to make use of the feedback they receive [36]. The advancement of technology and the variety of learning management systems (LMS) available in universities allow instructors to boost the potential of online peer assessment by easily integrating it into their courses in place of traditional face-to-face peer assessment [37]. In this study, we adopt the term “online peer assessment” (OPA), as it is commonly used in the literature to refer to a “peer learning strategy that allows learners to assess peers’ performance by scoring or providing feedback” [38] (p. 387) as a means to promote learning in a technologically mediated environment.

Learning with peers is a key skill for lifelong learning, and, according to the literature, OPA enhances learning efficiency and students’ critical thinking while fostering the development of essential professional skills such as higher-order thinking, motivation, responsibility, and autonomy [39,40]. Being able to autonomously and critically assess their own performance and that of a peer against the required standards involves students in a self-reflection process that promotes their understanding of the learning content, stimulates them to explore and justify their own artefacts, and strengthens their critical thinking [33,38,41,42,43]. Peer assessment also boosts students’ social and collaborative skills while fostering a learning environment with high levels of interactivity [38,42]. Technology-mediated peer assessment can ensure anonymity of authorship, supporting a more honest and fair assessment of peers [37,44,45,46], and can reduce restrictions of time and place, thereby providing a ubiquitous learning environment [38].

Given the relevance of assessment to students’ learning process, this study aims to determine and discuss higher education (HE) students’ perceptions of an e-assessment strategy, namely OPA tasks designed on the basis of the PrACT framework, as an assessment for learning strategy. Thus, the following general research question was defined:

The goal of the present article is to present the main findings concerning HE students’ perceptions of online peer assessment and how these practices can influence the development of essential 21st century lifelong learning skills. It also aims to validate the potential of a peer assessment strategy designed according to the four dimensions of the e-assessment framework that led to the PrACT model. Finally, it seeks to identify students’ perceptions of the adequacy and suitability of the peer assessment tool available in the LMS used to support teaching and assessment at the university where the study took place.

2. Methodology

This study reports the implementation of an online peer assessment strategy in an HEI in Portugal. The study focused on students’ perceptions of the suitability of an OPA strategy based on the PrACT model and following an assessment for learning approach. A class of 21 second-year students in an undergraduate program in education at a Portuguese university participated in the study. Participants were selected through non-probabilistic convenience sampling, as this curricular unit was taught by one of the authors, who uses online peer assessment practices. Although this undergraduate program is a degree in education, it does not train teachers; instead, this cycle of studies provides graduates with knowledge and skills that enable them to act within and outside the educational system, particularly in education, training, training management, social and community intervention, and educational mediation. The classes began on 14 February and ended on 1 July 2022. The assessment tasks were proposed and discussed with the students in the first class of the semester, and, from time to time, the teacher reminded the students of the aims of the assessment tasks and of their importance for learning. The teacher also briefly explained and discussed with the students the importance of peer assessment for self-learning and peer learning. All the students participated in the online peer assessment activities, which were proposed in the first class of the curricular unit and which the students agreed to take part in. Nevertheless, only 16 students from the class responded to the online questionnaire sent to them.

The OPA process was implemented using the functionality available for that purpose in the Blackboard Collaborate platform, the LMS adopted by the HEI. This peer assessment tool allows teachers to assign tasks to students randomly and anonymously, so that students do not know which colleagues have been assigned to evaluate their work. The tool thus allowed students to submit their work, have assessors designated randomly and anonymously, submit their assessment remarks anonymously, and consult each peer assessment document as soon as it was completed. Random and anonymous designation of assessors also encourages students to submit their assessment tasks on time: students who do not submit on time are not included in the pool of assessors and therefore do not take part in that peer assessment task. Indeed, some students did not submit their assessment tasks on time and so did not participate in that specific peer assessment task. The peer assessment tool used does not provide a proper process for group peer assessment. To overcome this limitation, only one member of each group was included in the group assessment task, so that we could also designate students randomly and anonymously for the group peer assessment task. It should be noted that, although the students did not know who their peer assessors were, each assessor knew whom they were assessing. This was impossible to avoid, as they were assessing individual learning portfolios, which by their nature reveal their authorship. As for the group essays, every student knew which theme each group had discussed, so it was also very easy to identify the authors.
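The random and anonymous designation of assessors described above can be illustrated with a short sketch. This is not Blackboard’s implementation, whose internal algorithm is not described here; it is a hypothetical scheme, assuming that only students who submitted on time enter the pool, that each work receives two assessors (as in the portfolio task), and that no student assesses their own work. Shuffling the pool and then giving each author the next students in circular order also gives every assessor the same number of works. The function names `assign_assessors` and `works_for` are illustrative.

```python
import random
from typing import Dict, List, Optional


def assign_assessors(submitted: Dict[str, bool],
                     reviewers_per_work: int = 2,
                     seed: Optional[int] = None) -> Dict[str, List[str]]:
    """Hypothetical sketch: map each author to the peers who will assess their work.

    Only students who submitted on time enter the pool of assessors. The pool
    is shuffled, and each author is assessed by the next `reviewers_per_work`
    students in the shuffled (circular) order, so nobody assesses their own
    work and every assessor receives the same number of works.
    """
    pool = [student for student, on_time in submitted.items() if on_time]
    if len(pool) <= reviewers_per_work:
        raise ValueError("not enough on-time submissions for the requested assessors")

    rng = random.Random(seed)  # seeded only to make a run reproducible
    rng.shuffle(pool)
    n = len(pool)
    return {
        pool[i]: [pool[(i + offset) % n] for offset in range(1, reviewers_per_work + 1)]
        for i in range(n)
    }


def works_for(assessor: str, assignments: Dict[str, List[str]]) -> List[str]:
    """What one assessor is shown: the authors whose work they must assess.

    The mapping is not exposed to students, so an assessor is not told who
    assesses their own work.
    """
    return sorted(author for author, peers in assignments.items() if assessor in peers)


if __name__ == "__main__":
    # Invented names; "Carla" missed the deadline and is left out of the pool.
    on_time = {"Ana": True, "Bruno": True, "Carla": False, "Diogo": True, "Eva": True}
    plan = assign_assessors(on_time, reviewers_per_work=2, seed=1)
    for student in plan:
        print(student, "assesses", works_for(student, plan))
```

Excluding late submitters before shuffling mirrors the rule that students who miss the deadline drop out of that peer assessment task; the circular offset is simply one easy way to guarantee distinct, non-self assessors with an even workload.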

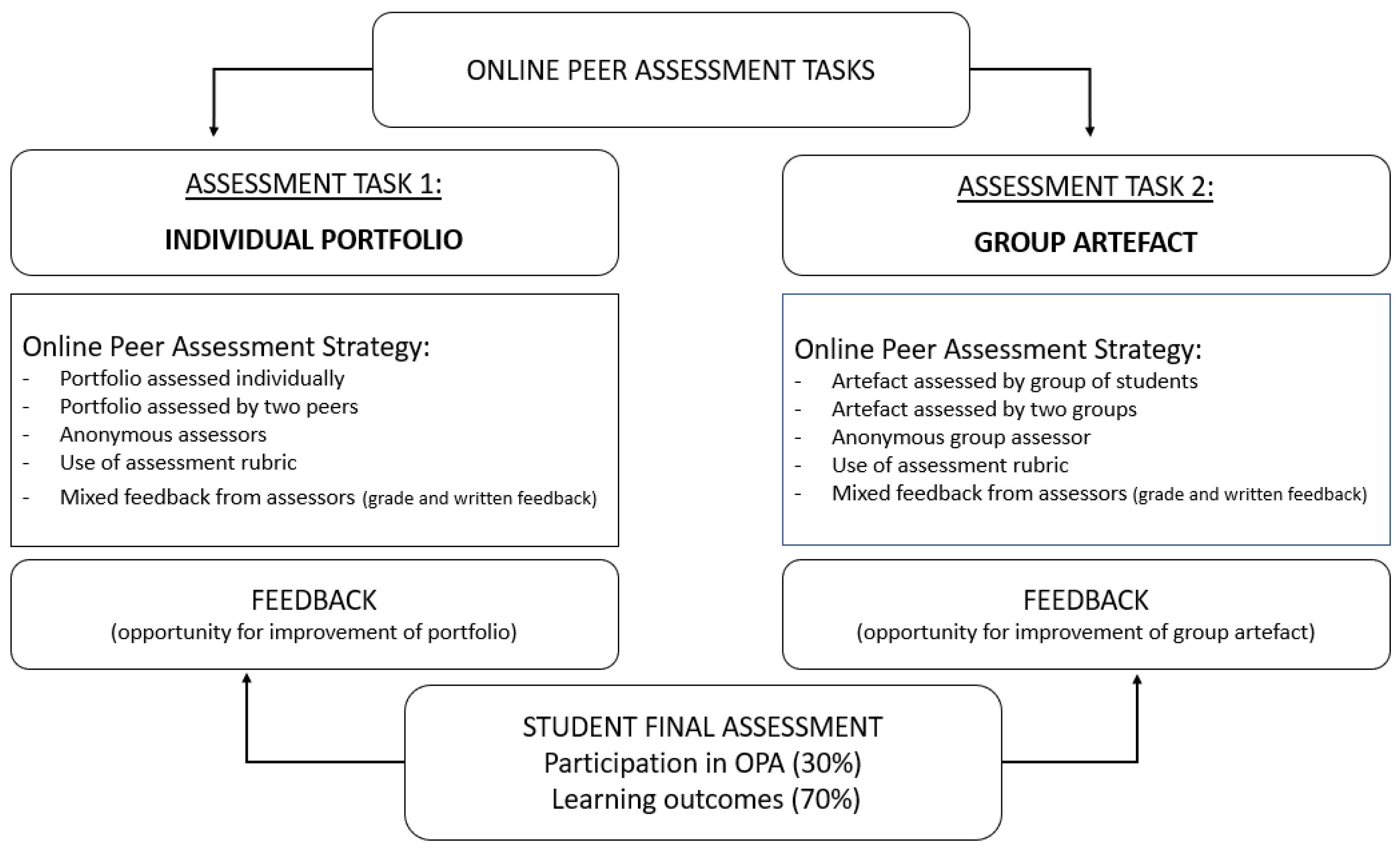

The peer assessment tool allows the teacher to set the start and end of the assessment process. As an online tool, it also allows students to access the assessment task anytime, almost anywhere. Although access to technology may still be limited in some cases, the literature strongly emphasizes these benefits of technology-mediated peer assessment. In this case, the students have free internet access everywhere on the university campus and in almost the whole city. It is very common for public places (such as libraries, public services, or city plazas) and commercial places (such as coffee shops or malls) to offer free internet access. The students could access the university LMS (and therefore the online peer assessment tool) on desktop computers or mobile devices. The OPA strategy was designed around two main assessment tasks, an individual portfolio and a group essay, as shown in Figure 2.

Peer assessment of the portfolio took place at two separate moments (the middle and the end of the semester) and was carried out by two anonymous student assessors. Peer assessment of the group essays was performed by two different groups of students at the end of the semester. The multiple moments and assessors involved in the peer assessment process gave students opportunities to improve their work after receiving written feedback from different peers. To support the peer assessment activities, students were asked to use rubrics indicating assessment criteria and performance levels. The two rubrics were presented, explained, and discussed with the students at the beginning of the semester to help them better understand how to carry out the peer assessment tasks. The students did not define the criteria, but they were asked to discuss the rubrics and were given the opportunity to express their opinion about the relevance and value of the criteria and to suggest modifications. None of the students suggested modifications, and all the students present in class agreed with the criteria used.

The teacher also developed a rubric to assess student participation and performance in the OPA activities and took it into account in the students’ final marks, as discussed with the students in the first class of the semester. As suggested by Liu et al. [35], awarding a percentage of the assignment marks for the quality of peer marking may encourage students to think carefully about the assessment criteria and the writing of feedback. This rubric was also discussed with the students at the beginning of the semester, to help them better understand how they should perform during the peer assessment tasks. It included criteria on: (i) effective use of the rubrics to assess colleagues, referring to and justifying the levels attributed; (ii) quality of writing; (iii) evidence of scientific knowledge about the themes of their colleagues’ work; (iv) evidence of critical analysis of peer work; and (v) participation in the peer assessment activities (in some or all of them).

Data collection was based on an online questionnaire organized in six parts, four of which corresponded to the four dimensions of the PrACT framework. It included a total of 36 multiple-choice questions, answered on a 4-point Likert scale (1 = totally disagree, 2 = disagree, 3 = agree, 4 = totally agree) with a no-opinion option, and two open-ended questions. An initial draft of the questionnaire was sent to two authors of the PrACT framework for analysis, after which two working meetings were held with them to discuss it. These meetings provided valuable suggestions and improvements and were very important in developing the final version of the questionnaire. The questionnaire was then submitted to the Ethics Committee of the HEI, which approved it. Ethical concerns, including informed consent, confidentiality, and anonymity, were considered in all phases of data collection and analysis. After this process, an email with the link to the online survey was sent to the students, but only once the classes of the curricular unit in which the study took place had ended and the students had been graded. The link was available for approximately one month, and no reminders were sent, since it was the exam period for some of the students and the holiday period for others.

Figure 3 presents the different phases of the data collection process.
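Results in Section 3 are reported as counts of agreement among the 16 respondents (for example, 12 out of 16). The sketch below shows one way such counts can be tallied from the 4-point scale described above, assuming that “agree” means a response of 3 or 4, that “disagree” means 1 or 2, and that the no-opinion option is coded as `None` and kept apart rather than counted as disagreement. The coding and the names `summarize_item` and `as_fraction` are assumptions for illustration, and the responses in the example are invented, not the study’s data.

```python
from typing import Dict, List, Optional

# Assumed coding of one questionnaire item: 1 = totally disagree, 2 = disagree,
# 3 = agree, 4 = totally agree, and None = the no-opinion option.
AGREE = {3, 4}
DISAGREE = {1, 2}


def summarize_item(responses: List[Optional[int]]) -> Dict[str, int]:
    """Tally one 4-point Likert item, keeping 'no opinion' separate from disagreement."""
    for r in responses:
        if r is not None and r not in AGREE | DISAGREE:
            raise ValueError(f"invalid Likert code: {r!r}")
    return {
        "respondents": len(responses),
        "agree": sum(r in AGREE for r in responses),
        "disagree": sum(r in DISAGREE for r in responses),
        "no_opinion": sum(r is None for r in responses),
    }


def as_fraction(summary: Dict[str, int], key: str = "agree") -> str:
    """Format a count the way the results are reported, e.g. '12 out of 16'."""
    return f"{summary[key]} out of {summary['respondents']}"


if __name__ == "__main__":
    # Invented responses from 16 hypothetical respondents (not the study's data).
    item = [4, 3, 3, 4, 2, 3, None, 4, 3, 3, 4, 1, 3, 3, 4, 2]
    summary = summarize_item(item)
    print(summary)
    print(as_fraction(summary))
```

Keeping the no-opinion option as its own category matters: folding it into disagreement, or dropping it from the denominator, would change the proportions reported.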

3. Results and Discussion

Results from the survey are presented and discussed according to the four main sections of the questionnaire, which correspond to the four dimensions of the PrACT model (Practicability, Authenticity, Consistency, and Transparency), and to the criteria that define each dimension.

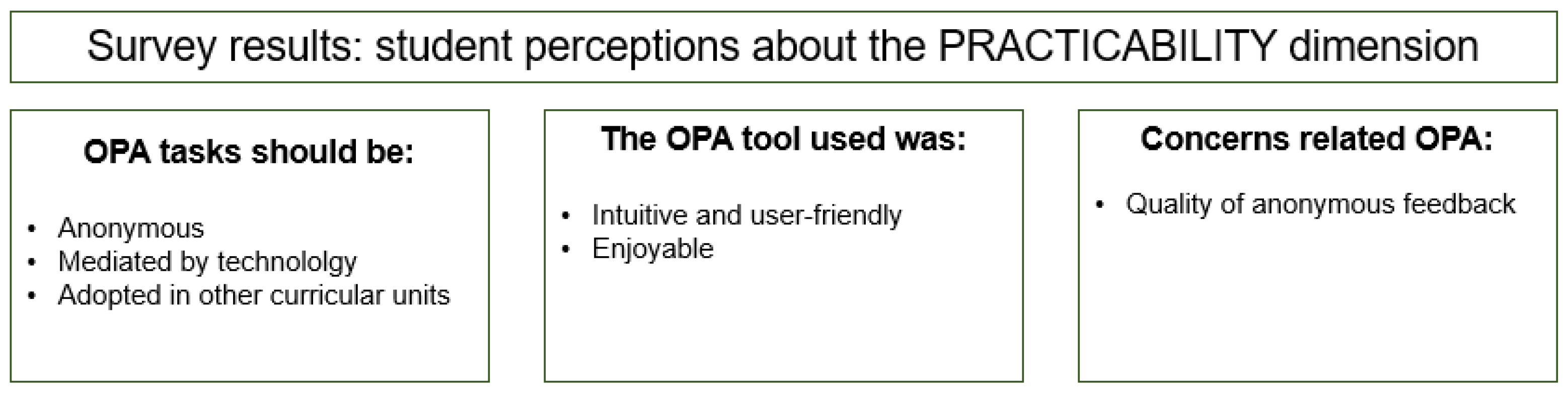

3.1. Practicability Dimension

When implementing competency-based e-assessment, the Practicability dimension can help ensure the quality of the process, particularly through cost reduction, higher efficiency, and recognized sustainability.

Regarding the costs in time or digital resources required to implement e-assessment activities, studies have shown that technology can help reduce teacher workload and the time required to assess tasks and provide individual feedback [34,45,47,48]. Technology can also remove restrictions of time and place and promote a ubiquitous learning environment [38,49]. As for the time costs of engaging in the OPA activities, all the students agreed in the questionnaire that the use of a digital tool facilitated the peer assessment process.

Efficiency relates costs to the expected results. Several authors [34,37,50] explain that online peer assessment has been replacing traditional peer assessment owing to the proliferation of LMSs in academic institutions, the multiple benefits associated with significant learning, and the anonymity it provides. The perceptions of the students involved in the current study suggest that the digital tool used to perform the online peer assessment activities contributed to the efficiency of the assessment strategy. Most of the students agreed that the assessment tool was intuitive and user-friendly and disagreed that it made the process slow or tiring. All students but one enjoyed using the tool. The students also responded well to the peer assessment activities, reporting that, thanks to the anonymity of the online process, they felt no social pressure or constraints, as seen in previous research [37,44,45]. The students were, however, divided on the quality of anonymous feedback: half of them (8 out of 16) considered anonymous feedback less valid or reliable than non-anonymous feedback [37].

Most of the students (12 out of 16) responded that they would like to see the current OPA process implemented in other curricular units (classes), which leads us to conclude that they consider it feasible, relevant, and useful, “assuring that it is possible to successfully implement and sustain the proposed assessment design” [23].

Practicability is particularly important in online contexts, given their specific demands regarding resources, time, and training costs, as well as efficiency and sustainability. Student perceptions concerning this dimension are summarized in Figure 4.

3.2. Authenticity Dimension

The Authenticity dimension considers the degree of similarity between the skills assessed and those required in professional life. In this section of the questionnaire, students’ answers were almost unanimous, with the great majority acknowledging the enhanced learning and competency development promoted by online peer assessment practices.

Similarity is one of the reference criteria that contribute to the quality of competency-based e-assessment tasks and refers precisely to the resemblance of the tasks to real-world contexts. OPA is known for fostering soft skills useful for students’ job placement, such as critical thinking, self-assessment, responsibility, autonomy, and team building [33,38,39,40,41,42,43]. In line with this, all the students agreed in the survey that participating in the OPA tasks contributed to the development of assessment and analytical skills, digital competencies, and critical reasoning, and promoted collaborative learning through contact with other points of view.

Real-life and professional contexts are often complex and admit a variety of possible solutions, posing a cognitive challenge that can be met through significant learning and e-assessment tasks such as OPA [2,32], particularly for HE students [38]. Accordingly, most students in this study (14 of 16) agreed that peer assessment is indeed a complex task, a perception accentuated by their lack of any previous experience with this type of task. Panadero [51] suggests that more intensive peer assessment implementations can produce better human and social results, as students become aware of the complexities of peer assessment.

In their professional lives, students will face situations in which they are asked to comment on or evaluate the work of others, and HEIs should reflect this demand. Nicol et al. [52] cite recent studies to highlight the benefits of producing feedback and developing assessment skills for meaningful learning. This corresponds to the significance criterion, that is, the meaningful value of the e-assessment task for students, instructors, and employers. In the survey, almost all students believed that peer assessment relates to what they will do in the future, although only half of them agreed that the task could actually be useful for their future. This discrepancy may arise because skills such as taking ownership of the assessment criteria, making informed judgments and articulating them in written feedback, or improving one’s work through this reflective process are not, as students perceive it, specifically developed through the current curriculum, despite being an important requirement beyond university [52].

The quality of an alternative assessment task also depends on how well its complexity matches the conditions under which it is performed. In the survey, when asked about the time available to perform the OPA tasks, all students but one considered it appropriate. Presenting the rubrics and setting the deadlines in the first class of the semester may have contributed to effective time management by the students.

The Authenticity dimension emphasizes the need for students to perceive online assessment tasks as complex, related to real-life context, and significant.

Figure 5 illustrates student perceptions concerning the online peer assessment tasks they participated in.

3.3. Consistency Dimension

The Consistency dimension “takes into account that the assessment of competences requires the implication of a variety of assessment methods, in diverse contexts, by different assessors, as well as the adequacy of the employed strategies” [23].

One of the criteria this dimension comprises is the multiplicity of indicators, to which the entire online peer assessment process contributes: products are assessed by multiple peers assigned randomly and anonymously by technology [37], products are reviewed at multiple moments thanks to the ubiquitous learning environment that technology creates [49], and products can be saved, retrieved, and shared in multiple formats and contexts [40]. Nicol et al. [52] suggest that exposure to work of varying levels and to feedback from different sources helps students produce high-quality work. Beyond the impact on learning, Amante et al. [25] note that skill assessment requires a variety of methods, contexts, and assessors to lend validity and credibility to the assessment process. Corroborating this perspective, all the students but one agreed in the survey that receiving feedback on their work at multiple moments and from multiple peers helps ensure a reliable assessment process.

The literature [42,53] suggests that the use of rubrics improves both the quantity and quality of peer assessment feedback and leads to more appropriate and reasonable feedback. As mentioned in Section 2, all the students had access to two rubrics with relevant criteria and performance indicators to support their review and production of feedback on both the portfolio and the group essay. When asked about the appropriateness of the rubrics, all the students agreed that they assured the quality of the assessment strategy. Although the rubrics had been presented and discussed at the beginning of the semester, when questioned about them in the online survey at the end of the semester, all the students still considered the criteria used to assess the portfolio adequate, and all but one agreed with the criteria used to assess the group essay.

According to the student perceptions stated in the questionnaire, all the students agreed that the online peer assessment design was aligned with the competences to be developed in the curricular unit, assuring the quality of the e-assessment task according to the proposed criteria [23]. Panadero [51] holds that, when the purpose of peer assessment is to enhance learning, the focus should be on the clarity and validity of the feedback provided by peers rather than on the assignment of a specific mark. The importance of feedback production and critical reasoning was discussed with the students in the first class, along with the implementation of the OPA process.

Finally, the Consistency dimension of the PrACT model comprises the criterion that measures “instruction-assessment alignment”, which reflects “the need to provide e-assessment scenarios that are representative of the learning situations experienced by the students” [23] (p. 13). All the students agreed that the work developed during classes matched the assessment tasks used, and only two students disagreed that the assessment criteria were aligned with the work methodology of the curricular unit. All the students but one believed that student participation in the peer assessment process should count toward their final marks, acknowledging the validity and reliability of this OPA design.

Figure 6 shows students’ perceptions concerning the Consistency dimension, which stresses “the importance of aligning the competences being assessed with the e-assessment strategies being used and the assessment criteria, as well as the need to use a variety of indicators” [23] (p. 12).

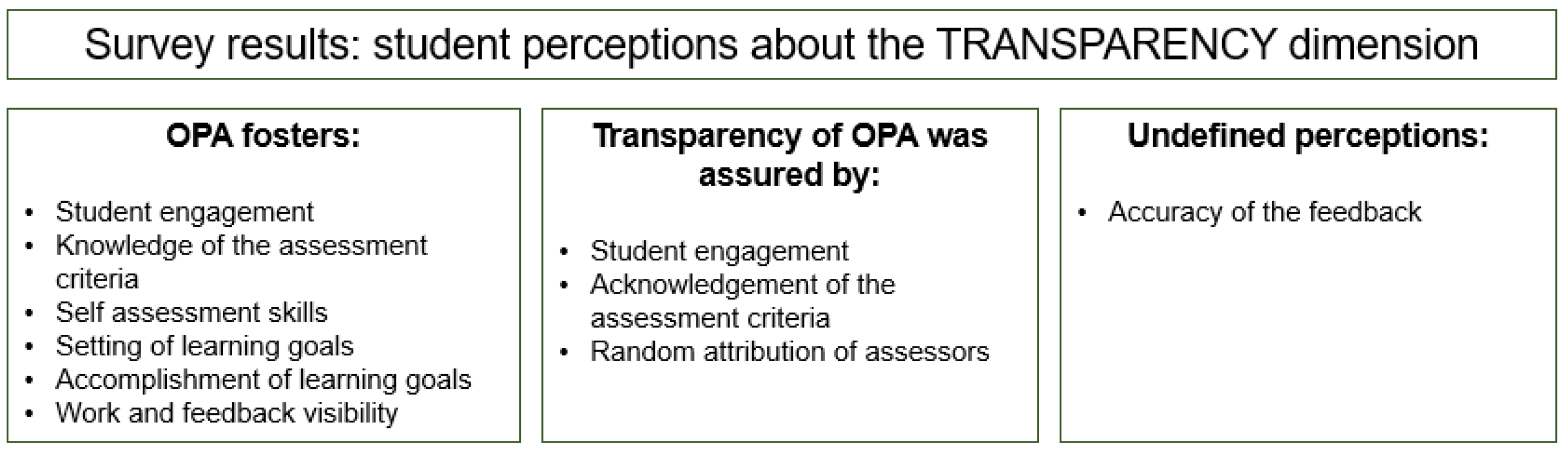

3.4. Transparency Dimension

“The transparency dimension promotes student engagement in online tasks through the democratization and visibility of the e-assessment strategies being used” [24].

As mentioned before, the students were made aware of the OPA strategy, the assessment goals, and the rubrics from the first class of the semester. This awareness helps students know what is expected of them and adjust their learning process [23], promoting autonomy and meaningful learning [54]. Owing to this democratization of the e-assessment task, all the students in the survey regarded the assessment process as transparent. Panadero [51] suggests that, through peer assessment, students are given the opportunity to state their point of view and share responsibility for the assessment process, which involves human and social factors that were undervalued in the literature until very recently. This author believes peer assessment “does not happen in a vacuum; rather it produces thoughts, actions, and emotions as a consequence of the interaction of assessees and assessors” [51] (p. 2). When questioned in the survey about the validity of the feedback received, students’ opinions differed, and the majority believed that their work had not been reviewed according to the established criteria. This perspective is consistent with previous findings in the literature suggesting that students do not acknowledge their peers’ competence or authority to perform assessment tasks and still prefer assessment by the teacher alone [26,37,40,51,52]. To reduce students’ distrust and increase the reliability of the online peer assessment strategy, the literature strongly recommends regular training and interaction [37,40,51,55]. It is important to note that, in the open-ended questions, these students reported that they had never been involved in peer assessment activities, which could help explain their distrust.

Student engagement in the definition of the learning goals contributes to their active participation, commitment, and responsibility, and defines the degree of transparency of an assessment task [23,45]. As a collaborative learning method, online peer assessment creates opportunities for students to articulate and negotiate their perspectives on the assessment criteria, and to co-construct knowledge by engaging in explanation, justification, and questioning processes to “negotiate meaning and reach consensus” [40] (p. 209). Consistent with this, all the students in our study responded that participating in the peer assessment activities enabled greater engagement in the activities of the curricular unit, making the assessment process more transparent.

Web-based peer assessment has several advantages that contribute to the visibility of an assessment strategy, that is, the possibility of students sharing their learning processes or products with others [23]. Besides the other benefits already mentioned (e.g., ubiquity, anonymity, and format diversity), technology permits “instant electronic submission, storage, distribution and retrieval of student work as well as assessment data” [40] (p. 208), making assessment visible to the student or to others at any moment of the learning process and supporting collaboration within the learning community. Accordingly, all the students in the study agreed that using a digital tool allowed them to keep a record of the peer feedback received. All the students but one also agreed that the random distribution and anonymity of assessors provided by technology made the assessment process transparent.

According to Nicol et al. [52], peer assessment gives students a unique opportunity to use the meaningful feedback they have received constructively, reformulating their initial products before final submission. This does not usually happen when feedback is provided by the teacher, as teacher correction generally means moving on to the next task in the curriculum rather than reformulating the task reviewed. This opportunity for improvement can be related to the impact that e-assessment strategies have on the learning process. Accordingly, in the questionnaire, only one student disagreed that the feedback provided by peers helped them reach their learning goals. Fukuda et al. [56] highlight that learners can be taught how to learn by developing self-regulated learning skills, with which they monitor and regulate their learning process, thereby influencing their academic success. Skills such as self-reflection and self-efficacy can be fostered by the participation, critical judgement, and self-regulation required to formulate evaluative feedback in peer assessment activities [33,37,55,57,58]. Corroborating the literature, all the participants in the study reported that the feedback received from their peers influenced their self-assessment and helped them define learning goals for competence development.

Students’ perceptions of their engagement in the learning process through online peer assessment, as promoted by the Transparency dimension, are summarized in Figure 7.

3.5. Open-Ended Responses

Student responses in the open-ended section of the survey corroborated the findings from the previous sections of this study regarding the dimensions of the PrACT model. The survey included two open-ended questions. The first question (Q1) asked students whether they had participated in all the OPA activities set, requesting that they either describe their experience or explain why they had not participated. The second question (Q2) asked whether they had previously participated in any other OPA activities and whether that previous experience (or lack of it) influenced their engagement in the present task. Concerning Q1, four students mentioned that they had not participated in all the activities, either for professional reasons or for lack of time. The 12 students who did participate in all the activities either simply replied that they had enjoyed it or listed some of the positive outcomes they had perceived (see Table 1). Regarding Q2, the results were surprising, as none of the students had ever participated in OPA activities. Twelve students felt that this lack of experience could have influenced their engagement and cited it as a main reason for the complexity and difficulty associated with the task (see Table 1). The other four students felt that previous practice would not have influenced their engagement, simply mentioning that it was a new experience and that they had never assessed portfolios.

After performing a six-phase thematic analysis [59] of the open-ended answers to identify patterns of meaning, it was possible to code the students’ perceptions of online peer assessment into two main themes: the benefits of OPA and the constraints of OPA. Within the first theme, three sub-themes were identified:

Within the second theme, it was possible to identify one sub-theme:

Generally speaking, the students pointed out the benefits they associated with the practice of peer assessment tasks and highlighted the complexity it represents. Emphasis was placed on the development of general skills, with specific mention of the promotion of critical thinking and digital literacy skills (Q1). Findings also reveal that none of the students had participated in peer assessment activities before, which led them to consider peer assessment a complex and difficult task (Q2).

Table 1 shows some sample comments for each of the main themes mentioned.

Limited or absent student participation in assessment processes is also reported by Iglesias Péres et al. [60] and by Flores et al. [13], who highlight the predominance of traditional assessment practices, and by Ibarra Saiz and Gómez [61], who call attention to the scarce training in assessment acknowledged by teachers and students themselves. As stated by Panadero and Brown [62], HE is the most suitable context for implementing peer assessment, owing, on the one hand, to the considerable maturity and competence young adults possess to perform such a task and, on the other hand, to their implicit need to be able to assess their own work and that of others, as well as to have their work assessed, in the very near professional future. Nevertheless, when teachers’ motivation for using peer assessment is studied in the Spanish context [62], it can be concluded that peer assessment is used less in the HE setting, possibly as a natural result of its non-use at previous levels of education, course durations that leave few opportunities for collaborative activities, large HE classes that prevent a relationship of trust between student assessor and assessee, a teaching-learning perspective focused on the transmission of knowledge rather than on the development of competences, or, finally, the minimal pedagogical training of HE teachers in assessment [62].

3.6. Main Findings

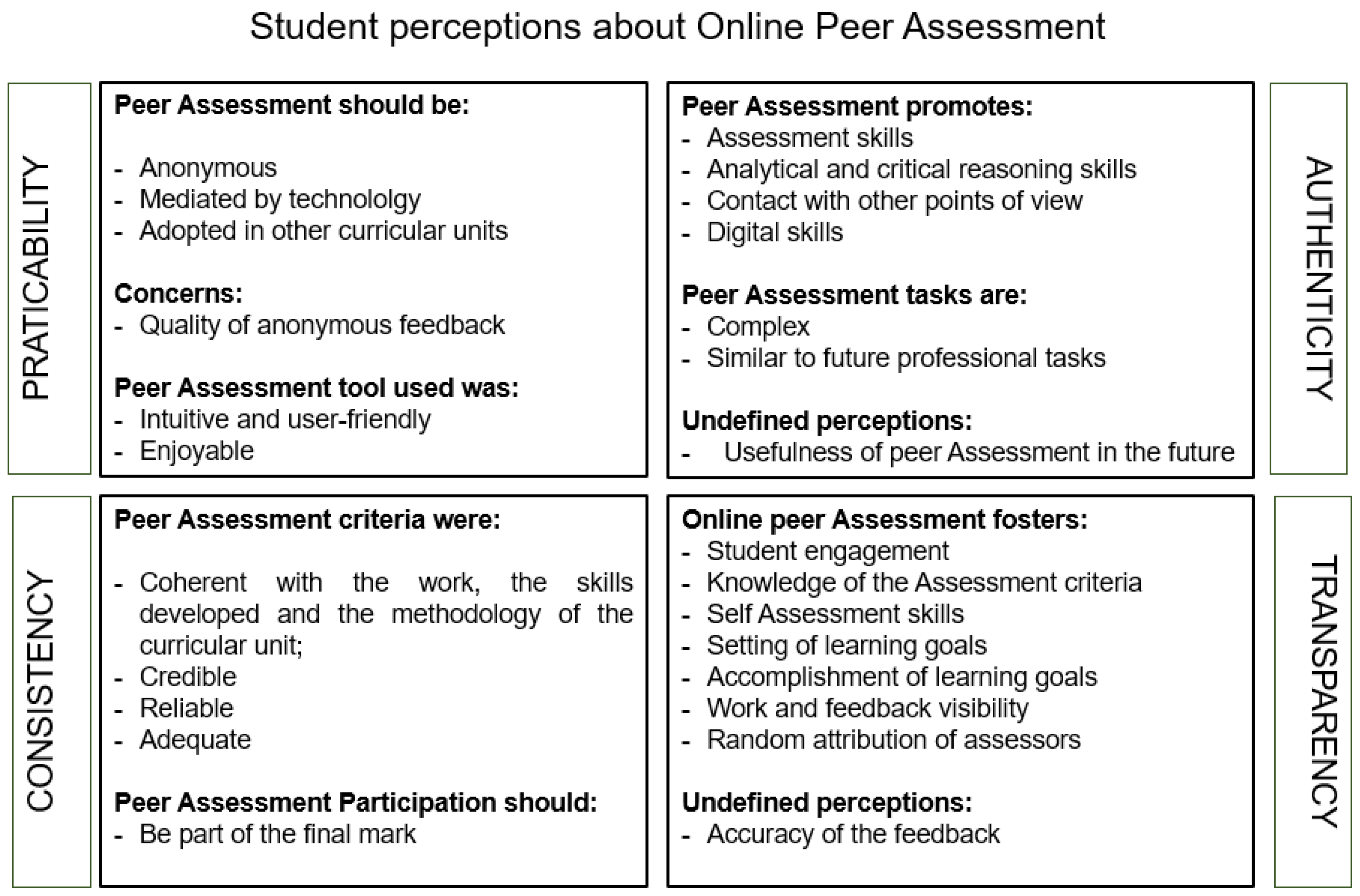

This study set out to portray and discuss student perceptions of the implementation of an OPA design based on the dimensions and criteria of the PrACT framework. This framework seeks to promote the quality of competency-based e-assessment strategies, taking into account technological mediation and teacher and learner needs within an assessment for learning approach. Figure 8 summarizes the main findings obtained from the online survey administered to the HE students who, during one semester, participated in the OPA tasks promoted in one of their curricular units.

4. Conclusions

The demands and concerns of the 21st-century knowledge society have raised awareness of alternative assessment practices. This investigation reflects on three key areas of assessment: formative assessment, digital assessment, and assessment in higher education. Grounded in the principles of these forms of assessment, our research comprises the implementation, in a higher education setting, of an alternative digital assessment strategy with an assessment for learning foundation, inspired by the PrACT framework.

When we consider the several roles and conceptions of assessment, we notice the emphasis given in recent decades to competency-based or formative assessment. This conception of assessment implies the use of a variety of strategies and assessment methods and emerges in the context of an “assessment culture” [22] that is essentially qualitative and student-centered, supports the assessment of authentic, real-world tasks, comprises reflection and self-knowledge, includes collaborative and co-regulated learning, and prioritizes the learning process [63]. Because recent technological advances have brought about a shift to a highly connected, information-rich society, today’s students have different educational needs, namely the need for lifelong learning and continuous development that prepares them for their professional future. Traditional assessment only allows students to find out their level of intellectual knowledge, limiting and regulating access to new knowledge [7]. Alternative assessment seeks to create opportunities for continuous development, monitoring learning strategies centered on the individuality of each student and on the application of acquired skills to the real world.

In the new assessment culture, technology-mediated assessment also has the potential to contribute to more meaningful and powerful learning [4,64]. The methodologies and tools used to carry out digital assessment have diversified and improved over time, providing instructors with a set of mobile applications and digital platforms that make the assessment process easier and far more beneficial, promoting the constructivist and formative dimension of the learning process [5]. Self-assessment and peer assessment tasks can also be simplified by technology and can help increase student motivation and participation [64] if implemented thoughtfully and carefully. However, successfully integrating technology in the classroom and validating its impact on assessment for learning remains challenging and requires technology to be effectively aligned with the learning objectives. The use of technology per se may not represent a transformative and worthwhile pedagogical practice. The effective application of technology, on the other hand, enables greater student involvement, access to feedback from teachers and peers in a collaborative construction of knowledge, storage of learning artefacts that reinforces reflection and self-regulation, the promotion of autonomy, and the obvious elimination of time and place restrictions [65]. The literature suggests that technology may be a solution to the challenges posed to HEIs, as it responds to new learning environments, to students’ educational needs, and to the pedagogical skills required of 21st-century teachers [3].

The PrACT conceptual framework emerges from the need to ensure the validity of competency-based assessment and to authenticate the design of an alternative digital assessment strategy [23,25]. This study focuses on the implementation of an online peer assessment process based on the dimensions of the PrACT framework. The results of the student survey suggest that students were aware of three main learning achievements resulting from the implementation of this OPA design:

Development of scientific/cognitive skills due to greater student participation and motivation in the activities;

Development of metacognitive skills, critical thinking, and collaboration due to the production and reception of constructive and significant feedback;

Development of digital skills and consolidation of learning due to the use of a digital tool.

On the one hand, the students believe that the implementation of this digital assessment strategy may have contributed to increased student engagement and motivation in the activities presented in class [66,67]. This participation, in turn, may have motivated the students to play an active role in the learning process by setting learning goals and doing their best to fulfill them, stimulating the development of cognitive skills [33,34,62,66,68].

On the other hand, the students also perceived that producing constructive and meaningful feedback, a cognitively more demanding and complex task than receiving it [68], helped them engage in metacognitive processes and promoted the development of essential skills, such as critical thinking, self-assessment, and analytical skills, which enhance meaningful learning [32,37,43,60,66,67].

Finally, according to the students’ perceptions, the technological dimension not only fosters the development of critical digital skills [5,23] but also provides a setting for future reference and consolidation of learning through the storage of the entire learning process [23,40,67].

The survey results also point to some concerns students have when involved in peer assessment practices, such as the accuracy and quality of anonymous feedback or the usefulness of such activities for their future. On this point, it is important to note that contradictory results were found: students considered peer assessment relevant to their future but did not see the task itself as developing their skills, which suggests the need for further studies.

In general terms, the results obtained in this study add to the existing literature [5,32,33,37,43,60,62,66,67] on the benefits and constraints experienced by HE students engaged in technology-mediated peer assessment. Although several studies worldwide have considered different variables of digital peer assessment, there is very little literature on this topic in the context of Portuguese higher education institutions, particularly literature drawing on the PrACT conceptual framework used in this study.

Online peer assessment, as presented in this study, is a student-centered alternative assessment strategy that enhances learning through the development of core competencies. Training teachers and students to understand the benefits of peer assessment, and implementing it regularly, should be a primary objective of higher education curricula, given that the constraints identified here have a strong impact on the development of essential skills in students during HE and beyond. Given the demands of today’s society, resorting to innovative, authentic, and multifaceted assessment practices that enhance meaningful and lifelong learning is one of the greatest challenges facing educational institutions, particularly HEIs.

5. Limitations of the Study and Future Research

In spite of the encouraging results of this study, some limitations should be acknowledged, which indicate the need for future studies. First, the sample size was relatively small, as the participants were undergraduate students from a single curricular unit. Given the characteristics and size of the sample, we did not consider using inferential statistics at this stage of the study. Second, the study was implemented over one semester, whereas studies [37,40,55] indicate that regular training and practice are required to enhance student motivation and feedback quality, as students’ beliefs about the utility of the task may relate to their effort and performance. Finally, the only data collection method used was the student survey. Other data collection methods, such as interviews or document analysis, were considered at the beginning of the study but could not be carried out.

In future studies, it would be interesting to implement this assessment design at different levels of education over consecutive school years, including other data collection methods such as observation, document analysis, and interviews, in order to capture not only student perceptions but also teachers’ and employers’ perspectives on the usefulness of implementing an online peer assessment strategy based on the PrACT framework during students’ school years, particularly in tertiary education.