Rubric’s Development Process for Assessment of Project Management Competences

Abstract

1. Introduction

2. Theoretical Background

2.1. Assessment of Competences

2.2. Rubrics for Assessment

2.3. Individual Competence Baseline (ICB)

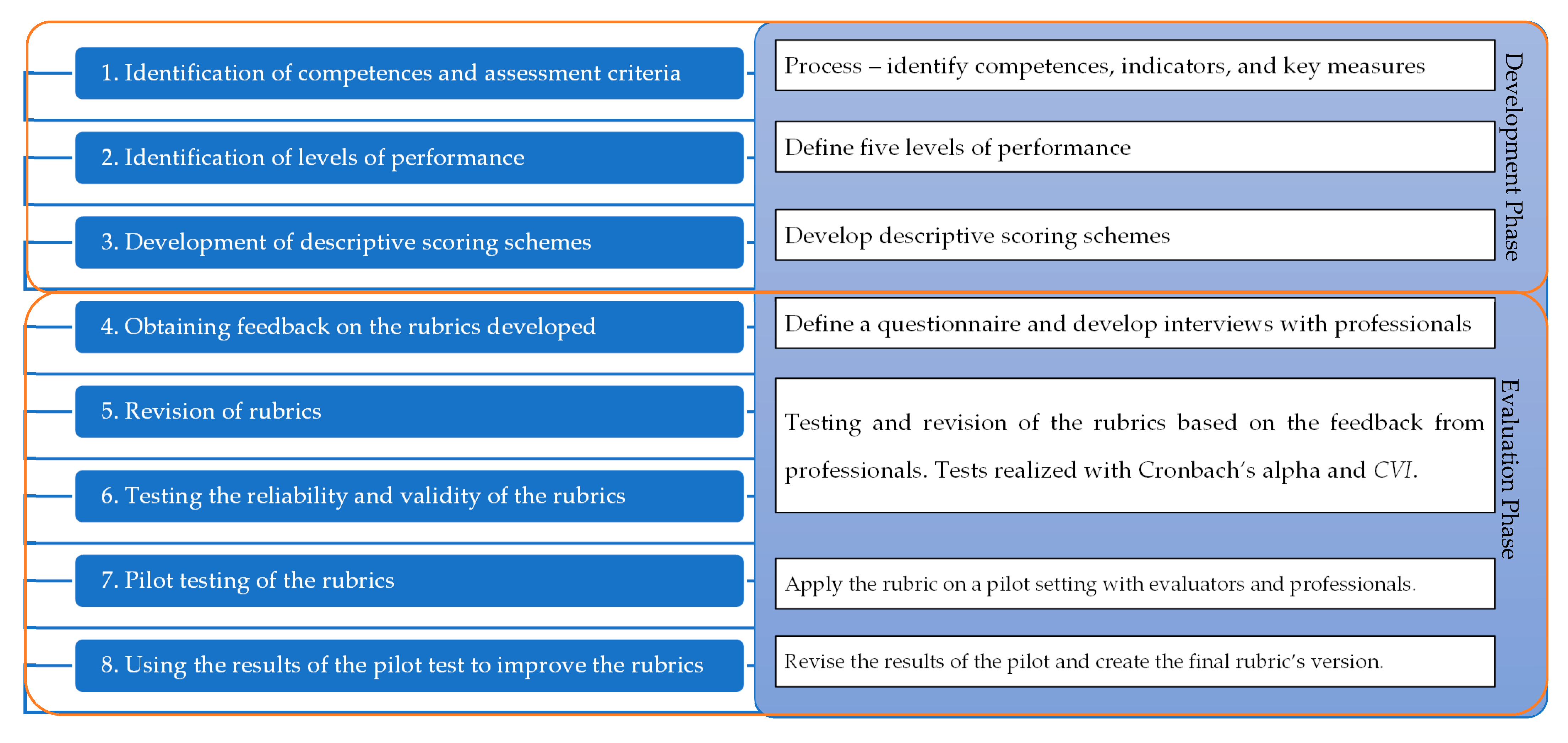

3. Research Methodology

- (1) Awareness of the problem: Refers to the understanding of the problem involved [42]. The result is the definition and formalization of the problem to be solved, its boundaries (external environment), and the necessary satisfactory outcome.

- (2) Suggestion: To suggest the key concepts needed to solve the problem.

- (3) Development: To construct candidate solutions to the problem from the key concepts, using various types of design knowledge (when developing a candidate, if something unsolved is found, it becomes a new problem to be solved in another design cycle).

- (4) Evaluation: The process of verifying the behavior of the artefact in the environment for which it was designed.

- (5) Conclusion: General formalization of the process and its communication to the academic and professional communities.

- The rubric’s assessment criteria are relevant and measure important competences related to leadership practices in project contexts.

- The rubric’s indicators do not address extraneous content and address all aspects of the intended content.

- The rubric’s rating scale and performance levels are adequate for the assessment of the important indicators of leadership competence.

4. Rubrics Development Process

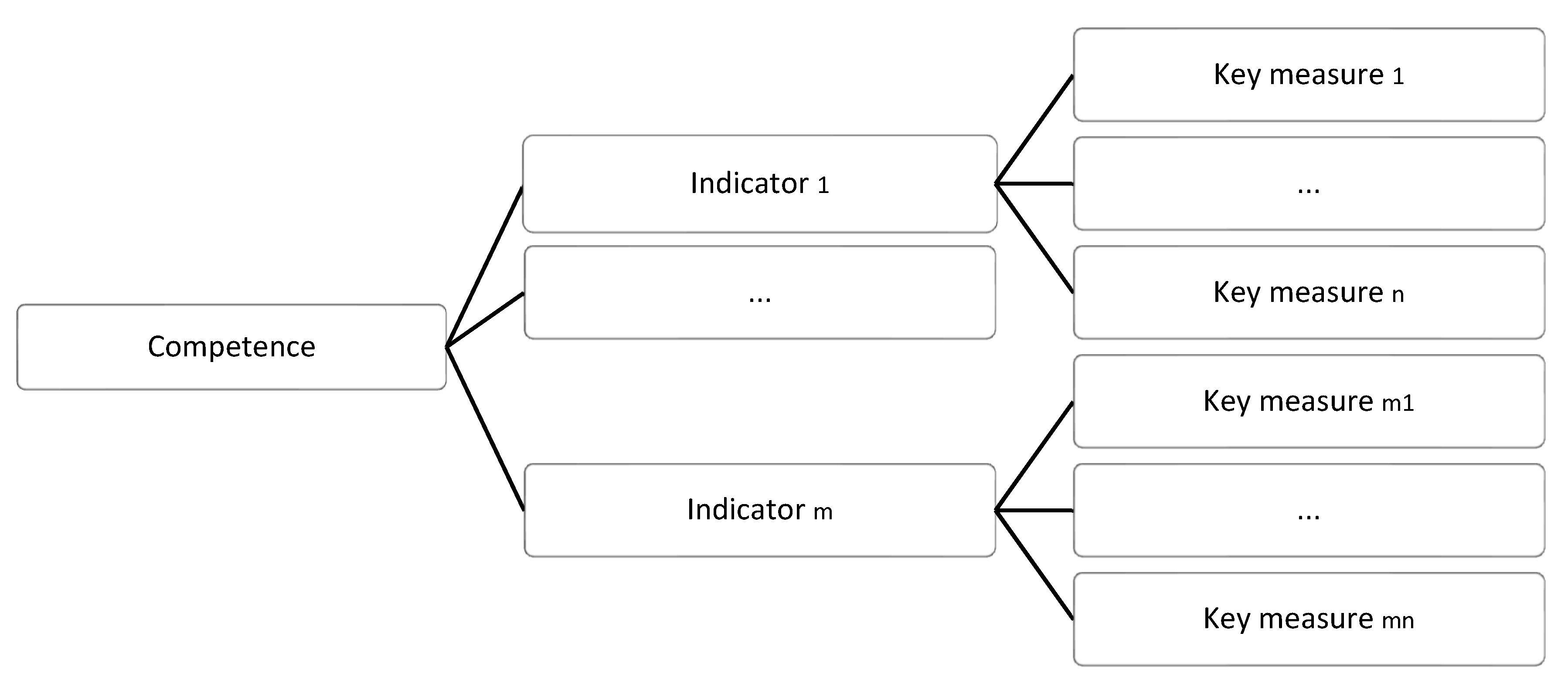

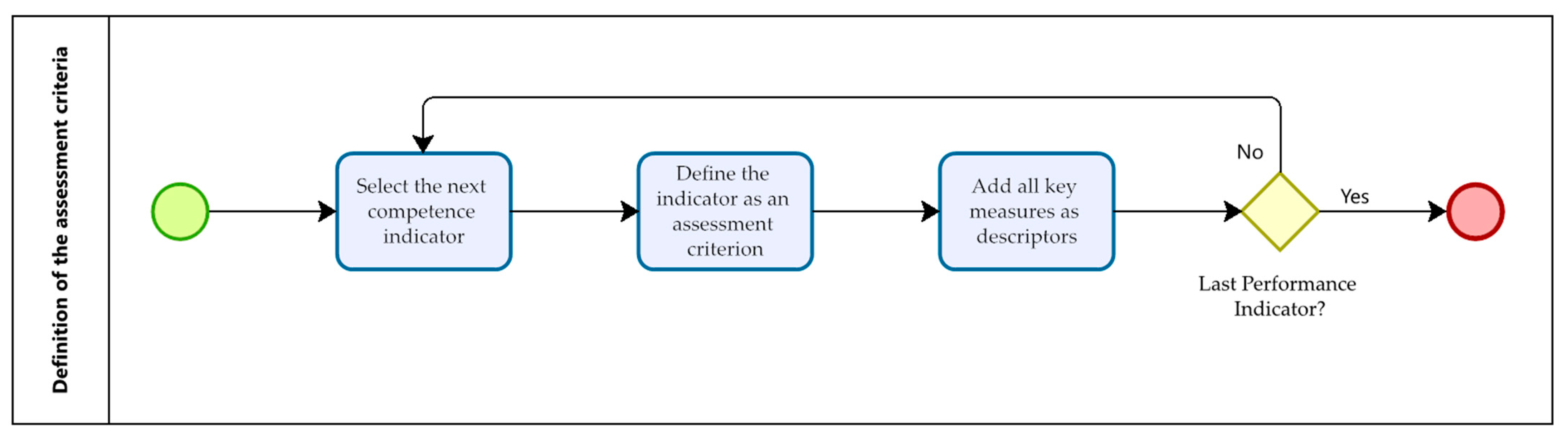

4.1. Identification of the Competences and Evaluation Criteria

4.2. Identification of Levels of Performance

4.3. Development of Descriptive Scoring Schemes

- Each performance level corresponds to a numeric value from 1 to 5 assigned to the competence indicator (criterion). When there are several evaluators, the average of all evaluators' ratings is translated into a performance level according to Table 3.

- The competence assessment level is the weighted average of the performance levels of all competence indicators. Weighting matters when, in a specific context, one criterion (indicator) has greater impact or significance in the assessment process; in that case, different weights can be assigned.
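The two scoring rules above can be sketched in a few lines of Python. This is a minimal sketch, not the authors' tool: it assumes each evaluator rates each indicator with an integer from 1 to 5, uses the Table 3 bands to translate the evaluators' average into a level, and takes the indicator weights as an input. The function names, ratings, and weights are hypothetical.

```python
from statistics import mean

# Table 3: (upper bound of the average evaluation, level, label).
# An average x falls in the first band whose bound satisfies x <= bound.
TABLE_3 = [
    (1.0, 1, "Inadequate"),
    (2.0, 2, "Lower than expected"),
    (3.0, 3, "Satisfactory"),
    (4.0, 4, "Good"),
    (5.0, 5, "Excellent"),
]


def to_level(evaluation: float) -> tuple[int, str]:
    """Translate an average evaluation on the 1-5 scale into a Table 3 level."""
    # Assumption: ratings are integers 1-5, so any valid average lies in [1, 5].
    if not 1.0 <= evaluation <= 5.0:
        raise ValueError(f"evaluation {evaluation} is outside the 1-5 scale")
    for bound, level, label in TABLE_3:
        if evaluation <= bound:
            return level, label
    raise AssertionError("unreachable: 5.0 is the last bound")


def indicator_level(ratings: list[int]) -> int:
    """Average the evaluators' ratings for one indicator, then map to a level."""
    return to_level(mean(ratings))[0]


def competence_level(levels: dict[str, int], weights: dict[str, float]) -> float:
    """Weighted average of the indicator levels (weights are hypothetical inputs)."""
    total_weight = sum(weights[name] for name in levels)
    return sum(levels[name] * weights[name] for name in levels) / total_weight


if __name__ == "__main__":
    # Hypothetical ratings from three evaluators for the five leadership indicators.
    ratings = {
        "I1": [4, 5, 4],
        "I2": [3, 3, 4],
        "I3": [3, 3, 3],
        "I4": [2, 3, 3],
        "I5": [4, 4, 4],
    }
    # Hypothetical weights: I3 counts double in this illustrative context.
    weights = {"I1": 1.0, "I2": 1.0, "I3": 2.0, "I4": 1.0, "I5": 1.0}

    levels = {name: indicator_level(r) for name, r in ratings.items()}
    overall = competence_level(levels, weights)
    print(levels, round(overall, 2), to_level(overall))
```

With these made-up ratings, I1 averages about 4.33, which falls in the 4 < evaluation ≤ 5 band of Table 3 and becomes level 5; the weighted competence level is 22/6 ≈ 3.67. Mapping that weighted average back through Table 3 (here to level 4, Good) is an assumption of the sketch; the text itself only defines the weighted average.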

5. Development, Application and Evaluation of a Rubric

5.1. Evaluation of the Leadership Rubric

5.2. Application and Improvement of the Leadership Rubric

“During the assessment process, I initially felt some difficulty to capture all the variants of the assessment elements. Because there are many indicators and many measures, it is difficult for us to be prepared to hear what is happening in the scenario, to observe, and at the same time link it to all those elements. So, in the beginning, it’s a little hard to get started, but after a while, you can handle the model.” (Expert 1)

“As the process went on, I felt more comfortable and secure, I was even more agile in using the score sheet.” (Expert 2)

“At the beginning using the rubric and being aware of the scenario, etc. Familiarizing myself in practice with the instruments helped. Hence, suggesting a simulation beforehand would help in preparation for the assessment process.” (Expert 2)

“The main difficulty I felt was covering all the assessment measures and all the indicators. In my case, it was easier when I didn’t have to evaluate by measures.” (Expert 1)

“I felt more comfortable with an assessment by indicator rather than by measure; however, the information from the measures was critical to a better understanding of each indicator.” (Expert 2)

- (a) Choice of the descriptive scoring scheme.

- (b) Changing the layout of key measures.

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

- Magano, J.; Silva, C.; Figueiredo, C.; Vitória, A.; Nogueira, T.; Dinis, M.A.P. Generation Z: Fitting project management soft skills competencies—A mixed-method approach. Educ. Sci. 2020, 10, 187.

- Schoper, Y.G.; Wald, A.; Ingason, H.T.; Fridgeirsson, T.V. Projectification in Western economies: A comparative study of Germany, Norway and Iceland. Int. J. Proj. Manag. 2018, 36, 71–82.

- IPMA. Individual Competence Baseline for Project, Programme & Portfolio Management. In International Project Management Association; IPMA: Nijkerk, The Netherlands, 2015; Volume 4. Available online: https://www.ipma.world/ (accessed on 1 March 2022).

- Burke, R.; Barron, S. Project Management Leadership: Building Creative Teams, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2014.

- Hagler, D.; Wilson, R. Designing nursing staff competency assessment using simulation. J. Radiol. Nurs. 2013, 32, 165–169.

- Baartman, L.K.J.; Bastiaens, T.J.; Kirschner, P.A.; van der Vleuten, C.P.M. Evaluating assessment quality in competence-based education: A qualitative comparison of two frameworks. Educ. Res. Rev. 2007, 2, 114–129.

- Petrov, M. An Approach to Changing Competence Assessment for Human Resources in Expert Networks. Future Internet 2020, 12, 169.

- Oh, M.; Choi, S. The competence of project team members and success factors with open innovation. J. Open Innov. Technol. Mark. Complex. 2020, 6, 51.

- Starkweather, J.A.; Stevenson, D.H. PMP certification as a core competency: Necessary but not sufficient. Proj. Manag. J. 2011, 42, 31–41.

- Farashah, A.D.; Thomas, J.; Blomquist, T. Exploring the value of project management certification in selection and recruiting. Int. J. Proj. Manag. 2019, 37, 14–26.

- Tinoco, E.; Lima, R.; Mesquita, D.; Souza, M. Using scenarios for the development of personal communication competence in project management. Int. J. Proj. Organ. Manag. 2022; forthcoming. Available online: https://www.inderscience.com/info/ingeneral/forthcoming.php?jcode=ijpom (accessed on 1 October 2022).

- Reddy, M. Design and development of rubrics to improve assessment outcomes: A pilot study in a Master’s level business program in India. Qual. Assur. Educ. 2011, 19, 84–104.

- Salinas, J.; Erochko, J. Using Weighted Scoring Rubrics in Engineering Assessment. In Proceedings of the Canadian Engineering Education Association (CEEA), Hamilton, ON, Canada, 31 May–3 June 2015.

- Zlatkin-Troitschanskaia, O.; Shavelson, R.; Kuhn, C. The International state of Research on Measurement of Competency in Higher Education. Stud. High. Educ. 2015, 40, 393–411.

- Hatcher, R.; Fouad, N.; Grus, C.; Campbell, L.; McCutcheon, S.; Leahy, K. Competency benchmarks: Practical steps toward a culture of competence. Train. Educ. Prof. Psychol. 2013, 7, 84–91.

- Van Der Vleuten, C.; Schuwirth, L. Assessing professional competence: From methods to programmes. Med. Educ. 2005, 39, 309–317.

- Succar, B.; Sher, W.; Williams, A. An integrated approach to BIM competency assessment, acquisition and application. Autom. Constr. 2013, 35, 174–189.

- Tobajas, M.; Molina, C.; Quintanilla, A.; Alonso-Morales, N.; Casas, J.A. Development and application of scoring rubrics for evaluating students’ competencies and learning outcomes in Chemical Engineering experimental courses. Educ. Chem. Eng. 2019, 26, 80–88.

- Sillat, L.; Tammets, K.; Laanpere, M. Digital competence assessment methods in higher education: A systematic literature review. Educ. Sci. 2021, 11, 402.

- Redman, A.; Wiek, A.; Barth, M. Current practice of assessing students’ sustainability competencies: A review of tools. Sustain. Sci. 2021, 16, 117–135.

- Drisko, J. Competencies and their assessment. J. Soc. Work. Educ. 2014, 50, 414–426.

- Bredillet, C.; Tywoniak, S.; Dwivedula, R. What is a good project manager? An Aristotelian perspective. Int. J. Proj. Manag. 2015, 33, 254–266.

- Pellegrino, J.; Baxter, G.; Glaser, R. Addressing the “two disciplines” problem: Linking theories of cognition and learning with assessment and instructional practice. Rev. Res. Educ. 1999, 24, 307–353.

- Arcuria, P.; Morgan, W.; Fikes, T. Validating the use of LMS-derived rubric structural features to facilitate automated measurement of rubric quality. In Proceedings of the 9th International Conference on Learning Analytics & Knowledge, New York, NY, USA, 4–8 March 2019; pp. 270–274.

- Shipman, D.; Roa, M.; Hooten, J.; Wang, Z. Using the analytic rubric as an evaluation tool in nursing education: The positive and the negative. Nurse Educ. Today 2012, 32, 246–249.

- Dawson, P. Assessment rubrics: Towards clearer and more replicable design, research and practice. Assess. Eval. High. Educ. 2017, 42, 347–360.

- Fernandes, D. Avaliação de Rubricas. Critério 2021, 1, 3.

- Brookhart, S.; Chen, F. The quality and effectiveness of descriptive rubrics. Educ. Rev. 2015, 67, 343–368.

- Andrade, H.; Du, Y.; Mycek, K. Rubric-referenced self-assessment and middle school students’ writing. Assess. Educ. Princ. Policy Pract. 2010, 17, 199–214.

- Papadakis, S.; Kalogiannakis, M.; Zaranis, N. Designing and creating an educational app rubric for preschool teachers. Educ. Inf. Technol. 2017, 22, 3147–3165.

- Tai, J.; Ajjawi, R.; Boud, D.; Dawson, P.; Panadero, E. Developing evaluative judgement: Enabling students to make decisions about the quality of work. High. Educ. 2018, 76, 467–481.

- Lo, K.-W.; Yang, B.-H. Development and learning efficacy of a simulation rubric in childhood pneumonia for nursing students: A mixed methods study. Nurse Educ. Today 2022, 119, 105544.

- Martell, K. Assessing student learning: Are business schools making the grade? J. Educ. Bus. 2007, 82, 189–195.

- Brookhart, S. How to Create and Use Rubrics for Formative Assessment and Grading; ASCD: Alexandria, VA, USA, 2013.

- Popham, W. What’s Wrong—and What’s Right—With Rubrics. Educ. Leadersh. 1997, 55, 72–75.

- Stevens, D.; Levi, A. Leveling the field: Using Rubrics to achieve greater equity in teaching and grading. Essays Teach. Excell. 2005, 17, 1.

- Chen, P.; Partington, D. Three conceptual levels of construction project management work. Int. J. Proj. Manag. 2006, 24, 412–421.

- Wawak, S.; Woźniak, K. Evolution of project management studies in the XXI century. Int. J. Manag. Proj. Bus. 2020, 13, 867–888.

- Albert, M.; Balve, P.; Spang, K. Evaluation of project success: A structured literature review. Int. J. Manag. Proj. Bus. 2017, 10, 796–821.

- Vukomanović, M.; Young, M.; Huynink, S. IPMA ICB 4.0—A global standard for project, programme and portfolio management competences. Int. J. Proj. Manag. 2016, 34, 1703–1705.

- Carstensen, A.-K.; Bernhard, J. Design science research–a powerful tool for improving methods in engineering education research. Eur. J. Eng. Educ. 2019, 44, 85–102.

- Dresch, A.; Lacerda, D.; Miguel, P. Uma análise distintiva entre o estudo de caso, a pesquisa-ação e a design science research. Rev. Bras. Gestão Negócios 2015, 17, 1116–1133.

- Takeda, H.; Veerkamp, P.; Yoshikawa, H. Modeling design process. AI Mag. 1990, 11, 37.

- Cronbach, L. Coefficient alpha and the internal structure of tests. Psychometrika 1951, 16, 297–334.

- Souza, A.C.; Alexandre, N.; Guirardello, E. Propriedades psicométricas na avaliação de instrumentos: Avaliação da fiabilidade e validade. Epidemiol. Serviços Saúde 2017, 26, 649–659.

- Polit, D.; Beck, C. The content validity index: Are you sure you know what’s being reported? Critique and recommendations. Res. Nurs. Health 2006, 29, 489–497.

- Shrotryia, V.; Dhanda, U. Content validity of assessment instrument for employee engagement. Sage Open 2019, 9, 2158244018821751.

- Wroe, E.; McBain, R.; Michaelis, A.; Dunbar, E.; Hirschhorn, L.; Cancedda, C. A novel scenario-based interview tool to evaluate nontechnical skills and competencies in global health delivery. J. Grad. Med. Educ. 2017, 9, 467–472.

- Zarour, K.; Benmerzoug, D.; Guermouche, N.; Drira, K. A systematic literature review on BPMN extensions. Bus. Process Manag. J. 2019, 26, 1473–1503.

- PMI. A Guide to the Project Management Body of Knowledge PMBOK®GUIDE, 7th ed.; Project Management Institute, Inc.: Newtown Township, PA, USA, 2021.

- Jain, S.; Angural, V. Use of Cronbach’s alpha in dental research. Med. Res. Chron. 2017, 4, 285–291.

- Marinho-Araujo, C.; Rabelo, M. Avaliação educacional: A abordagem por competências. Avaliação Rev. Avaliação Educ. Super. (Campinas) 2015, 20, 443–466.

| # | Steps |
|---|---|
| 1. | Identification of the learning objectives and identification of qualities (criteria) |
| 2. | Identification of levels of performance |
| 3. | Development of separate descriptive scoring schemes |
| 4. | Obtaining feedback on the rubrics developed |
| 5. | Revision of rubrics |
| 6. | Testing the reliability and validity of the rubrics |
| 7. | Pilot testing of the rubrics |
| 8. | Using the results of the pilot test to improve the rubrics |

| Practice Focused Competences (13) | People Focused Competences (10) | Perspective Focused Competences (5) |
|---|---|---|
| Project design | Self-reflection and self-management | Strategy |
| Requirements and objectives | Personal integrity and reliability | Governance, structures, processes |
| Scope | Personal communication | Compliance, standards, regulation |
| Time | Relationships and engagement | Power and interest |
| Organisation and information | Leadership | Culture and values |
| Quality | Teamwork | |
| Finance | Conflict and crisis | |
| Resources | Resourcefulness | |
| Procurement | Negotiation | |
| Plan and control | Results orientation | |
| Risk and opportunity | | |
| Stakeholders | | |
| Change and transformation | | |
| Selection and balancing | | |

| Performance Scale | Level | Evaluation |
|---|---|---|
| Inadequate | 1 | evaluation ≤ 1.0 points |
| Lower than expected | 2 | 1 < evaluation ≤ 2.0 points |
| Satisfactory | 3 | 2 < evaluation ≤ 3.0 points |
| Good | 4 | 3 < evaluation ≤ 4.0 points |
| Excellent | 5 | 4 < evaluation ≤ 5.0 points |

| Indicators | Key Measures |
|---|---|
| I1. Initiate actions and proactively offer help and advice | 1.1 Proposes or exerts actions; 1.2 Offers unrequested help or advice; 1.3 Thinks and acts with a future orientation (i.e., one step ahead); 1.4 Balances initiative and risk. |
| I2. Take ownership and show commitment | 2.1 Demonstrates ownership and commitment in behaviour, speech and attitudes; 2.2 Talks about the project in positive terms; 2.3 Rallies and generates enthusiasm for the project; 2.4 Sets up measures and performance indicators; 2.5 Looks for ways to improve the project processes; 2.6 Drives learning. |
| I3. Provide direction, coaching and mentoring to guide and improve the work of individuals and teams | 3.1 Provides direction for people and teams; 3.2 Coaches and mentors team members to improve their capabilities; 3.3 Establishes a vision and values and leads according to these principles; 3.4 Aligns individual objectives with common objectives and describes the way to achieve them. |
| I4. Exert appropriate power and influence over others to achieve the goals | 4.1 Uses various means of exerting influence and power; 4.2 Demonstrates timely use of influence and/or power; 4.3 Perceived by stakeholders as the leader of the project or team. |
| I5. Make, enforce and review decisions | 5.1 Deals with uncertainty; 5.2 Invites opinion and discussion before decision-making in a timely and appropriate fashion; 5.3 Explains the rationale for decisions; 5.4 Influences decisions of stakeholders by offering analyses and interpretations; 5.5 Communicates the decision and intent clearly; 5.6 Reviews decisions and changes decisions according to new facts; 5.7 Reflects on past situations to improve decision processes. |

| Version | Inadequate (1) | Lower than expected (2) | Satisfactory (3) | Good (4) | Excellent (5) |
|---|---|---|---|---|---|
| “Version 1” | Proactivity, help and advice are not adequate, as the initiative to propose or carry out actions, including offering help and advice, is lacking. Does not show anticipatory thinking about situations. Initiatives are not balanced in terms of their pros and cons. | Proactivity, help and advice are lower than expected in that there is little initiative to propose or carry out actions, including offering help and advice. Demonstrates poor anticipatory thinking about situations. Demonstrates difficulty in balancing initiatives, taking into account their pros and cons. | Proactivity, help and advice are satisfactory in that initiatives are partially developed to propose or carry out actions, including offering help and advice. Demonstrates effort in anticipatory thinking of situations. Demonstrates balancing some initiatives, taking into account their pros and cons. | Proactivity, help and advice are good, in that, overall, initiatives are shown to propose or carry out actions, including offering help and advice. Demonstrates some anticipatory thinking about situations. Demonstrates balancing initiatives and risks well, taking into account their pros and cons. | Proactivity, help and advice are excellent as initiatives are shown to propose or take action, including offering help and advice. Demonstrates excellent anticipatory thinking of situations. Demonstrates exceptional balancing of initiatives and risks, taking into account their pros and cons. |
| “Version 2” | The demonstration of this indicator and its measures is not adequate. | The demonstration of this indicator and its measures is lower than expected. | The demonstration of this indicator and its measures is satisfactory. | The demonstration of this indicator and its measures is good. | The demonstration of this indicator and its measures is excellent. |

| Indicators | (−) | ( ) | (+) |
|---|---|---|---|
| I1. Initiate actions and proactively offer help and advice | 1 | 4 | |
| I2. Take ownership and show commitment | 2 | 3 | |
| I3. Provide direction, coaching and mentoring to guide and improve the work of individuals and teams | 1 | 4 | |
| I4. Exert appropriate power and influence over others to achieve the goals | 1 | 4 | |
| I5. Make, enforce and review decisions | 1 | 4 | |

| Question | Professional 1 | Professional 2 | Professional 3 | Professional 4 | Professional 5 | n | I-CVI |
|---|---|---|---|---|---|---|---|
| 1 | 4 | 5 | 5 | 5 | 4 | 5 | 1 |
| 2 | 5 | 5 | 4 | 5 | 5 | 5 | 1 |
| 3 | 5 | 5 | 4 | 5 | 5 | 5 | 1 |
| 4 | 4 | 5 | 3 | 5 | 4 | 4 | 0.8 |
| 5 | 4 | 3 | 3 | 5 | 4 | 3 | 0.6 |
| 6 | 4 | 4 | 3 | 5 | 5 | 4 | 0.8 |
| 7 | 4 | 5 | 3 | 4 | 4 | 4 | 0.8 |
| 8 | 4 | 4 | 3 | 4 | 5 | 4 | 0.8 |
| S-CVI | | | | | | | 0.85 |
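The n, I-CVI, and S-CVI values in the content validity table can be recomputed from the five professionals' ratings. The sketch below assumes, following the content validity index procedure discussed by Polit and Beck, that a rating of 4 or 5 counts as the expert judging the item relevant, and that the scale-level index is the average of the item-level indices (S-CVI/Ave). Under those assumptions it reproduces every n and I-CVI value and S-CVI = 0.85; the threshold constant and function names are illustrative, not taken from the paper.

```python
# Ratings copied from the content validity table:
# rows = questions 1-8, columns = Professionals 1-5, on a 1-5 scale.
RATINGS = [
    [4, 5, 5, 5, 4],
    [5, 5, 4, 5, 5],
    [5, 5, 4, 5, 5],
    [4, 5, 3, 5, 4],
    [4, 3, 3, 5, 4],
    [4, 4, 3, 5, 5],
    [4, 5, 3, 4, 4],
    [4, 4, 3, 4, 5],
]

# Assumption: a rating of 4 or 5 counts as "relevant" (expert agreement).
RELEVANT_THRESHOLD = 4


def item_cvi(ratings: list[int], threshold: int = RELEVANT_THRESHOLD) -> tuple[int, float]:
    """Return (n, I-CVI): experts rating the item relevant, and their proportion."""
    n = sum(1 for r in ratings if r >= threshold)
    return n, n / len(ratings)


def scale_cvi_ave(all_ratings: list[list[int]], threshold: int = RELEVANT_THRESHOLD) -> float:
    """S-CVI/Ave: the mean of the item-level CVIs across all questions."""
    icvis = [item_cvi(row, threshold)[1] for row in all_ratings]
    return sum(icvis) / len(icvis)


if __name__ == "__main__":
    for question, row in enumerate(RATINGS, start=1):
        n, icvi = item_cvi(row)
        print(f"Question {question}: n={n}, I-CVI={icvi:.1f}")
    print(f"S-CVI/Ave = {scale_cvi_ave(RATINGS):.2f}")
```

Question 5 is the only item below 0.8, because two of the five professionals rated it 3; averaging the eight item values, (3 × 1 + 4 × 0.8 + 0.6) / 8, gives the reported 0.85.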

| Indicators | (1) The demonstration of this indicator and its measures is not adequate. | (2) The demonstration of this indicator and its measures is lower than expected. | (3) The demonstration of this indicator and its measures is satisfactory. | (4) The demonstration of this indicator and its measures is good. | (5) The demonstration of this indicator and its measures is excellent. |
|---|---|---|---|---|---|
| I1. Initiate actions and proactively offer help and advice | | | | | |
| I2. Take ownership and show commitment | | | | | |
| I3. Provide direction, coaching and mentoring to guide and improve the work of individuals and teams | | | | | |
| I4. Exert appropriate power and influence over others to achieve the goals | | | | | |
| I5. Make, enforce and review decisions | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Souza, M.; Margalho, É.; Lima, R.M.; Mesquita, D.; Costa, M.J. Rubric’s Development Process for Assessment of Project Management Competences. Educ. Sci. 2022, 12, 902. https://doi.org/10.3390/educsci12120902
