Awareness and Adoption of Evidence-Based Instructional Practices by STEM Faculty in the UAE and USA

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Questions

1. What are United Arab Emirates University (UAEU) STEM faculty members' levels of awareness, adoption, and ease-of-implementation perceptions regarding specific EBIPs?

2. What factors (such as gender, department, number of years teaching, job responsibilities, and exposure to teaching workshops) are correlated with UAEU STEM faculty responses?

3. What contextual factors influence UAEU STEM faculty adoption of EBIPs?

4. How do the collected data compare with those of STEM faculty at a top-tier research and teaching university in the USA?

5. What findings emerge for research questions 1–3 when the teaching practices of STEM faculty at top universities in countries at opposite ends of the STEM-EBIP-focus spectrum are considered together, one country being a historical leader (USA) and the other a newcomer (UAE)?

2.2. Participants

2.3. Survey Instrument

- Chosen EBIPs and their descriptions: The authors of this study developed a list of 16 teaching practices, comprising 13 EBIPs and three traditional, teacher-centered practices. The EBIPs were chosen based on three criteria: (1) consistent representation in the STEM literature; (2) varied ease-of-implementation levels, so that both simple and more complex EBIPs were represented (a criterion influenced by Sturtevant & Wheeler's work [62]); and (3) varied types (e.g., in-class activities, small group work, formative assessment, scenario-based content), a criterion influenced by Froyd's work [63]. The EBIPs included in the three key surveys also served as a helpful gauge, since those surveys provided some of the largest lists of EBIPs compared with other surveys of a similar nature. The three teacher-centered practices were included so that even participants unaware of EBIPs would likely recognize and/or use at least three of the listed practices, which in turn might help them answer honestly about not recognizing the other 13 EBIPs. Marbach-Ad et al. [64] used a similar approach, including three traditional, teacher-centered practices among student-centered ones. Concise, straightforward descriptions of each teaching practice were adapted from the literature. The selected practices were then grouped by type, following Froyd's work [63], and by perceived effort to implement, following Sturtevant and Wheeler's work [62], as shown in Table 1 below. The Method column of Table 1 lists each EBIP's critical elements.

- Barriers and contextual factors: The authors adapted the barriers and contextual factors included in the three key surveys, specifically rewording for clarity and succinctness, as well as removing items that were not applicable in the UAEU context (i.e., tenure).

- Likert scales: Likert scales were utilized in both the contextual factors and teaching practices sections of this current study.

- In the contextual factors section, the authors utilized the following Likert scale: 1 = Never true, 2 = Seldom true, 3 = True some of the time, 4 = True most of the time, 5 = True all of the time, which was also utilized in the Statistics Teaching Inventory [65].

- In the teaching practices section, the authors asked three questions, each with its own Likert scale. The first question, "How familiar are you with this strategy?", used the following scale: 1 = Never heard of it, 2 = Heard of it but don't know much else, 3 = Familiar with it but have never used it, 4 = Currently use/have used but don't plan to use anymore, 5 = Currently use/have used and plan to use in the future. This scale was based on those in the Lund & Stains [9] and Sturtevant & Wheeler [62] surveys, modified for clarity, succinctness, and the objectives of this study. Importantly, only faculty who selected option 4 or 5 were considered EBIP users, and only they were directed to the second and third questions. The second question, "How often did you/do you utilize this strategy?", used a modified version of a scale from Sturtevant & Wheeler's survey [62]: 1 = Use(d) it once or twice/term, 2 = Use(d) it multiple times/term, 3 = Use(d) it about once/week, 4 = Use(d) it multiple times/week. Finally, the third question, "How difficult is it for you to use this strategy?", used the following scale: 1 = Very easy, 2 = Easy, 3 = Somewhat difficult, 4 = Difficult, 5 = Very difficult. All Likert scales in this study were 5-point scales, chosen to maximize variance in responses [66,67], except the 4-point frequency-of-use scale of the second question.

- Perceived ease-of-implementation questions: The authors chose to add the third question in the teaching practices section, “How difficult is it for you to use this strategy?”, which was not present in the three key surveys.

- Participant background/demographics: The authors modified demographic questions from various surveys, including the three key surveys, the Faculty Survey of Student Engagement [68], the National Survey of Geoscience Teaching Practices [69], and the Higher Education Research Institute (HERI) Faculty Survey [70]. Examined demographics included faculty gender, academic rank, department, university teaching experience (i.e., number of years), research activity, teaching load, typical time spent lecturing, graduate student supervision, and teaching workshops/programs/courses participation.
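The coding rules for the teaching-practices questions can be made concrete with a short script. The sketch below is illustrative only and is not the authors' analysis code: it encodes the familiarity scale, applies the stated rule that only options 4 or 5 mark a respondent as an EBIP user, and counts how many EBIPs each respondent knows and uses, the kind of per-respondent count behind statements such as "aware of at least 7 EBIPs". The awareness cut-off (`AWARENESS_THRESHOLD`, set here to option 2 or higher) and all function names are hypothetical assumptions, since this section does not define when a respondent counts as aware.

```python
# Illustrative sketch only; not the study's analysis code.
from __future__ import annotations

# Familiarity scale used for the first teaching-practice question.
FAMILIARITY_SCALE = {
    1: "Never heard of it",
    2: "Heard of it but don't know much else",
    3: "Familiar with it but have never used it",
    4: "Currently use/have used but don't plan to use anymore",
    5: "Currently use/have used and plan to use in the future",
}

# Stated in the survey design: only options 4 or 5 count as EBIP use.
USER_CODES = {4, 5}

# HYPOTHETICAL: cut-off for counting a practice as "known" (not defined in the text).
AWARENESS_THRESHOLD = 2


def is_user(code: int) -> bool:
    """Return True if a familiarity code marks the respondent as a user of the practice."""
    if code not in FAMILIARITY_SCALE:
        raise ValueError(f"invalid familiarity code: {code}")
    return code in USER_CODES


def respondent_counts(responses: dict[str, int]) -> tuple[int, int]:
    """Return (EBIPs known, EBIPs adopted) for one respondent.

    `responses` maps each of the 13 EBIPs (traditional practices excluded)
    to that respondent's familiarity code.
    """
    adopted = sum(is_user(code) for code in responses.values())  # also validates codes
    aware = sum(code >= AWARENESS_THRESHOLD for code in responses.values())
    return aware, adopted


def share_at_least(counts: list[int], minimum: int) -> float:
    """Fraction of respondents whose count is at least `minimum`."""
    if not counts:
        raise ValueError("no respondents")
    return sum(n >= minimum for n in counts) / len(counts)


if __name__ == "__main__":
    # Hypothetical responses from one respondent (EBIP name -> familiarity code).
    example = {"Think-Pair-Share": 5, "Concept Maps": 3, "Service Learning": 1, "Minute Paper": 4}
    print(respondent_counts(example))  # (3, 2)
    # Hypothetical "EBIPs known" counts for four respondents.
    print(share_at_least([3, 8, 10, 12], minimum=7))  # 0.75
```

Because adopter status also decides who is routed to the frequency and difficulty questions, those two items are answered by a smaller, self-selected subset of respondents than the familiarity item.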

2.4. Data Analysis

- STEM faculty levels of awareness, adoption, and ease-of-implementation perceptions and the selected 16 teaching practices as a whole;

- Specific demographic factors (such as gender, department, number of years teaching, job responsibilities, exposure to teaching workshops) and STEM faculty levels of awareness, adoption, and ease-of-implementation perceptions for:

  - All 16 teaching practices in general (e.g., faculty EBIP awareness versus rank), and

  - Each specific teaching practice (e.g., faculty adoption of Student Presentations versus rank);

- Awareness versus adoption levels, as well as adoption versus perceived ease-of-implementation levels, regarding all 16 teaching practices as a whole; and finally,

- STEM faculty identification with specific contextual factors and their adoption levels of:

  - All 16 teaching practices as a whole, and

  - Each specific teaching practice.
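This section does not state which statistical test was used for these cross-tabulations (for example, the p-values reported in Section 4.2.4). Purely as an assumption for illustration, a Pearson chi-square test of independence is one common way to relate a categorical factor, such as university or rank, to adopter status for a given practice. The hypothetical sketch below computes the uncorrected statistic and degrees of freedom for any r × c table of counts, plus an exact upper-tail p-value for the one-degree-of-freedom case via the complementary error function; the function names and the example counts are invented and are not study data.

```python
import math


def chi_square_independence(table: list[list[float]]) -> tuple[float, int]:
    """Pearson chi-square statistic (no continuity correction) and degrees of freedom.

    `table` holds observed counts: rows are groups (e.g., universities),
    columns are response categories (e.g., user / non-user of one practice).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    if grand_total == 0:
        raise ValueError("table contains no observations")
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: row total * column total / grand total.
            expected = row_totals[i] * col_totals[j] / grand_total
            if expected == 0:
                raise ValueError("every row and column must have a nonzero total")
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(col_totals) - 1)
    return stat, dof


def p_value_one_dof(stat: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom.

    If Z is standard normal, P(Z**2 > x) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(stat / 2))


if __name__ == "__main__":
    # HYPOTHETICAL counts (not study data): rows = UAEU (66), UNL (81);
    # columns = users, non-users of one practice.
    observed = [[20, 46], [45, 36]]
    stat, dof = chi_square_independence(observed)
    print(f"chi2 = {stat:.2f}, dof = {dof}, p = {p_value_one_dof(stat):.4f}")
```

For larger tables, `scipy.stats.chi2_contingency` returns the statistic together with a p-value, but by default it applies Yates' continuity correction to 2 × 2 tables, so its statistic will differ from the uncorrected value above unless `correction=False` is passed. With small expected counts, Fisher's exact test is often preferred.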

3. Results

3.1. Research Question 2: Characteristics of Participating Faculty

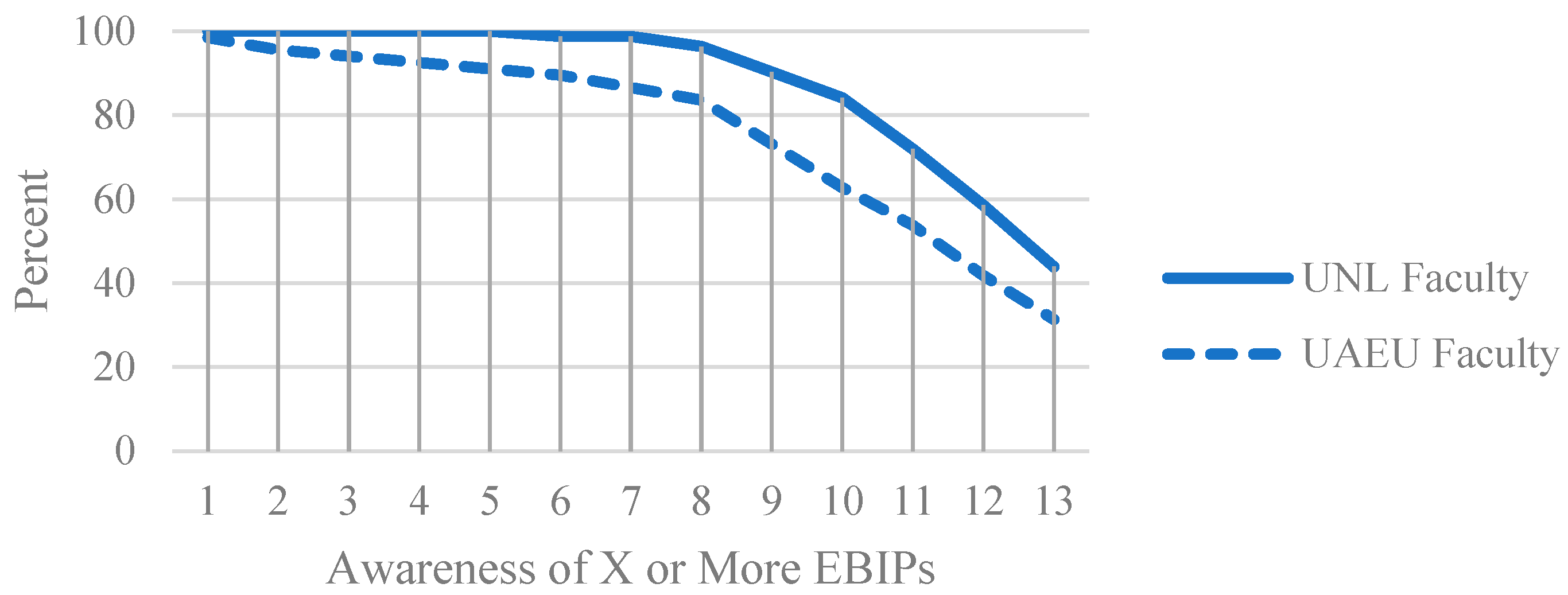

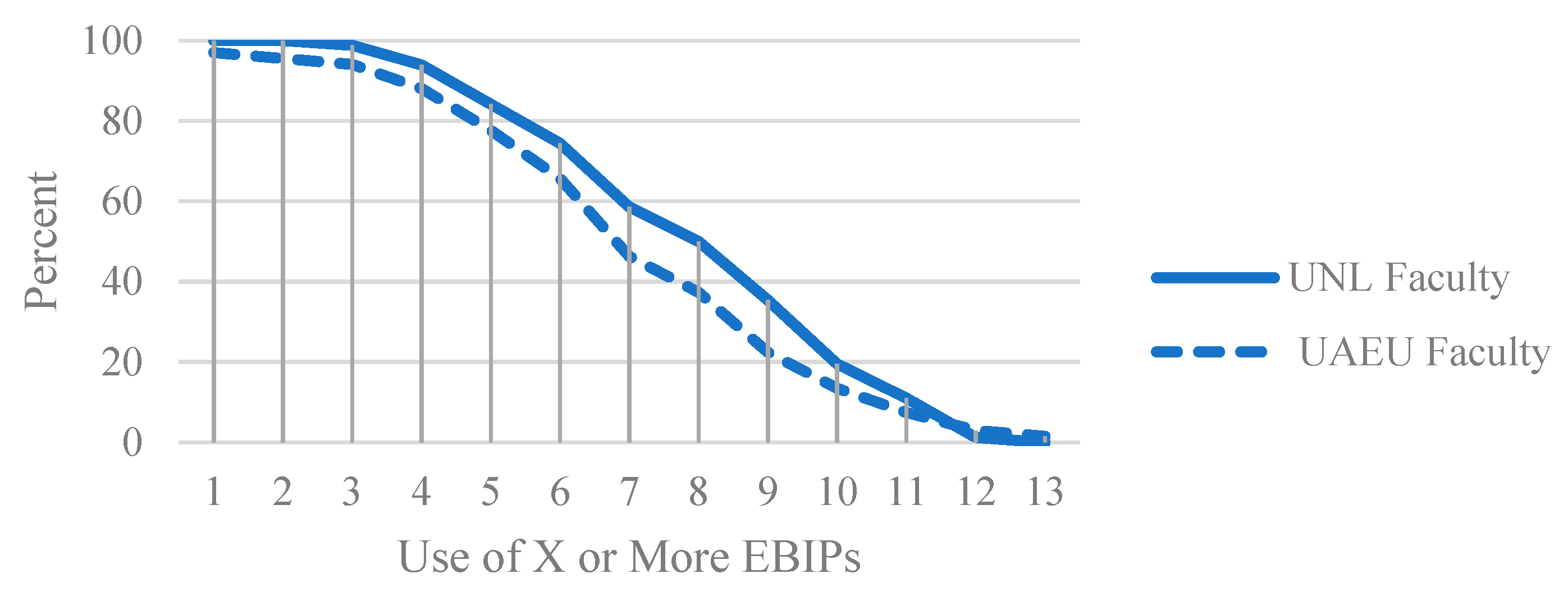

3.2. Research Questions 1 and 4: UAEU versus UNL Faculty—Awareness and Adoption of Selected Teaching Practices

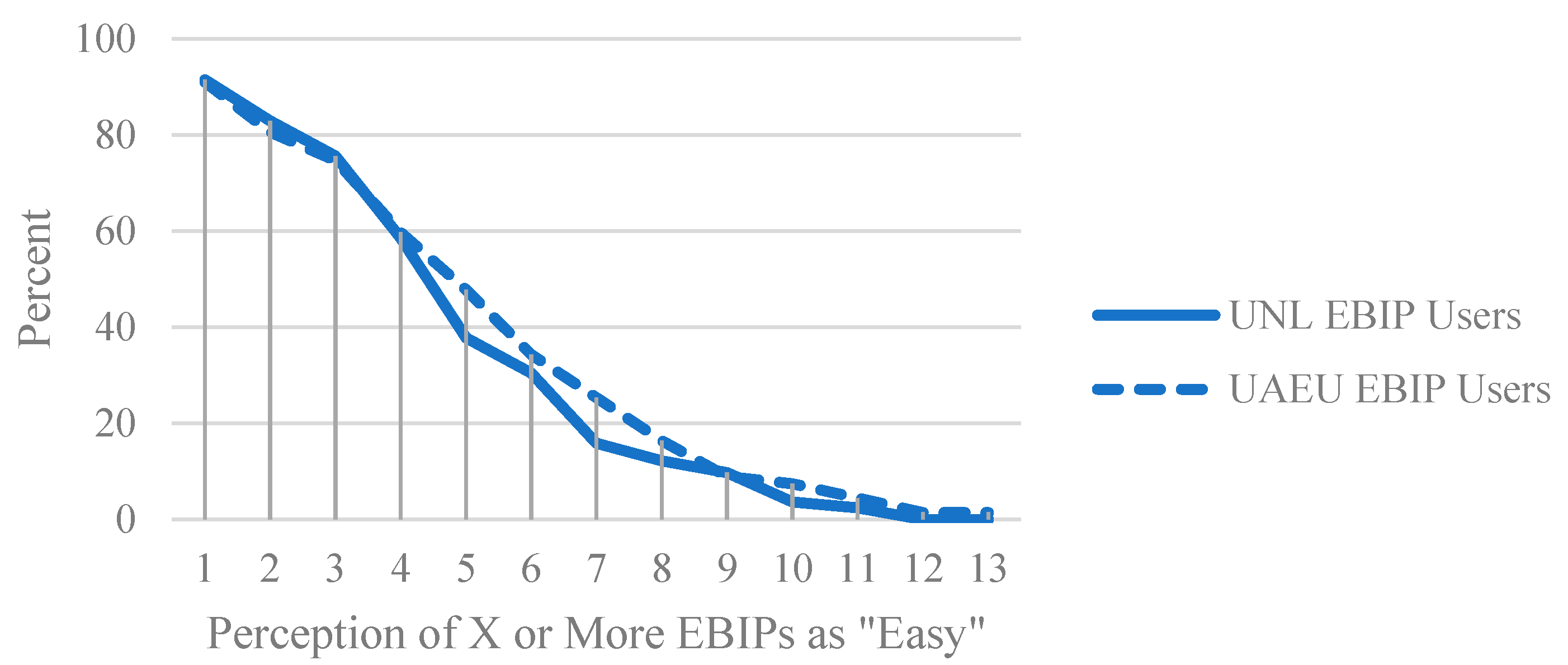

3.3. Research Questions 1 and 4: UAEU versus UNL Faculty—Ease-of-Implementation Perceptions of Selected Teaching Practices

3.4. Research Questions 3 and 4: UAEU versus UNL—Contextual Factors That Influence Adoption of EBIPs

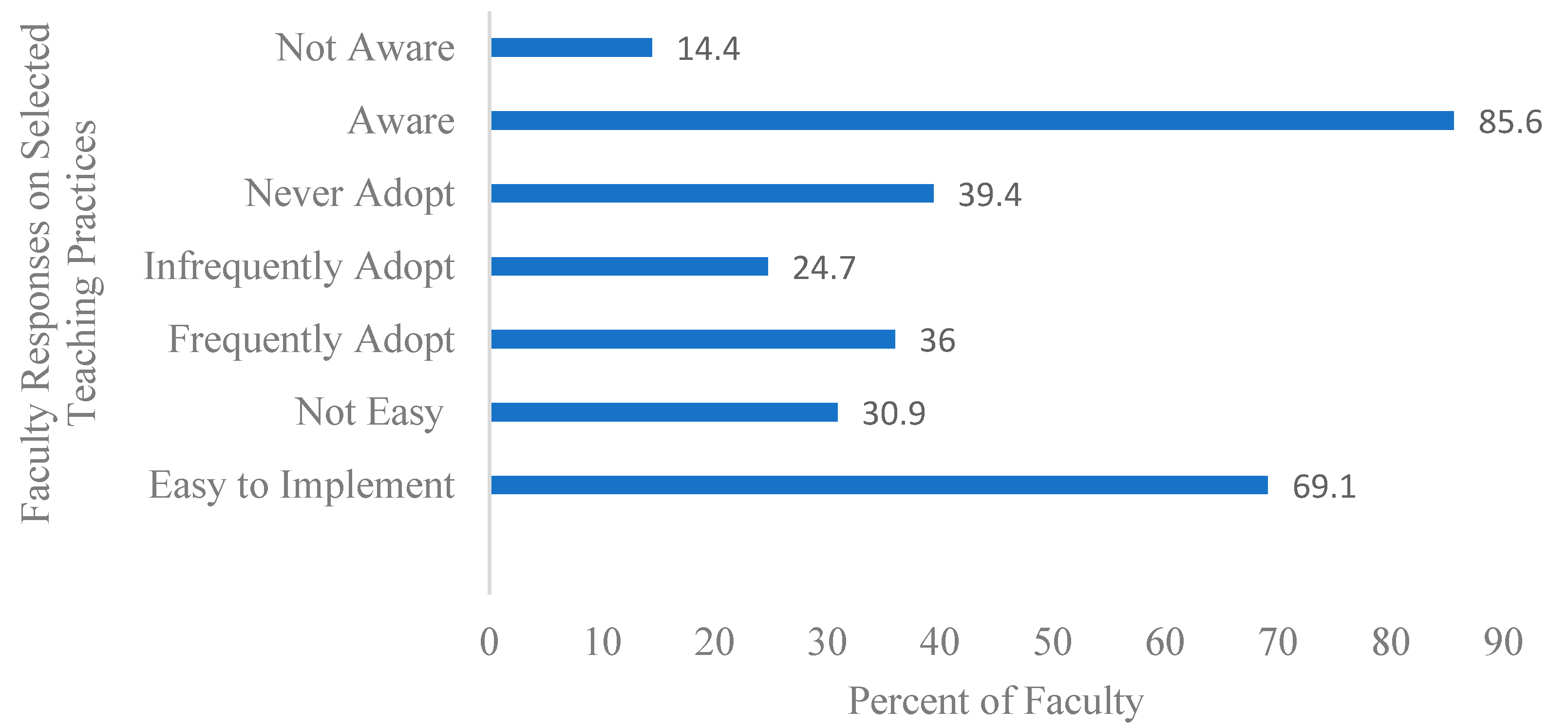

3.5. Research Question 5: Combined UAEU and UNL Faculty Results—EBIP Awareness, Adoption, and Ease-of-Implementation Perception Levels

3.6. Research Question 5: Combined UAEU and UNL Faculty Results—Contextual Factors That Influence Adoption of EBIPs

4. Discussion

4.1. Research Questions 1–4: UAEU versus UNL Faculty

4.1.1. Faculty Demographics

- clearly have a higher teaching load (20% of UAEU faculty teach 7–8 courses per academic year versus 5% of UNL faculty; 8% of UAEU faculty teach more than 8 courses per academic year versus 1% of UNL faculty),

- have attended fewer teaching workshops/programs/courses (67% of UAEU faculty have attended 0–3 workshops versus 34% of UNL faculty; 47% of UNL faculty have attended more than six teaching workshops/programs/courses compared to 21% of UAEU faculty), and

- spend more time lecturing (30% of UAEU faculty spend 81–100% of class time lecturing versus 14% of UNL faculty).

4.1.2. Faculty EBIP Awareness, Adoption, Ease-of-Implementation Perceptions

- less aware of the selected EBIPs (nearly all UNL faculty, 99%, are aware of at least 7 EBIPs, compared with only 87% of UAEU faculty; 84% of UNL faculty are aware of at least 10 EBIPs, compared with 63% of UAEU faculty),

- similar in terms of their overall average EBIP adoption (6.5 versus 7.3 for UNL) but less frequent adopters of EBIPs when weekly usage is considered (UNL faculty use seven of the 13 EBIPs more frequently on a weekly basis than UAEU faculty, as shown in Table 5), and

- similar in terms of their perceived ease-of-EBIP-implementation levels.

4.1.3. Contextual Factors

- required textbooks or a syllabus planned by others (57% of UAEU faculty versus 12% of UNL faculty),

- time constraints due to teaching load (54% of UAEU faculty versus 31% of UNL faculty),

- student ability levels (94% of UAEU faculty versus 58% of UNL faculty),

- teaching evaluations based on students’ ratings (31% of UAEU faculty versus 8% of UNL faculty), and

- number of students in a class (57% of UNL faculty versus 29% of UAEU faculty).

4.2. Research Question 5: Combined UAEU and UNL Faculty Results

4.2.1. Combined Faculty EBIP Awareness and Adoption

- percentage of time spent on teaching,

- teaching experience,

- typical class time spent lecturing, and

- the number of teaching workshops/programs/courses attended.

4.2.2. Combined Faculty EBIP Ease-of-Implementation Perceptions

4.2.3. EBIP Awareness Versus Adoption, EBIP Adoption versus Ease-of-Implementation Perceptions

4.2.4. Contextual Factors

- the level of flexibility they are given by their department in choosing the way they teach a course (p = 0.003),

- the textbook(s) that they choose (p < 0.001),

- the number of students in a class (p = 0.006), and

- the physical space of the classroom (p = 0.003).

4.3. Summary of Findings and Implications

4.3.1. UAEU Versus UNL Faculty

4.3.2. Combined Faculty

4.4. Limitations

- More surveyed universities: Given the limitations of surveying STEM faculty from only two universities, further studies may be conducted involving more institutions.

- Larger sample size: Future studies may seek higher instructor participation in the survey, either by offering incentives or by making participation mandatory at the university or department level.

- Construct validity and internal consistency tests: Future studies may assess the construct validity (e.g., with factor analysis) and internal consistency (e.g., with Cronbach's alpha) of this study's survey instrument.

- Faculty interviews: Given the potential faculty misinterpretation of described EBIPs, future studies may utilize interviews with surveyed faculty in order to check for faculty understanding.

- Classroom observations: Given the limitations of self-reported surveys, future studies may carry out classroom observations of surveyed STEM faculty so that survey results can be compared with actual teaching sessions, likely yielding a more holistic picture of STEM faculty teaching practices.

- Data on specific faculty teaching workshop/program/course participation: Future studies may obtain more detailed information on the kinds of teaching workshops/programs/courses in which faculty have participated.
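The internal-consistency check suggested in the limitations has a standard closed form: for k items with item variances s_i² and total-score variance s_T², Cronbach's alpha is α = k/(k − 1) · (1 − Σ s_i² / s_T²). The minimal sketch below, which is not part of the reported analysis, computes it for a hypothetical respondents × items matrix of Likert scores (for example, a group of contextual-factor items scored 1–5); the function name, input layout, and example responses are assumptions.

```python
import statistics


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a matrix with one row per respondent and one column per item."""
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    n_items = len(scores[0])
    if n_items < 2 or any(len(row) != n_items for row in scores):
        raise ValueError("need at least two items and equal-length rows")
    # Sum of the sample variances of each item (column).
    item_variance_sum = sum(statistics.variance(column) for column in zip(*scores))
    # Sample variance of each respondent's total score.
    total_variance = statistics.variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return n_items / (n_items - 1) * (1 - item_variance_sum / total_variance)


if __name__ == "__main__":
    # HYPOTHETICAL Likert responses: 5 respondents x 3 items.
    responses = [
        [4, 5, 4],
        [2, 2, 3],
        [3, 3, 3],
        [5, 4, 5],
        [1, 2, 1],
    ]
    print(round(cronbach_alpha(responses), 3))
```

Alpha is most meaningful for items intended to measure a single construct, which is one reason it is usually paired with a factor analysis such as the one mentioned alongside it.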

5. Recommendations

- Incentives for faculty prioritization of teaching development: Many universities have centers for teaching and learning that provide workshops, resources, and overall support for their faculty's professional development. The more an institution's faculty take part in these centers and their initiatives, especially teaching workshops, the better. However, given the competing demands on faculty time, such workshops may go unnoticed or may not be prioritized by faculty [71]. The authors therefore recommend more incentives for faculty participation in university centers for teaching and learning. These could include placing more emphasis on teaching development in promotion criteria and requiring all faculty to attend a certain number of self-selected teaching workshops per academic year. Moreover, EBIP implementation could be factored into annual faculty merit decisions [18].

- Clear EBIP descriptions and usefulness presented in teaching workshops: Given the important role that EBIPs play in improving STEM education, teaching workshops should present them clearly and in an easy-to-understand way. Workshops should also give clear reasons, preferably backed by research findings, for the usefulness of each EBIP and of EBIPs in general, to support faculty understanding of and interest in implementing them. Furthermore, they should show how to implement EBIPs easily in contexts that can discourage their use (e.g., classes with limited space, classes with a large number of students, and classes with varying student ability levels).

- New faculty training: University instructors often must teach themselves to use teaching practices that they did not experience as students, as graduate and post-doctoral education rarely focuses on teaching [75]. The authors therefore recommend that new faculty be required to complete teaching-related training, focused primarily on incorporating active learning strategies in all courses, before they begin teaching at an institution.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Lane, A.K.; Skvoretz, J.; Ziker, J.P.; Couch, B.A.; Earl, B.; Lewis, J.E.; McAlpin, J.D.; Prevost, L.B.; Shadle, S.E.; Stains, M. Investigating how faculty social networks and peer influence relate to knowledge and use of evidence-based teaching practices. Int. J. STEM Educ. 2019, 6, 28. Available online: https://link.springer.com/content/pdf/10.1186/s40594-019-0182-3.pdf (accessed on 5 September 2020).

2. Prince, M.; Borrego, M.; Henderson, C.; Cutler, S.; Froyd, J. Use of research-based instructional strategies in core chemical engineering courses. Chem. Eng. Educ. 2013, 47, 27–37. Available online: https://journals.flvc.org/cee/article/view/118512 (accessed on 5 September 2020).

3. Bradforth, S.E.; Miller, E.R.; Dichtel, W.R.; Leibovich, A.K.; Feig, A.L.; Martin, J.D.; Bjorkman, K.S.; Schultz, Z.D.; Smith, T.L. University learning: Improve undergraduate science education. Nature 2015, 523, 282–284.

4. Handelsman, J.; Ebert-May, D.; Beichner, R.; Bruns, P.; Chang, A.; DeHaan, R.; Gentile, J.; Lauffer, S.; Stewart, J.; Tilghman, S.M.; et al. Scientific teaching. Science 2004, 304, 521–522. Available online: https://www.science.org/doi/full/10.1126/science.1096022 (accessed on 12 October 2020).

5. National Research Council. Evaluating and Improving Undergraduate Teaching in Science, Technology, Engineering, and Mathematics; National Academy Press: Washington, DC, USA, 2003.

6. Pfund, C.; Miller, S.; Brenner, K.; Bruns, P.; Chang, A.; Ebert-May, D.; Fagen, A.P.; Gentile, J.; Gossens, S.; Khan, I.M.; et al. Summer institute to improve university science teaching. Science 2009, 324, 470–471.

7. Committee on Science, Engineering, and Public Policy. Rising above the Gathering Storm: Energizing and Employing America for a Brighter Economic Future; National Academies Press: Washington, DC, USA, 2009.

8. Olson, S.; Riordan, D.G. Engage to Excel: Producing One Million Additional College Graduates with Degrees in Science, Technology, Engineering, and Mathematics; Executive Office of the President: Washington, DC, USA, 2012. Available online: https://eric.ed.gov/?id=ED541511 (accessed on 21 September 2022).

9. Lund, T.J.; Stains, M. The importance of context: An exploration of factors influencing the adoption of student-centered teaching among chemistry, biology, and physics faculty. Int. J. STEM Educ. 2015, 2, 13.

10. Bathgate, M.E.; Aragón, O.R.; Cavanagh, A.J.; Waterhouse, J.K.; Frederick, J.; Graham, M.J. Perceived supports and evidence-based teaching in college STEM. Int. J. STEM Educ. 2019, 6, 11.

11. Cavanagh, A.J.; Aragón, O.R.; Chen, X.; Couch, B.A.; Durham, M.F.; Bobrownicki, A.; Hanauer, D.I.; Graham, M.J. Student buy-in to active learning in a college science course. CBE—Life Sci. Educ. 2016, 15, ar76.

12. Freeman, S.; Eddy, S.L.; McDonough, M.; Smith, M.K.; Okoroafor, N.; Jordt, H.; Wenderoth, M.P. Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acad. Sci. USA 2014, 111, 8410–8415.

13. Gross, D.; Pietri, E.S.; Anderson, G.; Moyano-Camihort, K.; Graham, M.J. Increased preclass preparation underlies student outcome improvement in the flipped classroom. CBE—Life Sci. Educ. 2015, 14, ar36.

14. Wieman, C. Large-scale comparison of science teaching methods sends clear message. Proc. Natl. Acad. Sci. USA 2014, 111, 8319–8320.

15. Borrego, M.; Froyd, J.E.; Hall, T.S. Diffusion of engineering education innovations: A survey of awareness and adoption rates in U.S. engineering departments. J. Eng. Educ. 2010, 99, 185–207.

16. Durham, M.F.; Knight, J.K.; Couch, B.A. Measurement instrument for scientific teaching (MIST): A tool to measure the frequencies of research-based teaching practices in undergraduate science courses. CBE—Life Sci. Educ. 2017, 16, ar67.

17. Henderson, C.; Dancy, M.H. Impact of physics education research on the teaching of introductory quantitative physics in the United States. Phys. Rev. Spec. Top.—Phys. Educ. Res. 2009, 5, 020107.

18. Stains, M.; Harshman, J.; Barker, M.K.; Chasteen, S.V.; Cole, R.; DeChenne-Peters, S.E.; Eagan, M.K., Jr.; Esson, J.M.; Knight, J.K.; Laski, F.A.; et al. Anatomy of STEM teaching in North American universities. Science 2018, 359, 1468–1470.

19. Henderson, C. Promoting instructional change in new faculty: An evaluation of the physics and astronomy new faculty workshop. Am. J. Phys. 2008, 76, 179–187.

20. Turpen, C.; Finkelstein, N.D. Not all interactive engagement is the same: Variations in physics professors' implementation of peer instruction. Phys. Rev. Spec. Top.—Phys. Educ. Res. 2009, 5, 020101.

21. Turpen, C.; Finkelstein, N.D. The construction of different classroom norms during peer instruction: Students perceive differences. Phys. Rev. Spec. Top.—Phys. Educ. Res. 2010, 6, 020123.

22. Chase, A.; Pakhira, D.; Stains, M. Implementing process-oriented, guided-inquiry learning for the first time: Adaptations and short-term impacts on students' attitude and performance. J. Chem. Educ. 2013, 90, 409–416.

23. Daubenmire, P.; Frazier, M.; Bunce, D.; Draus, C.; Gessell, A.; Van Opstal, M. Research and teaching: During POGIL implementation the professor still makes a difference. J. Coll. Sci. Teach. 2015, 44, 72–81.

24. American Society for Engineering Education (ASEE). Creating a Culture for Scholarly and Systematic Innovation in Engineering Education: Ensuring U.S. Engineering Has the Right People with the Right Talent for a Global Society; American Society for Engineering Education: Washington, DC, USA, 2009.

25. National Academy of Engineering. Educating the Engineer of 2020: Adapting Engineering Education to the New Century; National Academies Press: Washington, DC, USA, 2005.

26. Association of American Universities. Framework for Systemic Change in Undergraduate STEM Teaching and Learning; Association of American Universities: Washington, DC, USA, 2013.

27. Association of American Universities. Undergraduate STEM Education Initiative; Association of American Universities: Washington, DC, USA, 2022. Available online: https://www.aau.edu/education-community-impact/undergraduate-education/undergraduate-stem-education-initiative (accessed on 25 November 2022).

28. National Science Board. Preparing the Next Generation of STEM Innovators: Identifying and Developing Our Nation's Human Capital; National Science Foundation: Arlington, VA, USA, 2010. Available online: https://www.nsf.gov/nsb/publications/2010/nsb1033.pdf (accessed on 10 November 2022).

29. American Association for the Advancement of Science (AAAS). Blueprints for Reform: Science, Mathematics, and Technology Education; Oxford University Press: Oxford, UK, 1998.

30. Carlisle, D.L.; Weaver, G.C. STEM education centers: Catalyzing the improvement of undergraduate STEM education. Int. J. STEM Educ. 2018, 5, 47.

31. Henderson, C.; Dancy, M.H. Barriers to the use of research-based instructional strategies: The influence of both individual and situational characteristics. Phys. Rev. Spec. Top.—Phys. Educ. Res. 2007, 3, 020102.

32. Brownell, S.E.; Tanner, K.D. Barriers to faculty pedagogical change: Lack of training, time, incentives, and…tensions with professional identity? CBE—Life Sci. Educ. 2012, 11, 339–346.

33. Hora, M.T. Organizational factors and instructional decision-making: A cognitive perspective. Rev. High. Educ. 2012, 35, 207–235.

34. Michael, J. Faculty perceptions about barriers to active learning. Coll. Teach. 2007, 55, 42–47.

35. Wieman, C. Improving How Universities Teach Science; Harvard University Press: Cambridge, MA, USA, 2017.

36. Shadle, S.E.; Marker, A.; Earl, B. Faculty drivers and barriers: Laying the groundwork for undergraduate STEM education reform in academic departments. Int. J. STEM Educ. 2017, 4, 8.

37. Al-Ansari, H. The Challenges of Education Reform in GCC Societies. LSE Middle East Centre Blog; The London School of Economics and Political Science. Available online: https://blogs.lse.ac.uk/mec/2020/02/24/the-challenges-of-education-reform-in-gcc-societies/ (accessed on 10 January 2021).

38. Burt, J. Impact of active learning on performance and motivation in female Emirati students. Learn. Teach. High. Educ. Gulf Perspect. 2004, 1, 1. Available online: https://www.zu.ac.ae/lthe/vol1/lthe01_04.pdf (accessed on 4 January 2021).

39. Mahrous, A.A.; Ahmed, A.A. A cross-cultural investigation of students' perceptions of the effectiveness of pedagogical tools. J. Stud. Int. Educ. 2009, 14, 289–306.

40. Shaw, K.E.; Badri, A.A.M.A.; Hukul, A. Management concerns in United Arab Emirates state schools. Int. J. Educ. Manag. 1995, 9, 8–13.

41. Smith, L. Teachers' conceptions of teaching at a Gulf university: A starting point for revising teacher development program. Learn. Teach. High. Educ. Gulf Perspect. 2006, 3, 1.

42. Alpen Capital. GCC Education Industry; Alpen Capital: Dubai, United Arab Emirates, 2018.

43. Gallagher, K. Education in the United Arab Emirates: Innovation and Transformation; Springer: Singapore, 2019.

44. Gokulan, D. Education in the UAE: Then, now and tomorrow. Khaleej Times. 15 April 2018. Available online: https://www.khaleejtimes.com/kt-40-anniversary/education-in-the-uae-then-now-and-tomorrow (accessed on 5 October 2020).

45. Kalambelkar, D. Classroom experience: Innovations in teaching and learning. Gulf News. 15 May 2019. Available online: https://gulfnews.com/uae/education/classroom-experience-innovations-in-teaching-and-learning-1.1557909302122 (accessed on 3 December 2020).

46. Kulkarni, J. Modern curricula have assorted teaching methods in UAE. Khaleej Times. 18 June 2016. Available online: https://www.khaleejtimes.com/nation/education/modern-curricula-have-assorted-teaching-methods-in-uae (accessed on 10 September 2020).

47. Vassall-Fall, D. Arab students' perceptions of strategies to reduce memorization. Arab. World Engl. J. 2011, 2, 48–69. Available online: https://awej.org/images/AllIssues/Volume2/Volume2Number3Aug2011/3.pdf (accessed on 5 February 2021).

48. United Arab Emirates. UAE Vision 2021. Vision 2021. 2018. Available online: https://www.vision2021.ae/en/uae-vision (accessed on 15 February 2022).

49. United Arab Emirates. National Strategy for Higher Education 2030; The Official Portal of the UAE Government: Dubai, United Arab Emirates, 2022. Available online: https://u.ae/en/about-the-uae/strategies-initiatives-and-awards/federal-governments-strategies-and-plans/national-strategy-for-higher-education-2030 (accessed on 15 July 2022).

50. Shetty, J.K.; Begum, G.S.; Goud, M.B.K.; Zaki, B. Comparison of didactic lectures and case-based learning in an undergraduate biochemistry course at RAK Medical and Health Sciences University, UAE. J. Evol. Med. Dent. Sci. 2016, 5, 3212–3216.

51. Clarke, M. Beyond antagonism? The discursive construction of 'new' teachers in the United Arab Emirates. Teach. Educ. 2006, 17, 225–237.

52. Madsen, S.R. Leadership development in the United Arab Emirates: The transformational learning experiences of women. J. Leadersh. Organ. Stud. 2009, 17, 100–110.

53. McClusky, B. Investigating the Relationships between Education and Culture for Female Students in Tertiary Settings in the UAE. Ph.D. Thesis, Edith Cowan University, Perth, Australia, 2017.

54. McLaughlin, J.; Durrant, P. Student learning approaches in the UAE: The case for the achieving domain. High. Educ. Res. Dev. 2016, 36, 158–170.

55. Murshidi, G.A. STEM education in the United Arab Emirates: Challenges and possibilities. Int. J. Learn. Teach. Educ. Res. 2019, 18, 316–332.

56. McMinn, M.; Dickson, M.; Areepattamannil, S. Reported pedagogical practices of faculty in higher education in the UAE. High. Educ. 2020, 83, 395–410.

57. Kayan-Fadlelmula, F.; Sellami, A.; Abdelkader, N.; Umer, S. A systematic review of STEM education research in the GCC countries: Trends, gaps and barriers. Int. J. STEM Educ. 2022, 9, 2.

58. Alzaabi, I. Debunking the STEM myth: A case of the UAE and MENA countries. In Proceedings of the 13th International Conference on Education and New Learning Technologies, Online, 5–6 July 2021; pp. 4671–4680.

59. United Arab Emirates University. About UAEU; United Arab Emirates University: Dubai, United Arab Emirates, 2017. Available online: https://www.uaeu.ac.ae/en/about/aboutuaeu.shtml (accessed on 20 October 2021).

60. University of Nebraska-Lincoln. About University of Nebraska–Lincoln. 2018. Available online: https://www.unl.edu/about/ (accessed on 21 October 2021).

61. Williams, C.T.; Walter, E.M.; Henderson, C.; Beach, A.L. Describing undergraduate STEM teaching practices: A comparison of instructor self-report instruments. Int. J. STEM Educ. 2015, 2, 18.

62. Sturtevant, H.; Wheeler, L. The STEM Faculty Instructional Barriers and Identity Survey (FIBIS): Development and exploratory results. Int. J. STEM Educ. 2019, 6, 35.

63. Froyd, J.E. White paper on promising practices in undergraduate STEM education. In Proceedings of the National Research Council's Workshop Linking Evidence to Promising Practices in Undergraduate Science, Technology, Engineering, and Mathematics (STEM) Education, Washington, DC, USA, 31 July 2008.

64. Marbach-Ad, G.; Schaefer Ziemer, K.; Orgler, M.; Thompson, K.V. Science teaching beliefs and reported approaches within a research university: Perspectives from faculty, graduate students, and undergraduates. Int. J. Teach. Learn. High. Educ. 2014, 26, 232–250. Available online: https://files.eric.ed.gov/fulltext/EJ1060872.pdf (accessed on 23 June 2021).

65. Zieffler, A.; Park, J.; Garfield, J.; delMas, R.; Bjornsdottir, A. The statistics teaching inventory: A survey on statistics teachers' classroom practices and beliefs. J. Stat. Educ. 2012, 20, 2012.

66. Bass, B.M.; Cascio, W.F.; O'Connor, E.J. Magnitude estimations of expressions of frequency and amount. J. Appl. Psychol. 1974, 59, 313–320.

67. Clark, L.A.; Watson, D. Constructing validity: Basic issues in objective scale development. Psychol. Assess. 1995, 7, 309–319.

68. Center for Postsecondary Research Indiana University School of Education. Faculty Survey of Student Engagement (FSSE). Evidence-Based Improvement in Higher Education. 2021. Available online: https://nsse.indiana.edu/fsse/ (accessed on 15 September 2022).

69. Lally, D.; Forbes, C.T.; McNeal, K.S.; Soltis, N.A. National survey of geoscience teaching practices 2016: Current trends in geoscience instruction of scientific modeling and systems thinking. J. Geosci. Educ. 2019, 67, 174–191.

70. Eagan, M.K.; Stolzenberg, E.B.; Berdan Lozano, J.; Aragon, M.C.; Suchard, M.R.; Hurtado, S. Undergraduate Teaching Faculty: The 2013–2014 HERI Faculty Survey; Higher Education Research Institute, UCLA: Los Angeles, CA, USA, 2014. Available online: https://heri.ucla.edu/monographs/HERI-FAC2014-monograph.pdf (accessed on 5 February 2021).

71. Felder, R.M.; Brent, R.; Prince, M.J. Engineering instructional development: Programs, best practices, and recommendations. J. Eng. Educ. 2011, 100, 89–122.

72. D'Eon, M.; Sadownik, L.; Harrison, A.; Nation, J. Using self-assessments to detect workshop success. Am. J. Eval. 2008, 29, 92–98.

73. Ebert-May, D.; Derting, T.L.; Hodder, J.; Momsen, J.L.; Long, T.M.; Jardeleza, S.E. What we say is not what we do: Effective evaluation of faculty professional development programs. Bioscience 2011, 61, 550–558.

74. Kane, R.; Sandretto, S.; Heath, C. Telling half the story: A critical review of research on the teaching beliefs and practices of university academics. Rev. Educ. Res. 2002, 72, 177–228.

75. Schell, J.A.; Butler, A.C. Insights from the science of learning can inform evidence-based implementation of peer instruction. Front. Educ. 2018, 3, 33.

| Practice Type | Effort to Implement | # | Method |
|---|---|---|---|
| Traditional (Not EBIPs) | Low | 1 | Instructor-Led Lecture: Students listen while instructor explains course content. |
| | Low | 2 | Instructor-Led Problem Solving: Students observe instructor solve problems during class. |
| | Low | 3 | Individual Student Response: Students are invited to respond to instructor questions individually during a lecture. |
| In-Class Activities | Moderate | 4 | Interactive Lecture Demonstration: Students: (i) hypothesize the outcome of a demonstration, (ii) watch the demonstration, and (iii) reflect on it. |
| | Moderate | 5 | Teaching with Simulations: Students/instructor use computer simulations. |
| | Low | 6 | Clickers/Personal Response Systems: Students' responses/data are collected in real-time through an interactive classroom response system. |
| Small Group Work | Low | 7 | Collaborative Learning: Students perform group-work toward a common goal. |
| | Low | 8 | Think-Pair-Share: Students are: (i) given "think time" to internalize content, (ii) asked to discuss ideas with a peer partner, and (iii) asked to share thinking with the rest of the class. |
| | Low | 9 | Peer Instruction: Students: (i) vote on an answer (often with "Clickers") to an instructor-posed concept test question, (ii) see the answer distribution, (iii) form pairs and discuss answers, and (iv) vote again. |
| Formative Assessment | Low | 10 | Concept Maps: Students diagram the relationships that exist between concepts. |
| | Low | 11 | Minute Paper: Students briefly write their answers to questions at the end of class: What was the most important thing you learned in class today? What point remains unclear/unanswered? |
| | Moderate | 12 | Concept Tests/Inventories: Students respond to short, multiple-choice questions regarding a concept. Common wrong answers/misunderstandings are part of the answer choices. |
| | Moderate | 13 | Just-in-Time Teaching: Students: (i) complete a set of Web-based activities outside of class, and (ii) submit these to the instructor who identifies misunderstandings and adjusts the lesson. |
| | Low | 14 | Student Presentations: Students present their work orally and/or in the form of a poster. |
| Scenario-Based Content Organization | High | 15 | Problem-Based Learning: Students are given a complex, real-world problem and are asked to apply their knowledge and direct their own learning in order to solve it. |
| | High | 16 | Service Learning: Students are integrated into community service experiences that help reinforce course content (e.g., students work on a project with a real, not-for-profit client in the community). |

| | UAEU Faculty (n = 66) | UNL Faculty (n = 81) | Total Surveyed Faculty (n = 147) |
|---|---|---|---|
| Rank | | | |
| Instructor/Lecturer | 17% | 14% | 15% |
| Assistant Professor | 29% | 23% | 26% |
| Associate Professor | 36% | 20% | 27% |
| Full Professor | 18% | 43% | 32% |
| Gender | | | |
| Men | 85% | 64% | 73% |
| Women | 12% | 31% | 22% |
| Prefer Not to Respond | 3% | 5% | 4% |
| Colleges | | | |
| Engineering | 59% | 33% | 45% |
| Science | 41% | 67% | 55% |

| | UAEU Faculty (n = 66) | UNL Faculty (n = 81) | Total Surveyed Faculty (n = 147) |
|---|---|---|---|
| Years of Teaching Experience | | | |
| First year of teaching | 6% | 0% | 3% |
| 1 | 0% | 1% | 1% |
| 2–5 | 23% | 11% | 16% |
| 6–10 | 20% | 26% | 23% |
| 11+ | 52% | 62% | 57% |
| Average Number of Courses Taught per Academic Year | | | |
| Up to 4 | 42% | 74% | 60% |
| 5–6 | 30% | 20% | 24% |
| 7–8 | 20% | 5% | 12% |
| More than 8 | 8% | 1% | 4% |
| Average Number of Research Papers Published per Academic Year | | | |
| Up to 2 | 36% | 58% | 48% |
| 3–4 | 50% | 30% | 39% |
| 5–6 | 8% | 5% | 6% |
| More than 6 | 6% | 7% | 7% |
| Percent of Typical Class Time Spent Lecturing | | | |
| 0–20% | 6% | 14% | 10% |
| 21–40% | 12% | 15% | 14% |
| 41–60% | 17% | 35% | 27% |
| 61–80% | 35% | 23% | 29% |
| 81–100% | 30% | 14% | 21% |
| Number of Teaching Workshops, Programs, or Courses Attended | | | |
| None | 11% | 6% | 8% |
| 1–3 | 56% | 28% | 41% |
| 4–5 | 12% | 19% | 16% |
| More than 6 | 21% | 47% | 35% |
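The "Total Surveyed Faculty" column appears to be the respondent-weighted (pooled) percentage of the two samples, that is, (66 × p_UAEU + 81 × p_UNL) / 147. The short Python sketch below assumes this pooling rule (the source does not state it explicitly) and recomputes several rows of the demographics table; `pooled_percent` and `rows` are hypothetical, illustrative names, not part of the study.

```python
# Sketch, assuming the "Total" column is the respondent-weighted pooled percentage.
N_UAEU = 66  # UAEU respondents (from the table header)
N_UNL = 81   # UNL respondents (from the table header)


def pooled_percent(p_uaeu: float, p_unl: float,
                   n_uaeu: int = N_UAEU, n_unl: int = N_UNL) -> float:
    """Return the percentage across both institutions, weighting each by its sample size."""
    return (p_uaeu * n_uaeu + p_unl * n_unl) / (n_uaeu + n_unl)


# Rows copied from the demographics table: (UAEU %, UNL %, reported total %).
rows = {
    "Instructor/Lecturer": (17, 14, 15),
    "Assistant Professor": (29, 23, 26),
    "Associate Professor": (36, 20, 27),
    "Full Professor": (18, 43, 32),
    "Men": (85, 64, 73),
    "Women": (12, 31, 22),
    "Engineering": (59, 33, 45),
    "Science": (41, 67, 55),
}

# Print the recomputed pooled value next to the published total for each row.
for label, (p_uaeu, p_unl, reported) in rows.items():
    pooled = pooled_percent(p_uaeu, p_unl)
    print(f"{label:<20} pooled = {pooled:5.1f}%   reported = {reported}%")
```

Every row in this subset rounds to the reported total, which is consistent with (but does not prove) the pooling assumption. Because the inputs are themselves rounded, a one-point discrepancy in other rows would not by itself indicate an error.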

| # | Teaching Practice | UAEU: Total Aware (%) | UAEU: Total Users (%) | UNL: Total Aware (%) | UNL: Total Users (%) |
|---|---|---|---|---|---|
| 1 | Instructor-Led Lecture | 94 | 90 | 100 | 98 |
| 2 | Instructor-Led Problem Solving | 96 | 86 | 99 | 93 |
| 3 | Individual Student Response | 94 | 88 | 100 | 98 |
| 4 | Interactive Lecture Demonstration | 86 | 62 | 89 | 61 |
| 5 | Teaching with Simulations | 81 | 64 | 88 | 61 |
| 6 | Clickers/Personal Response Systems | 66 | 34 | 95 | 57 |
| 7 | Concept Maps | 66 | 21 | 74 | 35 |
| 8 | Minute Paper | 66 | 16 | 79 | 39 |
| 9 | Concept Tests/Inventories | 79 | 55 | 90 | 61 |
| 10 | Just-in-Time Teaching | 67 | 39 | 79 | 41 |
| 11 | Collaborative Learning | 93 | 81 | 99 | 85 |
| 12 | Peer Instruction | 63 | 37 | 90 | 55 |
| 13 | Think-Pair-Share | 76 | 55 | 93 | 63 |
| 14 | Student Presentations | 96 | 82 | 98 | 76 |
| 15 | Problem-Based Learning | 94 | 78 | 98 | 77 |
| 16 | Service Learning | 64 | 25 | 72 | 15 |
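One way to read the awareness/adoption table is to ask what share of *aware* faculty also report using a practice. The Python sketch below assumes that both "Total Aware (%)" and "Total Users (%)" are percentages of all respondents at each institution; the resulting ratio (called `conversion` here, a hypothetical name) is an illustrative derived quantity, not a metric reported in the study.

```python
# Sketch, assuming aware % and users % are both shares of all respondents.

def conversion(aware_pct: float, users_pct: float) -> float:
    """Share (%) of aware respondents who also report using the practice."""
    if aware_pct <= 0:
        raise ValueError("aware_pct must be positive")
    return 100.0 * users_pct / aware_pct


# A few rows copied from the table:
# (practice, UAEU aware %, UAEU users %, UNL aware %, UNL users %)
rows = [
    ("Clickers/Personal Response Systems", 66, 34, 95, 57),
    ("Minute Paper", 66, 16, 79, 39),
    ("Peer Instruction", 63, 37, 90, 55),
    ("Service Learning", 64, 25, 72, 15),
]

for name, ua, uu, na, nu in rows:
    print(f"{name:<36} UAEU {conversion(ua, uu):5.1f}%   UNL {conversion(na, nu):5.1f}%")
```

For example, the clicker row gives 34 / 66 ≈ 51.5% of aware UAEU faculty reporting use, compared with 57 / 95 = 60.0% at UNL. Since the published percentages are rounded, these ratios are approximate.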

| # | Teaching Practice | Utilized Termly: UAEU Faculty (%) | Utilized Termly: UNL Faculty (%) | Utilized Weekly: UAEU Faculty (%) | Utilized Weekly: UNL Faculty (%) |
|---|---|---|---|---|---|
| 1 | Instructor-Led Lecture | 6 | 11 | 94 | 89 |
| 2 | Instructor-Led Problem Solving | 19 | 17 | 81 | 83 |
| 3 | Individual Student Response | 18 | 4 | 82 | 96 |
| 4 | Interactive Lecture Demonstration | 33 | 33 | 67 | 67 |
| 5 | Teaching with Simulations | 50 | 59 | 50 | 41 |
| 6 | Clickers/Personal Response Systems | 52 | 17 | 48 | 83 |
| 7 | Concept Maps | 43 | 79 | 57 | 21 |
| 8 | Minute Paper | 45 | 72 | 55 | 28 |
| 9 | Concept Tests/Inventories | 68 | 52 | 32 | 48 |
| 10 | Just-in-Time Teaching | 67 | 41 | 33 | 59 |
| 11 | Collaborative Learning | 59 | 34 | 41 | 66 |
| 12 | Peer Instruction | 40 | 31 | 60 | 69 |
| 13 | Think-Pair-Share | 54 | 29 | 46 | 71 |
| 14 | Student Presentations | 73 | 81 | 27 | 19 |
| 15 | Problem-Based Learning | 69 | 54 | 31 | 46 |
| 16 | Service Learning | 59 | 75 | 41 | 25 |
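For every practice, each institution's termly and weekly percentages sum to 100, which suggests the frequency split is computed among users of that practice. Under that assumption (not stated explicitly in the source), the share of *all* respondents using a practice weekly is users % × weekly % / 100. The Python sketch below combines a few rows of the awareness/adoption table with the frequency table; `weekly_share_of_all` and `rows` are hypothetical names used only for illustration.

```python
# Sketch, assuming the termly/weekly split is measured among users of each practice.

def weekly_share_of_all(users_pct: float, weekly_pct: float) -> float:
    """Percentage of all respondents who use a practice weekly."""
    return users_pct * weekly_pct / 100.0


# Per institution: (users % of all respondents, termly % of users, weekly % of users),
# copied from the awareness/adoption table and the frequency table.
rows = {
    "Instructor-Led Lecture": {"UAEU": (90, 6, 94), "UNL": (98, 11, 89)},
    "Clickers/Personal Response Systems": {"UAEU": (34, 52, 48), "UNL": (57, 17, 83)},
    "Think-Pair-Share": {"UAEU": (55, 54, 46), "UNL": (63, 29, 71)},
}

for practice, by_site in rows.items():
    shares = {}
    for site, (users, termly, weekly) in by_site.items():
        # The within-user reading only holds if the termly/weekly split is exhaustive.
        if termly + weekly != 100:
            raise ValueError(f"{practice} ({site}): termly + weekly != 100")
        shares[site] = weekly_share_of_all(users, weekly)
    print(f"{practice:<36} UAEU {shares['UAEU']:5.1f}%   UNL {shares['UNL']:5.1f}%")
```

For clickers, this gives 34 × 48 / 100 ≈ 16.3% of UAEU respondents versus 57 × 83 / 100 ≈ 47.3% of UNL respondents using them weekly, under the stated assumption.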

| # | Teaching Practice | Easy: UAEU Users (%) | Easy: UNL Users (%) | Not Easy: UAEU Users (%) | Not Easy: UNL Users (%) |
|---|---|---|---|---|---|
| 1 | Instructor-Led Lecture | 95 | 94 | 5 | 6 |
| 2 | Instructor-Led Problem Solving | 90 | 86 | 10 | 14 |
| 3 | Individual Student Response | 80 | 69 | 20 | 31 |
| 4 | Interactive Lecture Demonstration | 86 | 54 | 14 | 46 |
| 5 | Teaching with Simulations | 68 | 45 | 32 | 55 |
| 6 | Clickers/Personal Response Systems | 74 | 70 | 26 | 30 |
| 7 | Concept Maps | 71 | 76 | 29 | 24 |
| 8 | Minute Paper | 82 | 78 | 18 | 22 |
| 9 | Concept Tests/Inventories | 76 | 68 | 24 | 32 |
| 10 | Just-in-Time Teaching | 50 | 41 | 50 | 59 |
| 11 | Collaborative Learning | 72 | 49 | 28 | 51 |
| 12 | Peer Instruction | 84 | 58 | 16 | 42 |
| 13 | Think-Pair-Share | 68 | 79 | 32 | 21 |
| 14 | Student Presentations | 76 | 60 | 24 | 40 |
| 15 | Problem-Based Learning | 56 | 44 | 44 | 56 |
| 16 | Service Learning | 47 | 17 | 53 | 83 |

| Contextual Factor That May Influence Faculty Teaching Practices | Never True: UAEU Faculty | Never True: UNL Faculty | Sometimes True: UAEU Faculty | Sometimes True: UNL Faculty | True Nearly All of the Time: UAEU Faculty | True Nearly All of the Time: UNL Faculty |
|---|---|---|---|---|---|---|
| Department’s priority on teaching | 6% | 6% | 28% | 45% | 67% | 49% |
| Department’s priority on research | 21% | 24% | 50% | 55% | 29% | 21% |
| Department’s promotion requirements | 13% | 23% | 49% | 54% | 39% | 24% |
| Department’s reward system | 33% | 26% | 47% | 56% | 19% | 18% |
| Level of flexibility given by department in choosing the way a course is taught | 14% | 1% | 15% | 19% | 71% | 80% |
| Required textbooks or a syllabus planned by others | 6% | 36% | 38% | 52% | 57% | 12% |
| Textbook/s faculty choose | 3% | 13% | 50% | 56% | 47% | 31% |
| Number of students in a class | 15% | 1% | 56% | 42% | 29% | 57% |
| Physical space of the classroom | 25% | 6% | 47% | 54% | 28% | 40% |
| Availability of teaching assistants | 39% | 13% | 38% | 46% | 24% | 40% |
| Availability of required resources | 7% | 5% | 50% | 55% | 43% | 40% |
| Time constraints due to research commitments | 17% | 19% | 47% | 44% | 36% | 37% |
| Time constraints due to administrative or service commitments | 17% | 17% | 42% | 60% | 42% | 24% |
| Time constraints due to teaching load | 11% | 8% | 35% | 61% | 54% | 31% |
| Ability to cover all necessary content | 18% | 4% | 32% | 52% | 50% | 44% |
| Knowledge of appropriate instructional methods | 18% | 6% | 38% | 56% | 44% | 38% |
| Student preparation for class | 8% | 5% | 61% | 46% | 53% | 49% |
| Student ability levels | 3% | 4% | 39% | 38% | 94% | 58% |
| Student willingness to interact during class | 1% | 4% | 50% | 42% | 72% | 55% |
| Teaching evaluations based on students’ ratings | 21% | 19% | 58% | 73% | 31% | 8% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Albuquerque, M.J.B.; Awadalla, D.M.M.; de Albuquerque, F.D.B.; Aly Hassan, A. Awareness and Adoption of Evidence-Based Instructional Practices by STEM Faculty in the UAE and USA. Educ. Sci. 2023, 13, 204. https://doi.org/10.3390/educsci13020204

Albuquerque MJB, Awadalla DMM, de Albuquerque FDB, Aly Hassan A. Awareness and Adoption of Evidence-Based Instructional Practices by STEM Faculty in the UAE and USA. Education Sciences. 2023; 13(2):204. https://doi.org/10.3390/educsci13020204

Chicago/Turabian Style: Albuquerque, Melinda Joy Biggs, Dina Mustafa Mohammad Awadalla, Francisco Daniel Benicio de Albuquerque, and Ashraf Aly Hassan. 2023. "Awareness and Adoption of Evidence-Based Instructional Practices by STEM Faculty in the UAE and USA" Education Sciences 13, no. 2: 204. https://doi.org/10.3390/educsci13020204

APA Style: Albuquerque, M. J. B., Awadalla, D. M. M., de Albuquerque, F. D. B., & Aly Hassan, A. (2023). Awareness and Adoption of Evidence-Based Instructional Practices by STEM Faculty in the UAE and USA. Education Sciences, 13(2), 204. https://doi.org/10.3390/educsci13020204