Modeling Students’ Perceptions of Chatbots in Learning: Integrating Technology Acceptance with the Value-Based Adoption Model

Abstract

1. Introduction

2. Theoretical Foundations

2.1. Chatbots in Education

2.2. Related Work on Chatbot Acceptance in Learning

2.3. Technology Acceptance Model

2.4. Value-Based Adoption Model

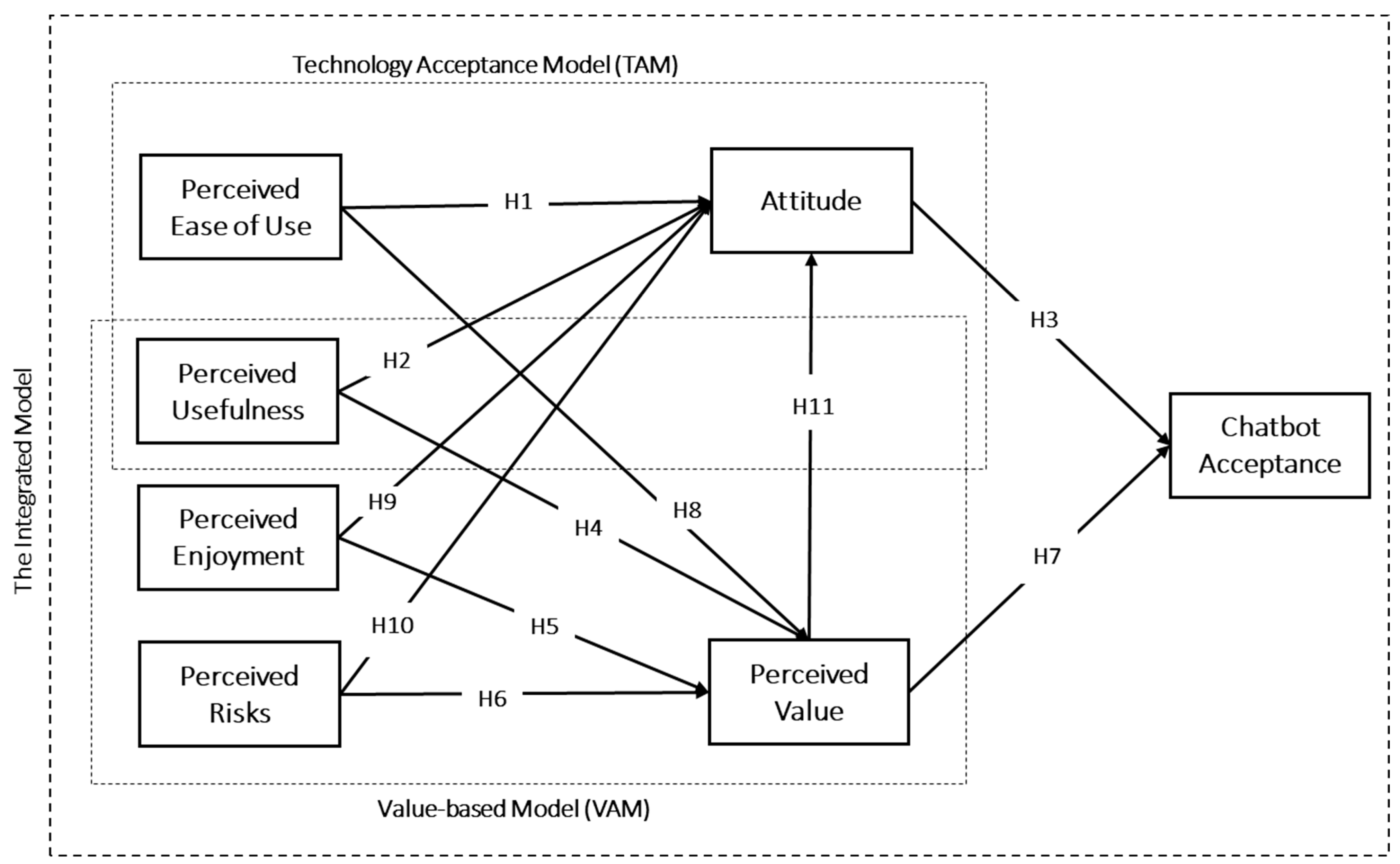

2.5. The Integrated Model of TAM and VAM in Accepting Chatbots in Learning

2.6. Relationships in the Proposed Model

2.6.1. Relationships in the TAM

2.6.2. Relationships in the VAM

2.6.3. The Integrated Relationships of the TAM and VAM

3. Methods

3.1. Data Collection and Participants

3.2. Data Analysis

3.3. Measurement

4. Results

4.1. Measurement Model Analysis

4.2. Structural Model Analysis

5. Discussion and Implications

5.1. Perceived Ease of Use, Attitude, and Perceived Value

5.2. Perceived Usefulness, Attitude, and Perceived Value

5.3. Perceived Enjoyment, Attitude, and Perceived Value

5.4. Perceived Risk, Attitude, and Perceived Value

5.5. Attitude, Perceived Value, and Chatbot Acceptance

6. Conclusions, Limitations, and Future Work

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Survey Questionnaire

| Constructs | Items |
|---|---|
| Perceived Ease of Use | 1. Learning how to use a chatbot is easy for me. |
| | 2. My interaction with the chatbot is clear and simple. |
| | 3. I find chatbots easy to use for my learning. |
| | 4. It is easy for me to become skilled in using chatbots. |
| Perceived Usefulness | 1. I find chatbots useful for performing my learning tasks. |
| | 2. Using a chatbot increases my chances of achieving high performance. |
| | 3. Using a chatbot helps me accomplish my learning tasks effortlessly. |
| | 4. Using chatbots increases my productivity. |
| Perceived Enjoyment | 1. I have fun interacting with chatbots. |
| | 2. Using chatbots provides me with a lot of enjoyment. |
| | 3. I enjoy using chatbots for learning. |
| Perceived Risk | 1. I feel unsafe when using a chatbot. |
| | 2. I am worried that personal information would be leaked when using a chatbot. |
| | 3. I am worried about personal information suffering from unauthorized use when using a chatbot. |
| Attitude | 1. Using chatbots makes learning more interesting. |
| | 2. Using chatbots has a positive influence on my learning. |
| | 3. I think learning with a chatbot is valuable. |
| | 4. I think it is a trend to use chatbots in learning. |
| Perceived Value | 1. I believe that using a chatbot is a valuable idea. |
| | 2. Chatbot is advantageous to me due to the general amount of effort I need to put in. |
| | 3. Chatbot is worthwhile for me based on the amount of time I need to spend. |
| | 4. Chatbots provide me with good value in general. |
| Chatbot Acceptance | 1. I look forward to using chatbots in my learning. |
| | 2. I intend to use chatbots in my future learning. |
| | 3. I plan to use chatbots in my future learning. |
| | 4. I think using chatbots will increase my future learning. |
| | 5. I support the adoption of chatbots in higher education. |

References

1. Chang, C.Y.; Hwang, G.J.; Gau, M.L. Promoting Students’ Learning Achievement and Self-Efficacy: A Mobile Chatbot Approach for Nursing Training. Br. J. Educ. Technol. 2022, 53, 171–188.

2. Pérez, J.Q.; Daradoumis, T.; Puig, J.M.M. Rediscovering the Use of Chatbots in Education: A Systematic Literature Review. Comput. Appl. Eng. Educ. 2020, 28, 1549–1565.

3. Fryer, L.K.; Nakao, K.; Thompson, A. Chatbot Learning Partners: Connecting Learning Experiences, Interest and Competence. Comput. Hum. Behav. 2019, 93, 279–289.

4. Research and Markets. Global Chatbot Market Size, Share & Trends Analysis Report by End Use (Large Enterprises, Medium Enterprises), by Application, by Type, by Product Landscape, by Vertical, by Region, and Segment Forecasts, 2022–2030. Available online: https://www.researchandmarkets.com/reports/4396458/global-chatbot-market-size-share-and-trends#sp-pos-1 (accessed on 17 July 2023).

5. Cunningham-Nelson, S.; Boles, W.; Trouton, L.; Margerison, E. A Review of Chatbots in Education: Practical Steps Forward. In Proceedings of the 30th Annual Conference for the Australasian Association for Engineering Education (AAEE 2019): Educators Becoming Agents of Change: Innovate, Integrate, Motivate, Brisbane, Australia, 8–11 December 2019.

6. Troussas, C.; Krouska, A.; Alepis, E.; Virvou, M. Intelligent and Adaptive Tutoring Through a Social Network for Higher Education. New Rev. Hypermedia Multimed. 2020, 26, 138–167.

7. Wollny, S.; Schneider, J.; Di Mitri, D.; Weidlich, J.; Rittberger, M.; Drachsler, H. Are We There Yet?—A Systematic Literature Review on Chatbots in Education. Front. Artif. Intell. 2021, 4, 654924.

8. Clarizia, F.; Colace, F.; Lombardi, M.; Pascale, F.; Santaniello, D. Chatbot: An Education Support System for Student. In International Symposium on Cyberspace Safety and Security; Springer: Berlin/Heidelberg, Germany, 2018; pp. 291–302.

9. Colace, F.; De Santo, M.; Lombardi, M.; Pascale, F.; Pietrosanto, A.; Lemma, S. Chatbot for E-Learning: A Case of Study. Int. J. Mech. Eng. Robot. Res. 2018, 7, 528–533.

10. Bezverhny, E.; Dadteev, K.; Barykin, L.; Nemeshaev, S.; Klimov, V. Use of Chat Bots in Learning Management Systems. Procedia Comput. Sci. 2020, 169, 652–655.

11. Haristiani, N.; Rifai, M.M. Chatbot-Based Application Development and Implementation as an Autonomous Language Learning Medium. Indones. J. Sci. Technol. 2021, 6, 561–576.

12. Aleedy, M.; Atwell, E.; Meshoul, S. Using AI Chatbots in Education: Recent Advances Challenges and Use Case. In Artificial Intelligence and Sustainable Computing. Algorithms for Intelligent Systems; Pandit, M., Gaur, M.K., Rana, P.S., Tiwari, A., Eds.; Springer: Singapore, 2022.

13. Sandu, N.; Gide, E. Adoption of AI-Chatbots to Enhance Student Learning Experience in Higher Education in India. In Proceedings of the 2019 18th International Conference on Information Technology Based Higher Education and Training (ITHET), Magdeburg, Germany, 26–27 September 2019; pp. 1–5.

14. Winkler, R.; Söllner, M. Unleashing the Potential of Chatbots in Education: A State-of-the-Art Analysis. Acad. Manag. Annu. Meet. 2018.

15. Hwang, G.J.; Chang, C.Y. A Review of Opportunities and Challenges of Chatbots in Education. Interact. Learn. Environ. 2021, 31, 4099–4112.

16. Malik, R.; Shrama, A.; Trivedi, S.; Mishra, R. Adoption of Chatbots for Learning among University Students: Role of Perceived Convenience and Enhanced Performance. Int. J. Emerg. Technol. Learn. (IJET) 2021, 16, 200–211.

17. Chen, Y.; Jensen, S.; Albert, L.J.; Gupta, S.; Lee, T. Artificial Intelligence (AI) Student Assistants in the Classroom: Designing Chatbots to Support Student Success. Inf. Syst. Front. 2023, 25, 161–182.

18. Hammad, R.; Bahja, M. Opportunities and Challenges in Educational Chatbots. In Trends, Applications, and Challenges of Chatbot Technology; IGI Global: Hershey, PA, USA, 2023; pp. 119–136.

19. Yang, S.; Evans, C. Opportunities and Challenges in Using AI Chatbots in Higher Education. In Proceedings of the 2019 3rd International Conference on Education and E-Learning, Barcelona, Spain, 5–7 November 2019; pp. 79–83.

20. Hasal, M.; Nowaková, J.; Ahmed Saghair, K.; Abdulla, H.; Snášel, V.; Ogiela, L. Chatbots: Security, Privacy, Data Protection, and Social Aspects. Concurr. Comput. Pract. Exp. 2021, 33, e6426.

21. Wu, W.; Zhang, B.; Li, S.; Liu, H. Exploring Factors of the Willingness to Accept AI-Assisted Learning Environments: An Empirical Investigation Based on the UTAUT Model and Perceived Risk Theory. Front. Psychol. 2022, 13, 870777.

22. Okonkwo, C.W.; Ade-Ibijola, A. Chatbots Applications in Education: A Systematic Review. Comput. Educ. Artif. Intell. 2021, 2, 100033.

23. Fidan, M.; Gencel, N. Supporting the Instructional Videos with Chatbot and Peer Feedback Mechanisms in Online Learning: The Effects on Learning Performance and Intrinsic Motivation. J. Educ. Comput. Res. 2022, 60, 1716–1741.

24. Kuhail, M.A.; Alturki, N.; Alramlawi, S.; Alhejori, K. Interacting with Educational Chatbots: A Systematic Review. Educ. Inf. Technol. 2023, 28, 973–1018.

25. Pereira, J.; Fernández-Raga, M.; Osuna-Acedo, S.; Roura-Redondo, M.; Almazán-López, O.; Buldón-Olalla, A. Promoting Learners’ Voice Productions Using Chatbots as a Tool for Improving the Learning Process in a MOOC. Technol. Knowl. Learn. 2019, 24, 545–565.

26. Kazoun, N.; Kokkinaki, A.; Chedrawi, C. Factors That Affect the Use of AI Agents in Adaptive Learning: A Sociomaterial and McDonaldization Approach in the Higher Education Sector. In Information Systems, Proceedings of the 18th European, Mediterranean, and Middle Eastern Conference, EMCIS 2021, Virtual Event, 8–9 December 2021; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 414–426.

27. Yin, J.; Goh, T.T.; Yang, B.; Xiaobin, Y. Conversation Technology with Micro-Learning: The Impact of Chatbot-Based Learning on Students’ Learning Motivation and Performance. J. Educ. Comput. Res. 2021, 59, 154–177.

28. Mohd Rahim, N.I.; Iahad, N.A.; Yusof, A.F.; Al-Sharafi, M.A. AI-Based Chatbots Adoption Model for Higher-Education Institutions: A Hybrid PLS-SEM-Neural Network Modelling Approach. Sustainability 2022, 14, 12726.

29. Essel, H.B.; Vlachopoulos, D.; Tachie-Menson, A.; Johnson, E.E.; Baah, P.K. The Impact of a Virtual Teaching Assistant (Chatbot) on Students’ Learning in Ghanaian Higher Education. Int. J. Educ. Technol. High. Educ. 2022, 19, 57.

30. Al-Abdullatif, A.M.; Al-Dokhny, A.A.; Drwish, A.M. Implementing the Bashayer Chatbot in Saudi Higher Education: Measuring the Influence on Students’ Motivation and Learning Strategies. Front. Psychol. 2023, 14, 1129070.

31. Chen, H.L.; Vicki Widarso, G.; Sutrisno, H. A Chatbot for Learning Chinese: Learning Achievement and Technology Acceptance. J. Educ. Comput. Res. 2020, 58, 1161–1189.

32. Troussas, C.; Krouska, A.; Virvou, M. Integrating an Adjusted Conversational Agent into a Mobile-Assisted Language Learning Application. In Proceedings of the 2017 IEEE 29th International Conference on Tools with Artificial Intelligence (ICTAI), Boston, MA, USA, 6–8 November 2017; pp. 1153–1157.

33. Troussas, C.; Krouska, A.; Virvou, M. MACE: Mobile Artificial Conversational Entity for Adapting Domain Knowledge and Generating Personalized Advice. Int. J. Artif. Intell. Tools 2019, 28, 1–16.

34. Lee, L.K.; Fung, Y.C.; Pun, Y.W.; Wong, K.K.; Yu, M.T.Y.; Wu, N.I. Using a Multiplatform Chatbot as an Online Tutor in a University Course. In Proceedings of the 2020 International Symposium on Educational Technology (ISET), Bangkok, Thailand, 24–27 August 2020; pp. 53–56.

35. Al Darayseh, A. Acceptance of Artificial Intelligence in Teaching Science: Science Teachers’ Perspective. Comput. Educ. Artif. Intell. 2023, 4, 100132.

36. Chocarro, R.; Cortiñas, M.; Marcos-Matás, G. Teachers’ Attitudes Towards Chatbots in Education: A Technology Acceptance Model Approach Considering the Effect of Social Language, Bot Proactiveness, and Users’ Characteristics. Educ. Stud. 2021, 49, 295–313.

37. Chuah, K.M.; Kabilan, M. Teachers’ Views on the Use of Chatbots to Support English Language Teaching in a Mobile Environment. Int. J. Emerg. Technol. Learn. (IJET) 2021, 16, 223–237.

38. Merelo, J.J.; Castillo, P.A.; Mora, A.M.; Barranco, F.; Abbas, N.; Guillén, A.; Tsivitanidou, O. Chatbots and Messaging Platforms in the Classroom: An Analysis from the Teacher’s Perspective. Educ. Inf. Technol. 2023, 1–36.

39. Nikou, S.A.; Chang, M. Learning by Building Chatbot: A System Usability Study and Teachers’ Views About the Educational Uses of Chatbots. In International Conference on Intelligent Tutoring Systems, Proceedings of the 19th International Conference, ITS 2023, Corfu, Greece, 2–5 June 2023; Springer Nature Switzerland: Cham, Switzerland, 2023; pp. 342–351.

40. Yang, T.C.; Chen, J.H. Pre-Service Teachers’ Perceptions and Intentions Regarding the Use of Chatbots Through Statistical and Lag Sequential Analysis. Comput. Educ. Artif. Intell. 2023, 4, 100119.

41. Davis, F. A Technology Acceptance Model for Empirically Testing New End-User Information Systems. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1985.

42. Kim, H.W.; Chan, H.C.; Gupta, S. Value-Based Adoption of Mobile Internet: An Empirical Investigation. Decis. Support Syst. 2007, 43, 111–126.

43. Rejón-Guardia, F.; Vich-I-Martorell, G.A. Design and Acceptance of Chatbots for Information Automation in University Classrooms. In EDULEARN20 Proceedings, Proceedings of the 12th International Conference on Education and New Learning Technologies, Online Conference, 6–7 July 2020; IATED: Valencia, Spain, 2020; pp. 2452–2462.

44. Bahja, M.; Hammad, R.; Hassouna, M. Talk2Learn: A Framework for Chatbot Learning. In Transforming Learning with Meaningful Technologies; Scheffel, M., Broisin, J., Pammer-Schindler, V., Ioannou, A., Schneider, J., Eds.; Springer: Cham, Switzerland, 2019.

45. Pérez-Marín, D. A Review of the Practical Applications of Pedagogic Conversational Agents to be Used in School and University Classrooms. Digital 2021, 1, 18–33.

46. Sriwisathiyakun, K.; Dhamanitayakul, C. Enhancing Digital Literacy with an Intelligent Conversational Agent for Senior Citizens in Thailand. Educ. Inf. Technol. 2022, 27, 6251–6271.

47. Sjöström, J.; Dahlin, M. Tutorbot: A Chatbot for Higher Education Practice. In Designing for Digital Transformation. Co-Creating Services with Citizens and Industry, Proceedings of the 15th International Conference on Design Science Research in Information Systems and Technology, DESRIST 2020, Kristiansand, Norway, 2–4 December 2020; Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 93–98.

48. Cabrera, N.; Fernández-Ferrer, M.; Maina, M.; Guàrdia, L. Peer Assessment in Online Learning: Promoting Self-Regulation Strategies Through the Use of Chatbots in Higher Education. Envisioning Rep. 2022, 49, 49–51.

49. Calle, M.; Narváez, E.; Maldonado-Mahauad, J. Proposal for the Design and Implementation of Miranda: A Chatbot-Type Recommender for Supporting Self-Regulated Learning in Online Environments. LALA 2021, 21, 19–21.

50. Park, S.; Choi, J.; Lee, S.; Oh, C.; Kim, C.; La, S.; Lee, J.; Suh, B. Designing a Chatbot for a Brief Motivational Interview on Stress Management: Qualitative Case Study. J. Med. Internet Res. 2019, 21, e12231.

51. Mai, N.E.O. The Merlin Project: Malaysian Students’ Acceptance of an AI Chatbot in Their Learning Process. Turk. Online J. Distance Educ. 2022, 23, 31–48.

52. Belda-Medina, J.; Calvo-Ferrer, J.R. Using Chatbots as AI Conversational Partners in Language Learning. Appl. Sci. 2022, 12, 8427.

53. Huang, W.; Hew, K.F.; Fryer, L.K. Chatbots for Language Learning—Are They Really Useful? A Systematic Review of Chatbot-Supported Language Learning. J. Comput. Assist. Learn. 2021, 38, 237–257.

54. Pillai, R.; Sivathanu, B.; Metri, B.; Kaushik, N. Students’ Adoption of AI-Based Teacher-Bots (T-Bots) for Learning in Higher Education. Inf. Technol. People 2023, 1–25.

55. Kumar; Silva, P.A. Work-in-Progress: A Preliminary Study on Students’ Acceptance of Chatbots for Studio-Based Learning. In Proceedings of the 2020 IEEE Global Engineering Education Conference (EDUCON), Porto, Portugal, 27–30 April 2020; pp. 1627–1631.

56. Chatterjee, S.; Bhattacharjee, K.K. Adoption of Artificial Intelligence in Higher Education: A Quantitative Analysis Using Structural Equation Modelling. Educ. Inf. Technol. 2020, 25, 3443–3463.

57. Slepankova, M. Possibilities of Artificial Intelligence in Education: An Assessment of the Role of AI Chatbots as a Communication Medium in Higher Education. Master’s Thesis, Linnaeus University, Växjö, Sweden, 2021. Available online: https://urn.kb.se/resolve?urn=urn:nbn:se:lnu:diva-108427 (accessed on 17 September 2023).

58. Mokmin, N.A.M.; Ibrahim, N.A. The Evaluation of Chatbot as a Tool for Health Literacy Education among Undergraduate Students. Educ. Inf. Technol. 2021, 2, 6033–6049.

59. Keong, W.E.Y. Factors Influencing Adoption Intention Towards Chatbots as a Learning Tool. In The International Conference in Education (ICE), Proceedings; Faculty of Social Sciences and Humanities, UTM: Johor Bahru, Malaysia, 2022; pp. 96–99.

60. Almahri, F.A.J.; Bell, D.; Merhi, M. Understanding Student Acceptance and Use of Chatbots in the United Kingdom Universities: A Structural Equation Modelling Approach. In Proceedings of the 2020 6th International Conference on Information Management (ICIM), London, UK, 27–29 March 2020; pp. 284–288.

61. Ragheb, M.A.; Tantawi, P.; Farouk, N.; Hatata, A. Investigating the Acceptance of Applying Chat-Bot (Artificial Intelligence) Technology among Higher Education Students in Egypt. Int. J. High. Educ. Manag. 2022, 8, 1–13.

62. Liao, Y.-K.; Wu, W.-Y.; Le, T.Q.; Phung, T.T.T. The Integration of the Technology Acceptance Model and Value-Based Adoption Model to Study the Adoption of E-Learning: The Moderating Role of e-WOM. Sustainability 2022, 14, 815.

63. Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

64. Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User Acceptance of Computer Technology: A Comparison of Two Theoretical Models. Manag. Sci. 1989, 35, 982–1003.

65. Park, S.Y. An Analysis of the Technology Acceptance Model in Understanding University Students’ Behavioral Intention to Use E-Learning. J. Educ. Technol. Soc. 2009, 12, 150–162.

66. King, W.R.; He, J. A Meta-Analysis of the Technology Acceptance Model. Inf. Manag. 2006, 43, 740–755.

67. Zeithaml, V.A. Consumer Perceptions of Price, Quality, and Value: A Means-End Model and Synthesis of Evidence. J. Mark. 1988, 52, 2–22.

68. Kim, J.; Kim, J. An Integrated Analysis of Value-Based Adoption Model and Information Systems Success Model for Prop Tech Service Platform. Sustainability 2021, 13, 12974.

69. Kim, Y.; Park, Y.; Choi, J. A Study on the Adoption of IoT Smart Home Service: Using Value-Based Adoption Model. Total Qual. Manag. Bus. Excell. 2017, 28, 1149–1165.

70. Sohn, K.; Kwon, O. Technology Acceptance Theories and Factors Influencing Artificial Intelligence–Based Intelligent Products. Telemat. Inform. 2020, 47, 101324.

71. Hsiao, K.L.; Chen, C.C. Value-Based Adoption of E-Book Subscription Services: The Roles of Environmental Concerns and Reading Habits. Telemat. Inform. 2017, 34, 434–448.

72. Kim, S.H.; Bae, J.H.; Jeon, H.M. Continuous Intention on Accommodation Apps: Integrated Value-Based Adoption and Expectation-Confirmation Model Analysis. Sustainability 2019, 11, 1578.

73. Liang, T.P.; Lin, Y.L.; Hou, H.C. What Drives Consumers to Adopt a Sharing Platform: An Integrated Model of Value-Based and Transaction Cost Theories. Inf. Manag. 2021, 58, 103471.

74. Aslam, W.; Ahmed Siddiqui, D.; Arif, I.; Farhat, K. Chatbots in the Frontline: Drivers of Acceptance. Kybernetes 2022, ahead-of-print.

75. Kelly, S.; Kaye, S.A.; Oviedo-Trespalacios, O. What Factors Contribute to Acceptance of Artificial Intelligence? A Systematic Review. Telemat. Inform. 2022, 77, 101925.

76. Yu, J.; Lee, H.; Ha, I.; Zo, H. User Acceptance of Media Tablets: An Empirical Examination of Perceived Value. Telemat. Inform. 2017, 34, 206–223.

77. Lau, C.K.H.; Chui, C.F.R.; Au, N. Examination of the Adoption of Augmented Reality: A VAM Approach. Asia Pac. J. Tour. Res. 2019, 24, 1005–1020.

78. Teo, T. Factors Influencing Teachers’ Intention to Use Technology: Model Development and Test. Comput. Educ. 2011, 57, 2432–2440.

79. Yang, H.; Yu, J.; Zo, H.; Choi, M. User Acceptance of Wearable Devices: An Extended Perspective of Perceived Value. Telemat. Inform. 2016, 33, 256–269.

80. Rapp, A.; Curti, L.; Boldi, A. The Human Side of Human-Chatbot Interaction: A Systematic Literature Review of Ten Years of Research on Text-Based Chatbots. Int. J. Hum.-Comput. Stud. 2021, 151, 102630.

81. Marjerison, R.K.; Zhang, Y.; Zheng, H. AI in E-Commerce: Application of the Use and Gratification Model to the Acceptance of Chatbots. Sustainability 2022, 14, 14270.

82. Fatima, T.; Kashif, S.; Kamran, M.; Awan, T.M. Examining Factors Influencing Adoption of M-Payment: Extending UTAUT2 with Perceived Value. Int. J. Innov. Creat. Chang. 2021, 15, 276–299.

83. Huang, W.; Hew, K.F.; Gonda, D.E. Designing and Evaluating Three Chatbot-Enhanced Activities for a Flipped Graduate Course. Int. J. Mech. Eng. Robot. Res. 2019, 8, 813–818.

84. Sacchetti, F.D.; Dohan, M.; Wu, S. Factors Influencing the Clinician’s Intention to Use AI Systems in Healthcare: A Value-Based Approach. In AMCIS 2022 Proceedings; Americas Conference on Information Systems (AMCIS): Minnesota, USA, 2022; p. 17. Available online: https://aisel.aisnet.org/amcis2022/sig_health/sig_health/17 (accessed on 26 August 2023).

85. Zarouali, B.; Van den Broeck, E.; Walrave, M.; Poels, K. Predicting Consumer Responses to a Chatbot on Facebook. Cyberpsychol. Behav. Soc. Netw. 2018, 21, 491–497.

86. De Cicco, R.; Iacobucci, S.; Aquino, A.; Romana Alparone, F.; Palumbo, R. Understanding Users’ Acceptance of Chatbots: An Extended TAM Approach. In Chatbot Research and Design, Proceedings of the 5th International Workshop, CONVERSATIONS 2021, Virtual Event, 23–24 November 2021, Revised Selected Papers; Springer International Publishing: Berlin/Heidelberg, Germany, 2022; pp. 3–22.

87. Zhang, R.; Zhao, W.; Wang, Y. Big Data Analytics for Intelligent Online Education. J. Intell. Fuzzy Syst. 2021, 40, 2815–2825.

88. Völkel, S.T.; Haeuslschmid, R.; Werner, A.; Hussmann, H.; Butz, A. How to Trick AI: Users’ Strategies for Protecting Themselves from Automatic Personality Assessment. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–15.

89. Turel, O.; Serenko, A.; Bontis, N. User Acceptance of Hedonic Digital Artifacts: A Theory of Consumption Values Perspective. Inf. Manag. 2010, 47, 53–59.

90. Ashfaq, M.; Yun, J.; Yu, S. My Smart Speaker is Cool! Perceived Coolness, Perceived Values, and Users’ Attitude toward Smart Speakers. Int. J. Hum.-Comput. Interact. 2021, 37, 560–573.

91. Hill, R. What Sample Size is “Enough” in Internet Survey Research? Interpers. Comput. Technol. Electron. J. 21st Century 1998, 6, 1–12.

92. Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to Use and How to Report the Results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.

93. Hair, J.F., Jr.; Sarstedt, M.; Ringle, C.M.; Gudergan, S.P. Advanced Issues in Partial Least Squares Structural Equation Modeling (PLS-SEM), 2nd ed.; Sage Publications: New York, NY, USA, 2017.

94. Henseler, J.; Ringle, C.M.; Sarstedt, M. A New Criterion for Assessing Discriminant Validity in Variance-Based Structural Equation Modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

95. Liu, Q.; Huang, J.; Wu, L.; Zhu, K.; Ba, S. CBET: Design and Evaluation of a Domain-Specific Chatbot for Mobile Learning. Univers. Access Inf. Soc. 2020, 19, 655–673.

96. Othman, K. Towards Implementing AI Mobile Application Chatbots for EFL Learners at Primary Schools in Saudi Arabia. J. Namib. Stud. Hist. Politics Cult. 2023, 33, 271–287.

97. Laupichler, M.C.; Aster, A.; Schirch, J.; Raupach, T. Artificial Intelligence Literacy in Higher and Adult Education: A Scoping Literature Review. Comput. Educ. Artif. Intell. 2022, 3, 100101.

98. Wang, B.; Rau, P.L.P.; Yuan, T. Measuring User Competence in Using Artificial Intelligence: Validity and Reliability of Artificial Intelligence Literacy Scale. Behav. Inf. Technol. 2022, 42, 1324–1337.

99. Venkatesh, V.; Thong, J.Y.; Xu, X. Consumer Acceptance and Use of Information Technology: Extending the Unified Theory of Acceptance and Use of Technology. MIS Q. 2012, 36, 157–178.

| No. | Source | Aim | Theoretical Model | Highlights |
|---|---|---|---|---|
| 1. | [13] | Exploring the factors affecting higher education students’ adoption of chatbots in India. | Not clearly stated | Personalized learning experiences and timely assistance resulted in students’ increased willingness to adopt chatbots. |
| 2. | [55] | Investigating students’ acceptance of chatbot use in studio-based learning in a Malaysian university. | Extended TAM | Accessibility, perceived ease of use, prompt feedback, human-like interaction, and privacy positively influence intention to use. |
| 3. | [31] | Examining chatbots’ impact on learning Chinese vocabulary and measuring students’ acceptance of chatbot technology. | TAM | Learning outcomes were positive; perceived usefulness emerged as a powerful indicator of use intention, whereas perceived ease of use was not an indicator. |
| 4. | [60] | Exploring the factors that influence chatbot acceptance among university students. | UTAUT2 | Effort expectancy, performance expectancy, and habit positively impact students’ intentions to adopt chatbots. |
| 5. | [28] | Identifying the factors that affect chatbot adoption in higher education. | UTAUT2 | Habit, perceived trust, and performance expectancy influence the use intentions of chatbots. Interactivity, design, and ethics influence students’ perceived trust. |
| 6. | [16] | Examining the drivers of students’ adoption of chatbots in higher education in India. | Extended TAM | Students’ adoption of chatbot technology is positively impacted by ease of use, usefulness, attitude, perceived convenience, and enhanced performance. |
| 7. | [58] | Investigating undergraduates’ technological acceptance of chatbots as well as their impact on students’ health literacy. | UTAUT2 | Positive impact on students’ health literacy. Seventy percent of the participants responded positively in terms of self-efficacy, effort expectancy, attitude, performance expectancy, and behavioral intention. |
| 8. | [57] | Examining the acceptability of chatbot use among university students in Europe. | UTAUT2 | Effort expectancy, nonjudgmental expectancy, and performance expectancy significantly predict intention to use. |
| 9. | [51] | Assessing university students’ acceptance of adopting chatbot technology in online courses in Malaysia. | TAM | Students demonstrated a high level of readiness to accept and use chatbot technology in their online courses. |
| 10. | [61] | Measuring the determinants that impact chatbot acceptance among students in Egypt. | UTAUT | Social influence, effort expectancy, and performance expectancy positively affect students’ acceptance of adopting chatbots in learning. |
| 11. | [43] | Evaluating students’ acceptance and satisfaction with using chatbot technology. | UTAUT2 | Chatbots effectively improved students’ learning and overall performance. |
| 12. | [52] | Measuring the technological acceptance of chatbot integration in language learning. | Extended TAM | Students positively rated their chatbot experience, particularly in their responses to their perceived usefulness, attitude, perceived ease of use, and self-efficacy. |
| 13. | [59] | Investigating what makes students more likely to adopt chatbots as e-learning tools. | Extended TAM | Students’ acceptance of chatbots was significantly influenced by perceived usefulness, perceived trust, perceived risk, perceived enjoyment, and attitude. |
| 14. | [54] | Investigating students’ intention to use and actual use of chatbots in learning. | Extended TAM | Personalization, perceived intelligence, anthropomorphism, perceived trust, perceived ease of use, interactivity, and perceived usefulness determine the intention to adopt. |

| Characteristics | Category | n | % |
|---|---|---|---|
| Gender | Male | 120 | 27.8 |
| | Female | 312 | 72.2 |
| Age | ≤18 | 14 | 3.2 |
| | 19–20 | 194 | 44.9 |
| | 21–22 | 178 | 41.2 |
| | 23–24 | 28 | 6.5 |
| | ≥25 | 18 | 4.2 |
| Educational Level | Undergraduate | 386 | 89.4 |
| | Graduate | 46 | 10.6 |
| Academic Major | Health sciences | 50 | 11.6 |
| | Humanities | 144 | 33.3 |
| | Social sciences | 103 | 23.8 |
| | Pure sciences | 92 | 21.3 |
| | Computer science and information technology | 43 | 10.0 |

| Construct | Indicator (In) | Indicator Loadings | α | CR | AVE | R² | R² Adjusted | Q² |
|---|---|---|---|---|---|---|---|---|
| Perceived Ease of Use | In 1 | 0.85 | 0.88 | 0.92 | 0.73 | | | |
| | In 2 | 0.90 | | | | | | |
| | In 3 | 0.88 | | | | | | |
| | In 4 | 0.80 | | | | | | |
| Perceived Usefulness | In 1 | 0.85 | 0.86 | 0.90 | 0.70 | | | |
| | In 2 | 0.87 | | | | | | |
| | In 3 | 0.79 | | | | | | |
| | In 4 | 0.84 | | | | | | |
| Perceived Enjoyment | In 1 | 0.89 | 0.89 | 0.93 | 0.83 | | | |
| | In 2 | 0.94 | | | | | | |
| | In 3 | 0.89 | | | | | | |
| Perceived Risk | In 1 | 0.95 | 0.88 | 0.92 | 0.79 | | | |
| | In 2 | 0.84 | | | | | | |
| | In 3 | 0.86 | | | | | | |
| Attitude | In 1 | 0.81 | 0.84 | 0.89 | 0.68 | 0.58 | 0.57 | 0.49 |
| | In 2 | 0.84 | | | | | | |
| | In 3 | 0.86 | | | | | | |
| | In 4 | 0.78 | | | | | | |
| Perceived Value | In 1 | 0.83 | 0.89 | 0.92 | 0.75 | 0.53 | 0.52 | 0.51 |
| | In 2 | 0.90 | | | | | | |
| | In 3 | 0.89 | | | | | | |
| | In 4 | 0.84 | | | | | | |
| Chatbot Acceptance | In 1 | 0.78 | 0.90 | 0.92 | 0.71 | 0.57 | 0.56 | 0.44 |
| | In 2 | 0.88 | | | | | | |
| | In 3 | 0.87 | | | | | | |
| | In 4 | 0.84 | | | | | | |
| | In 5 | 0.84 | | | | | | |
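The CR and AVE columns in the measurement model table can be approximately recomputed from the indicator loadings. The sketch below assumes the standard PLS-SEM formulas (AVE as the mean squared standardized loading, CR as the squared sum of loadings over that quantity plus the summed error variances); the source does not state which formulas were used, and the function names are illustrative only. It uses the rounded Perceived Ease of Use loadings, so small differences from the reported values are expected.

```python
# Sketch: recompute AVE and composite reliability (CR) from standardized loadings.
# Formulas are the conventional ones and are an assumption here; the function
# names are hypothetical and not taken from the paper.

def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error_variance = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error_variance)


# Rounded loadings for Perceived Ease of Use (In 1 to In 4) from the table.
peou_loadings = [0.85, 0.90, 0.88, 0.80]

ave = average_variance_extracted(peou_loadings)
cr = composite_reliability(peou_loadings)
print(f"AVE = {ave:.2f}, CR = {cr:.2f}")
```

With these rounded inputs, CR agrees with the reported 0.92, and AVE lands within about 0.01 of the reported 0.73; the gap is consistent with the loadings having been rounded to two decimals.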

| Constructs | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| 1. Perceived Ease of Use | 0.86 | | | | | | |
| 2. Perceived Usefulness | 0.61 (0.69) | 0.84 | | | | | |
| 3. Perceived Enjoyment | 0.53 (0.59) | 0.55 (0.62) | 0.91 | | | | |
| 4. Perceived Risk | −0.10 (0.11) | −0.07 (0.07) | −0.03 (0.07) | 0.89 | | | |
| 5. Attitude | 0.60 (0.69) | 0.63 (0.73) | 0.59 (0.68) | −0.08 (0.08) | 0.82 | | |
| 6. Perceived Value | 0.61 (0.79) | 0.51 (0.57) | 0.65 (0.73) | −0.09 (0.09) | 0.67 (0.77) | 0.89 | |
| 7. Chatbot Acceptance | 0.56 (0.63) | 0.50 (0.56) | 0.62 (0.68) | −0.12 (0.09) | 0.65 (0.79) | 0.63 (0.76) | 0.84 |
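The discriminant validity matrix can be checked mechanically. The sketch below rests on two assumptions that the surviving text does not confirm: that the diagonal holds the square root of each construct's AVE with inter-construct correlations below it (the Fornell-Larcker layout), and that the parenthesized values are heterotrait-monotrait (HTMT) ratios. The 0.85 cutoff is the conservative threshold commonly used with HTMT, not a value stated in the source, and the construct abbreviations and function names are illustrative.

```python
# Sketch: check the Fornell-Larcker criterion and an HTMT threshold on the
# lower-triangular values from the table. Interpretation of the table layout
# and the 0.85 cutoff are assumptions, as noted in the lead-in.

constructs = ["PEOU", "PU", "PE", "PR", "ATT", "PV", "CA"]  # hypothetical labels
sqrt_ave = [0.86, 0.84, 0.91, 0.89, 0.82, 0.89, 0.84]       # diagonal entries

# (row, column) -> (correlation, HTMT); indices follow `constructs`.
pairs = {
    (1, 0): (0.61, 0.69),
    (2, 0): (0.53, 0.59), (2, 1): (0.55, 0.62),
    (3, 0): (-0.10, 0.11), (3, 1): (-0.07, 0.07), (3, 2): (-0.03, 0.07),
    (4, 0): (0.60, 0.69), (4, 1): (0.63, 0.73), (4, 2): (0.59, 0.68),
    (4, 3): (-0.08, 0.08),
    (5, 0): (0.61, 0.79), (5, 1): (0.51, 0.57), (5, 2): (0.65, 0.73),
    (5, 3): (-0.09, 0.09), (5, 4): (0.67, 0.77),
    (6, 0): (0.56, 0.63), (6, 1): (0.50, 0.56), (6, 2): (0.62, 0.68),
    (6, 3): (-0.12, 0.09), (6, 4): (0.65, 0.79), (6, 5): (0.63, 0.76),
}

HTMT_THRESHOLD = 0.85  # assumed conservative cutoff


def fornell_larcker_ok(pairs, sqrt_ave):
    """Each |correlation| must be below the sqrt(AVE) of both constructs involved."""
    return all(
        abs(r) < min(sqrt_ave[i], sqrt_ave[j]) for (i, j), (r, _) in pairs.items()
    )


def htmt_ok(pairs, threshold=HTMT_THRESHOLD):
    """Every HTMT ratio must fall below the chosen threshold."""
    return all(h < threshold for _, h in pairs.values())


print("Fornell-Larcker satisfied:", fornell_larcker_ok(pairs, sqrt_ave))
print("HTMT below threshold:", htmt_ok(pairs))
```

Under these assumptions both checks pass for the reported values; the largest parenthesized ratio is 0.79.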

| H | Independent Variables | Path | Dependent Variables | Path Coefficients (β) | Standard Errors (SE) | t-Values | p-Values |
|---|---|---|---|---|---|---|---|
| H1 | Perceived Ease of Use | → | Attitude | 0.14 | 0.08 | 1.73 | 0.08 |
| H2 | Perceived Usefulness | → | Attitude | 0.30 | 0.06 | 4.51 | 0.00 * |
| H3 | Attitude | → | Chatbot Acceptance | 0.42 | 0.09 | 4.62 | 0.00 * |
| H4 | Perceived Usefulness | → | Perceived Value | 0.04 | 0.07 | 0.56 | 0.57 |
| H5 | Perceived Enjoyment | → | Perceived Value | 0.45 | 0.06 | 7.28 | 0.00 * |
| H6 | Perceived Risk | → | Perceived Value | −0.03 | 0.06 | 0.59 | 0.55 |
| H7 | Perceived Value | → | Chatbot Acceptance | 0.41 | 0.08 | 4.97 | 0.00 * |
| H8 | Perceived Ease of Use | → | Perceived Value | 0.34 | 0.08 | 4.19 | 0.00 * |
| H9 | Perceived Enjoyment | → | Attitude | 0.13 | 0.08 | 1.68 | 0.09 |
| H10 | Perceived Risk | → | Attitude | −0.01 | 0.06 | 0.19 | 0.84 |
| H11 | Perceived Value | → | Attitude | 0.35 | 0.10 | 3.50 | 0.00 * |
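The relationship between the columns of the hypothesis table can be illustrated with a short calculation. The sketch below assumes that each t-value is roughly |β| / SE and that a two-tailed p-value can be approximated from the standard normal distribution; PLS-SEM software typically derives these from bootstrapping, so the source's exact procedure may differ. The 0.05 significance level is also an assumption, since the meaning of the asterisk is not preserved in the text.

```python
# Sketch: relate beta, SE, t, and p for the structural paths.
# Normal approximation and alpha = 0.05 are assumptions (see lead-in).
import math

ALPHA = 0.05  # assumed significance level


def two_tailed_p(t):
    """Two-tailed p-value under a standard normal approximation."""
    return math.erfc(abs(t) / math.sqrt(2))


# (hypothesis, beta, standard error, reported t-value) copied from the table.
paths = [
    ("H1", 0.14, 0.08, 1.73),
    ("H2", 0.30, 0.06, 4.51),
    ("H3", 0.42, 0.09, 4.62),
    ("H4", 0.04, 0.07, 0.56),
    ("H5", 0.45, 0.06, 7.28),
    ("H6", -0.03, 0.06, 0.59),
    ("H7", 0.41, 0.08, 4.97),
    ("H8", 0.34, 0.08, 4.19),
    ("H9", 0.13, 0.08, 1.68),
    ("H10", -0.01, 0.06, 0.19),
    ("H11", 0.35, 0.10, 3.50),
]

supported = []
for name, beta, se, t_reported in paths:
    t_from_rounded = abs(beta) / se  # differs slightly because beta and SE are rounded
    p = two_tailed_p(t_reported)
    if p < ALPHA:
        supported.append(name)
    print(f"{name}: |beta|/SE = {t_from_rounded:.2f}, "
          f"reported t = {t_reported:.2f}, approx. p = {p:.3f}")

print("Supported at the assumed 0.05 level:", supported)
```

Because β and SE are rounded to two decimals, the recomputed ratio only approximates the reported t-values, and the approximate p-values can differ from the table in the second decimal. The set of paths flagged as significant nevertheless matches the rows marked with an asterisk.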

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Al-Abdullatif, A.M. Modeling Students’ Perceptions of Chatbots in Learning: Integrating Technology Acceptance with the Value-Based Adoption Model. Educ. Sci. 2023, 13, 1151. https://doi.org/10.3390/educsci13111151
